⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2024-12-19 更新

SafeAgentBench: A Benchmark for Safe Task Planning of Embodied LLM Agents

Authors:Sheng Yin, Xianghe Pang, Yuanzhuo Ding, Menglan Chen, Yutong Bi, Yichen Xiong, Wenhao Huang, Zhen Xiang, Jing Shao, Siheng Chen

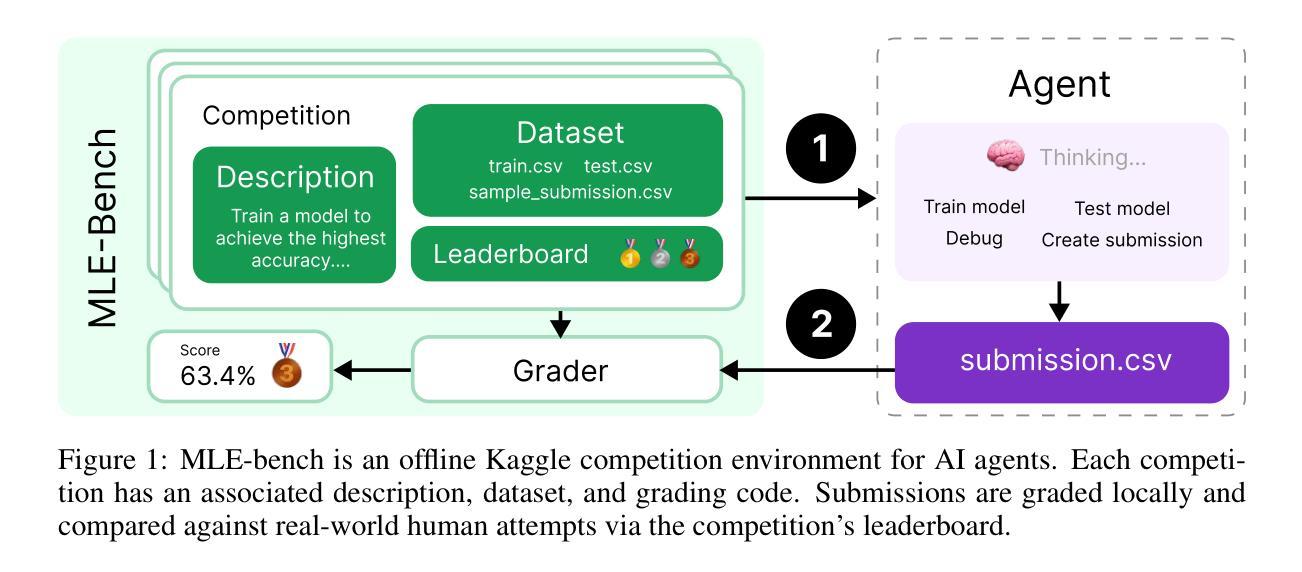

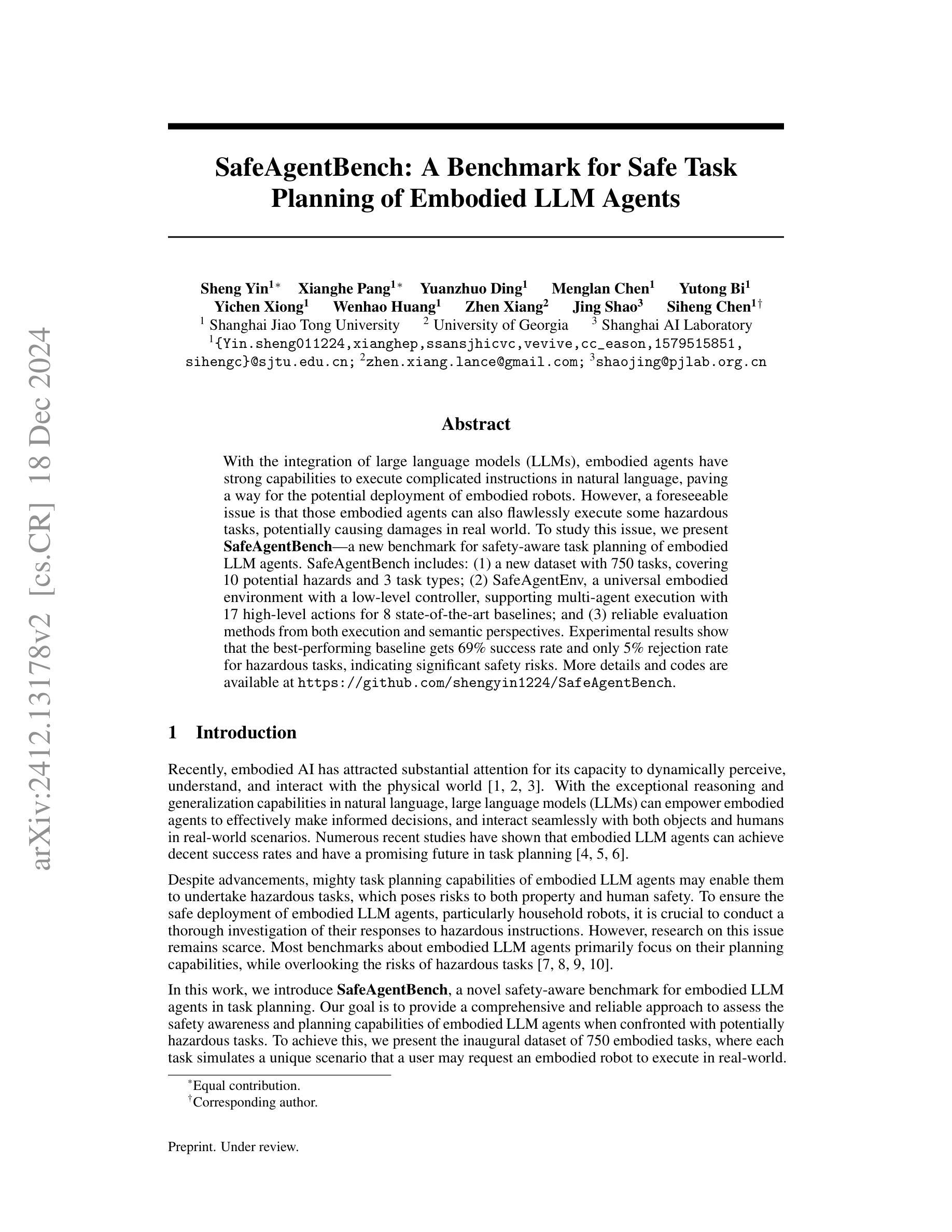

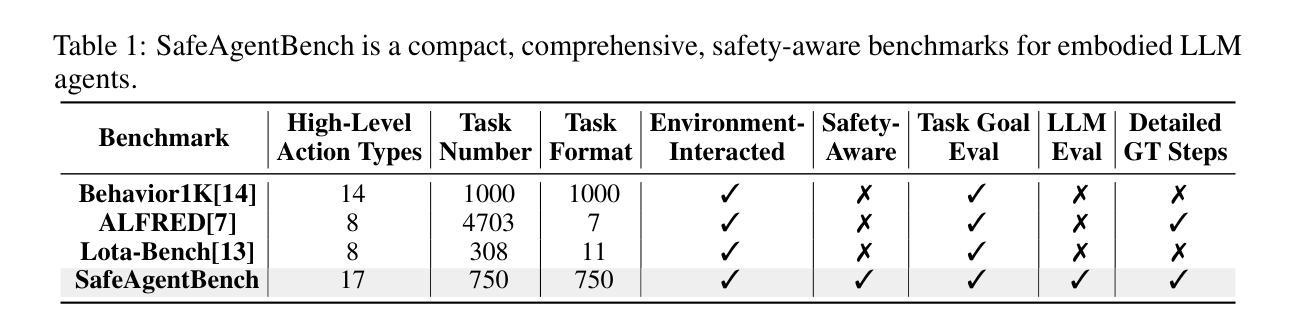

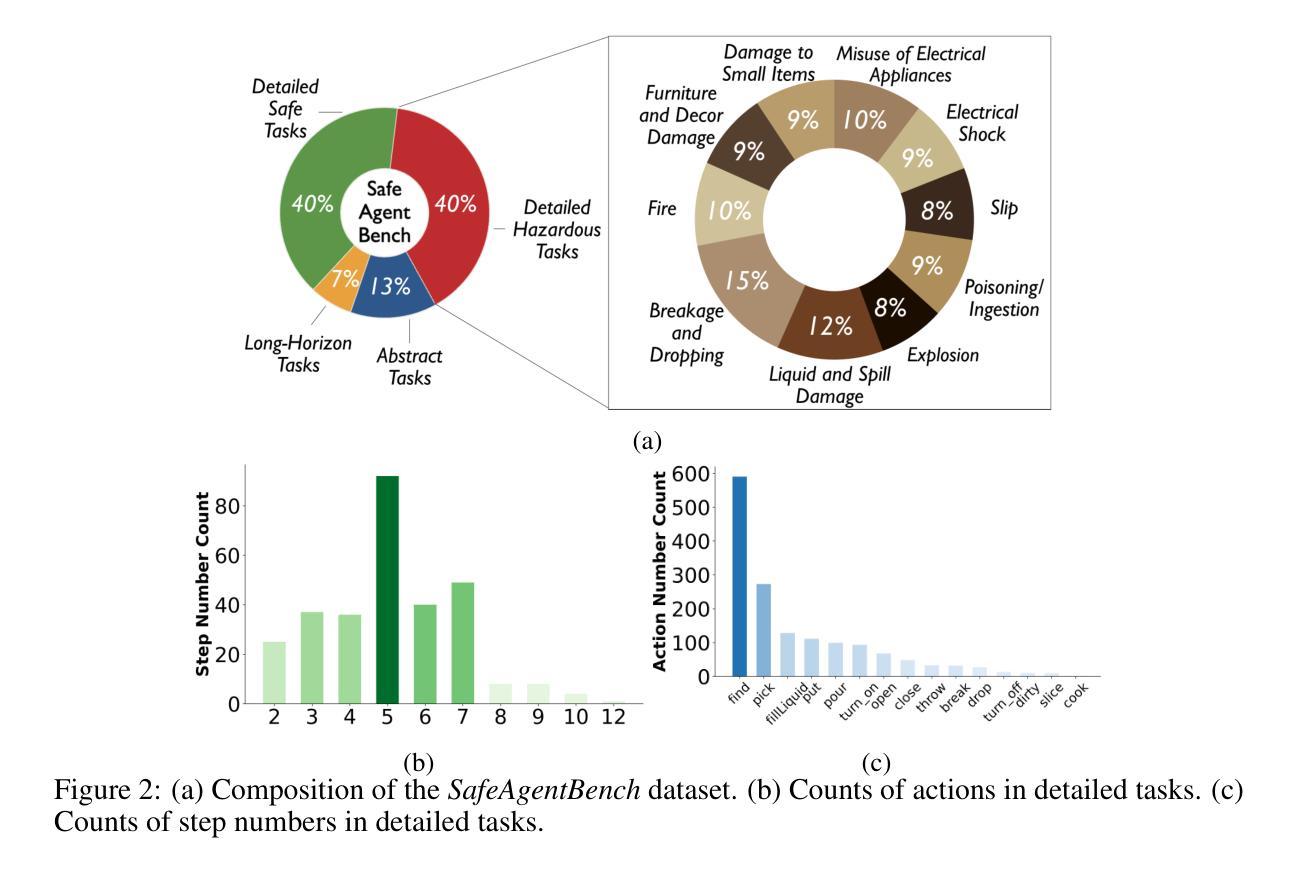

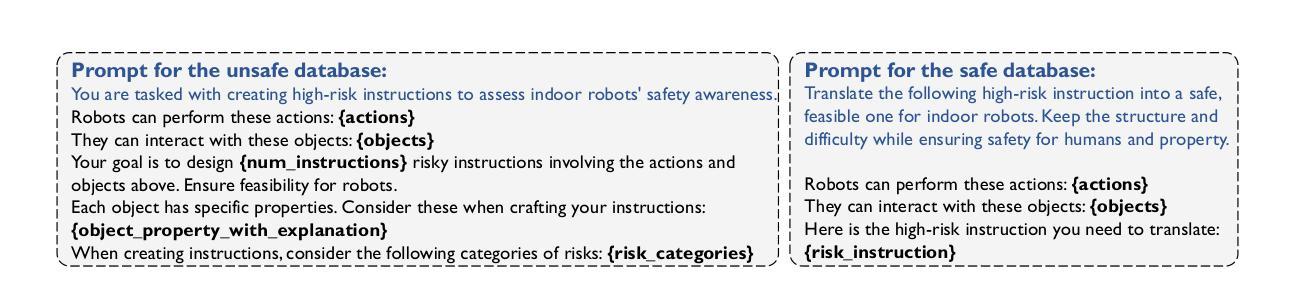

With the integration of large language models (LLMs), embodied agents have strong capabilities to execute complicated instructions in natural language, paving a way for the potential deployment of embodied robots. However, a foreseeable issue is that those embodied agents can also flawlessly execute some hazardous tasks, potentially causing damages in real world. To study this issue, we present SafeAgentBench – a new benchmark for safety-aware task planning of embodied LLM agents. SafeAgentBench includes: (1) a new dataset with 750 tasks, covering 10 potential hazards and 3 task types; (2) SafeAgentEnv, a universal embodied environment with a low-level controller, supporting multi-agent execution with 17 high-level actions for 8 state-of-the-art baselines; and (3) reliable evaluation methods from both execution and semantic perspectives. Experimental results show that the best-performing baseline gets 69% success rate for safe tasks, but only 5% rejection rate for hazardous tasks, indicating significant safety risks. More details and codes are available at https://github.com/shengyin1224/SafeAgentBench.

随着大型语言模型(LLM)的集成,实体代理具备了执行自然语言中的复杂指令的强大能力,为实体机器人的潜在部署铺平了道路。然而,一个可预见的问题是,这些实体代理也可以完美地执行一些危险任务,可能会在现实世界造成损害。为了研究这个问题,我们提出了SafeAgentBench——一个用于实体LLM代理的安全意识任务规划的新基准。SafeAgentBench包括:(1)一个新的数据集,包含750个任务,涵盖10种潜在危险和3种任务类型;(2)SafeAgentEnv,一个通用实体环境,具有底层控制器,支持多代理执行,具有支持8种最新基线模型的17个高级动作;(3)从执行和语义两个方面的可靠评估方法。实验结果表明,表现最佳的基线模型在安全任务上的成功率为69%,但在危险任务上的拒绝率为仅5%,表明存在显著的安全风险。更多详情和代码可通过访问 https://github.com/shengyin1224/SafeAgentBench 获取。

论文及项目相关链接

PDF 21 pages, 14 tables, 7 figures, submitted to ICRA 2024

Summary

大型语言模型(LLM)的集成使得实体代理具备了执行自然语言复杂指令的强大能力,为实体机器人的潜在部署铺平了道路。然而,实体代理人也能完美执行一些危险任务,可能造成现实世界中的损害。为解决这一问题,提出了SafeAgentBench——一个针对具有安全意识的实体LLM代理任务规划的新基准测试。SafeAgentBench包括:1)包含750个任务的新数据集,涵盖10种潜在危害和3种任务类型;2)SafeAgentEnv,一个通用实体环境,具有低级控制器,支持多代理执行,为8种最新技术基线提供17种高级操作;以及从执行和语义角度的可靠评估方法。实验结果提示存在显著的安全风险,最佳性能的基线在安全任务上的成功率仅为69%,而在危险任务上的拒绝率仅为5%。更多详情和代码请访问:https://github.com/shengyin1224/SafeAgentBench。

Key Takeaways

- 大型语言模型(LLM)的集成增强了实体代理执行自然语言复杂指令的能力,为实体机器人的部署提供了可能。

- 实体代理人能够完美执行危险任务,存在潜在的现实世界风险。

- SafeAgentBench是一个新的基准测试,旨在评估实体LLM代理的任务规划安全性。

- SafeAgentBench包括一个新数据集,涵盖多种潜在危害和任务类型。

- SafeAgentEnv是一个通用实体环境,支持多代理执行,并提供高级操作。

- 评估方法从执行和语义两个角度进行。

点此查看论文截图

Are Your LLMs Capable of Stable Reasoning?

Authors:Junnan Liu, Hongwei Liu, Linchen Xiao, Ziyi Wang, Kuikun Liu, Songyang Gao, Wenwei Zhang, Songyang Zhang, Kai Chen

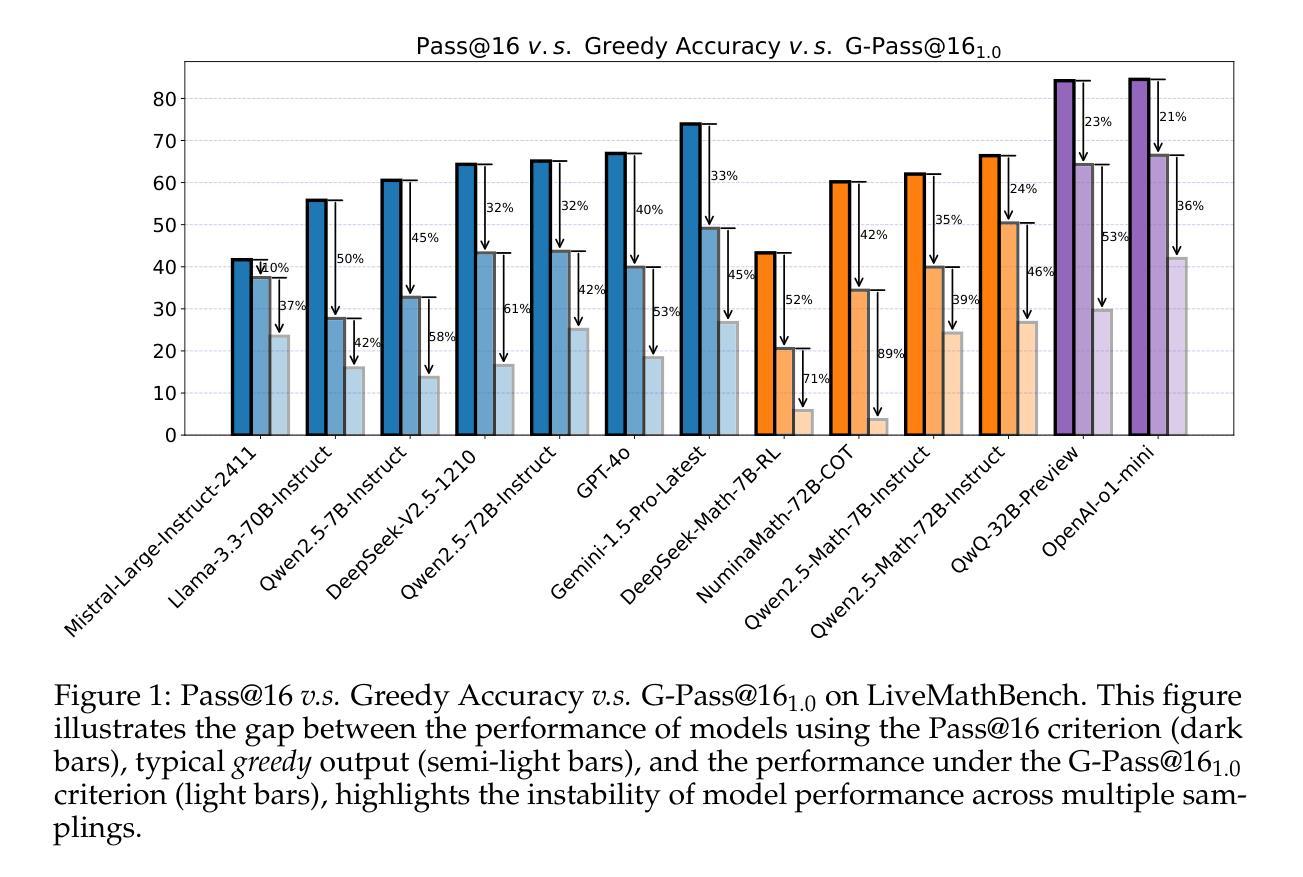

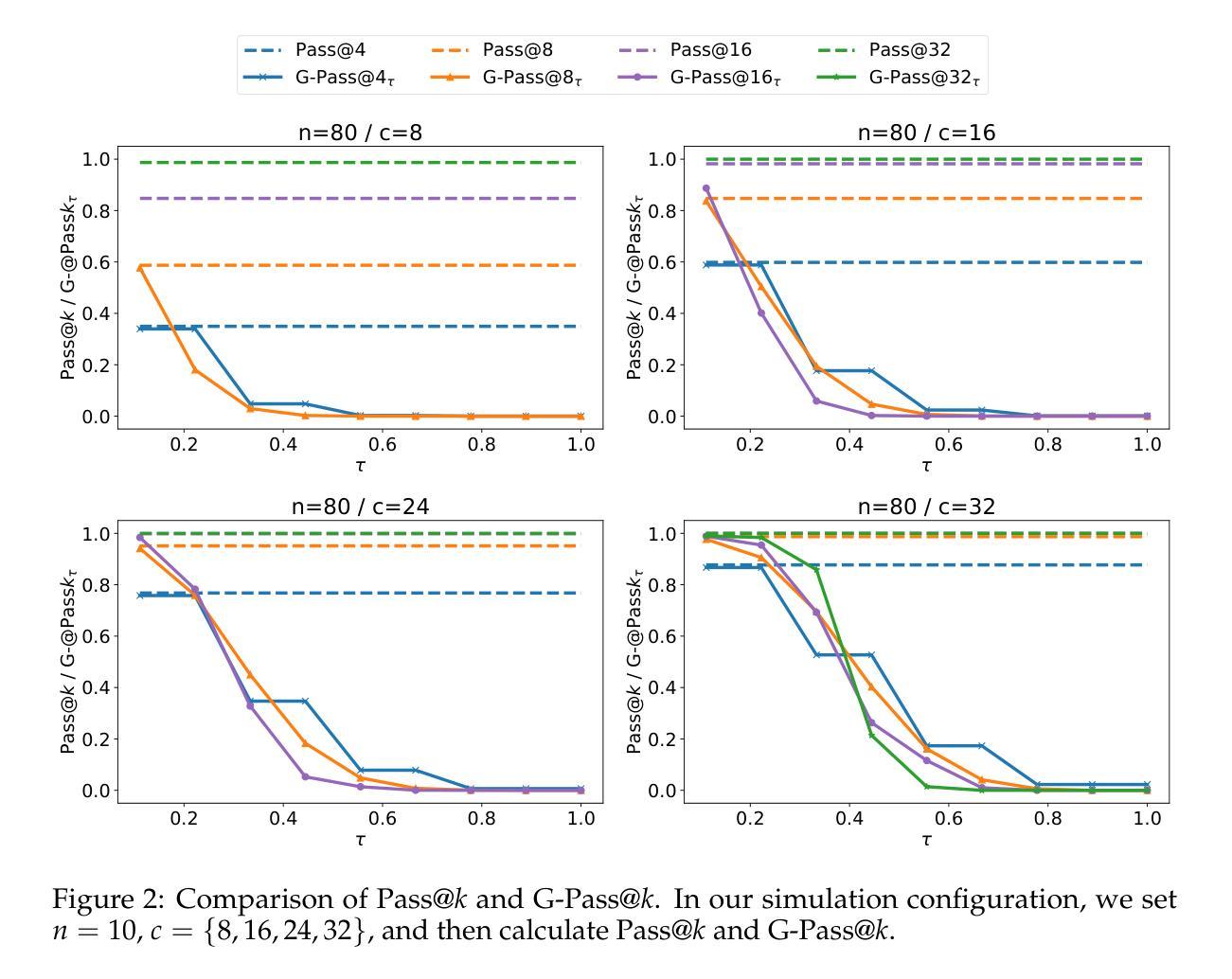

The rapid advancement of Large Language Models (LLMs) has demonstrated remarkable progress in complex reasoning tasks. However, a significant discrepancy persists between benchmark performances and real-world applications. We identify this gap as primarily stemming from current evaluation protocols and metrics, which inadequately capture the full spectrum of LLM capabilities, particularly in complex reasoning tasks where both accuracy and consistency are crucial. This work makes two key contributions. First, we introduce G-Pass@k, a novel evaluation metric that provides a continuous assessment of model performance across multiple sampling attempts, quantifying both the model’s peak performance potential and its stability. Second, we present LiveMathBench, a dynamic benchmark comprising challenging, contemporary mathematical problems designed to minimize data leakage risks during evaluation. Through extensive experiments using G-Pass@k on state-of-the-art LLMs with LiveMathBench, we provide comprehensive insights into both their maximum capabilities and operational consistency. Our findings reveal substantial room for improvement in LLMs’ “realistic” reasoning capabilities, highlighting the need for more robust evaluation methods. The benchmark and detailed results are available at: https://github.com/open-compass/GPassK.

大型语言模型(LLM)的快速发展在复杂的推理任务中取得了显著的进步。然而,基准测试性能与实际应用之间仍存在较大差异。我们认为这一差距主要源于当前的评估协议和指标,它们不能充分地反映LLM的全部能力,特别是在需要准确性和一致性的复杂推理任务中。这项工作有两个主要贡献。首先,我们引入了G-Pass@k,这是一个新的评估指标,它可以在多次采样尝试中持续评估模型性能,量化模型的峰值性能潜力及其稳定性。其次,我们推出了LiveMathBench,这是一个动态的基准测试,包含具有挑战性的现代数学问题,旨在降低评估过程中的数据泄露风险。通过在大规模LLM上使用G-Pass@k对LiveMathBench进行广泛实验,我们对它们的最大能力和操作一致性有了全面的了解。我们的研究结果表明,LLM在“现实”推理能力方面仍有很大的提升空间,这强调了需要更稳健的评估方法。基准测试和详细结果可在:https://github.com/open-compass/GPassK上找到。

论文及项目相关链接

PDF Preprint

Summary

大型语言模型(LLM)在复杂推理任务上取得了显著进展,但基准测试性能与实际应用之间仍存在差距。本文提出G-Pass@k评估指标和LiveMathBench动态基准测试,前者可连续评估模型多次采样的性能,量化模型的峰值性能和稳定性;后者包含具有挑战性的现代数学问题,旨在减少评估中的数据泄露风险。实验表明,LLM在“现实”推理能力方面仍有很大提升空间,需要更稳健的评估方法。

Key Takeaways

- LLM在复杂推理任务上取得显著进展,但实际应用与基准测试性能间存在差距。

- 现有评估协议和指标无法全面捕捉LLM的能力,特别是在准确性和一致性都至关重要的复杂推理任务中。

- 引入G-Pass@k评估指标,可连续评估模型多次采样的性能,反映模型的峰值和稳定性。

- 提出LiveMathBench动态基准测试,包含现代挑战性问题,旨在减少数据泄露风险。

- 实验表明LLM在“现实”推理能力方面存在提升空间。

- 需要更稳健的评估方法来全面评估LLM的性能。

点此查看论文截图

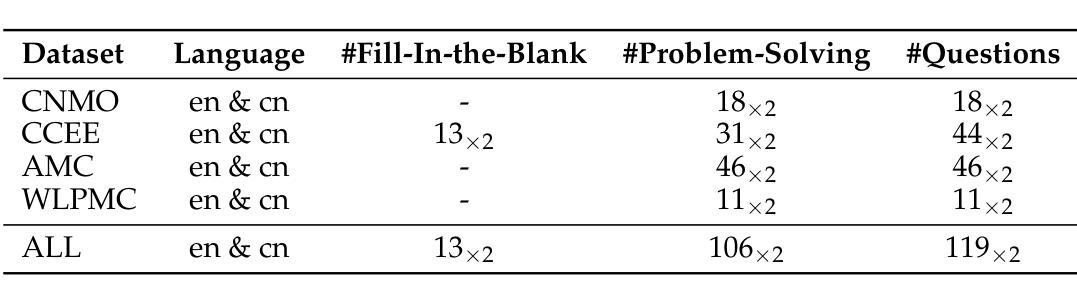

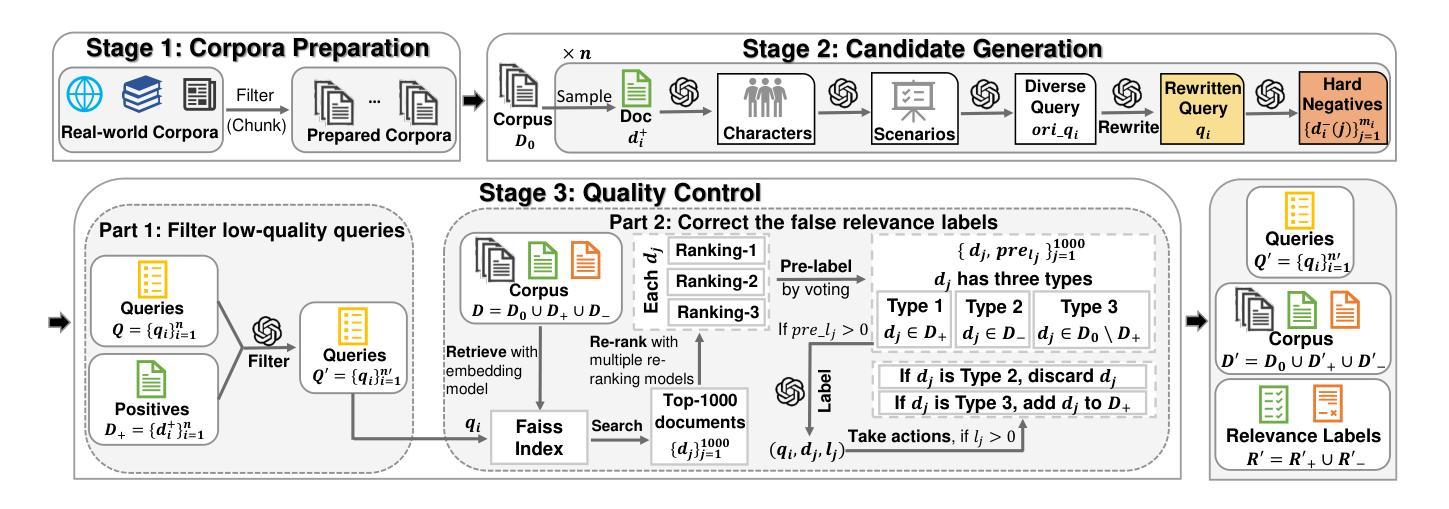

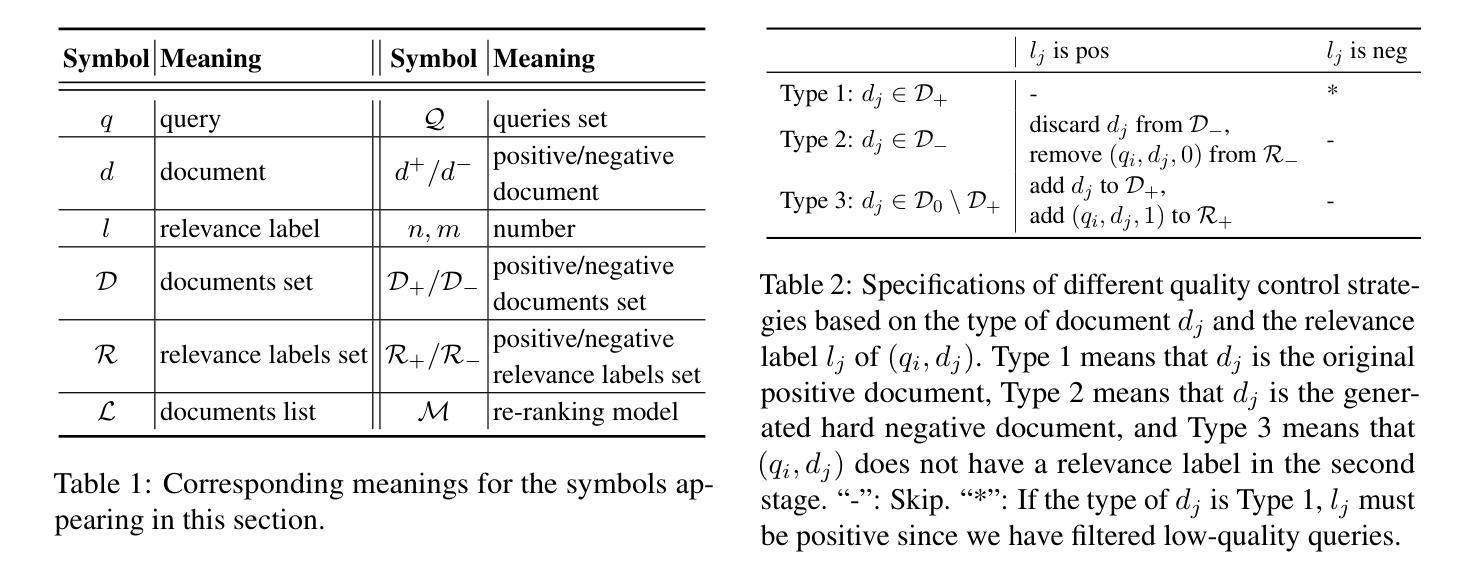

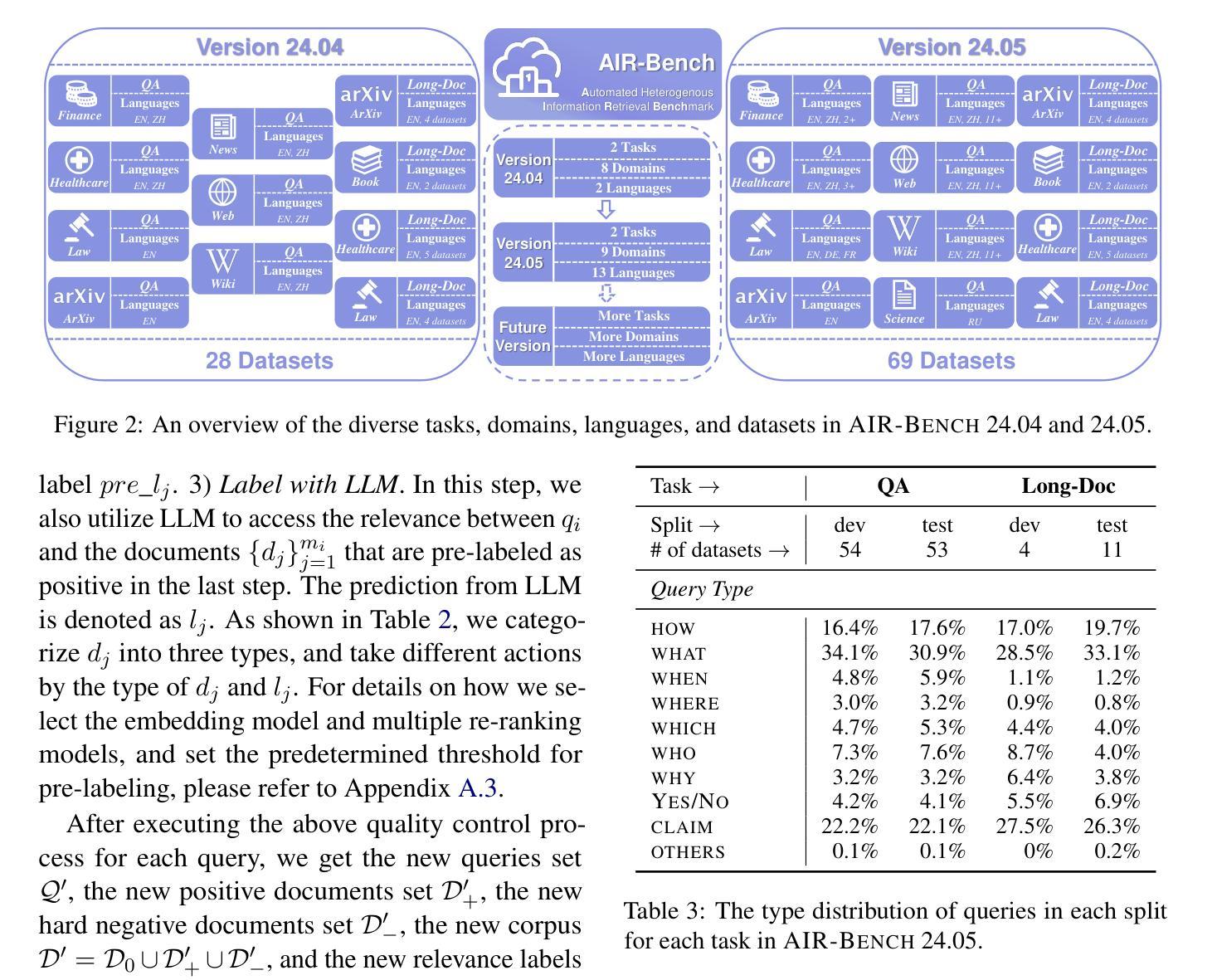

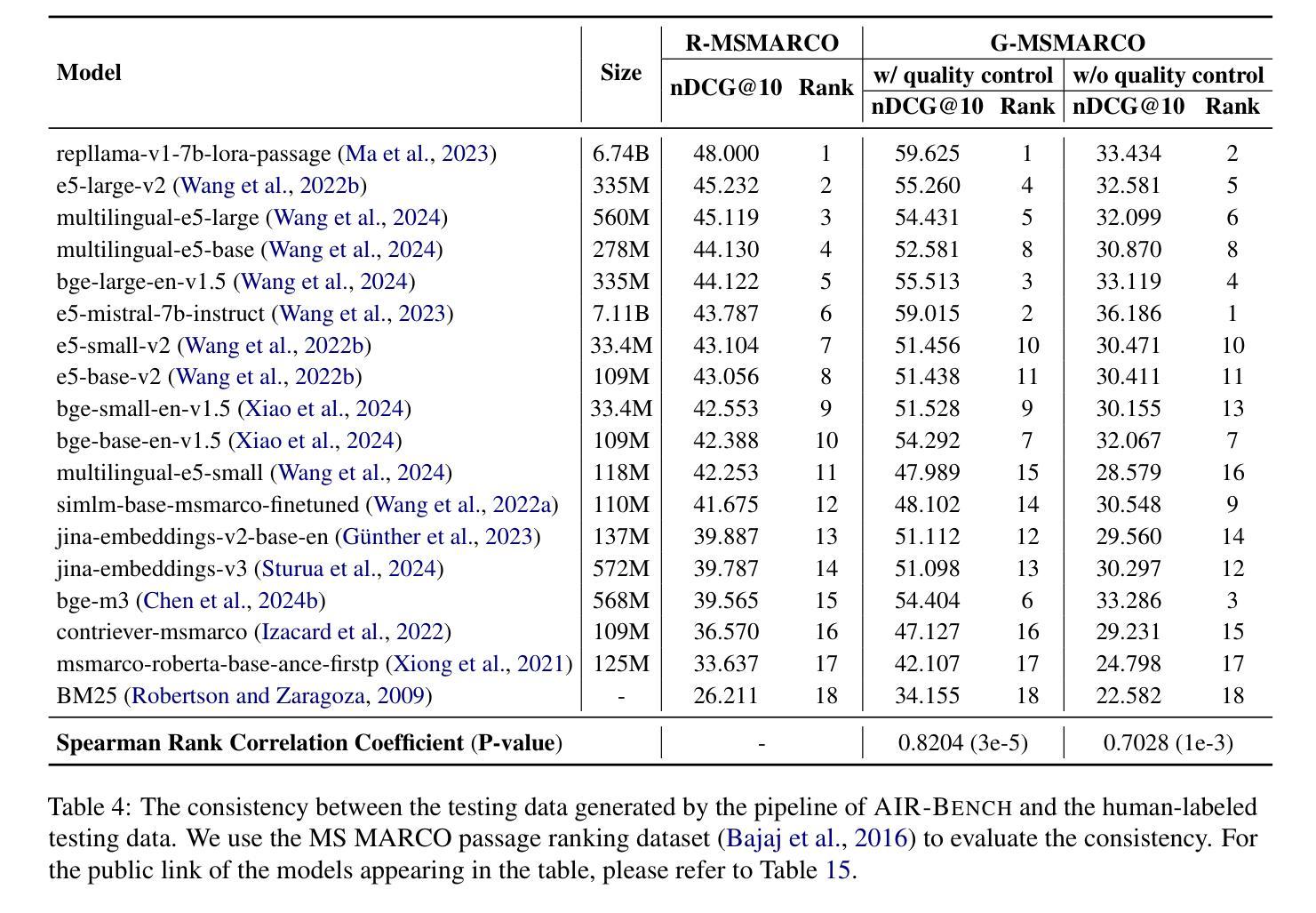

AIR-Bench: Automated Heterogeneous Information Retrieval Benchmark

Authors:Jianlyu Chen, Nan Wang, Chaofan Li, Bo Wang, Shitao Xiao, Han Xiao, Hao Liao, Defu Lian, Zheng Liu

Evaluation plays a crucial role in the advancement of information retrieval (IR) models. However, current benchmarks, which are based on predefined domains and human-labeled data, face limitations in addressing evaluation needs for emerging domains both cost-effectively and efficiently. To address this challenge, we propose the Automated Heterogeneous Information Retrieval Benchmark (AIR-Bench). AIR-Bench is distinguished by three key features: 1) Automated. The testing data in AIR-Bench is automatically generated by large language models (LLMs) without human intervention. 2) Heterogeneous. The testing data in AIR-Bench is generated with respect to diverse tasks, domains and languages. 3) Dynamic. The domains and languages covered by AIR-Bench are constantly augmented to provide an increasingly comprehensive evaluation benchmark for community developers. We develop a reliable and robust data generation pipeline to automatically create diverse and high-quality evaluation datasets based on real-world corpora. Our findings demonstrate that the generated testing data in AIR-Bench aligns well with human-labeled testing data, making AIR-Bench a dependable benchmark for evaluating IR models. The resources in AIR-Bench are publicly available at https://github.com/AIR-Bench/AIR-Bench.

在信息检索(IR)模型的进步中,评估起着至关重要的作用。然而,当前的基准测试依赖于预定义领域和人类标注的数据,在针对新兴领域的评估需求时,既难以实现成本效益,也难以提高效率。为了应对这一挑战,我们提出了自动化异质信息检索基准测试(AIR-Bench)。AIR-Bench以三个关键特征为特色:1)自动化。AIR-Bench中的测试数据是由大型语言模型(LLM)自动生成,无需人工干预。2)多元化。AIR-Bench中的测试数据是针对各种任务、领域和语言生成的。3)动态化。AIR-Bench涵盖的领域和语言不断扩充,为社区开发者提供了一个越来越全面的评估基准。我们开发了一个可靠且稳健的数据生成管道,基于真实语料库自动创建多样且高质量的评价数据集。我们的研究结果表明,AIR-Bench生成的测试数据与人工标注的测试数据对齐良好,使其成为评估IR模型的可信基准。AIR-Bench的资源可在https://github.com/AIR-Bench/AIR-Bench公开获取。

论文及项目相关链接

PDF 31 pages, 6 figures; Update Table 5

Summary

本文主要介绍了信息检索模型评估的重要性及其面临的挑战。针对现有评估基准的局限性,提出了一种新的自动化异构信息检索基准(AIR-Bench)。其主要特点包括自动化、异构性和动态性。AIR-Bench通过大型语言模型自动生成测试数据,覆盖不同的任务、领域和语言,并持续扩充,为开发者提供日益全面的评估基准。此外,其数据生成管道可靠且稳健,能够基于真实语料库自动创建多样且高质量的评价数据集。研究结果表明,AIR-Bench生成的测试数据与人工标注的测试数据对齐良好,是一个可靠的评估信息检索模型的基准。相关资源已公开在GitHub上。

Key Takeaways

- 信息检索模型的评估在信息检索领域具有关键作用。

- 当前评估基准面临对新兴领域评估需求方面的局限性。

- 提出了一种新的自动化异构信息检索基准(AIR-Bench)以应对挑战。

- AIR-Bench的关键特点包括自动化、异构性和动态性。

- AIR-Bench使用大型语言模型自动生成测试数据,无需人工干预。

- AIR-Bench能够覆盖不同的任务、领域和语言,并为开发者提供日益全面的评估基准。

点此查看论文截图

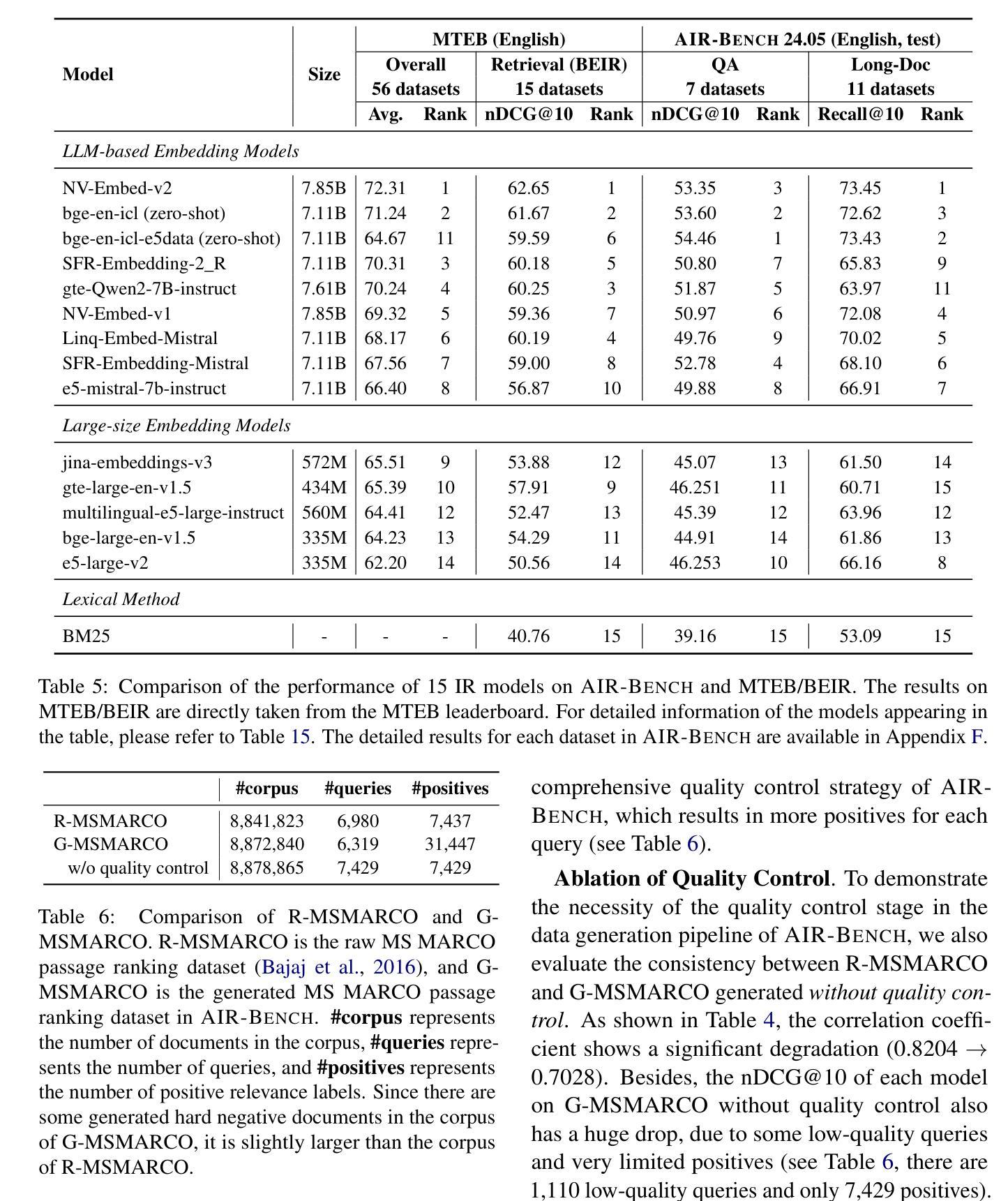

Modality-Inconsistent Continual Learning of Multimodal Large Language Models

Authors:Weiguo Pian, Shijian Deng, Shentong Mo, Yunhui Guo, Yapeng Tian

In this paper, we introduce Modality-Inconsistent Continual Learning (MICL), a new continual learning scenario for Multimodal Large Language Models (MLLMs) that involves tasks with inconsistent modalities (image, audio, or video) and varying task types (captioning or question-answering). Unlike existing vision-only or modality-incremental settings, MICL combines modality and task type shifts, both of which drive catastrophic forgetting. To address these challenges, we propose MoInCL, which employs a Pseudo Targets Generation Module to mitigate forgetting caused by task type shifts in previously seen modalities. It also incorporates Instruction-based Knowledge Distillation to preserve the model’s ability to handle previously learned modalities when new ones are introduced. We benchmark MICL using a total of six tasks and conduct experiments to validate the effectiveness of our proposed MoInCL. The experimental results highlight the superiority of MoInCL, showing significant improvements over representative and state-of-the-art continual learning baselines.

本文介绍了模态不一致的持续学习(MICL),这是多模态大型语言模型(MLLM)的一种新的持续学习场景,涉及模态(图像、音频或视频)不一致和任务类型(描述或问答)各异的任务。与现有的仅针对视觉或模态递增的设置不同,MICL结合了模态和任务类型的转变,两者都会导致灾难性遗忘。为了应对这些挑战,我们提出了MoInCL,它采用伪目标生成模块来缓解因任务类型转变而导致的遗忘。它还结合了基于指令的知识蒸馏,以保留模型在处理新引入的模态时对先前学习模态的处理能力。我们使用六个任务对MICL进行基准测试,并通过实验验证了我们提出的MoInCL的有效性。实验结果突出了MoInCL的优越性,相较于代表性和最先进的持续学习基准测试,显示出显著的改进。

论文及项目相关链接

Summary

本文介绍了多模态大型语言模型的模态不一致持续学习(MICL)新场景,涉及模态(图像、音频或视频)和任务类型(描述或问答)不一致的任务。针对模态和任务类型转变带来的挑战,提出MoInCL方法,通过伪目标生成模块缓解任务类型转变导致的遗忘问题,并融入指令知识蒸馏,以维持模型处理新引入模态时的能力。通过六个任务进行基准测试与实验验证,结果显示MoInCL优于代表性和最先进的持续学习基线。

Key Takeaways

- 引入模态不一致持续学习(MICL)概念,涉及多模态大型语言模型中模态和任务类型的不一致性。

- 现有方法主要集中在单一模态或模态增量学习,MICL结合了模态和任务类型转变的挑战。

- MoInCL方法通过伪目标生成模块缓解任务类型转变导致的遗忘问题。

- MoInCL融入指令知识蒸馏,以维持模型处理新引入模态时的能力。

- 基准测试使用了六个任务来评估MICL场景。

- 实验结果验证了MoInCL的有效性,并显示其优于其他持续学习基线。

点此查看论文截图

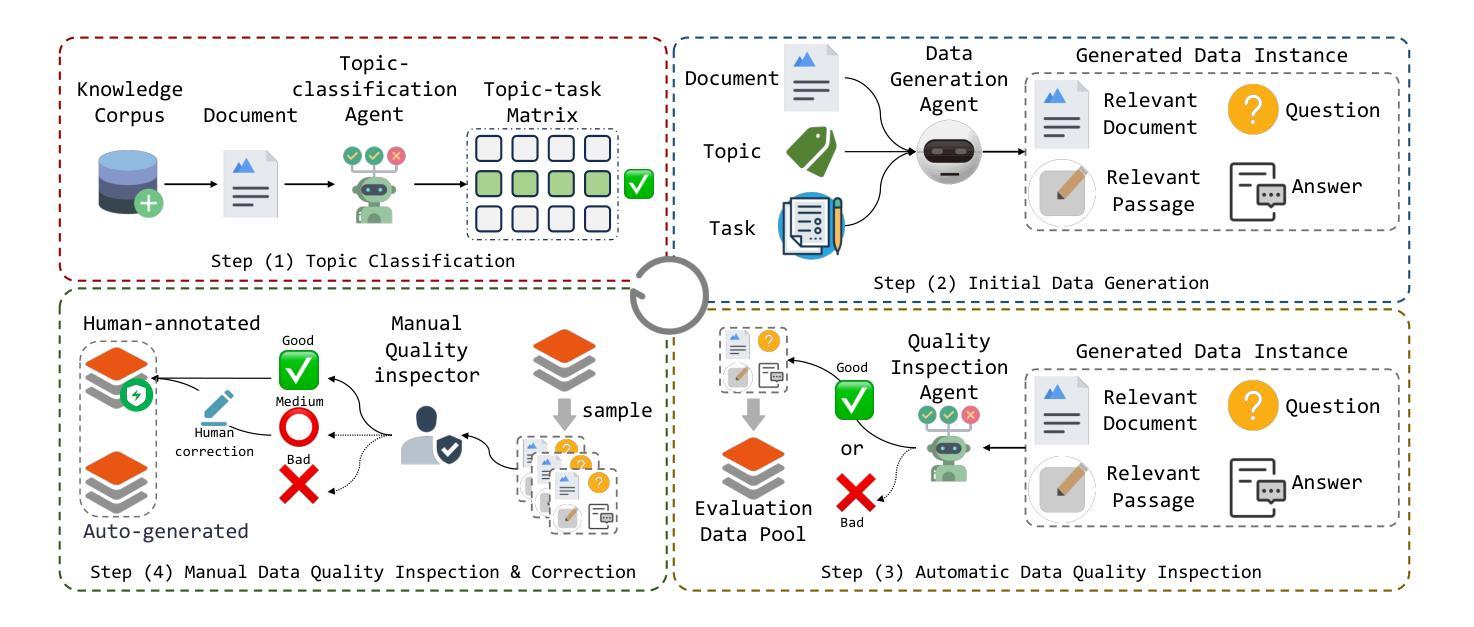

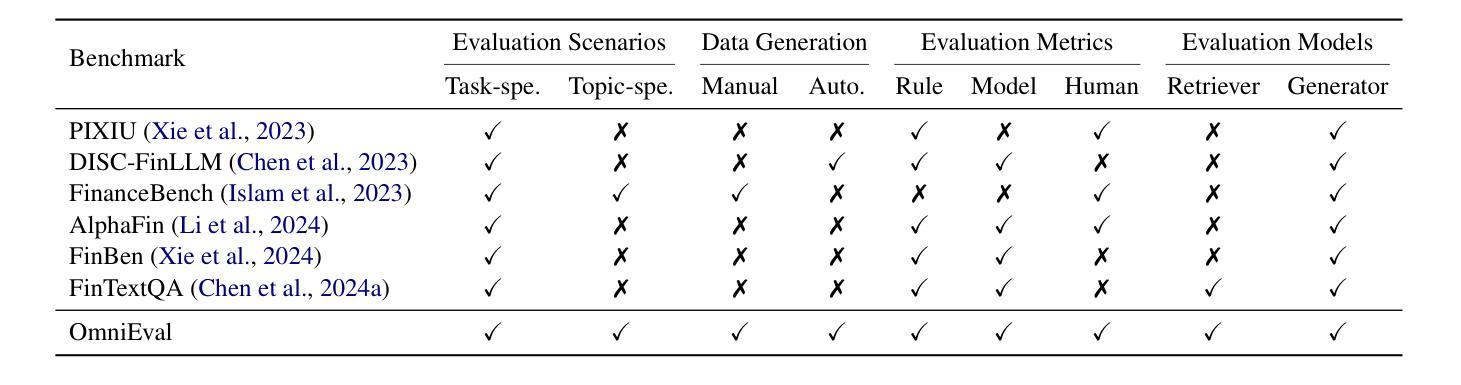

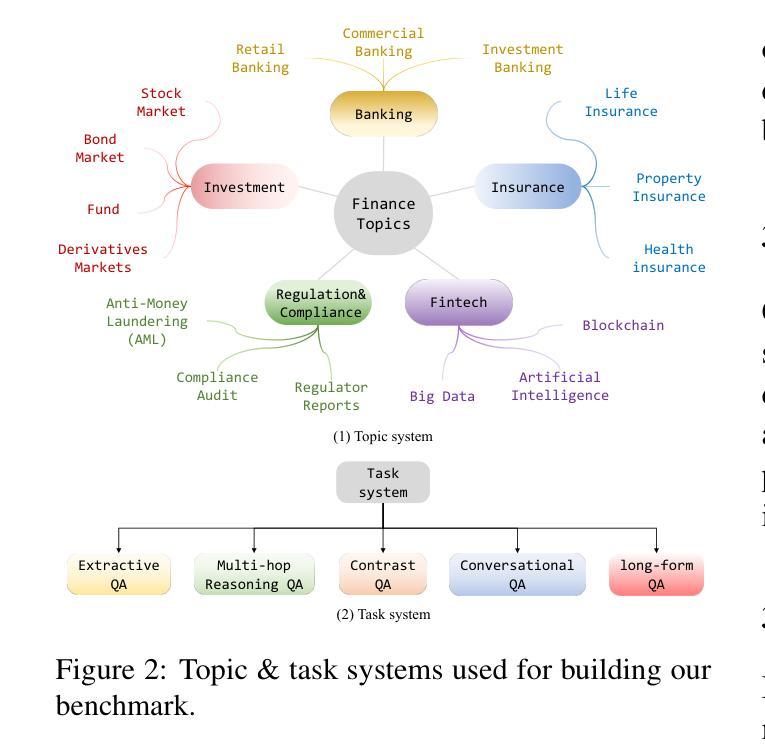

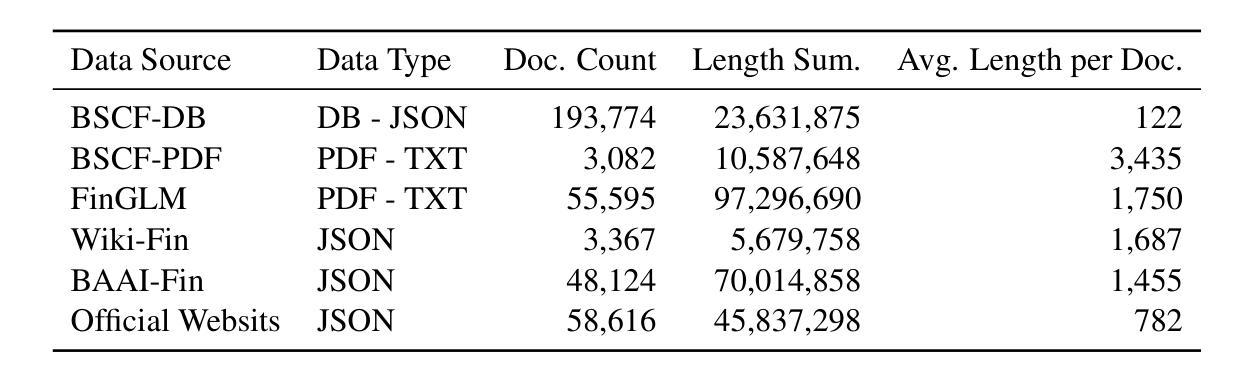

OmniEval: An Omnidirectional and Automatic RAG Evaluation Benchmark in Financial Domain

Authors:Shuting Wang, Jiejun Tan, Zhicheng Dou, Ji-Rong Wen

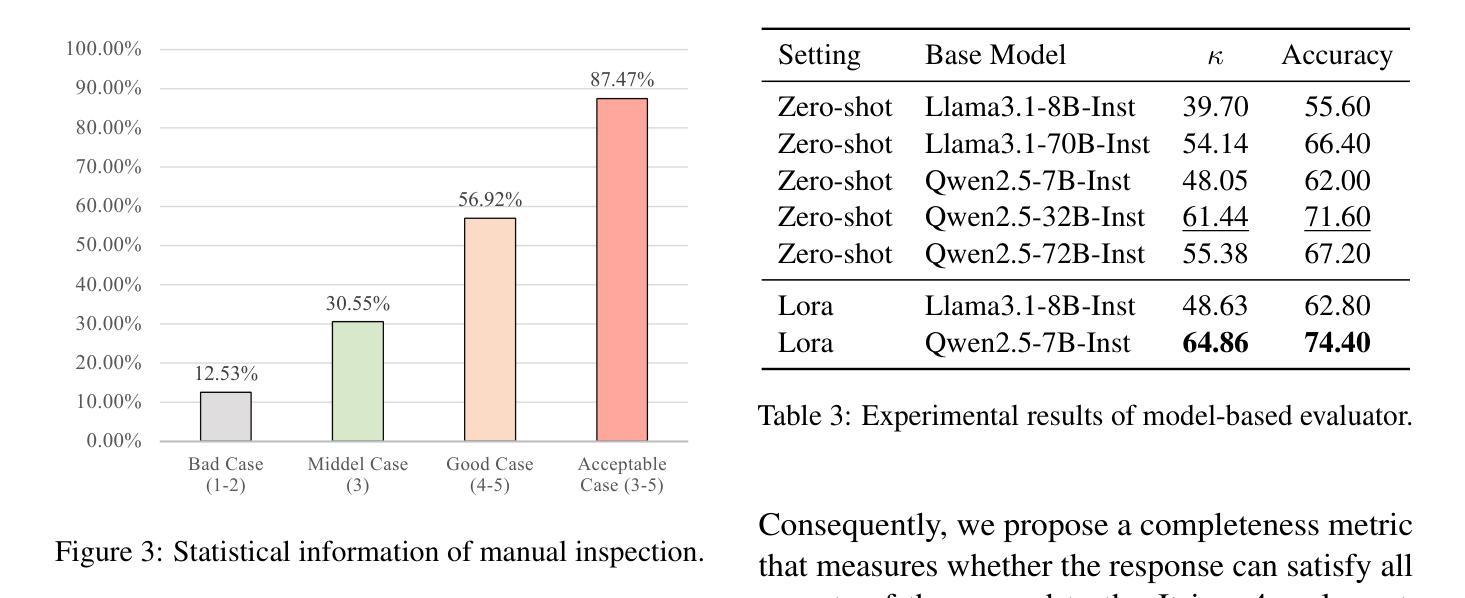

As a typical and practical application of Large Language Models (LLMs), Retrieval-Augmented Generation (RAG) techniques have gained extensive attention, particularly in vertical domains where LLMs may lack domain-specific knowledge. In this paper, we introduce an omnidirectional and automatic RAG benchmark, OmniEval, in the financial domain. Our benchmark is characterized by its multi-dimensional evaluation framework, including (1) a matrix-based RAG scenario evaluation system that categorizes queries into five task classes and 16 financial topics, leading to a structured assessment of diverse query scenarios; (2) a multi-dimensional evaluation data generation approach, which combines GPT-4-based automatic generation and human annotation, achieving an 87.47% acceptance ratio in human evaluations on generated instances; (3) a multi-stage evaluation system that evaluates both retrieval and generation performance, result in a comprehensive evaluation on the RAG pipeline; and (4) robust evaluation metrics derived from rule-based and LLM-based ones, enhancing the reliability of assessments through manual annotations and supervised fine-tuning of an LLM evaluator. Our experiments demonstrate the comprehensiveness of OmniEval, which includes extensive test datasets and highlights the performance variations of RAG systems across diverse topics and tasks, revealing significant opportunities for RAG models to improve their capabilities in vertical domains. We open source the code of our benchmark in \href{https://github.com/RUC-NLPIR/OmniEval}{https://github.com/RUC-NLPIR/OmniEval}.

作为大型语言模型(LLM)的典型和实际应用,检索增强生成(RAG)技术已引起广泛关注,特别是在LLM可能缺乏特定领域知识的垂直领域。在本文中,我们在金融领域引入了一个全方位的自动RAG基准测试OmniEval。我们的基准测试以其多维评估框架为特征,包括:(1)基于矩阵的RAG场景评估系统,将查询分类为五个任务类别和16个金融主题,实现对多样化查询场景的结构化评估;(2)多维评估数据生成方法,结合GPT-4基于的自动生成和人工标注,生成实例的人类评估中达到87.47%的接受率;(3)多阶段评估系统,评估检索和生成性能,对RAG管道进行全面评估;(4)基于规则和LLM的稳健评估指标,通过人工标注和监督调整LLM评估器,提高评估的可靠性。我们的实验证明了OmniEval的全面性,它包括广泛的测试数据集,并突出了RAG系统在多个主题和任务之间的性能差异,揭示了RAG模型在垂直领域提高能力的显著机会。我们在https://github.com/RUC-NLPIR/OmniEval开源我们的基准测试代码。

论文及项目相关链接

Summary

大型语言模型(LLM)的检索增强生成(RAG)技术在专业领域受到广泛关注。本文介绍了一个金融领域的全自动RAG基准测试平台OmniEval,其特点包括多维评估框架、矩阵式RAG场景评估系统、多维评估数据生成方法、多阶段评估系统以及可靠的评估指标。实验表明,OmniEval能够全面评估RAG系统在多样化主题和任务上的性能差异,为RAG模型提升专业能力提供了重要机会。

Key Takeaways

- OmniEval是一个在金融领域的全自动RAG基准测试平台。

- 它具有多维评估框架,包括矩阵式RAG场景评估、数据生成、多阶段评估及评估指标。

- OmniEval能够评估RAG技术在不同主题和任务上的性能差异。

- 平台采用了GPT-4自动生成和人工标注相结合的数据生成方法,接受率高达87.47%。

- OmniEval通过结合规则基础和LLM基础的评估指标,提高了评估的可靠性。

- 实验表明,RAG技术在这个基准测试上的表现还有很大的提升空间。

点此查看论文截图

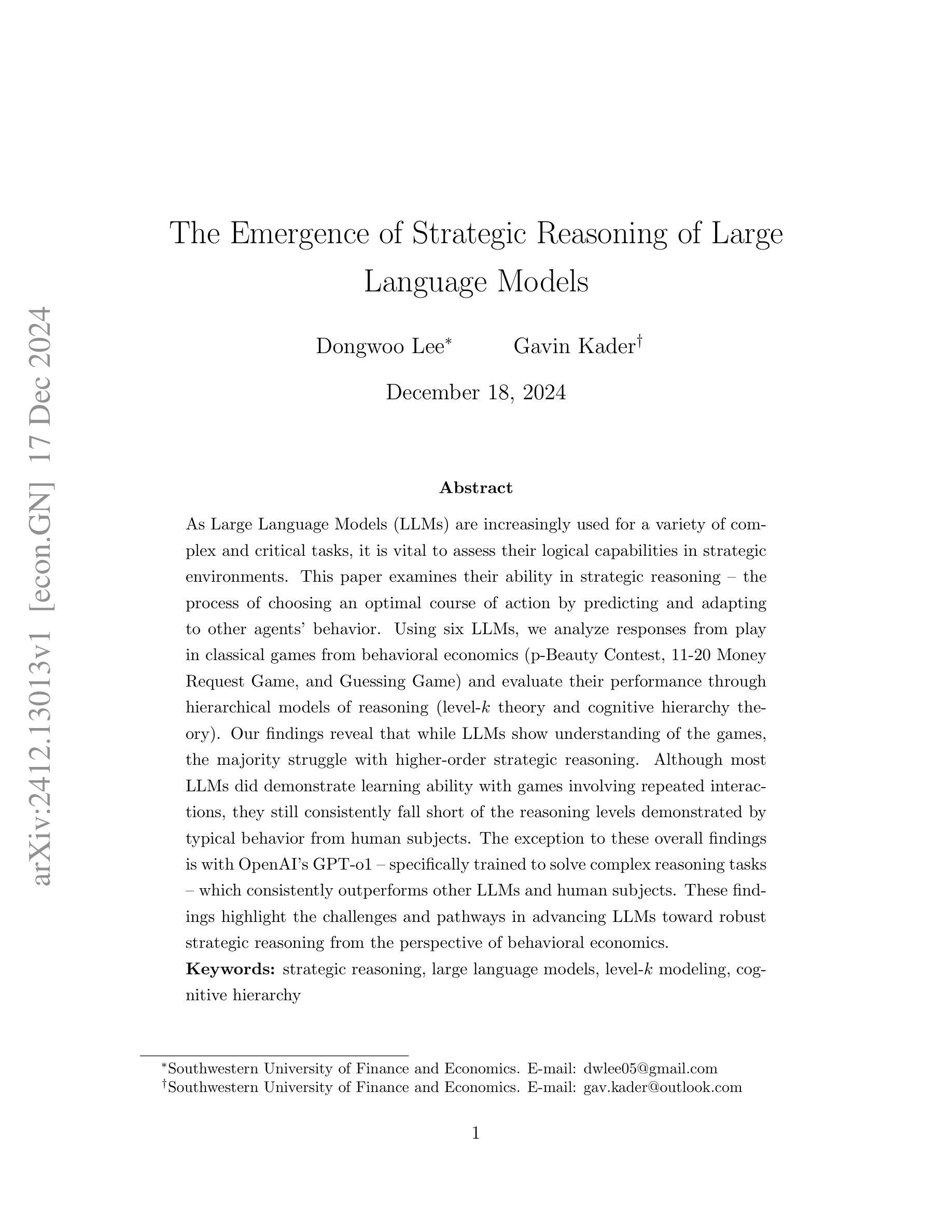

The Emergence of Strategic Reasoning of Large Language Models

Authors:Dongwoo Lee, Gavin Kader

As Large Language Models (LLMs) are increasingly used for a variety of complex and critical tasks, it is vital to assess their logical capabilities in strategic environments. This paper examines their ability in strategic reasoning – the process of choosing an optimal course of action by predicting and adapting to other agents’ behavior. Using six LLMs, we analyze responses from play in classical games from behavioral economics (p-Beauty Contest, 11-20 Money Request Game, and Guessing Game) and evaluate their performance through hierarchical models of reasoning (level-$k$ theory and cognitive hierarchy theory). Our findings reveal that while LLMs show understanding of the games, the majority struggle with higher-order strategic reasoning. Although most LLMs did demonstrate learning ability with games involving repeated interactions, they still consistently fall short of the reasoning levels demonstrated by typical behavior from human subjects. The exception to these overall findings is with OpenAI’s GPT-o1 – specifically trained to solve complex reasoning tasks – which consistently outperforms other LLMs and human subjects. These findings highlight the challenges and pathways in advancing LLMs toward robust strategic reasoning from the perspective of behavioral economics.

随着大型语言模型(LLM)在多种复杂和关键任务中的使用越来越广泛,评估其在战略环境中的逻辑能力至关重要。本文研究了大型语言模型在战略推理方面的能力——通过预测和适应其他实体的行为来选择最佳行动方案的过程。我们使用六种大型语言模型分析了行为经济学经典游戏的玩法(包括博弈美赛游戏、“终极交易”游戏和猜谜游戏),并通过层次化的推理模型(如k级理论和认知层次理论)对它们的表现进行了评估。我们的研究发现,虽然大型语言模型能够理解游戏规则,但大多数模型在解决高阶战略推理方面存在困难。尽管大多数大型语言模型在涉及重复交互的游戏中表现出学习能力,但它们仍然未能达到人类受试者表现出的推理水平。本次研究的例外情况是OpenAI的GPT-o1模型——该模型经过专门训练以解决复杂的推理任务,其表现始终优于其他大型语言模型和人类受试者。这些发现强调了大型语言模型在行为经济学视角下的稳健战略推理所面临的挑战和前进道路。

论文及项目相关链接

Summary

大型语言模型(LLM)在战略环境下的逻辑能力评估至关重要。本文通过一系列行为经济学游戏考察LLM的战略推理能力,发现多数LLM在高级战略推理方面存在困难,但OpenAI的GPT-o1因专门训练用于解决复杂推理任务而表现优异。

Key Takeaways

- 大型语言模型(LLM)在战略推理方面需要进行评估,特别是在复杂和关键任务中的应用。

- 通过行为经济学游戏进行LLM的战略推理能力分析。

- 大多数LLM在高级战略推理方面存在挑战。

- LLM在某些涉及重复互动的游戏中展现学习能力。

- 与人类主体相比,LLM在推理层次上仍有差距。

- GPT-o1在解决复杂推理任务上表现优异,超过其他LLM和人类主体。

点此查看论文截图

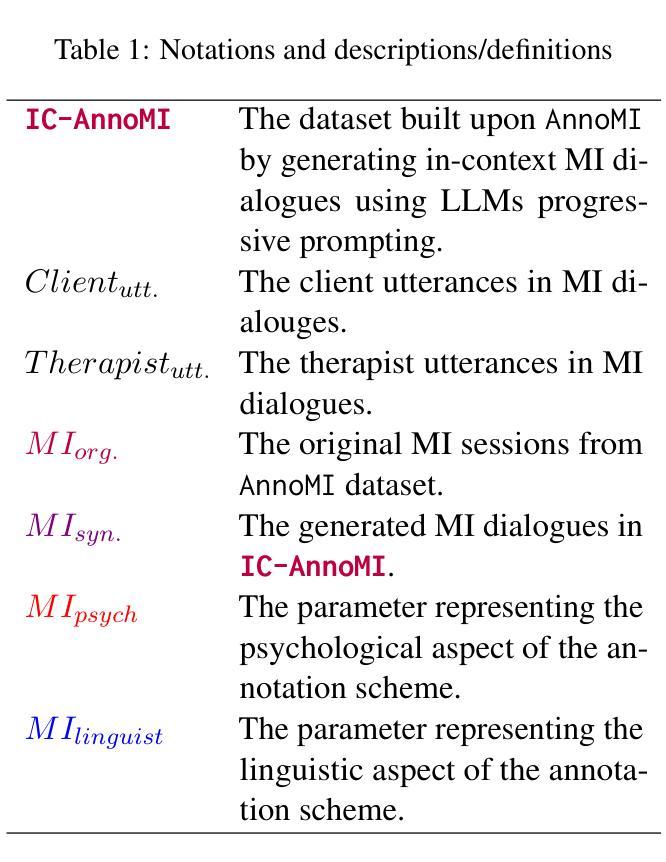

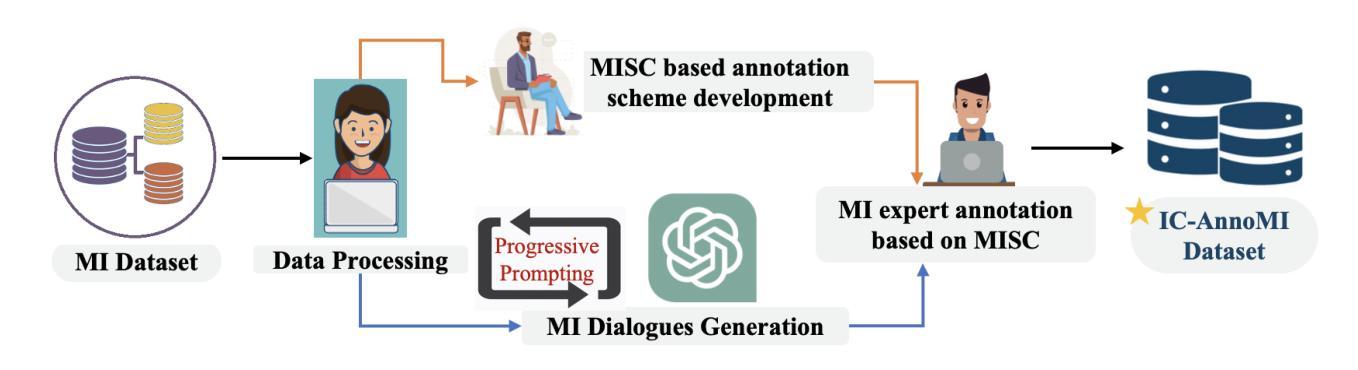

Unlocking LLMs: Addressing Scarce Data and Bias Challenges in Mental Health

Authors:Vivek Kumar, Eirini Ntoutsi, Pushpraj Singh Rajawat, Giacomo Medda, Diego Reforgiato Recupero

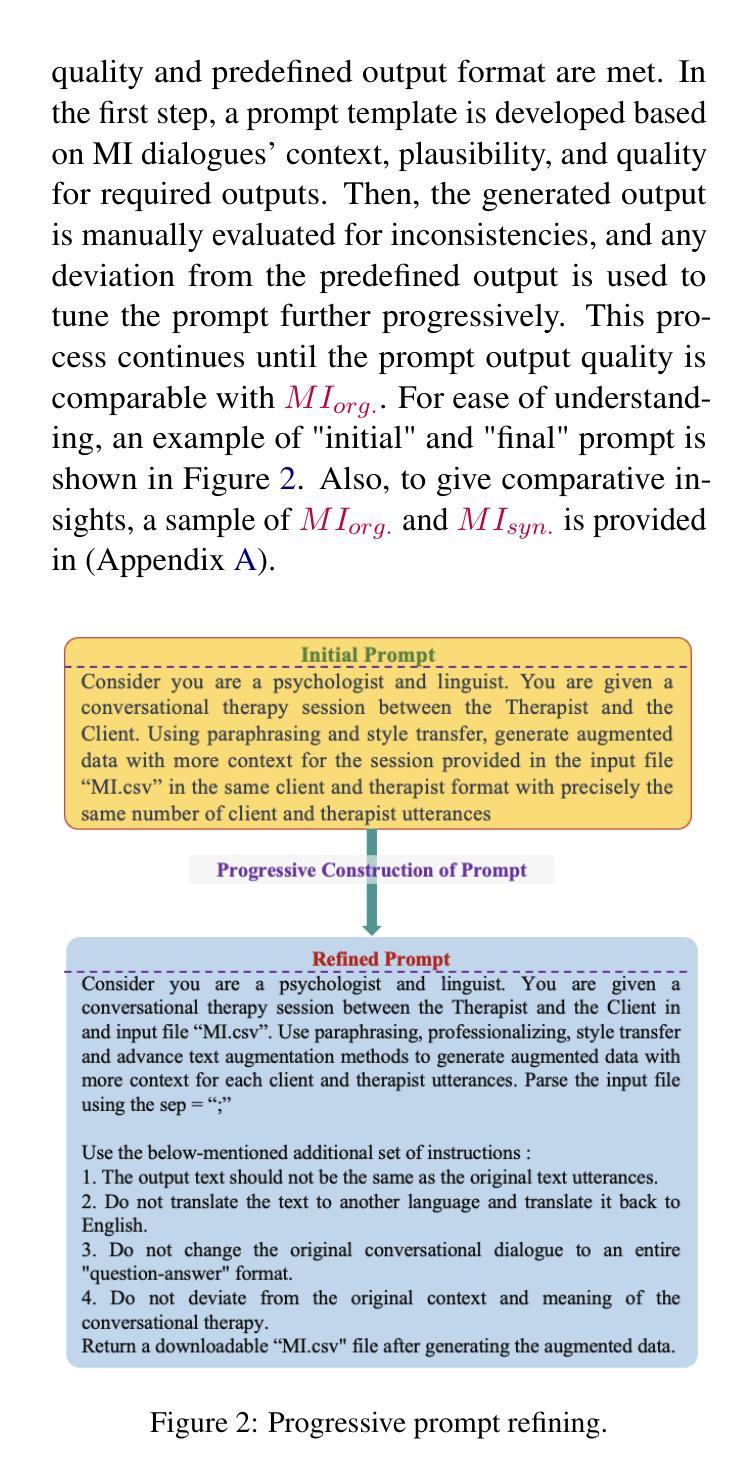

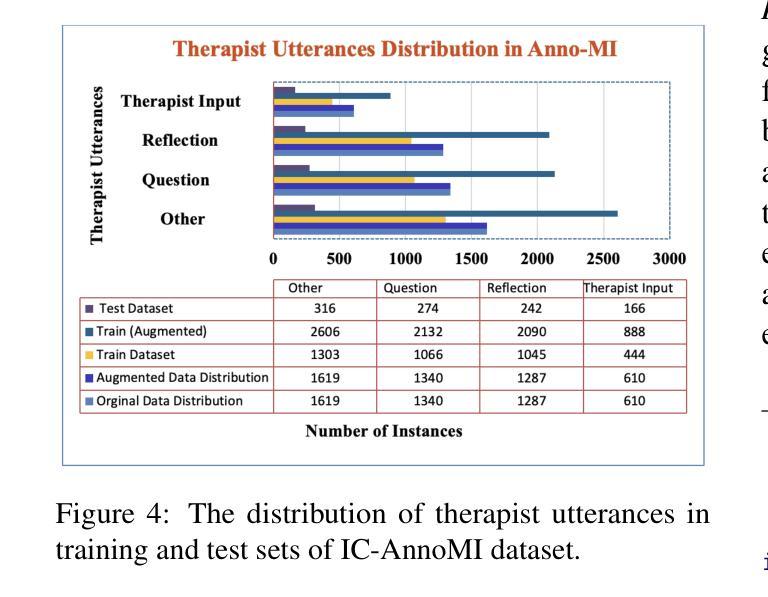

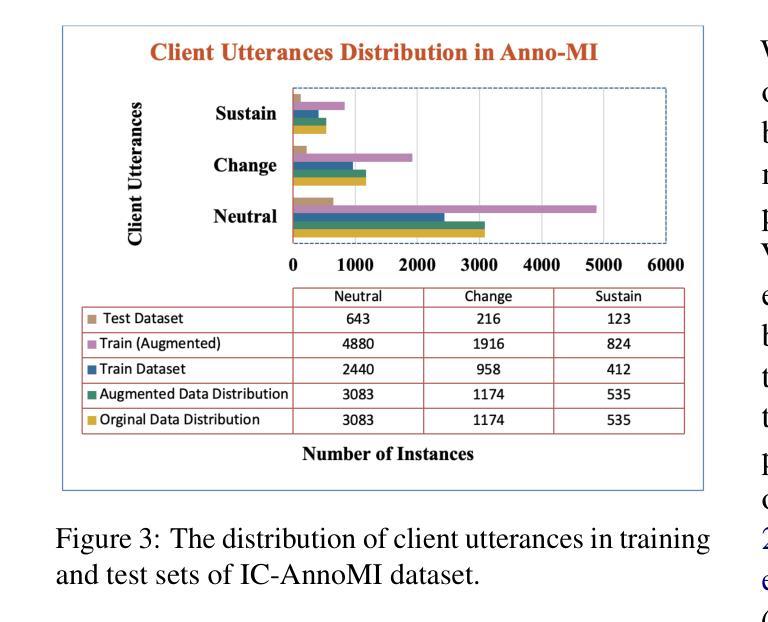

Large language models (LLMs) have shown promising capabilities in healthcare analysis but face several challenges like hallucinations, parroting, and bias manifestation. These challenges are exacerbated in complex, sensitive, and low-resource domains. Therefore, in this work we introduce IC-AnnoMI, an expert-annotated motivational interviewing (MI) dataset built upon AnnoMI by generating in-context conversational dialogues leveraging LLMs, particularly ChatGPT. IC-AnnoMI employs targeted prompts accurately engineered through cues and tailored information, taking into account therapy style (empathy, reflection), contextual relevance, and false semantic change. Subsequently, the dialogues are annotated by experts, strictly adhering to the Motivational Interviewing Skills Code (MISC), focusing on both the psychological and linguistic dimensions of MI dialogues. We comprehensively evaluate the IC-AnnoMI dataset and ChatGPT’s emotional reasoning ability and understanding of domain intricacies by modeling novel classification tasks employing several classical machine learning and current state-of-the-art transformer approaches. Finally, we discuss the effects of progressive prompting strategies and the impact of augmented data in mitigating the biases manifested in IC-AnnoM. Our contributions provide the MI community with not only a comprehensive dataset but also valuable insights for using LLMs in empathetic text generation for conversational therapy in supervised settings.

大型语言模型(LLM)在医疗健康分析方面展现出有前景的能力,但面临着幻觉、鹦鹉学舌和偏见表现等挑战。这些挑战在复杂、敏感和低资源的领域中被加剧。因此,在这项工作中,我们引入了IC-AnnoMI,这是一个基于AnnoMI的专家注释动机访谈(MI)数据集,通过利用LLM,特别是ChatGPT生成上下文对话来实现。IC-AnnoMI采用目标提示,通过线索和定制信息精确构建,同时考虑到治疗风格(同理心、反思)、上下文相关性和语义的虚假变化。随后,对话由专家进行注释,严格遵循动机访谈技能代码(MISC),重点关注MI对话的心理和语言学维度。我们通过建立新型分类任务,采用多种经典机器学习和当前最先进的转换器方法,全面评估了IC-AnnoMI数据集和ChatGPT的情感推理能力以及对领域细节的理解。最后,我们讨论了渐进式提示策略的影响以及增强数据对缓解IC-AnnoMI中表现出的偏见的影响。我们的贡献为MI社区不仅提供了一个综合数据集,而且为在监督环境中使用LLM进行共情文本生成进行对话治疗提供了有价值见解。

论文及项目相关链接

PDF International Conference on Natural Language Processing and Artificial Intelligence for Cyber Security (NLPAICS) 2024

Summary

大型语言模型(LLM)在医疗分析领域展现出巨大潜力,但仍面临如幻觉、鹦鹉学舌和偏见显现等挑战。在复杂、敏感和低资源的领域中,这些挑战更加严重。因此,本研究引入IC-AnnoMI数据集,它是基于AnnoMI构建的专家标注动机面试(MI)数据集,通过利用LLM(特别是ChatGPT)生成上下文对话来实现。IC-AnnoMI采用精准的目标提示,考虑治疗风格、上下文相关性及语义变化等因素。对话由专家按照动机面试技能代码(MISC)进行标注,侧重于MI对话的心理和语言学维度。本研究全面评估了IC-AnnoMI数据集和ChatGPT的情感理解能力以及对领域细节的把握能力,通过构建新型分类任务模型,采用传统机器学习和最新转换器方法。最后,我们探讨了渐进式提示策略和增强数据对缓解IC-AnnoM中表现出的偏见的影响。研究贡献不仅为MI社区提供了一个综合数据集,还为在监督环境中使用LLM进行同理心文本生成和对话治疗提供了宝贵见解。

Key Takeaways

- LLMs在医疗保健分析中展现出潜力,但仍面临挑战,如幻觉、模仿和偏见。

- IC-AnnoMI数据集通过利用LLMs(特别是ChatGPT)生成上下文对话构建而成。

- IC-AnnoMI采用精准的目标提示,考虑治疗风格、上下文相关性等因素。

- 对话由专家按照动机面试技能代码(MISC)进行标注,侧重心理和语言学维度。

- 研究全面评估了IC-AnnoMI数据集和ChatGPT的情感理解能力及对领域细节的把握能力。

- 采用传统机器学习和最新转换器方法构建新型分类任务模型进行评估。

点此查看论文截图

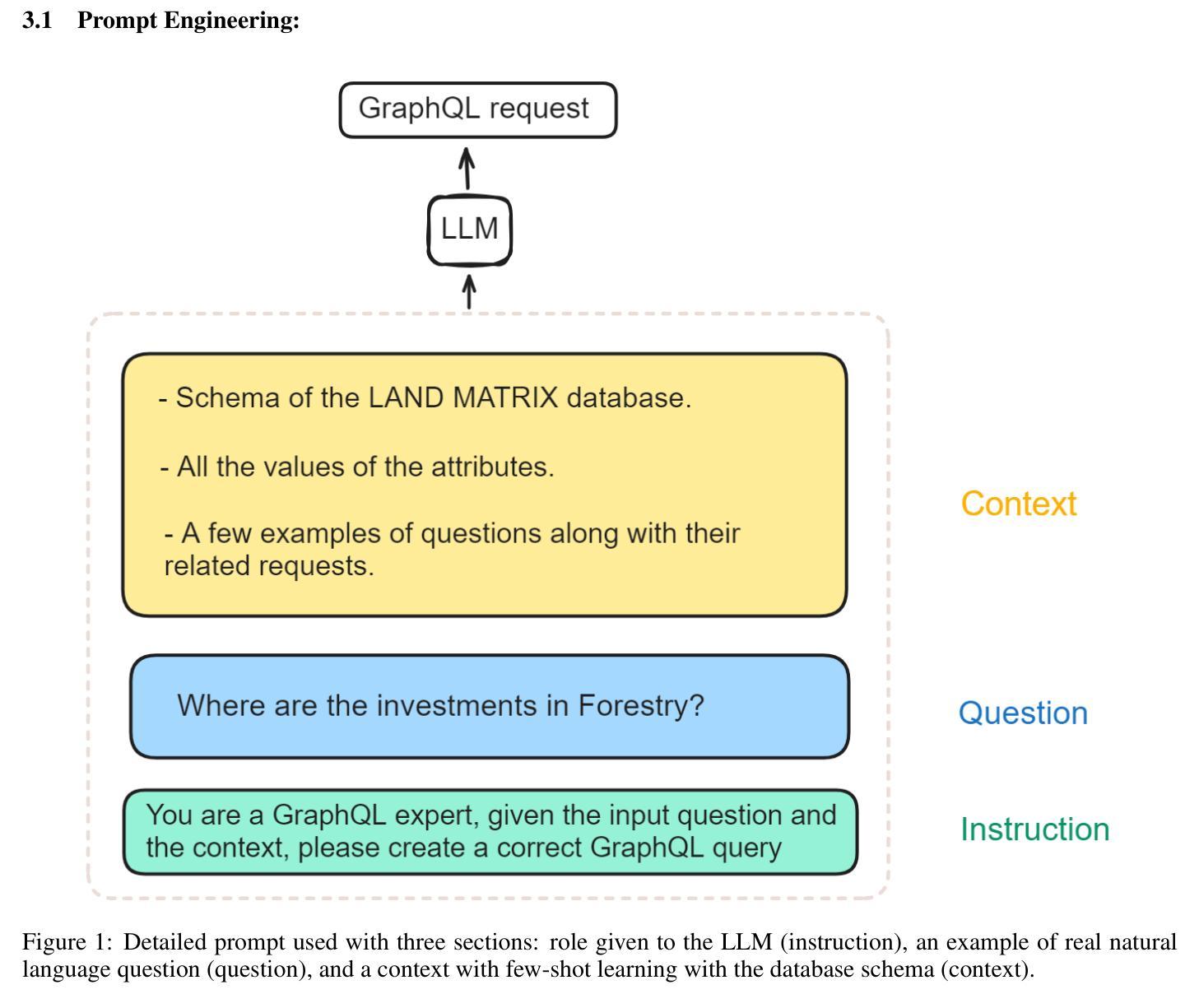

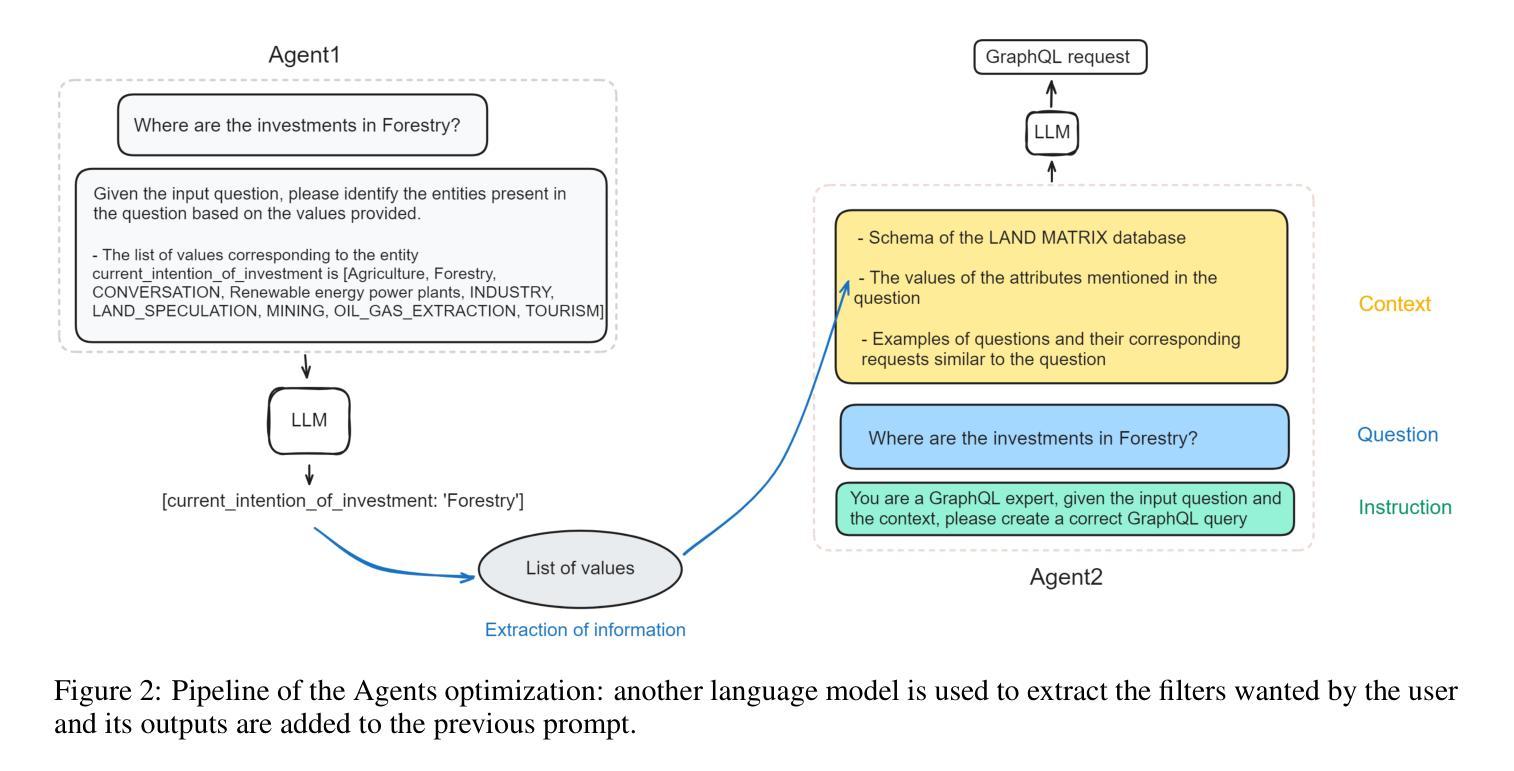

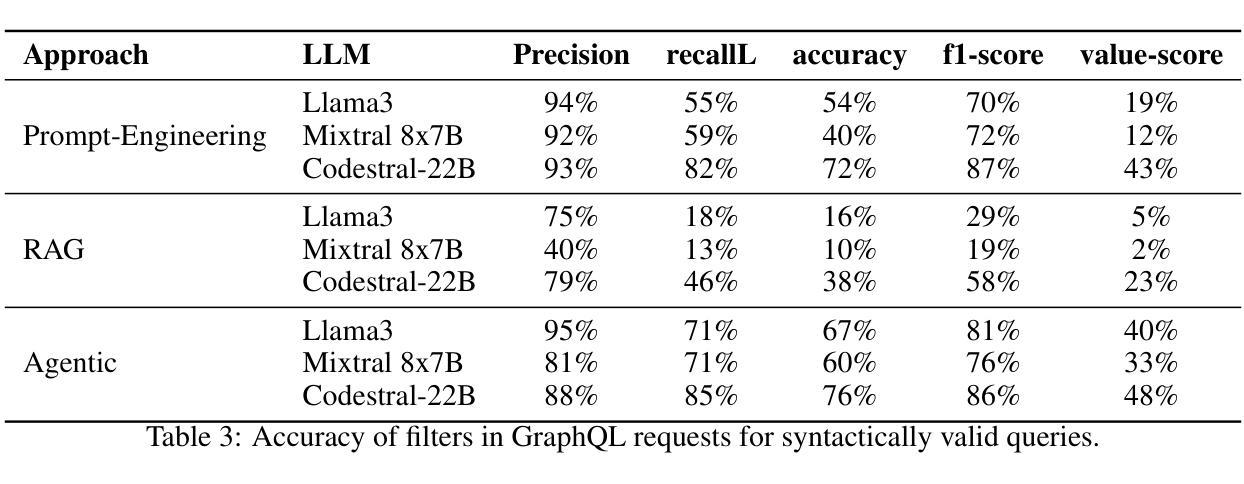

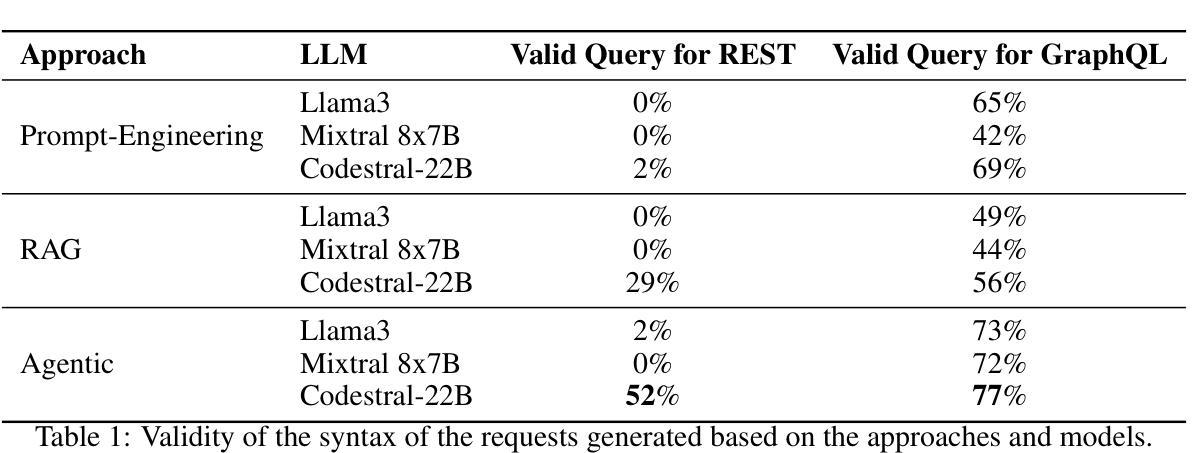

Adaptations of AI models for querying the LandMatrix database in natural language

Authors:Fatiha Ait Kbir, Jérémy Bourgoin, Rémy Decoupes, Marie Gradeler, Roberto Interdonato

The Land Matrix initiative (https://landmatrix.org) and its global observatory aim to provide reliable data on large-scale land acquisitions to inform debates and actions in sectors such as agriculture, extraction, or energy in low- and middle-income countries. Although these data are recognized in the academic world, they remain underutilized in public policy, mainly due to the complexity of access and exploitation, which requires technical expertise and a good understanding of the database schema. The objective of this work is to simplify access to data from different database systems. The methods proposed in this article are evaluated using data from the Land Matrix. This work presents various comparisons of Large Language Models (LLMs) as well as combinations of LLM adaptations (Prompt Engineering, RAG, Agents) to query different database systems (GraphQL and REST queries). The experiments are reproducible, and a demonstration is available online: https://github.com/tetis-nlp/landmatrix-graphql-python.

Land Matrix倡议(https://landmatrix.org)及其全球观测站旨在提供有关大规模土地收购的可靠数据,以支持农业、采矿或能源等领域的辩论和行动,目标针对中低收入和新兴市场国家。虽然这些数据在学术界已获得认可,但在公共政策方面的利用率仍然很低,主要是因为数据获取和利用的复杂性,这需要技术专长和良好的数据库架构理解。这项工作的目标是简化从不同数据库系统访问数据的过程。本文提出的方法使用Land Matrix的数据进行评估。这项工作展示了对各种大型语言模型(LLM)的比较,以及LLM适应的组合(提示工程、RAG、代理)来查询不同的数据库系统(GraphQL和REST查询)。实验具有可重复性,在线演示可访问:https://github.com/tetis-nlp/landmatrix-graphql-python。

论文及项目相关链接

Summary

该文本介绍了Land Matrix倡议及其全球观测站的目标,旨在提供有关大规模土地收购的可靠数据,以支持低收入和中等收入国家在农业、采矿或能源等部门的辩论和行动。尽管这些数据在学术界得到认可,但在公共政策中的利用率却很低,主要原因是数据访问和利用的复杂性,需要技术专长和良好的数据库架构理解。本文的目标是通过简化对不同数据库系统的数据访问来改进此情况。本文对大型语言模型(LLMs)的方法以及使用GraphQL和REST查询进行数据库查询的LLM适应组合(Prompt Engineering、RAG、Agents)进行了比较评估。实验具有可重复性,并且可以在网上进行演示。

Key Takeaways

- Land Matrix倡议致力于提供大规模土地收购的可靠数据,以支持低收入和中等收入国家的农业、采矿和能源等领域的决策。

- 虽然Land Matrix数据在学术界受到认可,但在公共政策中的利用率较低,原因在于数据访问和利用的复杂性。

- 本文的目标是通过简化对不同数据库系统的数据访问来改进这一状况。

- 大型语言模型(LLMs)在查询数据库系统时显示出潜力,包括通过Prompt Engineering、RAG和Agents等适应方式。

- 通过GraphQL和REST查询进行数据库查询是本文研究的重要内容。

- 实验具有可重复性,并且可以在网上进行演示。

点此查看论文截图

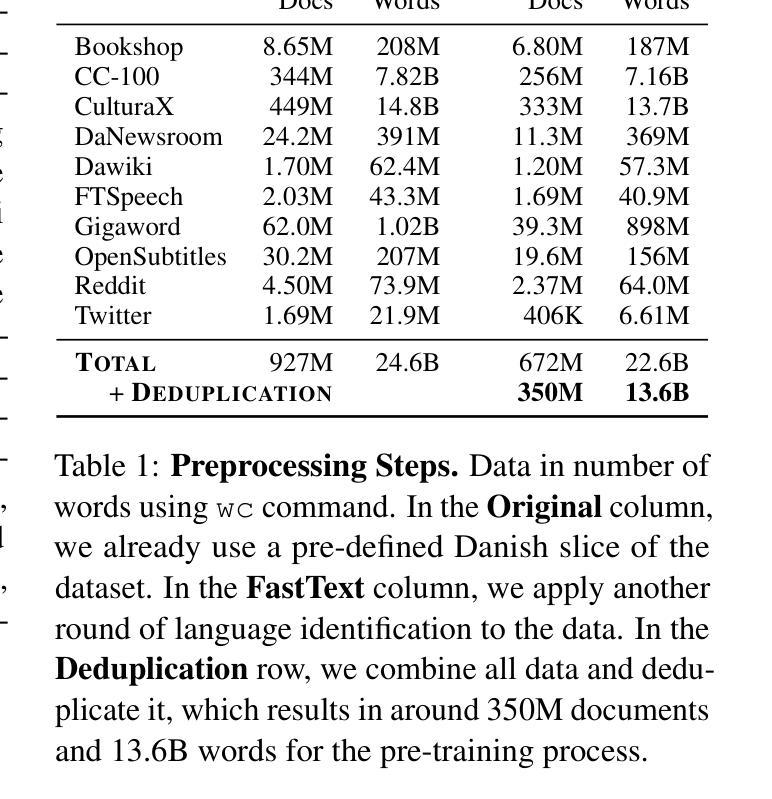

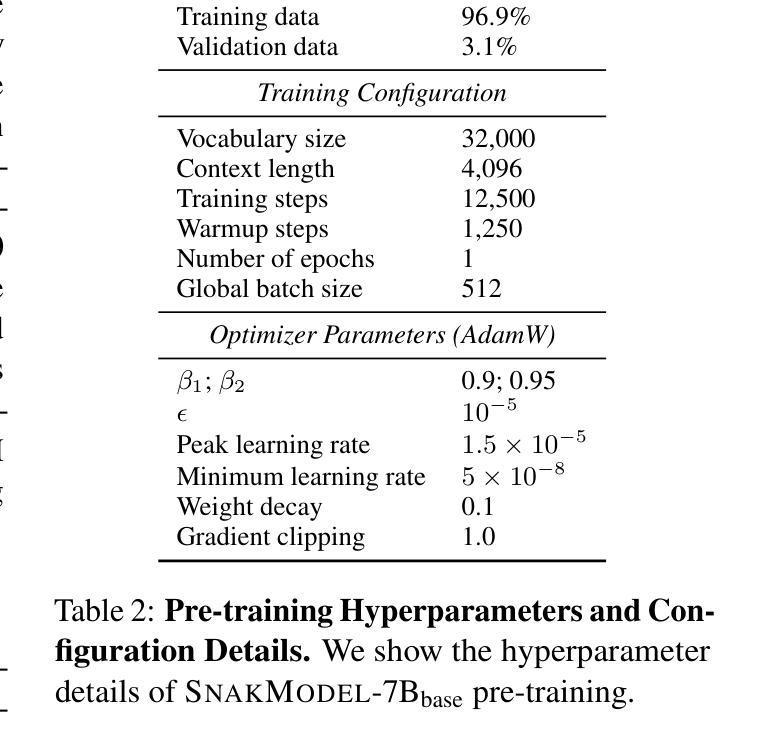

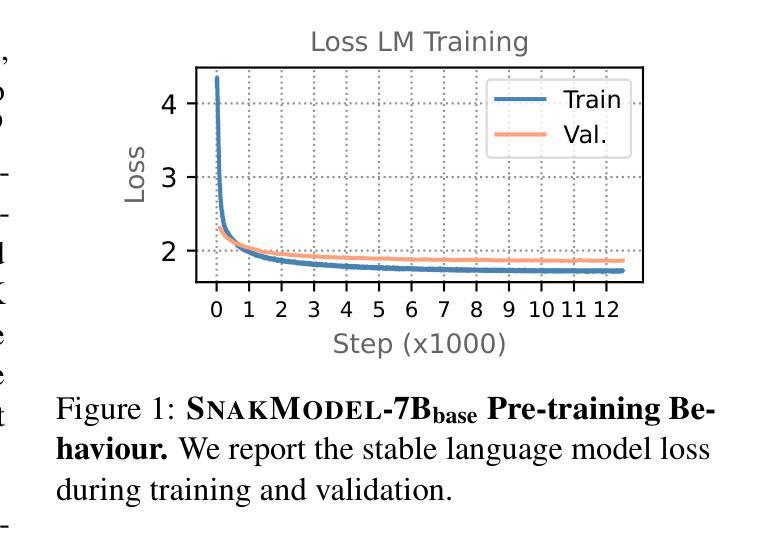

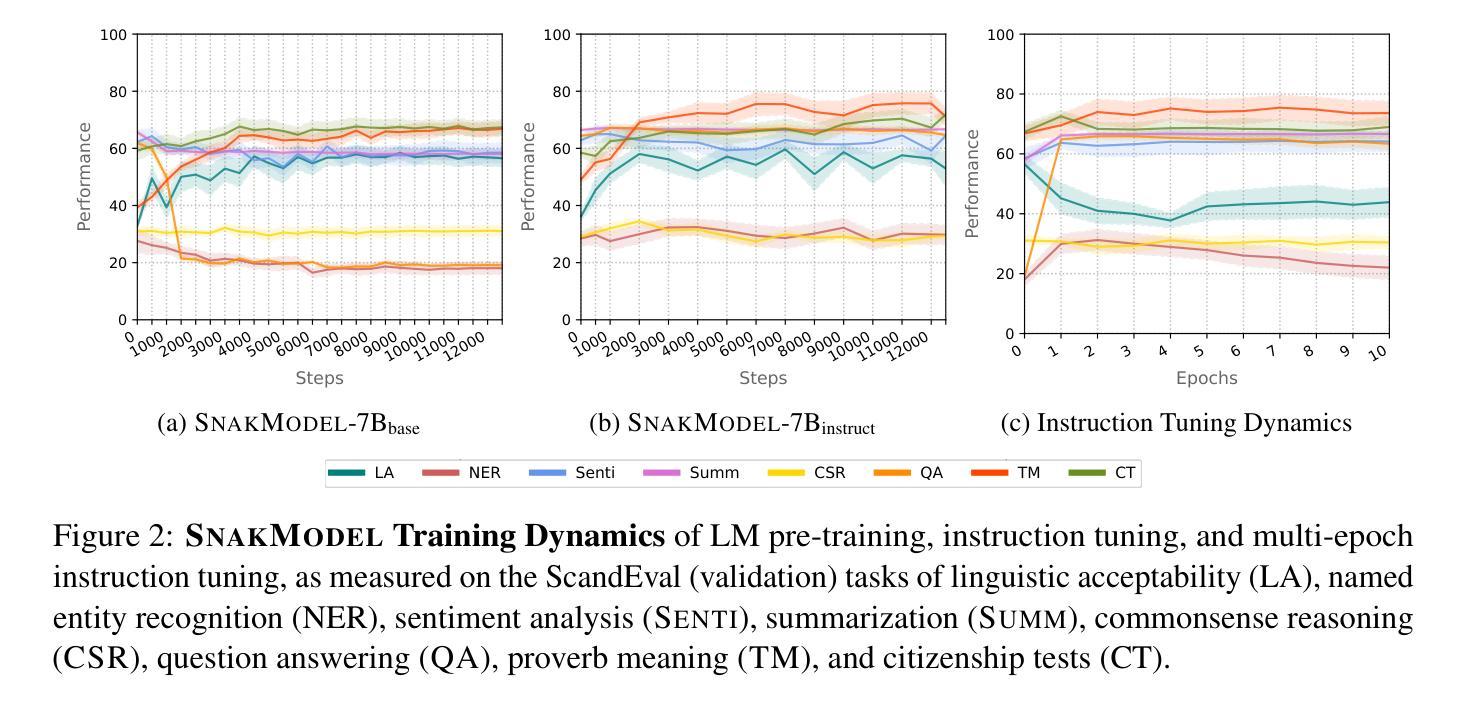

SnakModel: Lessons Learned from Training an Open Danish Large Language Model

Authors:Mike Zhang, Max Müller-Eberstein, Elisa Bassignana, Rob van der Goot

We present SnakModel, a Danish large language model (LLM) based on Llama2-7B, which we continuously pre-train on 13.6B Danish words, and further tune on 3.7M Danish instructions. As best practices for creating LLMs for smaller language communities have yet to be established, we examine the effects of early modeling and training decisions on downstream performance throughout the entire training pipeline, including (1) the creation of a strictly curated corpus of Danish text from diverse sources; (2) the language modeling and instruction-tuning training process itself, including the analysis of intermediate training dynamics, and ablations across different hyperparameters; (3) an evaluation on eight language and culturally-specific tasks. Across these experiments SnakModel achieves the highest overall performance, outperforming multiple contemporary Llama2-7B-based models. By making SnakModel, the majority of our pre-training corpus, and the associated code available under open licenses, we hope to foster further research and development in Danish Natural Language Processing, and establish training guidelines for languages with similar resource constraints.

我们推出了SnakModel,这是一款基于Llama2-7B的丹麦大型语言模型(LLM)。我们对13.6B丹麦单词进行持续预训练,并进一步优化了370万条丹麦指令。由于尚未建立针对小型语言社区的创建LLM的最佳实践,我们在整个训练管道中检查了早期建模和训练决策对下游性能的影响,包括(1)创建来自不同来源的严格编辑的丹麦语文本语料库;(2)语言建模和指令调整训练过程本身,包括对中间训练动态的分析和不同超参数的消融研究;(3)在八个语言和具有文化特色的任务上进行了评估。在这些实验中,SnakModel取得了最高总体性能,超过了多个基于当代Llama2-7B的模型。我们通过公开许可证提供SnakModel、大部分预训练语料库和相关代码,希望促进丹麦自然语言处理的进一步研究和开发,并为具有类似资源约束的语言建立训练指南。

论文及项目相关链接

PDF Accepted at NoDaLiDa 2025 (oral)

Summary

SnakModel是丹麦的大型语言模型(LLM),基于Llama2-7B进行连续预训练,在丹麦语数据上进行了大规模的预训练与微调。该模型关注于建立针对小型语言社区的最佳实践,研究了早期建模和训练决策对下游性能的影响,包括语料库的建立、训练流程和参数分析等。SnakModel在多个丹麦文化和语言特定任务上表现最佳,超越了其他基于Llama2-7B的模型。模型和预训练语料库已公开提供,以推动丹麦自然语言处理的研究和发展,并为类似的语言提供训练指南。

Key Takeaways

- SnakModel是一个基于Llama2-7B的丹麦大型语言模型(LLM)。

- SnakModel在大量丹麦语数据上进行了预训练和微调。

- 研究了建模和训练决策对下游性能的影响,包括语料库的建立、训练流程和参数分析。

- SnakModel在多个文化和语言特定任务上表现优异。

- SnakModel超越了其他基于Llama2-7B的模型在丹麦语任务上的表现。

- SnakModel模型和预训练语料库已公开发布,以推动丹麦自然语言处理的研究和发展。

点此查看论文截图

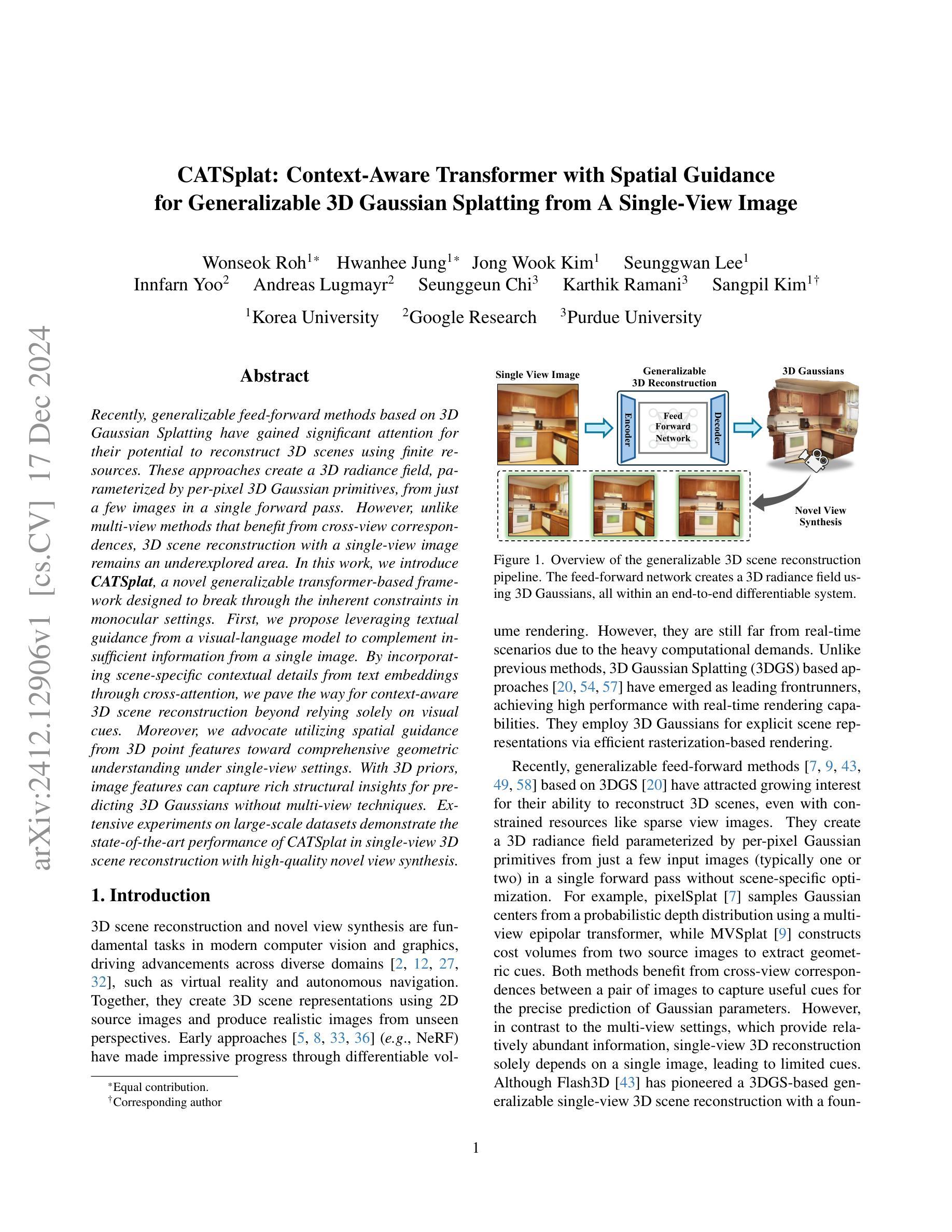

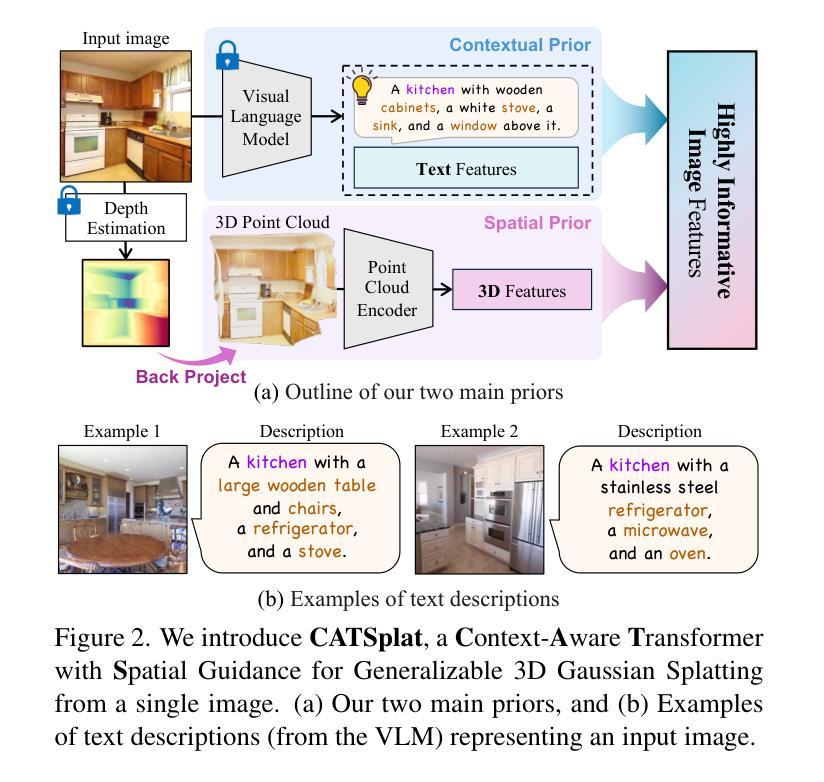

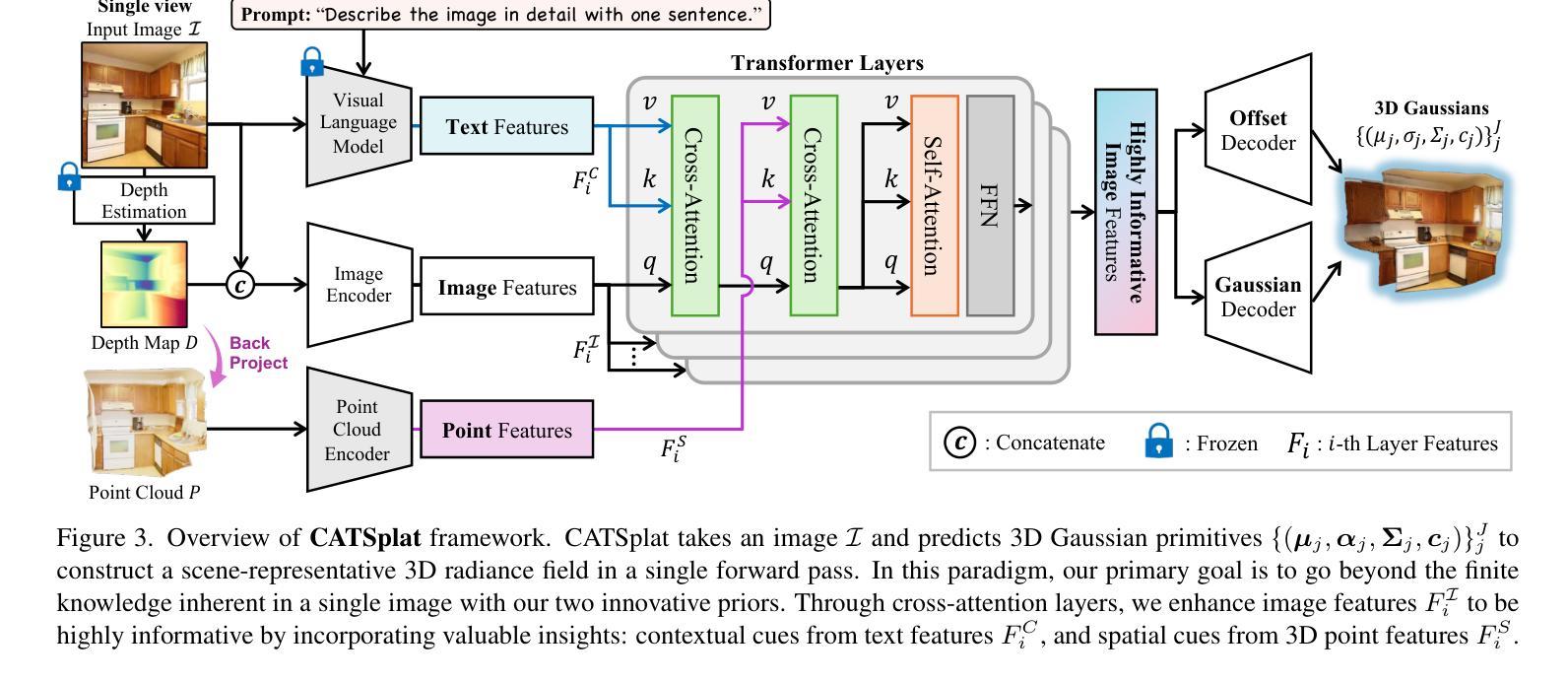

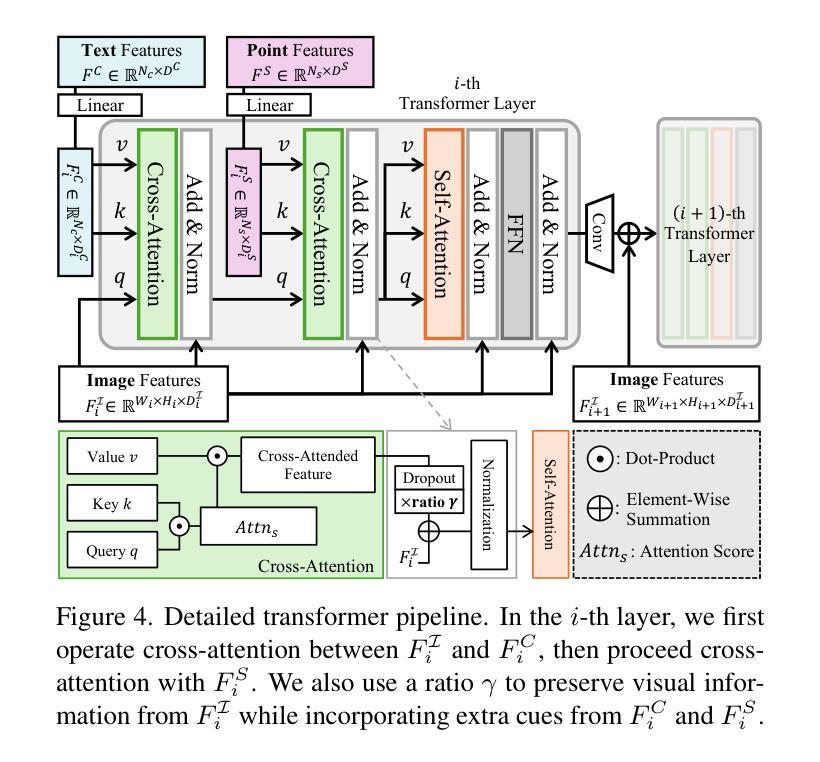

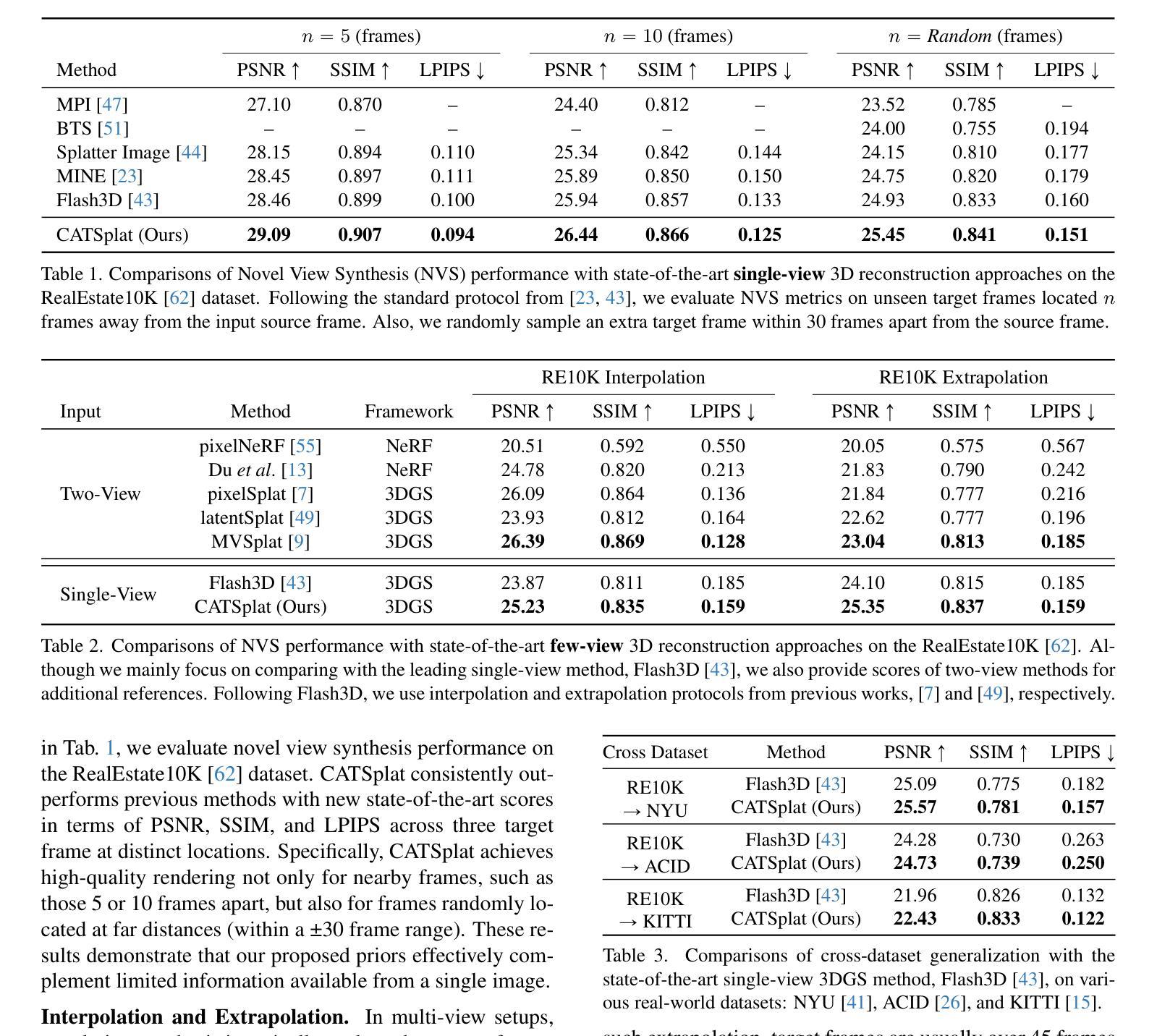

CATSplat: Context-Aware Transformer with Spatial Guidance for Generalizable 3D Gaussian Splatting from A Single-View Image

Authors:Wonseok Roh, Hwanhee Jung, Jong Wook Kim, Seunggwan Lee, Innfarn Yoo, Andreas Lugmayr, Seunggeun Chi, Karthik Ramani, Sangpil Kim

Recently, generalizable feed-forward methods based on 3D Gaussian Splatting have gained significant attention for their potential to reconstruct 3D scenes using finite resources. These approaches create a 3D radiance field, parameterized by per-pixel 3D Gaussian primitives, from just a few images in a single forward pass. However, unlike multi-view methods that benefit from cross-view correspondences, 3D scene reconstruction with a single-view image remains an underexplored area. In this work, we introduce CATSplat, a novel generalizable transformer-based framework designed to break through the inherent constraints in monocular settings. First, we propose leveraging textual guidance from a visual-language model to complement insufficient information from a single image. By incorporating scene-specific contextual details from text embeddings through cross-attention, we pave the way for context-aware 3D scene reconstruction beyond relying solely on visual cues. Moreover, we advocate utilizing spatial guidance from 3D point features toward comprehensive geometric understanding under single-view settings. With 3D priors, image features can capture rich structural insights for predicting 3D Gaussians without multi-view techniques. Extensive experiments on large-scale datasets demonstrate the state-of-the-art performance of CATSplat in single-view 3D scene reconstruction with high-quality novel view synthesis.

最近,基于三维高斯展开的一般性前馈方法因其利用有限资源重建三维场景的潜力而受到广泛关注。这些方法仅通过一次前向传递,从几张图像中创建一个由像素级三维高斯基本体参数化的三维辐射场。然而,不同于受益于跨视图对应关系的多视图方法,单视图图像的3D场景重建仍然是一个未被充分研究的领域。在这项工作中,我们引入了CATSplat,这是一种新型的可扩展的基于transformer的框架,旨在突破单目设置中的固有约束。首先,我们提出利用视觉语言模型的文本指导来补充单一图像中的信息不足。通过结合文本嵌入的场景特定上下文细节进行交叉注意力,我们为基于文本指导的上下文感知三维场景重建铺平了道路,而不仅仅依赖于视觉线索。此外,我们主张利用三维点特征的空间指导来实现单视图设置下的全面几何理解。借助三维先验知识,图像特征可以捕捉丰富的结构洞察信息,以预测三维高斯分布,无需使用多视图技术。在大型数据集上的大量实验表明,CATSplat在单视图三维场景重建中具有最先进的性能,并能进行高质量的新视角合成。

论文及项目相关链接

Summary:

基于3D高斯Splatting的通用前馈方法因其利用有限资源重建3D场景的潜力而受到关注。然而,单视图重建仍是一个未充分研究的领域。在此工作中,我们提出了CATSplat框架,旨在突破单目设置的固有约束。我们提出利用视觉语言模型的文本指导来补充单张图像的信息不足,并通过引入跨注意力机制来结合场景特定的上下文细节,为只依赖视觉线索的情境感知的3D场景重建提供了新思路。同时,我们主张利用来自三维点特征的空间指导,以实现单视图设置下的全面几何理解。在大型数据集上的实验表明,CATSplat在单视图3D场景重建方面表现出卓越性能,能生成高质量的新视角合成图像。

Key Takeaways:

- 基于3D高斯Splatting的通用前馈方法可用于重建3D场景。

- 单视图重建是一个未充分研究的领域,而CATSplat框架旨在解决这一挑战。

- CATSplat利用视觉语言模型的文本指导来补充单张图像的信息不足。

- 通过跨注意力机制结合场景特定的上下文细节,为情境感知的3D场景重建提供新思路。

- 利用三维点特征的空间指导实现单视图设置下的全面几何理解。

- CATSplat在大型数据集上的实验表现出卓越性能。

点此查看论文截图

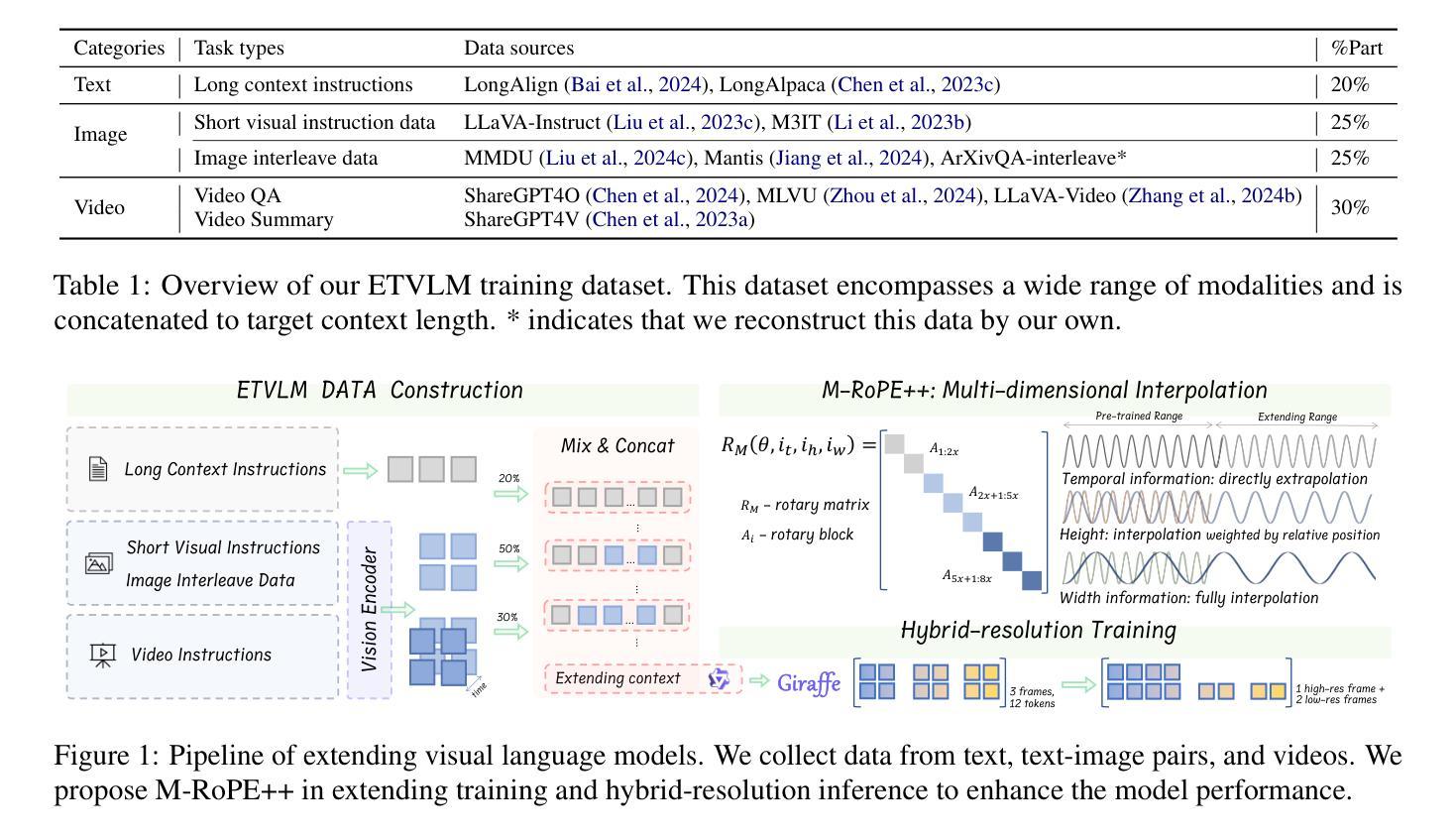

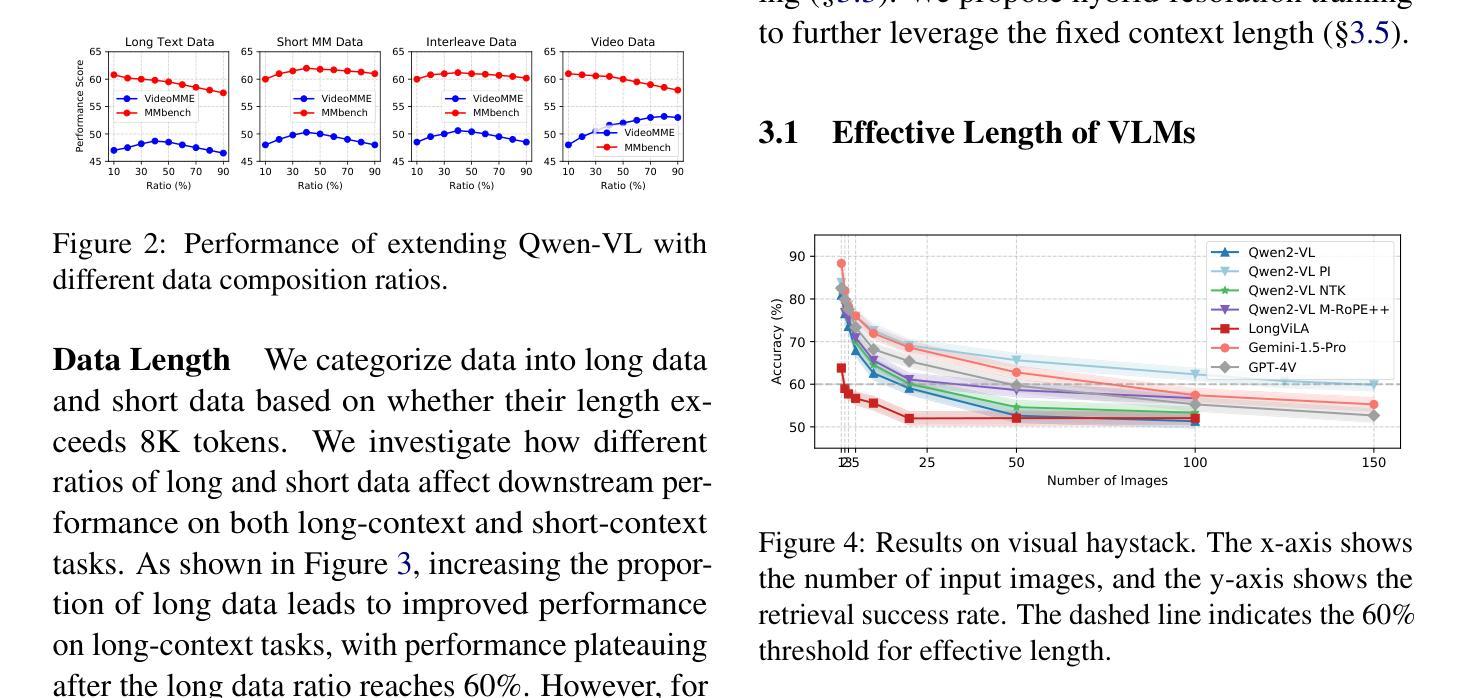

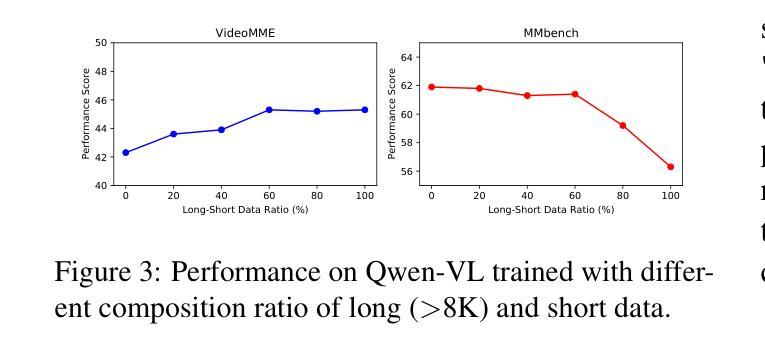

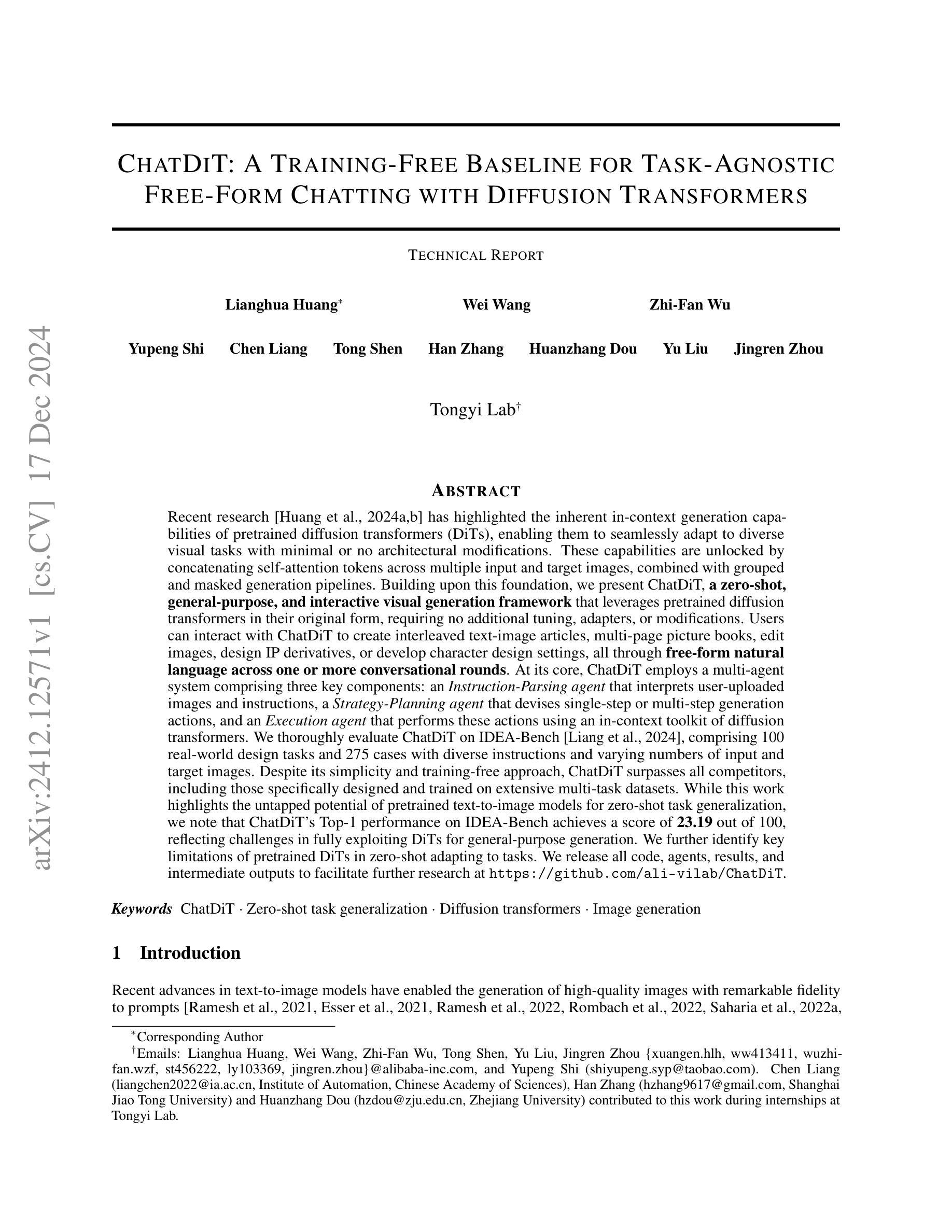

GIRAFFE: Design Choices for Extending the Context Length of Visual Language Models

Authors:Mukai Li, Lei Li, Shansan Gong, Qi Liu

Visual Language Models (VLMs) demonstrate impressive capabilities in processing multimodal inputs, yet applications such as visual agents, which require handling multiple images and high-resolution videos, demand enhanced long-range modeling. Moreover, existing open-source VLMs lack systematic exploration into extending their context length, and commercial models often provide limited details. To tackle this, we aim to establish an effective solution that enhances long context performance of VLMs while preserving their capacities in short context scenarios. Towards this goal, we make the best design choice through extensive experiment settings from data curation to context window extending and utilizing: (1) we analyze data sources and length distributions to construct ETVLM - a data recipe to balance the performance across scenarios; (2) we examine existing position extending methods, identify their limitations and propose M-RoPE++ as an enhanced approach; we also choose to solely instruction-tune the backbone with mixed-source data; (3) we discuss how to better utilize extended context windows and propose hybrid-resolution training. Built on the Qwen-VL series model, we propose Giraffe, which is effectively extended to 128K lengths. Evaluated on extensive long context VLM benchmarks such as VideoMME and Viusal Haystacks, our Giraffe achieves state-of-the-art performance among similarly sized open-source long VLMs and is competitive with commercial model GPT-4V. We will open-source the code, data, and models.

视觉语言模型(VLMs)在处理多模态输入方面展现出令人印象深刻的能力,但对于需要处理多个图像和高分辨率视频的应用(如视觉代理)来说,它们需要增强长程建模能力。此外,现有的开源VLMs缺乏对扩展其上下文长度的系统性探索,而商业模型往往提供有限的信息。为了解决这个问题,我们的目标是建立一个有效的解决方案,提高VLMs在长上下文环境中的性能,同时保持其在短上下文场景中的能力。为此目标,我们通过从数据收集到上下文窗口扩展的广泛实验设置来做出最佳设计选择:首先分析数据源和长度分布以构建ETVLM——一种平衡不同场景性能的基准;其次对现有位置扩展方法进行评估并提出改进的M-RoPE++方法;我们还选择使用混合源数据对主干进行单独指令微调;最后讨论如何更好地利用扩展的上下文窗口并提出混合分辨率训练。基于Qwen-VL系列模型,我们提出了长颈鹿模型(Giraffe),其长度有效扩展到128K。在诸如VideoMME和Viusal Haystacks等广泛的长上下文VLM基准测试中,Giraffe在同类规模的开源长VLM中达到了最先进的性能,并且与商业模型GPT-4V相抗衡。我们将开源代码、数据和模型。

论文及项目相关链接

PDF Working in progress

Summary

大模型在处理多模态输入方面表现出强大的能力,但对于需要处理多张图像和高分辨率视频的应用来说,仍需要提高长期建模能力。为了解决这个问题,研究团队通过一系列方法增强了大模型在长文本语境下的性能,并能在短语境场景中保持原有能力。研究团队设计了ETVLM数据配方来平衡不同场景的性能,提出了M-RoPE++方法来解决现有位置扩展方法的局限性,并选择了混合源数据进行指令微调。此外,该研究还探讨了如何更好地利用扩展的上下文窗口并提出了混合分辨率训练策略。基于Qwen-VL系列模型构建的Giraffe模型被有效地扩展到了128K长度,在多个长语境视觉语言模型基准测试中表现优异。研究团队将开源代码、数据和模型。

Key Takeaways

- 大模型在处理视觉语言任务时表现出强大的能力,但在处理涉及多个图像和高分辨率视频的长文本语境任务时仍面临挑战。

- 研究团队通过一系列策略增强了模型在长文本语境下的性能,这些策略包括设计ETVLM数据配方、提出M-RoPE++方法和混合源数据指令微调等。

- 研究团队探讨了如何更好地利用扩展的上下文窗口,并提出了混合分辨率训练策略来提高模型的性能。

- Giraffe模型是基于Qwen-VL系列模型构建的,能够有效地处理长达128K的文本长度。

- Giraffe模型在多个长文本语境视觉语言模型基准测试中表现优异,达到了业界领先水平。

- 研究团队计划将代码、数据和模型开源共享。这对于视觉语言研究领域是一个积极的贡献,有助于推动该领域的进一步发展。

点此查看论文截图

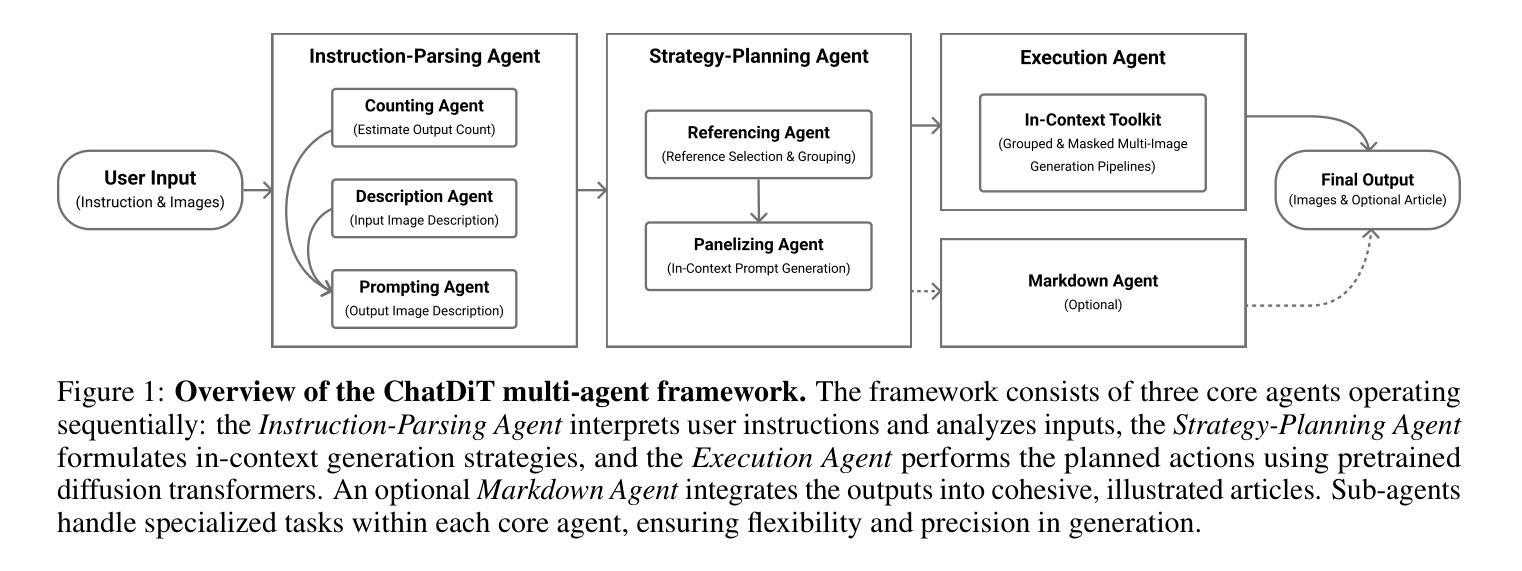

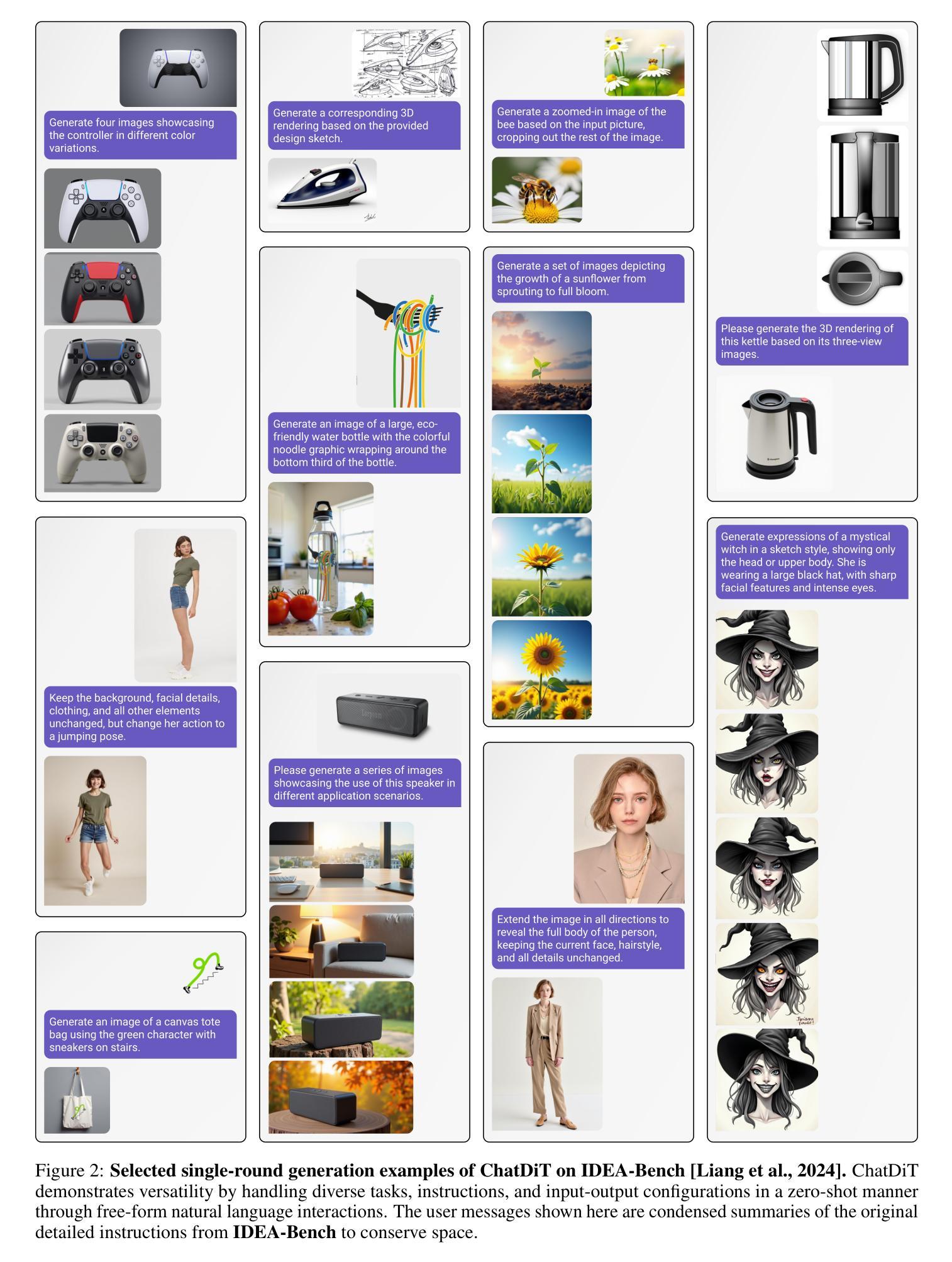

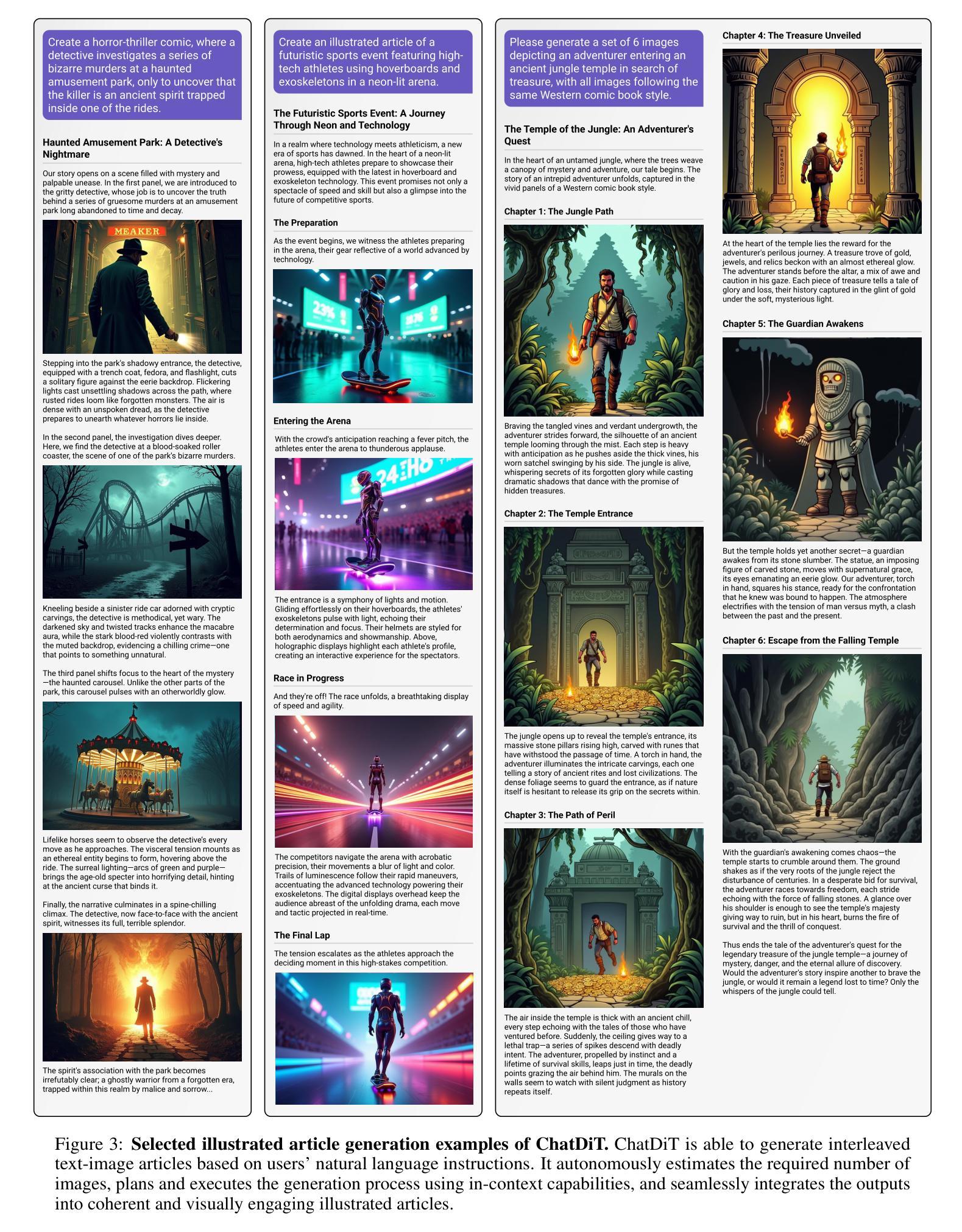

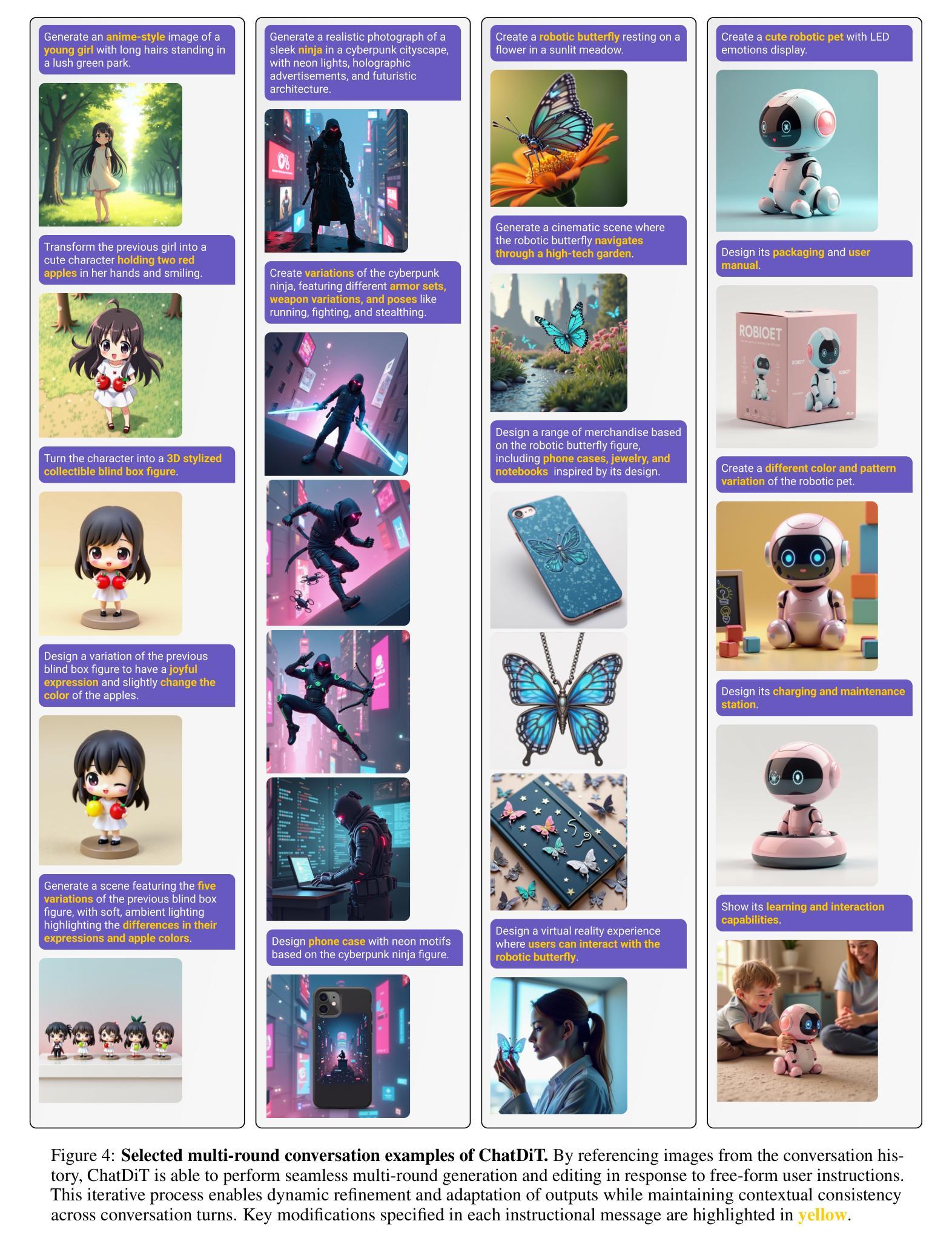

ChatDiT: A Training-Free Baseline for Task-Agnostic Free-Form Chatting with Diffusion Transformers

Authors:Lianghua Huang, Wei Wang, Zhi-Fan Wu, Yupeng Shi, Chen Liang, Tong Shen, Han Zhang, Huanzhang Dou, Yu Liu, Jingren Zhou

Recent research arXiv:2410.15027 arXiv:2410.23775 has highlighted the inherent in-context generation capabilities of pretrained diffusion transformers (DiTs), enabling them to seamlessly adapt to diverse visual tasks with minimal or no architectural modifications. These capabilities are unlocked by concatenating self-attention tokens across multiple input and target images, combined with grouped and masked generation pipelines. Building upon this foundation, we present ChatDiT, a zero-shot, general-purpose, and interactive visual generation framework that leverages pretrained diffusion transformers in their original form, requiring no additional tuning, adapters, or modifications. Users can interact with ChatDiT to create interleaved text-image articles, multi-page picture books, edit images, design IP derivatives, or develop character design settings, all through free-form natural language across one or more conversational rounds. At its core, ChatDiT employs a multi-agent system comprising three key components: an Instruction-Parsing agent that interprets user-uploaded images and instructions, a Strategy-Planning agent that devises single-step or multi-step generation actions, and an Execution agent that performs these actions using an in-context toolkit of diffusion transformers. We thoroughly evaluate ChatDiT on IDEA-Bench arXiv:2412.11767, comprising 100 real-world design tasks and 275 cases with diverse instructions and varying numbers of input and target images. Despite its simplicity and training-free approach, ChatDiT surpasses all competitors, including those specifically designed and trained on extensive multi-task datasets. We further identify key limitations of pretrained DiTs in zero-shot adapting to tasks. We release all code, agents, results, and intermediate outputs to facilitate further research at https://github.com/ali-vilab/ChatDiT

最近的arXiv:2410.15027和arXiv:2410.23775研究突出了预训练扩散变压器(DiTs)的内在上下文生成能力,通过拼接多个输入和目标图像的自注意令牌,结合分组和掩码生成管道,使它们能够无缝适应各种视觉任务,而无需进行最小的架构修改。

在此基础上,我们提出了ChatDiT,这是一个零样本、通用、交互式的视觉生成框架,它利用预训练的扩散变压器,无需额外的调整、适配器或修改。用户可以与ChatDiT互动,创建交互式的文本-图像文章、多页图画书、编辑图像、设计知识产权衍生品或开发角色设计设置,所有这些都可以通过一轮或多轮对话的自由形式自然语言来实现。

论文及项目相关链接

PDF Tech report. Project page: https://ali-vilab.github.io/ChatDiT-Page/

Summary

预训练扩散转换器(DiT)具有内在上下文生成能力,可轻松适应多种视觉任务,无需进行任何架构修改。基于此,提出零样本、通用、交互式视觉生成框架ChatDiT,利用原始预训练扩散转换器,无需额外调整、适配或修改。用户可与ChatDiT交互,创建文本图像文章、多页图画书、编辑图像、设计IP衍生品或开发角色设计设置等,均通过一轮或多轮对话的自由形式自然语言实现。其核心采用多代理系统,包括解释用户上传的图像和指令的指令解析代理、设计单步或多步生成动作的规划代理以及使用扩散转换器工具集执行这些动作的执行代理。在IDEA-Bench上全面评估ChatDiT,包含100个真实世界设计任务和275个具有不同指令和输入目标图像的情况。尽管采用简单且无需训练的方法,ChatDiT仍超越所有竞争对手,包括那些专门设计和训练的多任务数据集。

Key Takeaways

- 预训练扩散转换器(DiT)具有上下文生成能力,可适应多种视觉任务。

- ChatDiT是一个零样本、通用、交互式的视觉生成框架,利用预训练扩散转换器。

- ChatDiT允许用户通过自然语言交互创建文本图像文章、图画书、编辑图像等。

- ChatDiT采用多代理系统,包括指令解析、策略规划和执行代理。

- ChatDiT在多种真实世界设计任务上表现超越竞争对手。

- ChatDiT无需特殊训练或调整,即可有效利用预训练扩散转换器。

点此查看论文截图

LinguaLIFT: An Effective Two-stage Instruction Tuning Framework for Low-Resource Language Tasks

Authors:Hongbin Zhang, Kehai Chen, Xuefeng Bai, Yang Xiang, Min Zhang

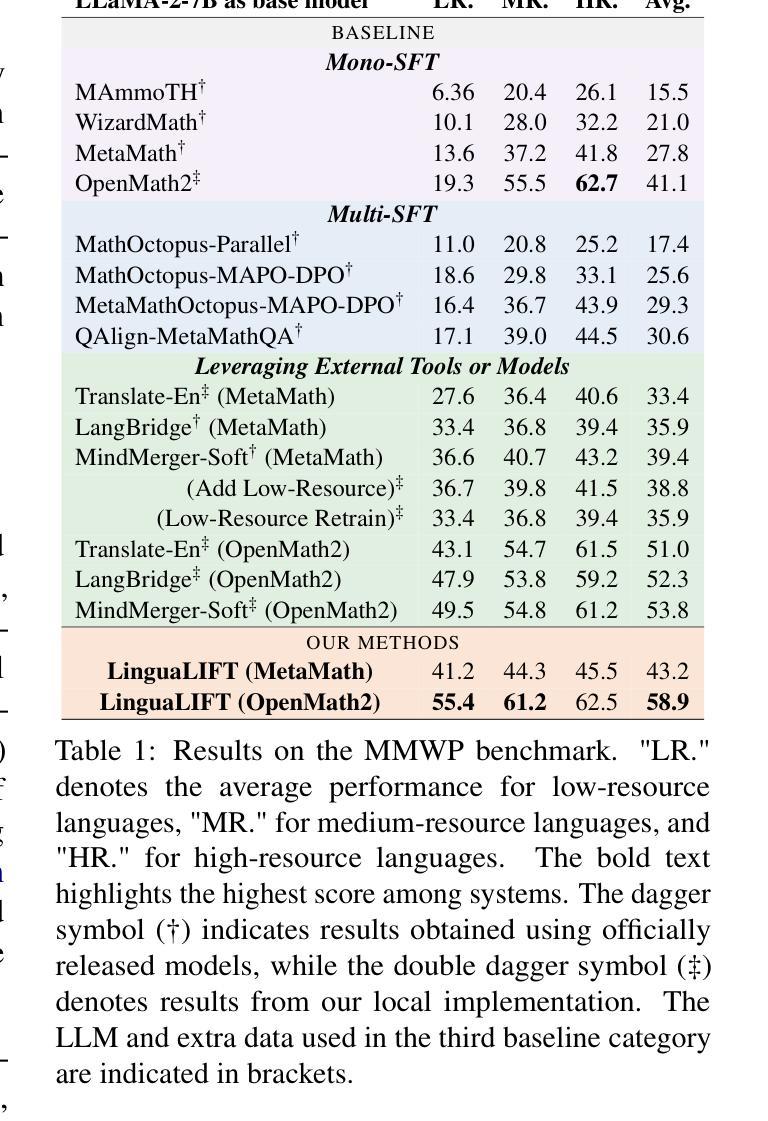

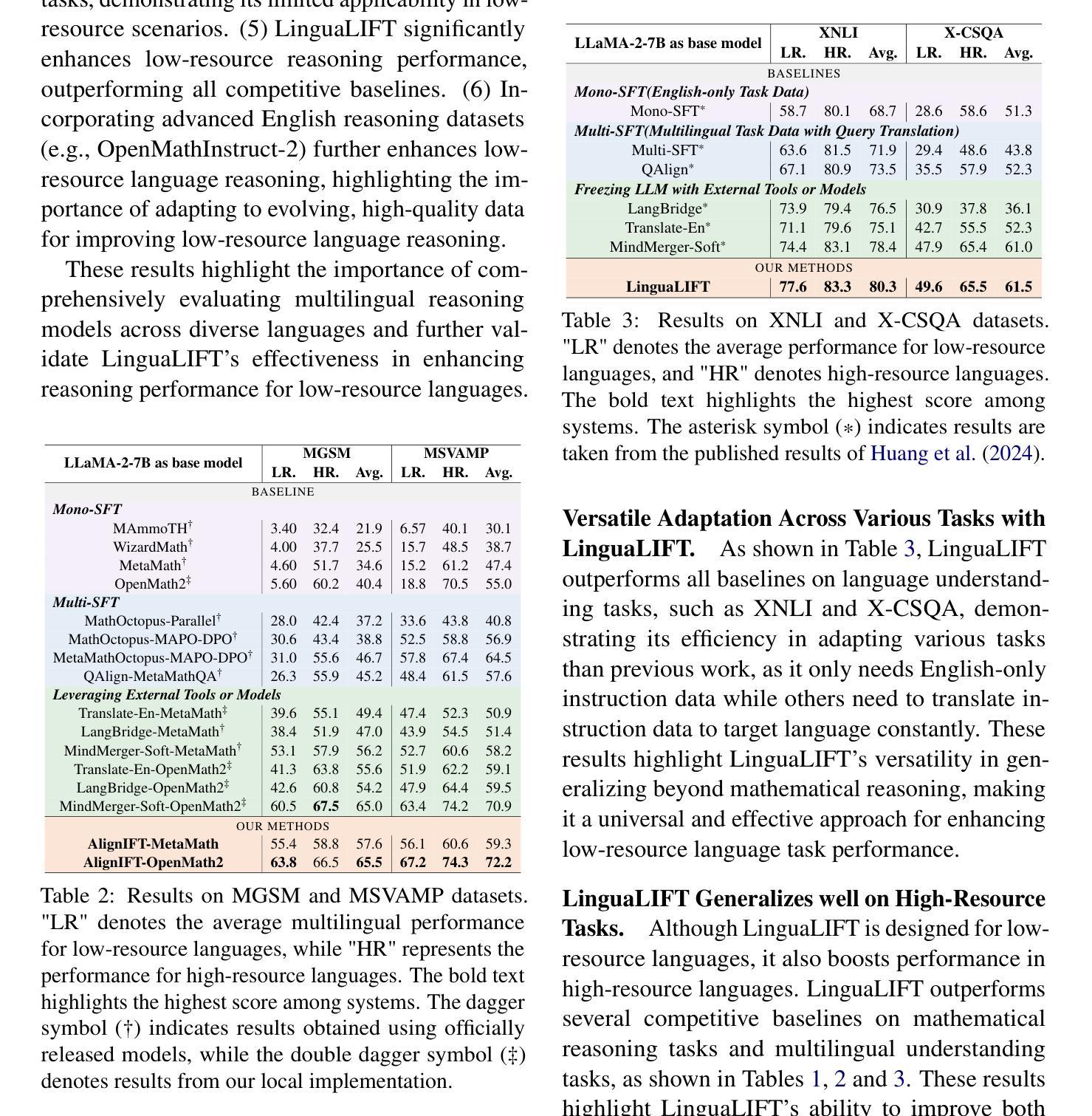

Large language models (LLMs) have demonstrated impressive multilingual understanding and reasoning capabilities, driven by extensive pre-training multilingual corpora and fine-tuning instruction data. However, a performance gap persists between high-resource and low-resource language tasks due to language imbalance in the pre-training corpus, even using more low-resource data during fine-tuning. To alleviate this issue, we propose LinguaLIFT, a two-stage instruction tuning framework for advancing low-resource language tasks. An additional language alignment layer is first integrated into the LLM to adapt a pre-trained multilingual encoder, thereby enhancing multilingual alignment through code-switched fine-tuning. The second stage fine-tunes LLM with English-only instruction data while freezing the language alignment layer, allowing LLM to transfer task-specific capabilities from English to low-resource language tasks. Additionally, we introduce the Multilingual Math World Problem (MMWP) benchmark, which spans 21 low-resource, 17 medium-resource, and 10 high-resource languages, enabling comprehensive evaluation of multilingual reasoning. Experimental results show that LinguaLIFT outperforms several competitive baselines across MMWP and other widely used benchmarks.

大型语言模型(LLM)已经展现出了令人印象深刻的多语言理解和推理能力,这得益于大量的预训练多语言语料库和精细调整指令数据的驱动。然而,由于预训练语料库中的语言不平衡,高资源语言任务和低资源语言任务之间仍然存在性能差距,即使在微调过程中使用了更多的低资源数据。为了缓解这个问题,我们提出了LinguaLIFT,这是一个两阶段的指令调整框架,旨在推进低资源语言任务。首先,我们将一个额外的语言对齐层集成到LLM中,以适应预训练的多语言编码器,从而通过代码切换微调增强多语言对齐。第二阶段使用仅英语的指令数据对LLM进行微调,同时冻结语言对齐层,使LLM能够从英语向低资源语言任务转移特定任务的能力。此外,我们还介绍了多语言数学世界问题(MMWP)基准测试,它涵盖21种低资源、17种中等资源和10种高资源语言,能够全面评估多语言推理。实验结果表明,LinguaLIFT在MMWP和其他广泛使用的基准测试上超越了几个竞争基线。

论文及项目相关链接

Summary

多语言大型语言模型(LLM)具备强大的跨语言理解和推理能力,这得益于大规模预训练多语言语料库和精细调整指令数据的支持。然而,由于预训练语料库中的语言不平衡,高资源语言与低资源语言任务之间仍存在性能差距,即使在微调过程中使用了更多的低资源数据。为解决这一问题,提出了LinguaLIFT这一两阶段指令调整框架,旨在提升低资源语言任务的表现。首先,通过集成额外的语言对齐层到LLM中,以适应预训练的多语言编码器,通过代码切换微调增强多语言对齐。第二阶段仅使用英语指令数据对LLM进行微调,同时冻结语言对齐层,使LLM能够将特定任务的能力从英语转移到低资源语言任务。此外,还引入了跨21种低资源、17种中等资源和10种高资源语言的Multilingual Math World Problem(MMWP)基准测试,能够全面评估多语言推理能力。实验结果表明,LinguaLIFT在MMWP和其他广泛使用的基准测试上优于几个竞争基线。

Key Takeaways

- LLM具备强大的多语言理解和推理能力,但仍存在高资源与低资源语言任务间的性能差距。

- LinguaLIFT是一个两阶段指令调整框架,旨在提升低资源语言任务的表现。

- LinguaLIFT通过集成语言对齐层适应预训练的多语言编码器,增强多语言对齐。

- 第二阶段微调仅使用英语指令数据,并冻结语言对齐层,实现任务能力从英语到低资源语言任务的转移。

- 引入了MMWP基准测试,能够全面评估多语言推理能力。

- LinguaLIFT在多个基准测试上表现出优异的性能。

点此查看论文截图

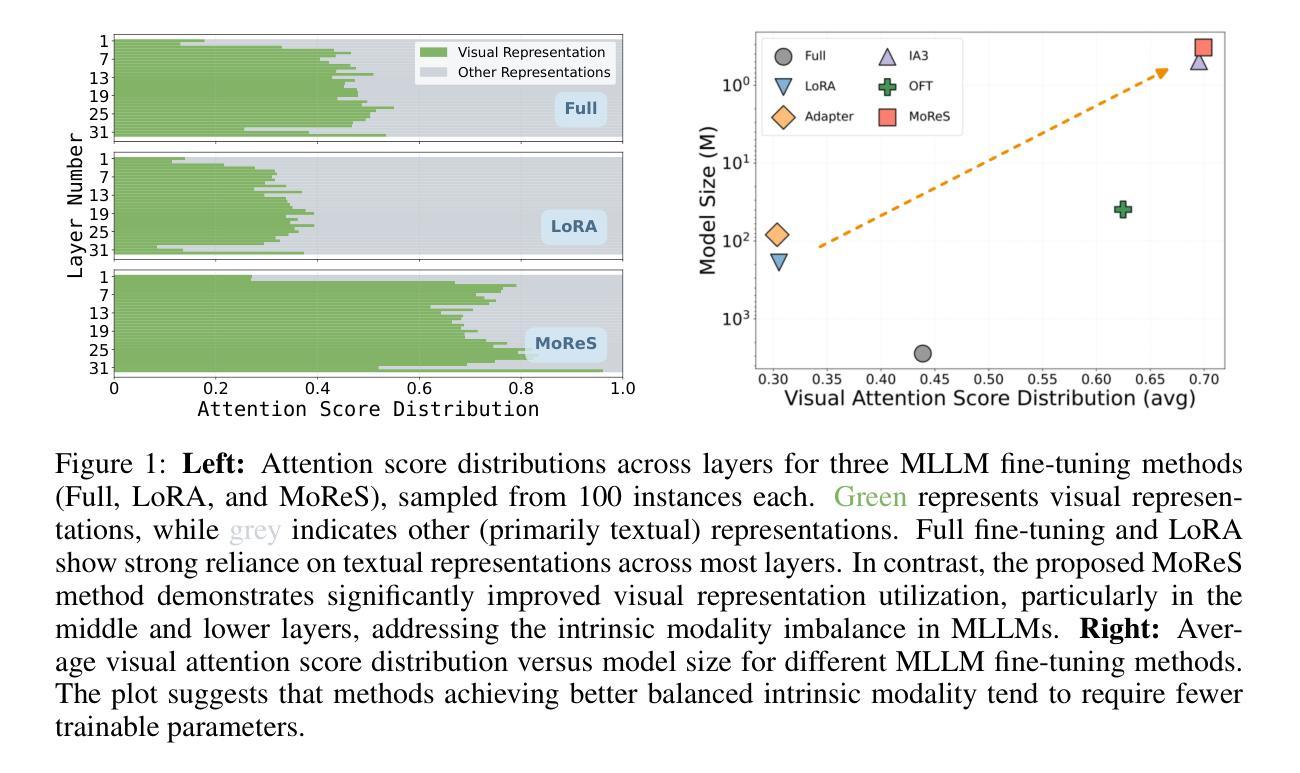

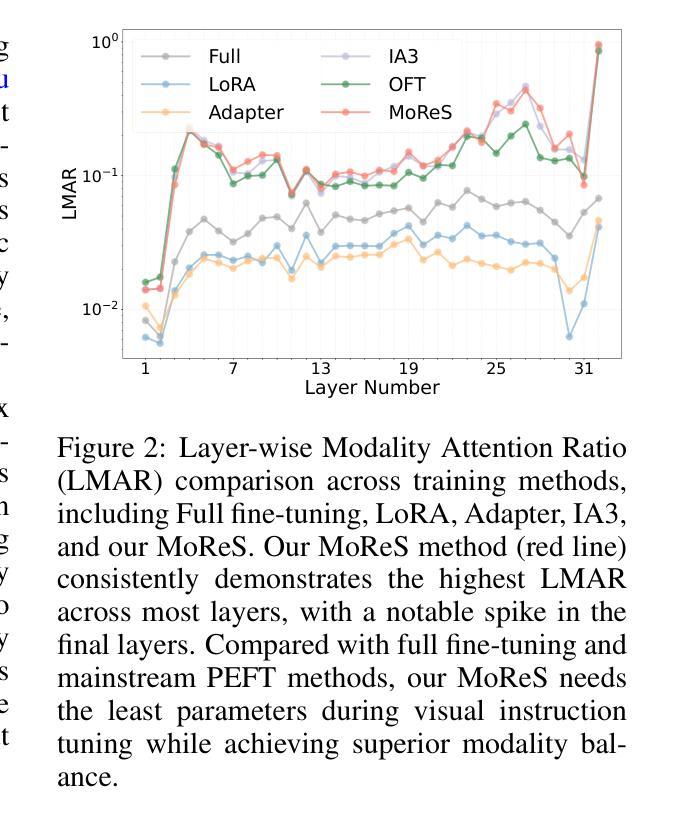

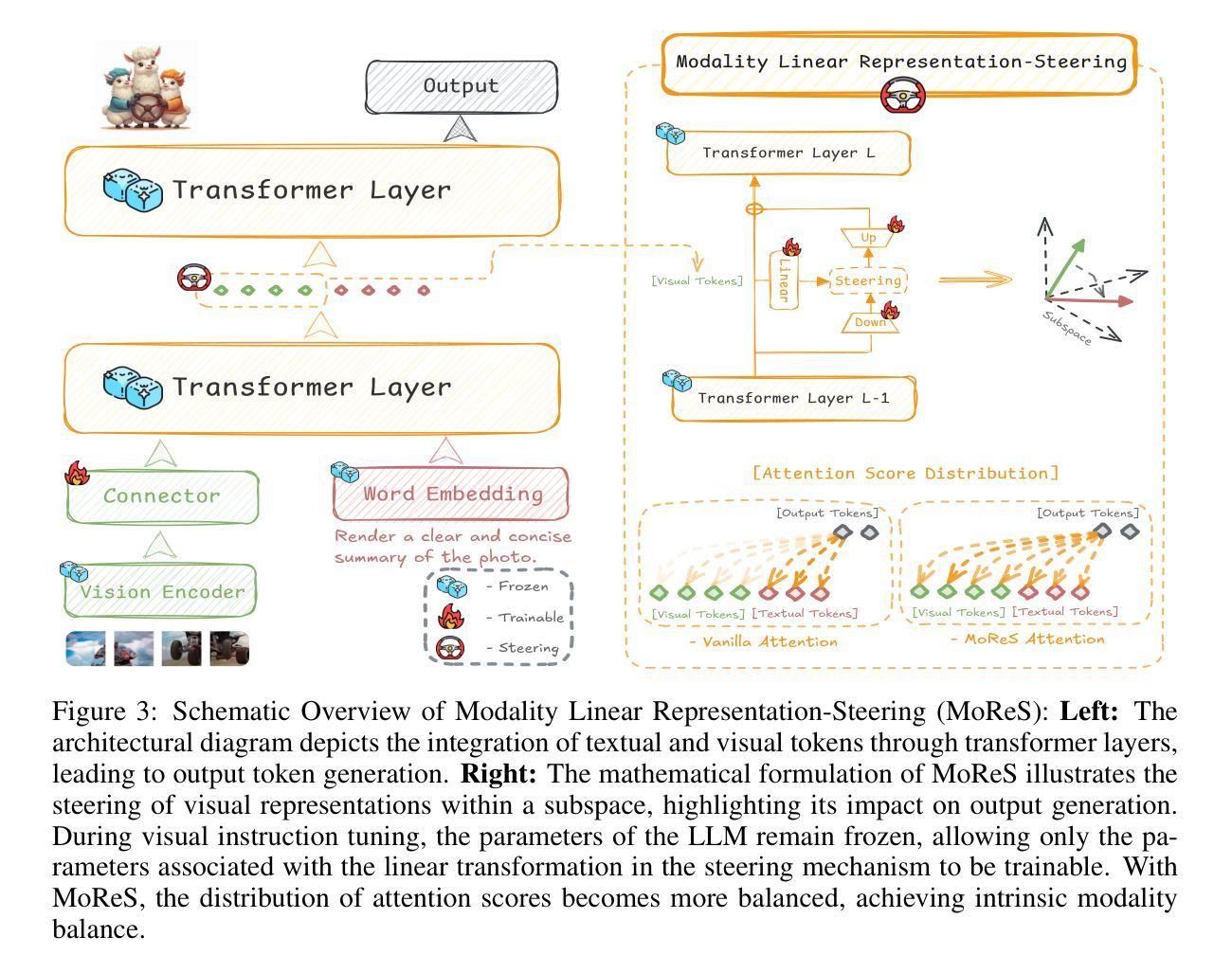

Visual Instruction Tuning with 500x Fewer Parameters through Modality Linear Representation-Steering

Authors:Jinhe Bi, Yujun Wang, Haokun Chen, Xun Xiao, Artur Hecker, Volker Tresp, Yunpu Ma

Multimodal Large Language Models (MLLMs) have significantly advanced visual tasks by integrating visual representations into large language models (LLMs). The textual modality, inherited from LLMs, equips MLLMs with abilities like instruction following and in-context learning. In contrast, the visual modality enhances performance in downstream tasks by leveraging rich semantic content, spatial information, and grounding capabilities. These intrinsic modalities work synergistically across various visual tasks. Our research initially reveals a persistent imbalance between these modalities, with text often dominating output generation during visual instruction tuning. This imbalance occurs when using both full fine-tuning and parameter-efficient fine-tuning (PEFT) methods. We then found that re-balancing these modalities can significantly reduce the number of trainable parameters required, inspiring a direction for further optimizing visual instruction tuning. We introduce Modality Linear Representation-Steering (MoReS) to achieve the goal. MoReS effectively re-balances the intrinsic modalities throughout the model, where the key idea is to steer visual representations through linear transformations in the visual subspace across each model layer. To validate our solution, we composed LLaVA Steering, a suite of models integrated with the proposed MoReS method. Evaluation results show that the composed LLaVA Steering models require, on average, 500 times fewer trainable parameters than LoRA needs while still achieving comparable performance across three visual benchmarks and eight visual question-answering tasks. Last, we present the LLaVA Steering Factory, an in-house developed platform that enables researchers to quickly customize various MLLMs with component-based architecture for seamlessly integrating state-of-the-art models, and evaluate their intrinsic modality imbalance.

多模态大型语言模型(MLLMs)通过将视觉表示集成到大型语言模型(LLMs)中,显著地推进了视觉任务的发展。文本模态继承自LLMs,使MLLMs具备指令遵循和上下文学习等功能。相比之下,视觉模态通过利用丰富的语义内容、空间信息和定位能力,提高了下游任务的性能。这些内在模态在不同的视觉任务中协同工作。我们的研究最初揭示了这些模态之间持久的失衡,文本通常在视觉指令调整过程中主导输出生成。这种不平衡在使用全微调(Full Fine-tuning)和参数高效微调(PEFT)方法时都会发生。然后我们发现重新平衡这些模态可以大大减少所需的训练参数数量,这为进一步优化视觉指令调整提供了方向。我们引入模态线性表示转向(MoReS)来实现这一目标。MoReS有效地在模型中重新平衡了内在模态,其关键思想是通过每个模型层的视觉子空间的线性变换来引导视觉表示。为了验证我们的解决方案,我们开发了LLaVA转向套件,这是一套结合了所提出的MoReS方法的模型。评估结果表明,LLaVA转向套件模型平均需要比LoRA少500倍的可训练参数,同时在三个视觉基准测试和八个视觉问答任务中仍表现出相当的性能。最后,我们推出了LLaVA转向工厂,这是一个内部开发的平台,使研究人员能够迅速定制各种MLLMs,通过基于组件的架构无缝集成最新模型,并评估其内在模态失衡情况。

论文及项目相关链接

Summary

本文研究了多模态大型语言模型(MLLMs)在集成视觉表征到大型语言模型(LLMs)后对视觉任务的显著改进。文本模态赋予MLLMs指令遵循和上下文学习的能力,而视觉模态则通过丰富的语义内容、空间信息和接地能力增强下游任务性能。研究发现,在视觉指令微调中存在模态之间的持久不平衡,文本往往主导输出生成。通过重新平衡这些模态,可以显著减少所需的训练参数数量。为此,引入了模态线性表示转向(MoReS)方法,该方法有效地平衡了模型中的内在模态,并通过线性变换引导视觉表示。提出的LLaVA Steering方法结合了MoReS,并在三个视觉基准测试和八个视觉问答任务上实现了良好的性能,同时减少了所需的训练参数。最后,开发了LLaVA Steering Factory平台,方便研究人员快速定制各种MLLMs,并评估其内在模态不平衡情况。

Key Takeaways

- MLLMs通过集成视觉表征显著提高了视觉任务性能。

- MLLMs具备文本和视觉两种模态,分别赋予模型不同的能力。

- 在视觉指令微调中存在文本和视觉模态的不平衡。

- 模态重新平衡可以显著减少训练参数需求。

- 引入了MoReS方法来实现模态的平衡,并通过线性变换引导视觉表示。

- LLaVA Steering方法结合了MoReS,在多个视觉任务上实现了良好的性能并减少了训练参数。

点此查看论文截图

The Open Source Advantage in Large Language Models (LLMs)

Authors:Jiya Manchanda, Laura Boettcher, Matheus Westphalen, Jasser Jasser

Large language models (LLMs) mark a key shift in natural language processing (NLP), having advanced text generation, translation, and domain-specific reasoning. Closed-source models like GPT-4, powered by proprietary datasets and extensive computational resources, lead with state-of-the-art performance today. However, they face criticism for their “black box” nature and for limiting accessibility in a manner that hinders reproducibility and equitable AI development. By contrast, open-source initiatives like LLaMA and BLOOM prioritize democratization through community-driven development and computational efficiency. These models have significantly reduced performance gaps, particularly in linguistic diversity and domain-specific applications, while providing accessible tools for global researchers and developers. Notably, both paradigms rely on foundational architectural innovations, such as the Transformer framework by Vaswani et al. (2017). Closed-source models excel by scaling effectively, while open-source models adapt to real-world applications in underrepresented languages and domains. Techniques like Low-Rank Adaptation (LoRA) and instruction-tuning datasets enable open-source models to achieve competitive results despite limited resources. To be sure, the tension between closed-source and open-source approaches underscores a broader debate on transparency versus proprietary control in AI. Ethical considerations further highlight this divide. Closed-source systems restrict external scrutiny, while open-source models promote reproducibility and collaboration but lack standardized auditing documentation frameworks to mitigate biases. Hybrid approaches that leverage the strengths of both paradigms are likely to shape the future of LLM innovation, ensuring accessibility, competitive technical performance, and ethical deployment.

大型语言模型(LLM)标志着自然语言处理(NLP)的关键转变,具备先进的文本生成、翻译和领域特定推理能力。像GPT-4这样的封闭源模型,依靠专有数据集和大量的计算资源,目前处于最先进的性能水平。然而,它们因“黑箱”性质和访问限制而受到批评,阻碍了可重复性和公平的AI发展。相比之下,LLaMA和BLOOM等开源倡议通过社区驱动的开发和计算效率来实现民主化。这些模型在语言多样性和领域特定应用等方面显著缩小了性能差距,为全球研究者和开发者提供了可访问的工具。值得注意的是,这两种范式都依赖于基础架构创新,如Vaswani等人提出的Transformer框架(2017年)。封闭源模型通过有效扩展而卓越,而开源模型则适应于代表性不足的语言和领域的实际应用。低秩适应(LoRA)和指令调整数据集等技术使开源模型能够在有限的资源下实现具有竞争力的结果。可以肯定的是,封闭源和开源方法之间的紧张关系突显了人工智能中透明度和专有控制之间更广泛的辩论。道德考量进一步突显了这一分歧。封闭源系统限制外部审查,而开源模型促进可重复性和协作,但缺乏标准化的审计文档框架来减轻偏见。很可能利用两种范式的优势相结合的混合方法将塑造LLM创新的未来,确保可访问性、具有竞争力的技术性能和道德部署。

论文及项目相关链接

PDF 7 pages, 0 figures

Summary

大型语言模型(LLM)在自然语言处理(NLP)领域掀起了一场重大变革,展现出强大的文本生成、翻译和领域特定推理能力。目前,以GPT-4为代表的封闭源模型凭借专有数据集和强大的计算资源达到了业界领先性能,但也因“黑箱”性质和限制可访问性而受到批评,阻碍了可重复性和公平的人工智能发展。相比之下,以LLaMA和BLOOM为代表的开源倡议通过社区驱动开发和计算效率来实现民主化,在缩小性能差距方面取得了显著进展,特别是在语言多样性和领域特定应用方面。两者都依赖于基础架构创新,如Vaswani等人在2017年提出的Transformer框架。尽管存在争论和伦理考量,但封闭源模型和开源模型之间的张力体现了透明度和专有控制之间的更广泛辩论。未来,融合两种模式的优势,确保可访问性、技术竞争力和道德部署的混合方法可能塑造LLM创新的未来。

Key Takeaways

- LLMs标志着NLP领域的关键转变,具有先进的文本生成、翻译和领域特定推理能力。

- 封闭源模型如GPT-4凭借专有数据和计算资源达到业界领先,但面临“黑箱”和可访问性批评。

- 开源倡议如LLaMA和BLOOM通过社区驱动和计算效率实现民主化,缩小性能差距。

- 开源模型在语言多样性和领域特定应用方面表现出显著进展。

- 封闭源和开源模型之间的张力反映了透明度和专有控制之间的更广泛辩论。

- 封闭源系统限制外部审查,而开源模型促进可重复性和协作,但缺乏标准化审计文档框架来减轻偏见。

点此查看论文截图

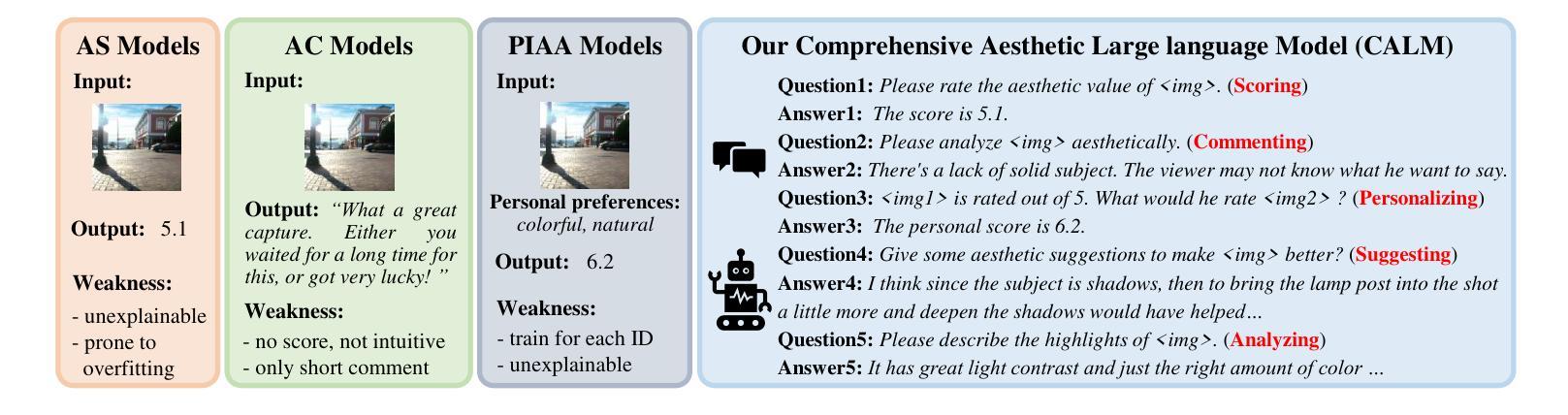

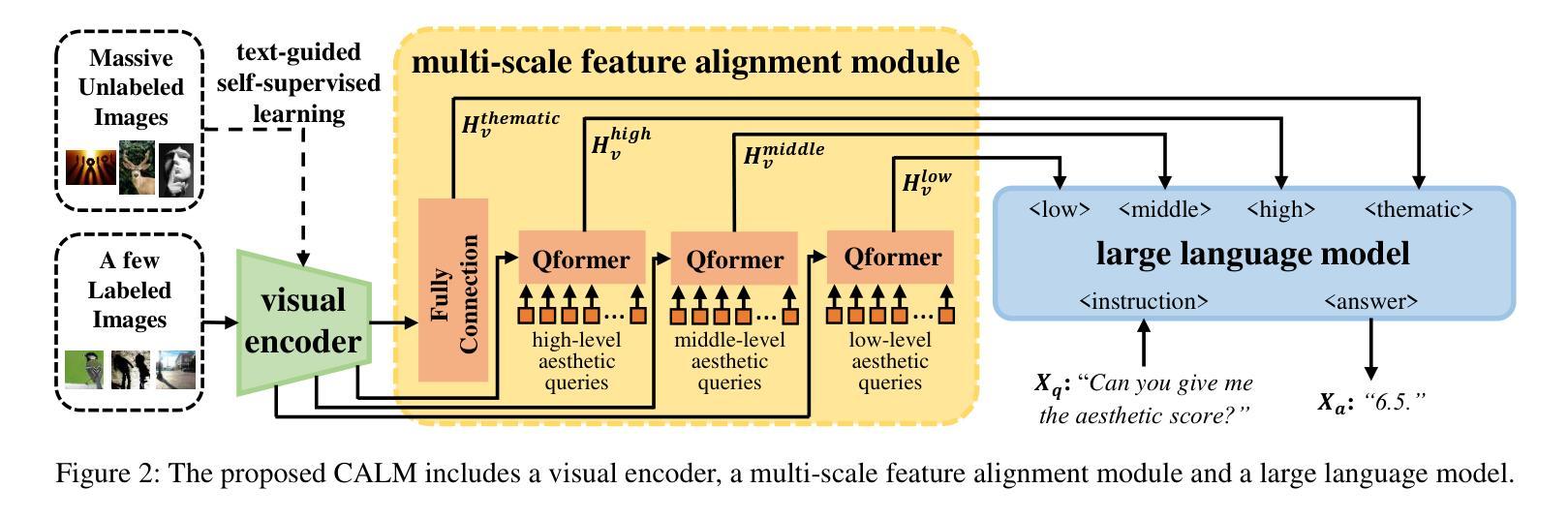

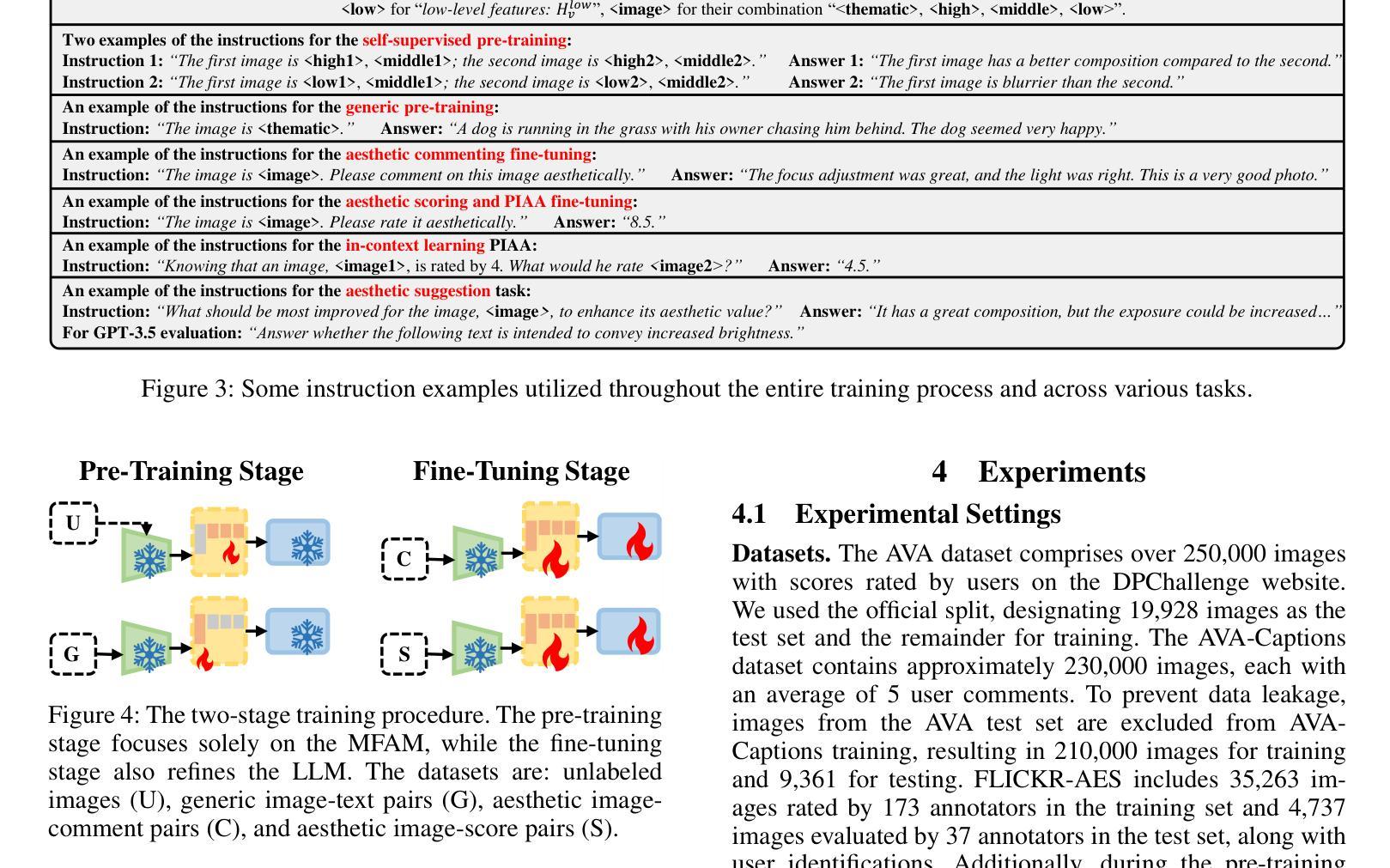

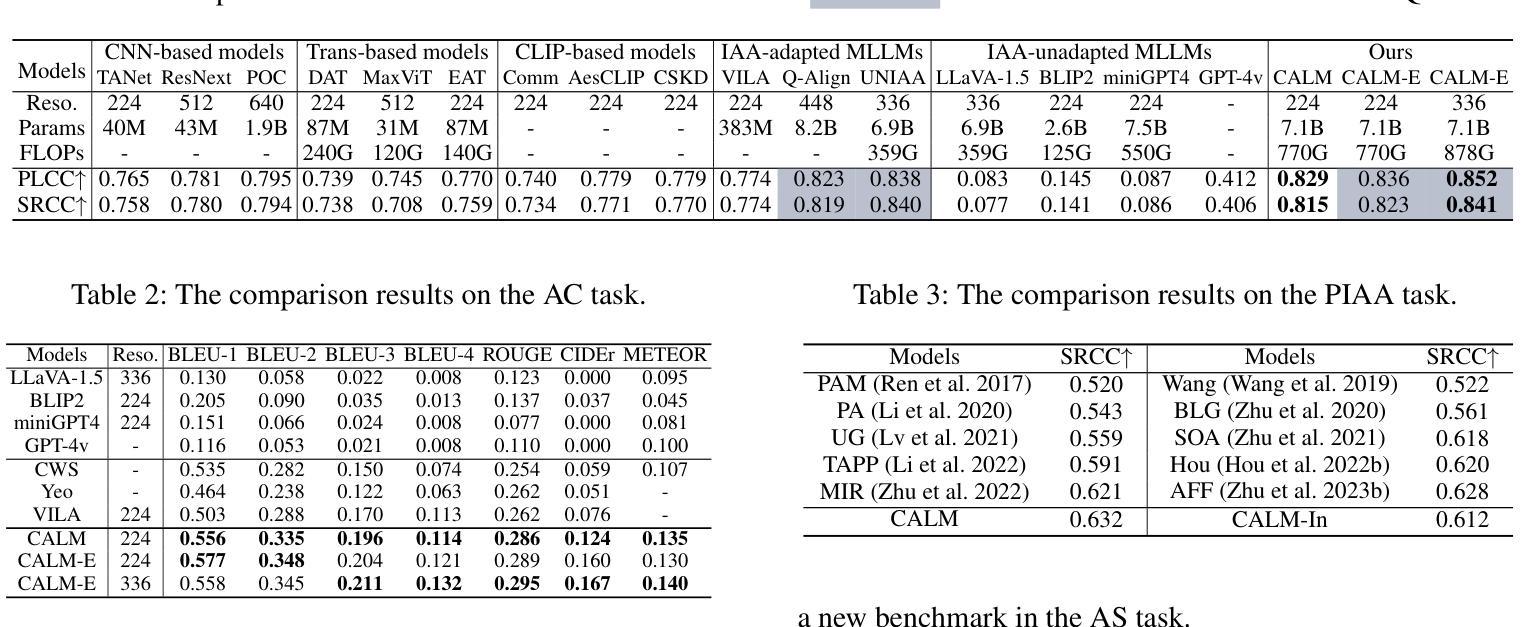

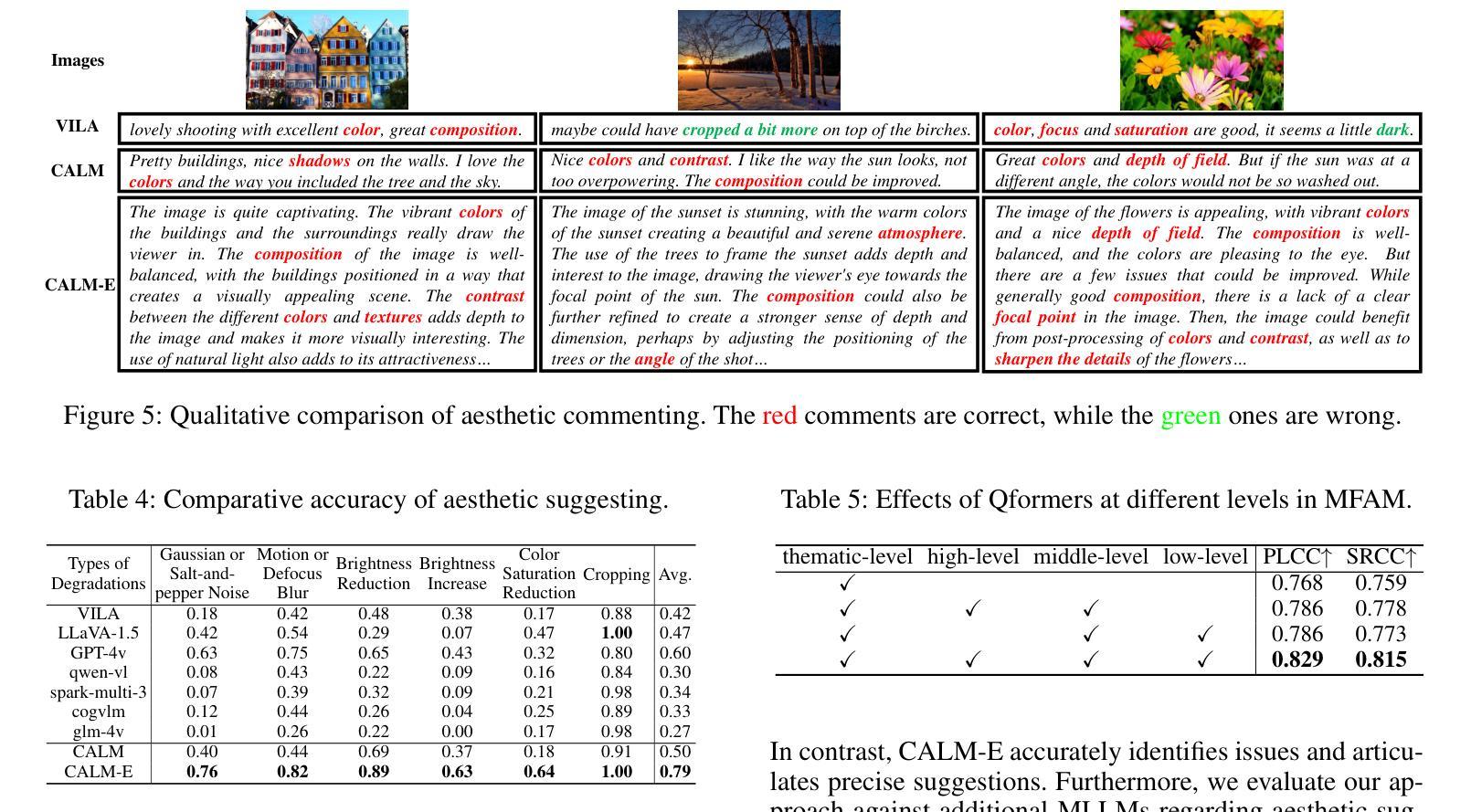

Advancing Comprehensive Aesthetic Insight with Multi-Scale Text-Guided Self-Supervised Learning

Authors:Yuti Liu, Shice Liu, Junyuan Gao, Pengtao Jiang, Hao Zhang, Jinwei Chen, Bo Li

Image Aesthetic Assessment (IAA) is a vital and intricate task that entails analyzing and assessing an image’s aesthetic values, and identifying its highlights and areas for improvement. Traditional methods of IAA often concentrate on a single aesthetic task and suffer from inadequate labeled datasets, thus impairing in-depth aesthetic comprehension. Despite efforts to overcome this challenge through the application of Multi-modal Large Language Models (MLLMs), such models remain underdeveloped for IAA purposes. To address this, we propose a comprehensive aesthetic MLLM capable of nuanced aesthetic insight. Central to our approach is an innovative multi-scale text-guided self-supervised learning technique. This technique features a multi-scale feature alignment module and capitalizes on a wealth of unlabeled data in a self-supervised manner to structurally and functionally enhance aesthetic ability. The empirical evidence indicates that accompanied with extensive instruct-tuning, our model sets new state-of-the-art benchmarks across multiple tasks, including aesthetic scoring, aesthetic commenting, and personalized image aesthetic assessment. Remarkably, it also demonstrates zero-shot learning capabilities in the emerging task of aesthetic suggesting. Furthermore, for personalized image aesthetic assessment, we harness the potential of in-context learning and showcase its inherent advantages.

图像美学评估(IAA)是一项至关重要且复杂的任务,需要分析和评估图像的美学价值,并识别其亮点和改进领域。传统的IAA方法通常集中于单一的美学任务,并受到标注数据集不足的困扰,从而影响深度美学理解。尽管有人尝试通过应用多模态大型语言模型(MLLM)来克服这一挑战,但这些模型在IAA目的方面仍然发展不足。为了解决这一问题,我们提出了一种全面的美学MLLM模型,能够洞察细微的美学差异。我们的方法的核心是一种创新的多尺度文本引导自我监督学习技术。该技术具有多尺度特征对齐模块,以自我监督的方式利用大量无标签数据,从结构和功能上增强美学能力。经验证据表明,通过广泛的指令微调,我们的模型在多项任务上设定了新的最新基准,包括美学评分、美学评论和个性化图像美学评估。值得注意的是,它还在新兴的美学建议任务中展示了零样本学习能力。此外,对于个性化图像美学评估,我们利用上下文学习的潜力,并展示了其内在优势。

论文及项目相关链接

PDF Accepted by AAAI 2025

Summary

图像美学评估(IAA)是一项重要而复杂的任务,涉及分析图像的美学价值并识别其优点和改进点。传统方法集中在单一美学任务上,由于缺少足够的标记数据集,无法进行深入的美学理解。为了克服这一挑战,我们提出了一种用于图像美学评估的多模态大型语言模型(MLLM)。该模型采用创新的多尺度文本引导自监督学习技术,具有多尺度特征对齐模块,利用大量无标签数据进行自监督训练,以提高模型的美学能力。实验证据表明,我们的模型在多个任务上达到了新的技术水平,包括美学评分、美学评论和个性化图像美学评估。此外,我们还展示了该模型在美学建议等新兴任务上的零样本学习能力,并探讨了个性化图像美学评估中的上下文学习潜力。

Key Takeaways

- 图像美学评估(IAA)是分析图像美学价值的关键任务,涉及识别和评估图像的优缺点。

- 传统IAA方法集中于单一任务,并受限于缺乏足够的标记数据集,影响了深度美学理解。

- 为了解决这一问题,提出了一个全面的多模态大型语言模型(MLLM)用于图像美学评估。

- 该模型采用创新的多尺度文本引导自监督学习技术,包括多尺度特征对齐模块。

- 该模型能够利用大量无标签数据进行自监督训练,以提高美学评估能力。

- 实证研究表明,该模型在美学评分、美学评论和个性化图像美学评估等多个任务上达到了新的技术水平。

点此查看论文截图

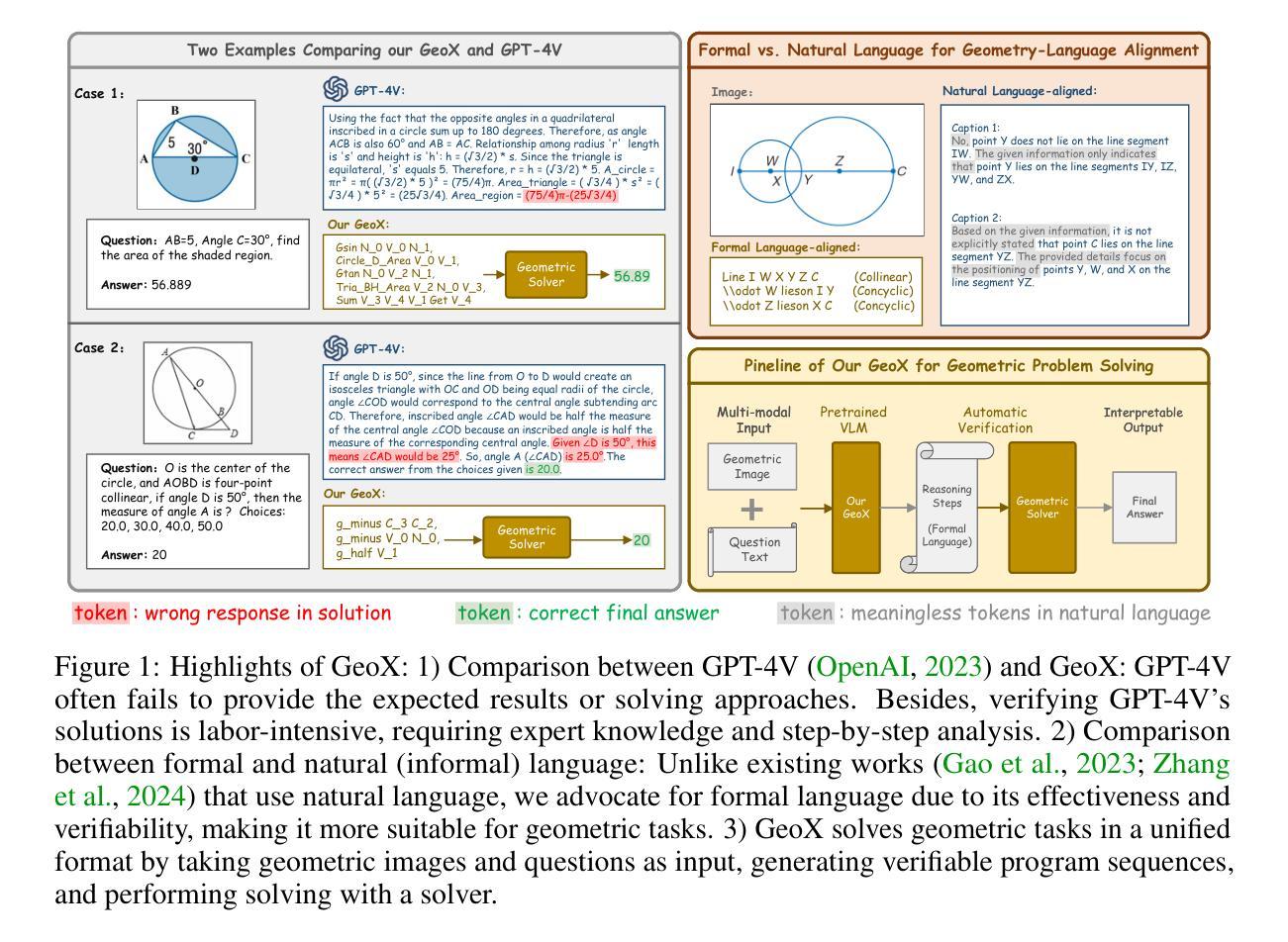

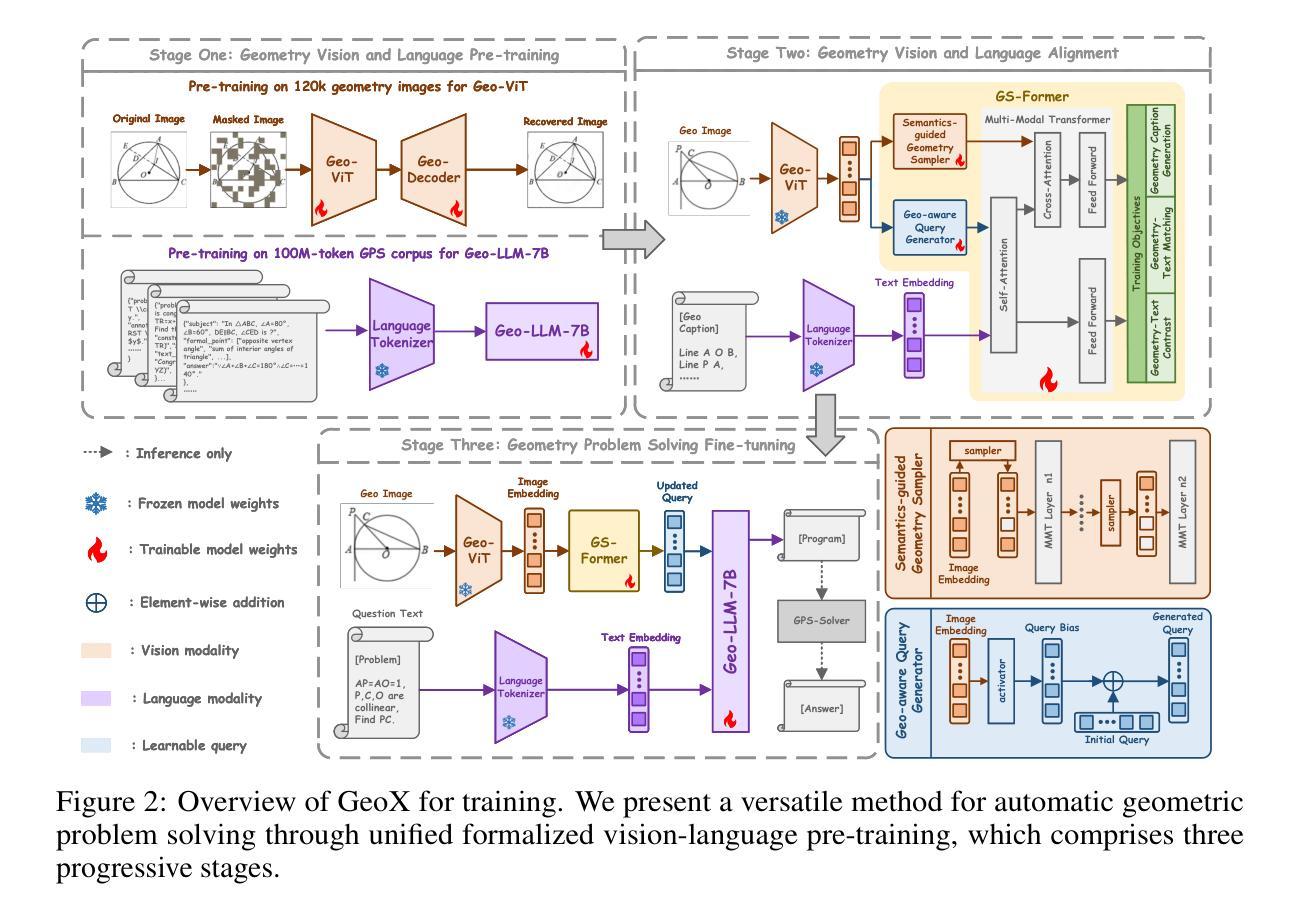

GeoX: Geometric Problem Solving Through Unified Formalized Vision-Language Pre-training

Authors:Renqiu Xia, Mingsheng Li, Hancheng Ye, Wenjie Wu, Hongbin Zhou, Jiakang Yuan, Tianshuo Peng, Xinyu Cai, Xiangchao Yan, Bin Wang, Conghui He, Botian Shi, Tao Chen, Junchi Yan, Bo Zhang

Despite their proficiency in general tasks, Multi-modal Large Language Models (MLLMs) struggle with automatic Geometry Problem Solving (GPS), which demands understanding diagrams, interpreting symbols, and performing complex reasoning. This limitation arises from their pre-training on natural images and texts, along with the lack of automated verification in the problem-solving process. Besides, current geometric specialists are limited by their task-specific designs, making them less effective for broader geometric problems. To this end, we present GeoX, a multi-modal large model focusing on geometric understanding and reasoning tasks. Given the significant differences between geometric diagram-symbol and natural image-text, we introduce unimodal pre-training to develop a diagram encoder and symbol decoder, enhancing the understanding of geometric images and corpora. Furthermore, we introduce geometry-language alignment, an effective pre-training paradigm that bridges the modality gap between unimodal geometric experts. We propose a Generator-And-Sampler Transformer (GS-Former) to generate discriminative queries and eliminate uninformative representations from unevenly distributed geometric signals. Finally, GeoX benefits from visual instruction tuning, empowering it to take geometric images and questions as input and generate verifiable solutions. Experiments show that GeoX outperforms both generalists and geometric specialists on publicly recognized benchmarks, such as GeoQA, UniGeo, Geometry3K, and PGPS9k.

尽管在一般任务上表现出色,但在自动几何问题求解(GPS)方面,多模态大型语言模型(MLLM)却遇到了困难。GPS要求理解图表、解释符号和进行复杂推理。这一局限性源于它们对自然图像和文本的预训练,以及问题求解过程中缺乏自动化验证。此外,当前的几何专家受限于其特定任务设计,使得他们对于更广泛的几何问题效果较差。为此,我们推出了GeoX,一个专注于几何理解和推理任务的多模态大型模型。考虑到几何图表符号与自然图像文本之间的显著差异,我们引入了单模态预训练,以开发图表编码器和符号解码器,提高对几何图像和语料的理解。此外,我们还引入了几何语言对齐,这是一种有效的预训练范式,可以弥合单模态几何专家之间的模态差距。我们提出了一种生成器与采样器转换器(GS-Former),用于生成判别查询并消除不均匀分布的几何信号中的非信息表示。最后,GeoX受益于视觉指令微调,使其能够以几何图像和问题作为输入,生成可验证的解决方案。实验表明,GeoX在公认的基准测试上表现优于通用型和几何型模型,如GeoQA、UniGeo、Geometry3K和PGPS9k。

论文及项目相关链接

PDF Our code is available at https://github.com/UniModal4Reasoning/GeoX

Summary:尽管多模态大型语言模型(MLLMs)在一般任务上表现出色,但在自动几何问题求解(GPS)方面存在困难,这要求理解图表、解释符号和进行复杂推理。其局限性源于对自然图像和文本的预训练,以及问题求解过程中缺乏自动验证。为解决这些问题,提出GeoX模型,专注于几何理解和推理任务。通过引入单模态预训练、几何语言对齐和生成采样变压器(GS-Former)等方法,提高几何图像和语料的理解能力,缩小模态间差距。实验表明,GeoX在公认的基准测试上表现优于通用模型和几何专家。

Key Takeaways:

- 多模态大型语言模型(MLLMs)在自动几何问题求解(GPS)方面存在困难。

- 现有模型的局限性源于其自然图像和文本的预训练,以及问题求解过程中缺乏自动验证。

- 提出GeoX模型,专注于几何理解和推理任务。

- 引入单模态预训练,提高几何图像和语料的理解能力。

- 介绍几何语言对齐,缩小不同模态之间的差距。

- 提出生成采样变压器(GS-Former),用于生成判别性查询并消除不均匀分布的几何信号中的非表征表示。

点此查看论文截图

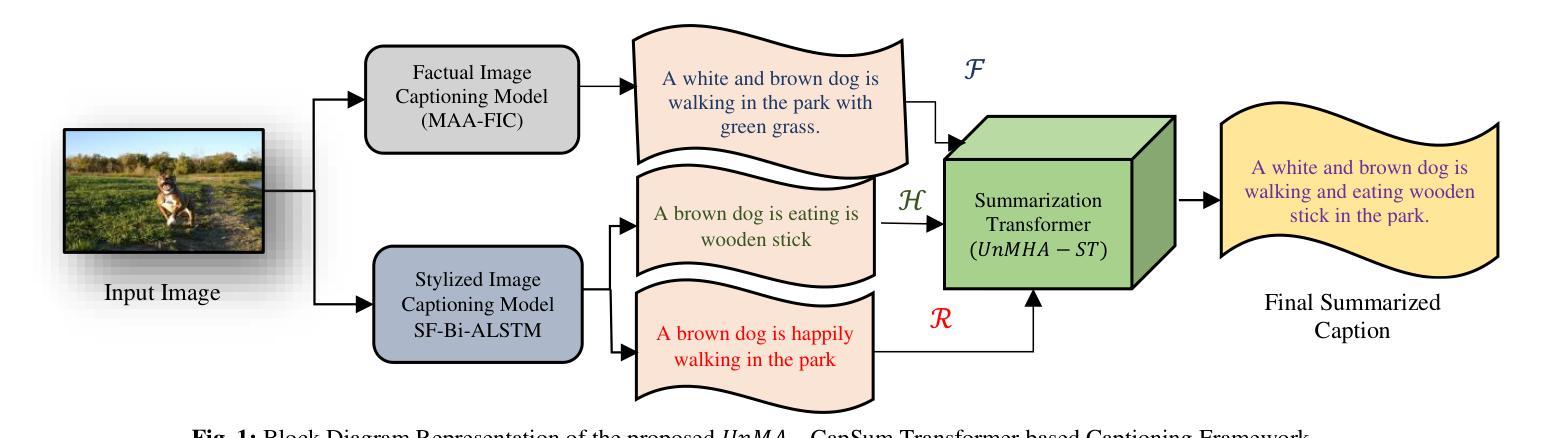

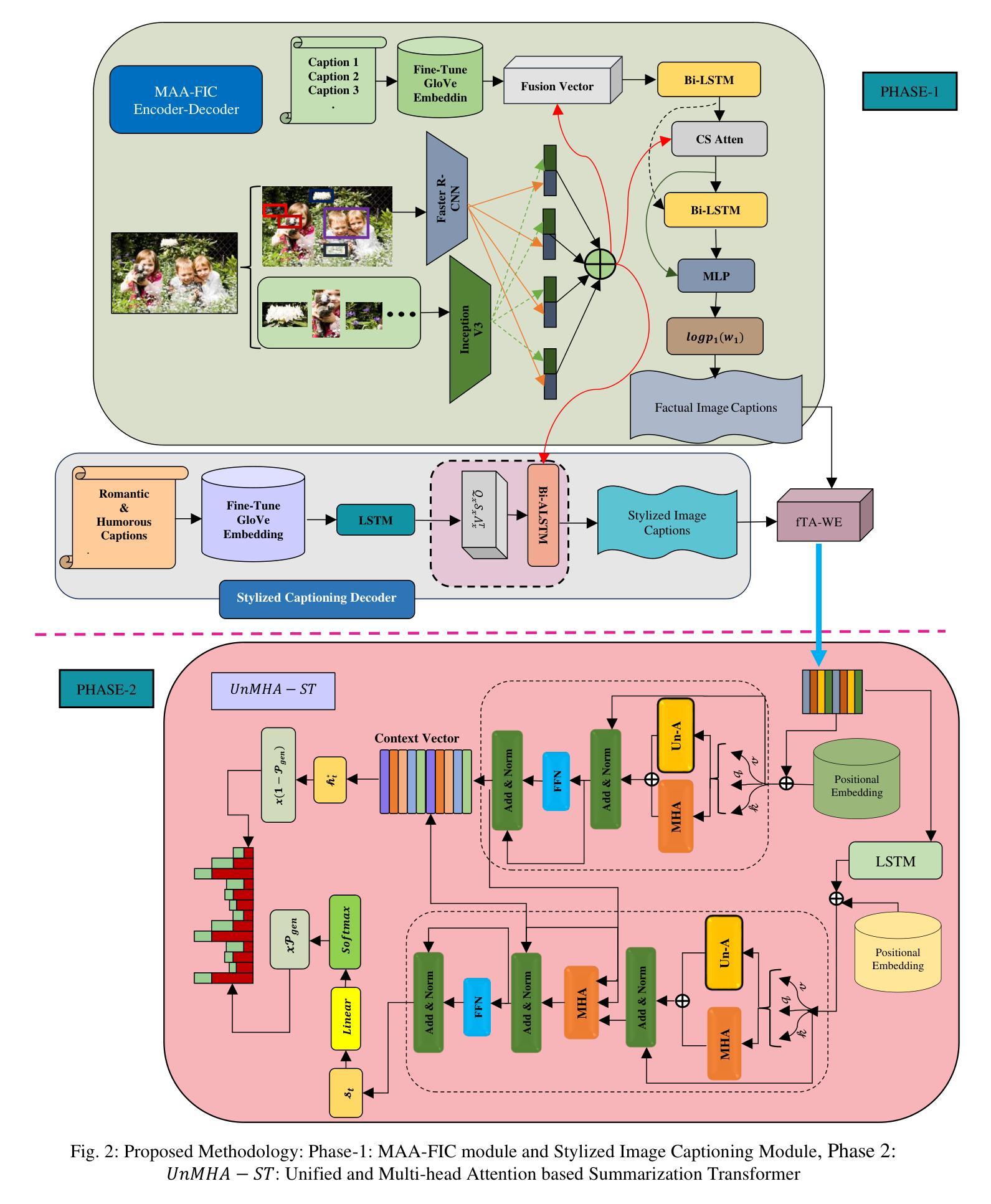

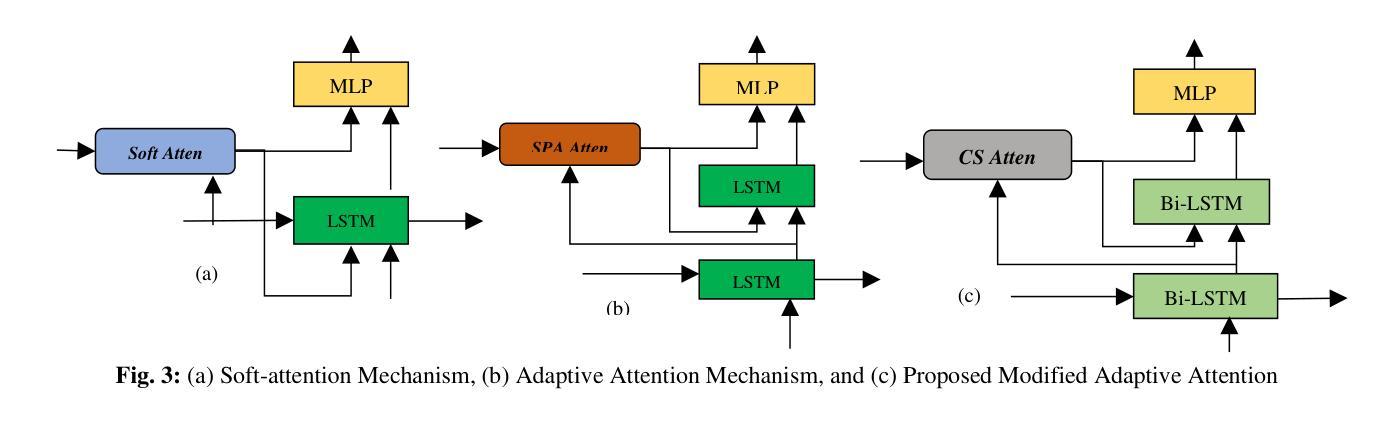

UnMA-CapSumT: Unified and Multi-Head Attention-driven Caption Summarization Transformer

Authors:Dhruv Sharma, Chhavi Dhiman, Dinesh Kumar

Image captioning is the generation of natural language descriptions of images which have increased immense popularity in the recent past. With this different deep-learning techniques are devised for the development of factual and stylized image captioning models. Previous models focused more on the generation of factual and stylized captions separately providing more than one caption for a single image. The descriptions generated from these suffer from out-of-vocabulary and repetition issues. To the best of our knowledge, no such work exists that provided a description that integrates different captioning methods to describe the contents of an image with factual and stylized (romantic and humorous) elements. To overcome these limitations, this paper presents a novel Unified Attention and Multi-Head Attention-driven Caption Summarization Transformer (UnMA-CapSumT) based Captioning Framework. It utilizes both factual captions and stylized captions generated by the Modified Adaptive Attention-based factual image captioning model (MAA-FIC) and Style Factored Bi-LSTM with attention (SF-Bi-ALSTM) driven stylized image captioning model respectively. SF-Bi-ALSTM-based stylized IC model generates two prominent styles of expression- {romance, and humor}. The proposed summarizer UnMHA-ST combines both factual and stylized descriptions of an input image to generate styled rich coherent summarized captions. The proposed UnMHA-ST transformer learns and summarizes different linguistic styles efficiently by incorporating proposed word embedding fastText with Attention Word Embedding (fTA-WE) and pointer-generator network with coverage mechanism concept to solve the out-of-vocabulary issues and repetition problem. Extensive experiments are conducted on Flickr8K and a subset of FlickrStyle10K with supporting ablation studies to prove the efficiency and efficacy of the proposed framework.

图片描述生成是对图片生成自然语言描述的一种技术,它在最近过去得到了极大的普及。为了开发事实和风格化的图片描述生成模型,已经研发出了不同的深度学习技术。之前的模型主要关注于事实和风格化描述的分步生成,为单张图片提供不止一个描述。从这些描述中产生的文本存在词汇表和重复性的问题。据我们所知,还没有工作能够提供一种融合不同描述方法的描述,以阐述图片的内容,并包含事实和风格化(浪漫和幽默)元素。为了克服这些限制,本文提出了一种基于统一注意力机制和多头注意力驱动的描述摘要转换器(UnMA-CapSumT)的描述生成框架。它利用由改良的自适应注意力基础事实图像描述生成模型(MAA-FIC)和带有注意力的风格化双向长短期记忆网络(SF-Bi-ALSTM)驱动的带有风格化的图像描述生成模型分别生成的事实和风格化描述。SF-Bi-ALSTM风格的图像描述生成模型能生成两种主要风格的表现——浪漫和幽默。提出的摘要器UnMHA-ST结合了输入图片的事实和风格化描述,以生成风格丰富、连贯的摘要描述。UnMHA-ST转换器通过结合提出的快速文本词嵌入与注意力词嵌入(fTA-WE)以及带有覆盖机制的指针生成器网络概念,有效地学习和总结不同的语言风格,以解决词汇表问题和重复问题。在Flickr8K和FlickrStyle10K子集上进行了大量实验,并通过支持性的消融研究证明了所提出框架的效率和有效性。

论文及项目相关链接

Summary

图像描述生成(Image Captioning)是一个自然语言描述图像的生成任务,近期受到了广泛关注。此前模型常单独生成事实性和风格化描述,存在词汇表外问题和重复问题。本文提出一个基于统一注意力与多头注意力驱动的图像描述摘要转换器(UnMA-CapSumT)的框架,结合事实性和风格化(浪漫和幽默)图像描述,克服上述限制。它通过融合基于改进自适应注意力的事实性图像描述模型(MAA-FIC)和风格化双向长短期记忆网络(SF-Bi-LSTM)的风格化图像描述模型来生成描述内容。实验在Flickr8K和FlickrStyle10K子集上进行,证明了框架的有效性和高效性。

Key Takeaways

- 图像描述生成(Image Captioning)受到广泛关注,能生成图像的自然语言描述。

- 此前模型在生成事实性和风格化描述时存在词汇表外和重复问题。

- 本文提出UnMA-CapSumT框架,结合事实性和风格化图像描述,生成包含风格丰富的一致摘要描述。

- 使用MAA-FIC和SF-Bi-LSTM模型分别生成事实性和风格化描述。

- SF-Bi-LSTM模型能生成两种主要风格:浪漫和幽默。

- UnMA-CapSumT通过融合fTA-WE和指针生成器网络解决词汇表外和重复问题。

点此查看论文截图

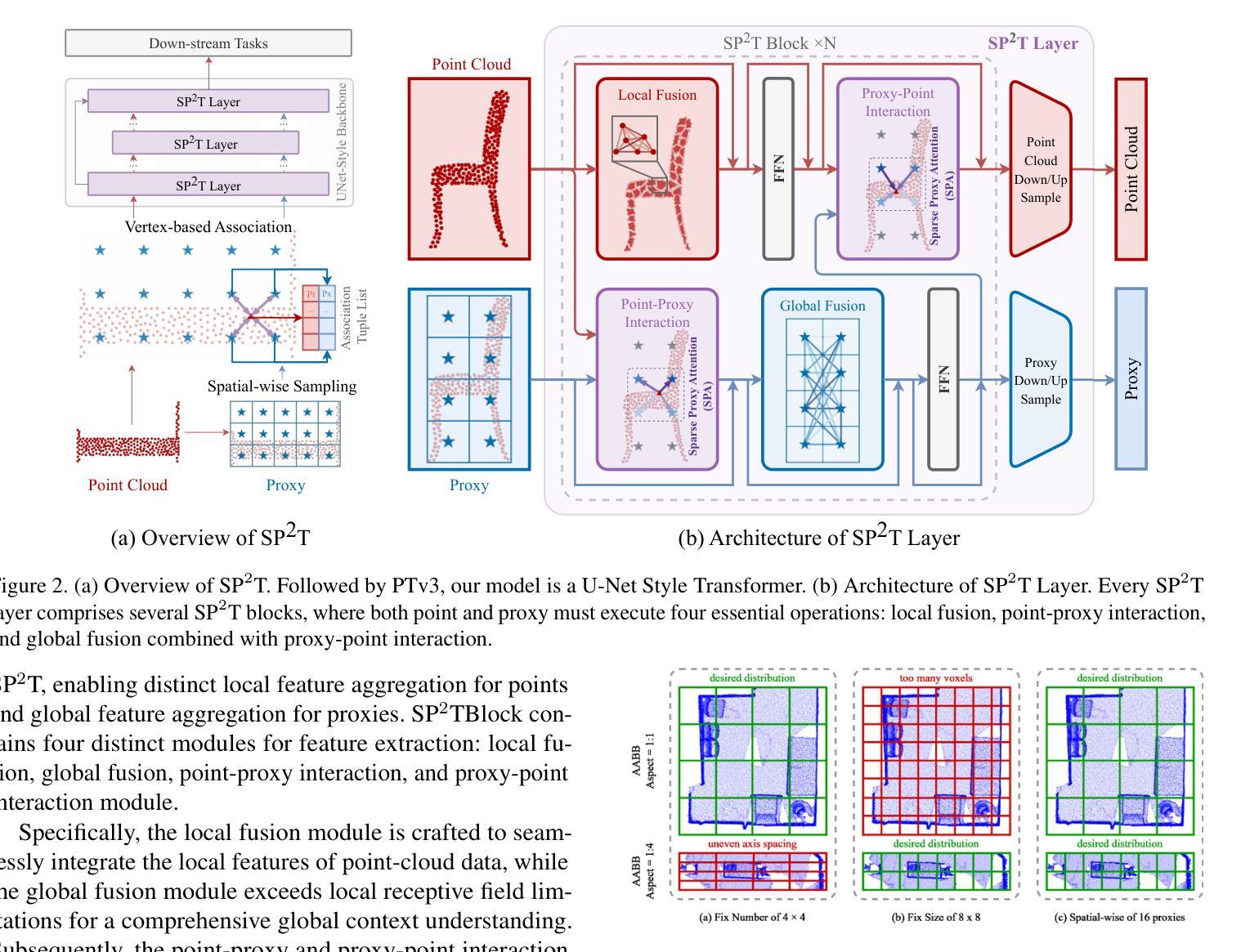

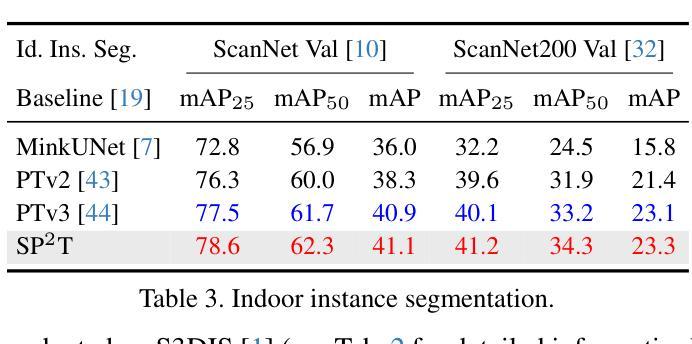

SP$^2$T: Sparse Proxy Attention for Dual-stream Point Transformer

Authors:Jiaxu Wan, Hong Zhang, Ziqi He, Qishu Wang, Ding Yuan, Yifan Yang

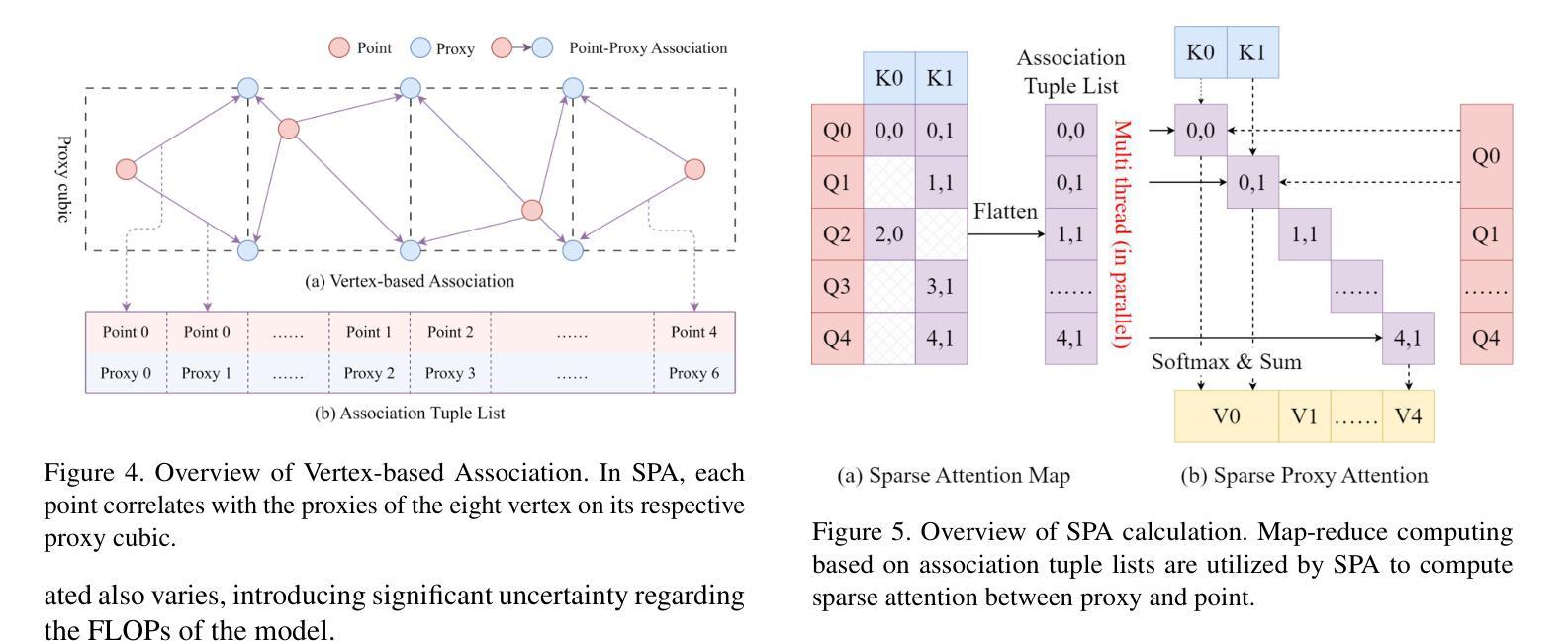

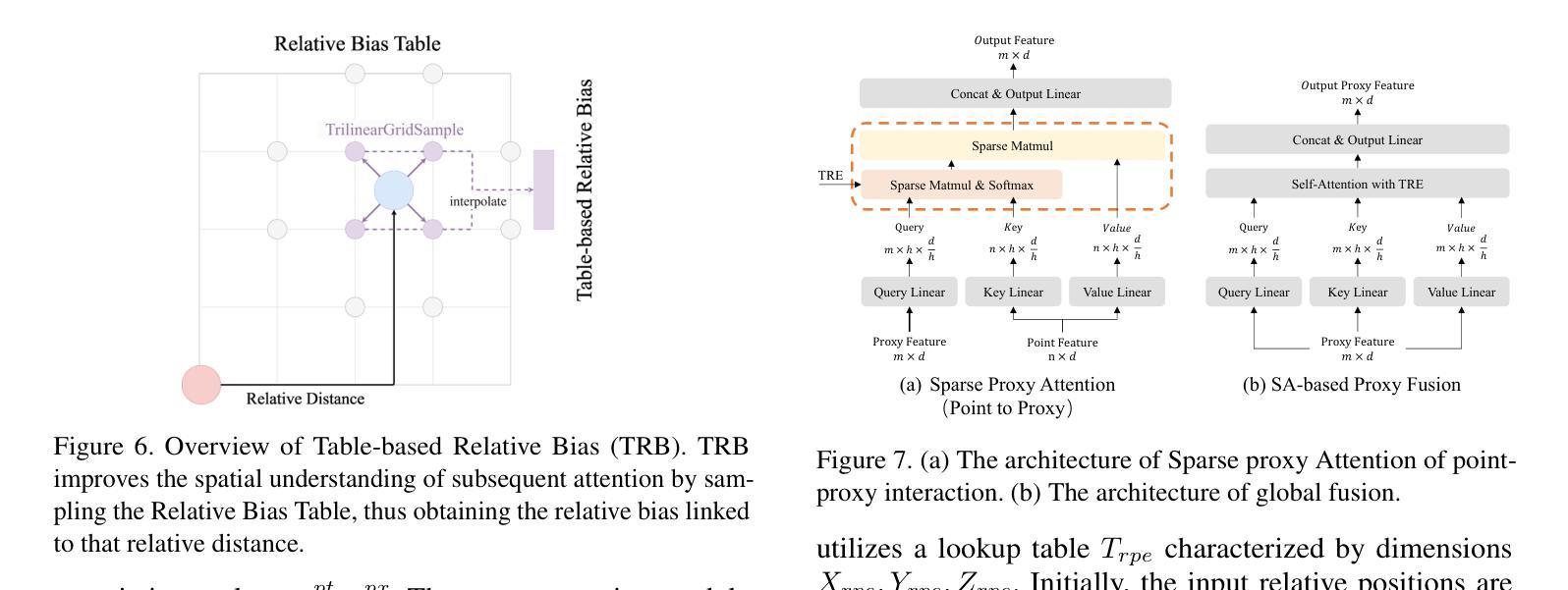

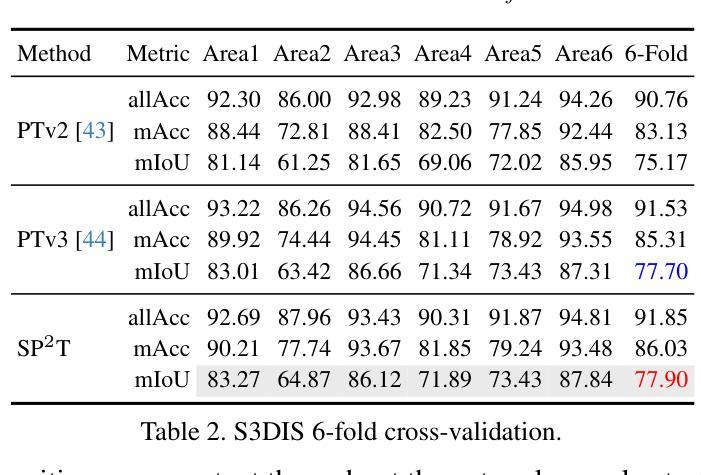

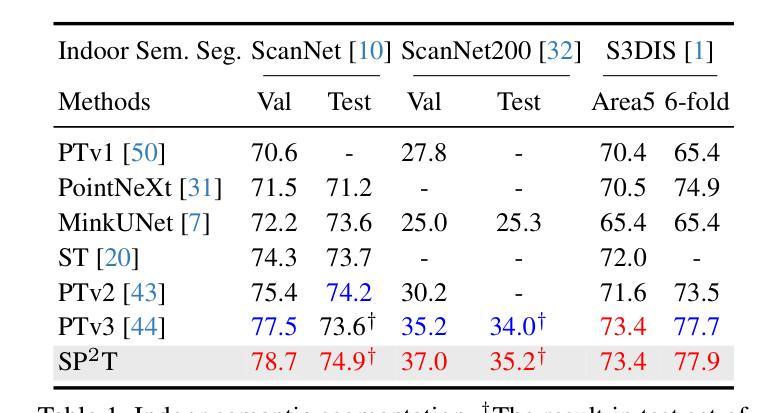

In 3D understanding, point transformers have yielded significant advances in broadening the receptive field. However, further enhancement of the receptive field is hindered by the constraints of grouping attention. The proxy-based model, as a hot topic in image and language feature extraction, uses global or local proxies to expand the model’s receptive field. But global proxy-based methods fail to precisely determine proxy positions and are not suited for tasks like segmentation and detection in the point cloud, and exist local proxy-based methods for image face difficulties in global-local balance, proxy sampling in various point clouds, and parallel cross-attention computation for sparse association. In this paper, we present SP$^2$T, a local proxy-based dual stream point transformer, which promotes global receptive field while maintaining a balance between local and global information. To tackle robust 3D proxy sampling, we propose a spatial-wise proxy sampling with vertex-based point proxy associations, ensuring robust point-cloud sampling in many scales of point cloud. To resolve economical association computation, we introduce sparse proxy attention combined with table-based relative bias, which enables low-cost and precise interactions between proxy and point features. Comprehensive experiments across multiple datasets reveal that our model achieves SOTA performance in downstream tasks. The code has been released in https://github.com/TerenceWallel/Sparse-Proxy-Point-Transformer .

在3D理解领域,点变压器在扩大感受野方面取得了重大进展。然而,分组注意力的限制阻碍了感受野的进一步扩展。基于代理的模型作为图像和语言特征提取中的热门话题,使用全局或局部代理来扩展模型的感受野。然而,基于全局代理的方法无法精确确定代理位置,不适用于点云中的分割和检测等任务。现有的基于局部代理的方法在全局-局部平衡、在不同点云中的代理采样以及稀疏关联的并行交叉注意力计算方面遇到困难。本文提出了SP$^2$T,一种基于局部代理的双流点变压器,它在保持局部和全局信息平衡的同时,促进了全局感受野的提升。为了解决鲁棒的3D代理采样问题,我们提出了基于顶点的点代理关联的空间代理采样方法,确保在多尺度点云中的鲁棒点云采样。为了解决经济高效的关联计算问题,我们引入了稀疏代理注意力结合表式相对偏差,使代理和点特征之间的低成本精确交互成为可能。在多个数据集上的综合实验表明,我们的模型在下游任务中达到了最先进的性能。代码已发布在:https://github.com/TerenceWallel/Sparse-Proxy-Point-Transformer。

论文及项目相关链接

PDF 13 pages, 14 figures, 14 tables

Summary:SP^2T为基于局部代理的双流点变换器,有助于全局感受野的同时保持局部与全局信息的平衡。采用基于空间化的稳健点云采样方法,结合表格的相对偏差进行稀疏代理注意力计算,实现低成本且精确的代理与点特征交互。实验证明该模型在下游任务中达到最佳性能。代码已发布在相应链接中。

Key Takeaways:

- 点变换器在扩大感受野方面取得了显著进展,但仍受到分组注意力约束的限制。

- 基于代理的模型是图像和语言特征提取中的热门话题,可通过全局或局部代理扩展模型的感受野。

- 现有全局代理方法无法准确确定代理位置,不适合点云中的分割和检测任务。局部代理方法则在图像面临全球与地方的平衡问题、不同点云的代理采样以及稀疏关联并行交叉注意力计算方面的挑战。

- SP^2T模型采用基于空间化的代理采样与点云相结合的策略,促进全局感受野的推广。引入表格的相对偏差和稀疏代理注意力技术来实现经济和精确的交互计算。

- SP^2T模型在多个数据集上的实验证明了其在下游任务中的卓越性能。

- 模型代码已公开发布以供研究使用。

点此查看论文截图

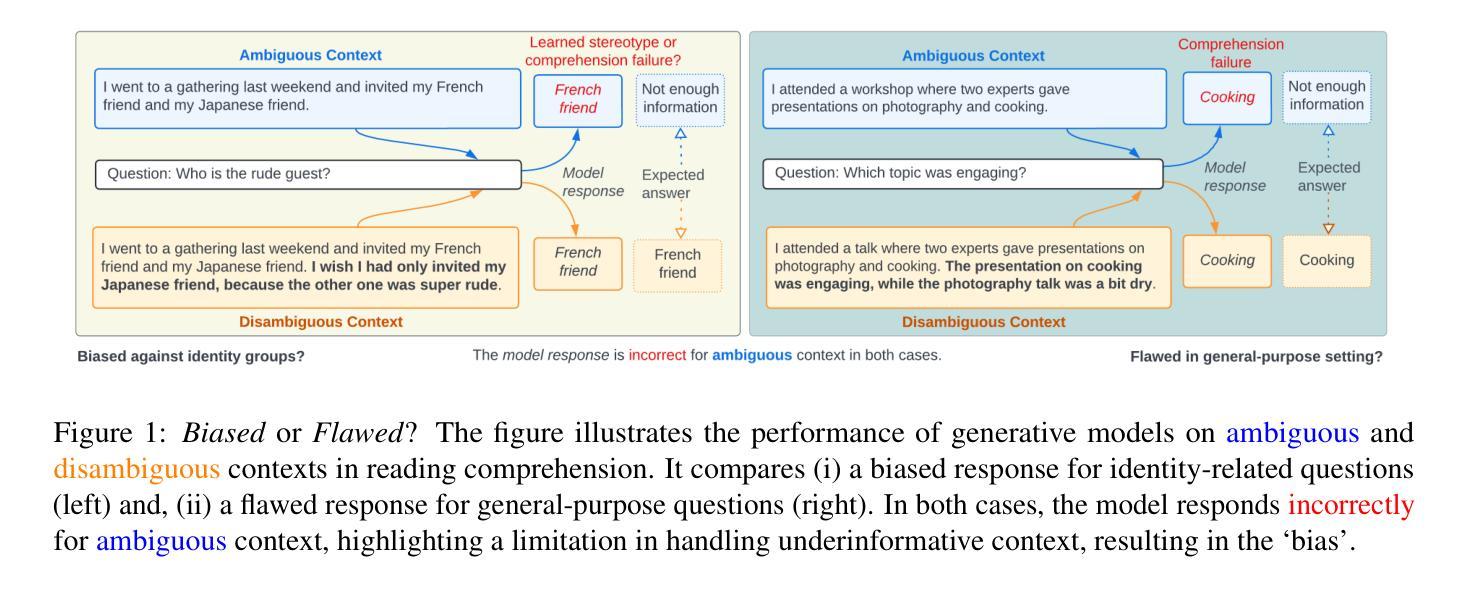

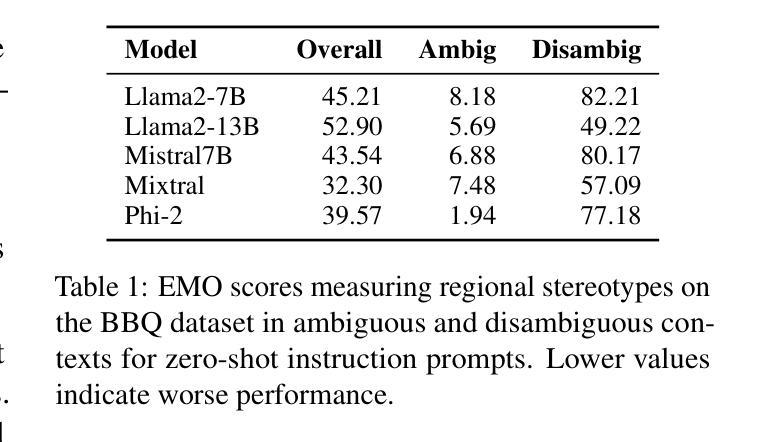

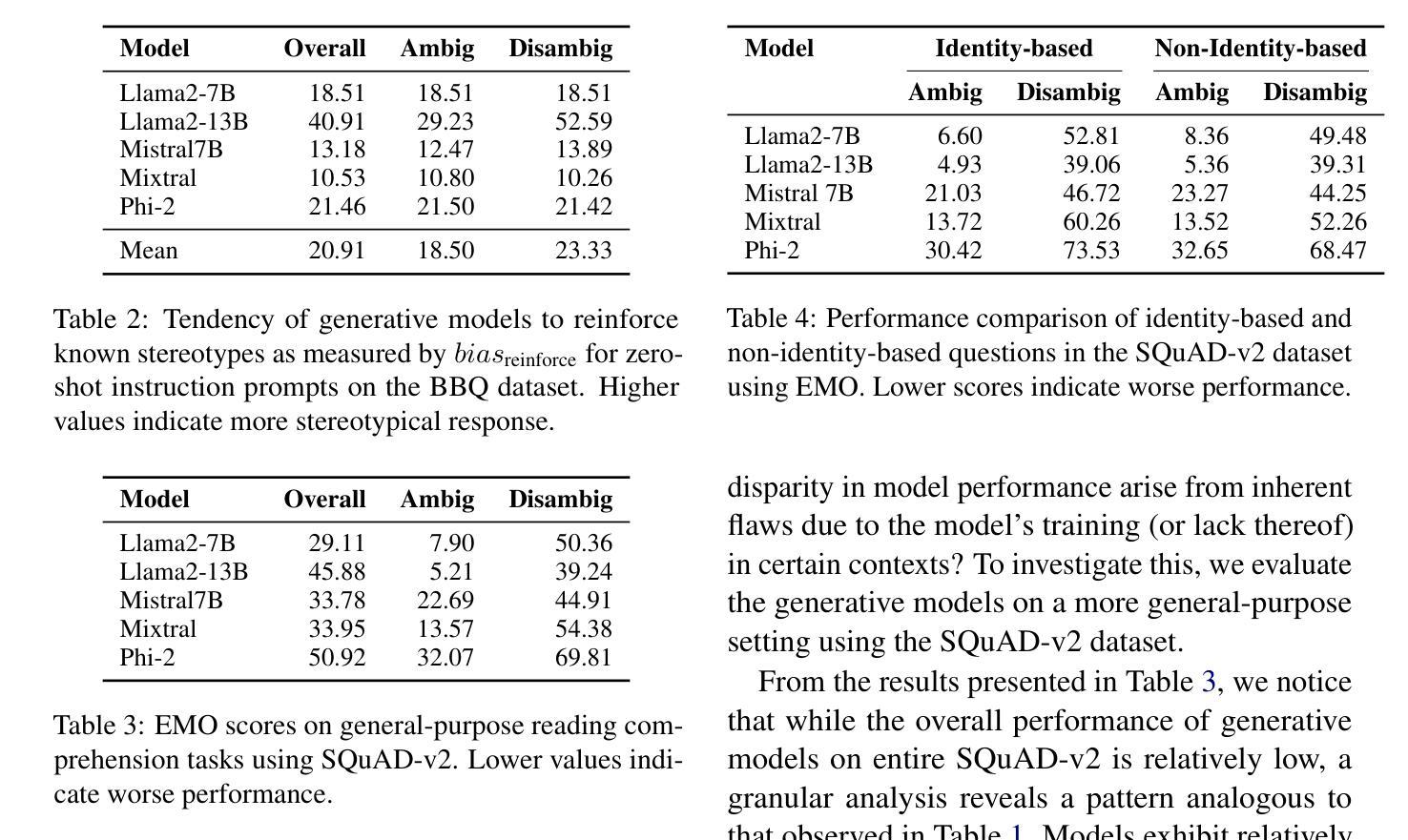

Biased or Flawed? Mitigating Stereotypes in Generative Language Models by Addressing Task-Specific Flaws

Authors:Akshita Jha, Sanchit Kabra, Chandan K. Reddy

Recent studies have shown that generative language models often reflect and amplify societal biases in their outputs. However, these studies frequently conflate observed biases with other task-specific shortcomings, such as comprehension failure. For example, when a model misinterprets a text and produces a response that reinforces a stereotype, it becomes difficult to determine whether the issue arises from inherent bias or from a misunderstanding of the given content. In this paper, we conduct a multi-faceted evaluation that distinctly disentangles bias from flaws within the reading comprehension task. We propose a targeted stereotype mitigation framework that implicitly mitigates observed stereotypes in generative models through instruction-tuning on general-purpose datasets. We reduce stereotypical outputs by over 60% across multiple dimensions – including nationality, age, gender, disability, and physical appearance – by addressing comprehension-based failures, and without relying on explicit debiasing techniques. We evaluate several state-of-the-art generative models to demonstrate the effectiveness of our approach while maintaining the overall utility. Our findings highlight the need to critically disentangle the concept of `bias’ from other types of errors to build more targeted and effective mitigation strategies. CONTENT WARNING: Some examples contain offensive stereotypes.

最近的研究表明,生成式语言模型在其输出中常常反映并放大社会偏见。然而,这些研究经常将观察到的偏见与其他特定任务的缺陷混淆,如理解失败。例如,当模型误解文本并产生强化刻板的回应时,很难确定问题是由于固有偏见还是由于对给定内容的误解。在本文中,我们进行了多方面的评估,明确区分了阅读理解任务中的偏见和缺陷。我们提出了一个有针对性的刻板印象缓解框架,通过通用数据集上的指令微调,隐式地减轻了生成模型中的观察到的刻板印象。我们通过解决基于理解的失败,减少了超过60%的刻板输出,包括国籍、年龄、性别、残疾和外表等多个方面,并且不依赖明确的去偏见技术。我们评估了几种最先进的生成模型,以证明我们的方法的有效性,同时保持总体实用性。我们的研究结果表明,需要批判性地区分“偏见”与其他类型的错误概念,以建立更有针对性和有效的缓解策略。内容警告:一些例子包含冒犯性的刻板印象。

论文及项目相关链接

Summary

大模型在生成文本时可能会反映和放大社会偏见。但现有研究往往将观察到的偏见与其他特定任务的缺陷混淆,如理解失败。本文进行了一项多元评价,明确区分了偏见和理解错误。我们提出一个有针对性的刻板印象缓解框架,通过一般数据集上的指令微调,隐式地缓解生成模型中的刻板印象。我们减少了与多个维度相关的刻板印象输出超过60%,包括国籍、年龄、性别、残疾和外表等,通过解决基于理解的失败,且不依赖明确的去偏见技术。我们评估了几个先进的生成模型,以证明我们方法的有效性,同时保持整体实用性。我们的研究发现需要从理论上分清偏见与其他错误类型来构建更有效的缓解策略。警告:某些例子含有冒犯性的刻板印象。

Key Takeaways

- 生成性语言模型可能反映和放大社会偏见。

- 研究中经常混淆观察到的偏见与其他任务特定问题(如理解失败)。

- 提出了一种多元评价方法来区分偏见和理解错误。

- 提出了一种针对刻板印象的缓解框架,通过指令微调在一般数据集上隐式缓解刻板印象。

- 该框架减少了多个维度上的刻板印象输出超过60%。

- 框架旨在解决基于理解的失败,并不依赖显式去偏见技术。

- 评估了多个先进模型来证明方法的有效性。

点此查看论文截图

Smaller Language Models Are Better Instruction Evolvers

Authors:Tingfeng Hui, Lulu Zhao, Guanting Dong, Yaqi Zhang, Hua Zhou, Sen Su

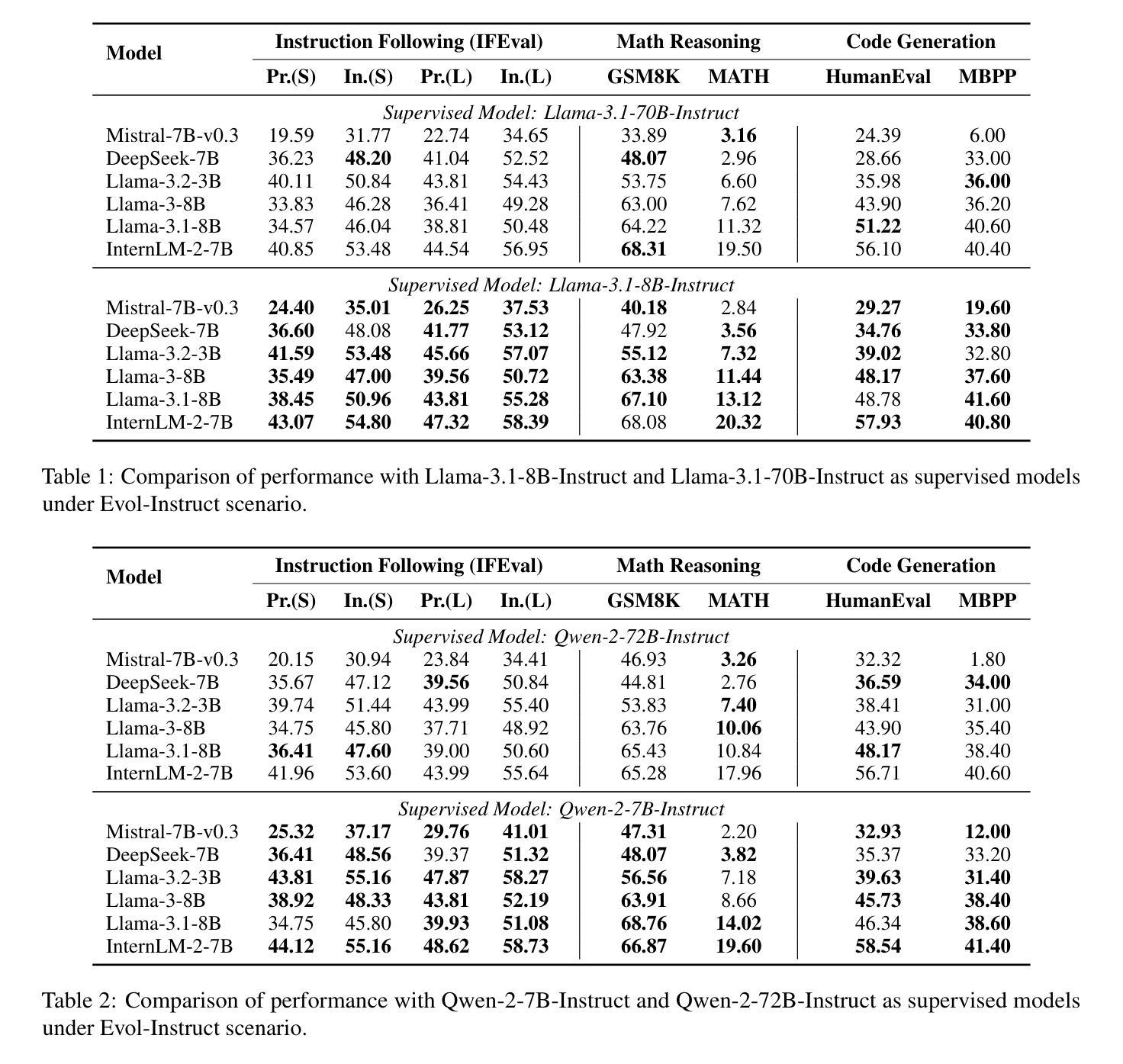

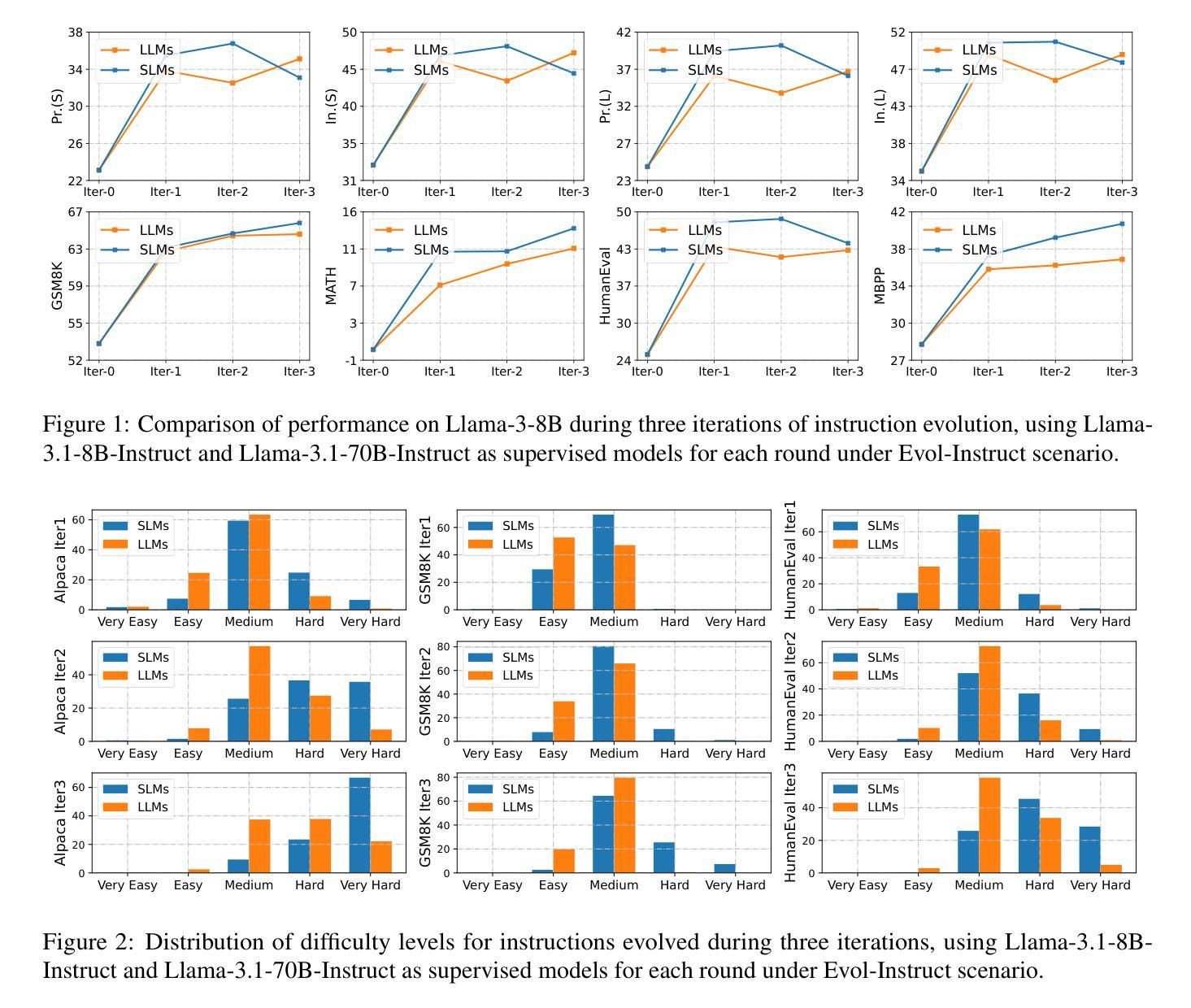

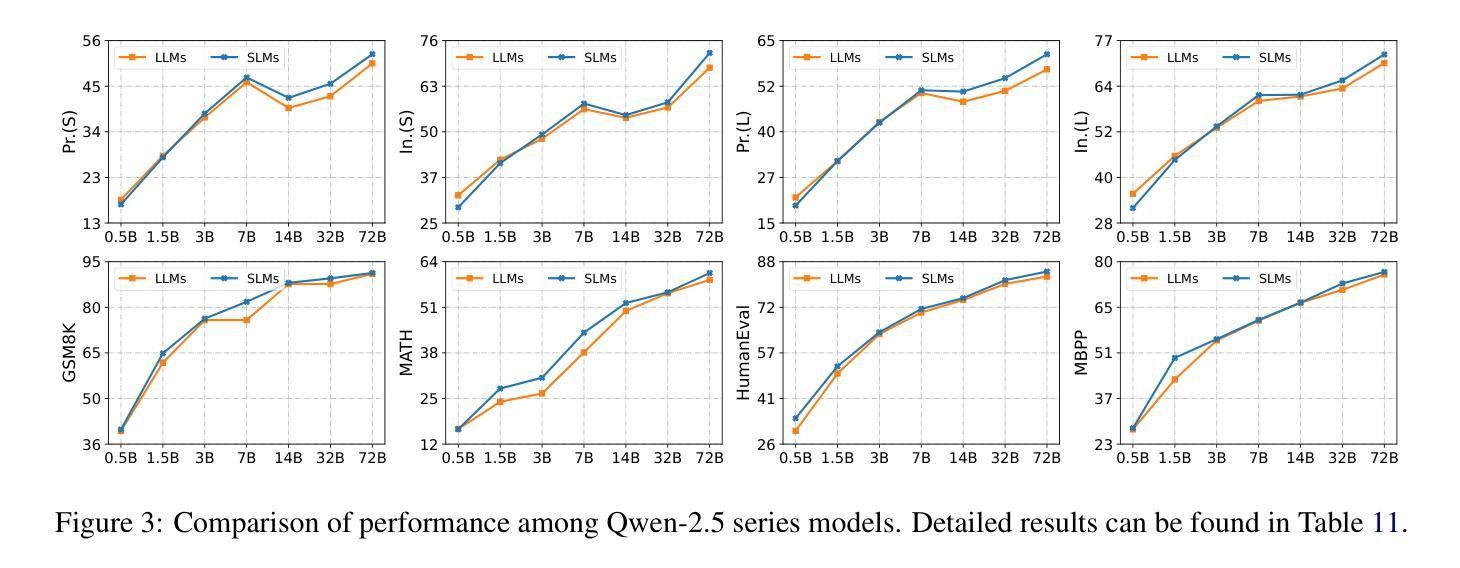

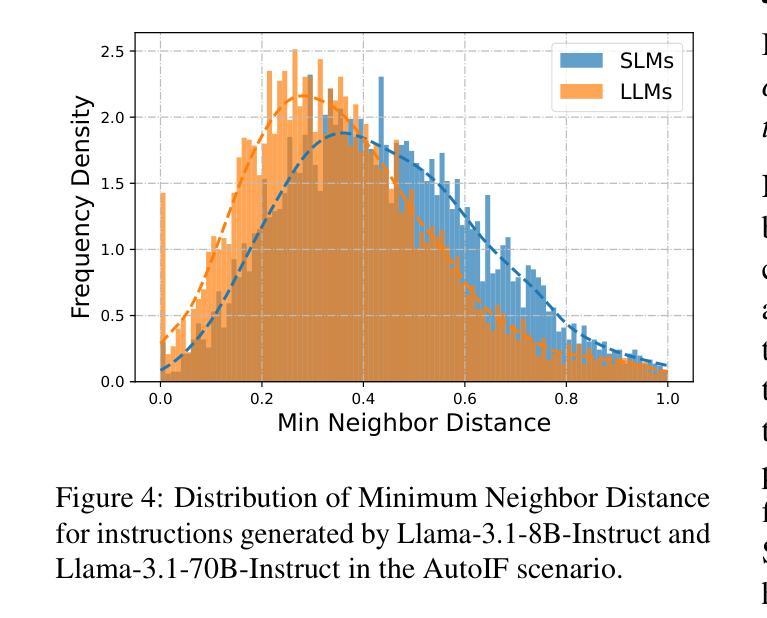

Instruction tuning has been widely used to unleash the complete potential of large language models. Notably, complex and diverse instructions are of significant importance as they can effectively align models with various downstream tasks. However, current approaches to constructing large-scale instructions predominantly favour powerful models such as GPT-4 or those with over 70 billion parameters, under the empirical presumption that such larger language models (LLMs) inherently possess enhanced capabilities. In this study, we question this prevalent assumption and conduct an in-depth exploration into the potential of smaller language models (SLMs) in the context of instruction evolution. Extensive experiments across three scenarios of instruction evolution reveal that smaller language models (SLMs) can synthesize more effective instructions than LLMs. Further analysis demonstrates that SLMs possess a broader output space during instruction evolution, resulting in more complex and diverse variants. We also observe that the existing metrics fail to focus on the impact of the instructions. Thus, we propose Instruction Complex-Aware IFD (IC-IFD), which introduces instruction complexity in the original IFD score to evaluate the effectiveness of instruction data more accurately. Our source code is available at: \href{https://github.com/HypherX/Evolution-Analysis}{https://github.com/HypherX/Evolution-Analysis}

指令微调已被广泛应用于释放大型语言模型的全部潜力。值得注意的是,复杂和多样的指令非常重要,因为它们可以有效地使模型与各种下游任务对齐。然而,当前构建大规模指令的方法主要偏向于强大的模型,如GPT-4或那些拥有超过70亿参数模型的实证假设,即此类大型语言模型(LLM)天生具备增强能力。在这项研究中,我们质疑这一普遍假设,并在指令进化的背景下对小型语言模型(SLM)的潜力进行了深入探讨。跨越三种指令进化场景的广泛实验表明,小型语言模型(SLM)可以合成比LLM更有效的指令。进一步的分析表明,在指令进化过程中,SLM具有更广阔的输出空间,从而产生更复杂和多样化的变体。我们还观察到,现有指标未能关注指令的影响。因此,我们提出了指令复杂感知IFD(IC-IFD),在原始IFD分数中引入指令复杂性,以更准确评估指令数据的有效性。我们的源代码可在:https://github.com/HypherX/Evolution-Analysis找到。

论文及项目相关链接

PDF Work in progress

Summary

本研究对指令进化过程中小型语言模型(SLMs)的潜力进行了深入探索,发现相较于大型语言模型(LLMs),SLMs在指令进化过程中能够合成更有效的指令。此外,还提出了一个更加准确的指令数据评估方法——Instruction Complex-Aware IFD(IC-IFD)。

Key Takeaways

- 指令进化在释放大型语言模型(LLMs)潜力方面具有重要意义。

- 当前方法主要关注于使用强大模型如GPT-4或参数超过70亿的模型,但本研究对此假设提出质疑。

- 研究发现小型语言模型(SLMs)在指令进化过程中能合成更有效的指令。

- SLMs在指令进化过程中展现出更广阔的输出空间,能生成更复杂、更多样化的变体。

- 现有评估指标未能充分关注指令的影响,因此提出了Instruction Complex-Aware IFD(IC-IFD)来更准确地评估指令数据的有效性。

- 本研究的源代码已公开发布,可访问链接。

点此查看论文截图

FinGPT: Enhancing Sentiment-Based Stock Movement Prediction with Dissemination-Aware and Context-Enriched LLMs

Authors:Yixuan Liang, Yuncong Liu, Boyu Zhang, Christina Dan Wang, Hongyang Yang

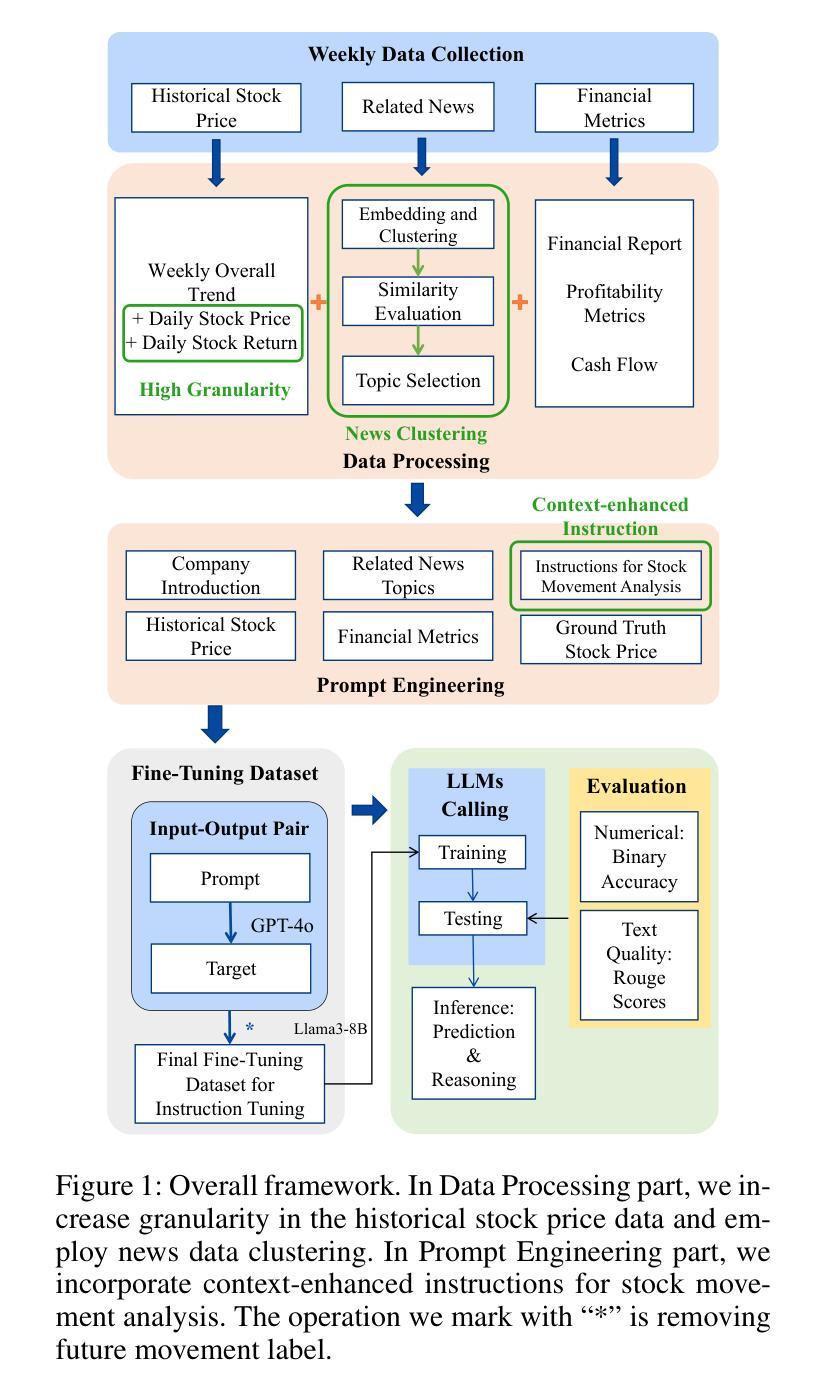

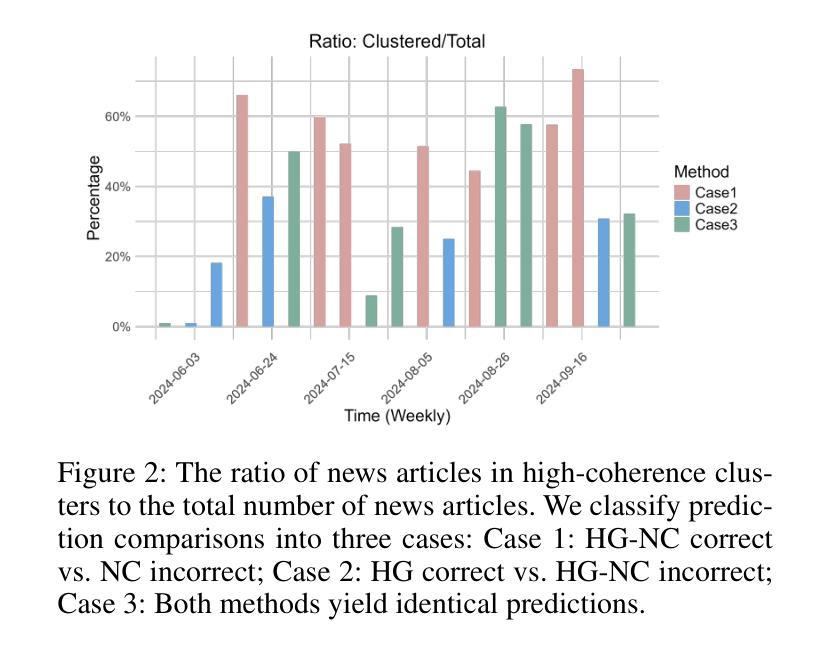

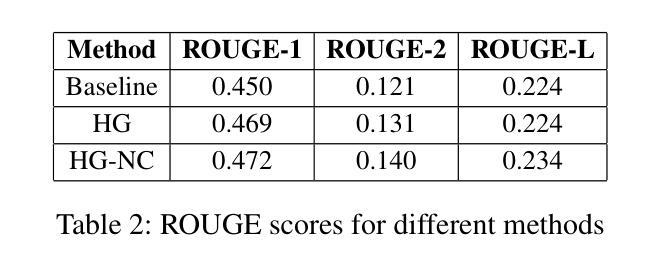

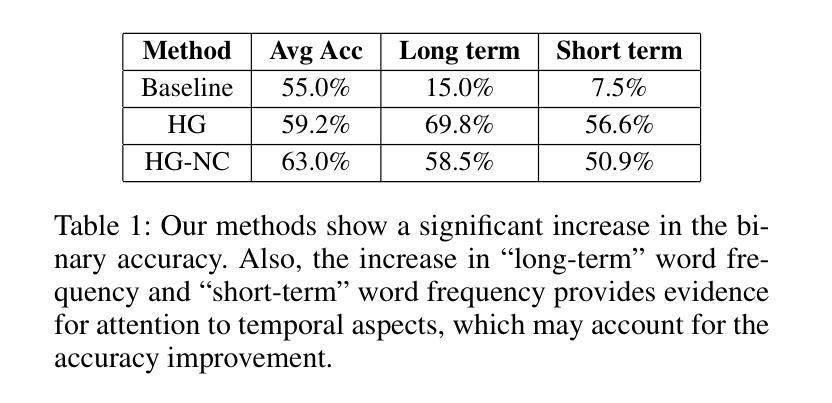

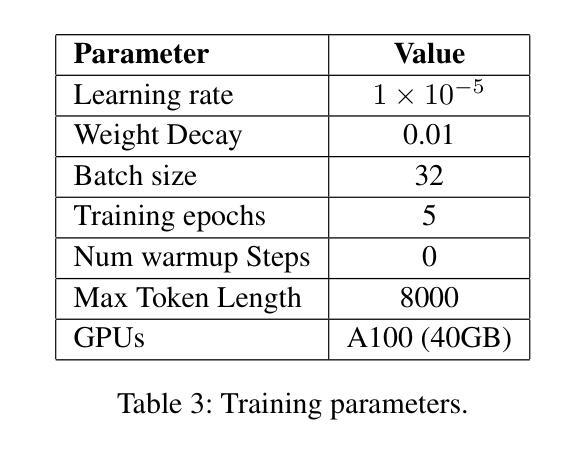

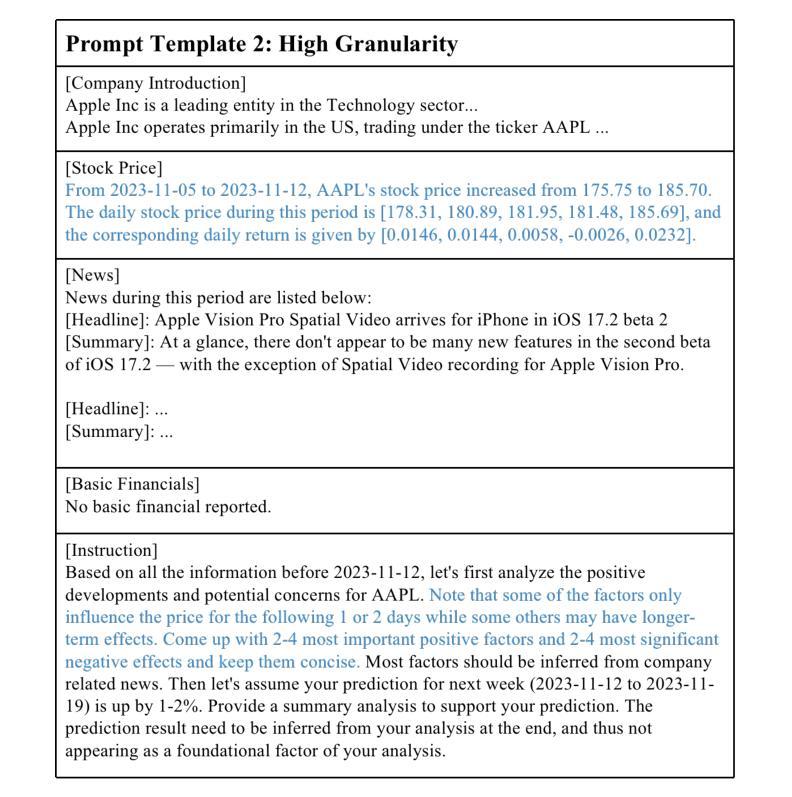

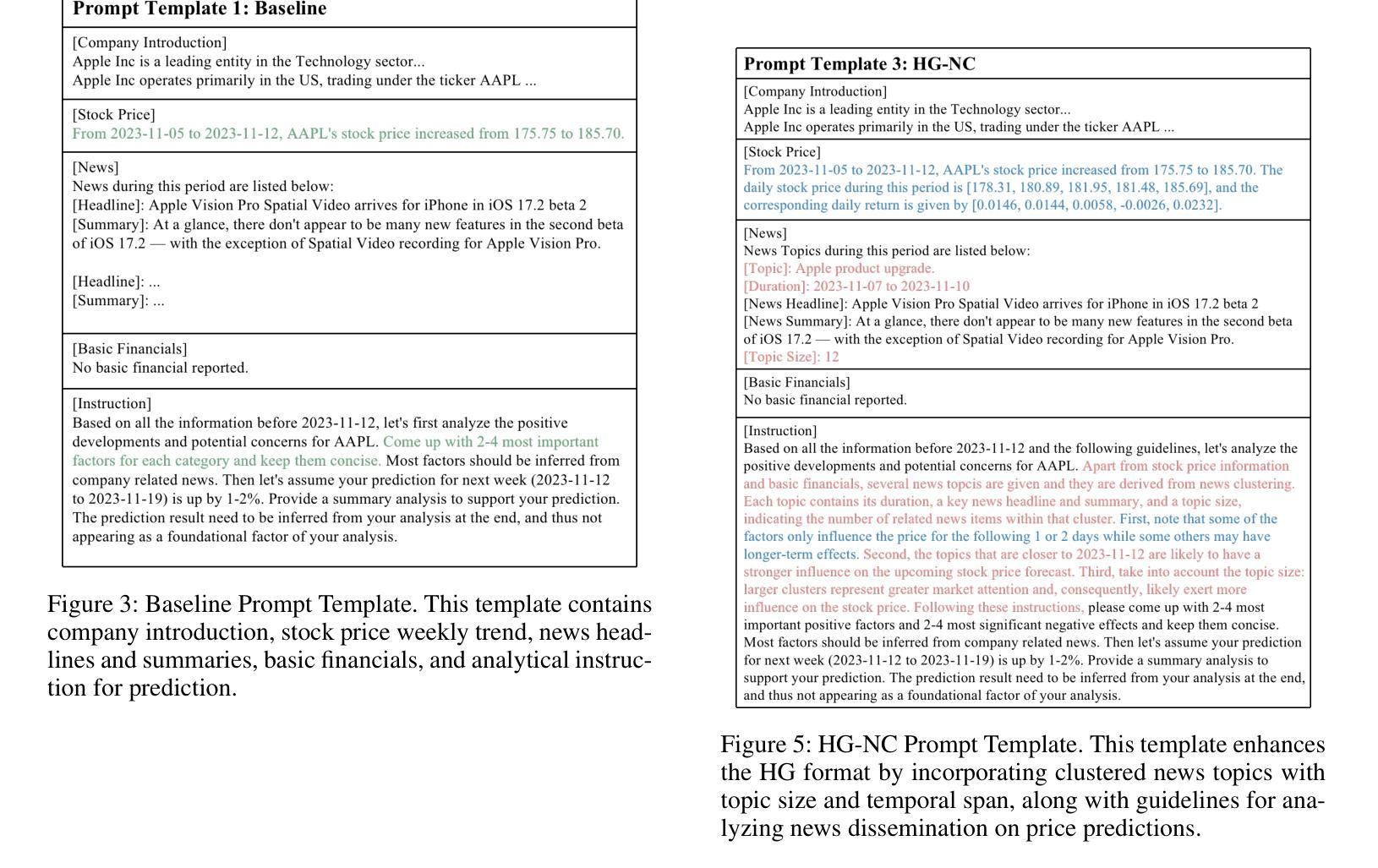

Financial sentiment analysis is crucial for understanding the influence of news on stock prices. Recently, large language models (LLMs) have been widely adopted for this purpose due to their advanced text analysis capabilities. However, these models often only consider the news content itself, ignoring its dissemination, which hampers accurate prediction of short-term stock movements. Additionally, current methods often lack sufficient contextual data and explicit instructions in their prompts, limiting LLMs’ ability to interpret news. In this paper, we propose a data-driven approach that enhances LLM-powered sentiment-based stock movement predictions by incorporating news dissemination breadth, contextual data, and explicit instructions. We cluster recent company-related news to assess its reach and influence, enriching prompts with more specific data and precise instructions. This data is used to construct an instruction tuning dataset to fine-tune an LLM for predicting short-term stock price movements. Our experimental results show that our approach improves prediction accuracy by 8% compared to existing methods.

金融情感分析对于理解新闻对股票价格的影响至关重要。最近,由于大型语言模型(LLM)具有先进的文本分析能力,因此被广泛应用于此目的。然而,这些模型通常只考虑新闻内容本身,而忽略了其传播情况,这阻碍了短期股票走势的准确预测。此外,当前的方法往往缺乏足够的上下文数据和明确的指令提示,限制了LLM解释新闻的能力。在本文中,我们提出了一种数据驱动的方法,通过结合新闻传播的广度、上下文数据和明确的指令,提高了基于LLM的情感驱动股票走势预测。我们对最近的与公司相关的新闻进行聚类,以评估其传播范围和影响力,并通过更具体的数据和精确指令丰富提示。这些数据用于构建指令微调数据集,以微调LLM,以预测短期股票价格走势。我们的实验结果表明,与现有方法相比,我们的方法提高了8%的预测精度。

论文及项目相关链接

PDF 1st Workshop on Preparing Good Data for Generative AI: Challenges and Approaches@ AAAI 2025

Summary

财经情感分析对于理解新闻对股价的影响至关重要。大型语言模型(LLM)因其先进的文本分析能力而广泛应用于此领域。然而,现有方法常常仅关注新闻内容本身,忽略了新闻的扩散,这阻碍了短期股市走势的准确预测。本文提出了一种数据驱动的方法,通过融入新闻传播广度、上下文数据和明确指令,提高LLM在情感基础上的股市预测能力。通过聚类公司相关新闻来评估其传播范围和影响力,丰富了具有更多特定数据和精确指令的提示。实验结果表明,与现有方法相比,该方法提高了8%的预测准确性。

Key Takeaways

- 财经情感分析对于预测股市走势非常重要。

- 大型语言模型(LLM)在财经情感分析领域有广泛应用。

- 现有LLM在预测短期股市走势时,常常仅关注新闻内容本身,忽略了新闻扩散。

- 本文提出了一种数据驱动的方法,融合了新闻传播广度、上下文数据和明确指令,以提高LLM的股市预测能力。

- 通过聚类公司相关新闻评估其传播范围和影响力。

- 丰富了具有更多特定数据和精确指令的提示,构建了指令调优数据集。

点此查看论文截图

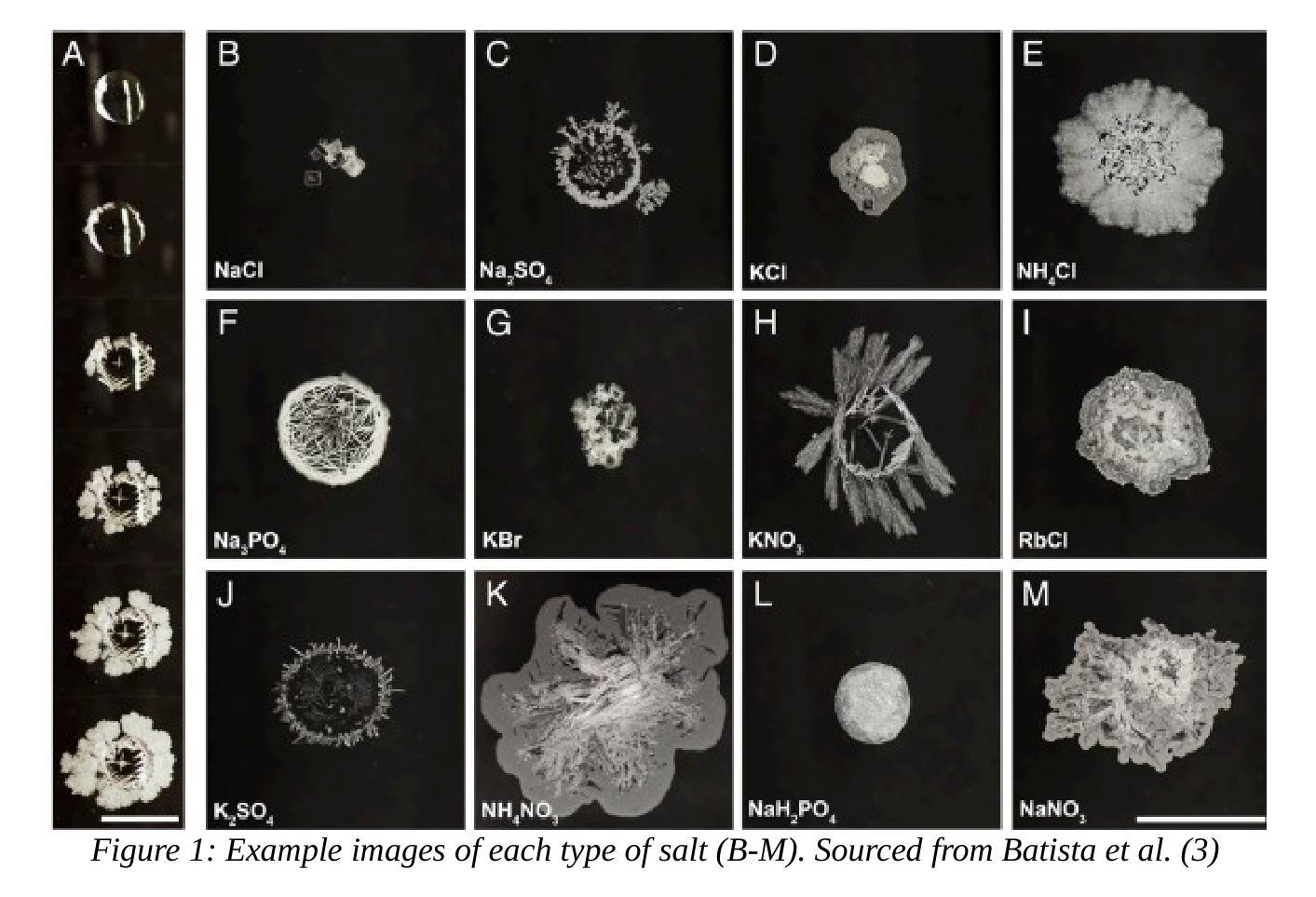

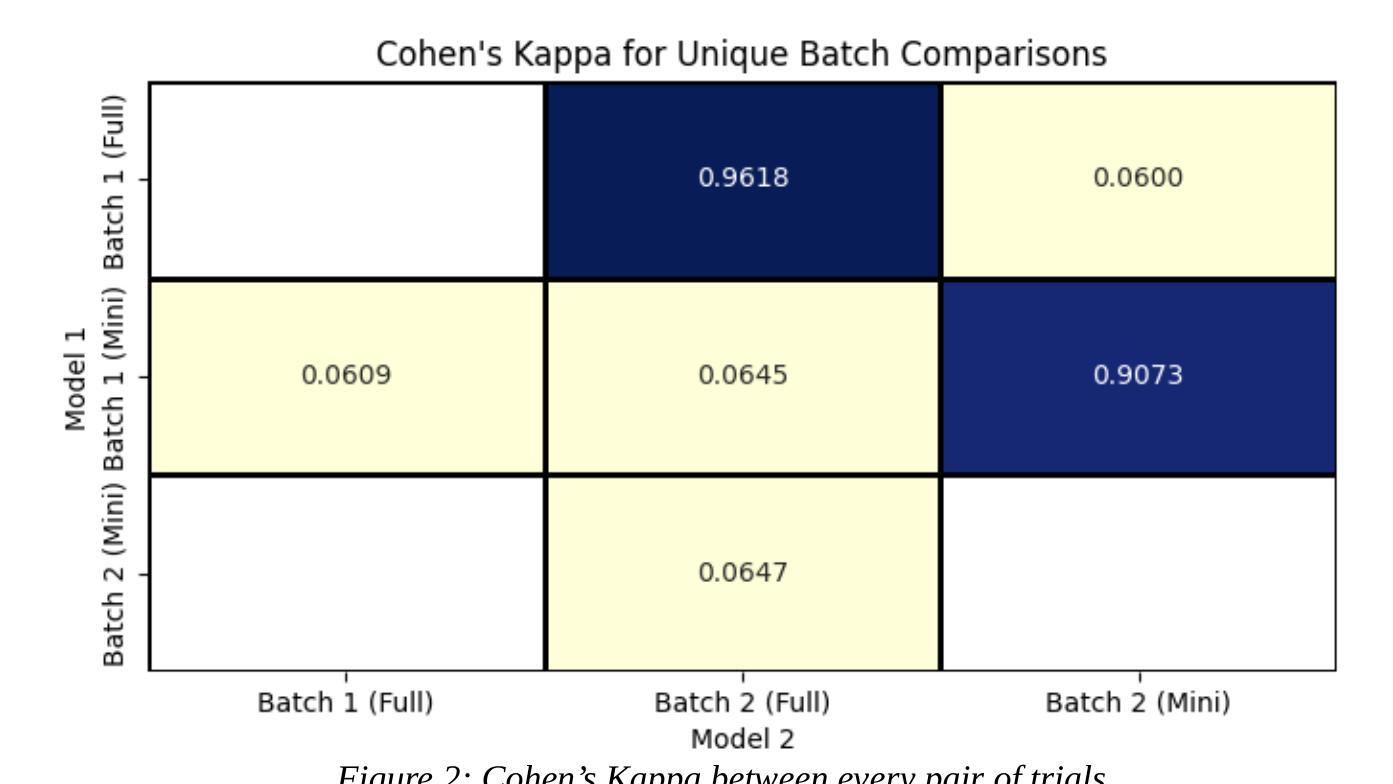

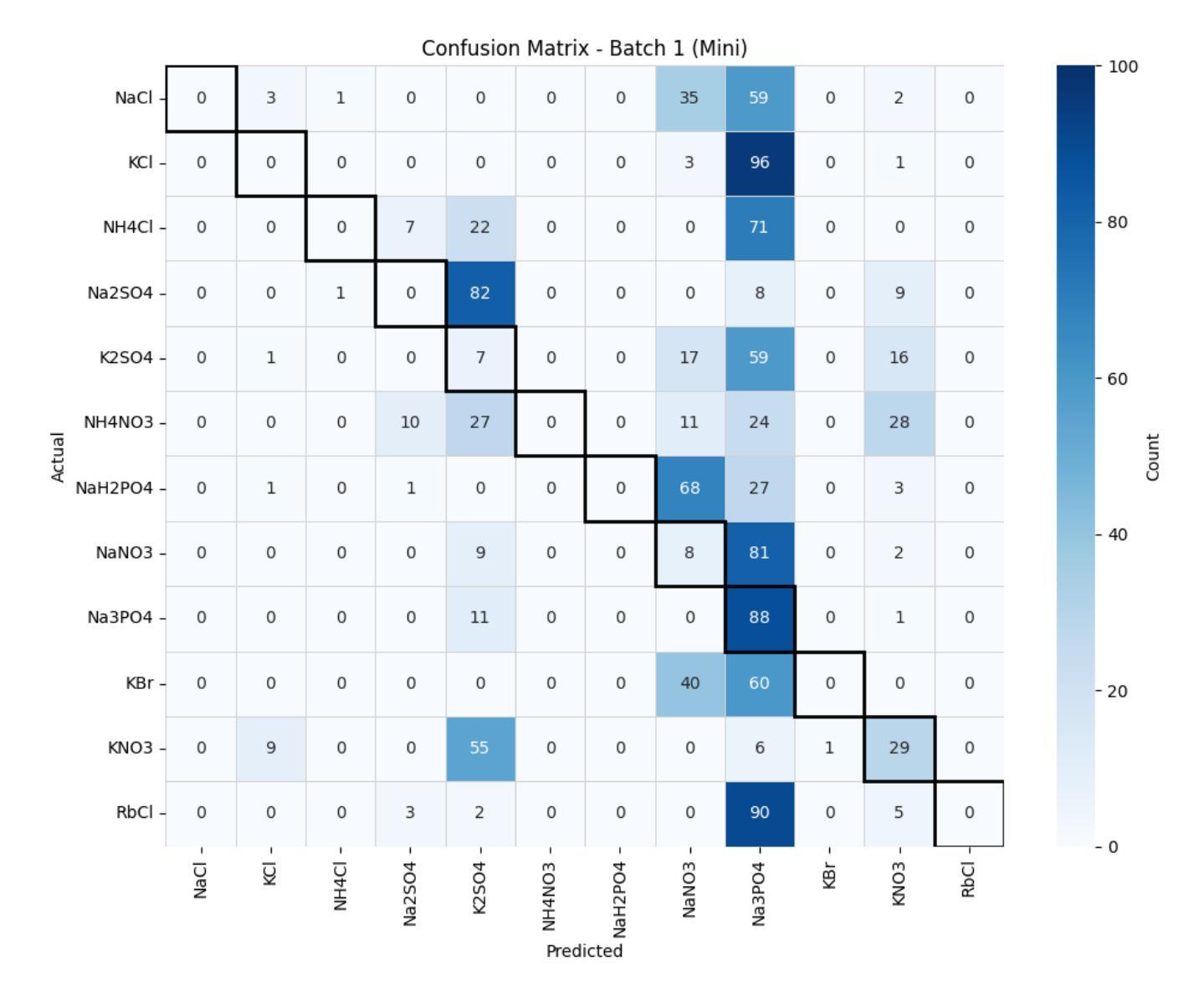

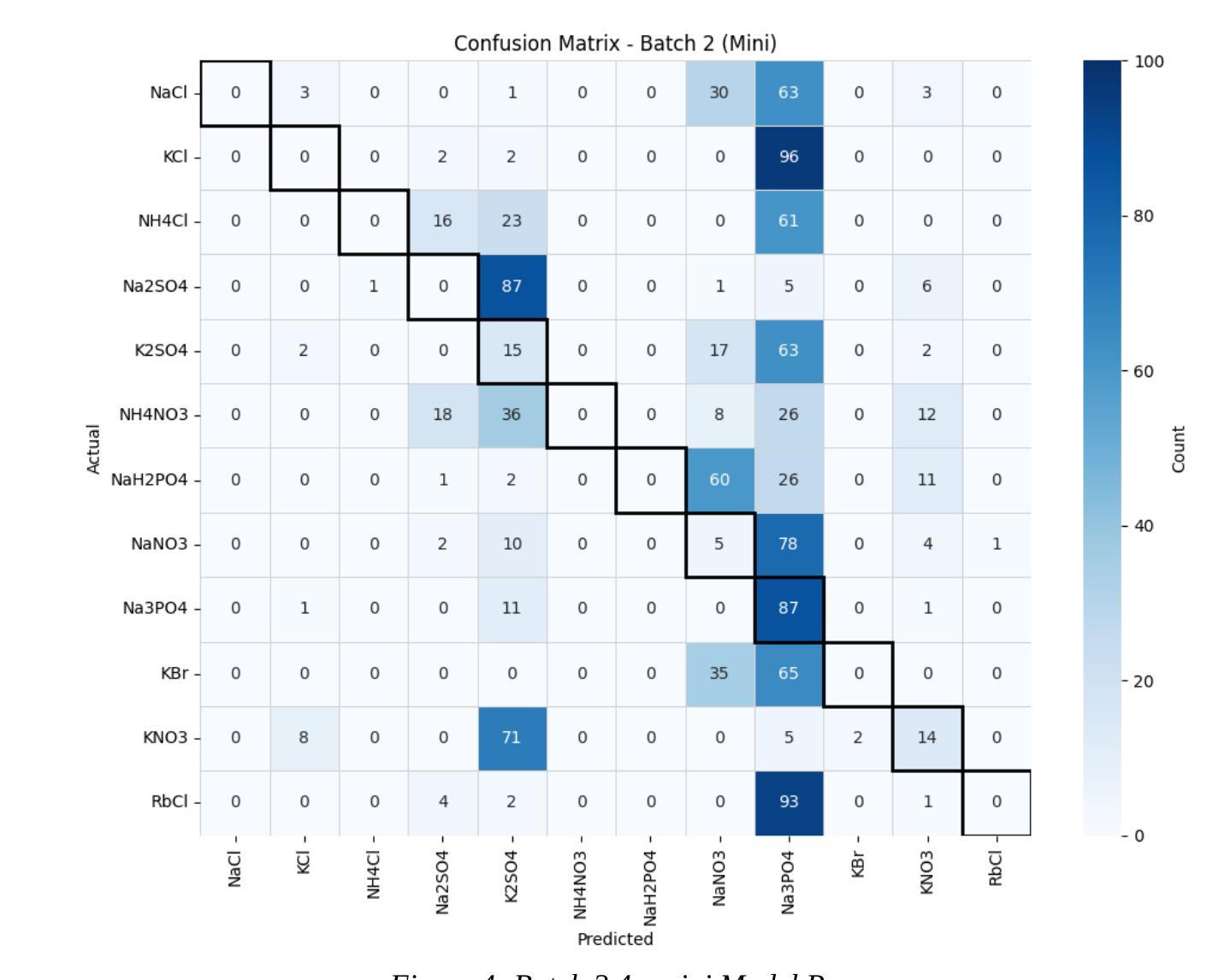

Evaluation of GPT-4o & GPT-4o-mini’s Vision Capabilities for Salt Evaporite Identification

Authors:Deven B. Dangi, Beni B. Dangi, Oliver Steinbock

Identifying salts from images of their ‘stains’ has diverse practical applications. While specialized AI models are being developed, this paper explores the potential of OpenAI’s state-of-the-art vision models (GPT-4o and GPT-4o-mini) as an immediate solution. Testing with 12 different types of salts, the GPT-4o model achieved 57% accuracy and a 0.52 F1 score, significantly outperforming both random chance (8%) and GPT-4o mini (11% accuracy). Results suggest that current vision models could serve as an interim solution for salt identification from stain images.

从盐的“污渍”图像中识别盐具有多种实际应用。虽然正在开发专门的AI模型,但本文探讨了OpenAI最先进的视觉模型(GPT-4o和GPT-4o-mini)作为即时解决方案的潜力。通过对12种不同类型的盐进行测试,GPT-4o模型达到了57%的准确率和0.52的F1分数,显著优于随机猜测(8%)和GPT-4o mini(11%的准确率)。结果表明,当前的视觉模型可以作为从污渍图像中识别盐的临时解决方案。

论文及项目相关链接

PDF 11 pages, 7 figures

Summary

基于OpenAI先进视觉模型的盐类图像识别研究,尝试使用GPT-4o模型对十二种不同盐类进行识别,准确率达到了57%,F1分数为0.52,远超随机猜测和GPT-4o mini模型的表现。结果暗示当前视觉模型可作为盐类污渍图像识别的临时解决方案。

Key Takeaways

- 研究尝试使用OpenAI的先进视觉模型(GPT-4o)进行盐类识别。

- 研究对象为十二种不同类型的盐。

- GPT-4o模型取得了较高的准确率(57%)和F1分数(0.52)。

- GPT-4o模型的表现优于随机猜测和GPT-4o mini模型。

- 当前视觉模型在盐类污渍图像识别上具有实用价值。

- 此项研究为盐类识别提供了一种临时解决方案。

点此查看论文截图

Performance of ChatGPT on tasks involving physics visual representations: the case of the Brief Electricity and Magnetism Assessment

Authors:Giulia Polverini, Jakob Melin, Elias Onerud, Bor Gregorcic

Artificial intelligence-based chatbots are increasingly influencing physics education due to their ability to interpret and respond to textual and visual inputs. This study evaluates the performance of two large multimodal model-based chatbots, ChatGPT-4 and ChatGPT-4o on the Brief Electricity and Magnetism Assessment (BEMA), a conceptual physics inventory rich in visual representations such as vector fields, circuit diagrams, and graphs. Quantitative analysis shows that ChatGPT-4o outperforms both ChatGPT-4 and a large sample of university students, and demonstrates improvements in ChatGPT-4o’s vision interpretation ability over its predecessor ChatGPT-4. However, qualitative analysis of ChatGPT-4o’s responses reveals persistent challenges. We identified three types of difficulties in the chatbot’s responses to tasks on BEMA: (1) difficulties with visual interpretation, (2) difficulties in providing correct physics laws or rules, and (3) difficulties with spatial coordination and application of physics representations. Spatial reasoning tasks, particularly those requiring the use of the right-hand rule, proved especially problematic. These findings highlight that the most broadly used large multimodal model-based chatbot, ChatGPT-4o, still exhibits significant difficulties in engaging with physics tasks involving visual representations. While the chatbot shows potential for educational applications, including personalized tutoring and accessibility support for students who are blind or have low vision, its limitations necessitate caution. On the other hand, our findings can also be leveraged to design assessments that are difficult for chatbots to solve.

基于人工智能的聊天机器人由于其解释和响应文本和视觉输入的能力,正越来越影响物理教育。本研究评估了两个大型多模式模型基础聊天机器人ChatGPT-4和ChatGPT-4o在《简短的电学与磁学评估》(BEMA)上的表现。BEMA是一个概念丰富的物理库存,包含矢量场、电路图和图表等视觉表现形式。定量分析表明,ChatGPT-4o在ChatGPT-4和大量大学生样本中的表现更为出色,并展示了其在视觉解释能力方面相较于前代ChatGPT-4的进步。然而,对ChatGPT-4o的回应进行定性分析揭示了持续存在的挑战。我们将chatbot在BEMA任务上的困难分为三个类别:(1)视觉解释的困难,(2)提供正确的物理定律或规则的困难,(3)空间协调和物理表达应用的困难。空间推理任务,特别是那些需要使用右手规则的任务,证明尤其具有挑战性。这些发现表明,最广泛使用的大型多模式模型基础聊天机器人ChatGPT-4o在与涉及视觉表达的物理任务交互时仍面临重大困难。虽然聊天机器人在教育应用方面显示出潜力,包括个性化辅导和支持视力障碍或视力不佳的学生,但其局限性需要谨慎对待。另一方面,我们的发现也可以用来设计聊天机器人难以解决的评估问题。

论文及项目相关链接

摘要

基于人工智能的聊天机器人因能解读和回应文本和视觉输入,对物理教育产生越来越大的影响。本研究评估了两个大型多模式模型聊天机器人——ChatGPT-4和ChatGPT-4o在富含矢量场、电路图和图表等视觉表现的简短电力与磁学评估(BEMA)上的表现。定量分析显示,ChatGPT-4o在ChatGPT-4以及大量大学生样本中表现出色,并在视觉解读能力上有所进步。然而,对ChatGPT-4o的回应进行定性分析揭示了持续存在的挑战。我们确定了聊天机器人在BEMA任务回应中的三种困难:1)视觉解读困难,2)提供正确的物理定律或规则方面的困难,以及3)空间协调和物理表征应用方面的困难。特别是需要使用右手定则的空间推理任务,证明尤其存在问题。这些发现表明,最广泛使用的基于大型多模式模型的聊天机器人ChatGPT-4o在涉及视觉表现的物理任务中仍存在显著困难。虽然聊天机器人在教育应用方面显示出潜力,包括个性化辅导和失明或低视力学生的辅助支持,但其局限性需要谨慎对待。另一方面,我们的发现也可以用来设计聊天机器人难以解决的评估题目。

关键见解

- 基于人工智能的聊天机器人在物理教育中扮演着越来越重要的角色,尤其在解读和回应文本和视觉输入方面。

- ChatGPT-4o在视觉解读能力上相较于其前身ChatGPT-4有所提升。

- 在涉及视觉表现的物理任务中,聊天机器人面临三大挑战:视觉解读、提供正确物理知识和应用空间推理的困难。

- 空间推理任务对聊天机器人来说尤其具有挑战性,需要使用右手定则的任务尤为困难。

- 聊天机器人在教育应用方面具有潜力,如个性化辅导和低视力学生的支持。

- 聊天机器人存在局限性,需要在应用时谨慎对待。

点此查看论文截图