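⚠️ All summaries below are generated by a large language model. They may contain errors, are for reference only, and should be used with caution.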

🔴 Note: never use these summaries in serious academic settings; they are only suitable for initial screening before reading a paper!

Updated 2024-12-24

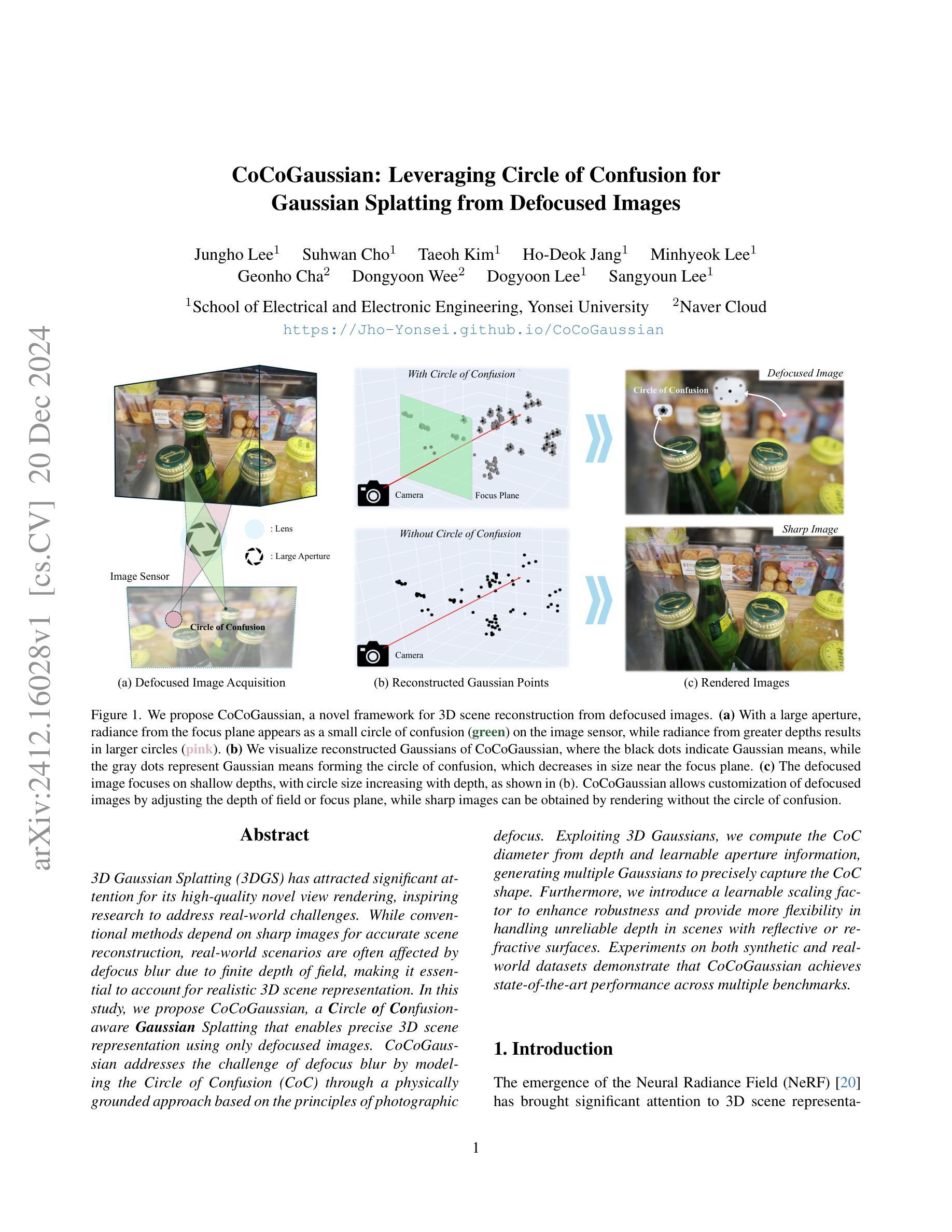

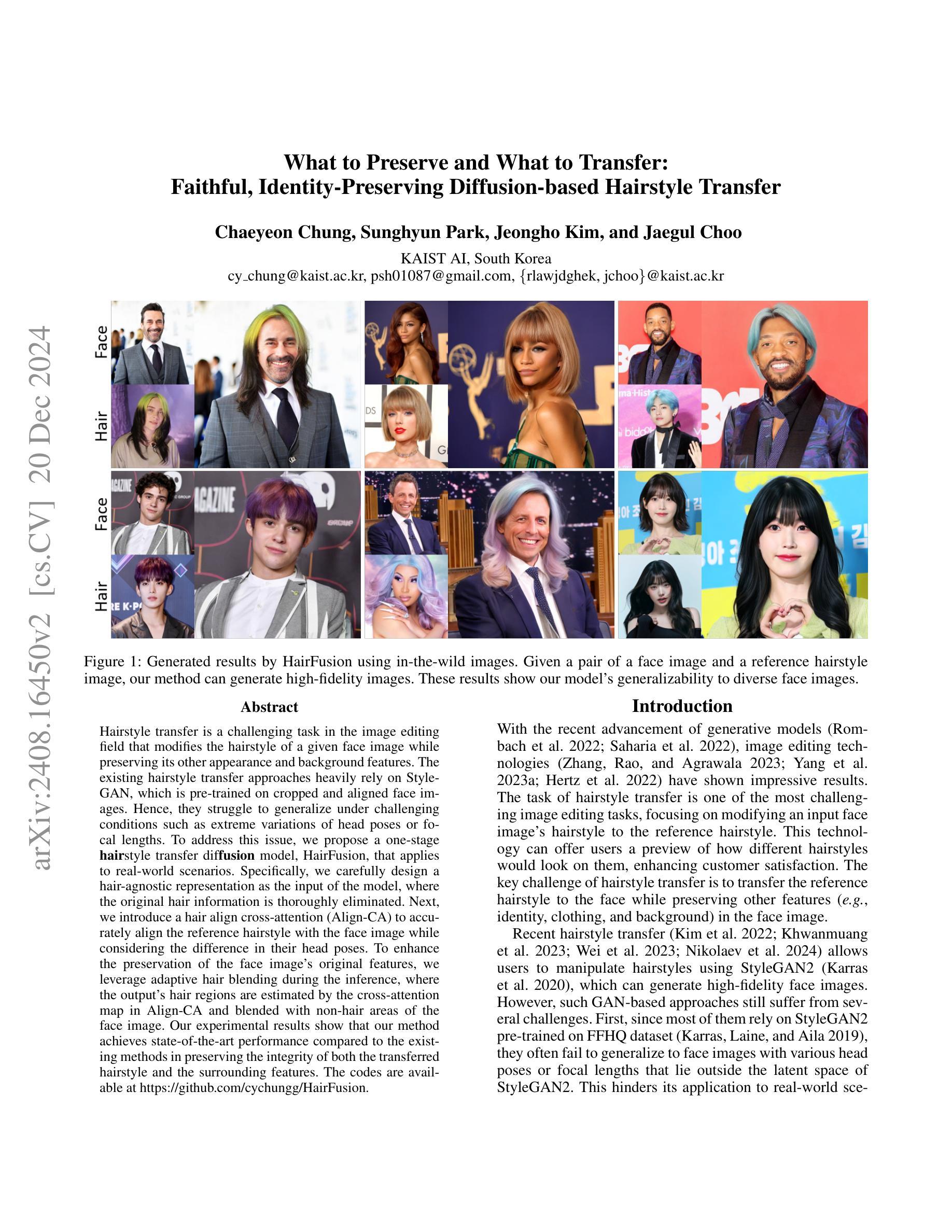

What to Preserve and What to Transfer: Faithful, Identity-Preserving Diffusion-based Hairstyle Transfer

Authors:Chaeyeon Chung, Sunghyun Park, Jeongho Kim, Jaegul Choo

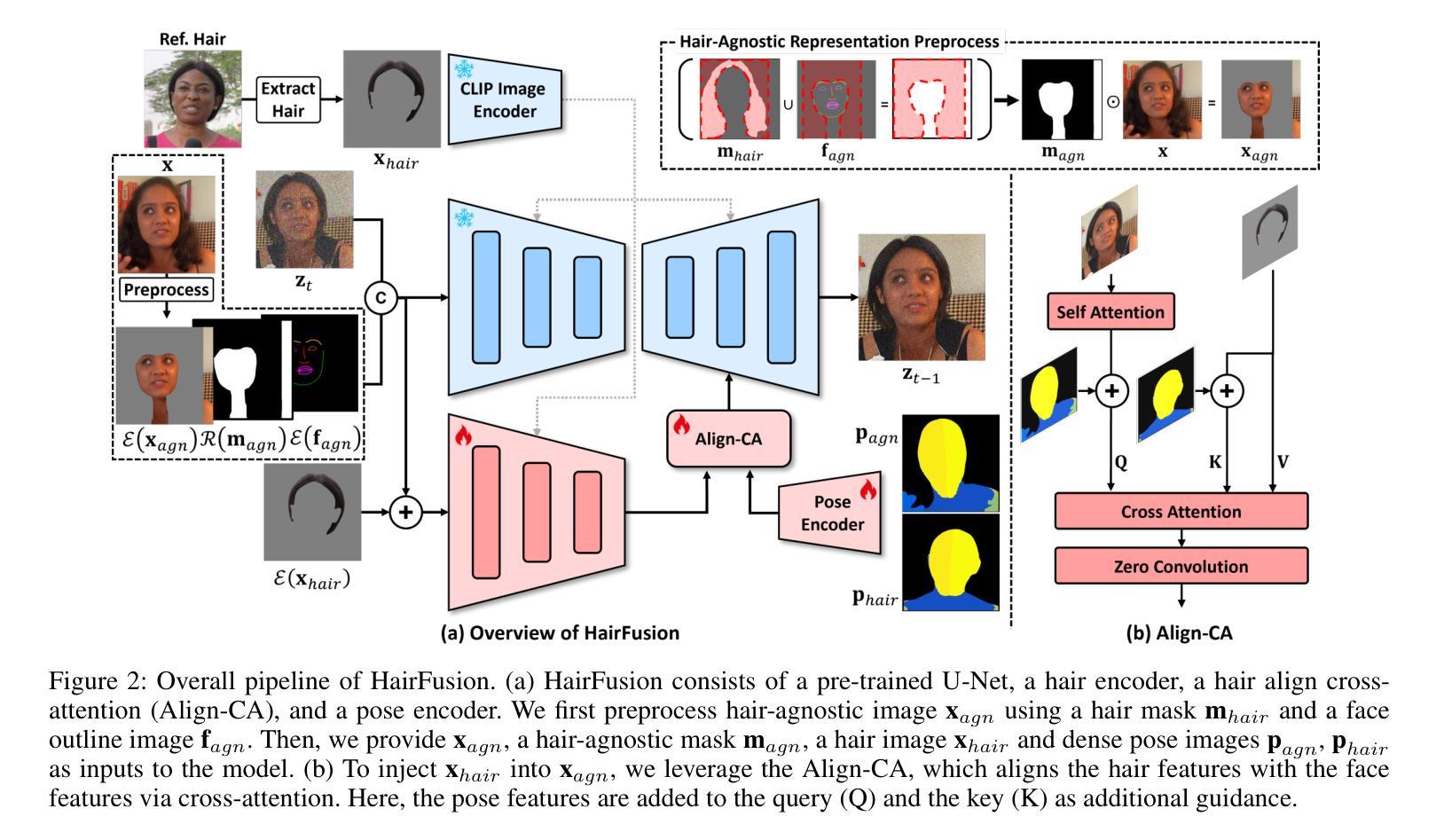

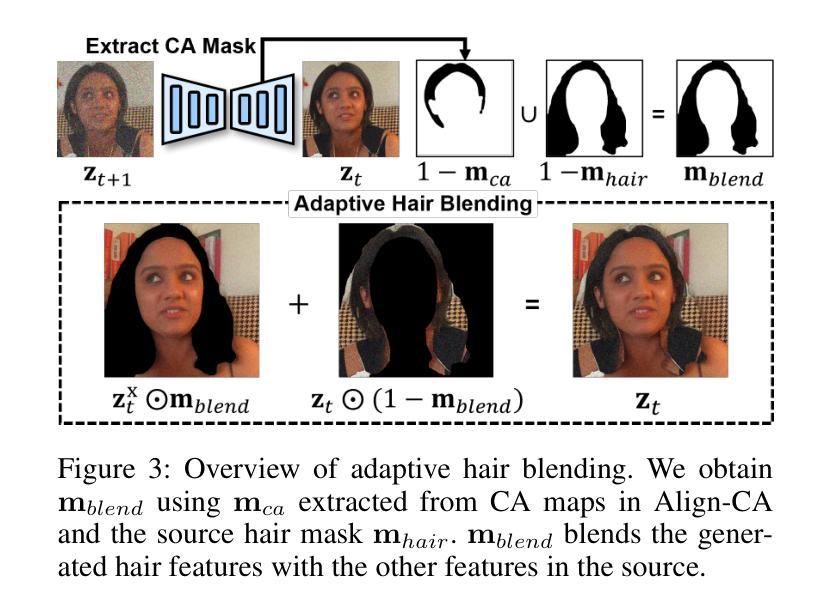

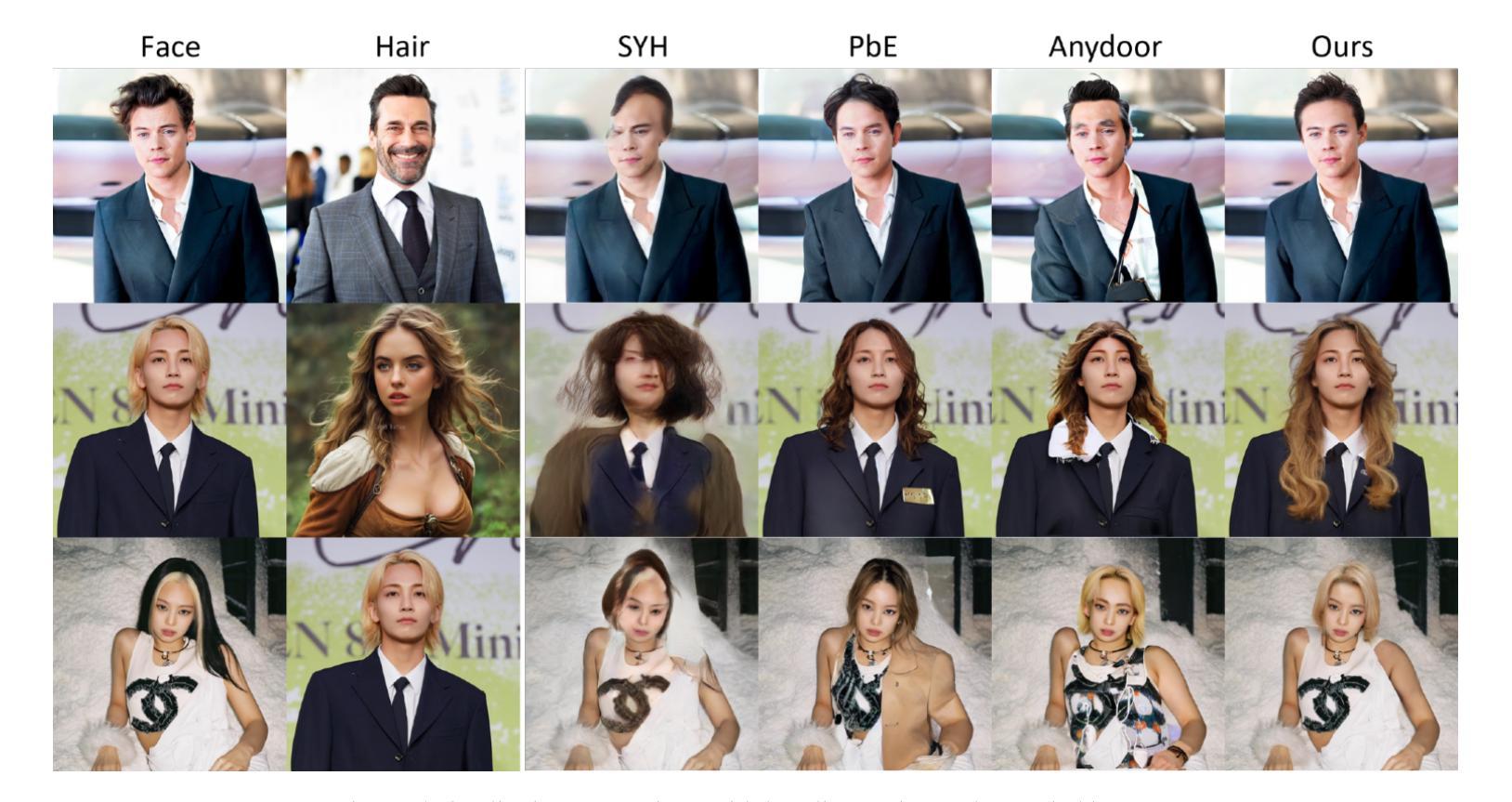

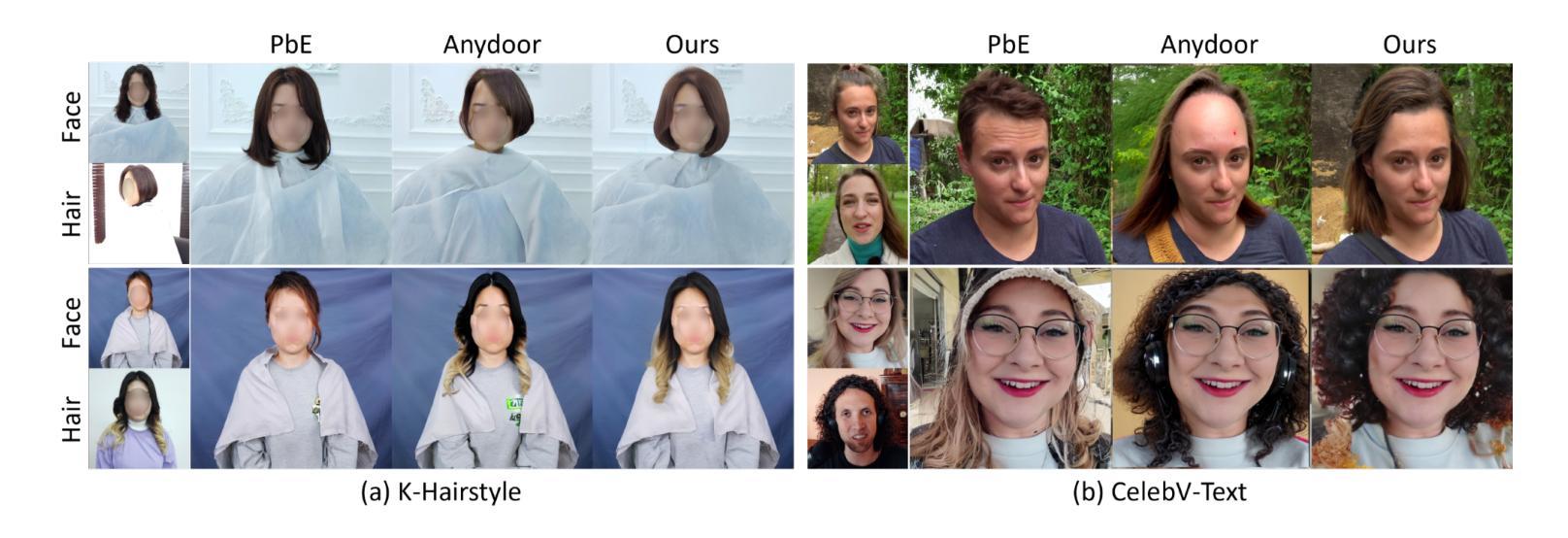

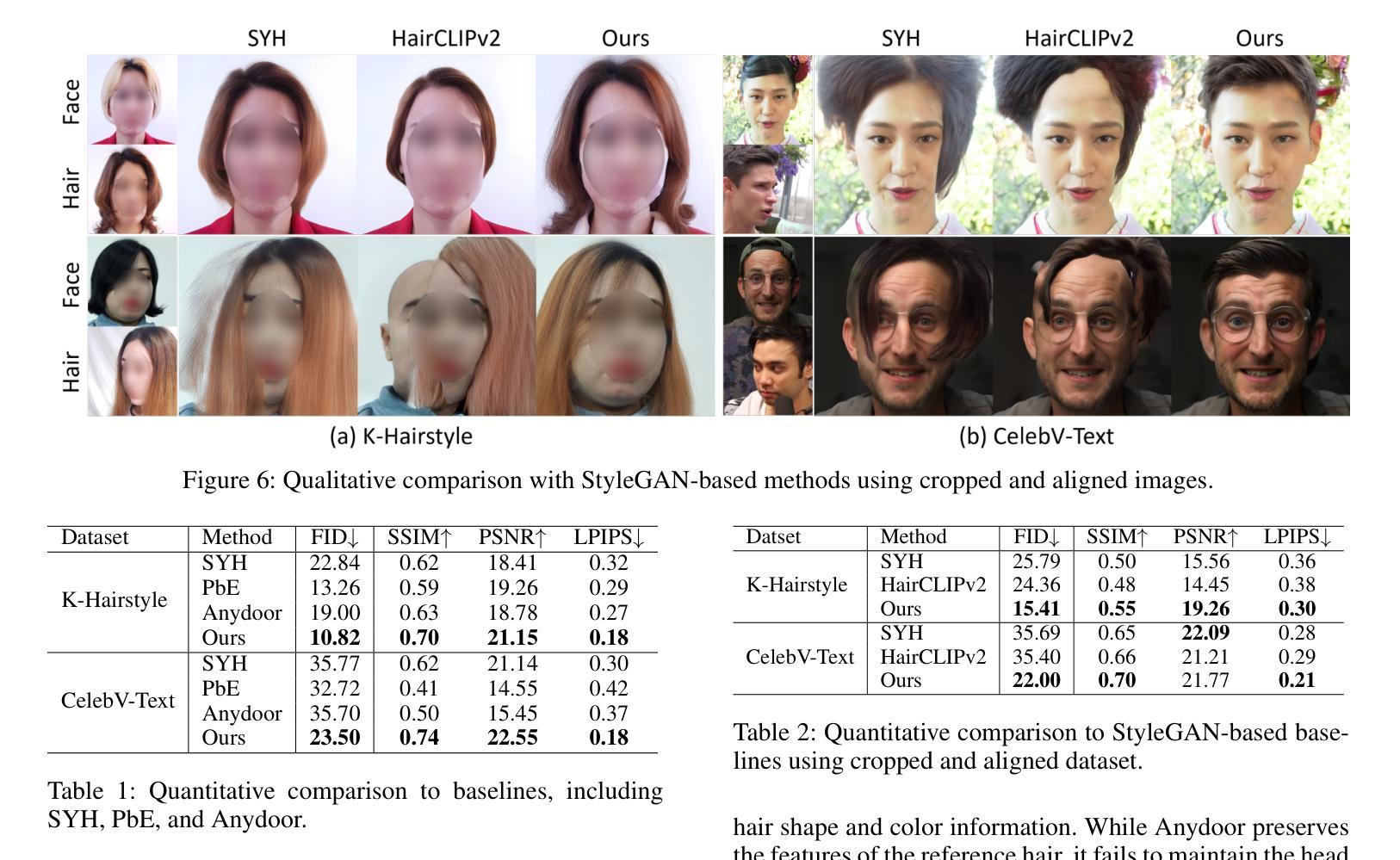

Hairstyle transfer is a challenging task in the image editing field that modifies the hairstyle of a given face image while preserving its other appearance and background features. The existing hairstyle transfer approaches heavily rely on StyleGAN, which is pre-trained on cropped and aligned face images. Hence, they struggle to generalize under challenging conditions such as extreme variations of head poses or focal lengths. To address this issue, we propose a one-stage hairstyle transfer diffusion model, HairFusion, that applies to real-world scenarios. Specifically, we carefully design a hair-agnostic representation as the input of the model, where the original hair information is thoroughly eliminated. Next, we introduce a hair align cross-attention (Align-CA) to accurately align the reference hairstyle with the face image while considering the difference in their head poses. To enhance the preservation of the face image’s original features, we leverage adaptive hair blending during the inference, where the output’s hair regions are estimated by the cross-attention map in Align-CA and blended with non-hair areas of the face image. Our experimental results show that our method achieves state-of-the-art performance compared to the existing methods in preserving the integrity of both the transferred hairstyle and the surrounding features. The codes are available at https://github.com/cychungg/HairFusion

Paper and project links

PDF Accepted to AAAI 2025

Summary

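This paper proposes HairFusion, a diffusion-based hairstyle transfer method that applies to real-world scenarios. The model takes a hair-agnostic representation as input, in which the original hair information is thoroughly eliminated. A hair align cross-attention (Align-CA) aligns the reference hairstyle with the face image while accounting for the difference in their head poses. Adaptive hair blending during inference enhances the preservation of the face image's original features. Experiments show that the method achieves state-of-the-art performance in preserving the integrity of both the transferred hairstyle and the surrounding features.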

Key Takeaways

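- HairFusion is a diffusion-based hairstyle transfer method that applies to real-world scenarios.

- The model takes a hair-agnostic representation as input, which eliminates the original hair information.

- A hair align cross-attention (Align-CA) aligns the reference hairstyle with the face image.

- Align-CA accounts for the difference in head poses, which improves the accuracy of the hairstyle transfer.

- Adaptive hair blending enhances the preservation of the face image's original features.

- Experiments show that the method achieves state-of-the-art performance in preserving the integrity of both the transferred hairstyle and the surrounding features.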
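The blending step described in the abstract (hair regions estimated from an attention map, then blended with the non-hair areas of the face image) can be illustrated with a toy sketch. This is not the HairFusion implementation: the min-max normalisation, the fixed threshold, and the names `attention_to_mask` and `blend` are all assumptions made for illustration, and the images are tiny grayscale grids rather than real tensors.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def attention_to_mask(attn: Image, threshold: float = 0.5) -> Image:
    """Min-max normalise an attention map and binarise it into a hair mask.

    The threshold value is an assumption made for illustration only.
    """
    flat = [v for row in attn for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant map
    return [[1.0 if (v - lo) / span >= threshold else 0.0 for v in row] for row in attn]


def blend(generated: Image, original: Image, mask: Image) -> Image:
    """Keep generated pixels where the mask is 1 and original pixels where it is 0."""
    return [
        [m * g + (1.0 - m) * o for g, o, m in zip(g_row, o_row, m_row)]
        for g_row, o_row, m_row in zip(generated, original, mask)
    ]


# Toy 2x3 example: attention is high over the top row (the "hair" region).
attn = [[0.9, 0.8, 0.7], [0.1, 0.2, 0.0]]
generated = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]  # model output
original = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]   # input face image

mask = attention_to_mask(attn)
result = blend(generated, original, mask)
```

In this toy case the top row of `result` comes from the generated image and the bottom row from the original, which is the behaviour the abstract attributes to blending: only the estimated hair region is replaced.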

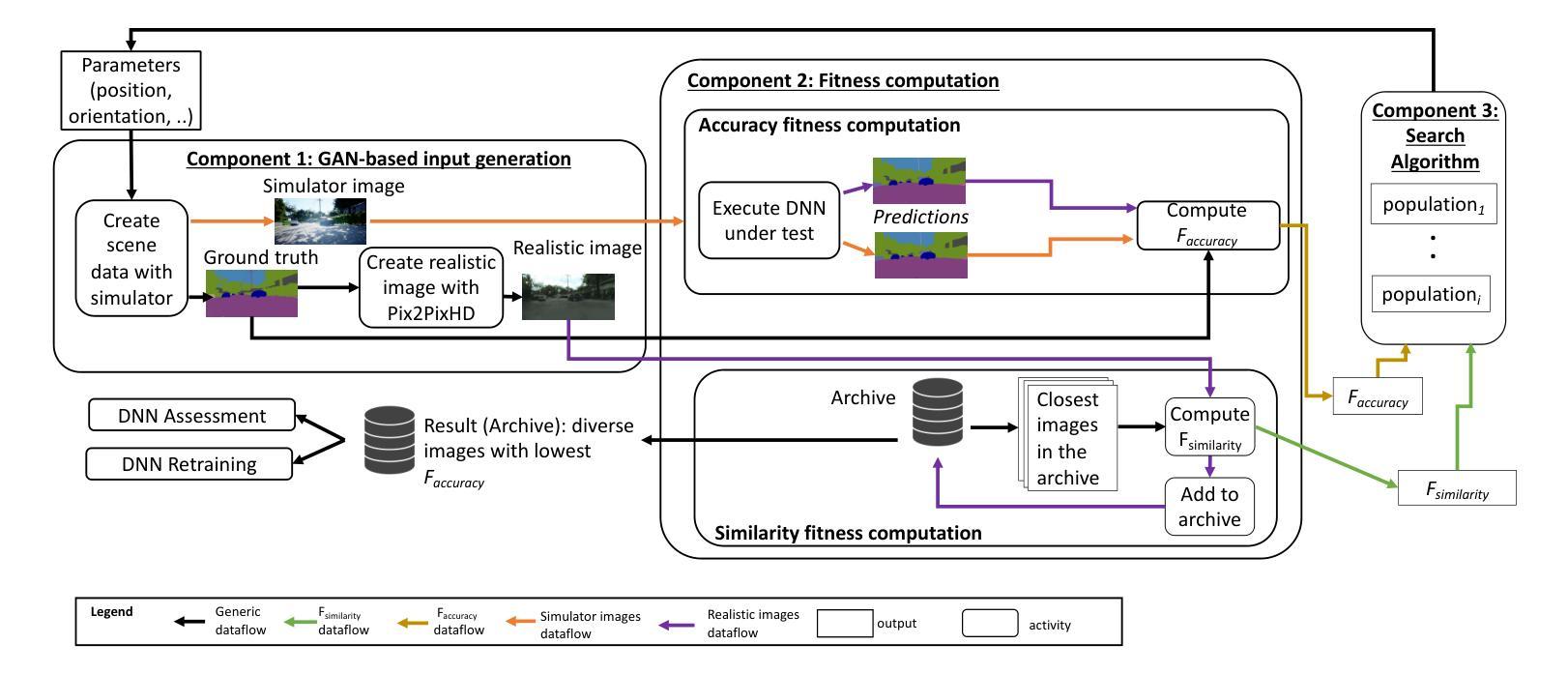

Search-based DNN Testing and Retraining with GAN-enhanced Simulations

Authors:Mohammed Oualid Attaoui, Fabrizio Pastore, Lionel Briand

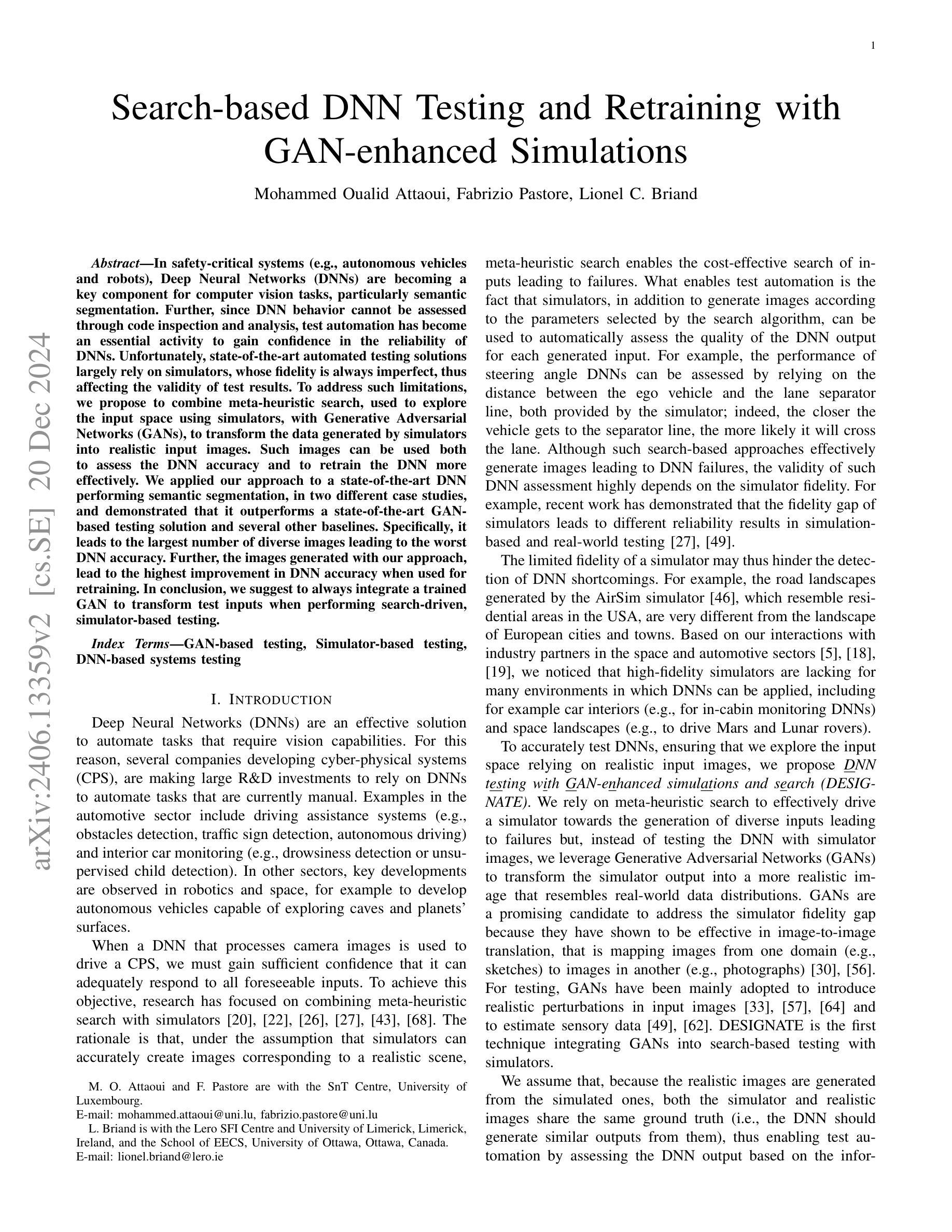

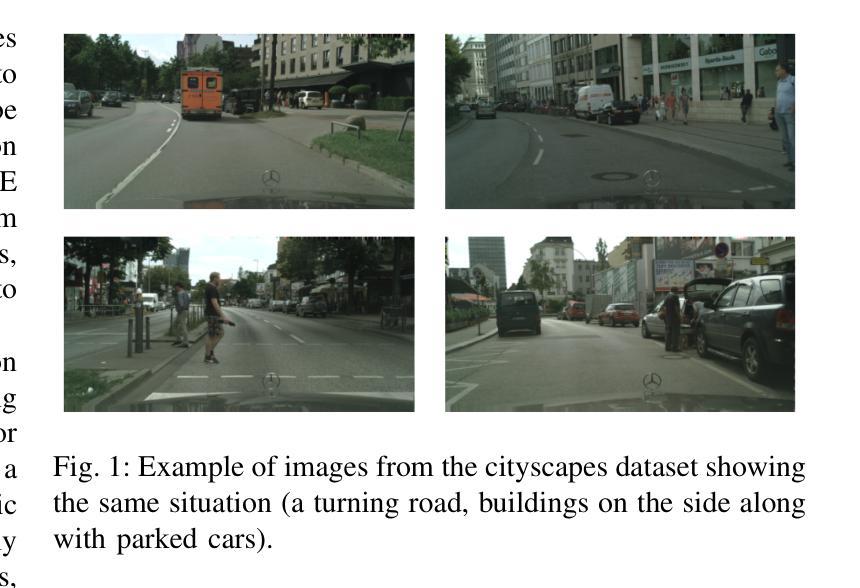

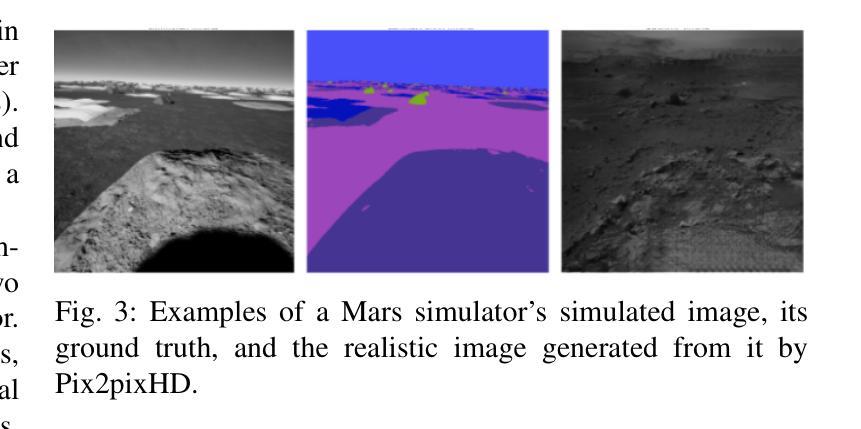

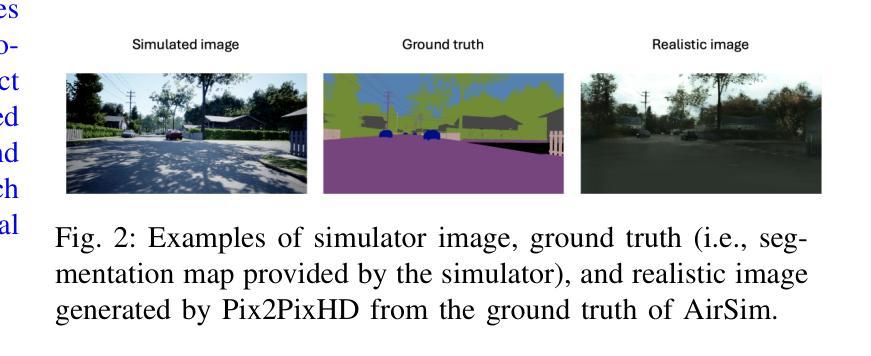

In safety-critical systems (e.g., autonomous vehicles and robots), Deep Neural Networks (DNNs) are becoming a key component for computer vision tasks, particularly semantic segmentation. Further, since the DNN behavior cannot be assessed through code inspection and analysis, test automation has become an essential activity to gain confidence in the reliability of DNNs. Unfortunately, state-of-the-art automated testing solutions largely rely on simulators, whose fidelity is always imperfect, thus affecting the validity of test results. To address such limitations, we propose to combine meta-heuristic search, used to explore the input space using simulators, with Generative Adversarial Networks (GANs), to transform the data generated by simulators into realistic input images. Such images can be used both to assess the DNN performance and to retrain the DNN more effectively. We applied our approach to a state-of-the-art DNN performing semantic segmentation and demonstrated that it outperforms a state-of-the-art GAN-based testing solution and several baselines. Specifically, it leads to the largest number of diverse images leading to the worst DNN performance. Further, the images generated with our approach, lead to the highest improvement in DNN performance when used for retraining. In conclusion, we suggest to always integrate GAN components when performing search-driven, simulator-based testing.

Paper and project links

PDF 18 pages, 5 figures, 13 tables

Summary

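Deep Neural Networks (DNNs) have become a key component for computer vision tasks, particularly semantic segmentation, in safety-critical systems such as autonomous vehicles and robots. Test automation has therefore become essential for gaining confidence in DNN reliability. However, current automated testing solutions largely rely on simulators, whose fidelity is always imperfect, which affects the validity of test results. This work combines meta-heuristic search with Generative Adversarial Networks (GANs) to transform simulator-generated data into realistic input images, which are used both to assess DNN performance and to retrain the DNN more effectively. The results show that the approach outperforms a state-of-the-art GAN-based testing solution and several baselines, and yields the highest improvement in DNN performance when its images are used for retraining. The authors therefore suggest always integrating GAN components in search-driven, simulator-based testing.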

Key Takeaways

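- Deep Neural Networks (DNNs) play a key role in computer vision tasks in safety-critical systems, particularly semantic segmentation.

- Current automated testing solutions largely rely on simulators, whose imperfect fidelity affects the validity of test results.

- Combining meta-heuristic search with Generative Adversarial Networks (GANs) transforms simulator-generated data into realistic input images.

- The approach can be used both to assess DNN performance and to retrain the DNN more effectively.

- The results show that the approach outperforms a state-of-the-art GAN-based testing solution and several baselines.

- Images generated with the approach lead to the highest improvement in DNN performance when used for retraining.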
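The combination summarised above (search over simulator inputs, a GAN to make the simulated data realistic, then evaluation of the DNN) can be sketched as a loop. Everything here is a hypothetical stand-in: `simulate`, `gan_translate`, and `dnn_accuracy` are toy functions rather than the authors' components, and the simple (1+1) hill climber is swapped in for whatever meta-heuristic the paper uses. The sketch also ignores the diversity objective mentioned in the abstract.

```python
import random
from typing import List, Tuple

Params = List[float]


def simulate(params: Params) -> Params:
    """Hypothetical simulator stand-in: returns the 'rendered' scene unchanged."""
    return list(params)


def gan_translate(scene: Params) -> Params:
    """Hypothetical GAN stand-in: a fixed shift mimicking a sim-to-real transform."""
    return [x + 0.1 for x in scene]


def dnn_accuracy(image: Params) -> float:
    """Hypothetical DNN-under-test stand-in: accuracy drops as inputs approach 1.0."""
    return sum((x - 1.0) ** 2 for x in image) / len(image)


def search_worst_case(
    start: Params, steps: int, rng: random.Random
) -> Tuple[Params, float]:
    """(1+1) hill climbing: keep a mutated candidate only if accuracy gets lower."""
    best = list(start)
    best_score = dnn_accuracy(gan_translate(simulate(best)))
    for _ in range(steps):
        # Mutate every simulator parameter and clamp it to the valid range [0, 1].
        candidate = [min(1.0, max(0.0, x + rng.gauss(0.0, 0.1))) for x in best]
        score = dnn_accuracy(gan_translate(simulate(candidate)))
        if score < best_score:
            best, best_score = candidate, score
    return best, best_score


rng = random.Random(0)  # fixed seed so the run is repeatable
start = [0.0, 0.0, 0.0]
start_score = dnn_accuracy(gan_translate(simulate(start)))
worst_params, worst_score = search_worst_case(start, steps=200, rng=rng)
```

The point of the structure is that fitness is measured on the GAN-translated image rather than on the raw simulator output, so the search is steered by how the DNN behaves on realistic-looking inputs. In the setting the abstract describes, the failure-inducing images found this way would then also serve as retraining data.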
