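⚠️ All summaries below were generated by a large language model. They may contain errors, are for reference only, and should be used with caution.

🔴 Note: do not use this for serious academic purposes. It is only suitable for initial screening before reading a paper.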

Updated 2025-01-02

ReTaKe: Reducing Temporal and Knowledge Redundancy for Long Video Understanding

Authors:Xiao Wang, Qingyi Si, Jianlong Wu, Shiyu Zhu, Li Cao, Liqiang Nie

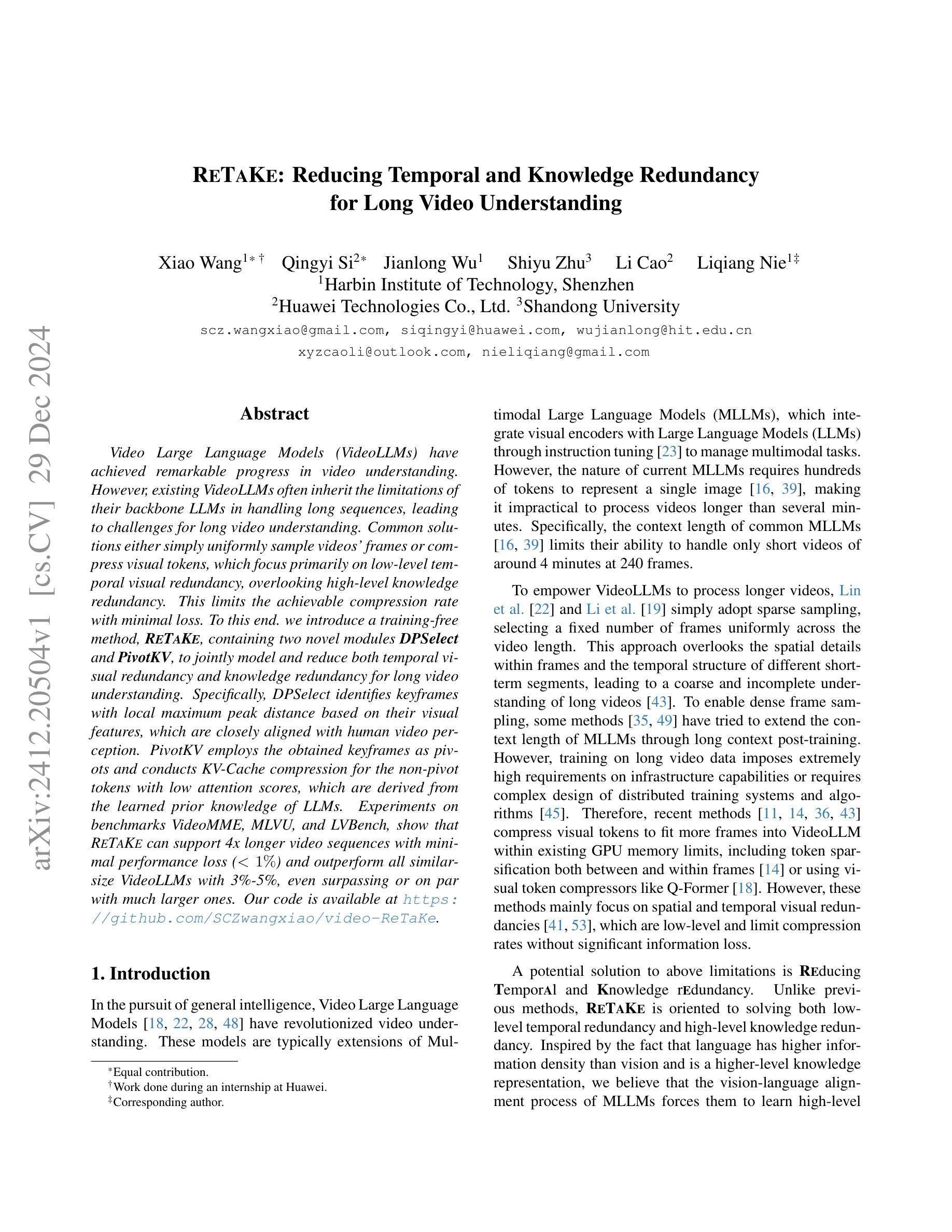

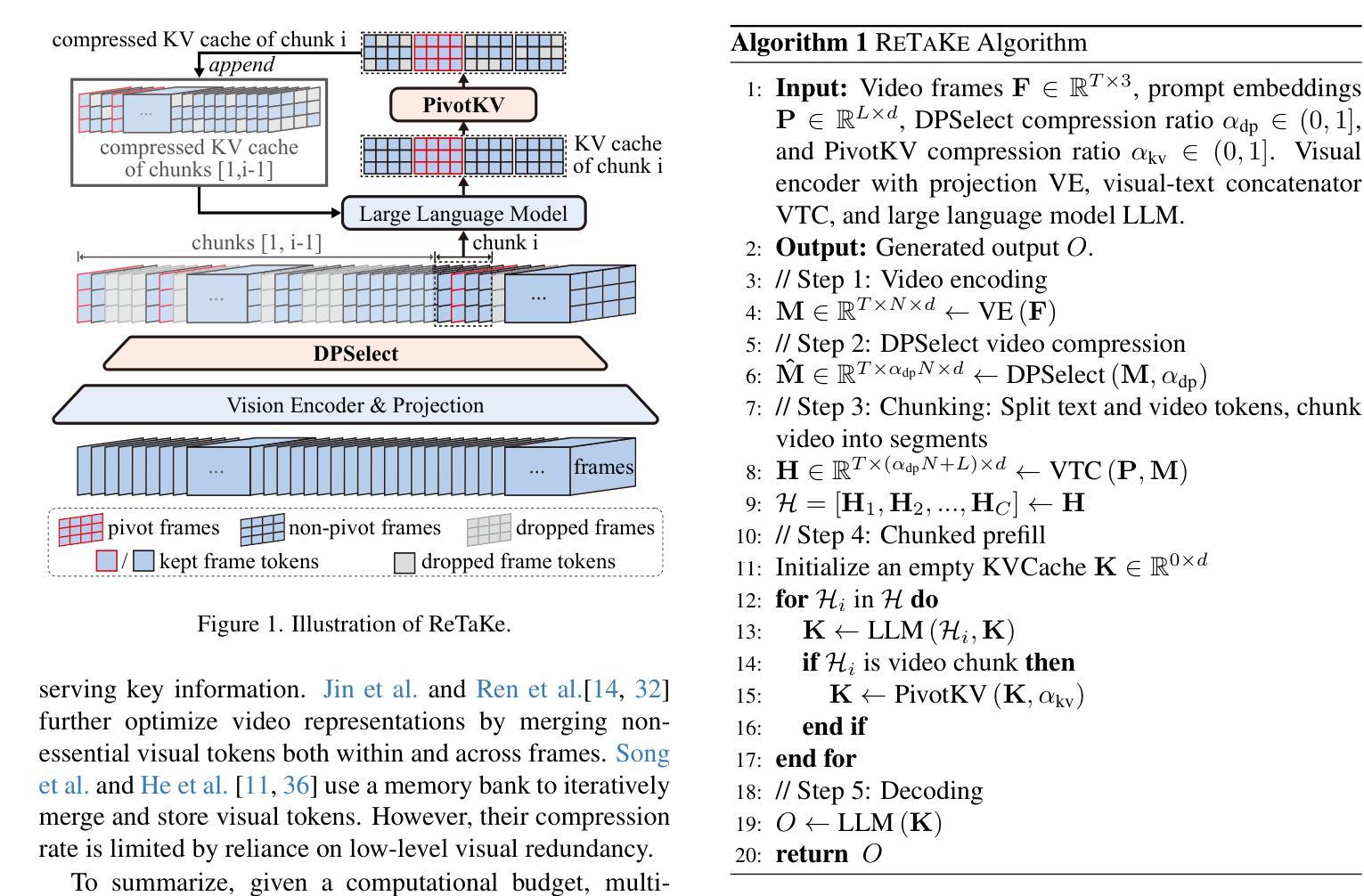

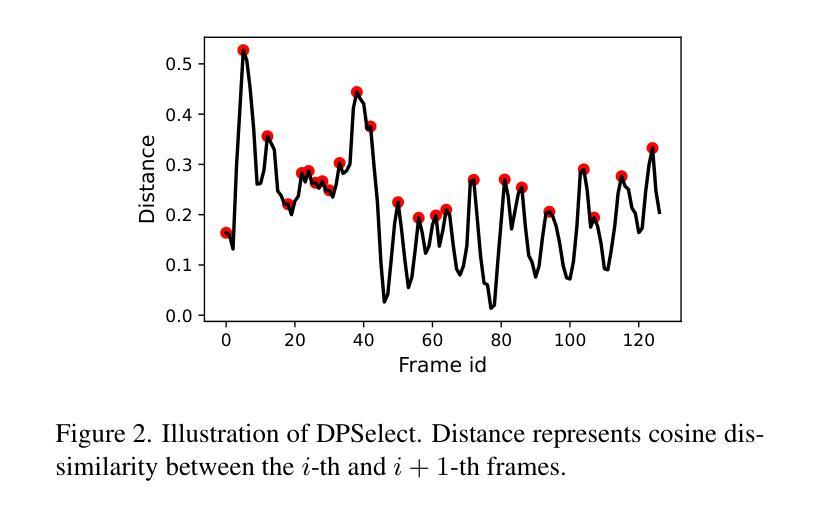

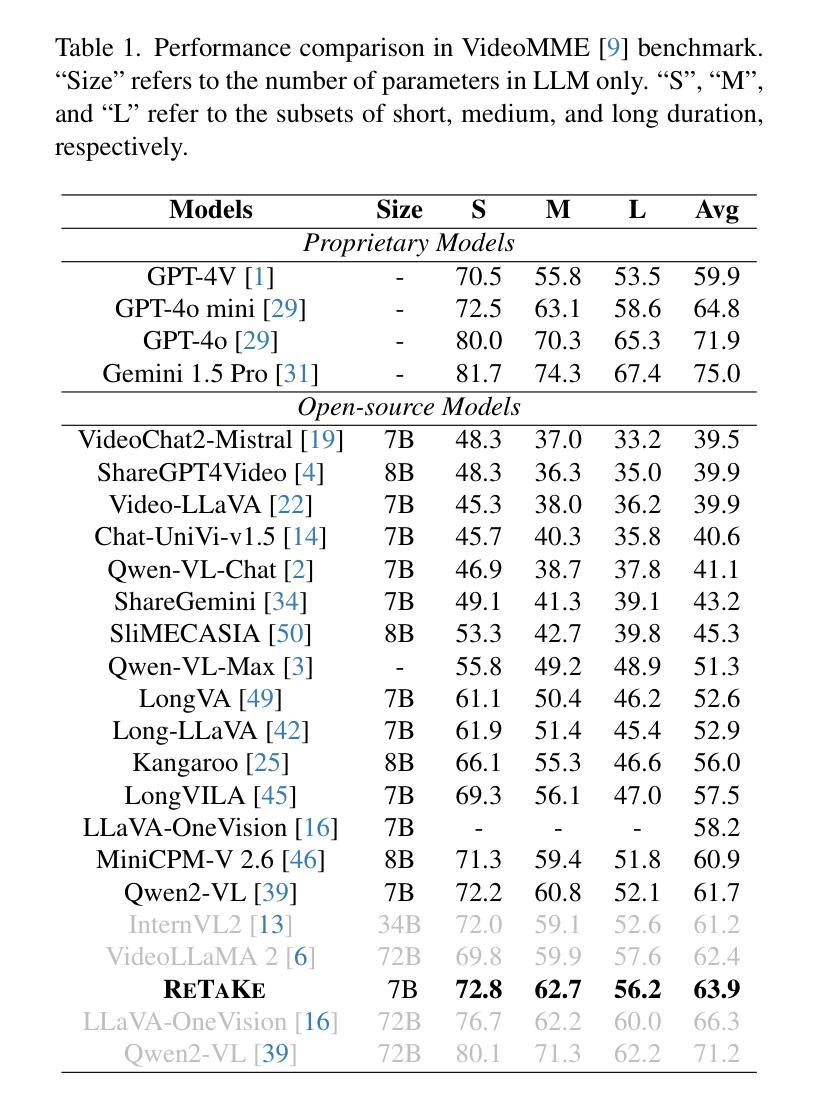

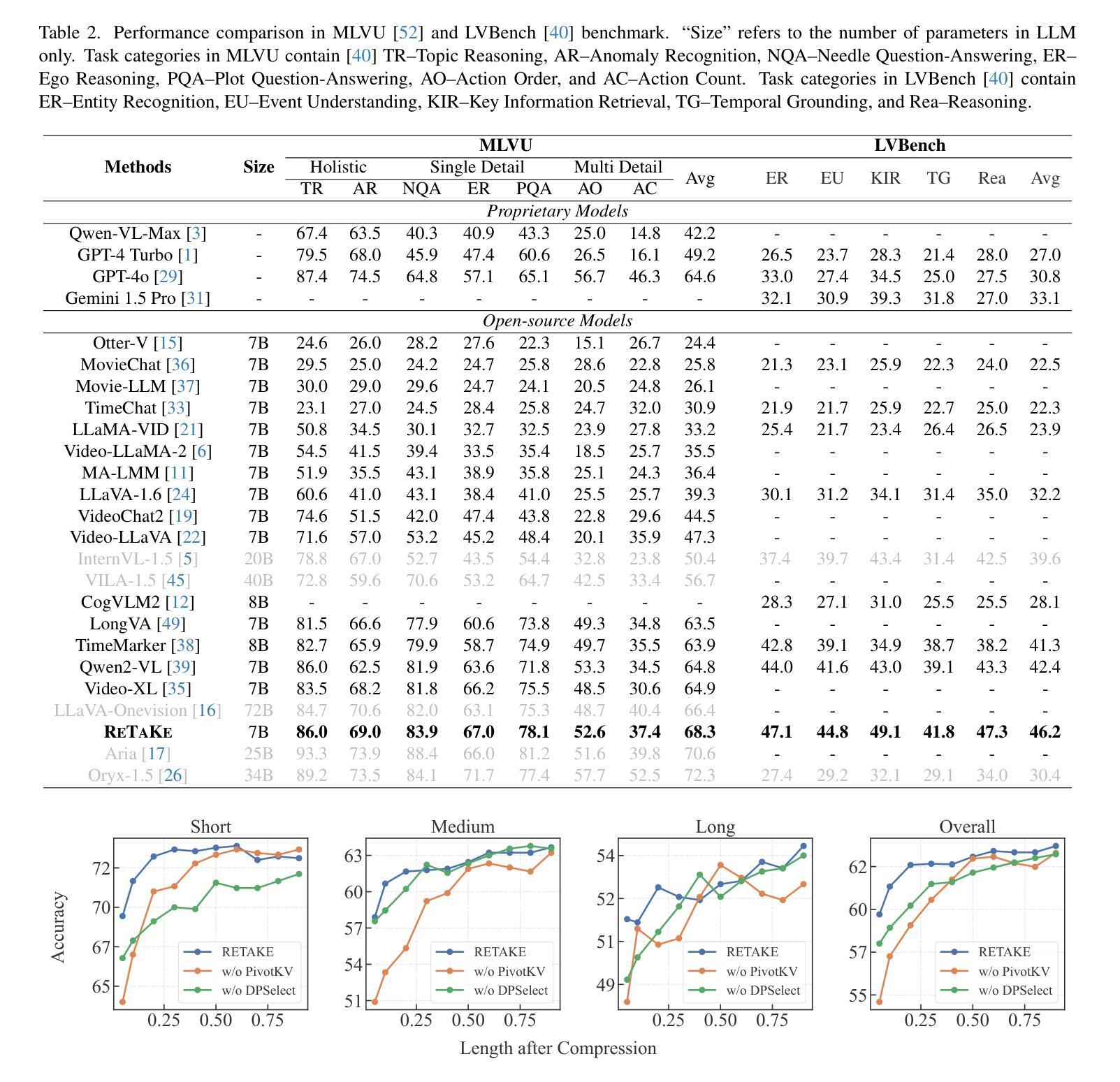

Video Large Language Models (VideoLLMs) have achieved remarkable progress in video understanding. However, existing VideoLLMs often inherit the limitations of their backbone LLMs in handling long sequences, which makes long video understanding challenging. Common solutions either uniformly sample video frames or compress visual tokens. Both focus primarily on low-level temporal visual redundancy and overlook high-level knowledge redundancy, which limits the compression rate achievable with minimal loss. To this end, we introduce a training-free method, **ReTaKe**, containing two novel modules, DPSelect and PivotKV, to jointly model and reduce both temporal visual redundancy and knowledge redundancy for long video understanding. Specifically, DPSelect identifies keyframes with local maximum peak distance based on their visual features, which are closely aligned with human video perception. PivotKV employs the obtained keyframes as pivots and conducts KV-Cache compression for the non-pivot tokens with low attention scores, which are derived from the learned prior knowledge of LLMs. Experiments on the benchmarks VideoMME, MLVU, and LVBench show that ReTaKe can support 4x longer video sequences with minimal performance loss (<1%) and outperform all similar-size VideoLLMs by 3%-5%, even surpassing or matching much larger ones. Our code is available at https://github.com/SCZwangxiao/video-ReTaKe
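
To make the keyframe idea concrete, here is a minimal sketch of selecting frames at local peaks of inter-frame feature distance. It is not the authors' DPSelect implementation: the function names `cosine_distance` and `select_keyframes`, the choice of cosine distance, and the "keep the strongest peaks" rule are all assumptions made for illustration, and the features are assumed to be non-zero vectors.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine distance between two non-zero feature vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def select_keyframes(features: Sequence[Sequence[float]], num_keyframes: int) -> List[int]:
    """Hypothetical helper: return sorted indices of frames that follow a local peak
    in the distance between consecutive frame features."""
    # dist[i] is the distance between frame i and frame i + 1.
    dist = [cosine_distance(features[i], features[i + 1])
            for i in range(len(features) - 1)]
    peaks = []
    for i, d in enumerate(dist):
        # Treat positions outside the video as -inf so boundary jumps can be peaks.
        left = dist[i - 1] if i > 0 else -math.inf
        right = dist[i + 1] if i + 1 < len(dist) else -math.inf
        if d > left and d > right:  # strict local maximum
            peaks.append(i)
    # Assumption: keep only the strongest peaks when there are more than requested.
    strongest = sorted(peaks, key=lambda i: dist[i], reverse=True)[:num_keyframes]
    # The frame after each jump is taken as the keyframe.
    return sorted(i + 1 for i in strongest)


# Toy video: three "scenes" whose frames share one feature vector each.
frames = [[1.0, 0.0, 0.0]] * 3 + [[0.0, 1.0, 0.0]] * 3 + [[0.0, 0.0, 1.0]] * 2
print(select_keyframes(frames, num_keyframes=2))  # -> [3, 6]
```

In the toy input the feature distance is zero inside each scene and jumps at the two scene changes, so the sketch returns the first frame of the second and third scenes.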

Summary

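Video large language models (VideoLLMs) have made notable progress in video understanding but still struggle with long video sequences. Existing methods focus mainly on low-level temporal visual redundancy and overlook high-level knowledge redundancy. To address this, the paper introduces ReTaKe, a training-free method with two new modules, DPSelect and PivotKV, that jointly models and reduces temporal visual redundancy and knowledge redundancy for long video understanding. Experiments show that ReTaKe supports 4x longer video sequences with minimal performance loss (<1%) and outperforms similar-size VideoLLMs by 3%-5% on VideoMME, MLVU, and LVBench.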

Key Takeaways

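- VideoLLMs have made notable progress in video understanding but still struggle with long video sequences.

- Existing methods focus mainly on low-level temporal visual redundancy and overlook high-level knowledge redundancy.

- ReTaKe is a training-free method that jointly models and reduces temporal visual redundancy and knowledge redundancy.

- ReTaKe contains two new modules: DPSelect and PivotKV.

- DPSelect identifies keyframes with local maximum peak distance based on their visual features, which aligns closely with human video perception.

- PivotKV uses the selected keyframes as pivots and applies KV-Cache compression to non-pivot tokens with low attention scores.

- Experiments show that ReTaKe supports 4x longer video sequences with minimal performance loss (<1%), outperforms other similar-size VideoLLMs by 3%-5%, and surpasses or matches some much larger models.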
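
The pivot-based cache compression in the takeaways can be sketched as follows. This is only an illustration under stated assumptions, not the authors' PivotKV code: the function name `compress_kv_positions`, the single per-token attention score, and the fixed `budget` of cache positions are all hypothetical. The sketch keeps every pivot (keyframe) token and fills the remaining budget with the highest-scoring non-pivot tokens.

```python
from typing import List, Sequence


def compress_kv_positions(attention_scores: Sequence[float],
                          is_pivot: Sequence[bool],
                          budget: int) -> List[int]:
    """Hypothetical helper: return the sorted KV-cache positions to keep.

    Pivot positions are always kept; non-pivot positions compete for the
    remaining slots by attention score (higher is kept first).
    """
    if len(attention_scores) != len(is_pivot):
        raise ValueError("attention_scores and is_pivot must have the same length")
    pivots = [i for i, p in enumerate(is_pivot) if p]
    others = [i for i, p in enumerate(is_pivot) if not p]
    # Assumption: pivots are never evicted, even if they alone exceed the budget.
    slots_for_others = max(0, budget - len(pivots))
    kept_others = sorted(others, key=lambda i: attention_scores[i],
                         reverse=True)[:slots_for_others]
    # Restore temporal order so the cache stays position-sorted.
    return sorted(pivots + kept_others)


# Eight cached tokens; positions 0, 1, 4, 5 belong to keyframes (pivots).
scores = [0.9, 0.1, 0.05, 0.3, 0.2, 0.1, 0.6, 0.02]
pivot_mask = [True, True, False, False, True, True, False, False]
print(compress_kv_positions(scores, pivot_mask, budget=6))  # -> [0, 1, 3, 4, 5, 6]
```

Here positions 2 and 7 are dropped because they are non-pivot tokens with the lowest scores. A real model would have scores per layer and per head, and how those are aggregated is not described in the text above.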
