⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-01-02 更新

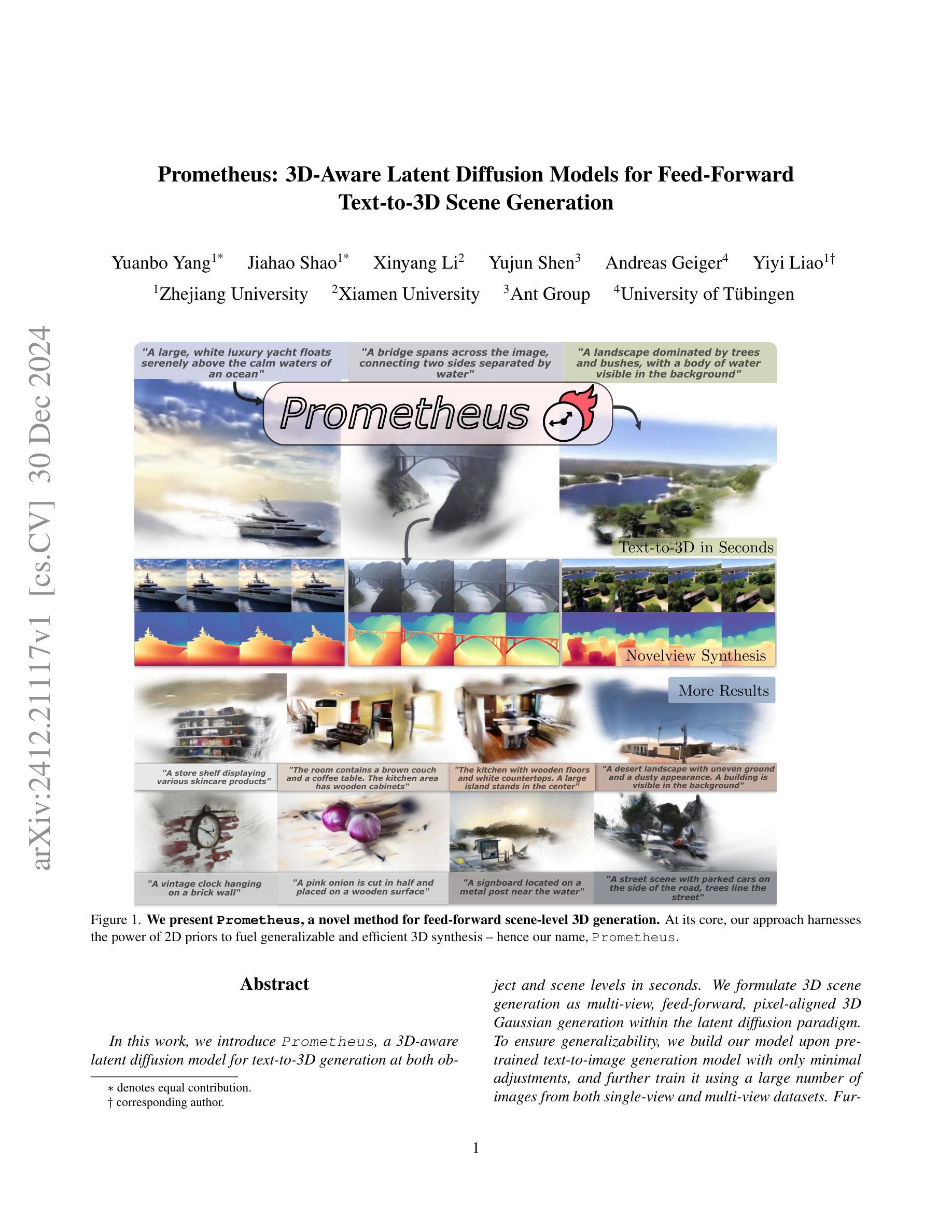

Prometheus: 3D-Aware Latent Diffusion Models for Feed-Forward Text-to-3D Scene Generation

Authors:Yuanbo Yang, Jiahao Shao, Xinyang Li, Yujun Shen, Andreas Geiger, Yiyi Liao

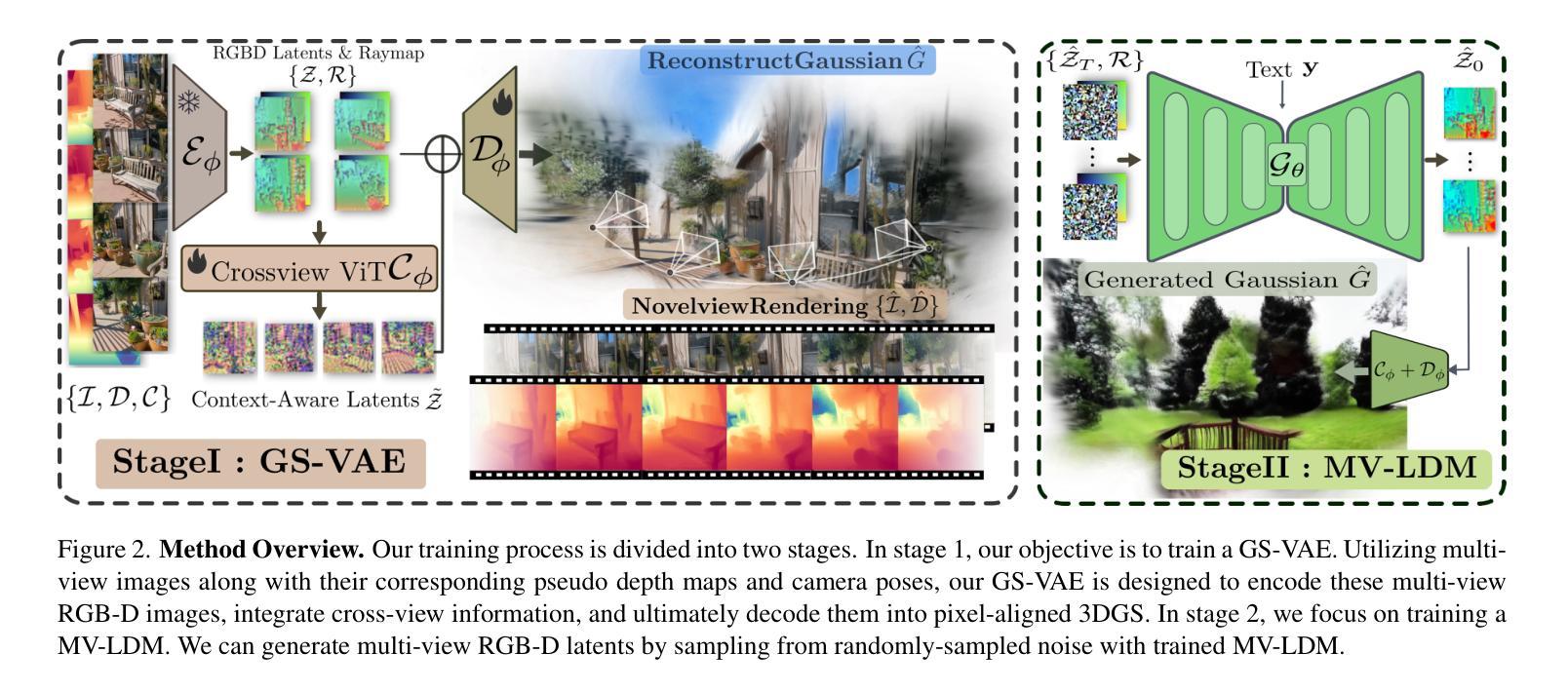

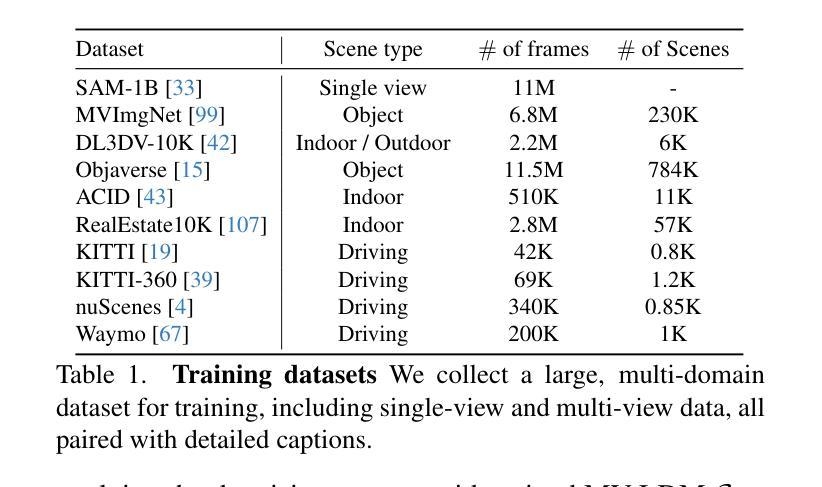

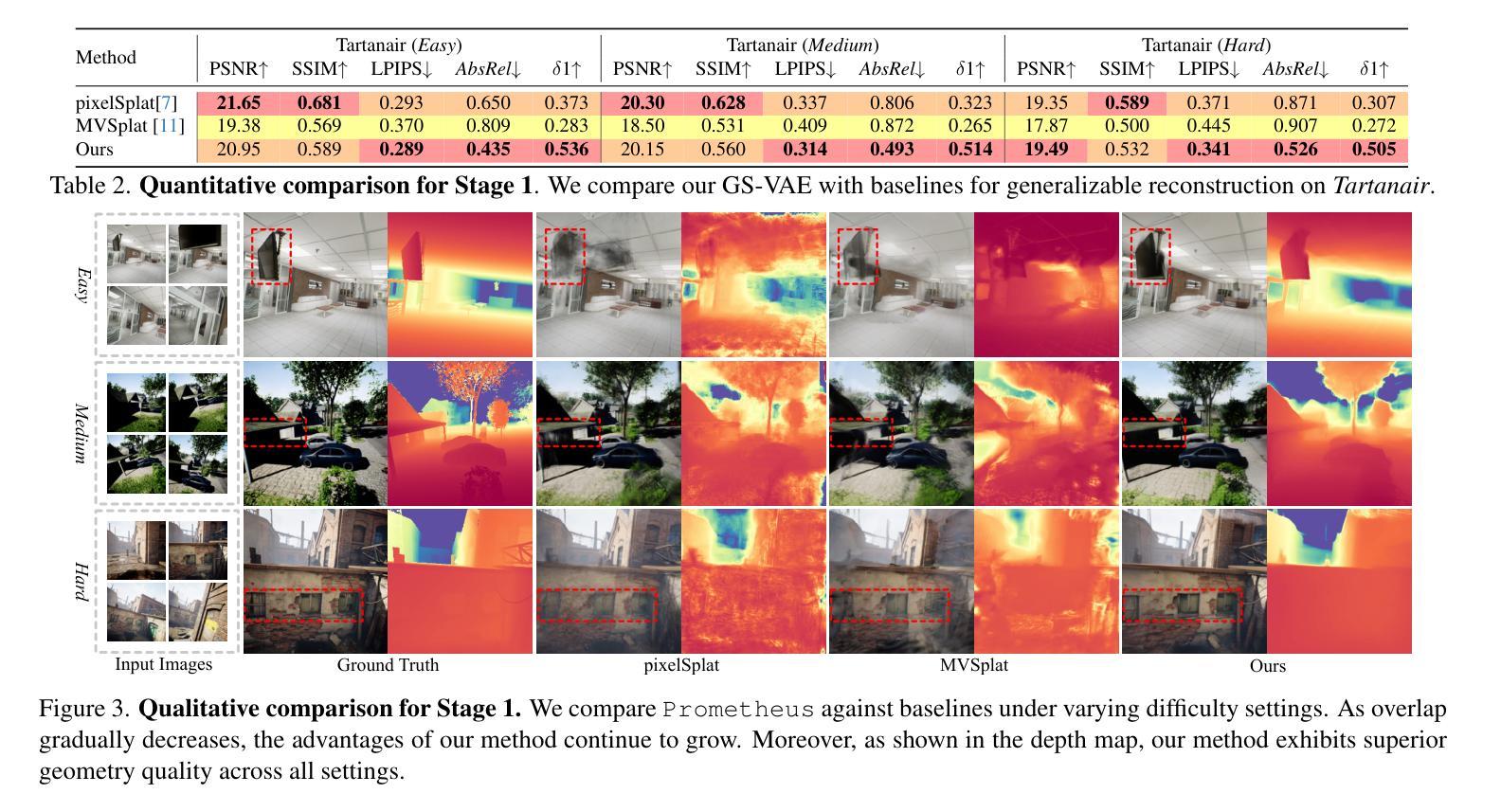

In this work, we introduce Prometheus, a 3D-aware latent diffusion model for text-to-3D generation at both object and scene levels in seconds. We formulate 3D scene generation as multi-view, feed-forward, pixel-aligned 3D Gaussian generation within the latent diffusion paradigm. To ensure generalizability, we build our model upon pre-trained text-to-image generation model with only minimal adjustments, and further train it using a large number of images from both single-view and multi-view datasets. Furthermore, we introduce an RGB-D latent space into 3D Gaussian generation to disentangle appearance and geometry information, enabling efficient feed-forward generation of 3D Gaussians with better fidelity and geometry. Extensive experimental results demonstrate the effectiveness of our method in both feed-forward 3D Gaussian reconstruction and text-to-3D generation. Project page: https://freemty.github.io/project-prometheus/

在这项工作中,我们介绍了Prometheus,这是一个3D感知潜在扩散模型,可在对象和场景级别实现文本到3D的即时生成。我们将3D场景生成公式化为潜在扩散范式内的多视角、前馈、像素对齐的3D高斯生成。为了确保模型的通用性,我们在基于预训练的文本到图像生成模型的基础上仅进行微调构建我们的模型,并使用大量的来自单视角和多视角数据集图像进一步训练。此外,我们将RGB-D潜在空间引入3D高斯生成中,以分离外观和几何信息,实现高效的前馈3D高斯生成,提高保真度和几何效果。广泛的实验结果证明了我们的方法在前馈3D高斯重建和文本到3D生成中的有效性。项目页面:https://freemty.github.io/project-prometheus/。

论文及项目相关链接

Summary

本文介绍了名为Prometheus的3D感知潜在扩散模型,该模型可在对象和场景级别实现文本到3D的即时生成。模型基于潜在扩散范式,将多视角、前馈、像素对齐的3D高斯生成应用于场景生成任务。模型在预训练文本到图像生成模型的基础上构建,通过调整较小的数据微调用于进一步训练模型的大型图像集数据涵盖单一视角和多种视角数据集,为增加其效果添加了RGB-D潜在空间进入高斯的维度以提高生成的三维图像的保真度和几何形状。实验证明该模型在多种任务上表现出优异性能。

Key Takeaways

- Prometheus是一个用于文本到三维生成的3D感知潜在扩散模型。

- 模型能够同时处理对象和场景级别的生成任务。

- 采用潜在扩散范式和多视角像素对齐进行场景生成。

- 模型在预训练文本到图像生成模型的基础上构建,并进行了大型图像集的数据微调。

点此查看论文截图

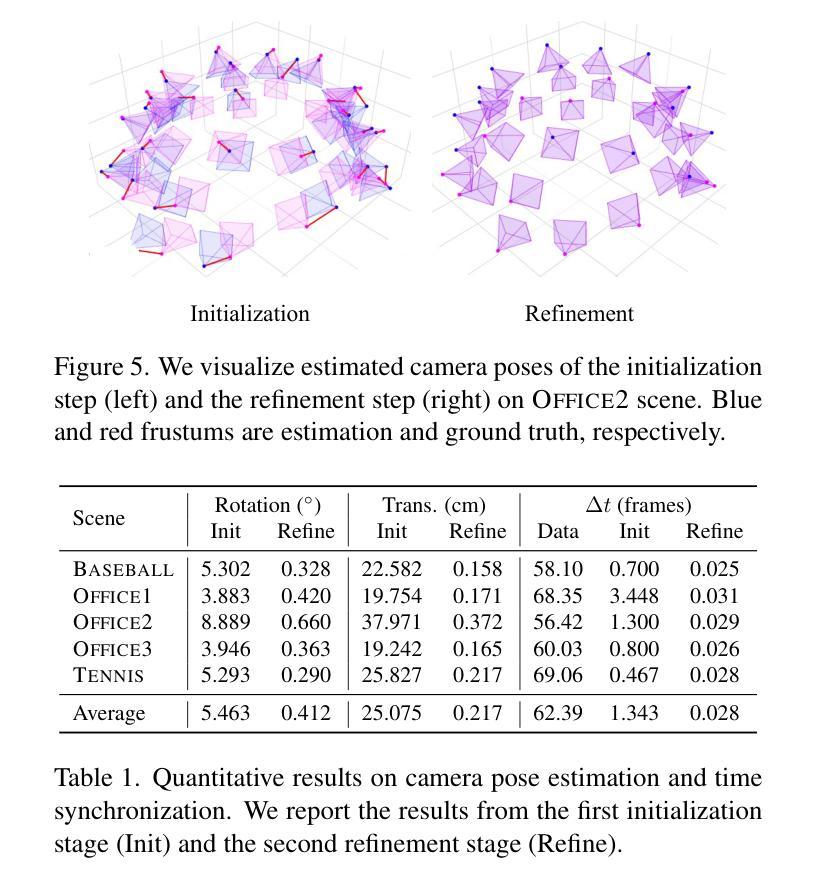

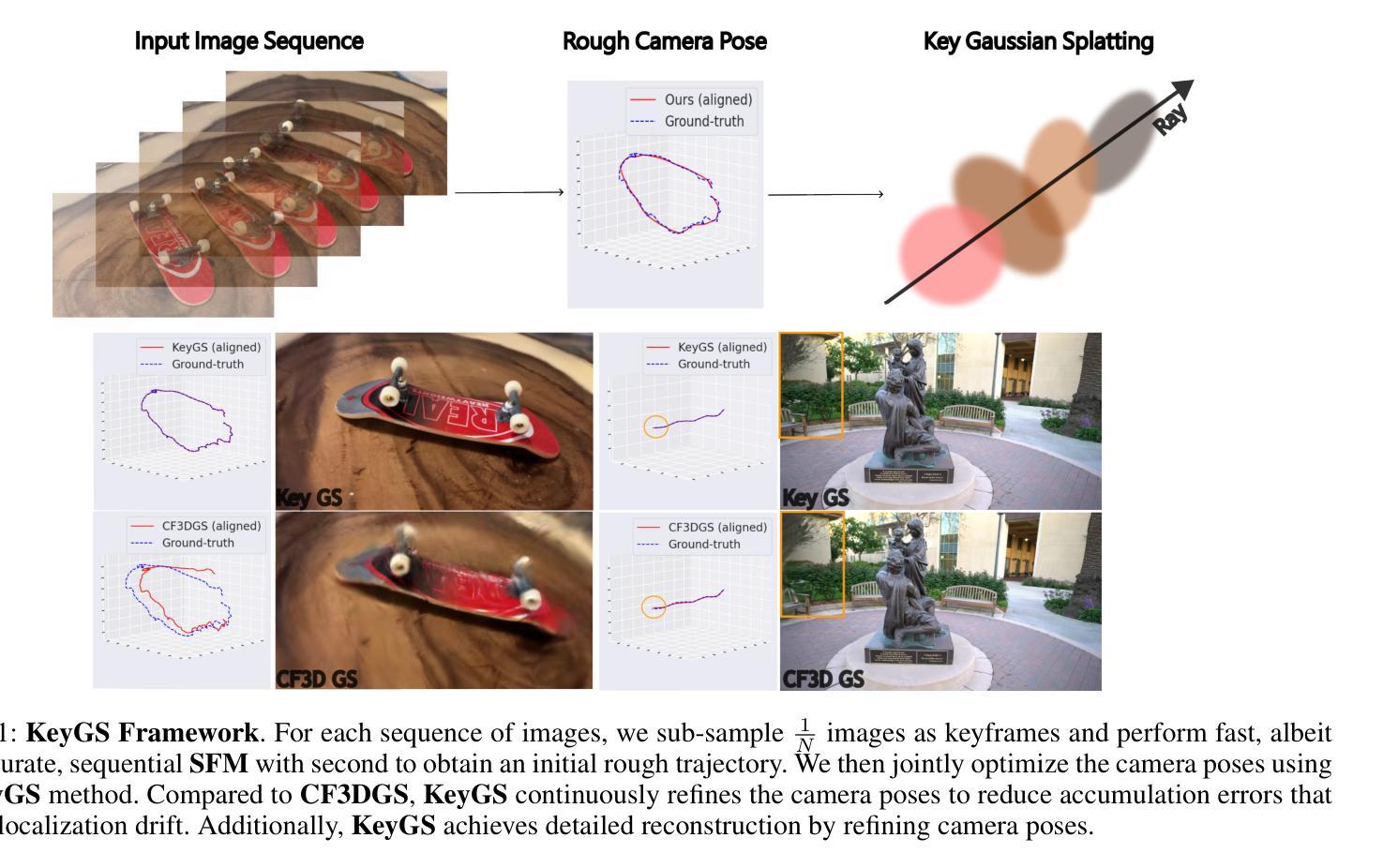

KeyGS: A Keyframe-Centric Gaussian Splatting Method for Monocular Image Sequences

Authors:Keng-Wei Chang, Zi-Ming Wang, Shang-Hong Lai

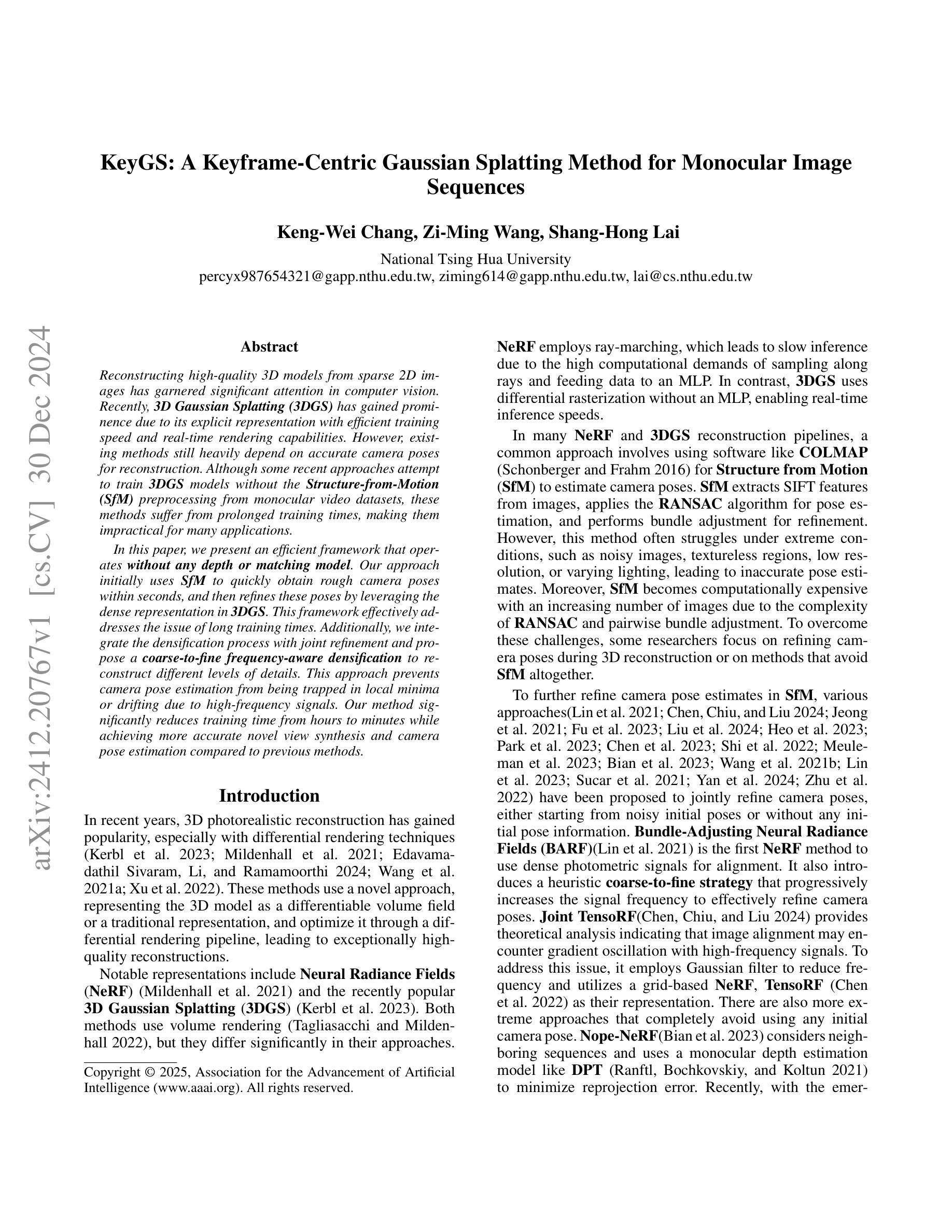

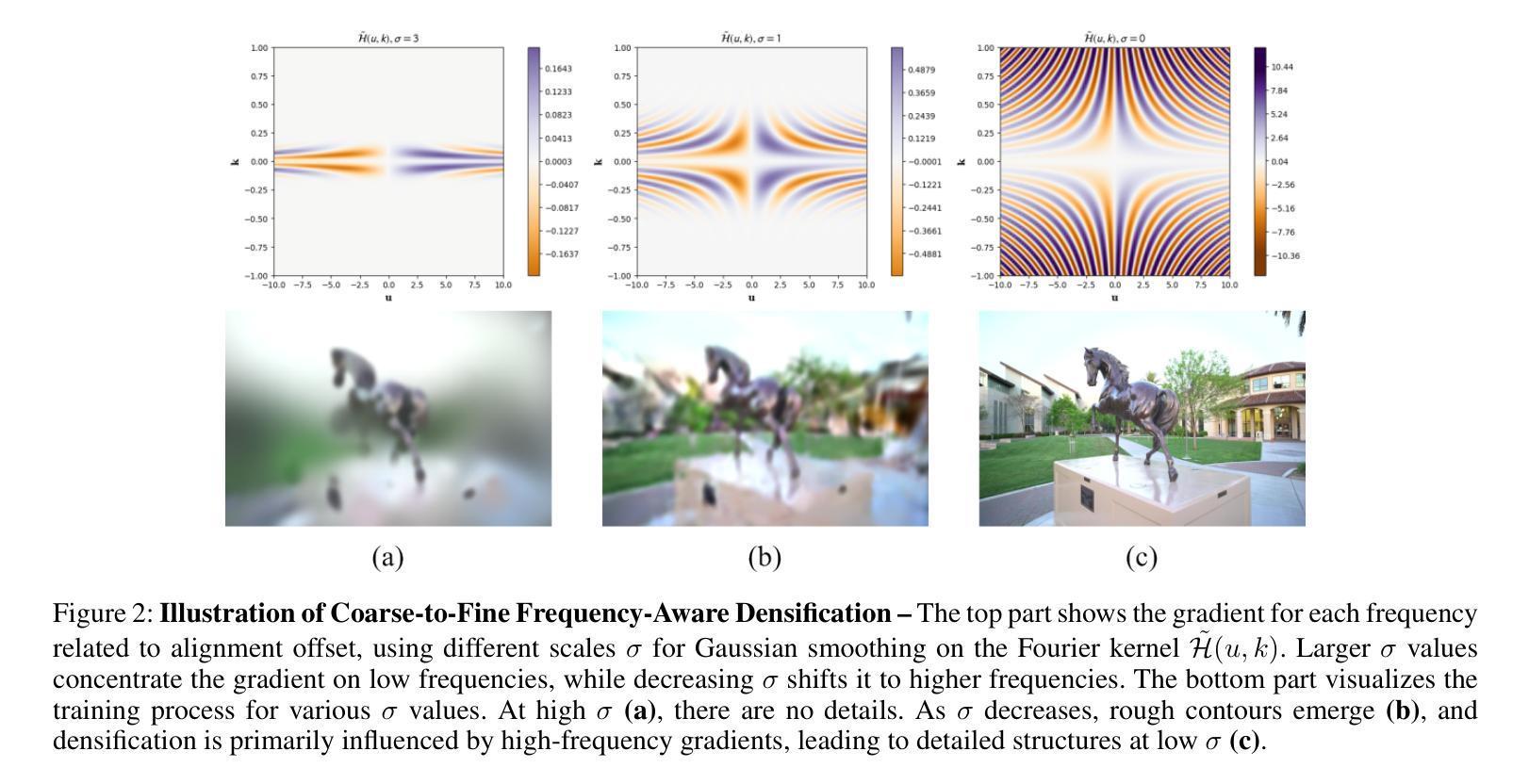

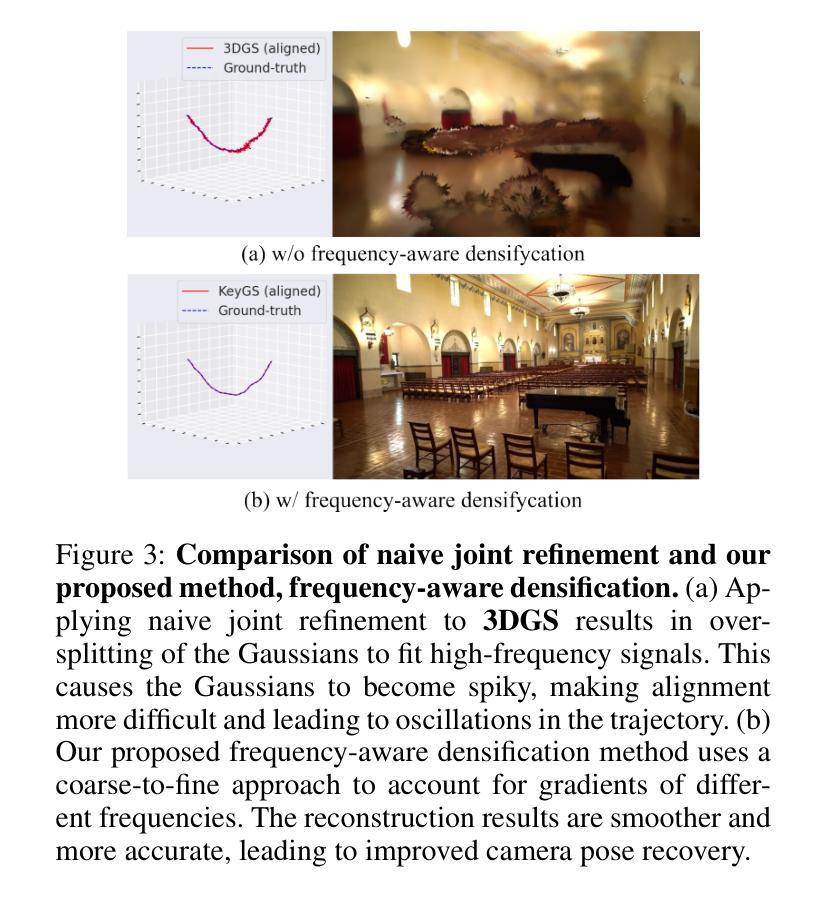

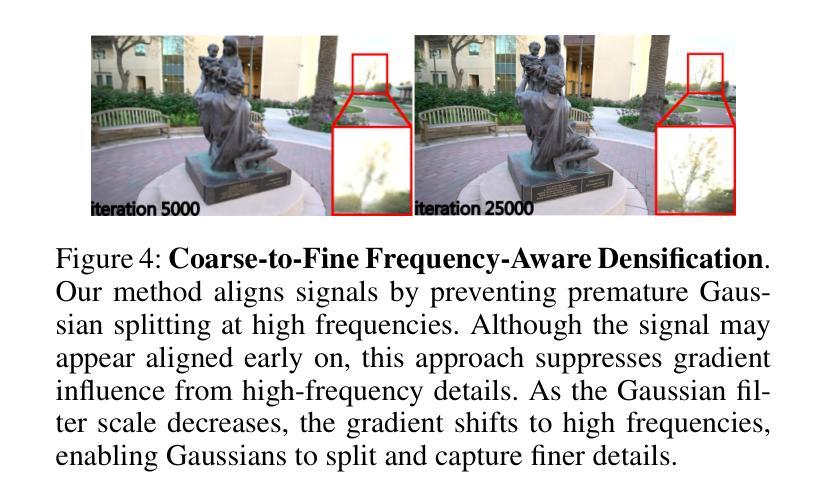

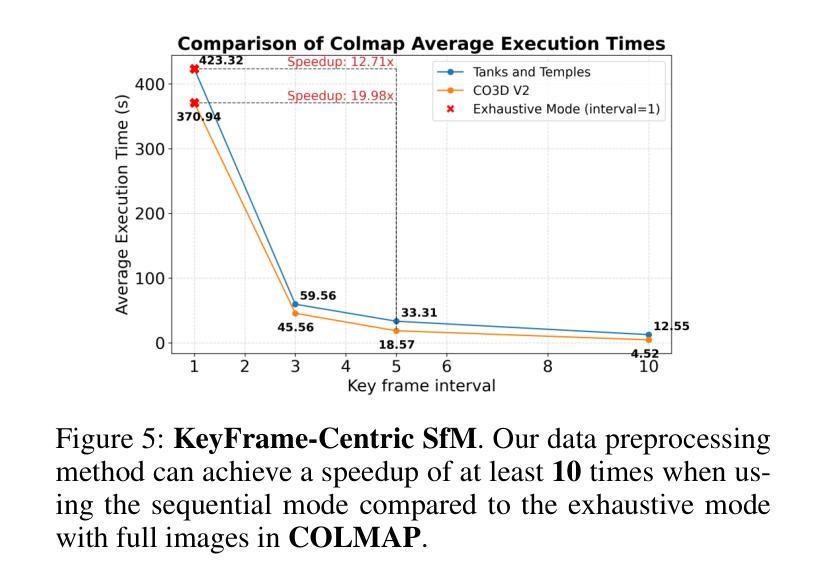

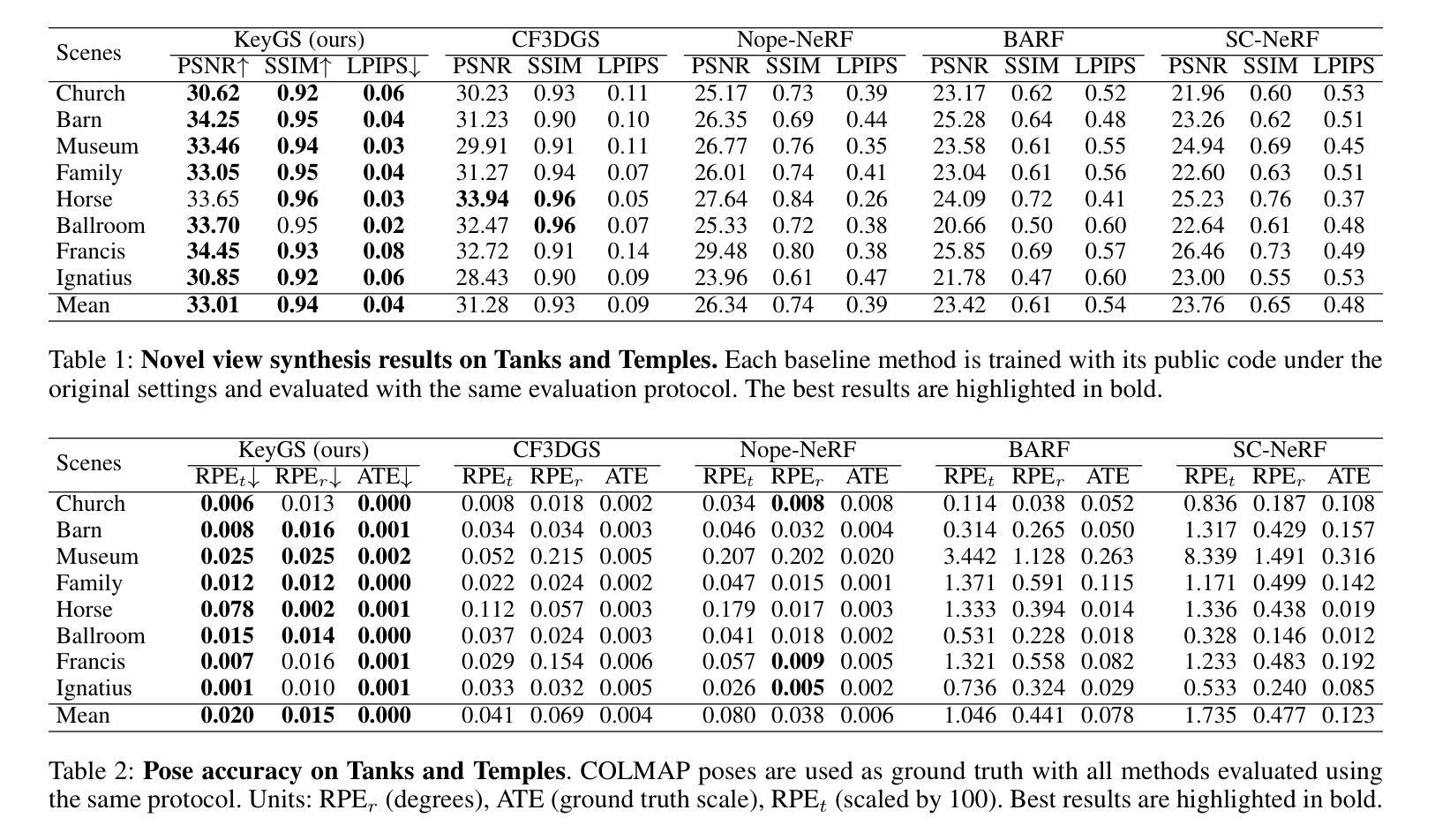

Reconstructing high-quality 3D models from sparse 2D images has garnered significant attention in computer vision. Recently, 3D Gaussian Splatting (3DGS) has gained prominence due to its explicit representation with efficient training speed and real-time rendering capabilities. However, existing methods still heavily depend on accurate camera poses for reconstruction. Although some recent approaches attempt to train 3DGS models without the Structure-from-Motion (SfM) preprocessing from monocular video datasets, these methods suffer from prolonged training times, making them impractical for many applications. In this paper, we present an efficient framework that operates without any depth or matching model. Our approach initially uses SfM to quickly obtain rough camera poses within seconds, and then refines these poses by leveraging the dense representation in 3DGS. This framework effectively addresses the issue of long training times. Additionally, we integrate the densification process with joint refinement and propose a coarse-to-fine frequency-aware densification to reconstruct different levels of details. This approach prevents camera pose estimation from being trapped in local minima or drifting due to high-frequency signals. Our method significantly reduces training time from hours to minutes while achieving more accurate novel view synthesis and camera pose estimation compared to previous methods.

从稀疏的2D图像重建高质量的三维模型在计算机视觉领域已经引起了广泛的关注。近期,由于三维高斯映射(3DGS)具有高效的训练速度和实时渲染能力,其显式表示方法受到了广泛关注。然而,现有的重建方法仍然严重依赖于准确的相机姿态。尽管最近的一些方法试图在不使用从单目视频数据集中的运动结构(SfM)预处理的情况下训练3DGS模型,但这些方法的训练时间延长,使得它们在许多应用中不切实际。在本文中,我们提出了一种有效的框架,该框架无需任何深度或匹配模型即可运行。我们的方法首先使用SfM在几秒内快速获得粗略的相机姿态,然后通过利用3DGS中的密集表示来优化这些姿态。该框架有效地解决了训练时间长的问题。此外,我们将稠化过程与联合优化相结合,并提出一种从粗到细的频率感知稠化方法,以重建不同级别的细节。这种方法防止了由于高频信号导致的相机姿态估计陷入局部最小值或漂移。我们的方法将训练时间从数小时缩短到数分钟,同时与以前的方法相比,实现了更准确的新视图合成和相机姿态估计。

论文及项目相关链接

PDF AAAI 2025

Summary

本文提出了一种高效的框架,利用三维高斯拼贴(3DGS)技术,无需深度或匹配模型即可进行工作。该框架首先利用SfM快速获取粗略的相机姿态,然后结合3DGS的密集表示进行姿态优化,解决了长时间训练的问题。同时,通过整合粗到细的频域感知密集化过程,实现了不同层次的细节重建,提高了新型视角合成和相机姿态估计的准确性。

Key Takeaways

- 3D Gaussian Splatting (3DGS)具有显式表示、高效训练速度和实时渲染能力,已受到广泛关注。

- 现有方法仍严重依赖于准确的相机姿态进行重建。

- 本文提出了一种无需深度或匹配模型的框架,解决了长时间训练的问题。

- 框架首先利用SfM快速获取粗略相机姿态。

- 通过结合3DGS的密集表示,对相机姿态进行优化。

- 整合粗到细的频域感知密集化过程,实现不同层次的细节重建。

点此查看论文截图

4D Gaussian Splatting: Modeling Dynamic Scenes with Native 4D Primitives

Authors:Zeyu Yang, Zijie Pan, Xiatian Zhu, Li Zhang, Yu-Gang Jiang, Philip H. S. Torr

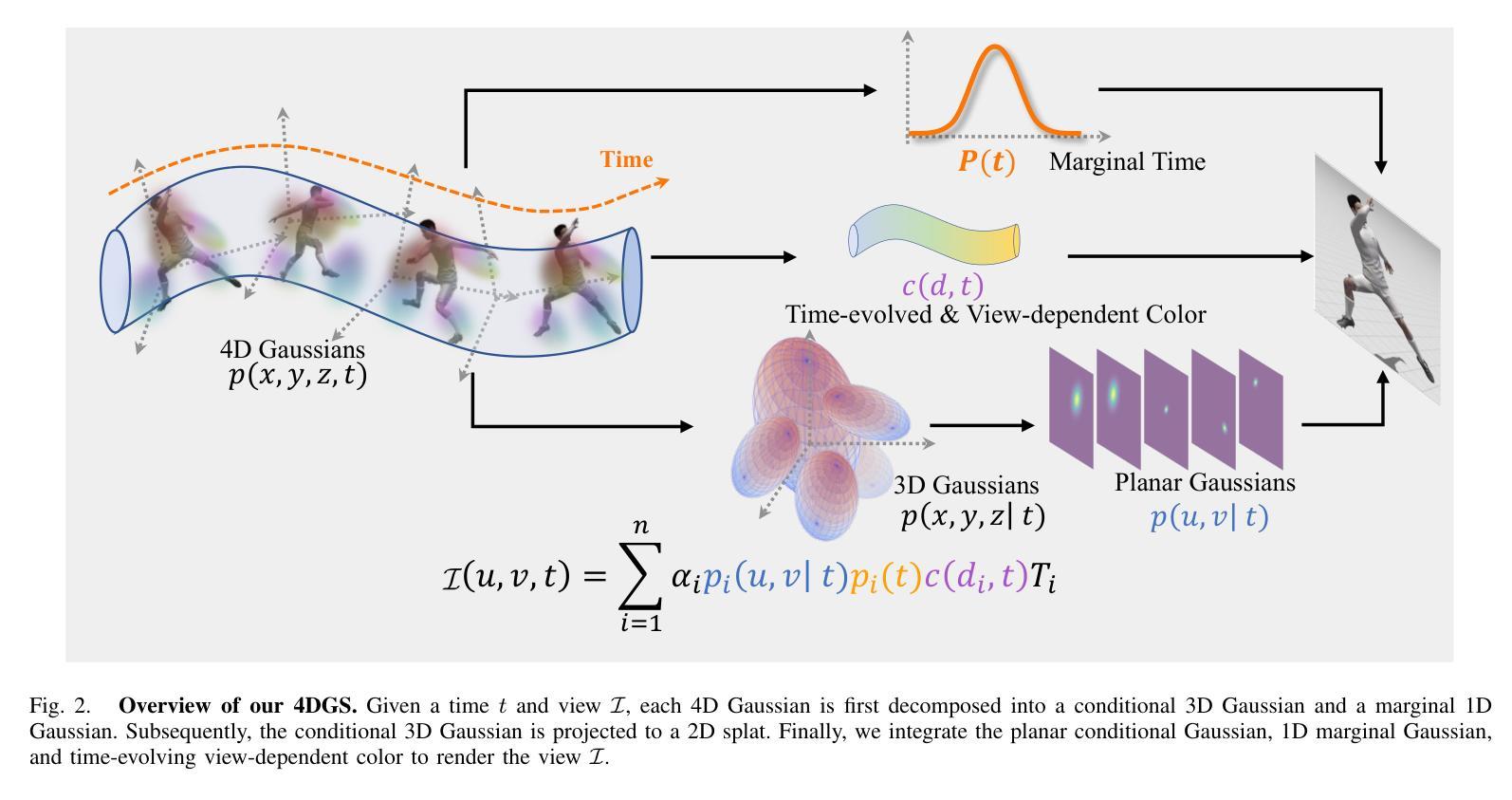

Dynamic 3D scene representation and novel view synthesis from captured videos are crucial for enabling immersive experiences required by AR/VR and metaverse applications. However, this task is challenging due to the complexity of unconstrained real-world scenes and their temporal dynamics. In this paper, we frame dynamic scenes as a spatio-temporal 4D volume learning problem, offering a native explicit reformulation with minimal assumptions about motion, which serves as a versatile dynamic scene learning framework. Specifically, we represent a target dynamic scene using a collection of 4D Gaussian primitives with explicit geometry and appearance features, dubbed as 4D Gaussian splatting (4DGS). This approach can capture relevant information in space and time by fitting the underlying spatio-temporal volume. Modeling the spacetime as a whole with 4D Gaussians parameterized by anisotropic ellipses that can rotate arbitrarily in space and time, our model can naturally learn view-dependent and time-evolved appearance with 4D spherindrical harmonics. Notably, our 4DGS model is the first solution that supports real-time rendering of high-resolution, photorealistic novel views for complex dynamic scenes. To enhance efficiency, we derive several compact variants that effectively reduce memory footprint and mitigate the risk of overfitting. Extensive experiments validate the superiority of 4DGS in terms of visual quality and efficiency across a range of dynamic scene-related tasks (e.g., novel view synthesis, 4D generation, scene understanding) and scenarios (e.g., single object, indoor scenes, driving environments, synthetic and real data).

动态三维场景表示和从捕获的视频中合成新型视图对于增强现实(AR)/虚拟现实(VR)和元宇宙应用所需沉浸式体验至关重要。然而,由于不受限制的现实场景复杂性和其时间动态性,此任务具有挑战性。在本文中,我们将动态场景作为时空四维体积学习问题加以描述,提供了一个本地显式重构的方法,对运动做出了极少假设,从而作为一个通用动态场景学习框架。具体来说,我们使用一组具有明确几何和外观特征的四维高斯原始数据来表示目标动态场景,称为四维高斯喷涂(4DGS)。此方法可以通过拟合底层时空体积来捕获空间和时间的有关信息。将时空作为整体用四维高斯建模,通过由可任意旋转的空间和时间中的椭圆体参数化,我们的模型能够自然地利用四维球面谐波学习视点和随时间变化的外观。值得注意的是,我们的4DGS模型是第一个支持复杂动态场景的高分辨率、照片级真实感新视图的实时渲染的解决方案。为了提高效率,我们推导出了几种紧凑变体,有效地减少了内存占用并降低了过拟合的风险。大量实验验证了4DGS在多种动态场景相关任务(例如新视图合成、四维生成、场景理解)和场景(例如单个物体、室内场景、驾驶环境、合成和真实数据)的视觉质量和效率方面的优越性。

论文及项目相关链接

PDF Journal extension of ICLR 2024. arXiv admin note: text overlap with arXiv:2310.10642

Summary

本文提出一种基于4D高斯原始数据的动态场景表示方法,称为4D高斯喷涂(4DGS)。该方法将动态场景视为一个时空体积学习问题,并使用隐式几何和外观特征进行建模。通过拟合时空体积,可以捕捉空间和时间的相关信息。此外,该模型支持对复杂动态场景进行高分辨率、真实感的新型实时渲染,提高了效率和视觉质量。实验表明,4DGS在多种动态场景相关任务和场景中表现优越。

Key Takeaways

- 动态场景表示为时空体积学习问题,提出4D高斯喷涂(4DGS)方法。

- 4DGS使用隐式几何和外观特征进行建模,捕捉空间和时间的相关信息。

- 4DGS支持对复杂动态场景进行高分辨率、真实感的新型实时渲染。

- 4DGS在多种动态场景相关任务(如新型视图合成、4D生成、场景理解)和场景中表现优越。

- 通过实验验证了4DGS在视觉质量和效率方面的优势。

- 4DGS能够自然地学习视角依赖和时间演化的外观。

点此查看论文截图

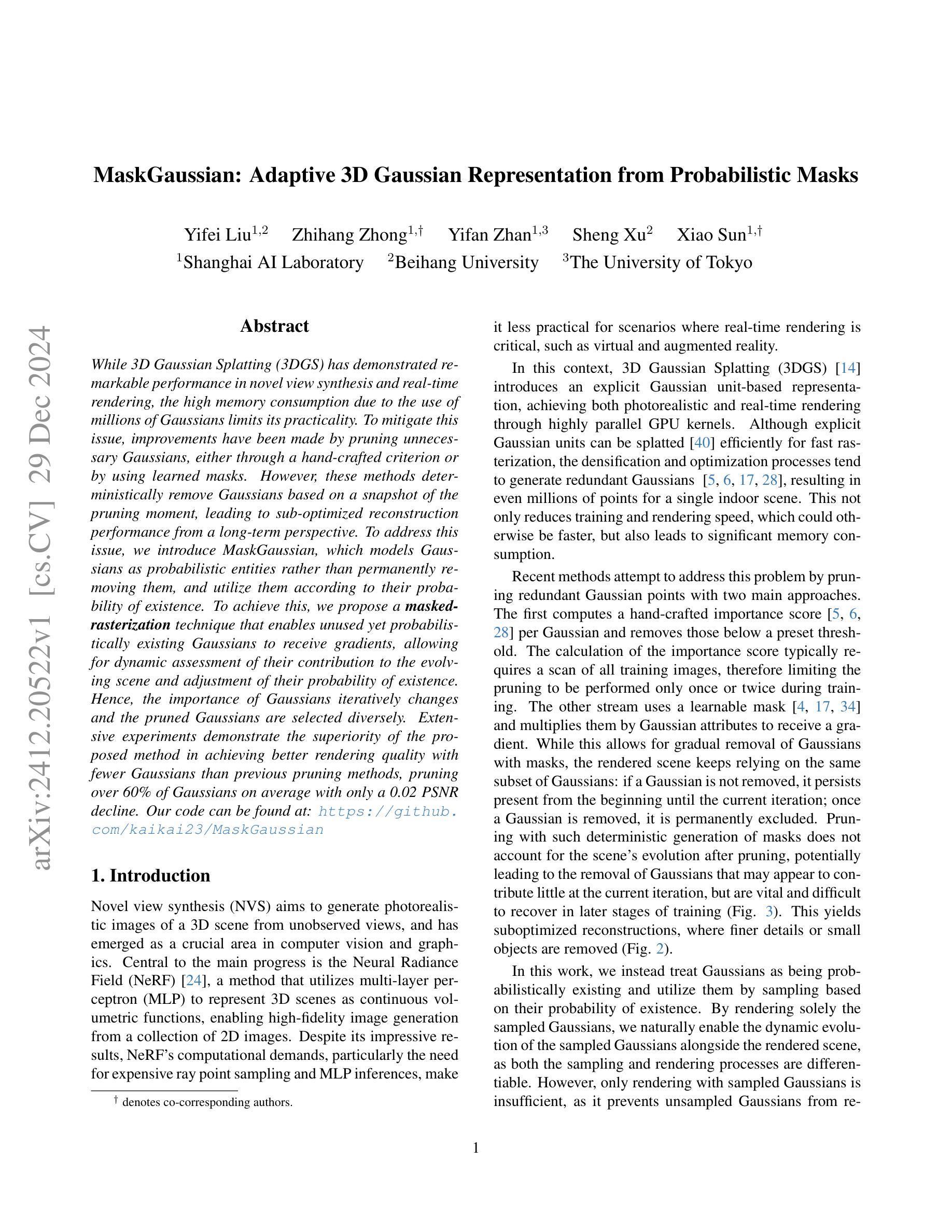

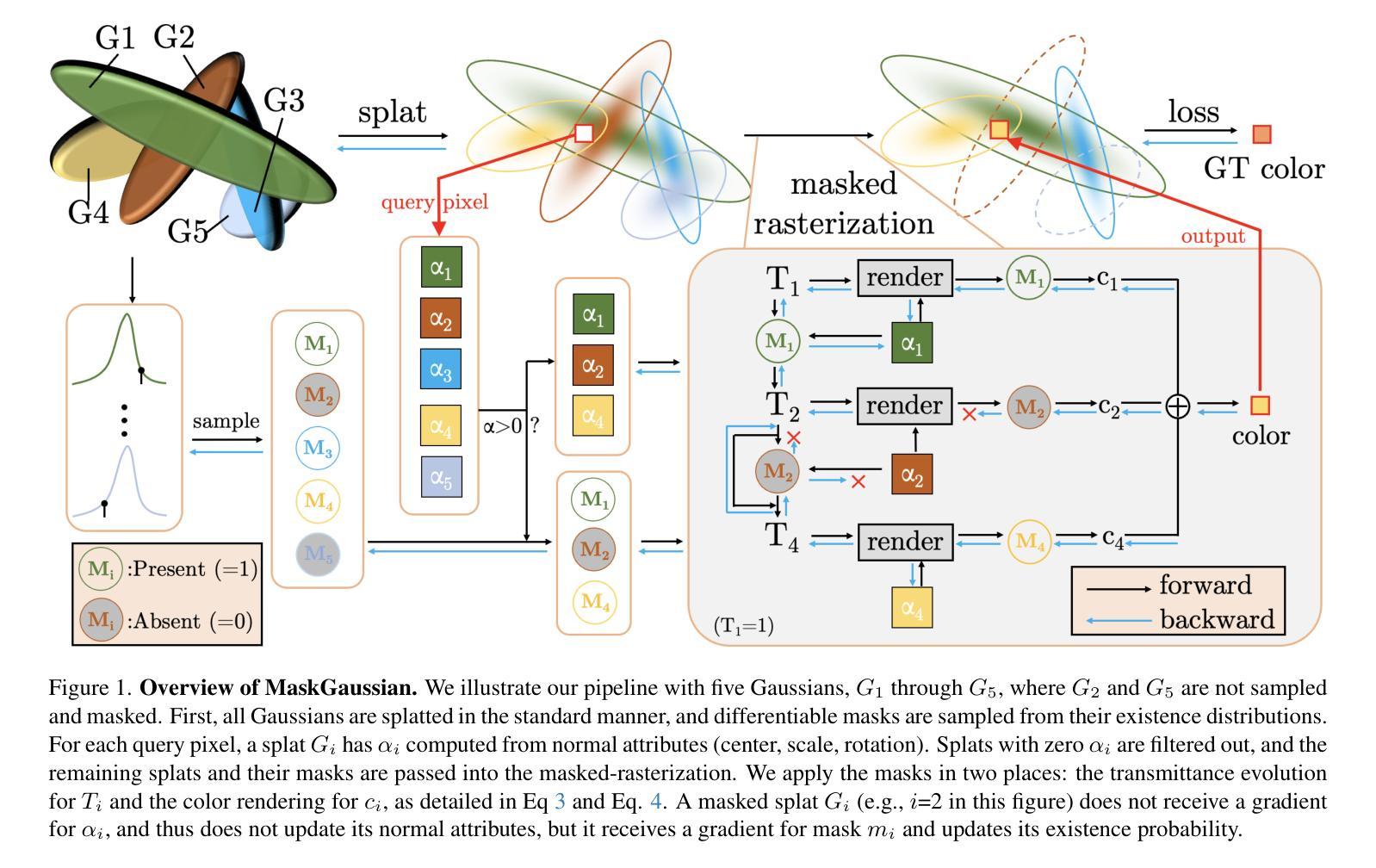

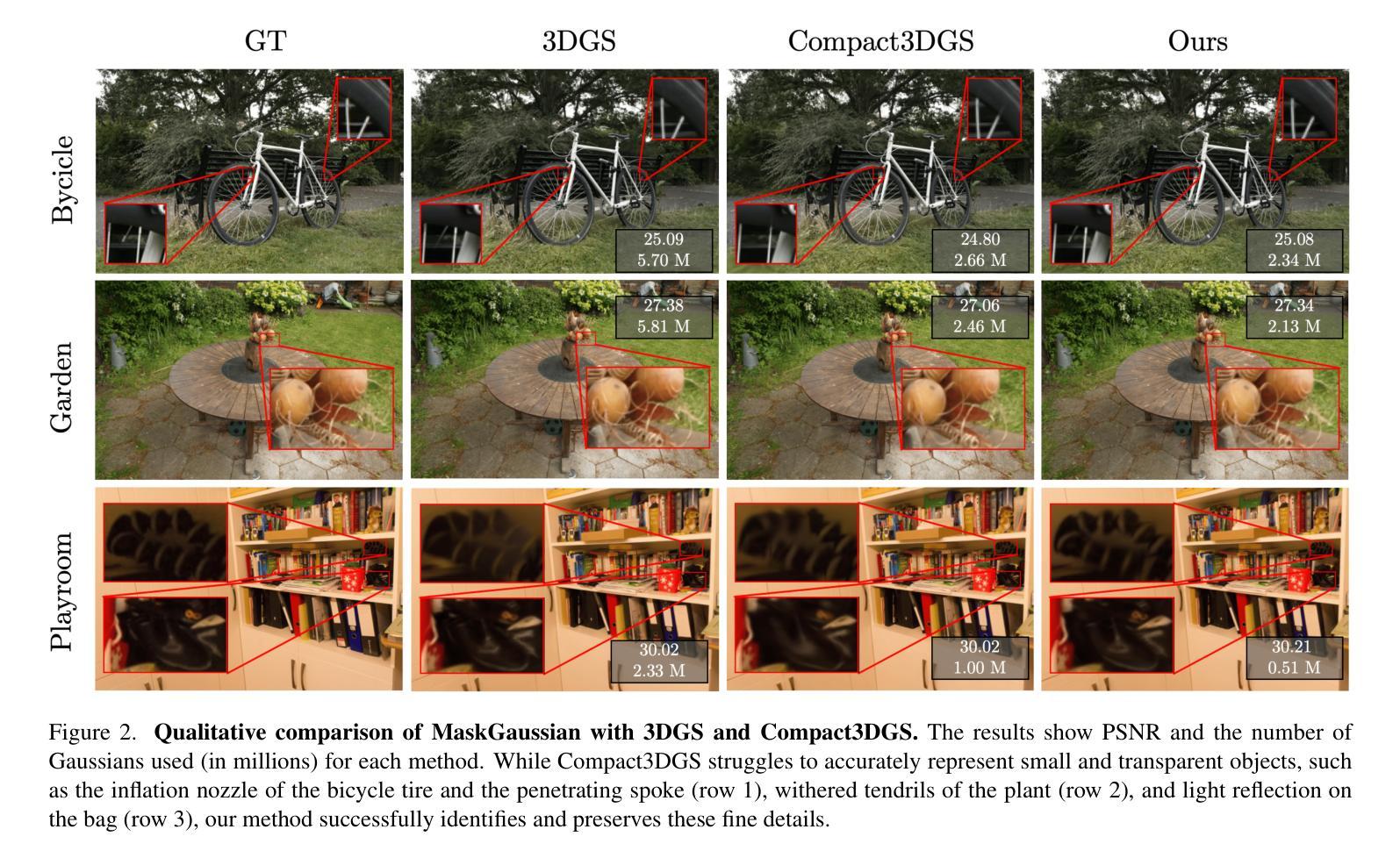

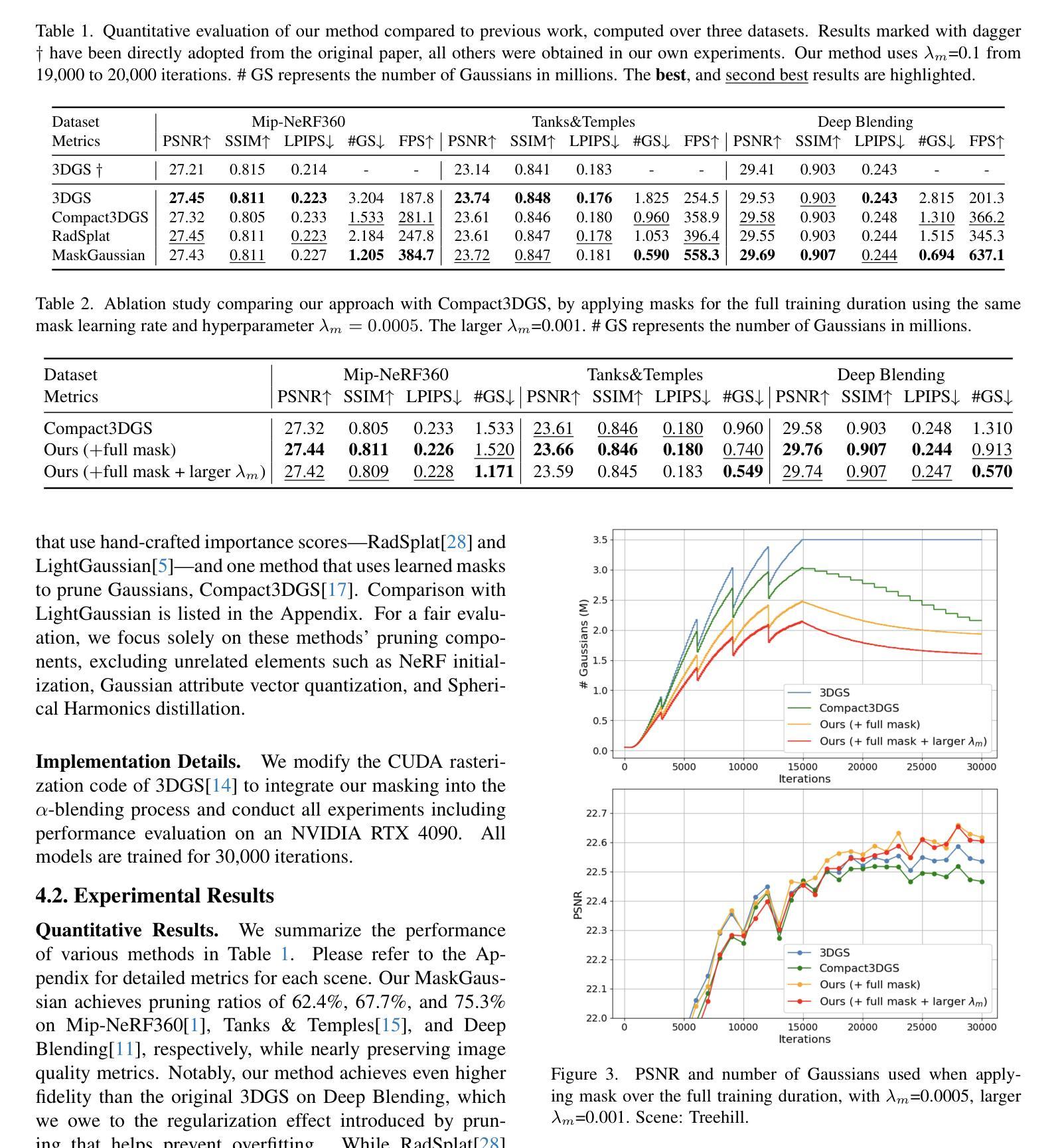

MaskGaussian: Adaptive 3D Gaussian Representation from Probabilistic Masks

Authors:Yifei Liu, Zhihang Zhong, Yifan Zhan, Sheng Xu, Xiao Sun

While 3D Gaussian Splatting (3DGS) has demonstrated remarkable performance in novel view synthesis and real-time rendering, the high memory consumption due to the use of millions of Gaussians limits its practicality. To mitigate this issue, improvements have been made by pruning unnecessary Gaussians, either through a hand-crafted criterion or by using learned masks. However, these methods deterministically remove Gaussians based on a snapshot of the pruning moment, leading to sub-optimized reconstruction performance from a long-term perspective. To address this issue, we introduce MaskGaussian, which models Gaussians as probabilistic entities rather than permanently removing them, and utilize them according to their probability of existence. To achieve this, we propose a masked-rasterization technique that enables unused yet probabilistically existing Gaussians to receive gradients, allowing for dynamic assessment of their contribution to the evolving scene and adjustment of their probability of existence. Hence, the importance of Gaussians iteratively changes and the pruned Gaussians are selected diversely. Extensive experiments demonstrate the superiority of the proposed method in achieving better rendering quality with fewer Gaussians than previous pruning methods, pruning over 60% of Gaussians on average with only a 0.02 PSNR decline. Our code can be found at: https://github.com/kaikai23/MaskGaussian

虽然3D高斯贴图(3DGS)在新型视角合成和实时渲染方面表现出卓越的性能,但由于使用了数百万个高斯,其高内存消耗限制了实用性。为了缓解这个问题,已经通过删除不必要的高斯进行了改进,方法包括手工制定的标准或使用学习到的掩膜。然而,这些方法基于剪枝时刻的快照确定性地删除高斯,从长远来看导致重建性能次优。为了解决这一问题,我们引入了MaskGaussian,它将高斯建模为概率实体,而不是永久删除它们,并根据其存在的概率来使用它们。为了实现这一点,我们提出了一种掩膜栅格化技术,使未使用但概率存在的高斯能够获得梯度,从而能够动态评估它们对不断变化的场景的贡献并调整其存在的概率。因此,高斯的重要性会迭代地改变,被剪枝的高斯选择也会多样化。大量实验表明,所提出的方法在达到更好的渲染质量方面优于以前的高斯剪枝方法,平均剪枝超过60%的高斯,而PSNR仅下降0.02。我们的代码可在以下网址找到:https://github.com/kaikai23/MaskGaussian

论文及项目相关链接

Summary

本文讨论了使用概率建模优化三维高斯混合模型的方法。传统的剪枝方法直接删除某些高斯,但可能会导致重建性能不佳。新提出的MaskGaussian模型则根据概率来动态调整每个高斯的使用情况,即使删除一部分也能提高渲染质量。这种方法采用了带有掩膜的栅格化技术,通过这种方法可以实现更佳的高斯筛选,从而达到更佳的渲染效果。该方法减少了高斯使用的数量,且影响有限。项目代码已在GitHub上公开。

Key Takeaways

- MaskGaussian方法利用概率建模对三维高斯混合模型进行优化,通过动态调整每个高斯的使用情况,提高渲染质量。

- 传统剪枝方法基于特定时刻的剪枝决策,可能会导致长期重建性能的次优化。

- MaskGaussian模型通过引入掩膜技术实现了动态的剪枝决策,即使剪去一部分高斯,也可以改善场景的渲染质量。

点此查看论文截图

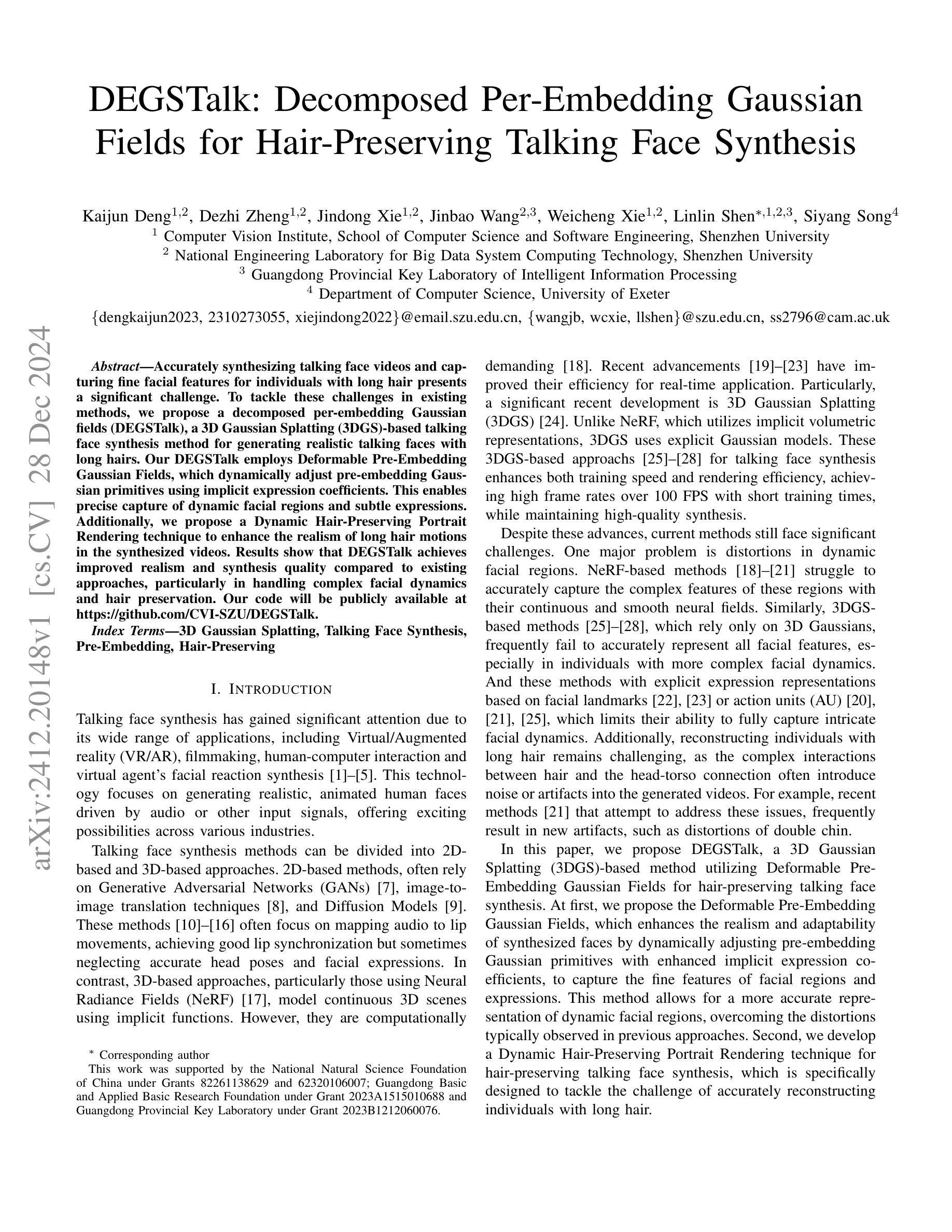

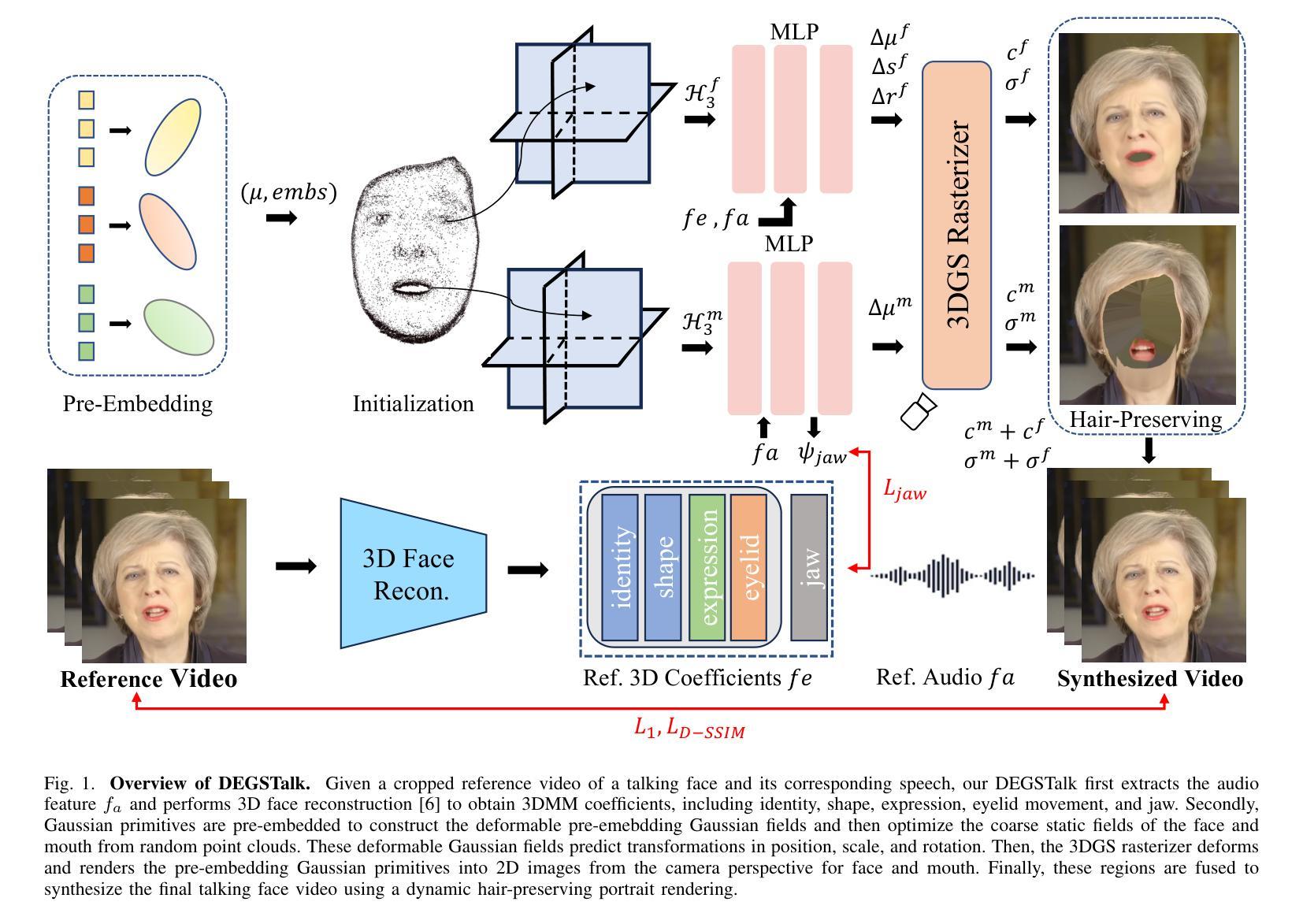

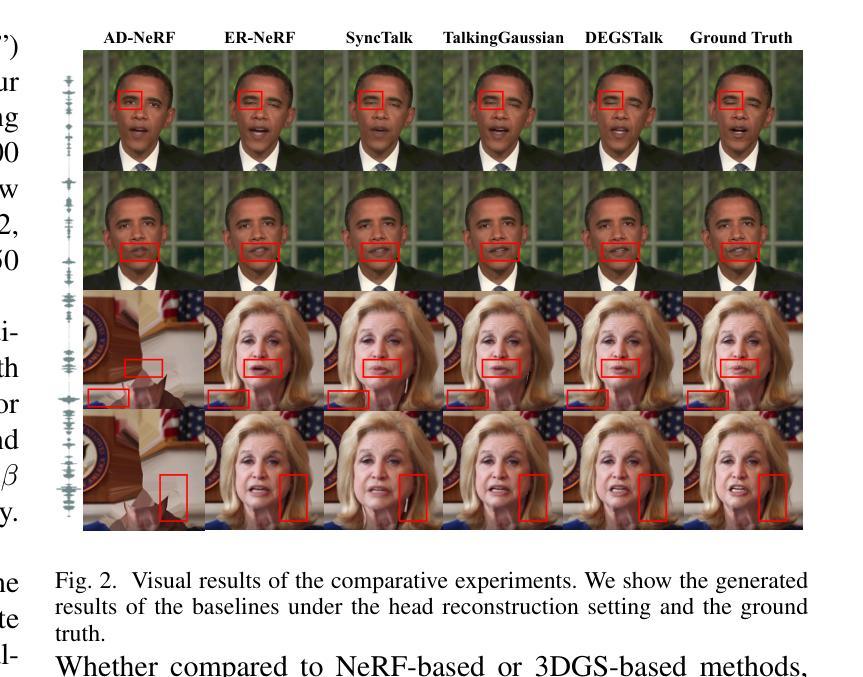

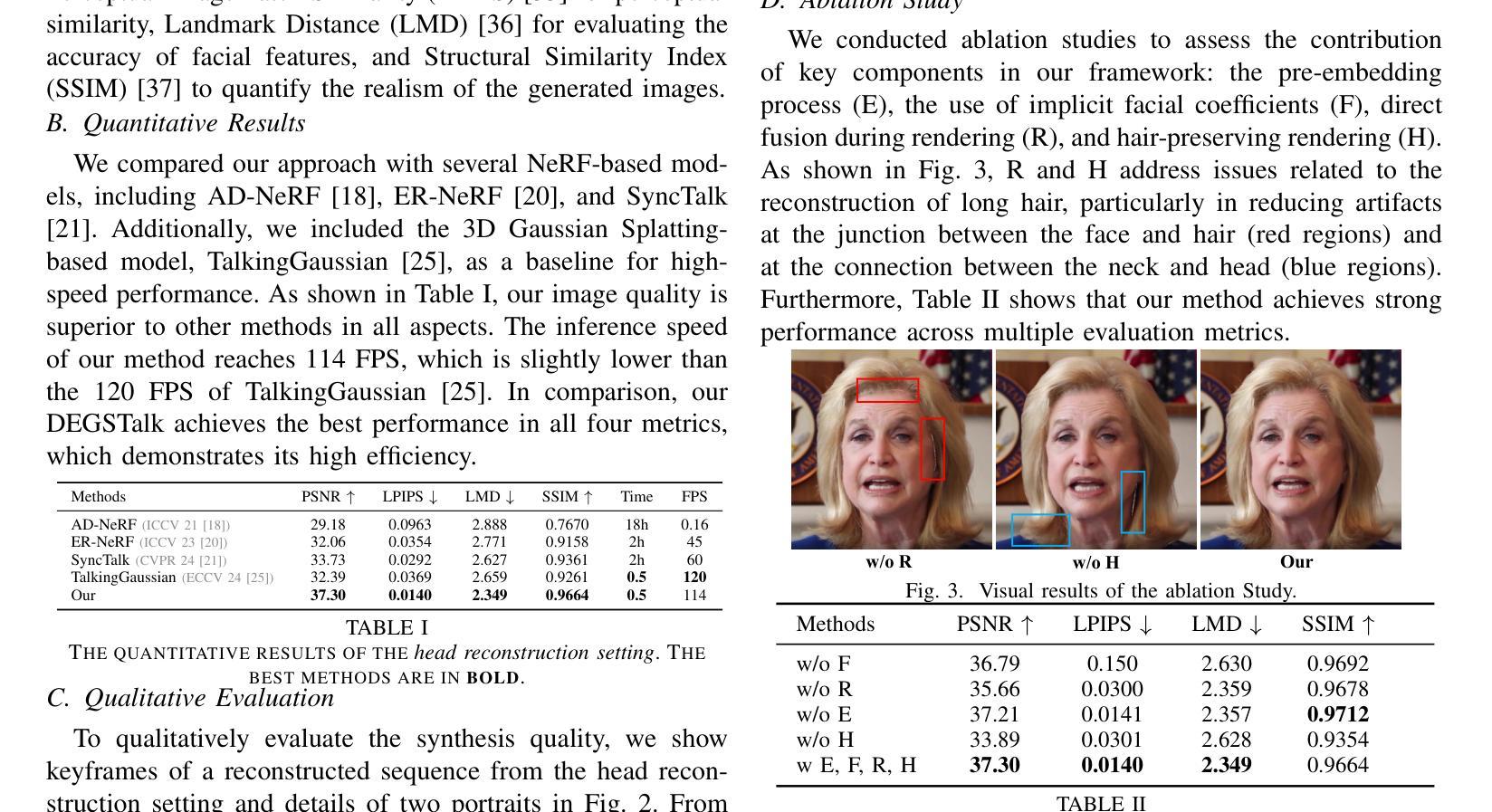

DEGSTalk: Decomposed Per-Embedding Gaussian Fields for Hair-Preserving Talking Face Synthesis

Authors:Kaijun Deng, Dezhi Zheng, Jindong Xie, Jinbao Wang, Weicheng Xie, Linlin Shen, Siyang Song

Accurately synthesizing talking face videos and capturing fine facial features for individuals with long hair presents a significant challenge. To tackle these challenges in existing methods, we propose a decomposed per-embedding Gaussian fields (DEGSTalk), a 3D Gaussian Splatting (3DGS)-based talking face synthesis method for generating realistic talking faces with long hairs. Our DEGSTalk employs Deformable Pre-Embedding Gaussian Fields, which dynamically adjust pre-embedding Gaussian primitives using implicit expression coefficients. This enables precise capture of dynamic facial regions and subtle expressions. Additionally, we propose a Dynamic Hair-Preserving Portrait Rendering technique to enhance the realism of long hair motions in the synthesized videos. Results show that DEGSTalk achieves improved realism and synthesis quality compared to existing approaches, particularly in handling complex facial dynamics and hair preservation. Our code will be publicly available at https://github.com/CVI-SZU/DEGSTalk.

准确合成说话人脸视频并为长发个体捕捉精细面部特征是一项重大挑战。为了应对现有方法中的这些挑战,我们提出了一种分解嵌入高斯场(DEGSTalk)的方法,这是一种基于三维高斯扩展(3DGS)的说话人脸合成方法,用于生成具有长发的真实说话人脸。我们的DEGSTalk采用可变形预嵌入高斯场,使用隐式表达式系数动态调整预嵌入高斯原始数据。这能够实现动态面部区域和微妙表情的精确捕捉。此外,我们还提出了一种动态保发肖像渲染技术,以提高合成视频中长发运动的真实感。结果表明,与现有方法相比,DEGSTalk在实现真实感和合成质量方面有所提高,特别是在处理复杂的面部动态和保持头发方面。我们的代码将在https://github.com/CVI-SZU/DEGSTalk公开可用。

论文及项目相关链接

PDF Accepted by ICASSP 2025

Summary

本文提出了一种基于3D高斯拼贴(3DGS)的说话面孔合成方法,名为分解嵌入高斯场(DEGSTalk),用于生成具有长发的真实感说话面孔。该方法采用可变形预嵌入高斯场,通过隐式表达式系数动态调整预嵌入高斯原始数据,能精确捕捉面部动态区域和细微表情。同时,提出了一种动态保发肖像渲染技术,提高了合成视频中长发运动的真实感。相较于现有方法,DEGSTalk在面部动态和头发保护方面更具真实感和合成质量。

Key Takeaways

- 提出了基于3D高斯拼贴(3DGS)的说话面孔合成方法DEGSTalk,用于生成具有长发的真实感说话面孔。

- DEGSTalk采用可变形预嵌入高斯场,能精确捕捉面部动态区域和细微表情。

- DEGSTalk通过动态调整预嵌入高斯原始数据,提高了面部合成的真实感和质量。

- 提出了动态保发肖像渲染技术,以改进合成视频中长发的运动表现。

- DEGSTalk在复杂面部动态和头发保护方面较现有方法有所提升。

- DEGSTalk的代码将公开在GitHub上,方便其他研究者使用和进一步开发。

点此查看论文截图

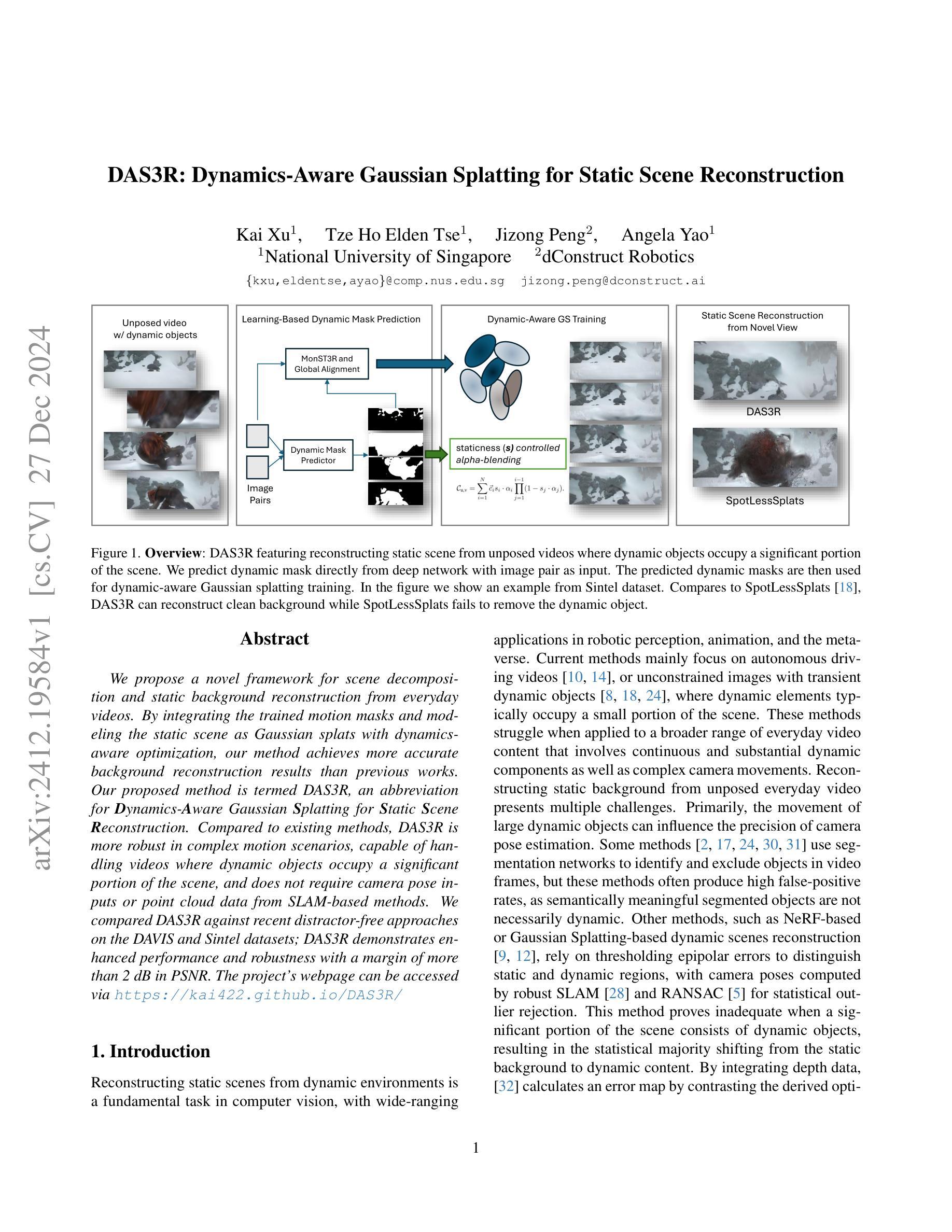

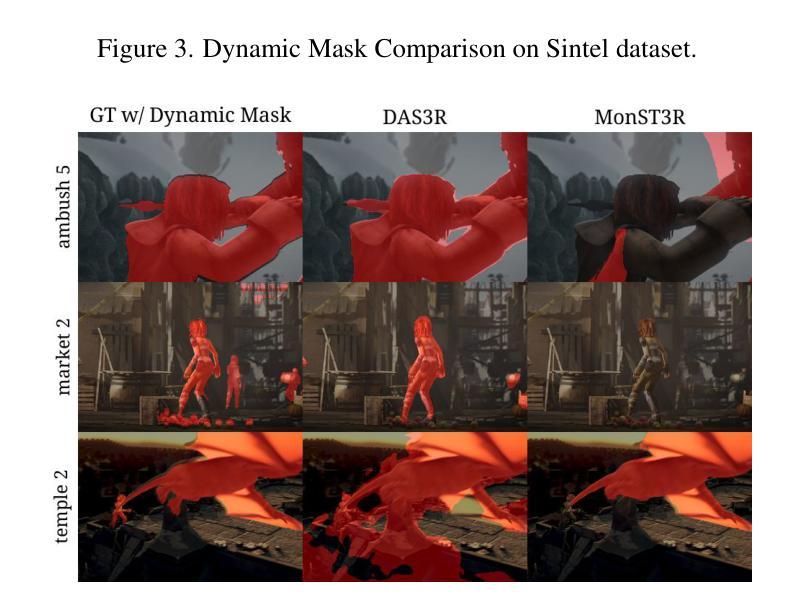

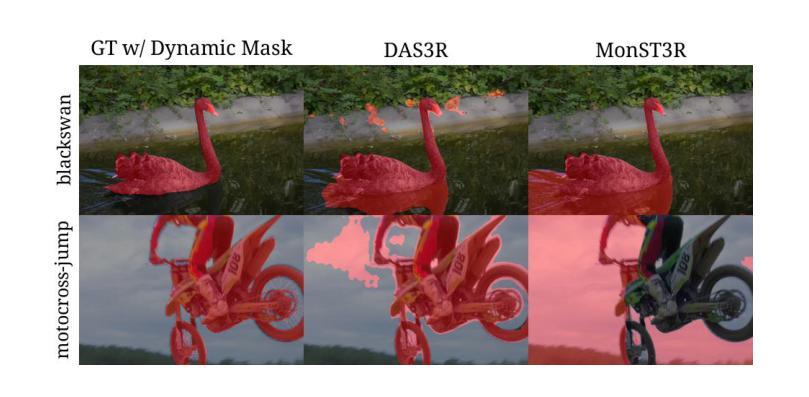

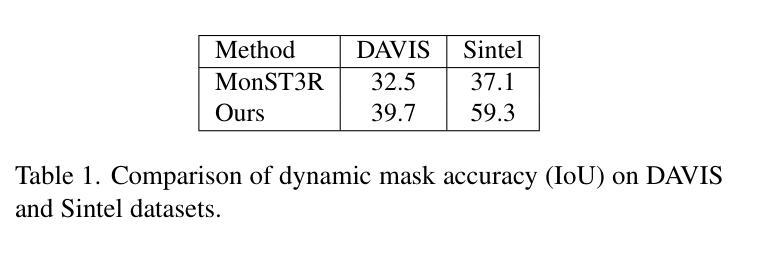

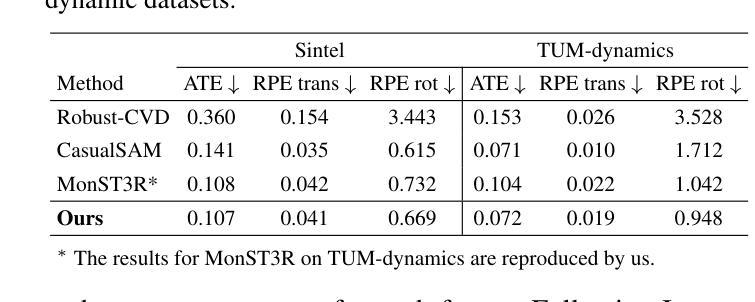

DAS3R: Dynamics-Aware Gaussian Splatting for Static Scene Reconstruction

Authors:Kai Xu, Tze Ho Elden Tse, Jizong Peng, Angela Yao

We propose a novel framework for scene decomposition and static background reconstruction from everyday videos. By integrating the trained motion masks and modeling the static scene as Gaussian splats with dynamics-aware optimization, our method achieves more accurate background reconstruction results than previous works. Our proposed method is termed DAS3R, an abbreviation for Dynamics-Aware Gaussian Splatting for Static Scene Reconstruction. Compared to existing methods, DAS3R is more robust in complex motion scenarios, capable of handling videos where dynamic objects occupy a significant portion of the scene, and does not require camera pose inputs or point cloud data from SLAM-based methods. We compared DAS3R against recent distractor-free approaches on the DAVIS and Sintel datasets; DAS3R demonstrates enhanced performance and robustness with a margin of more than 2 dB in PSNR. The project’s webpage can be accessed via \url{https://kai422.github.io/DAS3R/}

我们提出一种新颖的框架,用于从日常视频中分解场景并重建静态背景。通过整合训练后的运动掩膜,并将静态场景建模为具有动态感知优化的高斯斑块,我们的方法实现了比先前工作更准确的背景重建结果。我们提出的方法被称为DAS3R,即动态感知高斯斑块法用于静态场景重建的简称。与现有方法相比,DAS3R在复杂的运动场景中更加稳健,能够处理动态物体占据场景很大比例的视频,并且不需要来自基于SLAM方法的相机姿态输入或点云数据。我们在DAVIS和Sintel数据集上将DAS3R与最近的无干扰物方法进行了比较;DAS3R在PSNR上的性能提升和稳健性超过2dB。项目网页可通过\url{https://kai422.github.io/DAS3R/}访问。

论文及项目相关链接

Summary

本文提出了一种新的场景分解和静态背景重建框架,从日常视频中通过训练运动掩膜并模拟静态场景为高斯斑点,结合动态感知优化,实现了更准确的背景重建结果。该方法称为DAS3R,即动态感知高斯斑点静态场景重建。与现有方法相比,DAS3R在复杂运动场景中更为稳健,能够处理动态物体占据场景大部分区域的情况,并且不需要SLAM方法的相机姿态输入或点云数据。在DAVIS和Sintel数据集上与最新的无干扰物方法对比,DAS3R表现出优越的性能和稳健性,PSNR值高出超过2dB。

Key Takeaways

- 提出了一种新的场景分解和静态背景重建框架。

- 通过结合训练运动掩膜和模拟静态场景为高斯斑点实现更准确背景重建。

- 方法名为DAS3R,即动态感知高斯斑点静态场景重建。

- DAS3R在复杂运动场景中表现稳健,尤其能处理动态物体占据多的情况。

- DAS3R不需要SLAM方法的相机姿态输入或点云数据。

- 在DAVIS和Sintel数据集上,DAS3R性能优于其他方法。

点此查看论文截图

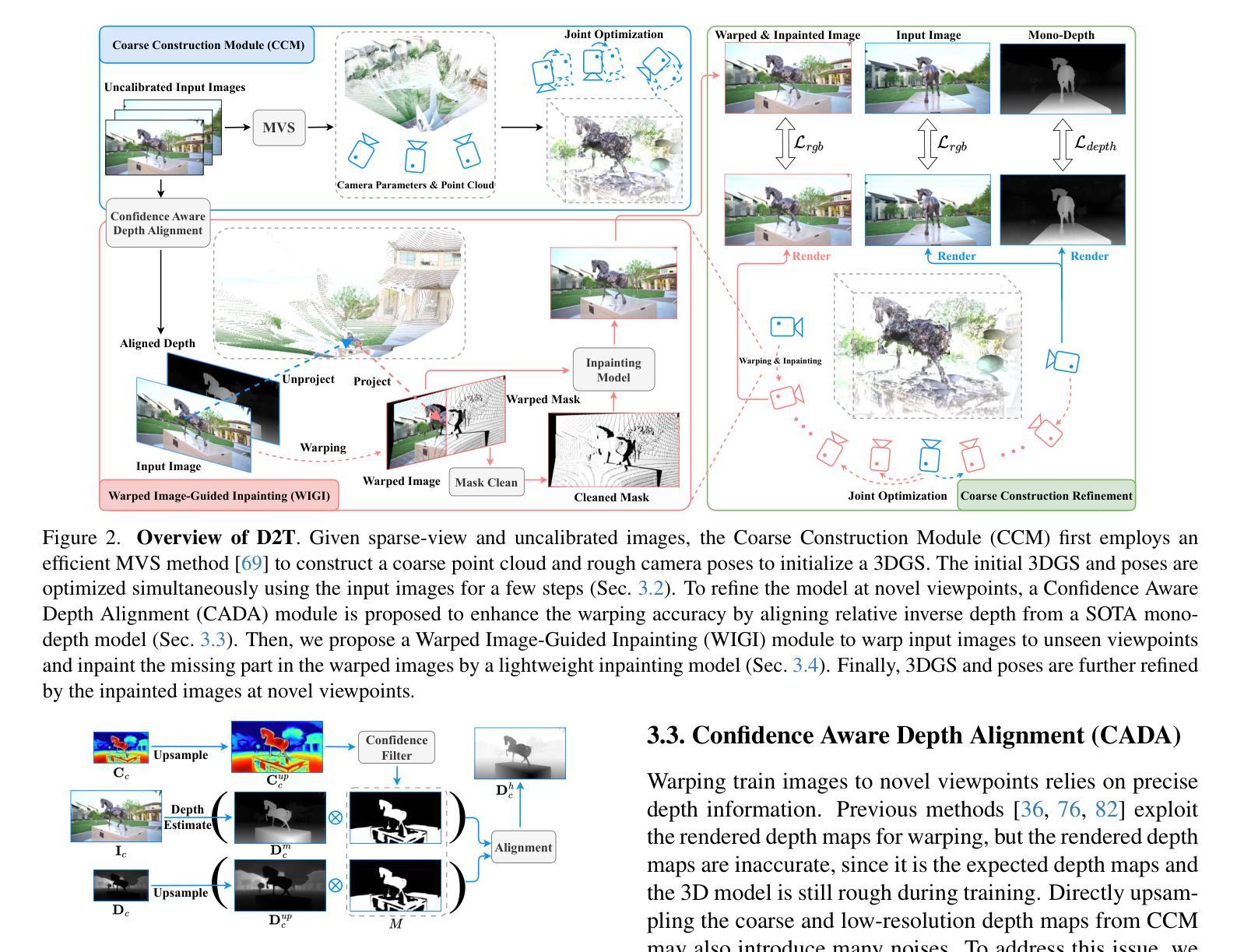

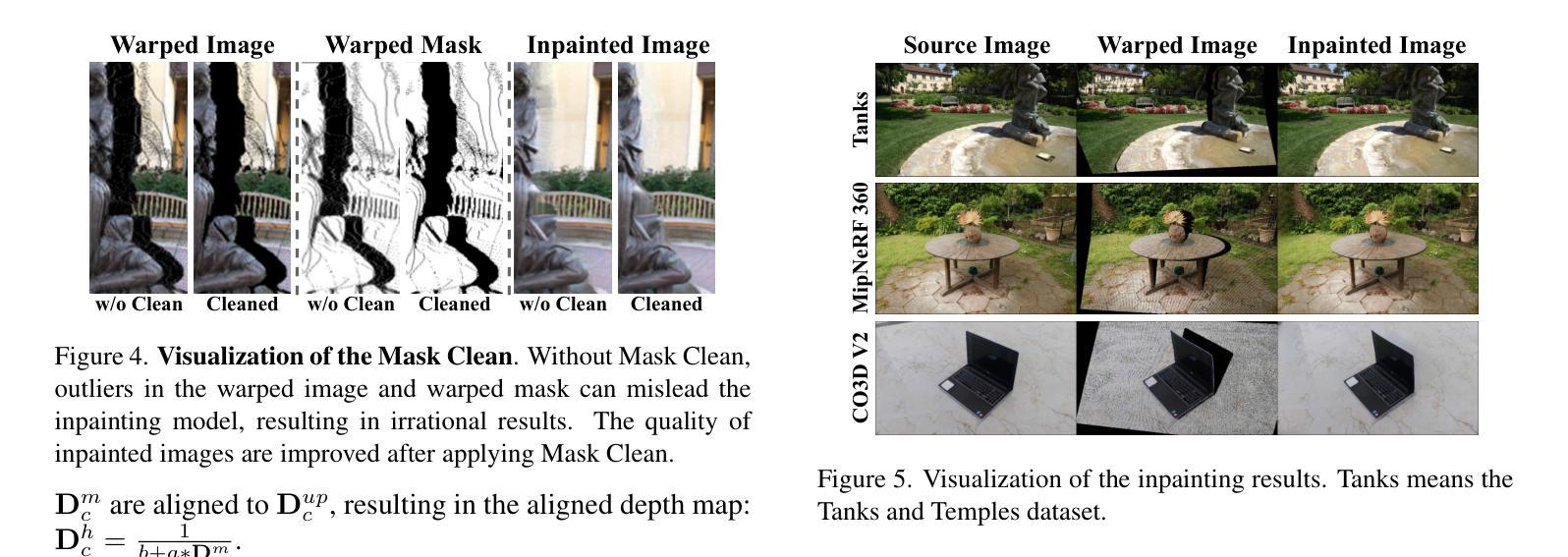

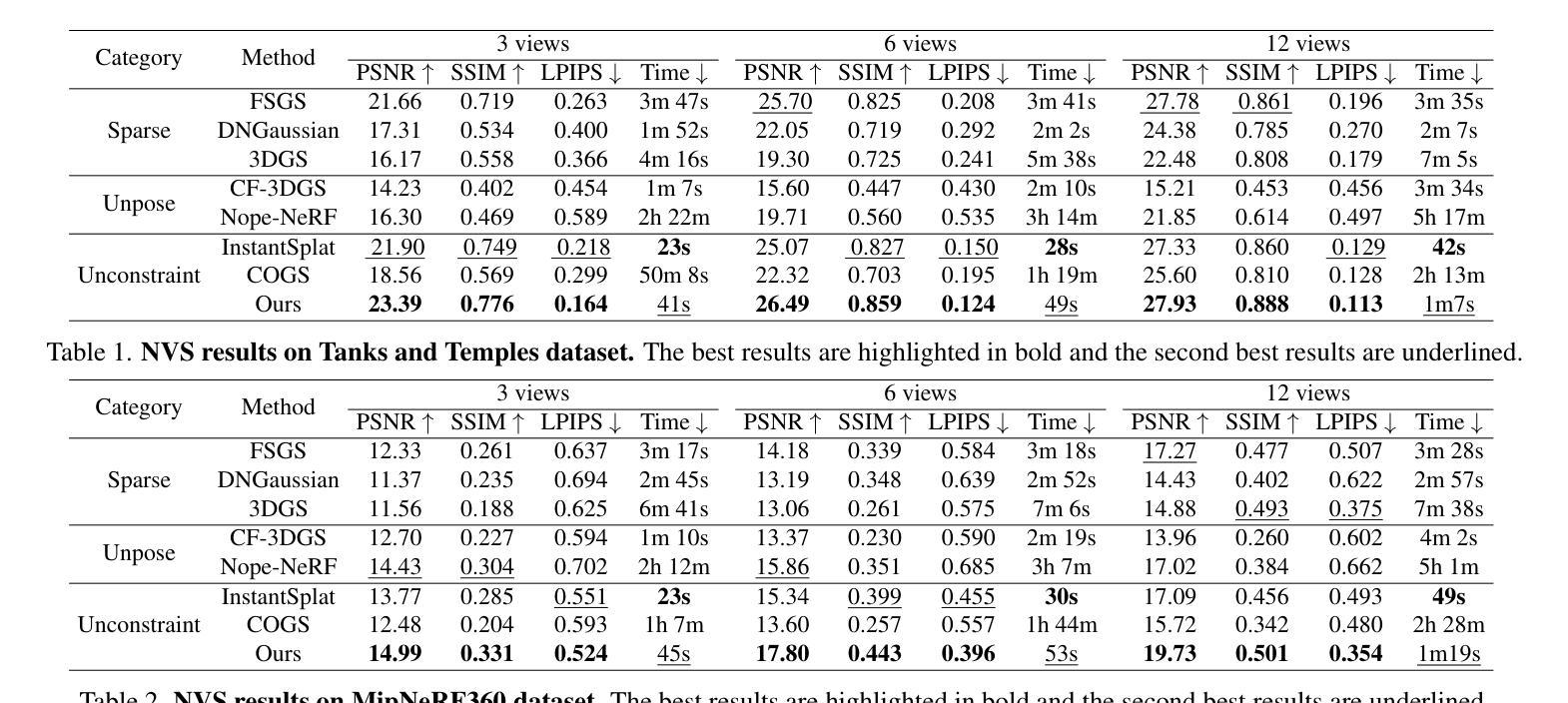

Dust to Tower: Coarse-to-Fine Photo-Realistic Scene Reconstruction from Sparse Uncalibrated Images

Authors:Xudong Cai, Yongcai Wang, Zhaoxin Fan, Deng Haoran, Shuo Wang, Wanting Li, Deying Li, Lun Luo, Minhang Wang, Jintao Xu

Photo-realistic scene reconstruction from sparse-view, uncalibrated images is highly required in practice. Although some successes have been made, existing methods are either Sparse-View but require accurate camera parameters (i.e., intrinsic and extrinsic), or SfM-free but need densely captured images. To combine the advantages of both methods while addressing their respective weaknesses, we propose Dust to Tower (D2T), an accurate and efficient coarse-to-fine framework to optimize 3DGS and image poses simultaneously from sparse and uncalibrated images. Our key idea is to first construct a coarse model efficiently and subsequently refine it using warped and inpainted images at novel viewpoints. To do this, we first introduce a Coarse Construction Module (CCM) which exploits a fast Multi-View Stereo model to initialize a 3D Gaussian Splatting (3DGS) and recover initial camera poses. To refine the 3D model at novel viewpoints, we propose a Confidence Aware Depth Alignment (CADA) module to refine the coarse depth maps by aligning their confident parts with estimated depths by a Mono-depth model. Then, a Warped Image-Guided Inpainting (WIGI) module is proposed to warp the training images to novel viewpoints by the refined depth maps, and inpainting is applied to fulfill the ``holes” in the warped images caused by view-direction changes, providing high-quality supervision to further optimize the 3D model and the camera poses. Extensive experiments and ablation studies demonstrate the validity of D2T and its design choices, achieving state-of-the-art performance in both tasks of novel view synthesis and pose estimation while keeping high efficiency. Codes will be publicly available.

从稀疏视角、未校准的图像中进行逼真的场景重建在实际操作中有着很高的需求。虽然已有一些成功的尝试,但现有方法要么是稀疏视角但需要准确的相机参数(即内部参数和外部参数),要么是无需SfM但需要密集捕捉的图像。为了结合两种方法的优点并克服其各自的缺点,我们提出了Dust to Tower(D2T),这是一个精确高效的由粗到细的框架,可以从稀疏和未校准的图像中同时优化3DGS和图像姿态。我们的核心思想是先有效地构建粗模型,然后使用在新视角下生成的填充图像对其进行优化。为此,我们首先引入了一个粗略构造模块(CCM),该模块利用快速的多视图立体模型来初始化三维高斯溅点(3DGS)并恢复初始相机姿态。为了在新视角上优化三维模型,我们提出了一个置信深度对齐(CADA)模块,通过将一个深度估计模型得到的置信部分的深度与粗略深度图对齐来优化粗略深度图。然后,我们提出了一个基于扭曲图像引导的填充(WIGI)模块,通过优化后的深度图将训练图像扭曲到新视角,并应用填充技术来填补因视点方向变化而在扭曲图像中产生的“空洞”,为进一步优化三维模型和相机姿态提供高质量监督。大量实验和消融研究证明了D2T及其设计选择的有效性,在新型视图合成和姿态估计任务中都达到了最先进的性能,同时保持了高效率。代码将公开可用。

论文及项目相关链接

摘要

本文从稀疏视角的未校准图像进行真实场景重建,提出一种结合稀疏视角和无结构从运动(SfM)方法优势的同时克服其劣势的框架——Dust to Tower(D2T)。D2T是一个由粗到细的框架,能同时优化3DGS和图像姿态。首先通过粗建模块(CCM)利用快速多视角立体模型初始化3D高斯喷溅(3DGS)并恢复初始相机姿态。然后,通过置信深度对齐(CADA)模块在新型视角细化3D模型,并通过保形图像引导修复(WIGI)模块将训练图像变形到新型视角,对变形图像中的空洞进行修复,为进一步优化3D模型和相机姿态提供高质量监督。实验和消融研究证明了D2T及其设计选择的有效性,在新型视角合成和姿态估计任务上达到一流性能,同时保持高效率。

关键见解

- 提出了一种结合稀疏视角和无结构从运动(SfM)方法优势的框架——Dust to Tower(D2T),用于优化3D场景重建和图像姿态。

- 引入Coarse Construction Module(CCM)快速初始化3D高斯喷溅(3DGS)和恢复初始相机姿态。

- 提出了Confidence Aware Depth Alignment(CADA)模块,用于在新型视角细化3D模型。

- 引入了Warped Image-Guided Inpainting(WIGI)模块,通过变形图像和修复技术提供高质量监督,进一步优化3D模型和相机姿态。

- 框架实现了高效且高性能的稀疏视角和未校准图像的真实场景重建。

- 通过广泛实验和消融研究验证了框架的有效性和设计选择的合理性。

点此查看论文截图

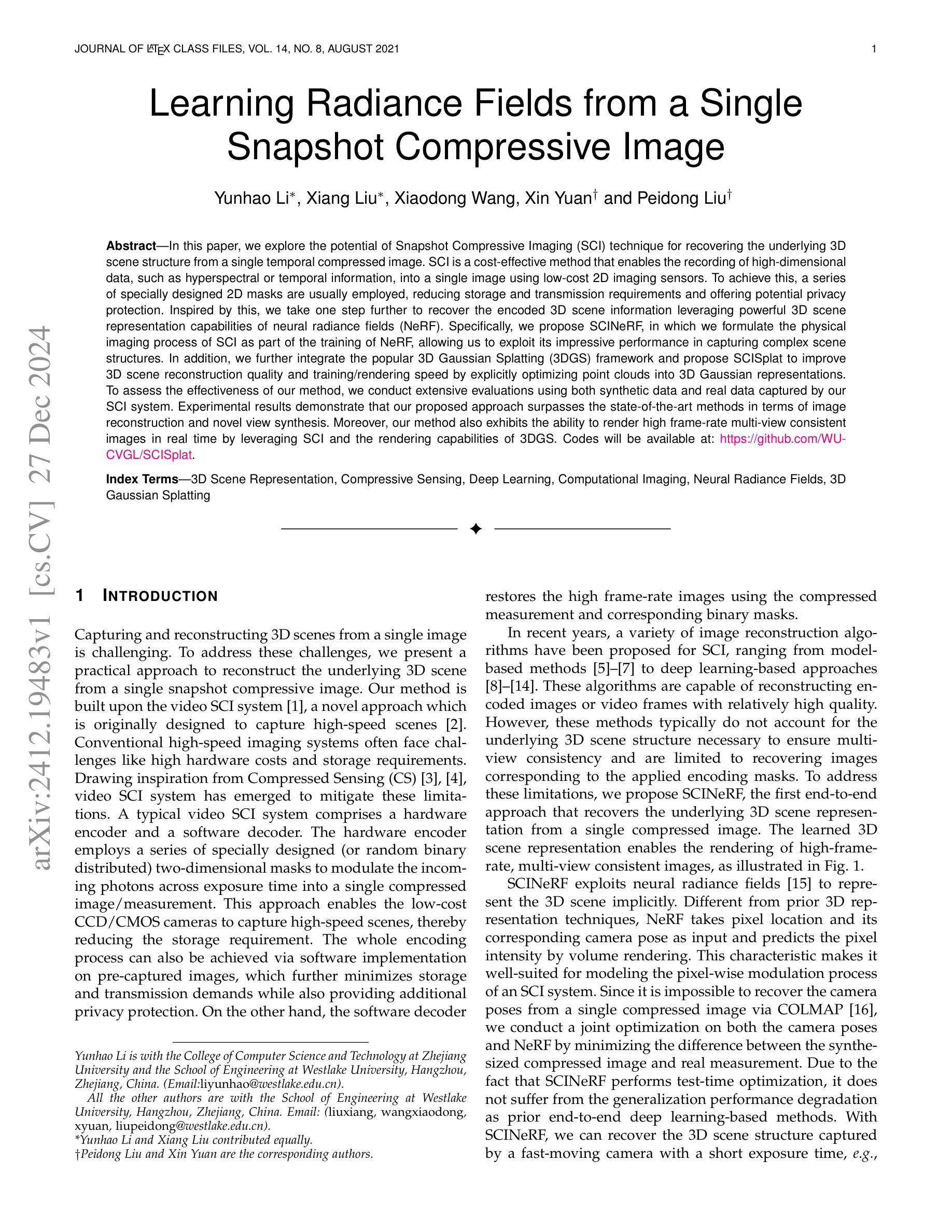

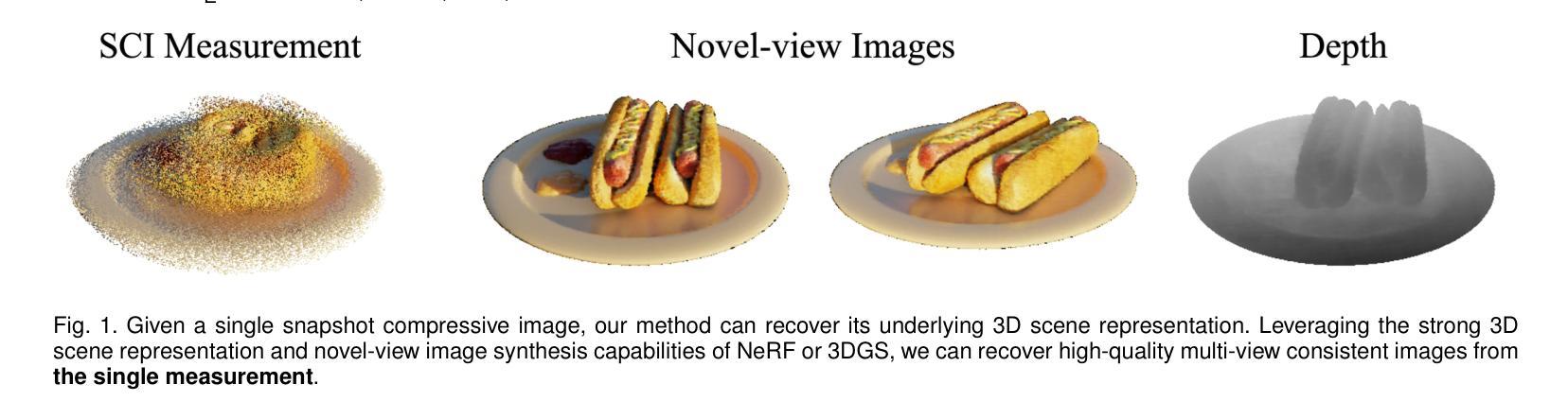

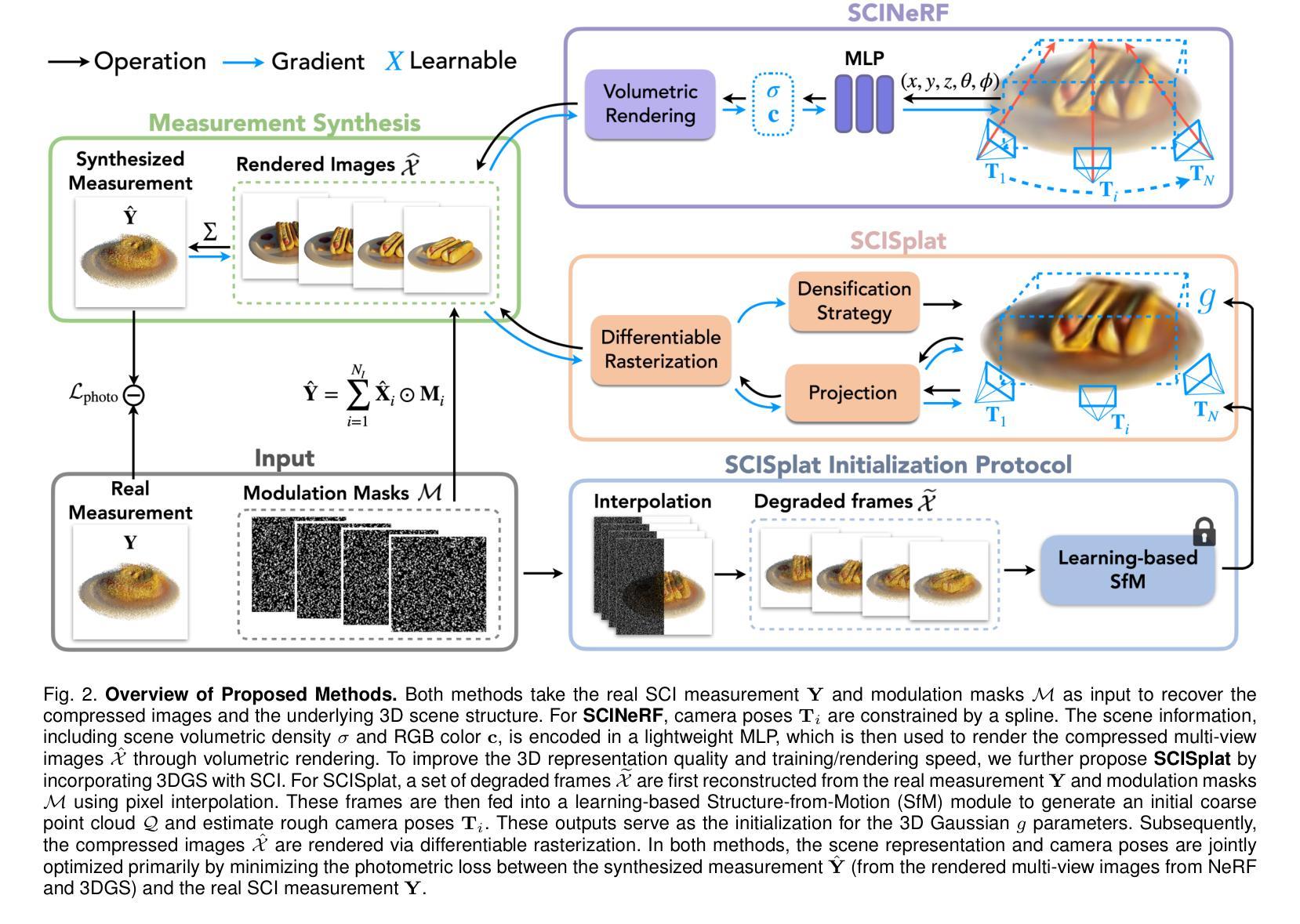

Learning Radiance Fields from a Single Snapshot Compressive Image

Authors:Yunhao Li, Xiang Liu, Xiaodong Wang, Xin Yuan, Peidong Liu

In this paper, we explore the potential of Snapshot Compressive Imaging (SCI) technique for recovering the underlying 3D scene structure from a single temporal compressed image. SCI is a cost-effective method that enables the recording of high-dimensional data, such as hyperspectral or temporal information, into a single image using low-cost 2D imaging sensors. To achieve this, a series of specially designed 2D masks are usually employed, reducing storage and transmission requirements and offering potential privacy protection. Inspired by this, we take one step further to recover the encoded 3D scene information leveraging powerful 3D scene representation capabilities of neural radiance fields (NeRF). Specifically, we propose SCINeRF, in which we formulate the physical imaging process of SCI as part of the training of NeRF, allowing us to exploit its impressive performance in capturing complex scene structures. In addition, we further integrate the popular 3D Gaussian Splatting (3DGS) framework and propose SCISplat to improve 3D scene reconstruction quality and training/rendering speed by explicitly optimizing point clouds into 3D Gaussian representations. To assess the effectiveness of our method, we conduct extensive evaluations using both synthetic data and real data captured by our SCI system. Experimental results demonstrate that our proposed approach surpasses the state-of-the-art methods in terms of image reconstruction and novel view synthesis. Moreover, our method also exhibits the ability to render high frame-rate multi-view consistent images in real time by leveraging SCI and the rendering capabilities of 3DGS. Codes will be available at: https://github.com/WU- CVGL/SCISplat.

本文中,我们探讨了Snapshot Compressive Imaging(SCI)技术从单个时间压缩图像中恢复潜在的三维场景结构的潜力。SCI是一种经济高效的方法,能够将高维数据(如超光谱或时间信息)使用低成本的二维成像传感器记录为单个图像。为实现这一点,通常采用一系列专门设计的二维掩膜,以降低存储和传输要求,并提供潜在的隐私保护。受此启发,我们进一步采取一步,利用神经辐射场(NeRF)的强大三维场景表示能力来恢复编码的三维场景信息。具体来说,我们提出SCINeRF,我们将SCI的物理成像过程作为NeRF训练的一部分,从而能够利用其捕捉复杂场景结构的令人印象深刻的表现。此外,我们进一步集成了流行的三维高斯拼贴(3DGS)框架,并提出SCISplat,通过显式优化点云到三维高斯表示来提高三维场景重建质量以及训练和渲染速度。为了评估我们的方法的有效性,我们使用合成数据和由我们的SCI系统捕获的真实数据进行了广泛评估。实验结果表明,我们提出的方法在图像重建和新颖视图合成方面超越了最新技术。而且,我们的方法还展示了利用SCI和3DGS的渲染能力实时呈现高帧率、多视角一致图像的能力。代码将在https://github.com/WU-CVGL/SCISplat上提供。

论文及项目相关链接

Summary

本文探讨了Snapshot Compressive Imaging(SCI)技术在从单个时间压缩图像中恢复潜在的3D场景结构方面的潜力。文章介绍了SCI技术结合神经辐射场(NeRF)以及流行的3D高斯溅射(3DGS)框架来改进3D场景重建质量和训练/渲染速度的方法。实验结果表明,该方法在图像重建和新颖视图合成方面超过了现有技术,并展示了实时渲染高帧率多视角一致图像的能力。

Key Takeaways

- SCI技术能够从单个时间压缩图像中恢复出潜在的3D场景结构。

- SCI技术利用一系列专门设计的2D掩膜将高维数据编码成单个图像,降低存储和传输要求,同时提供隐私保护潜力。

- SCINeRF方法通过将SCI的物理成像过程纳入NeRF的训练,充分利用其捕捉复杂场景结构的能力。

- SCISplat方法整合了SCINeRF和流行的3DGS框架,提高了3D场景重建质量并优化了训练/渲染速度。

- 实验结果表明SCISplat方法在图像重建和新颖视图合成方面超越现有技术。

- SCISplat方法能够利用SCI技术和3DGS的渲染能力实现实时的高帧率多视角一致图像渲染。

点此查看论文截图

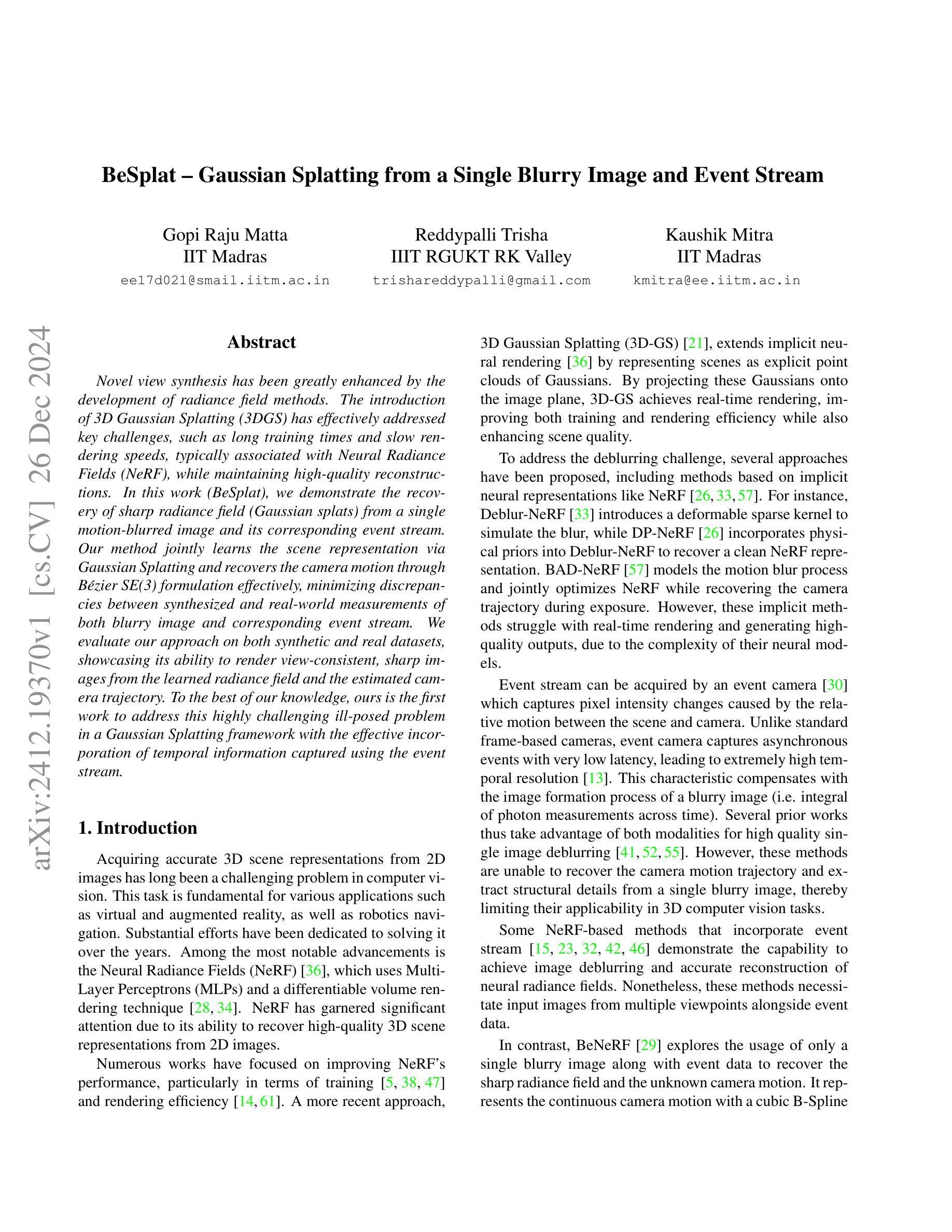

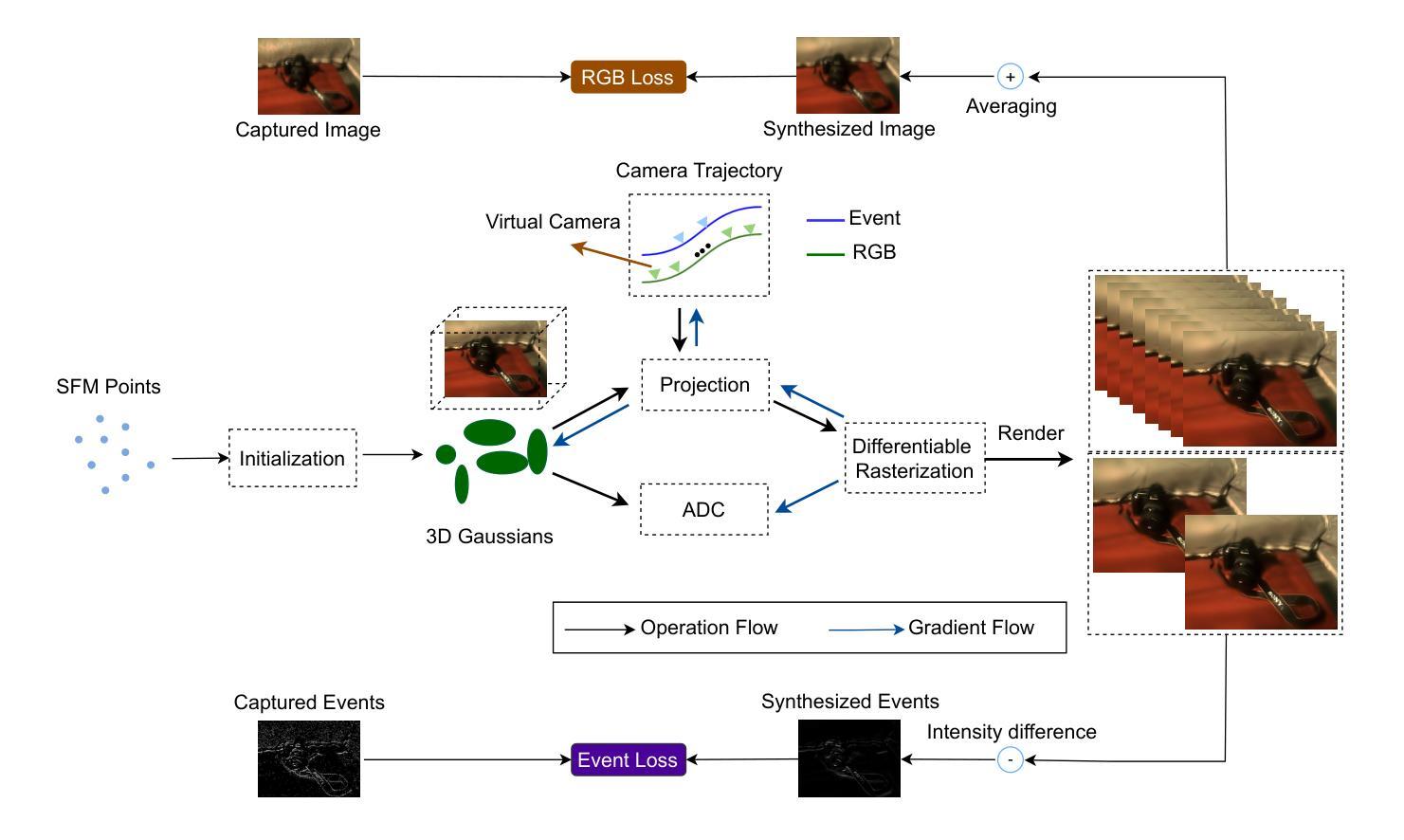

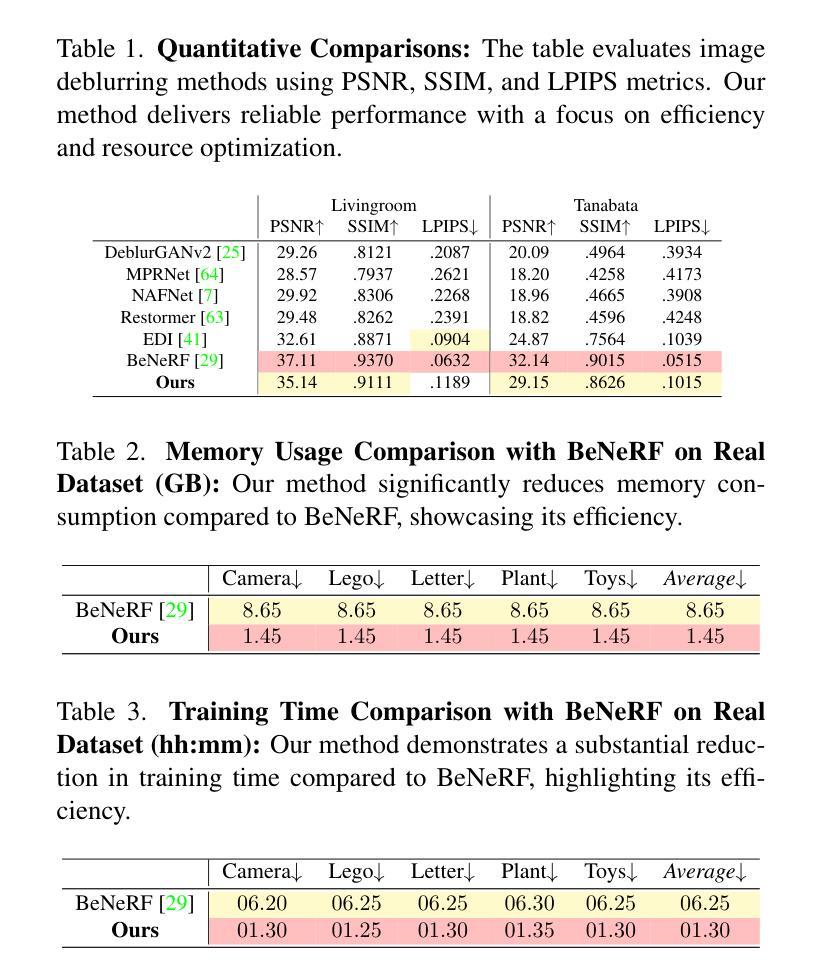

BeSplat – Gaussian Splatting from a Single Blurry Image and Event Stream

Authors:Gopi Raju Matta, Reddypalli Trisha, Kaushik Mitra

Novel view synthesis has been greatly enhanced by the development of radiance field methods. The introduction of 3D Gaussian Splatting (3DGS) has effectively addressed key challenges, such as long training times and slow rendering speeds, typically associated with Neural Radiance Fields (NeRF), while maintaining high-quality reconstructions. In this work (BeSplat), we demonstrate the recovery of sharp radiance field (Gaussian splats) from a single motion-blurred image and its corresponding event stream. Our method jointly learns the scene representation via Gaussian Splatting and recovers the camera motion through Bezier SE(3) formulation effectively, minimizing discrepancies between synthesized and real-world measurements of both blurry image and corresponding event stream. We evaluate our approach on both synthetic and real datasets, showcasing its ability to render view-consistent, sharp images from the learned radiance field and the estimated camera trajectory. To the best of our knowledge, ours is the first work to address this highly challenging ill-posed problem in a Gaussian Splatting framework with the effective incorporation of temporal information captured using the event stream.

随着辐射场方法的发展,新型视图合成技术得到了极大的增强。3D高斯贴图技术(3DGS)的引入有效解决了一些关键问题,如神经辐射场(NeRF)通常面临的训练时间长和渲染速度慢的问题,同时保持了高质量的重建效果。在这项工作中(BeSplat),我们展示了从单一的运动模糊图像和对应的事件流中恢复锐化的辐射场(高斯贴图)的能力。我们的方法通过高斯贴图联合学习场景表示,并通过贝塞尔SE(3)公式有效恢复相机运动,从而最小化合成与模糊图像和对应事件流的真实世界测量之间的差异。我们在合成数据集和真实数据集上评估了我们的方法,展示了从学习的辐射场和估计的相机轨迹中呈现一致、清晰视图图像的能力。据我们所知,我们的工作是在高斯贴图框架下解决这一极具挑战性的不适定问题的首次尝试,有效结合了使用事件流捕获的时间信息。

论文及项目相关链接

PDF Accepted for publication at EVGEN2025, WACV-25 Workshop

Summary

新型视图合成技术通过辐射场方法的发展得到了极大的提升。引入的3D高斯贴图技术有效地解决了与神经辐射场相关的一些关键挑战,如训练时间长和渲染速度慢等问题,同时保持高质量的重建效果。本研究通过高斯贴图恢复运动模糊图像的清晰辐射场,并通过贝塞尔SE(3)公式有效恢复相机运动。我们的方法联合学习场景的高斯贴图表示,并通过最小化合成图像和真实图像之间的差异以及事件流的测量误差,从而有效地估计相机轨迹。我们在合成和真实数据集上评估了我们的方法,展示了其从学习到的辐射场和估计的相机轨迹中渲染出一致、清晰图像的能力。据我们所知,我们的工作是首个在包含事件流捕捉的时间信息的情况下解决此高斯贴图框架中极具挑战性的反问题的工作。

Key Takeaways

- 引入的3D高斯贴图技术解决了神经辐射场在训练时间和渲染速度方面的挑战。

- 通过高斯贴图技术,可以从单个运动模糊图像和其对应的事件流中恢复清晰的辐射场。

- 通过贝塞尔SE(3)公式,能更有效地恢复相机运动轨迹。

- 方法联合学习场景的高斯贴图表示并最小化合成与真实图像之间的差异以及事件流的测量误差。

- 该方法在合成和真实数据集上的评估证明了其有效性和优势。

- 此技术对于从模糊的图像中重建清晰的视图具有潜力。

点此查看论文截图

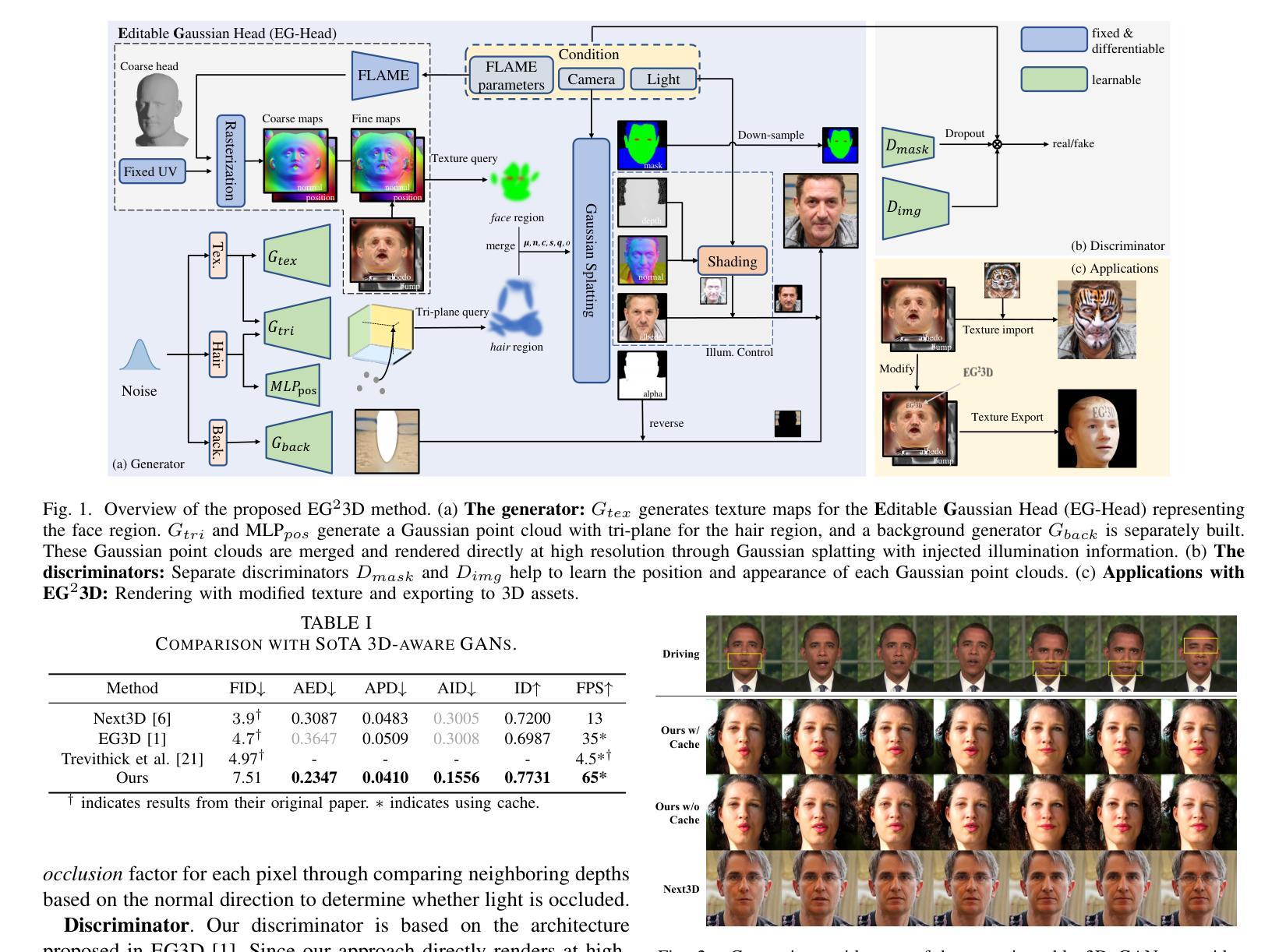

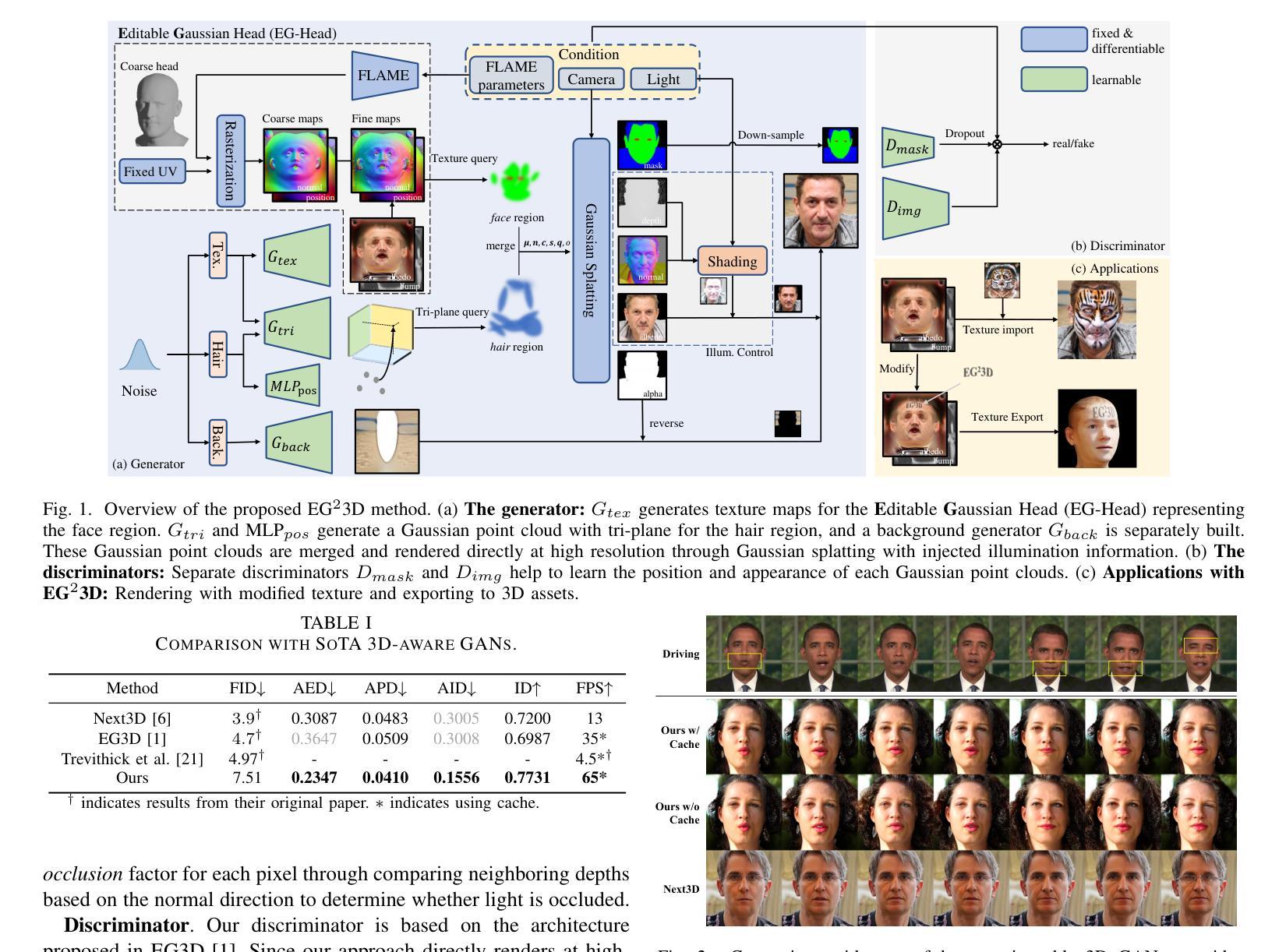

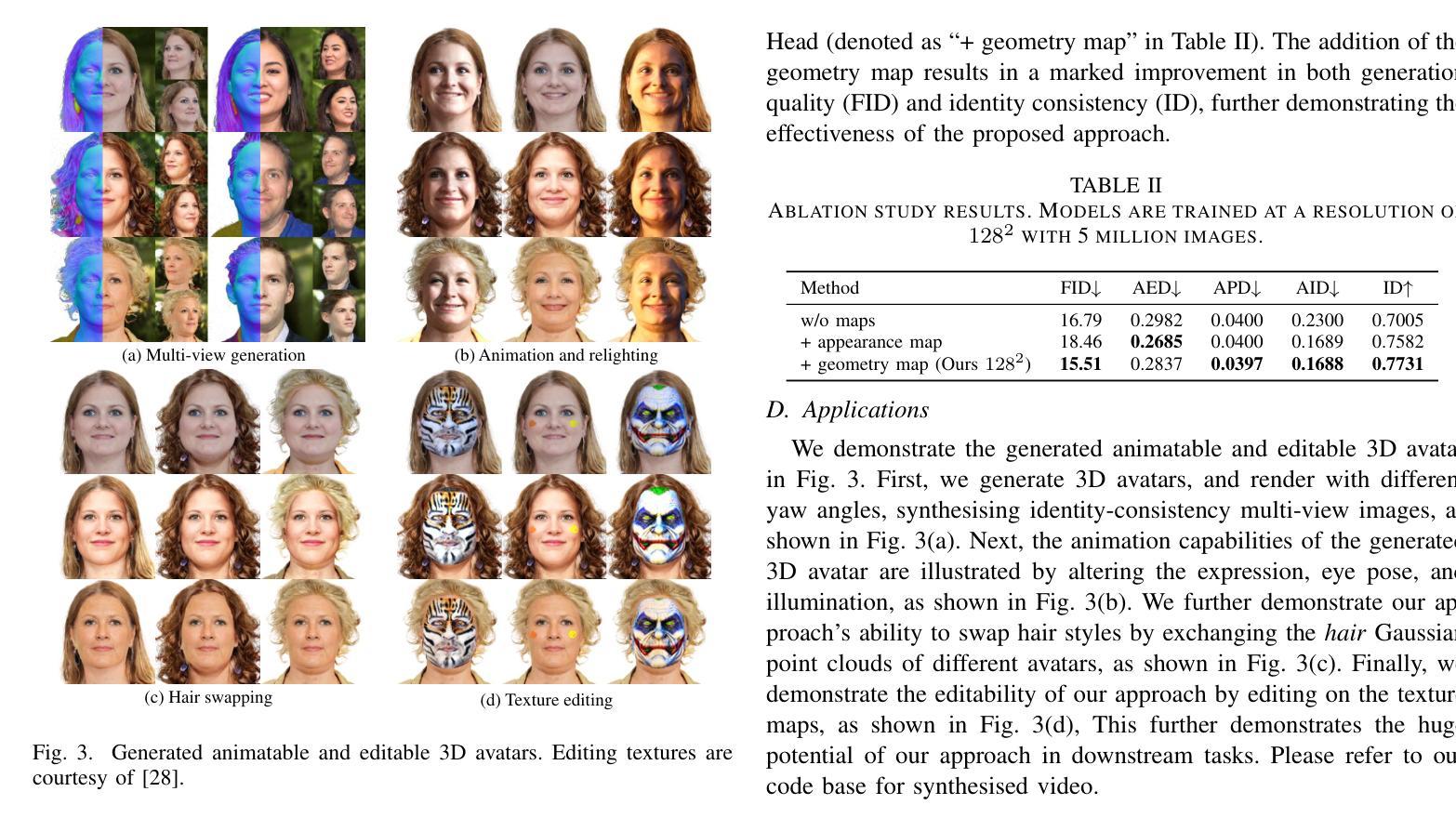

Generating Editable Head Avatars with 3D Gaussian GANs

Authors:Guohao Li, Hongyu Yang, Yifang Men, Di Huang, Weixin Li, Ruijie Yang, Yunhong Wang

Generating animatable and editable 3D head avatars is essential for various applications in computer vision and graphics. Traditional 3D-aware generative adversarial networks (GANs), often using implicit fields like Neural Radiance Fields (NeRF), achieve photorealistic and view-consistent 3D head synthesis. However, these methods face limitations in deformation flexibility and editability, hindering the creation of lifelike and easily modifiable 3D heads. We propose a novel approach that enhances the editability and animation control of 3D head avatars by incorporating 3D Gaussian Splatting (3DGS) as an explicit 3D representation. This method enables easier illumination control and improved editability. Central to our approach is the Editable Gaussian Head (EG-Head) model, which combines a 3D Morphable Model (3DMM) with texture maps, allowing precise expression control and flexible texture editing for accurate animation while preserving identity. To capture complex non-facial geometries like hair, we use an auxiliary set of 3DGS and tri-plane features. Extensive experiments demonstrate that our approach delivers high-quality 3D-aware synthesis with state-of-the-art controllability. Our code and models are available at https://github.com/liguohao96/EGG3D.

生成可动画化和可编辑的3D头像对于计算机视觉和图形学的各种应用至关重要。传统的基于3D的生成对抗网络(GANs)经常使用如神经辐射场(NeRF)之类的隐式场,以实现逼真的和视角一致的3D头部合成。然而,这些方法在变形灵活性和可编辑性方面存在局限性,阻碍了逼真且易于修改的3D头部的创建。我们提出了一种结合3D高斯拼贴(3DGS)作为显式3D表示的新方法,以提高3D头像的可编辑性和动画控制。这种方法使照明控制更加容易,并且提高了可编辑性。我们的方法的核心是可编辑高斯头(EG-Head)模型,它将3D形态模型(3DMM)与纹理图相结合,允许精确的表情控制以及灵活的纹理编辑来实现准确动画的同时保持身份特征。为了捕捉头发等复杂的非面部几何形状,我们使用辅助的3DGS和三角平面特征集。大量实验证明,我们的方法实现了具有先进可控性的高质量3D感知合成。我们的代码和模型可在https://github.com/liguohao96/EGG3D获得。

论文及项目相关链接

Summary

本文介绍了生成可动画和可编辑的3D头像的重要性及其在计算机视觉和图形学中的多种应用。针对现有技术变形灵活性和可编辑性的限制,提出了一种结合3D高斯喷射技术(3DGS)作为显式三维表示的新方法,增强了三维头像的可编辑性和动画控制功能。通过创建Editable Gaussian Head(EG-Head)模型,实现了精准的表情控制和灵活的纹理编辑,可以在保持人物身份的同时进行精确动画操作。此方法还能捕捉复杂的非面部几何形状如头发等。实验证明,该方法具有高质量的三维感知合成和先进的可控性。代码和模型已公开发布在GitHub上。

Key Takeaways

- 生成可动画和可编辑的3D头像在计算机视觉和图形学中具有重要意义。

- 传统方法使用隐式场(如NeRF)实现真实感的三维头合成,但变形灵活性和可编辑性受限。

- 提出了一种结合3D高斯喷射技术(3DGS)的新方法,增强三维头像的可编辑性和动画控制功能。

- 通过创建Editable Gaussian Head(EG-Head)模型,实现了精准的表情控制和灵活的纹理编辑。

- 此方法允许捕捉复杂的非面部几何形状如头发等。

- 该方法具有高质量的三维感知合成和先进的可控性。

点此查看论文截图

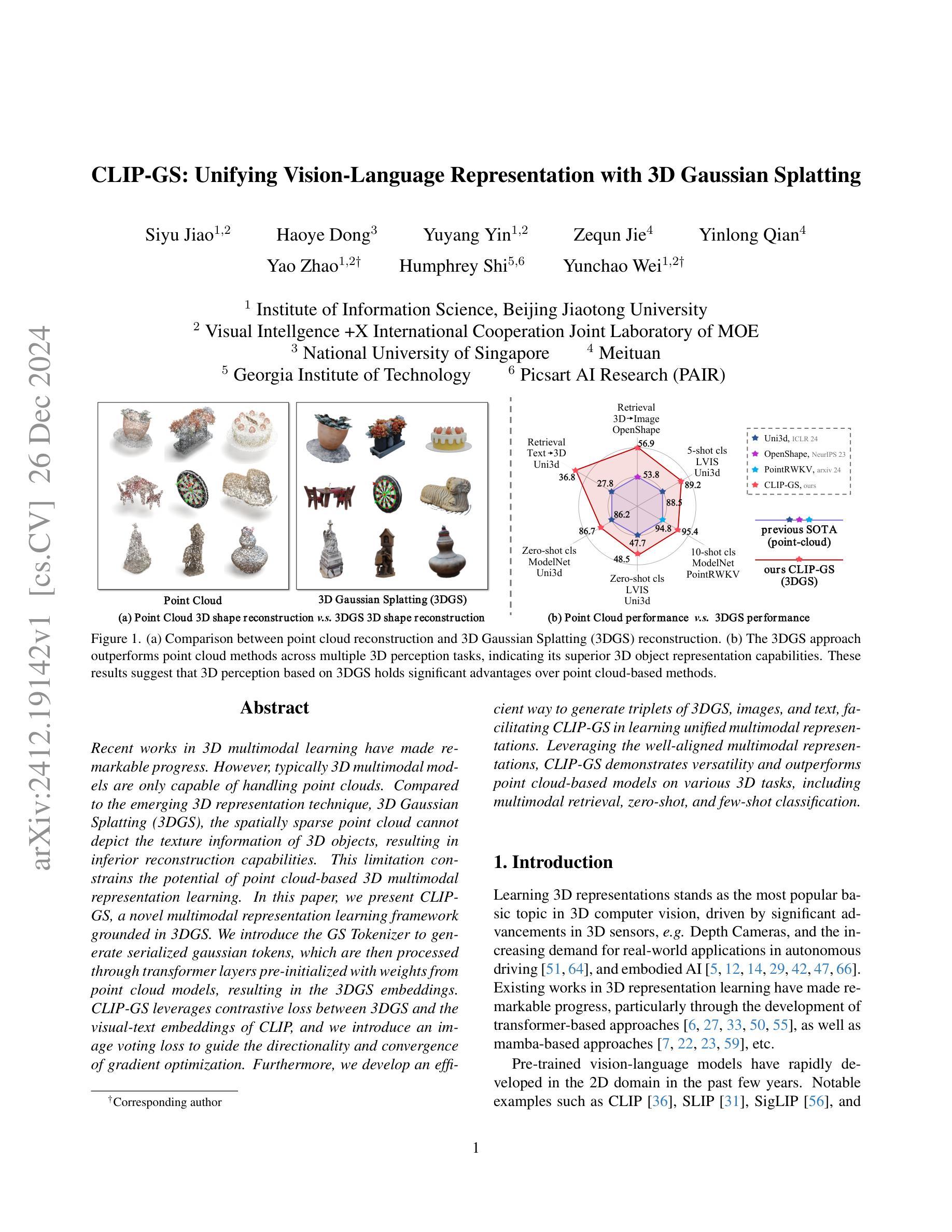

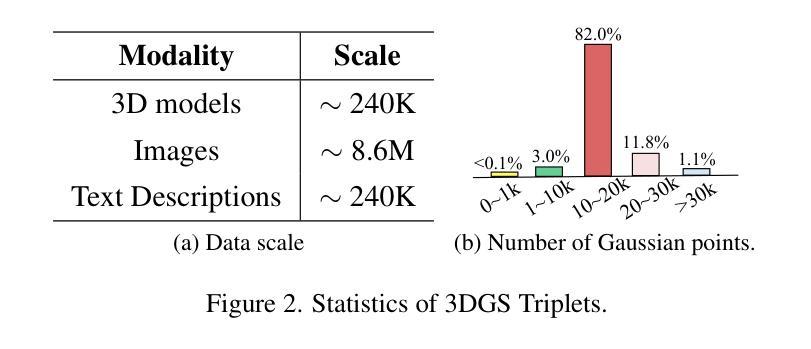

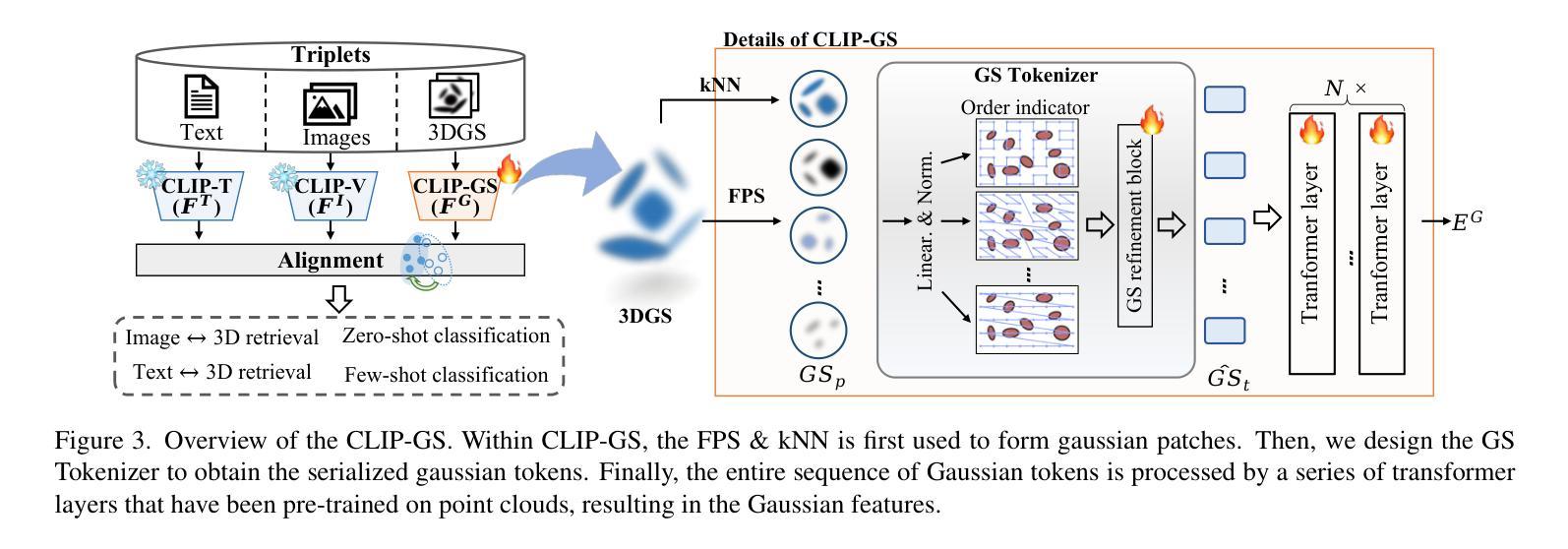

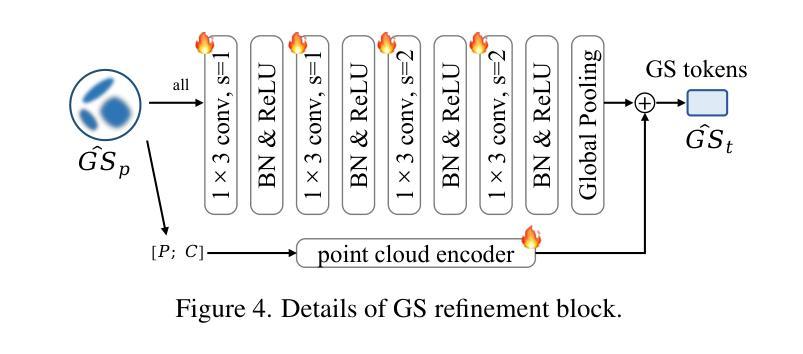

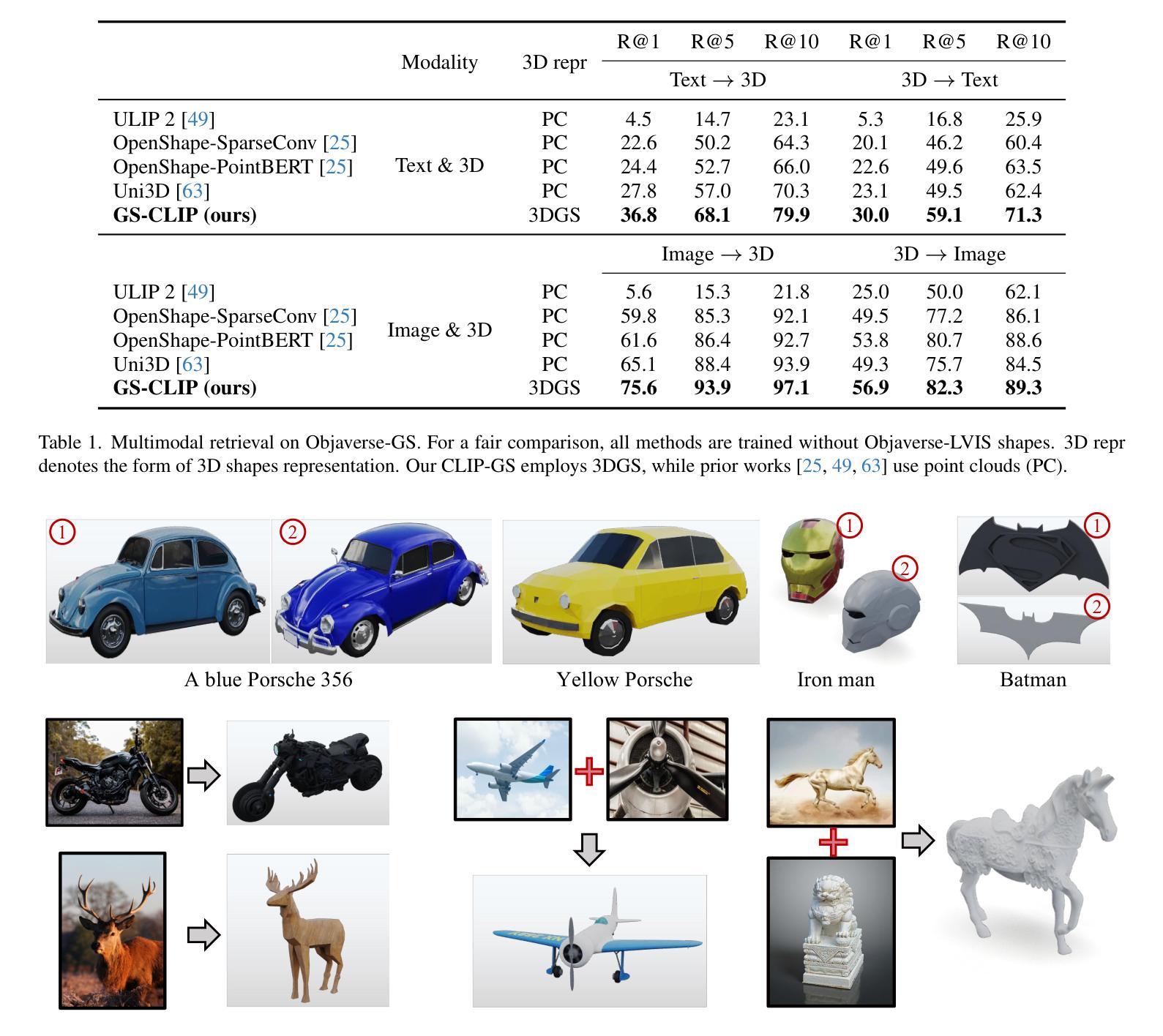

CLIP-GS: Unifying Vision-Language Representation with 3D Gaussian Splatting

Authors:Siyu Jiao, Haoye Dong, Yuyang Yin, Zequn Jie, Yinlong Qian, Yao Zhao, Humphrey Shi, Yunchao Wei

Recent works in 3D multimodal learning have made remarkable progress. However, typically 3D multimodal models are only capable of handling point clouds. Compared to the emerging 3D representation technique, 3D Gaussian Splatting (3DGS), the spatially sparse point cloud cannot depict the texture information of 3D objects, resulting in inferior reconstruction capabilities. This limitation constrains the potential of point cloud-based 3D multimodal representation learning. In this paper, we present CLIP-GS, a novel multimodal representation learning framework grounded in 3DGS. We introduce the GS Tokenizer to generate serialized gaussian tokens, which are then processed through transformer layers pre-initialized with weights from point cloud models, resulting in the 3DGS embeddings. CLIP-GS leverages contrastive loss between 3DGS and the visual-text embeddings of CLIP, and we introduce an image voting loss to guide the directionality and convergence of gradient optimization. Furthermore, we develop an efficient way to generate triplets of 3DGS, images, and text, facilitating CLIP-GS in learning unified multimodal representations. Leveraging the well-aligned multimodal representations, CLIP-GS demonstrates versatility and outperforms point cloud-based models on various 3D tasks, including multimodal retrieval, zero-shot, and few-shot classification.

近期,在三维多模态学习领域取得了显著进展。然而,典型的三维多模态模型通常只能处理点云数据。与新兴的三维表示技术相比,即三维高斯贴图技术(3DGS),空间稀疏点云无法描述三维对象的纹理信息,导致重建能力较差。这一局限性制约了基于点云的3D多模态表示学习的潜力。在本文中,我们提出了一种基于三维高斯贴图(CLIP-GS)的多模态表示学习新框架。我们引入了GS标记器生成序列化高斯标记,这些标记经过预先用点云模型权重初始化的变换层处理,生成三维高斯贴图嵌入。CLIP-GS利用三维高斯贴图和CLIP的视觉文本嵌入之间的对比损失。同时我们引入了图像投票损失来指导梯度优化的方向性和收敛性。此外,我们还开发了一种有效的方法来生成三维高斯贴图、图像和文本的三元组,有助于CLIP-GS学习统一的多模态表示。利用对齐良好的多模态表示,CLIP-GS表现出通用性,在各种三维任务上优于基于点云的模型,包括多模态检索、零样本和少样本分类。

论文及项目相关链接

Summary

基于3DGS的新型多模态学习框架CLIP-GS的研究介绍了使用GS Tokenizer生成高斯序列化令牌,并结合点云模型预初始化权重进行处理,生成3DGS嵌入。CLIP-GS利用对比损失在3DGS和CLIP的视觉文本嵌入之间进行优化,并引入图像投票损失指导梯度优化的方向性。通过生成高效的三元组数据,CLIP-GS可以学习统一的多模态表示并超越点云模型在多个任务上的表现。

Key Takeaways

- 现有3D多模态模型主要处理点云数据,存在纹理信息描述不足的问题。

- 3DGS技术能够更有效地表示3D对象的纹理信息。

- CLIP-GS是一个基于3DGS的新型多模态学习框架,引入GS Tokenizer生成高斯序列化令牌。

- CLIP-GS利用对比损失和图像投票损失进行优化,提高模型性能。

- CLIP-GS能够生成三元组数据,促进多模态统一表示的学习。

- CLIP-GS在多种3D任务上表现出色,包括多模态检索、零样本和少样本分类。

点此查看论文截图

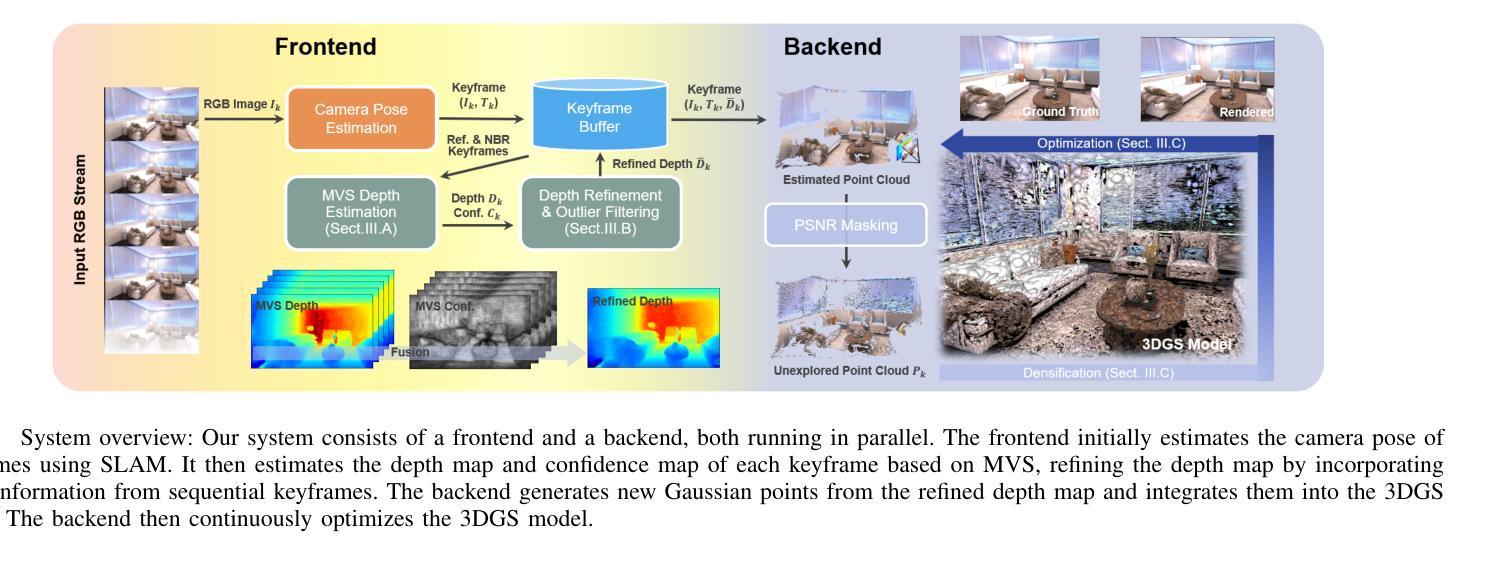

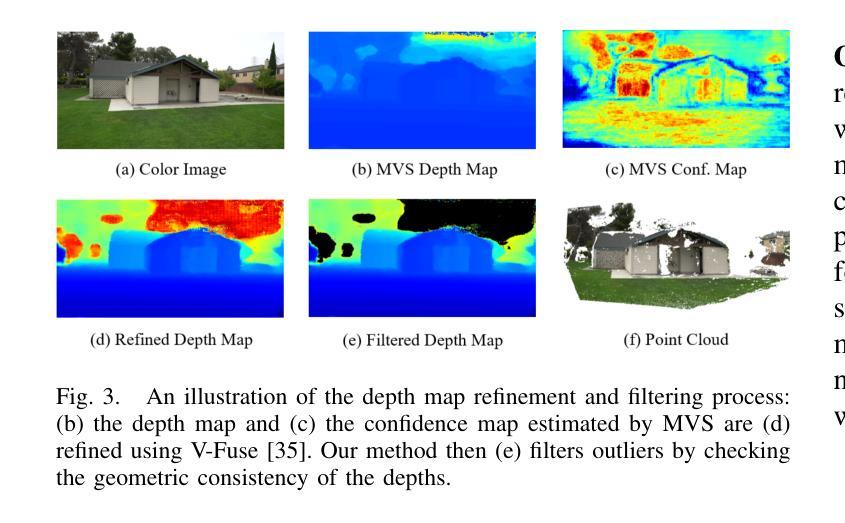

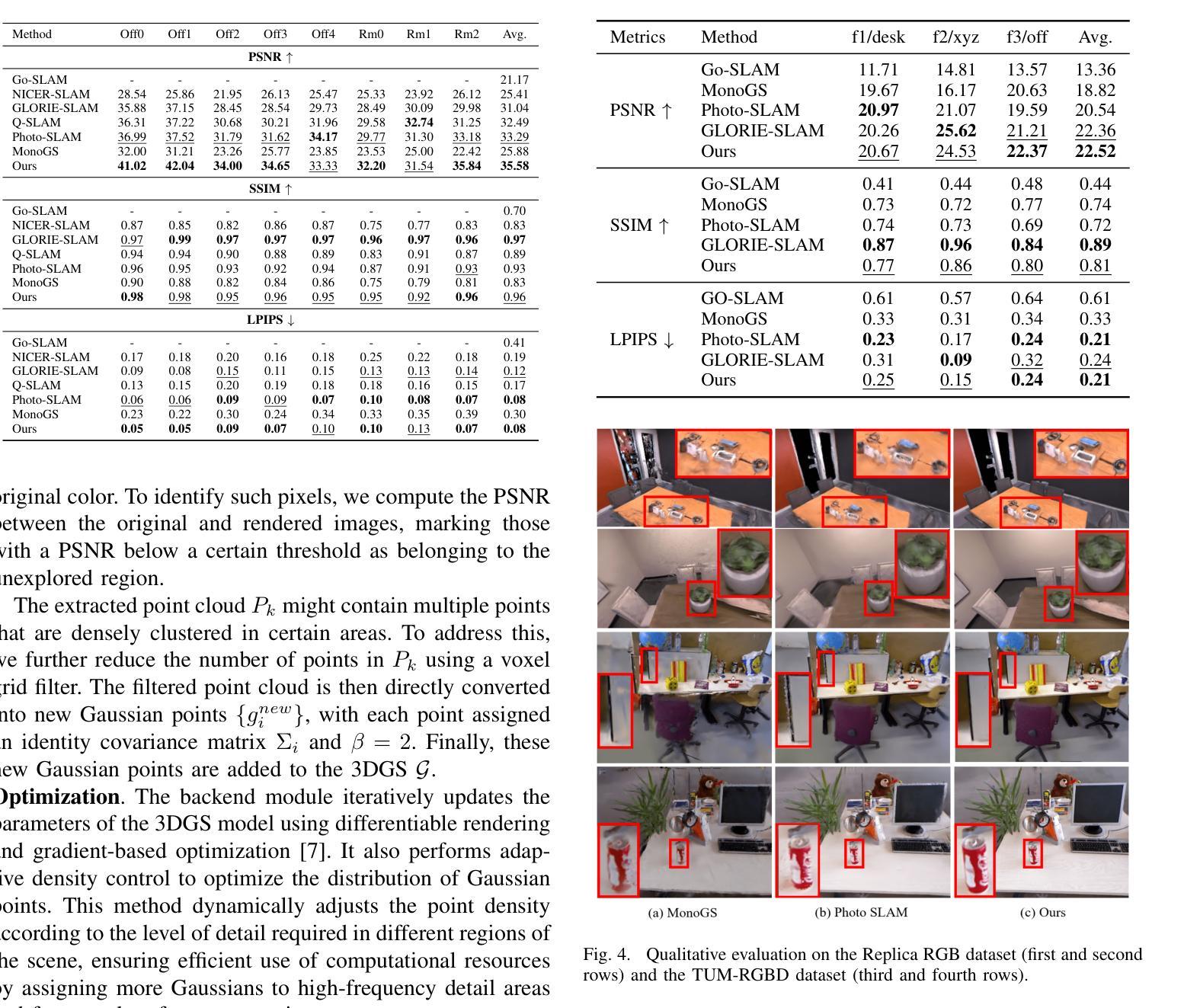

MVS-GS: High-Quality 3D Gaussian Splatting Mapping via Online Multi-View Stereo

Authors:Byeonggwon Lee, Junkyu Park, Khang Truong Giang, Sungho Jo, Soohwan Song

This study addresses the challenge of online 3D model generation for neural rendering using an RGB image stream. Previous research has tackled this issue by incorporating Neural Radiance Fields (NeRF) or 3D Gaussian Splatting (3DGS) as scene representations within dense SLAM methods. However, most studies focus primarily on estimating coarse 3D scenes rather than achieving detailed reconstructions. Moreover, depth estimation based solely on images is often ambiguous, resulting in low-quality 3D models that lead to inaccurate renderings. To overcome these limitations, we propose a novel framework for high-quality 3DGS modeling that leverages an online multi-view stereo (MVS) approach. Our method estimates MVS depth using sequential frames from a local time window and applies comprehensive depth refinement techniques to filter out outliers, enabling accurate initialization of Gaussians in 3DGS. Furthermore, we introduce a parallelized backend module that optimizes the 3DGS model efficiently, ensuring timely updates with each new keyframe. Experimental results demonstrate that our method outperforms state-of-the-art dense SLAM methods, particularly excelling in challenging outdoor environments.

本研究旨在解决使用RGB图像流进行神经渲染的在线3D模型生成挑战。先前的研究已经通过结合神经辐射场(NeRF)或3D高斯拼贴(3DGS)作为密集SLAM方法内的场景表示来解决这个问题。然而,大多数研究主要侧重于估计粗糙的3D场景,而非实现详细的重建。此外,仅基于图像进行深度估计通常具有模糊性,导致质量低下的3D模型,从而导致渲染不准确。为了克服这些局限性,我们提出了一种新型的在线多视角立体(MVS)方法的高质量3DGS建模框架。我们的方法使用局部时间窗口内的连续帧估计MVS深度,并应用全面的深度细化技术来过滤异常值,实现对3DGS中的高斯分布的准确初始化。此外,我们还引入了一个并行化的后端模块,以高效优化3DGS模型,确保每个新关键帧都能及时得到更新。实验结果表明,我们的方法在具有挑战性的室外环境中表现优于最先进的密集SLAM方法。

论文及项目相关链接

PDF 7 pages, 6 figures, submitted to IEEE ICRA 2025

Summary

本文提出了一种基于在线多视角立体(MVS)的在线三维模型生成方法,通过引入高效的并行后端模块和深度细化技术,实现高质量的三维高斯喷溅(3DGS)建模。该方法克服了传统方法主要估计粗糙三维场景的局限性,提高了深度估计的准确性,从而生成更精确的三维模型。

Key Takeaways

- 研究背景:针对在线三维模型生成的问题,特别是在使用RGB图像流进行神经渲染时面临的挑战。

- 主要问题:深度估计仅基于图像时存在歧义,导致低质量的三维模型和不准确渲染。

- 研究方法:提出一种新型框架进行高质量的三维高斯喷溅(3DGS)建模,利用在线多视角立体(MVS)方法进行深度估计。通过深度细化技术提高深度估计准确性,并利用并行后端模块优化模型。

- 技术特点:使用局部时间窗口内的连续帧进行深度估计,并采用深度细化技术过滤异常值。引入并行后端模块实现高效优化和实时更新。

- 实验结果:该方法优于现有的密集SLAM方法,尤其在户外环境中表现突出。

- 突破点:突破了传统方法主要估计粗糙三维场景的局限性,提高了深度估计的准确性,生成更精确的三维模型。

点此查看论文截图

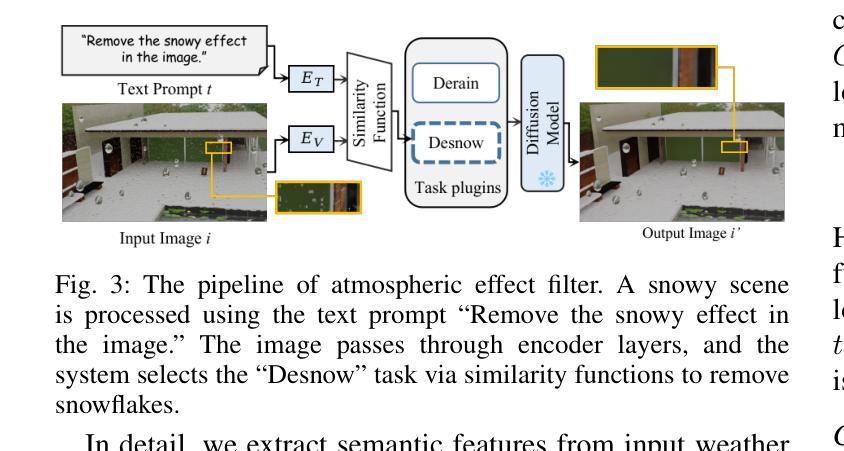

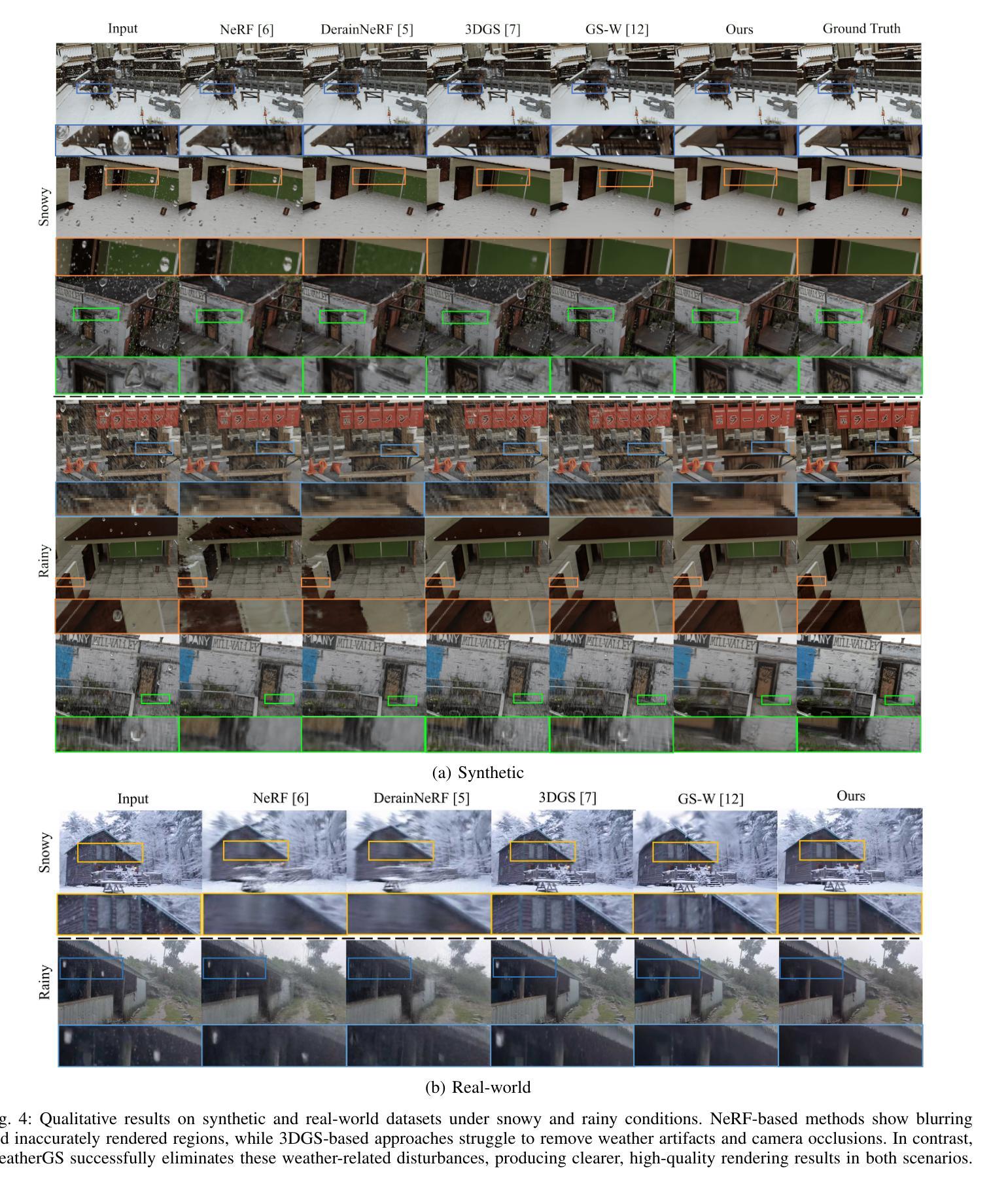

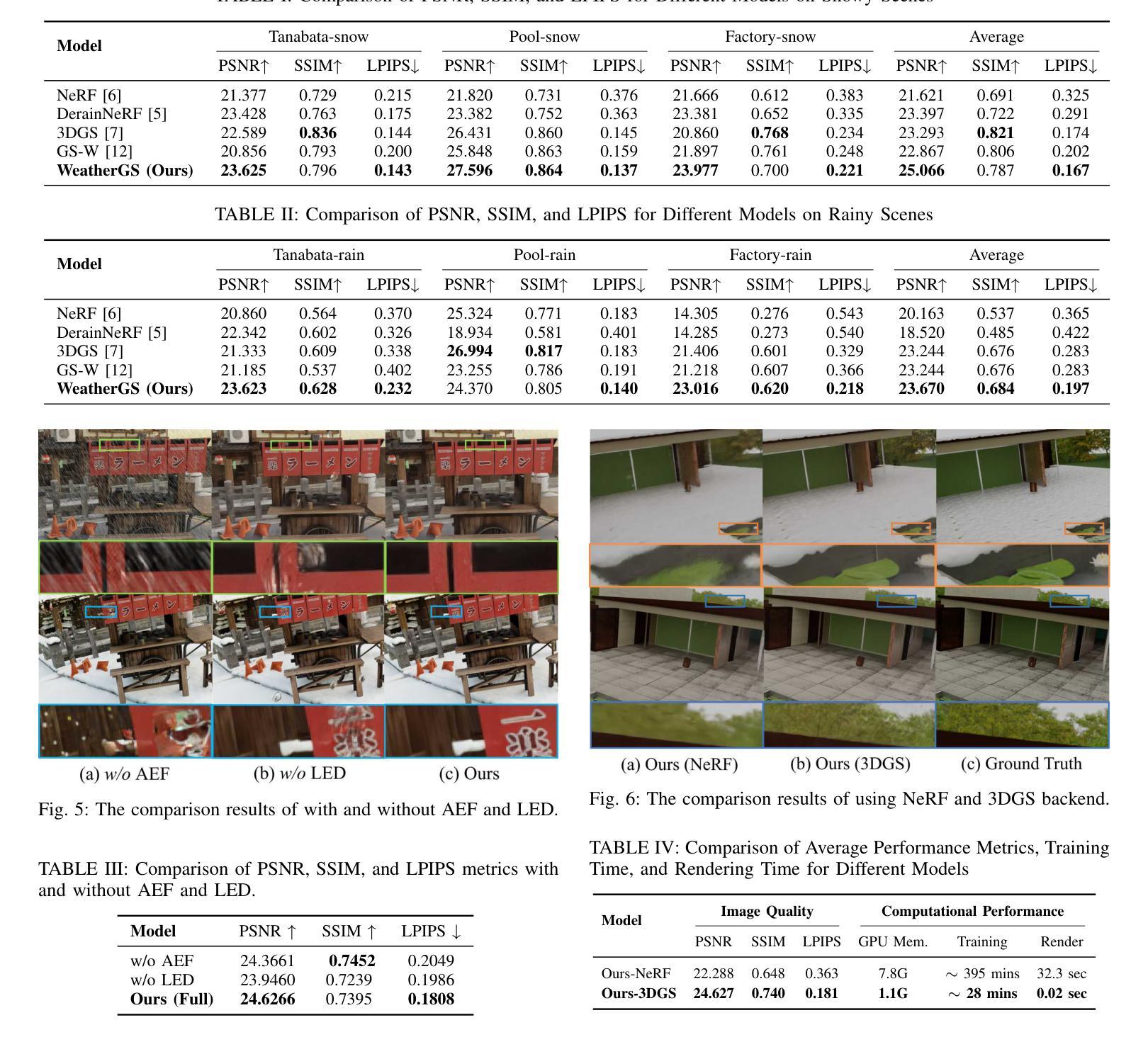

WeatherGS: 3D Scene Reconstruction in Adverse Weather Conditions via Gaussian Splatting

Authors:Chenghao Qian, Yuhu Guo, Wenjing Li, Gustav Markkula

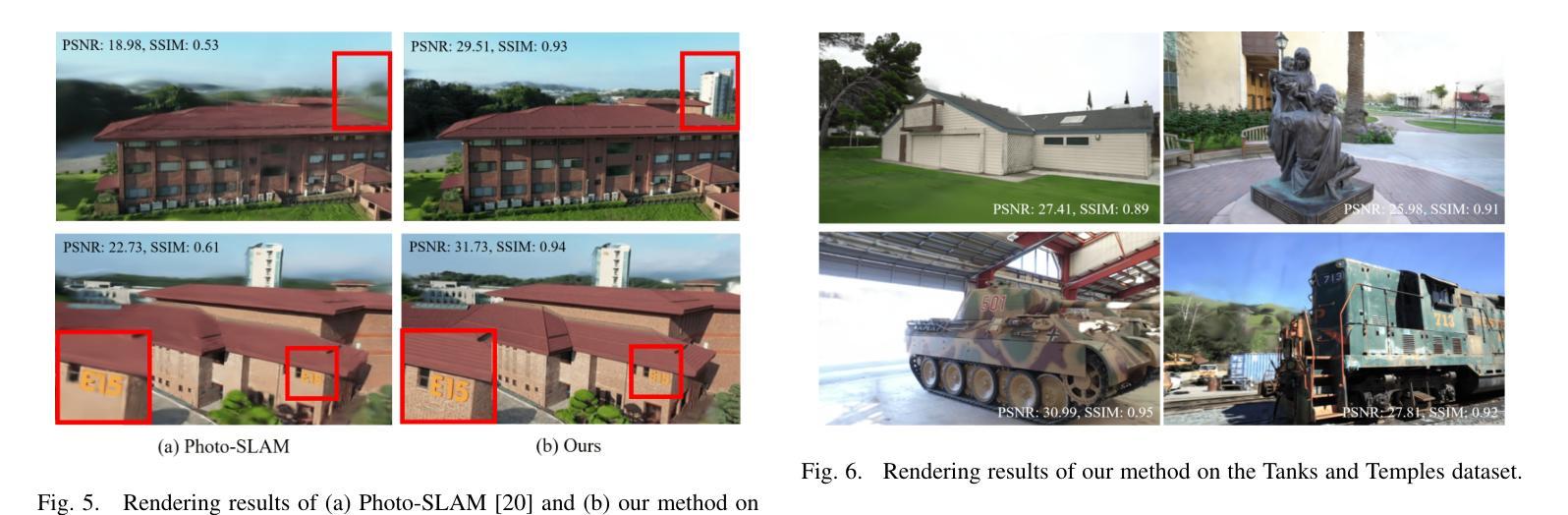

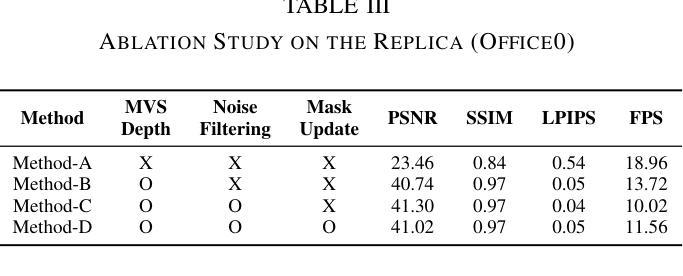

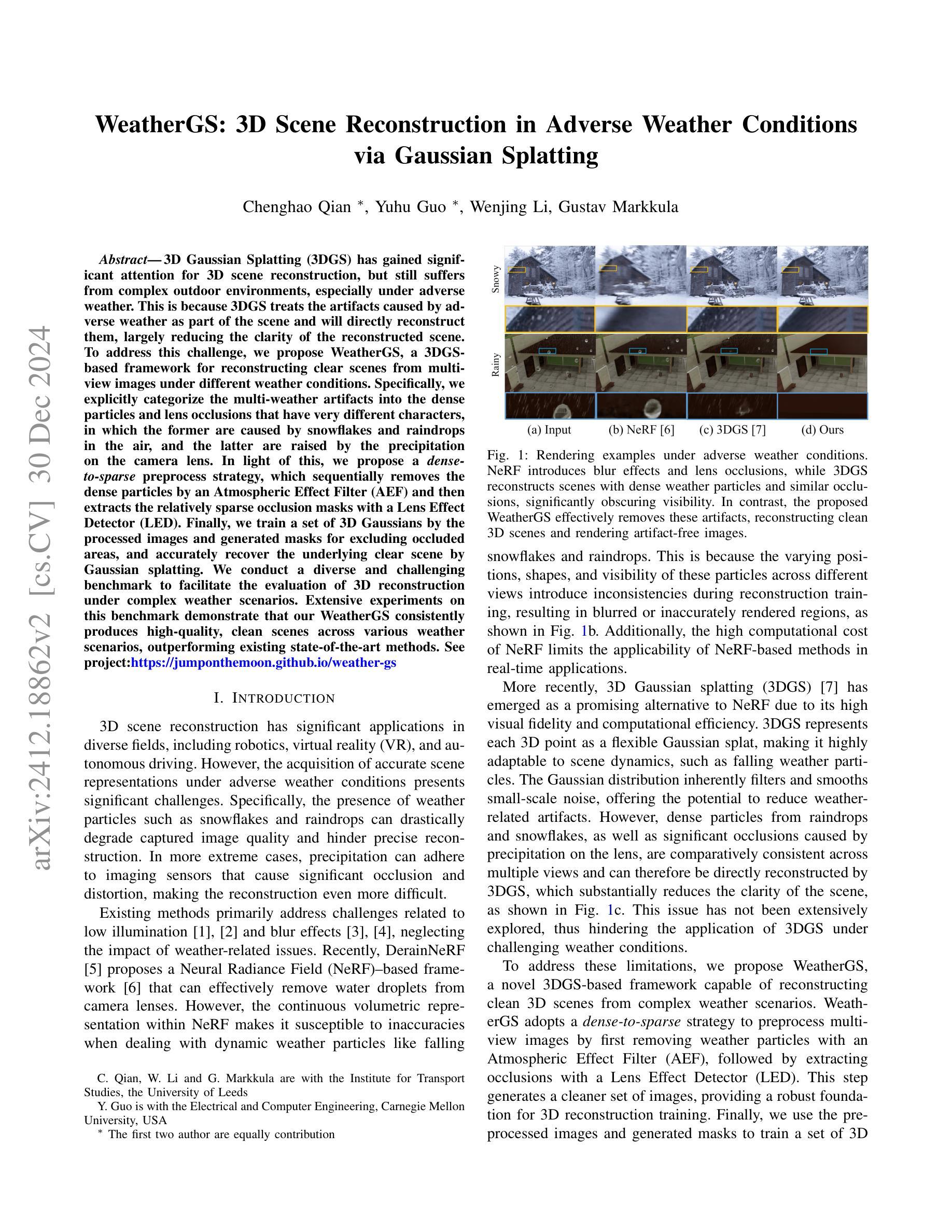

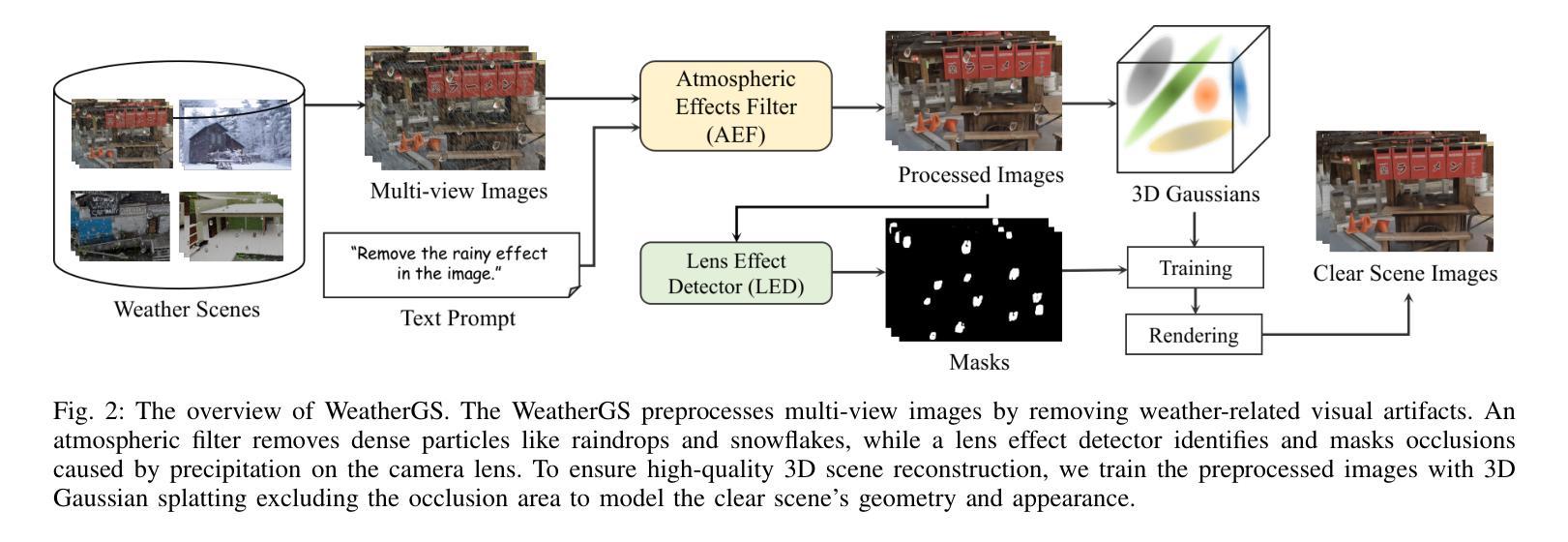

3D Gaussian Splatting (3DGS) has gained significant attention for 3D scene reconstruction, but still suffers from complex outdoor environments, especially under adverse weather. This is because 3DGS treats the artifacts caused by adverse weather as part of the scene and will directly reconstruct them, largely reducing the clarity of the reconstructed scene. To address this challenge, we propose WeatherGS, a 3DGS-based framework for reconstructing clear scenes from multi-view images under different weather conditions. Specifically, we explicitly categorize the multi-weather artifacts into the dense particles and lens occlusions that have very different characters, in which the former are caused by snowflakes and raindrops in the air, and the latter are raised by the precipitation on the camera lens. In light of this, we propose a dense-to-sparse preprocess strategy, which sequentially removes the dense particles by an Atmospheric Effect Filter (AEF) and then extracts the relatively sparse occlusion masks with a Lens Effect Detector (LED). Finally, we train a set of 3D Gaussians by the processed images and generated masks for excluding occluded areas, and accurately recover the underlying clear scene by Gaussian splatting. We conduct a diverse and challenging benchmark to facilitate the evaluation of 3D reconstruction under complex weather scenarios. Extensive experiments on this benchmark demonstrate that our WeatherGS consistently produces high-quality, clean scenes across various weather scenarios, outperforming existing state-of-the-art methods. See project page:https://jumponthemoon.github.io/weather-gs.

3D高斯融合(3DGS)在3D场景重建中受到了广泛关注,但仍面临复杂室外环境的挑战,特别是在恶劣天气下。这是因为3DGS将恶劣天气引起的伪影视为场景的一部分并直接重建它们,大大降低了重建场景的清晰度。为了应对这一挑战,我们提出了WeatherGS,这是一个基于3DGS的框架,可以从不同天气条件下的多视角图像重建清晰的场景。具体来说,我们明确地将多天气伪影分为密集粒子和镜头遮挡物两类,这两类具有截然不同的特征,前者是由空气中的雪花和雨滴引起的,后者是由相机镜头上的沉淀物引起的。鉴于此,我们提出了一种由密集到稀疏的预处理策略,依次通过大气效应滤波器(AEF)去除密集粒子,然后使用镜头效应检测器(LED)提取相对稀疏的遮挡掩膜。最后,我们使用处理过的图像和生成的掩膜训练一组3D高斯,排除遮挡区域,并通过高斯融合准确恢复潜在的清晰场景。我们进行了一项多样且具有挑战性的基准测试,以促进在复杂天气场景下3D重建的评估。在该基准测试上的大量实验表明,我们的WeatherGS在各种天气场景下始终产生高质量的清晰场景,优于现有的最先进的方法。详情见项目网页:https://jumponthemoon.github.io/weather-gs。

论文及项目相关链接

Summary

该文本介绍了在复杂的户外环境下,特别是在恶劣天气条件下,三维高斯Splatting(3DGS)在三维场景重建中面临的挑战。为解决这一问题,提出了WeatherGS框架,该框架基于3DGS,可从不同天气条件下的多视角图像重建清晰场景。通过明确区分多天气造成的伪影,如密集粒子和镜头遮挡物,提出一种由密到疏的预处理策略,依次去除密集粒子并提取相对稀疏的遮挡物掩膜。经过处理的图像和生成的掩膜用于训练一组三维高斯模型,排除遮挡区域,并通过高斯Splatting准确恢复底层清晰场景。在复杂的天气场景重建方面进行了多样性和挑战性的基准测试,实验表明,WeatherGS在各种天气场景下始终产生高质量、清晰的场景,优于现有的最先进的方法。

Key Takeaways

- 恶劣天气给三维场景重建带来挑战,现有的三维高斯Splatting(3DGS)方法易受到这些复杂环境因素的影响。

- 提出了一个名为WeatherGS的新框架来解决这一问题,该框架基于3DGS技术,能够从不同天气条件下的多视角图像重建清晰场景。

- WeatherGS通过明确区分多种天气造成的伪影(如密集粒子和镜头遮挡),采用由密到疏的预处理策略进行处理。

- 该策略包括使用大气效应过滤器(AEF)去除密集粒子,然后使用镜头效应检测器(LED)提取稀疏遮挡掩膜。

- 利用处理后的图像和生成的掩膜训练一组三维高斯模型,排除遮挡区域后重建清晰场景。

- 在复杂天气场景重建方面进行了全面的基准测试,证明WeatherGS在各种天气条件下都能产生高质量的结果。

点此查看论文截图

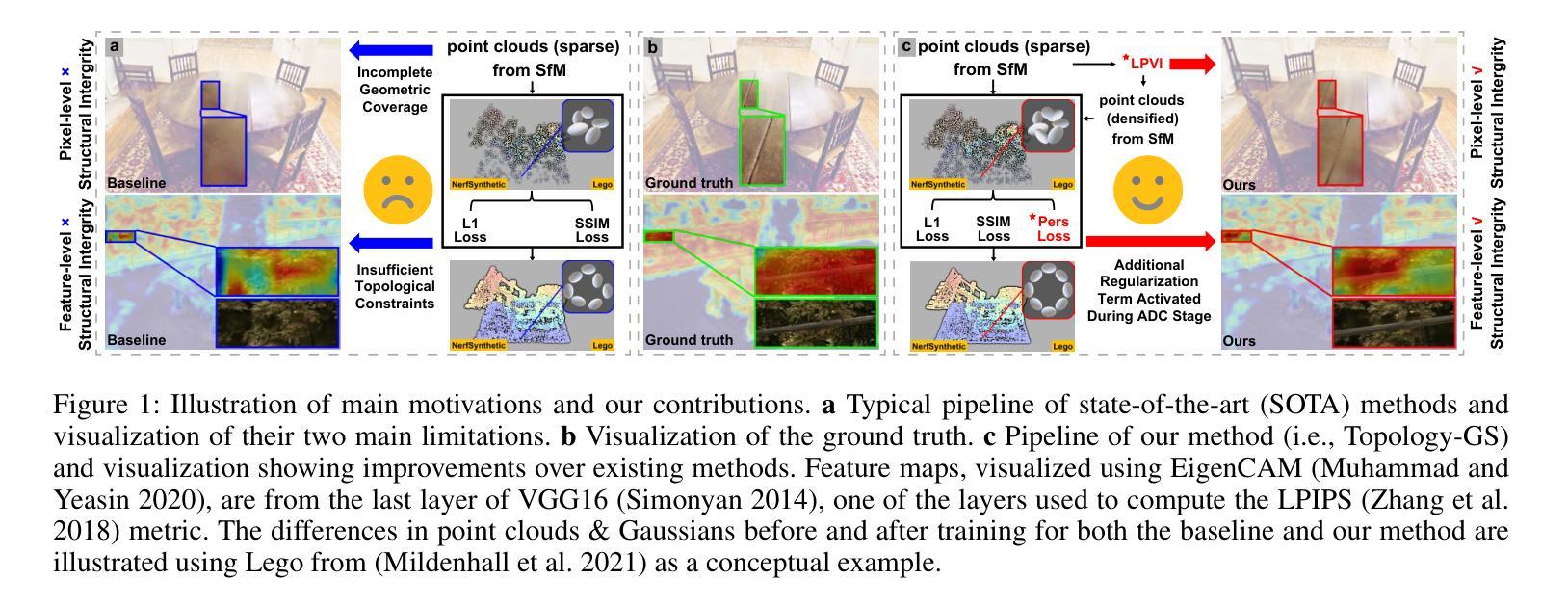

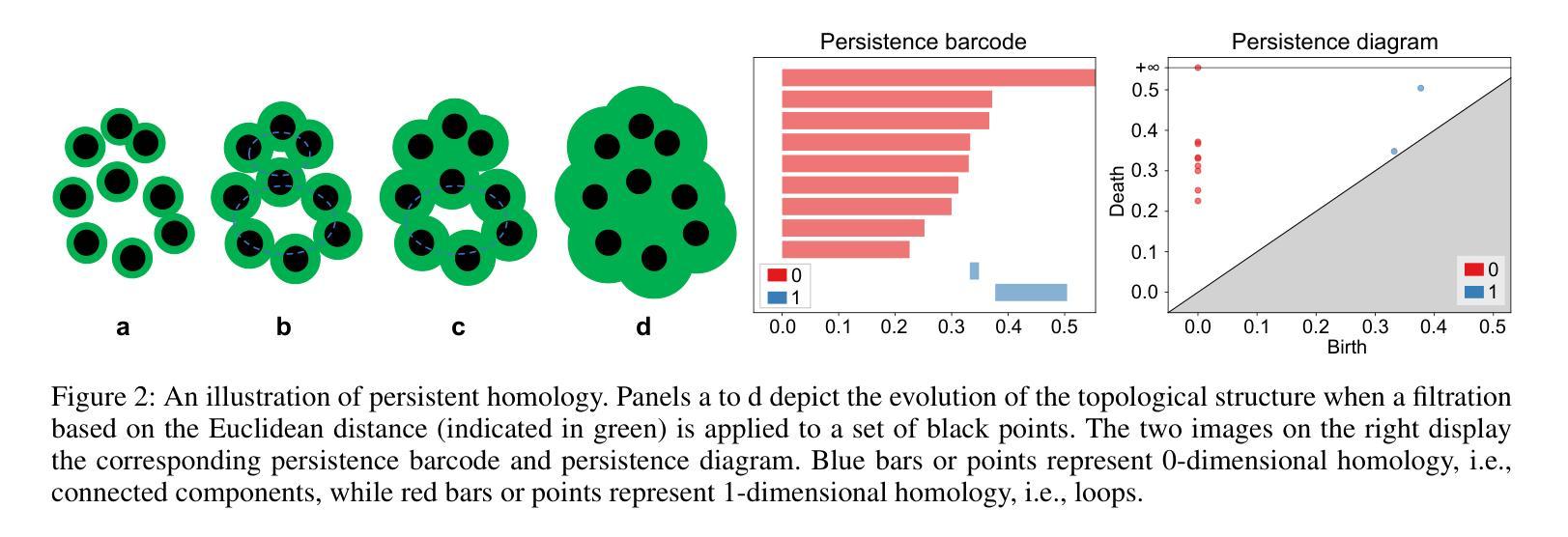

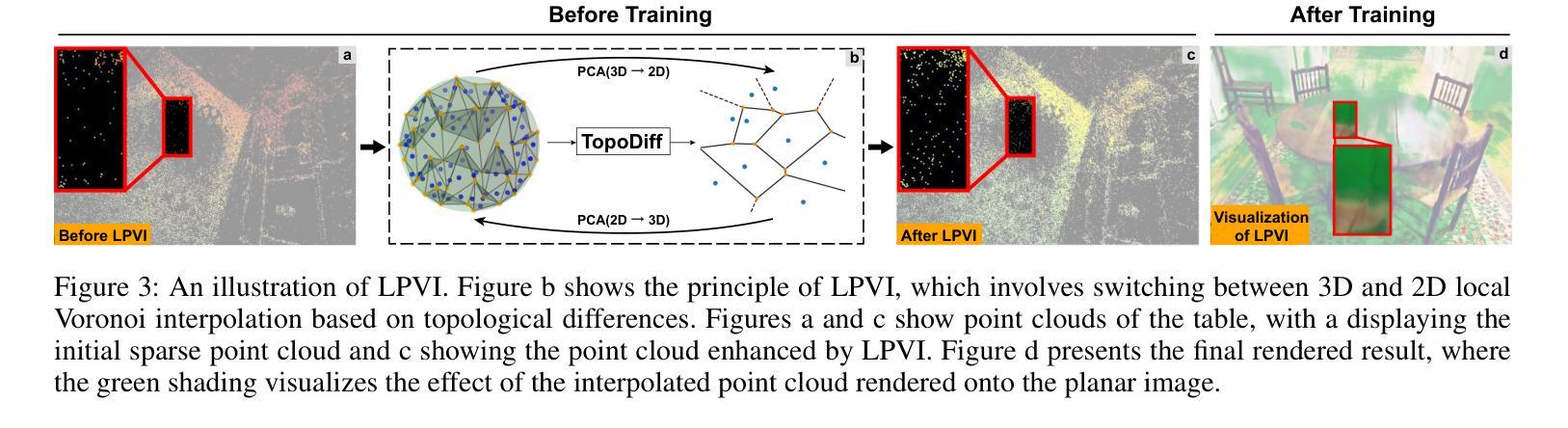

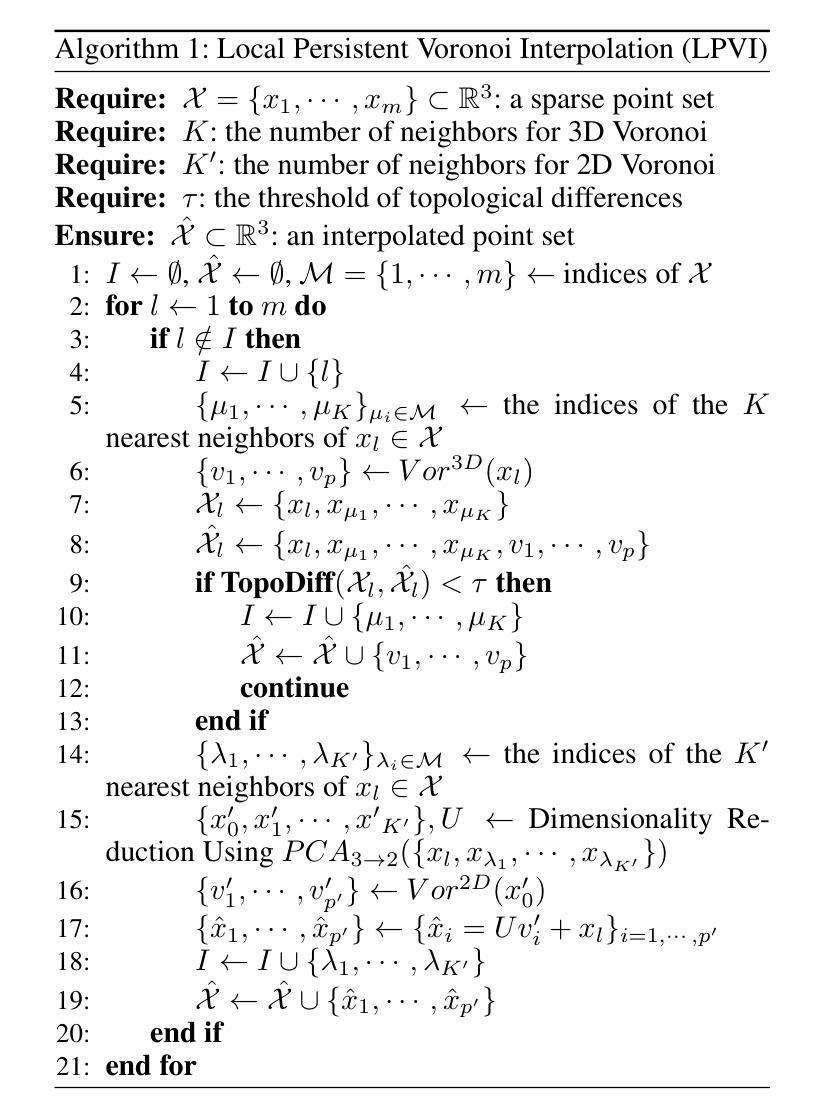

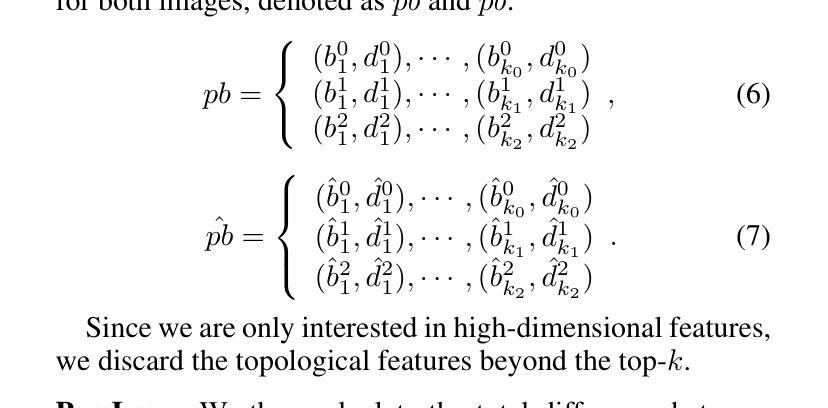

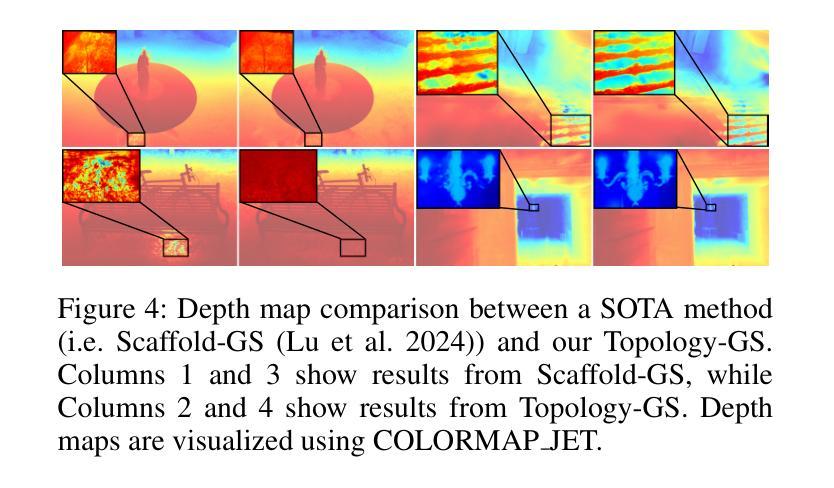

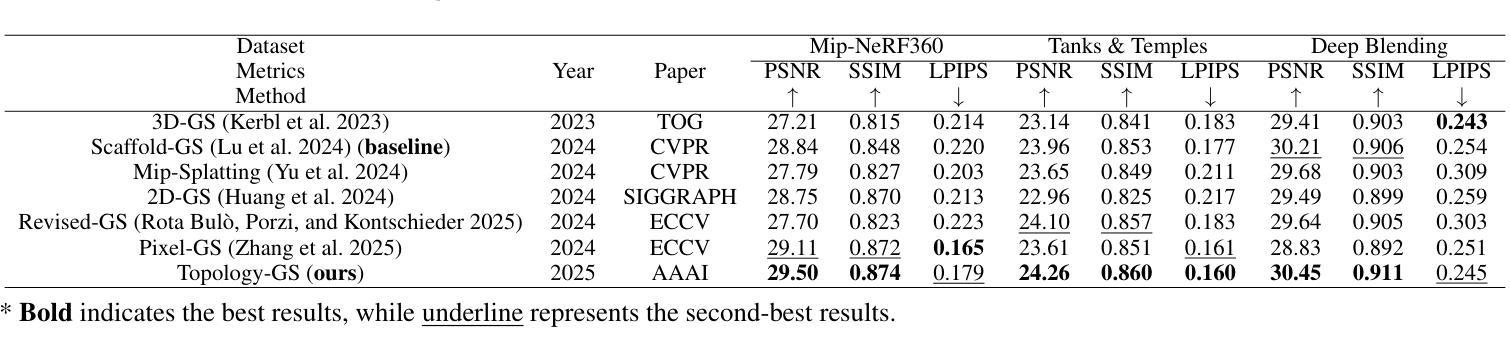

Topology-Aware 3D Gaussian Splatting: Leveraging Persistent Homology for Optimized Structural Integrity

Authors:Tianqi Shen, Shaohua Liu, Jiaqi Feng, Ziye Ma, Ning An

Gaussian Splatting (GS) has emerged as a crucial technique for representing discrete volumetric radiance fields. It leverages unique parametrization to mitigate computational demands in scene optimization. This work introduces Topology-Aware 3D Gaussian Splatting (Topology-GS), which addresses two key limitations in current approaches: compromised pixel-level structural integrity due to incomplete initial geometric coverage, and inadequate feature-level integrity from insufficient topological constraints during optimization. To overcome these limitations, Topology-GS incorporates a novel interpolation strategy, Local Persistent Voronoi Interpolation (LPVI), and a topology-focused regularization term based on persistent barcodes, named PersLoss. LPVI utilizes persistent homology to guide adaptive interpolation, enhancing point coverage in low-curvature areas while preserving topological structure. PersLoss aligns the visual perceptual similarity of rendered images with ground truth by constraining distances between their topological features. Comprehensive experiments on three novel-view synthesis benchmarks demonstrate that Topology-GS outperforms existing methods in terms of PSNR, SSIM, and LPIPS metrics, while maintaining efficient memory usage. This study pioneers the integration of topology with 3D-GS, laying the groundwork for future research in this area.

高斯采样(GS)已经成为表示离散体积辐射场的关键技术。它利用独特的参数化方法,以减轻场景优化中的计算需求。本文介绍了拓扑感知三维高斯采样(Topology-GS),解决了当前方法的两个主要局限性:由于初始几何覆盖不完整而损害像素级结构完整性,以及在优化过程中由于拓扑约束不足而导致特征级完整性不足。为了克服这些局限性,Topology-GS融入了一种新型插值策略——局部持久Voronoi插值(LPVI)和一种基于持久条码的专注于拓扑的正则化项,称为PersLoss。LPVI利用持久同源性来引导自适应插值,在低曲率区域增强点覆盖的同时保持拓扑结构。PersLoss通过约束渲染图像与真实图像之间拓扑特征的距离,使渲染图像的视觉感知相似性符合真实情况。在三个新型视图合成基准测试上的综合实验表明,在PSNR、SSIM和LPIPS指标方面,Topology-GS优于现有方法,同时保持内存使用效率。本研究首创了拓扑与3D-GS的集成,为这一领域的未来研究奠定了基础。

论文及项目相关链接

Summary

本文介绍了高斯平铺(GS)在表示离散体积辐射场中的重要作用,并提出了一种新的技术——拓扑感知三维高斯平铺(Topology-GS)。该技术解决了当前方法在场景优化中面临的两个主要问题:因初始几何覆盖不完全导致的像素级结构完整性受损,以及优化过程中因拓扑约束不足导致的特征级完整性不足。为解决这些问题,Topology-GS引入了局部持久Voronoi插值(LPVI)和基于持久条形码的拓扑重点正则化项PersLoss。LPVI利用持久同源性指导自适应插值,增强低曲率区域的点覆盖能力,同时保持拓扑结构。PersLoss通过约束渲染图像与地面真实图像之间拓扑特征的距离,使两者在视觉感知上更加相似。在三个新视角合成基准测试上的综合实验表明,Topology-GS在PSNR、SSIM和LPIPS指标上优于现有方法,同时保持高效的内存使用。该研究开创性地实现了拓扑与3D-GS的集成,为未来该领域的研究奠定了基础。

Key Takeaways

- 高斯平铺(GS)是表示离散体积辐射场的重要技术。

- 拓扑感知三维高斯平铺(Topology-GS)解决了因初始几何覆盖不完全和拓扑约束不足导致的问题。

- Topology-GS引入局部持久Voronoi插值(LPVI)和基于持久条形码的拓扑重点正则化项PersLoss。

- LPVI利用持久同源性增强低曲率区域的点覆盖,并保持拓扑结构。

- PersLoss约束渲染图像与真实图像之间拓扑特征的距离,提升视觉感知相似性。

- Topology-GS在多个基准测试上表现优越,优于现有方法。

点此查看论文截图

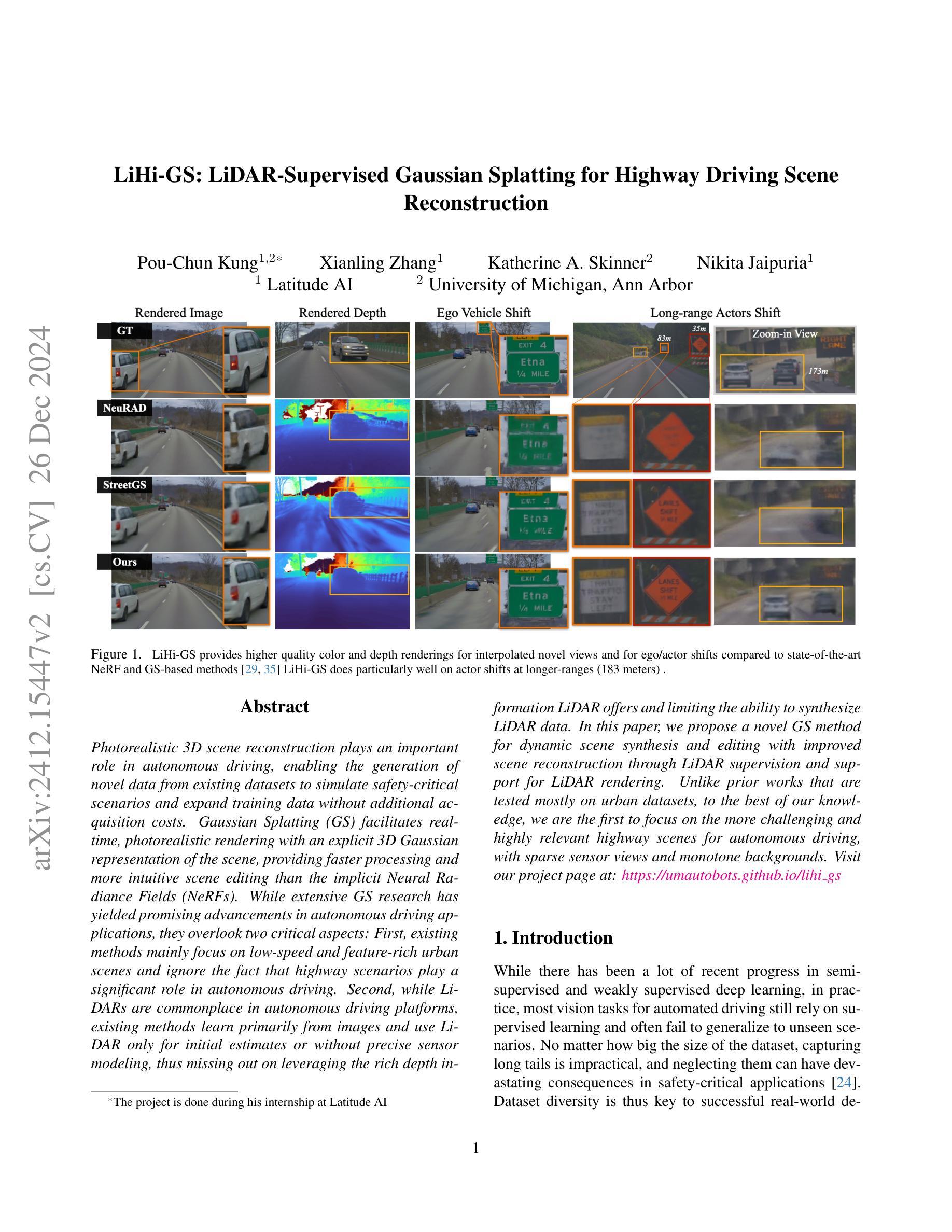

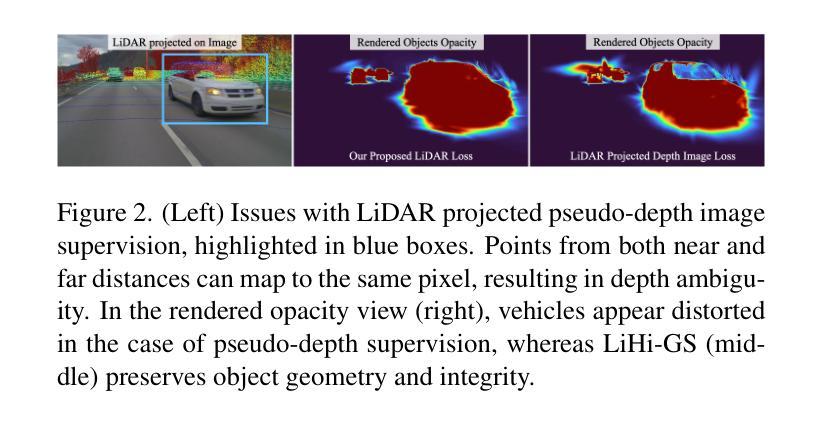

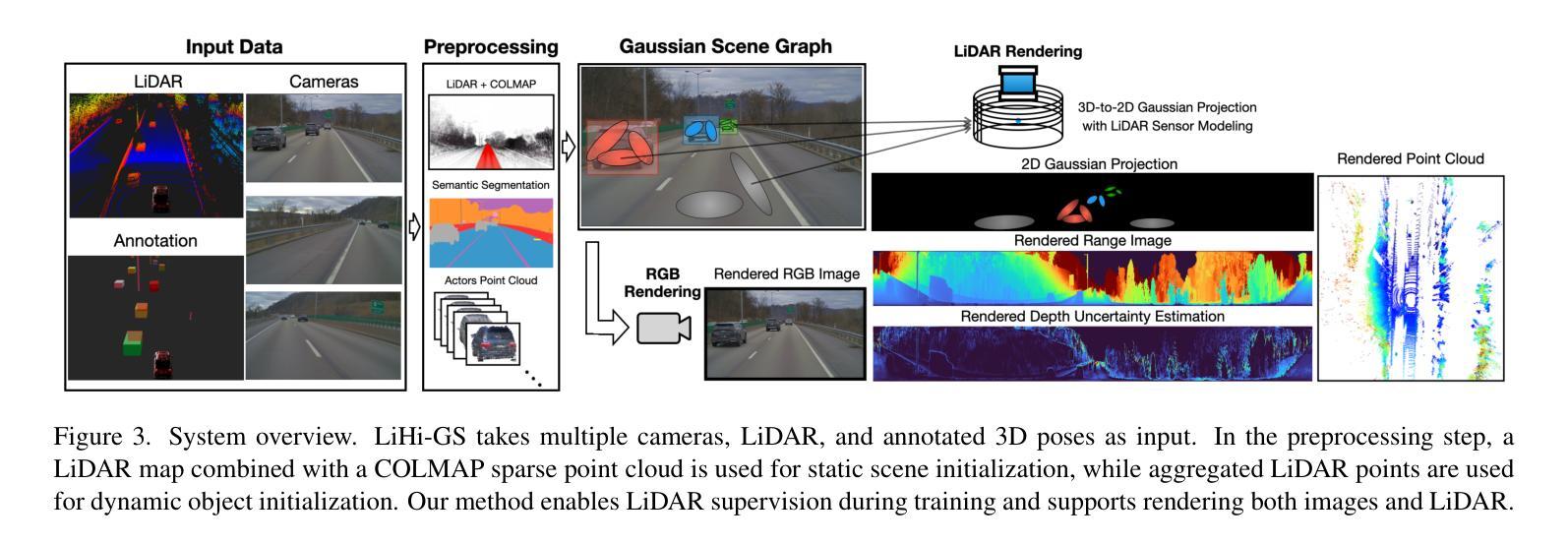

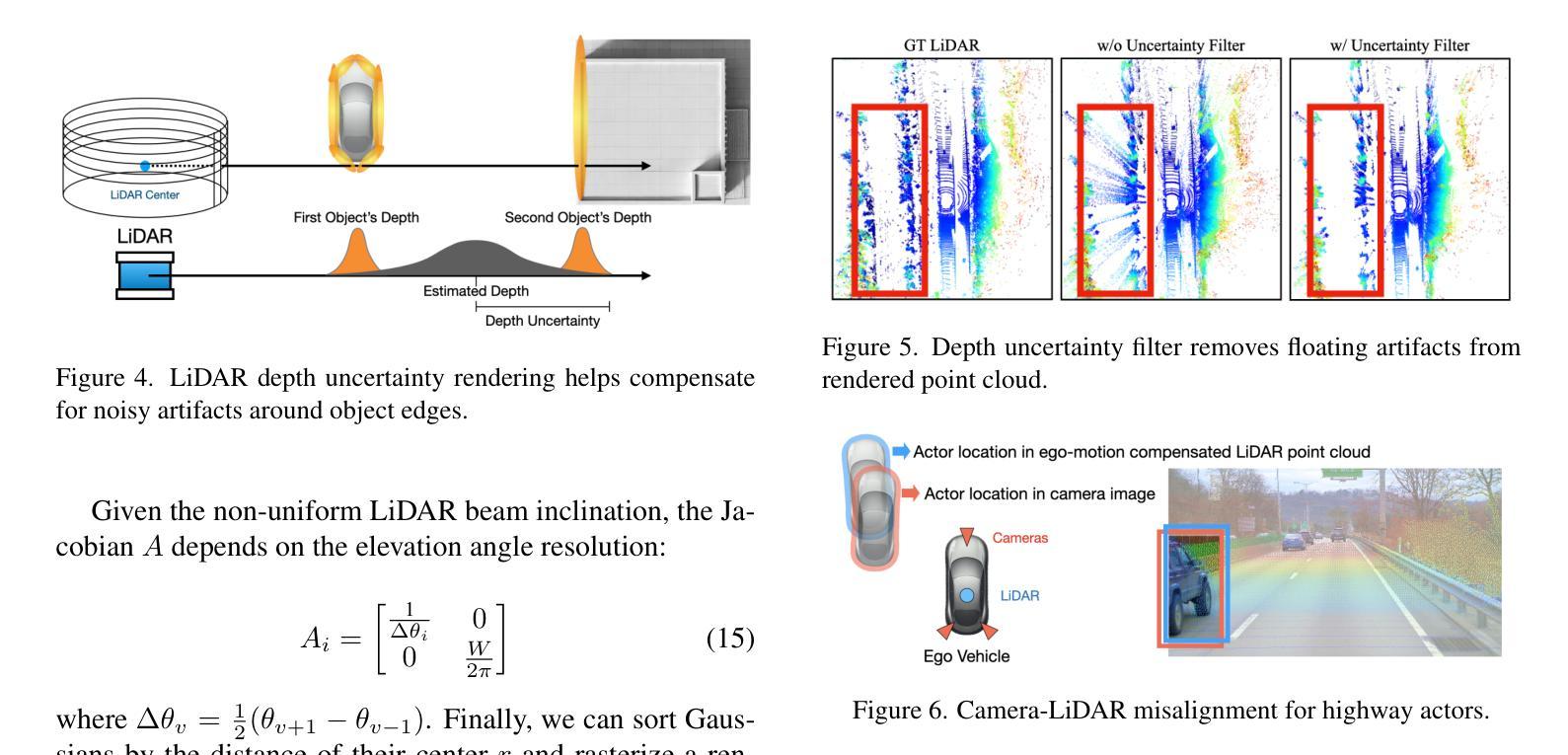

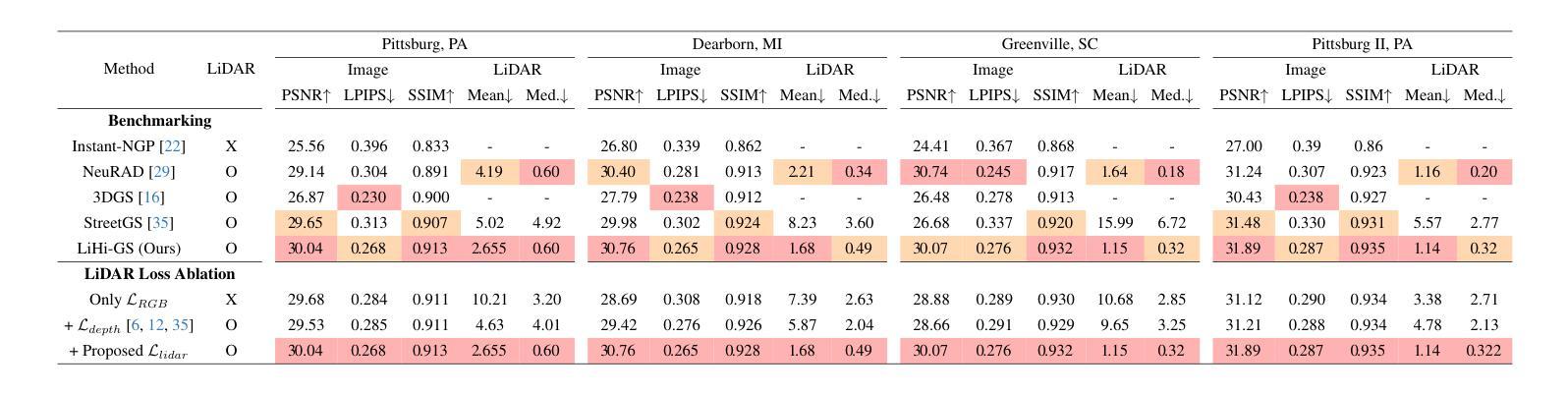

LiHi-GS: LiDAR-Supervised Gaussian Splatting for Highway Driving Scene Reconstruction

Authors:Pou-Chun Kung, Xianling Zhang, Katherine A. Skinner, Nikita Jaipuria

Photorealistic 3D scene reconstruction plays an important role in autonomous driving, enabling the generation of novel data from existing datasets to simulate safety-critical scenarios and expand training data without additional acquisition costs. Gaussian Splatting (GS) facilitates real-time, photorealistic rendering with an explicit 3D Gaussian representation of the scene, providing faster processing and more intuitive scene editing than the implicit Neural Radiance Fields (NeRFs). While extensive GS research has yielded promising advancements in autonomous driving applications, they overlook two critical aspects: First, existing methods mainly focus on low-speed and feature-rich urban scenes and ignore the fact that highway scenarios play a significant role in autonomous driving. Second, while LiDARs are commonplace in autonomous driving platforms, existing methods learn primarily from images and use LiDAR only for initial estimates or without precise sensor modeling, thus missing out on leveraging the rich depth information LiDAR offers and limiting the ability to synthesize LiDAR data. In this paper, we propose a novel GS method for dynamic scene synthesis and editing with improved scene reconstruction through LiDAR supervision and support for LiDAR rendering. Unlike prior works that are tested mostly on urban datasets, to the best of our knowledge, we are the first to focus on the more challenging and highly relevant highway scenes for autonomous driving, with sparse sensor views and monotone backgrounds. Visit our project page at: https://umautobots.github.io/lihi_gs

真实感三维场景重建在自动驾驶中扮演着重要角色。它能够从现有数据集中生成新数据,模拟安全关键场景,并在无需额外采集成本的情况下扩展训练数据。高斯贴片技术(GS)能够利用场景的显式三维高斯表示进行实时、真实感渲染,与隐式神经辐射场(NeRFs)相比,提供了更快的处理和更直观的场景编辑功能。尽管关于高斯贴片技术的广泛研究在自动驾驶应用中取得了有前景的进展,但它们忽略了两个关键方面:首先,现有方法主要集中在低速和特征丰富的城市场景上,忽视了高速公路场景在自动驾驶中的重要作用。其次,虽然激光雷达在自动驾驶平台中很常见,但现有方法主要从图像中学习,仅将激光雷达用于初步估计或不进行精确的传感器建模,因此错过了利用激光雷达提供的丰富深度信息的机会,并限制了合成激光雷达数据的能力。在本文中,我们提出了一种新的高斯贴片技术,用于动态场景合成和编辑。通过激光雷达监督和激光雷达渲染支持,改进了场景重建。与主要基于城市数据集进行测试的先前工作不同,据我们所知,我们是第一个专注于更具挑战性和高度相关的高速公路场景的自动驾驶研究,具有稀疏的传感器视角和单色背景。请访问我们的项目页面:https://umautobots.github.io/lihi_gs

论文及项目相关链接

Summary

本文介绍了在自动驾驶领域中,基于高斯贴图技术(GS)的光照真实三维场景重建的重要性。文章提出了一种新型的高斯贴图方法,用于动态场景合成和编辑,改进了场景重建过程,并通过激光雷达监督与渲染支持提高重建精度和利用激光雷达丰富的深度信息。该方法的重点是在高速公路场景上,这是目前自动驾驶领域中更具挑战性和高度相关性的场景。通过激光雷达的深度信息,提高场景重建的准确性和逼真度。

Key Takeaways

- 光照真实的三维场景重建对于模拟安全关键的自动驾驶场景和提高训练数据质量至关重要。

- 高斯贴图技术(GS)具有实时渲染能力,能明确表示三维场景的高斯分布,相较于隐式神经辐射场(NeRFs),具有更快的处理和更直观的编辑优势。

- 当前GS研究主要集中在低速度和特征丰富的城市场景上,忽略了高速公路场景在自动驾驶中的重要性。

- 激光雷达在自动驾驶平台中已普及,但现有方法主要依赖图像学习,并未充分利用激光雷达提供的丰富深度信息。

- 本文提出了一种新型GS方法,结合了激光雷达的监督与渲染支持,用于动态场景合成和编辑。

- 与主要测试城市数据集的前期工作不同,本文专注于更具挑战性和高度相关性的高速公路场景。

点此查看论文截图

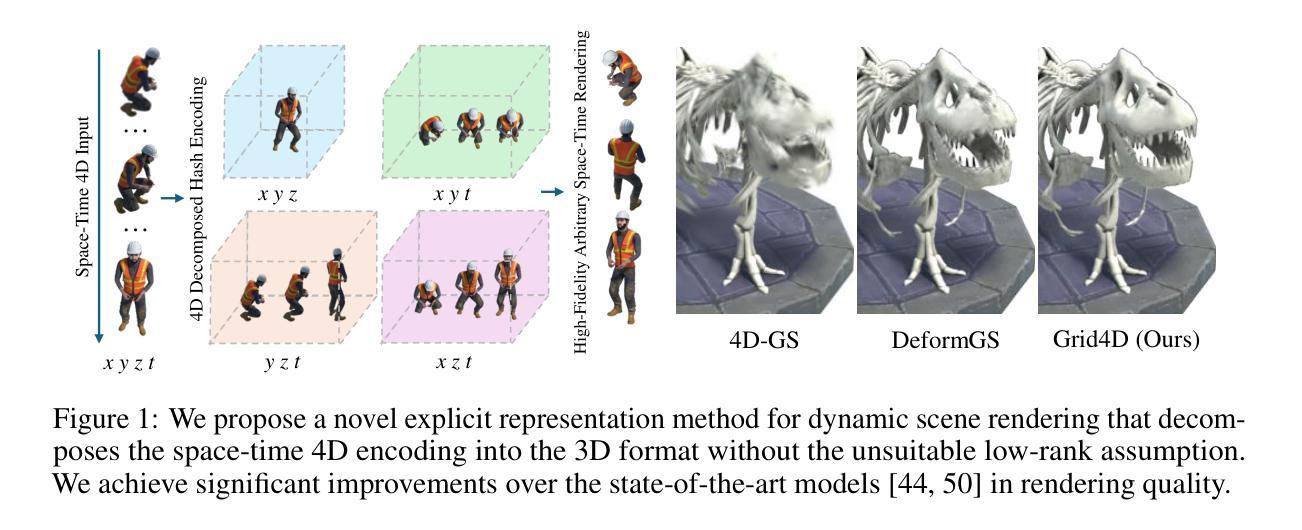

Grid4D: 4D Decomposed Hash Encoding for High-Fidelity Dynamic Gaussian Splatting

Authors:Jiawei Xu, Zexin Fan, Jian Yang, Jin Xie

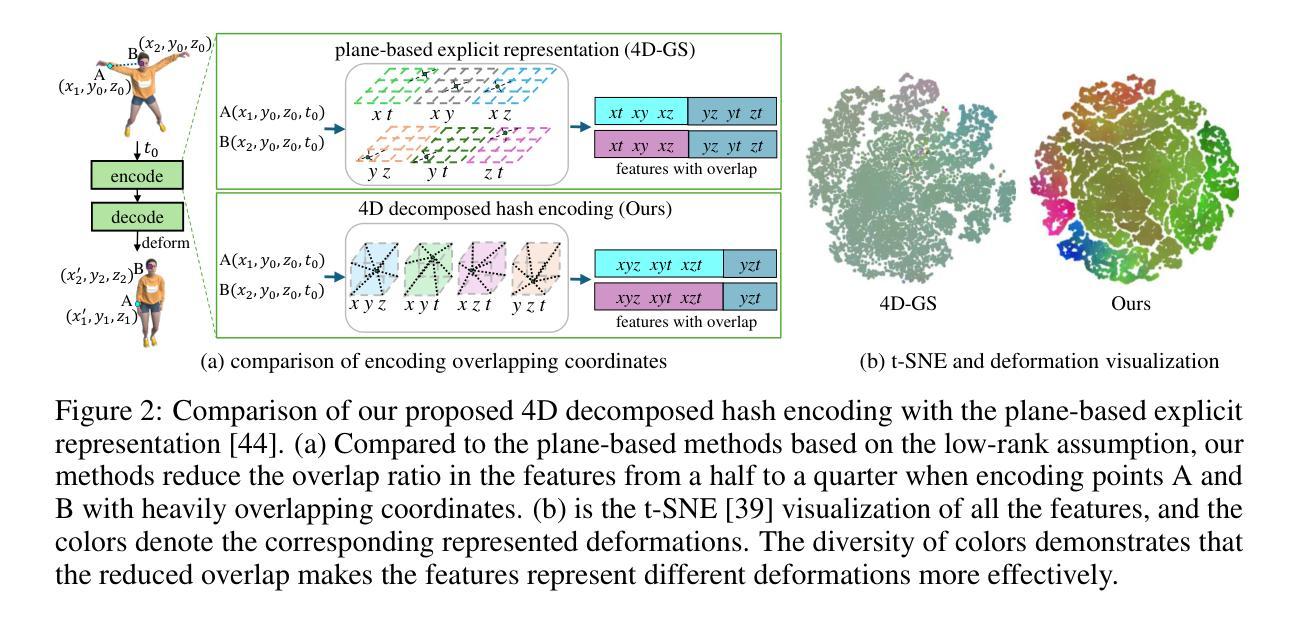

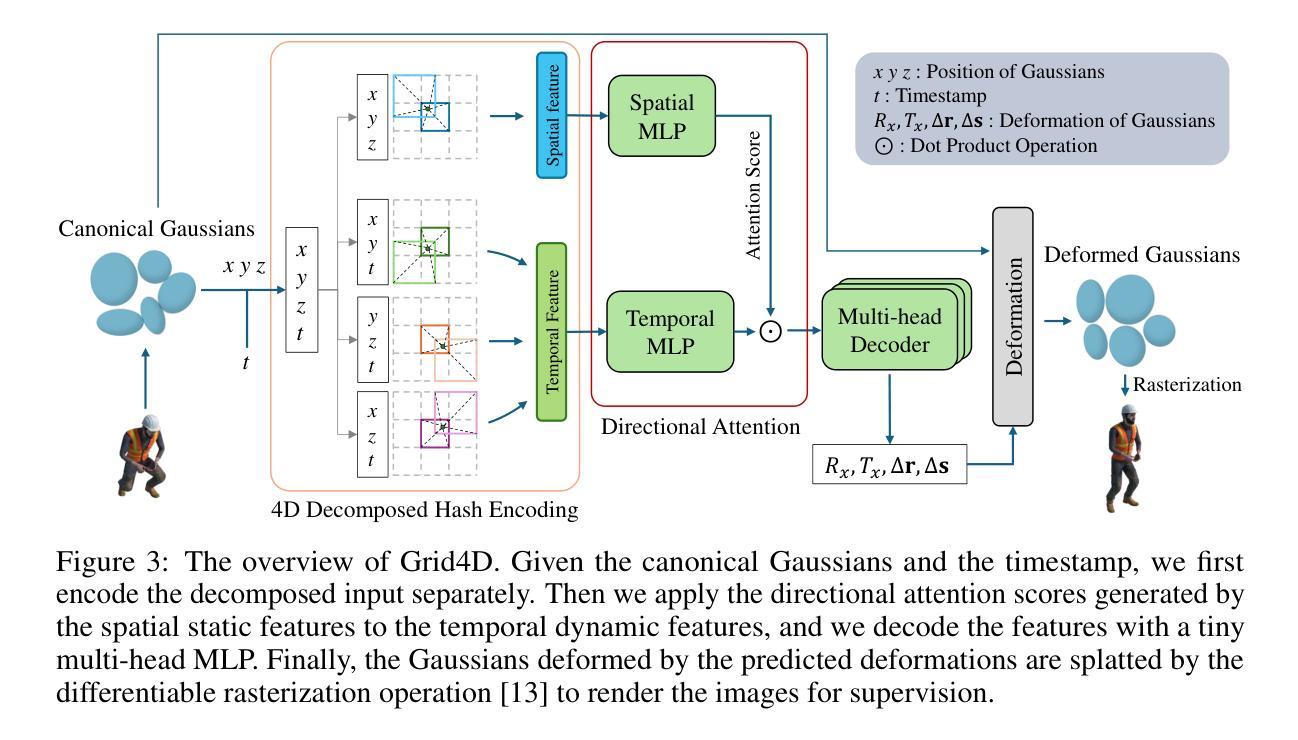

Recently, Gaussian splatting has received more and more attention in the field of static scene rendering. Due to the low computational overhead and inherent flexibility of explicit representations, plane-based explicit methods are popular ways to predict deformations for Gaussian-based dynamic scene rendering models. However, plane-based methods rely on the inappropriate low-rank assumption and excessively decompose the space-time 4D encoding, resulting in overmuch feature overlap and unsatisfactory rendering quality. To tackle these problems, we propose Grid4D, a dynamic scene rendering model based on Gaussian splatting and employing a novel explicit encoding method for the 4D input through the hash encoding. Different from plane-based explicit representations, we decompose the 4D encoding into one spatial and three temporal 3D hash encodings without the low-rank assumption. Additionally, we design a novel attention module that generates the attention scores in a directional range to aggregate the spatial and temporal features. The directional attention enables Grid4D to more accurately fit the diverse deformations across distinct scene components based on the spatial encoded features. Moreover, to mitigate the inherent lack of smoothness in explicit representation methods, we introduce a smooth regularization term that keeps our model from the chaos of deformation prediction. Our experiments demonstrate that Grid4D significantly outperforms the state-of-the-art models in visual quality and rendering speed.

近期,高斯拼贴(Gaussian Splatting)在静态场景渲染领域得到了越来越多的关注。由于显式表示法具有较低的计算开销和固有的灵活性,基于平面的显式方法成为预测基于高斯的动力学场景渲染模型的变形的流行方式。然而,基于平面的方法依赖于不适当的低秩假设,过度分解了时空4D编码,导致特征重叠过多和渲染质量不佳。为了解决这些问题,我们提出了Grid4D,这是一种基于高斯拼贴的动力学场景渲染模型,采用一种新的显式编码方法对4D输入进行哈希编码。不同于基于平面的显式表示方法,我们将4D编码分解成一个空间编码和三个临时3D哈希编码,无需低秩假设。此外,我们还设计了一种新型注意力模块,该模块在定向范围内生成注意力分数,以聚合空间和时间特征。定向注意力使Grid4D能够基于空间编码特征更准确地拟合不同场景组件的多样变形。此外,为了缓解显式表示方法固有的平滑性不足,我们引入了一项平滑正则化项,使我们的模型能够避免变形预测的混乱。实验表明,Grid4D在视觉质量和渲染速度上均显著优于最新模型。

论文及项目相关链接

PDF Accepted by NeurIPS 2024

Summary

基于高斯贴图的动态场景渲染中,Grid4D模型采用新型显式编码方法,通过哈希编码进行4D输入。与平面基于显式表示方法不同,该模型将4D编码分解为1个空间编码和3个时间编码,摒弃低阶假设。其设计的新注意力模块可在定向范围内生成注意力分数,汇聚时空特征。此外,为缓解显式表示方法的固有不光滑性,引入平滑正则化项,提高模型在视觉质量和渲染速度方面的表现。

Key Takeaways

- Gaussian splatting在静态场景渲染中受到关注。

- 平面基于显式方法预测变形存在特征重叠和渲染质量不佳的问题。

- Grid4D模型采用新型显式编码方法,摒弃低阶假设,通过哈希编码进行4D输入。

- Grid4D模型分解时空特征,采用注意力模块在定向范围内生成注意力分数。

- Grid4D模型引入平滑正则化项,解决显式表示方法的固有不光滑问题。

- Grid4D模型在视觉质量和渲染速度方面表现优异。

点此查看论文截图

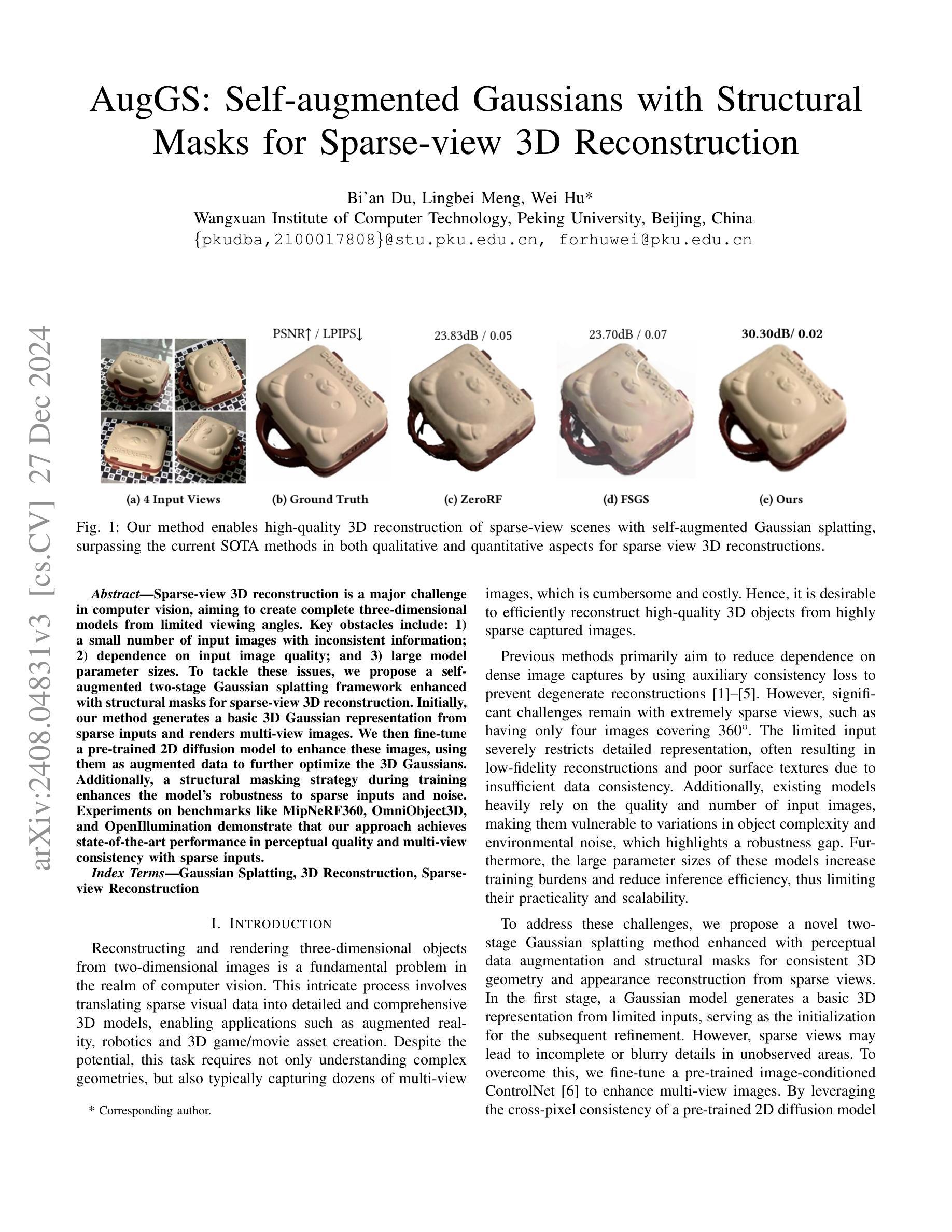

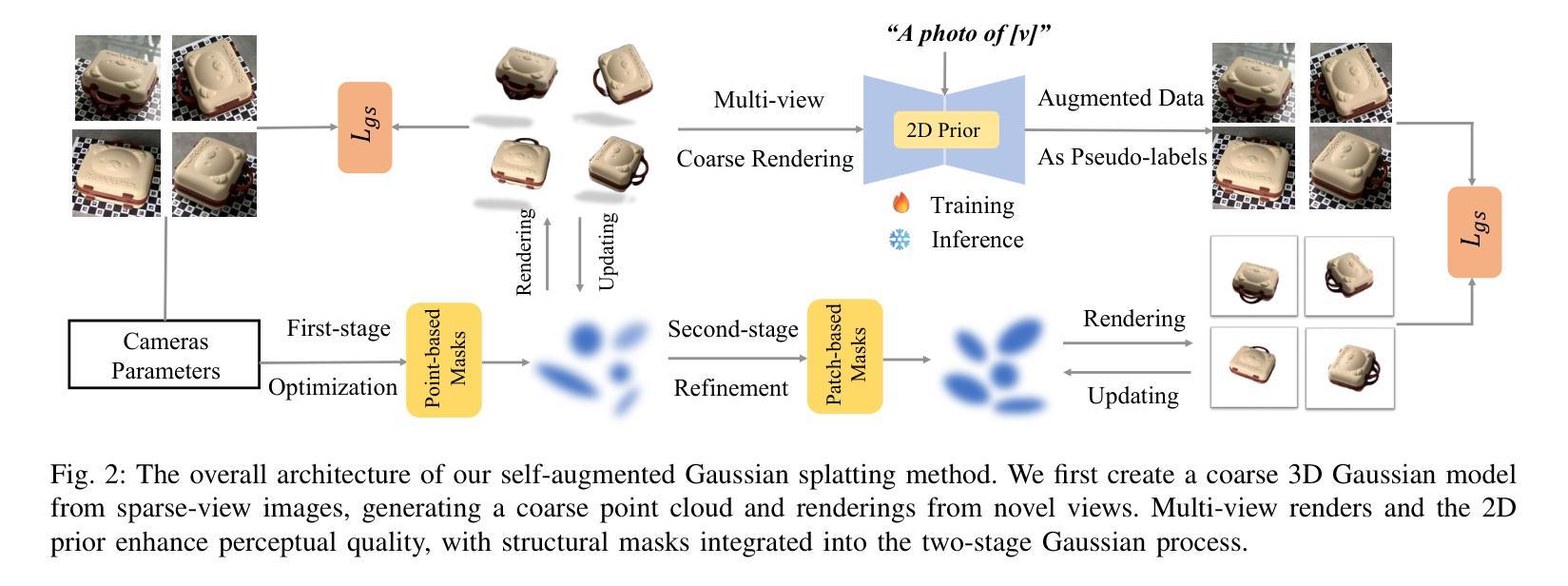

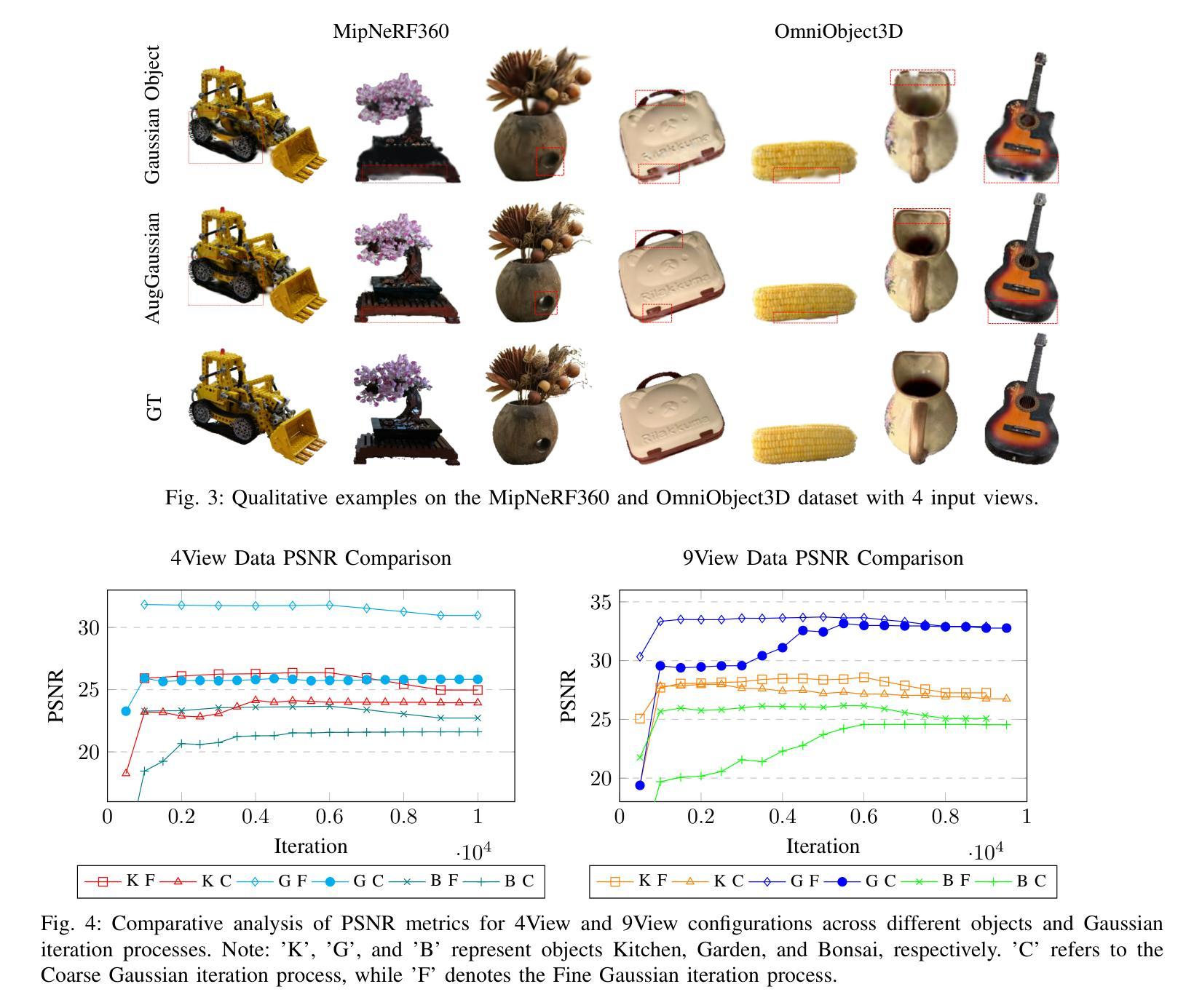

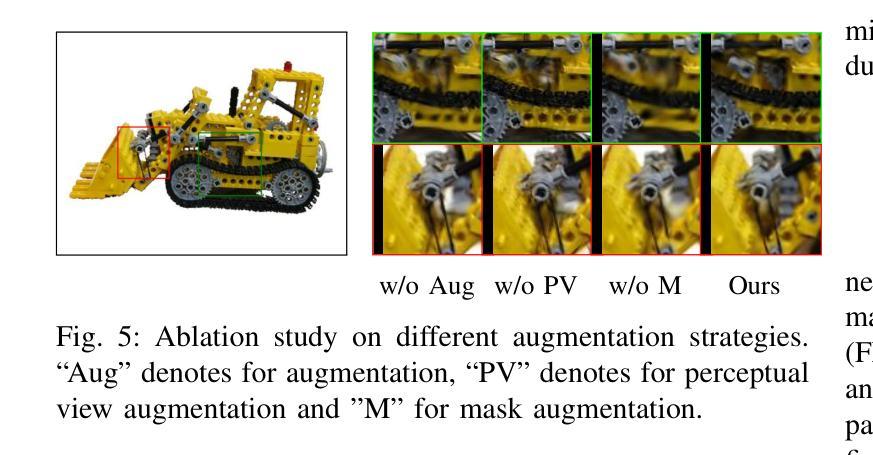

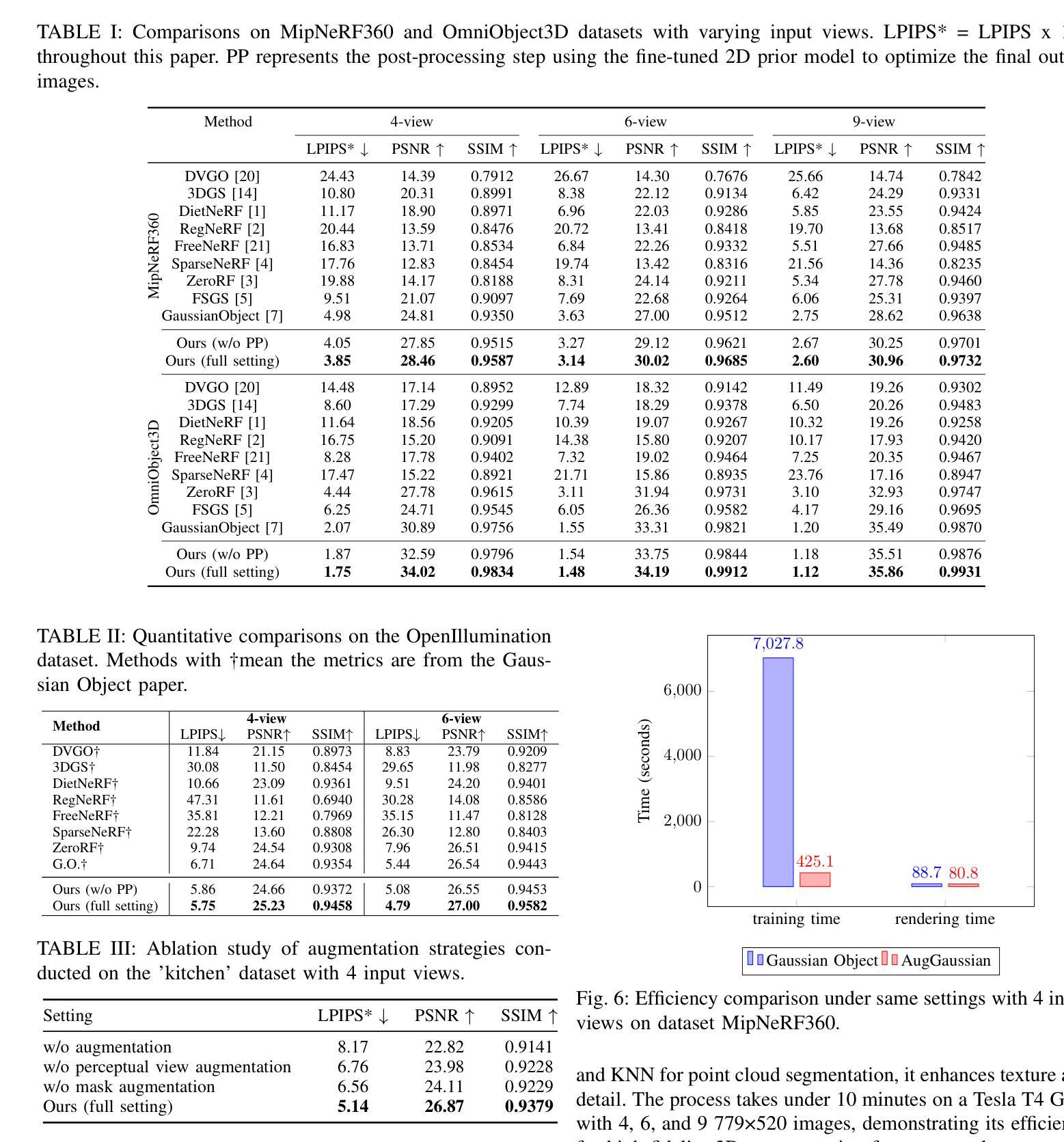

AugGS: Self-augmented Gaussians with Structural Masks for Sparse-view 3D Reconstruction

Authors:Bi’an Du, Lingbei Meng, Wei Hu

Sparse-view 3D reconstruction is a major challenge in computer vision, aiming to create complete three-dimensional models from limited viewing angles. Key obstacles include: 1) a small number of input images with inconsistent information; 2) dependence on input image quality; and 3) large model parameter sizes. To tackle these issues, we propose a self-augmented two-stage Gaussian splatting framework enhanced with structural masks for sparse-view 3D reconstruction. Initially, our method generates a basic 3D Gaussian representation from sparse inputs and renders multi-view images. We then fine-tune a pre-trained 2D diffusion model to enhance these images, using them as augmented data to further optimize the 3D Gaussians.Additionally, a structural masking strategy during training enhances the model’s robustness to sparse inputs and noise. Experiments on benchmarks like MipNeRF360, OmniObject3D, and OpenIllumination demonstrate that our approach achieves state-of-the-art performance in perceptual quality and multi-view consistency with sparse inputs.

稀疏视角下的三维重建是计算机视觉领域的主要挑战,其目标是基于有限的视角创建完整的三维模型。关键难题包括:1)输入图像数量少且信息不一致;2)依赖于输入图像的质量;以及3)模型参数规模大。为了应对这些问题,我们提出了一种增强结构的稀疏视角三维重建自增强两阶段高斯喷射框架。首先,我们的方法从稀疏输入生成基本的三维高斯表示,并呈现多视角图像。然后,我们使用预训练的二维扩散模型进行微调,以增强这些图像,将其作为增强数据进一步优化三维高斯。此外,在训练过程中采用结构掩码策略提高了模型对稀疏输入和噪声的鲁棒性。在MipNeRF360、OmniObject3D和OpenIllumination等基准测试上的实验表明,我们的方法在感知质量和多视角一致性方面达到了稀疏输入的最先进性能。

论文及项目相关链接

Summary

该文探讨稀疏视角下的三维重建挑战,并提出了一个结合自增强技术与两阶段高斯贴图框架的解决方案。此方法能够生成基本的三维高斯表示,并通过渲染多视角图像进行微调。通过利用预训练的二维扩散模型增强图像,并使用结构掩膜策略提高模型对稀疏输入和噪声的鲁棒性。实验证明,该方法在感知质量和多视角一致性方面达到了领先水平。

Key Takeaways

- 稀疏视角下的三维重建是计算机视觉的主要挑战之一,需要从有限的视角创建完整的三维模型。

- 主要难题包括输入图像数量少且信息不一致、依赖输入图像质量、模型参数规模较大。

- 提出的解决方案是一个结合自增强技术与两阶段高斯贴图框架,能够生成三维高斯表示并渲染多视角图像。

- 通过微调预训练的二维扩散模型增强图像,将其作为增强数据进一步优化三维高斯表示。

- 结构掩膜策略提高模型对稀疏输入和噪声的鲁棒性。

- 在MipNeRF360、OmniObject3D和OpenIllumination等基准测试上,该方法达到了先进的感知质量和多视角一致性水平。

- 该方法对于解决稀疏视角下的三维重建问题具有潜力。

点此查看论文截图