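⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.

🔴 Note: do not use these summaries in serious academic work. They are intended only for initial screening before reading the papers.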


Updated 2025-01-09

Deep Learning-based Compression Detection for explainable Face Image Quality Assessment

Authors:Laurin Jonientz, Johannes Merkle, Christian Rathgeb, Benjamin Tams, Georg Merz

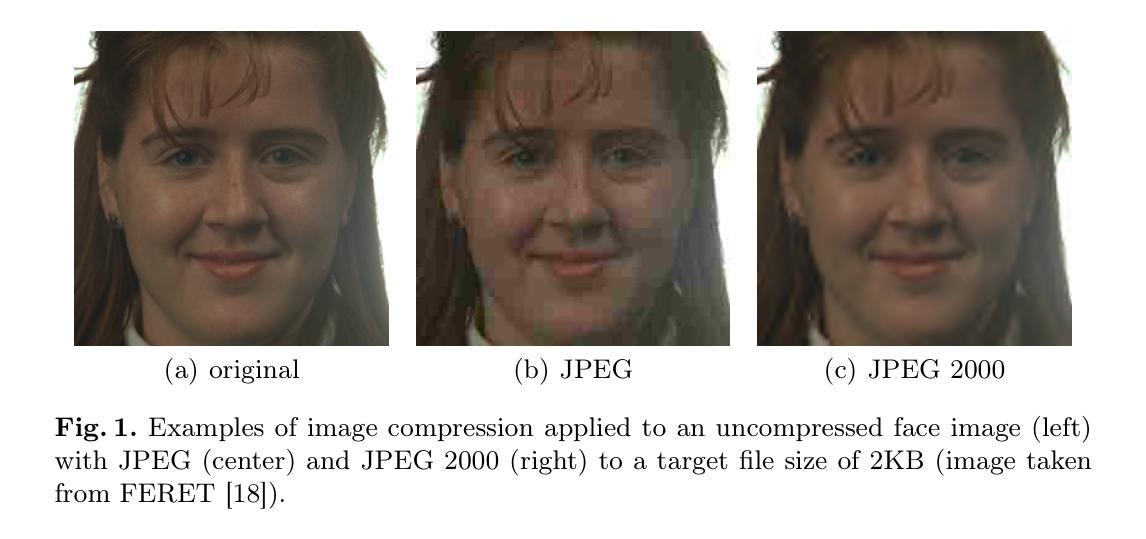

The assessment of face image quality is crucial to ensure reliable face recognition. In order to provide data subjects and operators with explainable and actionable feedback regarding captured face images, relevant quality components have to be measured. Quality components that are known to negatively impact the utility of face images include JPEG and JPEG 2000 compression artefacts, among others. Compression can result in a loss of important image details, which may impair recognition performance. In this work, deep neural networks are trained to detect compression artefacts in face images. For this purpose, artefact-free facial images are compressed with the JPEG and JPEG 2000 compression algorithms. Subsequently, the PSNR and SSIM metrics are employed to obtain training labels, based on which neural networks are trained using a single network to detect JPEG and JPEG 2000 artefacts, respectively. The evaluation of the proposed method shows promising results: in terms of detection accuracy, error rates of 2-3% are obtained when utilizing PSNR labels during training. In addition, we show that error rates of different open-source and commercial face recognition systems can be significantly reduced by discarding face images exhibiting severe compression artefacts. To minimize resource consumption, EfficientNetV2 serves as the basis for the presented algorithm, which is available as part of the OFIQ software.


Paper and project links

PDF 2nd Workshop on Fairness in Biometric Systems (FAIRBIO) at International Conference on Pattern Recognition (ICPR) 2024

Summary

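The paper discusses why face image quality assessment matters and presents a method for detecting JPEG and JPEG 2000 compression artefacts with deep neural networks. Artefact-free face images are compressed, the PSNR and SSIM metrics are used to derive training labels, and networks are trained on those labels to detect JPEG and JPEG 2000 artefacts. With PSNR labels, the detection error rate is 2-3%, and discarding face images with severe compression artefacts significantly reduces the error rates of several open-source and commercial face recognition systems. To keep resource consumption low, the algorithm is built on EfficientNetV2 and is available as part of the OFIQ software.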

Key Takeaways

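- Face image quality assessment is crucial for reliable face recognition.

- JPEG and JPEG 2000 compression artefacts are among the quality components known to reduce the utility of face images.

- Deep neural networks are trained to detect compression artefacts in face images.

- Training labels are derived from the PSNR and SSIM metrics.

- Detection error rates of 2-3% are obtained when PSNR labels are used for training.

- Discarding face images with severe compression artefacts significantly reduces the error rates of open-source and commercial face recognition systems.

- To minimize resource consumption, the algorithm is based on EfficientNetV2 and is available as part of the OFIQ software.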
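The PSNR-based labelling step can be illustrated with a minimal sketch. The abstract does not say how PSNR values are mapped to labels, so the 30 dB threshold and the names `psnr`, `quantize`, and `artefact_label` are assumptions made for illustration. Coarse pixel quantization stands in for a real JPEG or JPEG 2000 codec so that the example needs only the standard library.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    if len(reference) != len(distorted) or any(
        len(r) != len(d) for r, d in zip(reference, distorted)
    ):
        raise ValueError("images must have the same shape")
    squared_error = 0.0
    count = 0
    for ref_row, dist_row in zip(reference, distorted):
        for r, d in zip(ref_row, dist_row):
            squared_error += (r - d) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images: no distortion at all
    return 10.0 * math.log10(max_value ** 2 / mse)


def quantize(image, step):
    """Stand-in for lossy compression (assumption): snap pixels to multiples of step."""
    return [[min(255, round(p / step) * step) for p in row] for row in image]


def artefact_label(psnr_db, threshold_db=30.0):
    """Hypothetical binary label: 1 = artefacts present, 0 = clean."""
    return 1 if psnr_db < threshold_db else 0


# Label one image at two simulated compression strengths.
original = [[10, 20, 30, 40], [50, 60, 70, 80]]
for step in (4, 64):
    compressed = quantize(original, step)
    score = psnr(original, compressed)
    print(step, round(score, 2), artefact_label(score))
```

In a real pipeline the compressed image would come from an actual codec, and the resulting labels would supervise the detection network. SSIM labels could be produced the same way by swapping the metric.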


Wavelet-Driven Generalizable Framework for Deepfake Face Forgery Detection

Authors:Lalith Bharadwaj Baru, Rohit Boddeda, Shilhora Akshay Patel, Sai Mohan Gajapaka

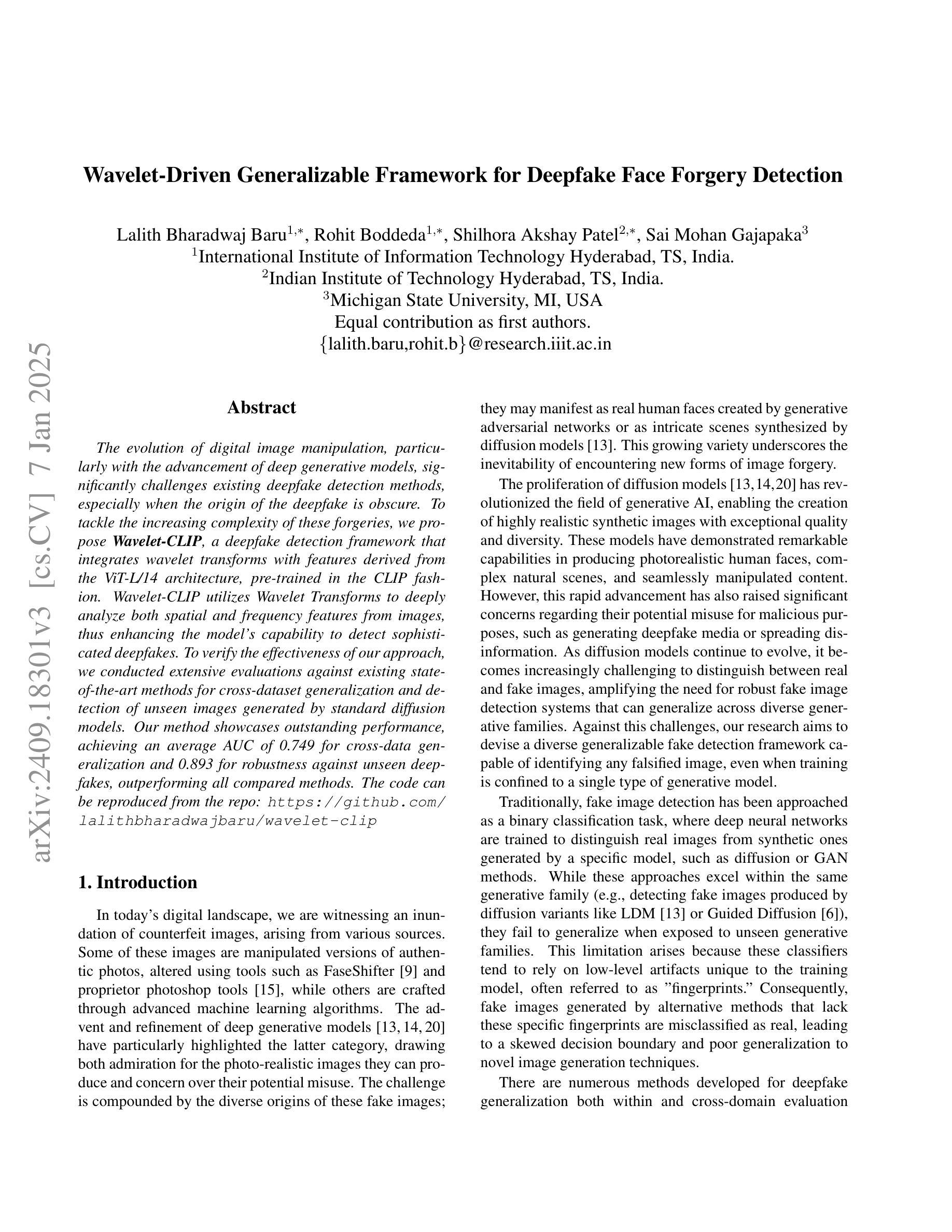

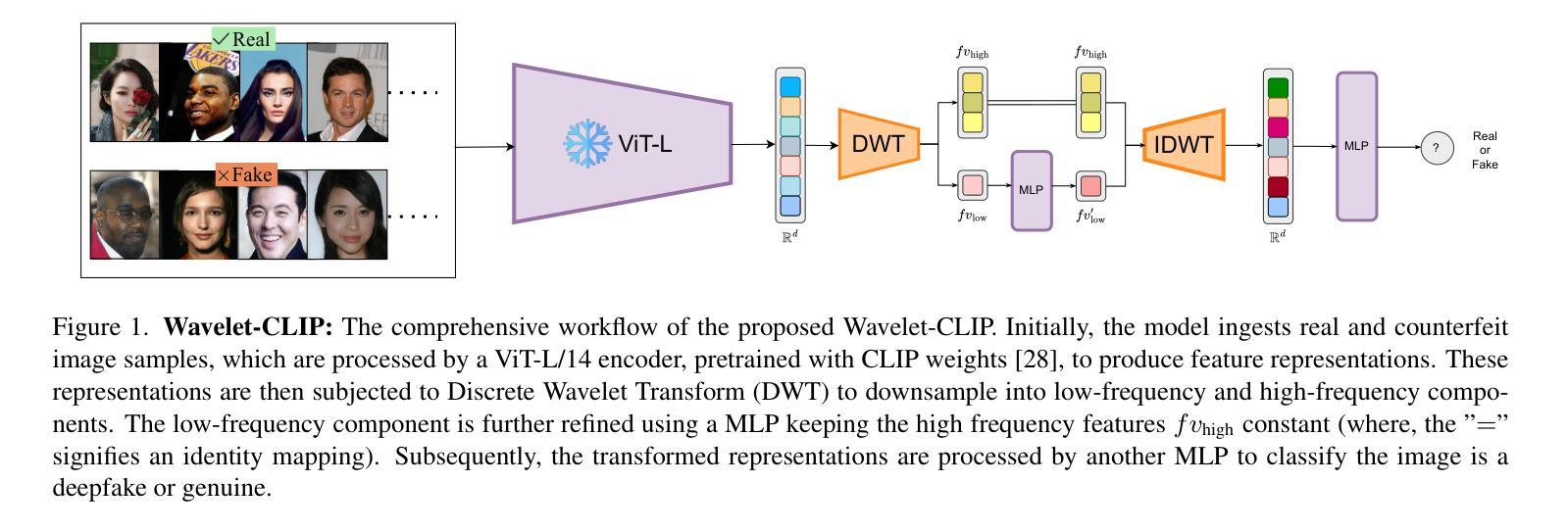

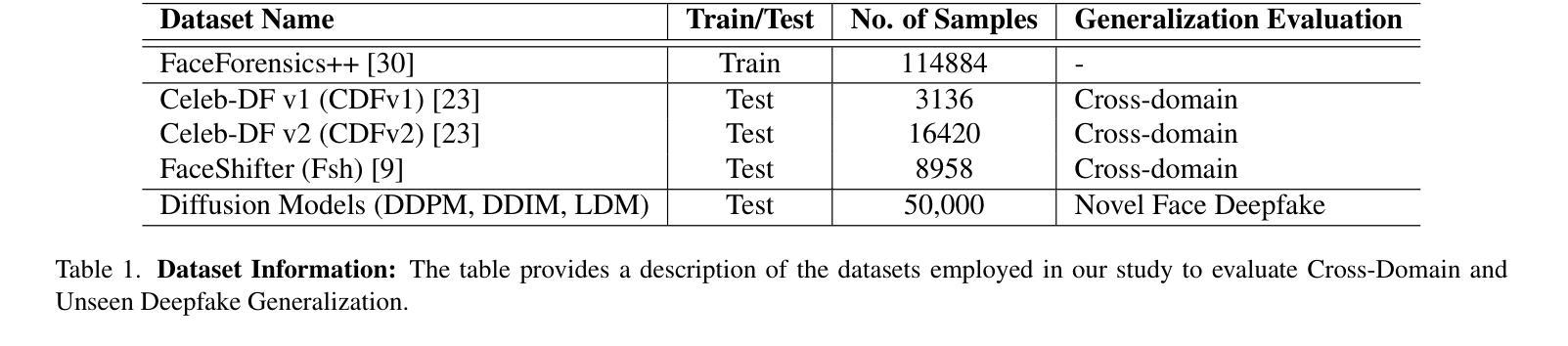

The evolution of digital image manipulation, particularly with the advancement of deep generative models, significantly challenges existing deepfake detection methods, especially when the origin of the deepfake is obscure. To tackle the increasing complexity of these forgeries, we propose **Wavelet-CLIP**, a deepfake detection framework that integrates wavelet transforms with features derived from the ViT-L/14 architecture, pre-trained in the CLIP fashion. Wavelet-CLIP utilizes Wavelet Transforms to deeply analyze both spatial and frequency features from images, thus enhancing the model's capability to detect sophisticated deepfakes. To verify the effectiveness of our approach, we conducted extensive evaluations against existing state-of-the-art methods for cross-dataset generalization and detection of unseen images generated by standard diffusion models. Our method showcases outstanding performance, achieving an average AUC of 0.749 for cross-data generalization and 0.893 for robustness against unseen deepfakes, outperforming all compared methods. The code can be reproduced from the repo: https://github.com/lalithbharadwajbaru/Wavelet-CLIP


Paper and project links

PDF 9 Pages, 2 Figures, 3 Tables

Summary

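Advances in digital image manipulation, and in deep generative models in particular, challenge existing deepfake detection methods for faces. The paper proposes Wavelet-CLIP, a detection framework that combines wavelet transforms with features from a ViT-L/14 backbone pre-trained in the CLIP fashion. Using wavelet transforms to analyze both spatial and frequency features of an image improves the detection of sophisticated deepfakes. In experiments on cross-dataset generalization and on unseen images generated by diffusion models, the method reaches an average AUC of 0.749 and 0.893 respectively, outperforming all compared methods. The code is available at https://github.com/lalithbharadwajbaru/Wavelet-CLIP.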

Key Takeaways

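- Rapid progress in digital image manipulation challenges existing deepfake detection methods.

- The proposed Wavelet-CLIP framework combines wavelet transforms with ViT-L/14 features for deepfake detection.

- Analyzing both spatial and frequency features of images improves detection of sophisticated deepfakes.

- Cross-dataset generalization experiments yield an average AUC of 0.749.

- The framework is robust on unseen images generated by diffusion models, with an average AUC of 0.893.

- In both settings, Wavelet-CLIP outperforms all compared methods.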
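To make the frequency-analysis idea concrete, here is a minimal sketch of a one-level Haar discrete wavelet transform on a 1D feature vector. The abstract does not state which wavelet Wavelet-CLIP uses or where in the network it is applied, so the choice of Haar and the names `haar_dwt` and `haar_idwt` are assumptions made for illustration, not the authors' implementation.

```python
import math


def haar_dwt(signal):
    """One-level orthonormal Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    scale = 1.0 / math.sqrt(2.0)
    # Scaled pairwise sums capture the low-frequency content.
    approx = [(signal[i] + signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    # Scaled pairwise differences capture the high-frequency content.
    detail = [(signal[i] - signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: rebuilds the original signal from both bands."""
    scale = 1.0 / math.sqrt(2.0)
    signal = []
    for a, d in zip(approx, detail):
        signal.append((a + d) * scale)
        signal.append((a - d) * scale)
    return signal


# Hypothetical feature vector; a real one would come from the image encoder.
features = [4.0, 4.0, 2.0, 6.0]
low, high = haar_dwt(features)
print(low, high)
print(haar_idwt(low, high))
```

The detail coefficients are zero wherever neighbouring values agree and grow with local variation. That is why a frequency-aware head can pick up on subtle inconsistencies that a purely spatial representation may smooth over.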
