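⚠️ All of the summaries below are generated by a large language model. They may contain errors, are for reference only, and should be used with caution.

🔴 Note: Never use them in serious academic settings; they are only suitable for initial screening before reading a paper!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-01-16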

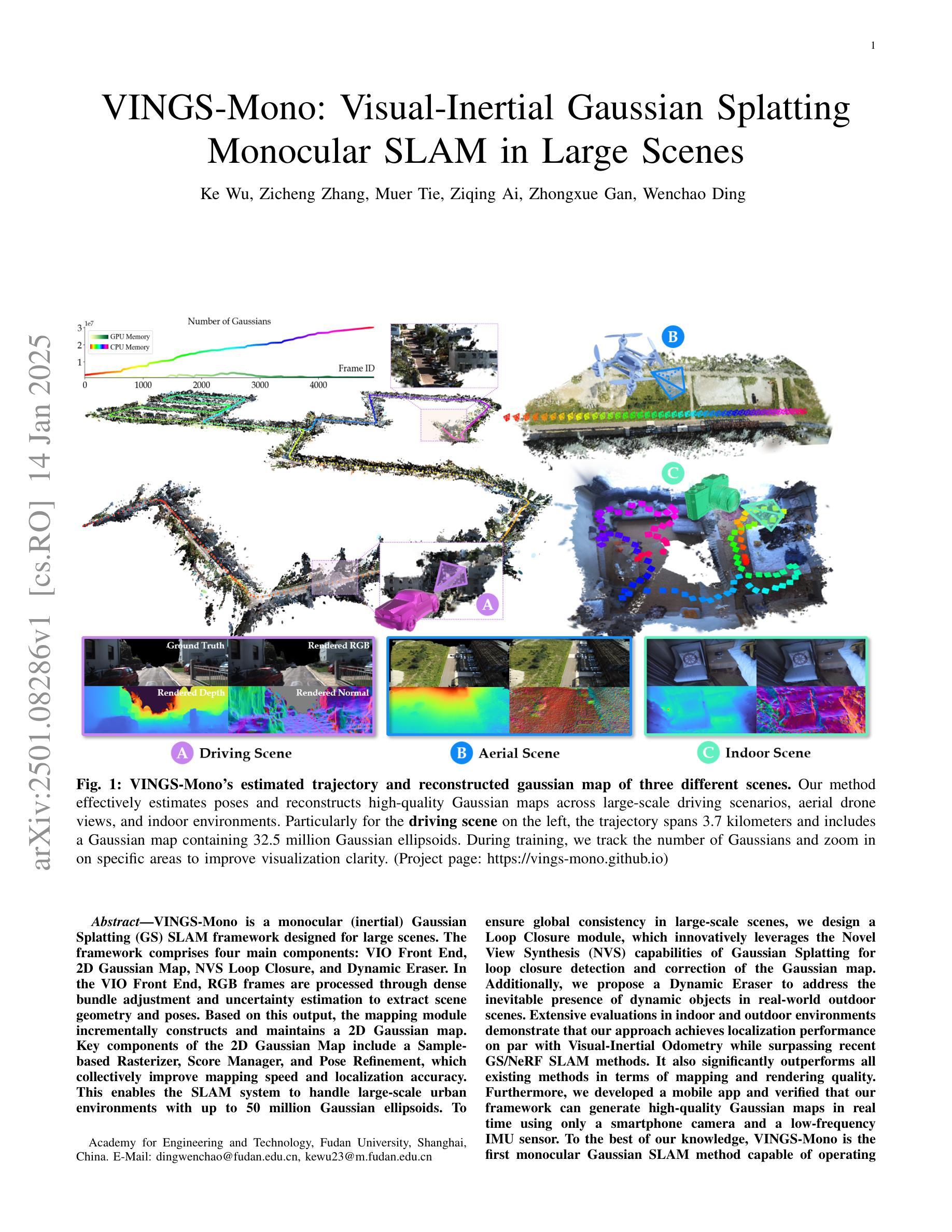

VINGS-Mono: Visual-Inertial Gaussian Splatting Monocular SLAM in Large Scenes

Authors:Ke Wu, Zicheng Zhang, Muer Tie, Ziqing Ai, Zhongxue Gan, Wenchao Ding

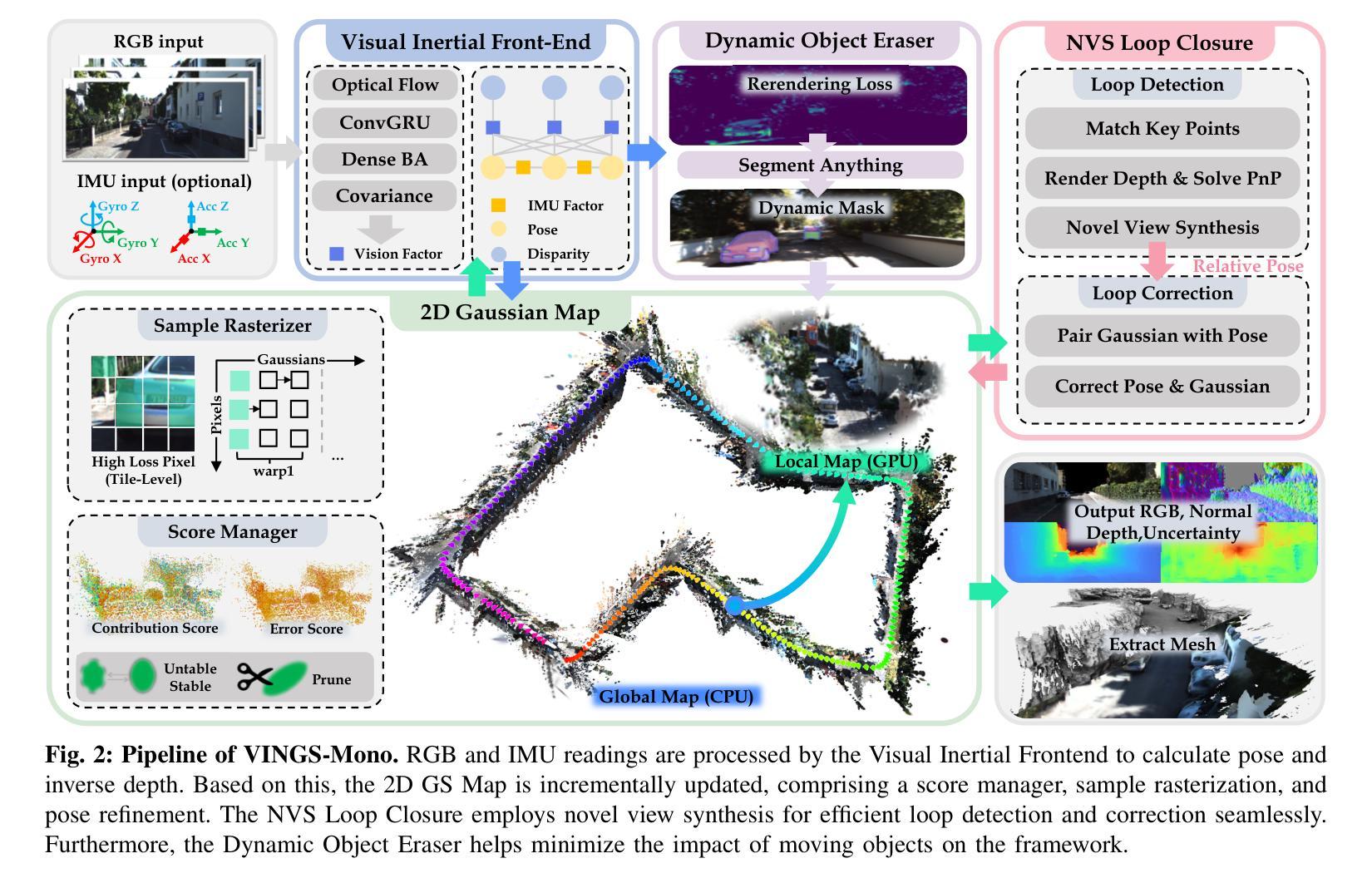

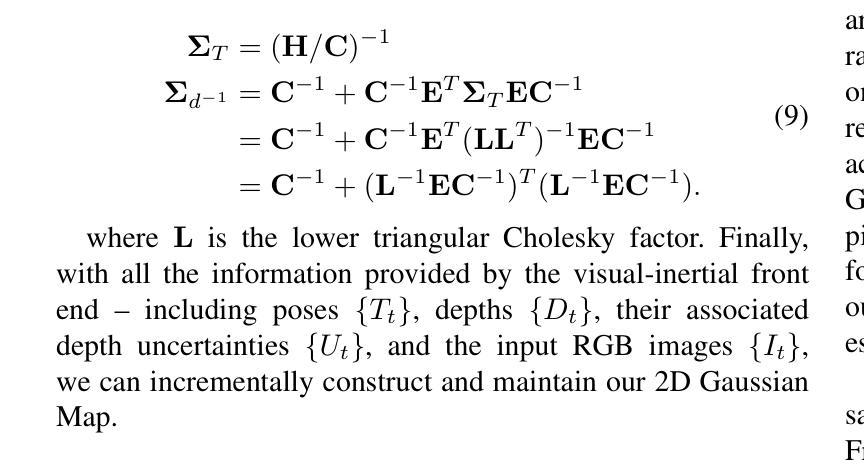

VINGS-Mono is a monocular (inertial) Gaussian Splatting (GS) SLAM framework designed for large scenes. The framework comprises four main components: VIO Front End, 2D Gaussian Map, NVS Loop Closure, and Dynamic Eraser. In the VIO Front End, RGB frames are processed through dense bundle adjustment and uncertainty estimation to extract scene geometry and poses. Based on this output, the mapping module incrementally constructs and maintains a 2D Gaussian map. Key components of the 2D Gaussian Map include a Sample-based Rasterizer, Score Manager, and Pose Refinement, which collectively improve mapping speed and localization accuracy. This enables the SLAM system to handle large-scale urban environments with up to 50 million Gaussian ellipsoids. To ensure global consistency in large-scale scenes, we design a Loop Closure module, which innovatively leverages the Novel View Synthesis (NVS) capabilities of Gaussian Splatting for loop closure detection and correction of the Gaussian map. Additionally, we propose a Dynamic Eraser to address the inevitable presence of dynamic objects in real-world outdoor scenes. Extensive evaluations in indoor and outdoor environments demonstrate that our approach achieves localization performance on par with Visual-Inertial Odometry while surpassing recent GS/NeRF SLAM methods. It also significantly outperforms all existing methods in terms of mapping and rendering quality. Furthermore, we developed a mobile app and verified that our framework can generate high-quality Gaussian maps in real time using only a smartphone camera and a low-frequency IMU sensor. To the best of our knowledge, VINGS-Mono is the first monocular Gaussian SLAM method capable of operating in outdoor environments and supporting kilometer-scale large scenes.
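The Dynamic Eraser is only described at a high level here. As a hedged illustration of the general idea of keeping moving objects out of the map, the sketch below uses a generic residual-based heuristic that is an assumption, not VINGS-Mono's actual method: pixels whose photometric residual between the rendered map and the observed frame is far above the frame's median residual are treated as dynamic and excluded from the mapping loss. The function names and the threshold factor `k` are hypothetical.

```python
from statistics import median


def dynamic_mask(rendered, observed, k=3.0, eps=1e-6):
    """Return one flag per pixel: True = keep (static), False = erase (likely dynamic).

    Hypothetical heuristic (not the paper's method): a pixel is flagged as dynamic
    when its absolute photometric residual exceeds k times the frame's median residual.
    Images are flattened lists of grayscale intensities in [0, 1].
    """
    residuals = [abs(r - o) for r, o in zip(rendered, observed)]
    threshold = k * max(median(residuals), eps)
    return [res <= threshold for res in residuals]


def masked_l1_loss(rendered, observed, mask):
    """Mean absolute error over pixels kept by the mask (0.0 if none are kept)."""
    kept = [abs(r - o) for r, o, m in zip(rendered, observed, mask) if m]
    return sum(kept) / len(kept) if kept else 0.0
```

Using the median rather than the mean keeps the threshold from being inflated by the very outliers it is meant to catch.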

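Summary

VINGS-Mono is a monocular (inertial) Gaussian Splatting SLAM framework for large scenes, consisting of four main components: a VIO front end, a 2D Gaussian map, NVS loop closure, and a Dynamic Eraser. The framework processes RGB frames to extract scene geometry and poses, then incrementally builds and maintains a 2D Gaussian map. Its sample-based rasterizer, score manager, and pose refinement improve mapping speed and localization accuracy, allowing it to handle large-scale urban environments with up to 50 million Gaussian ellipsoids. The loop closure module leverages the novel view synthesis capability of Gaussian Splatting for loop closure detection and map correction, and a Dynamic Eraser handles dynamic objects in outdoor scenes. Evaluations show localization performance on par with visual-inertial odometry, better than recent GS/NeRF SLAM methods, along with superior mapping and rendering quality; the system can also generate high-quality Gaussian maps in real time using only a smartphone camera and a low-frequency IMU. To the best of the authors' knowledge, VINGS-Mono is the first monocular Gaussian SLAM method that runs outdoors and supports kilometer-scale scenes.

Key Takeaways

- VINGS-Mono is a monocular (inertial) Gaussian Splatting SLAM framework for large scenes.

- It has four main components: a VIO front end, a 2D Gaussian map, NVS loop closure, and a Dynamic Eraser.

- Scene geometry and poses are extracted from RGB frames via dense bundle adjustment and uncertainty estimation.

- The 2D Gaussian map, with its sample-based rasterizer, score manager, and pose refinement, improves mapping speed and localization accuracy.

- The loop closure module uses the novel view synthesis capability of Gaussian Splatting for loop closure detection and map correction.

- A Dynamic Eraser is proposed to handle dynamic objects in outdoor scenes.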

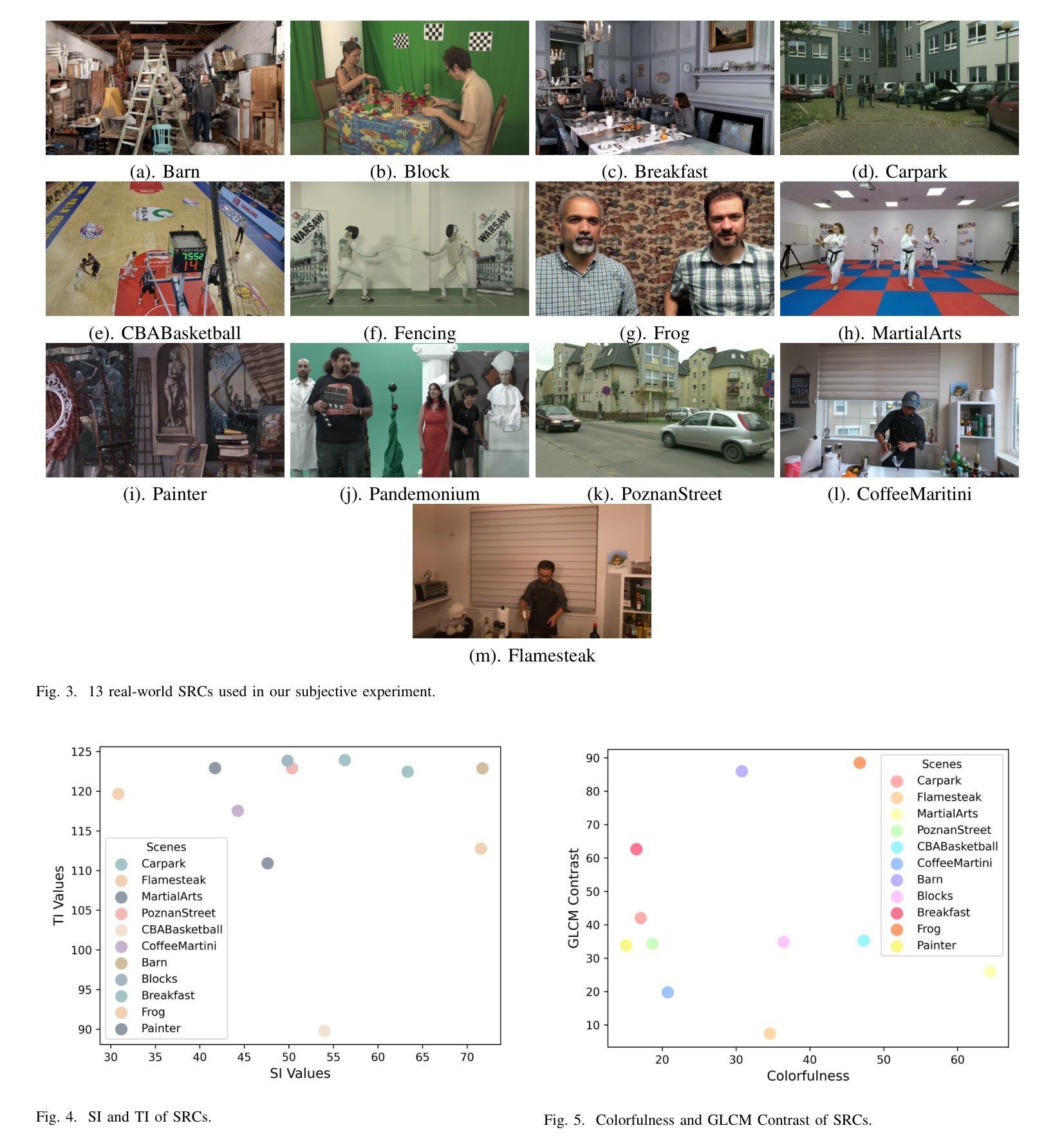

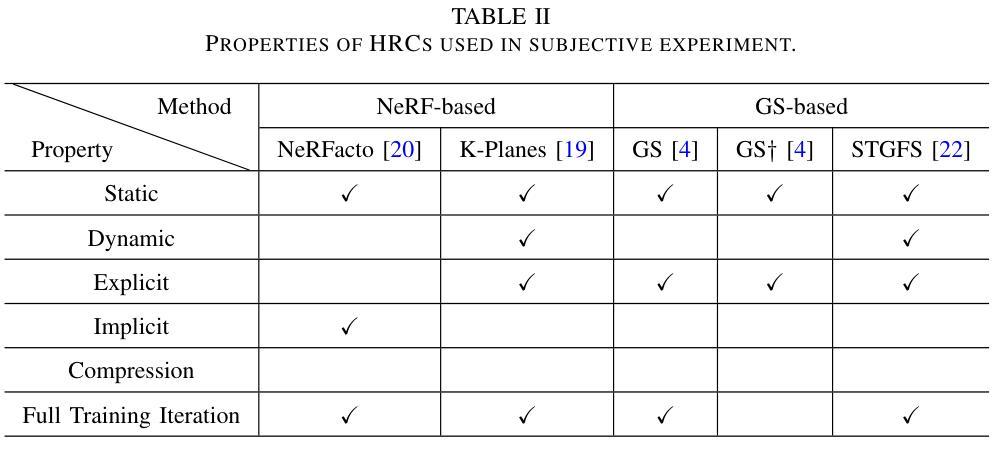

Evaluating Human Perception of Novel View Synthesis: Subjective Quality Assessment of Gaussian Splatting and NeRF in Dynamic Scenes

Authors:Yuhang Zhang, Joshua Maraval, Zhengyu Zhang, Nicolas Ramin, Shishun Tian, Lu Zhang

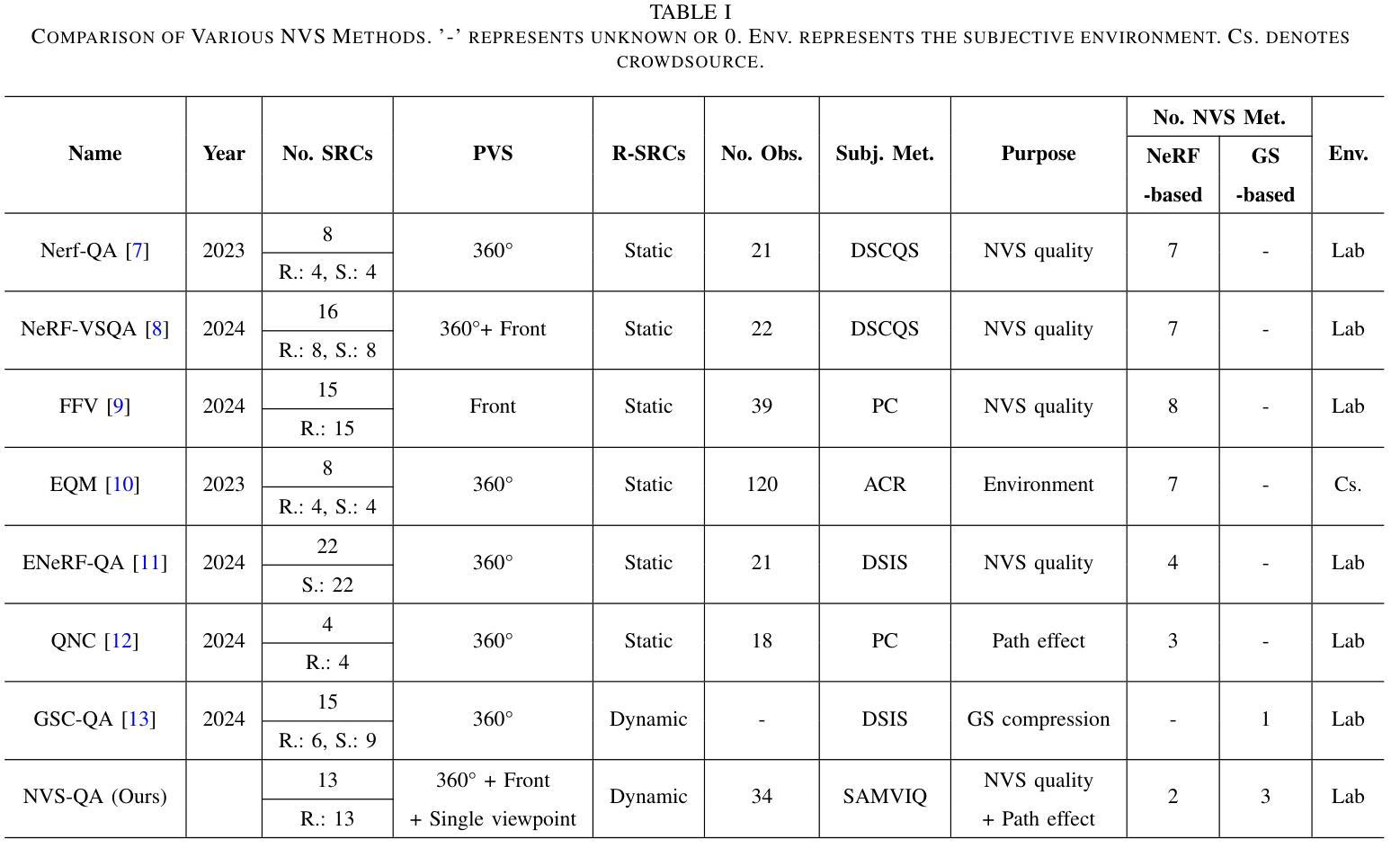

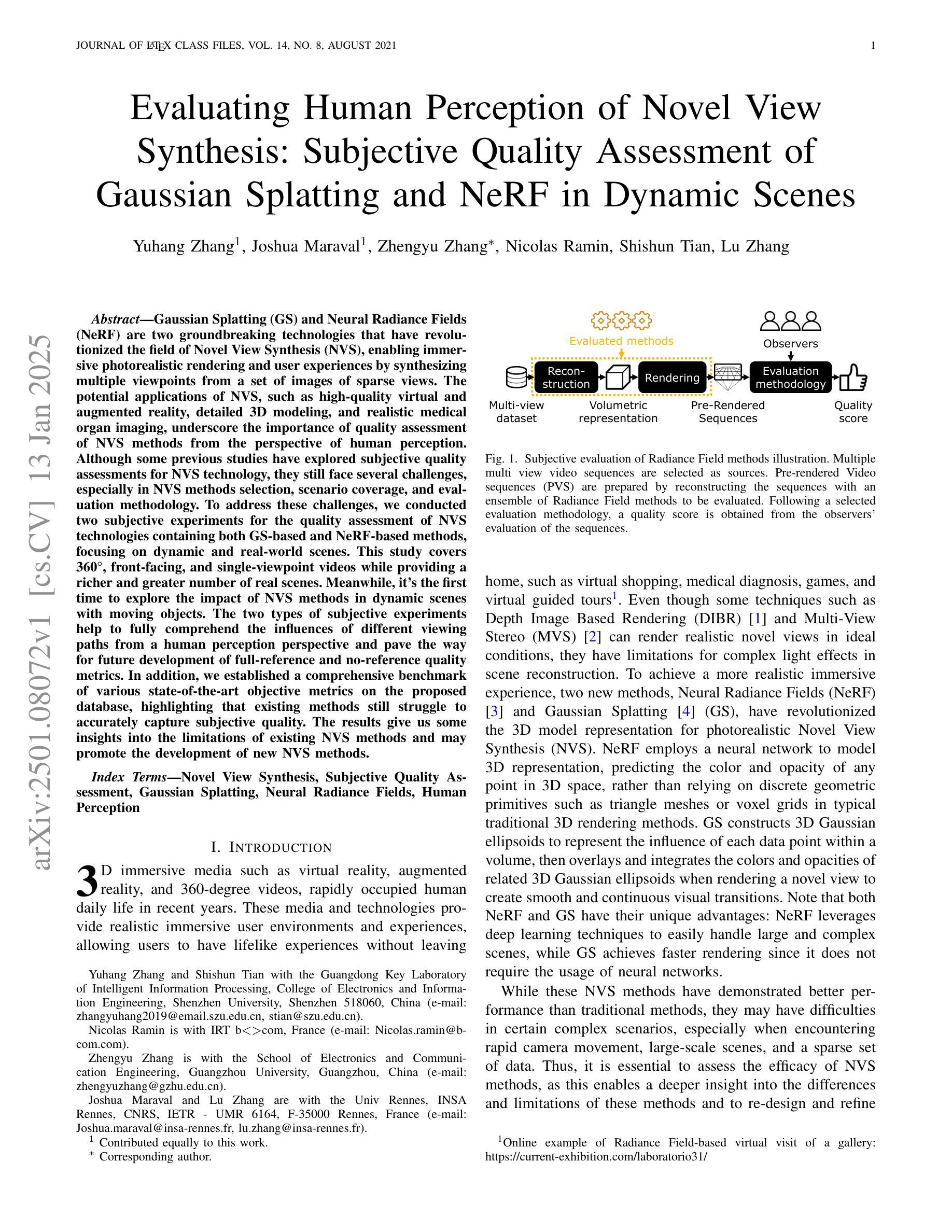

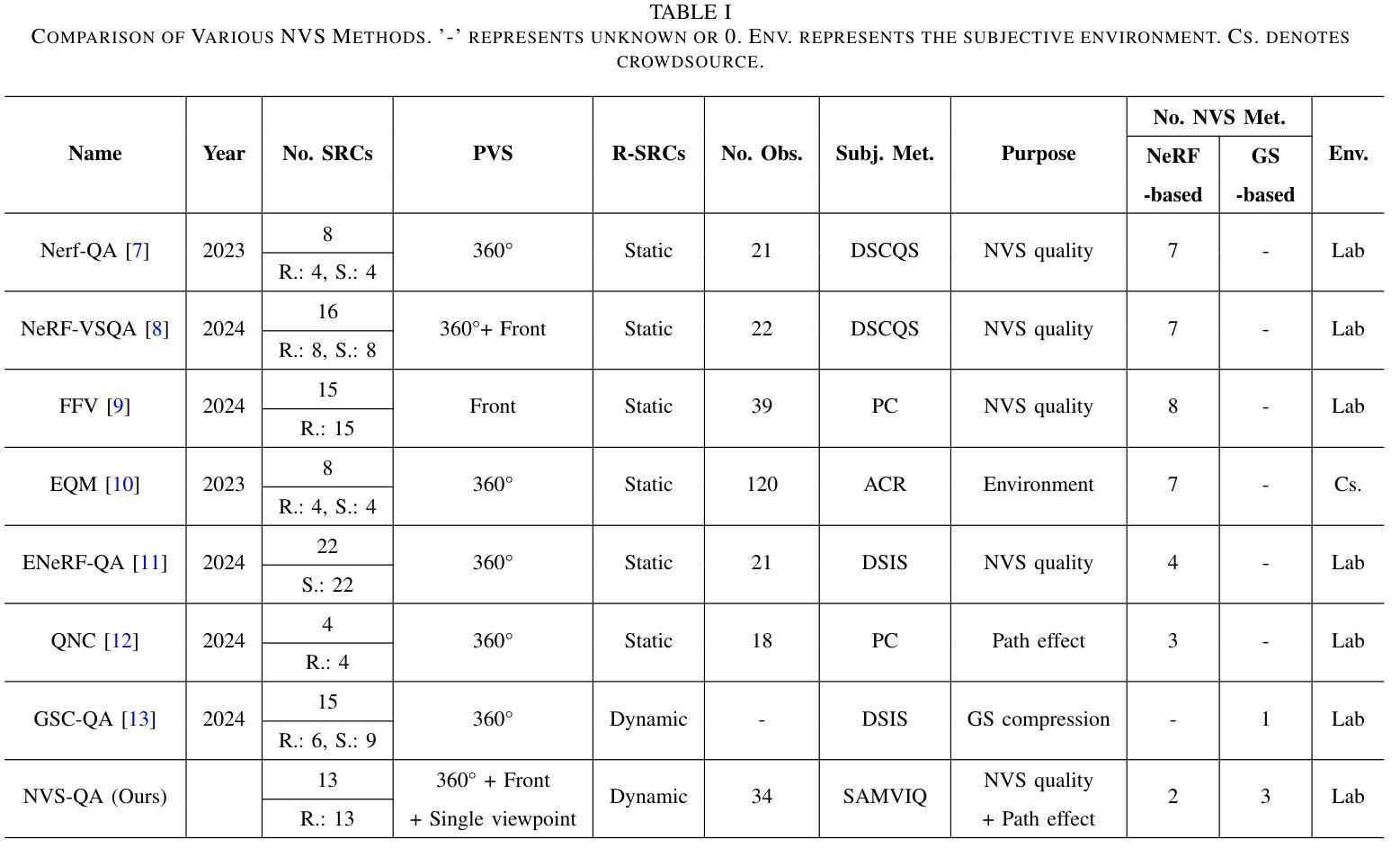

Gaussian Splatting (GS) and Neural Radiance Fields (NeRF) are two groundbreaking technologies that have revolutionized the field of Novel View Synthesis (NVS), enabling immersive photorealistic rendering and user experiences by synthesizing multiple viewpoints from a set of images of sparse views. The potential applications of NVS, such as high-quality virtual and augmented reality, detailed 3D modeling, and realistic medical organ imaging, underscore the importance of quality assessment of NVS methods from the perspective of human perception. Although some previous studies have explored subjective quality assessments for NVS technology, they still face several challenges, especially in NVS method selection, scenario coverage, and evaluation methodology. To address these challenges, we conducted two subjective experiments for the quality assessment of NVS technologies containing both GS-based and NeRF-based methods, focusing on dynamic and real-world scenes. This study covers 360°, front-facing, and single-viewpoint videos while providing a richer and greater number of real scenes. Meanwhile, it is the first study to explore the impact of NVS methods in dynamic scenes with moving objects. The two types of subjective experiments help to fully comprehend the influences of different viewing paths from a human perception perspective and pave the way for future development of full-reference and no-reference quality metrics. In addition, we established a comprehensive benchmark of various state-of-the-art objective metrics on the proposed database, highlighting that existing methods still struggle to accurately capture subjective quality. The results give us some insights into the limitations of existing NVS methods and may promote the development of new NVS methods.
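Subjective experiments like these are usually summarized as a mean opinion score (MOS) per stimulus. The abstract does not give the authors' scoring protocol, so the sketch below is a generic assumption: it averages the raw ratings of each video and reports a 95% confidence interval using the normal approximation (z = 1.96), without the outlier screening a full protocol would typically add. The video names are hypothetical.

```python
import math
from statistics import mean, stdev


def mos_with_ci(ratings, z=1.96):
    """Return (MOS, half-width of the confidence interval) for one stimulus.

    Uses the sample standard deviation and a normal approximation; with fewer
    than two ratings the interval is undefined, so 0.0 is returned.
    """
    m = mean(ratings)
    if len(ratings) < 2:
        return m, 0.0
    return m, z * stdev(ratings) / math.sqrt(len(ratings))


def summarize(ratings_per_video):
    """Map each (hypothetical) video name to its (MOS, CI half-width)."""
    return {name: mos_with_ci(r) for name, r in ratings_per_video.items()}
```

Benchmarking an objective metric then amounts to correlating its per-video predictions with these MOS values.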

Summary

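This paper examines Gaussian Splatting (GS) and Neural Radiance Fields (NeRF) for novel view synthesis (NVS) from the standpoint of perceived quality. Two subjective experiments evaluate GS-based and NeRF-based methods on dynamic, real-world scenes, and a benchmark of state-of-the-art objective metrics on the resulting database shows that existing metrics still struggle to capture subjective quality, revealing limitations of current methods.

Key Takeaways

- Gaussian Splatting (GS) and Neural Radiance Fields (NeRF) have transformed novel view synthesis (NVS), enabling immersive, photorealistic rendering and user experiences.

- NVS has wide applications, including high-quality virtual and augmented reality, detailed 3D modeling, and realistic medical organ imaging.

- Assessing NVS quality from the perspective of human perception is therefore important.

- Prior subjective quality studies of NVS still face challenges in method selection, scenario coverage, and evaluation methodology.

- Two subjective experiments comprehensively evaluate GS-based and NeRF-based NVS methods on dynamic, real-world scenes covering 360°, front-facing, and single-viewpoint videos.

- A benchmark of state-of-the-art objective metrics on the new database shows that existing metrics still struggle to accurately capture subjective quality.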

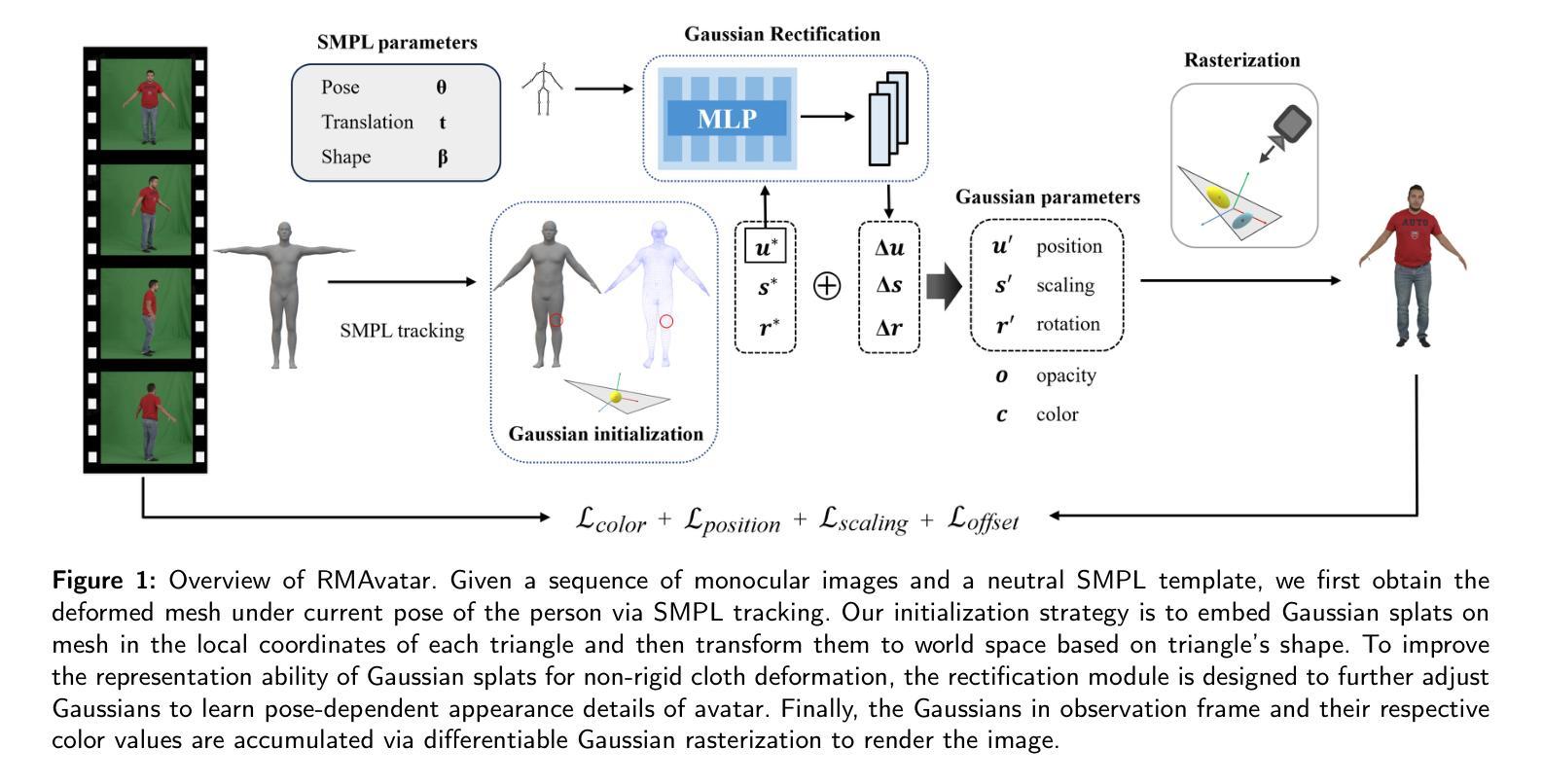

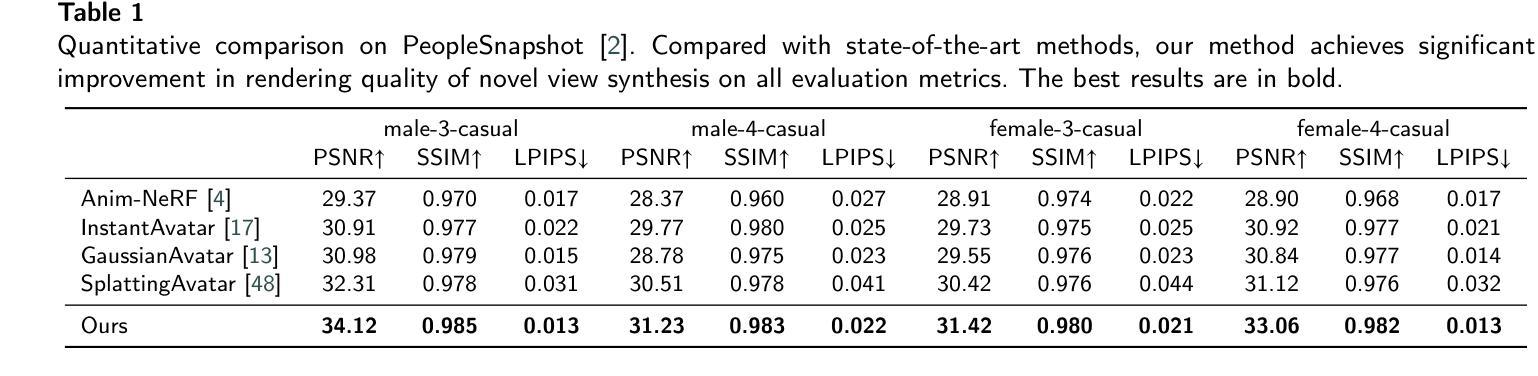

RMAvatar: Photorealistic Human Avatar Reconstruction from Monocular Video Based on Rectified Mesh-embedded Gaussians

Authors:Sen Peng, Weixing Xie, Zilong Wang, Xiaohu Guo, Zhonggui Chen, Baorong Yang, Xiao Dong

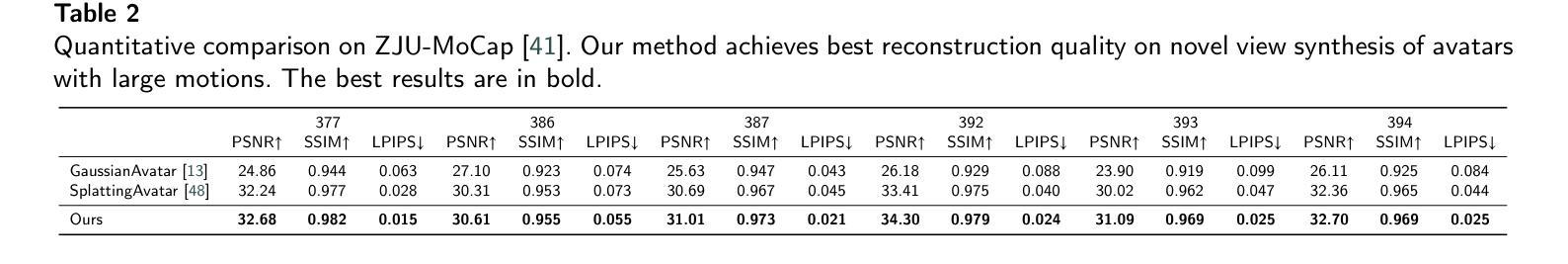

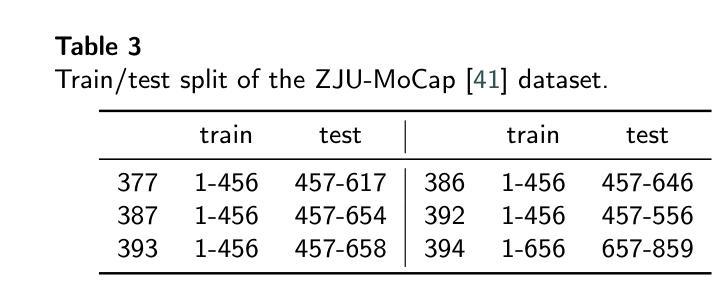

We introduce RMAvatar, a novel human avatar representation with Gaussian splatting embedded on mesh to learn clothed avatar from a monocular video. We utilize the explicit mesh geometry to represent motion and shape of a virtual human and implicit appearance rendering with Gaussian Splatting. Our method consists of two main modules: Gaussian initialization module and Gaussian rectification module. We embed Gaussians into triangular faces and control their motion through the mesh, which ensures low-frequency motion and surface deformation of the avatar. Due to the limitations of the LBS (linear blend skinning) formula, the human skeleton struggles to control complex non-rigid transformations. We then design a pose-related Gaussian rectification module to learn fine-detailed non-rigid deformations, further improving the realism and expressiveness of the avatar. We conduct extensive experiments on public datasets; RMAvatar shows state-of-the-art performance on both rendering quality and quantitative evaluations. Please see our project page at https://rm-avatar.github.io.
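Embedding Gaussians in triangular faces means each Gaussian's position is tied to its face, so it follows the mesh as the mesh deforms. A minimal sketch, assuming each Gaussian stores fixed barycentric coordinates plus an optional offset along the face normal (both hypothetical parameterization choices, not necessarily RMAvatar's exact formulation):

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(sum(x * x for x in a))
    return tuple(x / n for x in a)


def embedded_center(triangle, bary, normal_offset=0.0):
    """Gaussian center from fixed barycentric coords on a (possibly deformed) triangle.

    triangle: three 3D vertices (v0, v1, v2); bary: (u, v, w) with u + v + w = 1.
    normal_offset: hypothetical signed distance along the unit face normal.
    """
    v0, v1, v2 = triangle
    u, v, w = bary
    point = tuple(u * a + v * b + w * c for a, b, c in zip(v0, v1, v2))
    normal = normalize(cross(sub(v1, v0), sub(v2, v0)))
    return tuple(p + normal_offset * n for p, n in zip(point, normal))
```

Because only the mesh vertices are animated (for example by LBS), every embedded Gaussian moves with its face automatically; the learned pose-dependent rectification described in the abstract would add corrections on top, which this sketch omits.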

Paper and project links

PDF CVM2025

Summary

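RMAvatar is a new human avatar representation that embeds Gaussian Splatting on a mesh to learn a clothed avatar from monocular video. It uses explicit mesh geometry to represent the motion and shape of the virtual human, and Gaussian Splatting for implicit appearance rendering. The method has two main modules: a Gaussian initialization module and a Gaussian rectification module. Gaussians are embedded in the mesh's triangular faces and driven by the mesh, which captures the avatar's low-frequency motion and surface deformation. Because the LBS formula limits the skeleton's ability to control complex non-rigid transformations, a pose-dependent Gaussian rectification module learns fine-grained non-rigid deformations, further improving realism and expressiveness. Experiments on public datasets show state-of-the-art rendering quality and quantitative results.

Key Takeaways

- RMAvatar is a new human avatar representation that embeds Gaussian Splatting on a mesh.

- It combines explicit mesh geometry with implicit appearance rendering.

- It consists of two main modules: Gaussian initialization and Gaussian rectification.

- Gaussians are embedded in triangular faces and driven by the mesh, capturing the avatar's low-frequency motion and surface deformation.

- Because of the limitations of LBS, a pose-dependent Gaussian rectification module learns fine-grained non-rigid deformations.

- RMAvatar achieves state-of-the-art performance in experiments on public datasets.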

SplatMAP: Online Dense Monocular SLAM with 3D Gaussian Splatting

Authors:Yue Hu, Rong Liu, Meida Chen, Andrew Feng, Peter Beerel

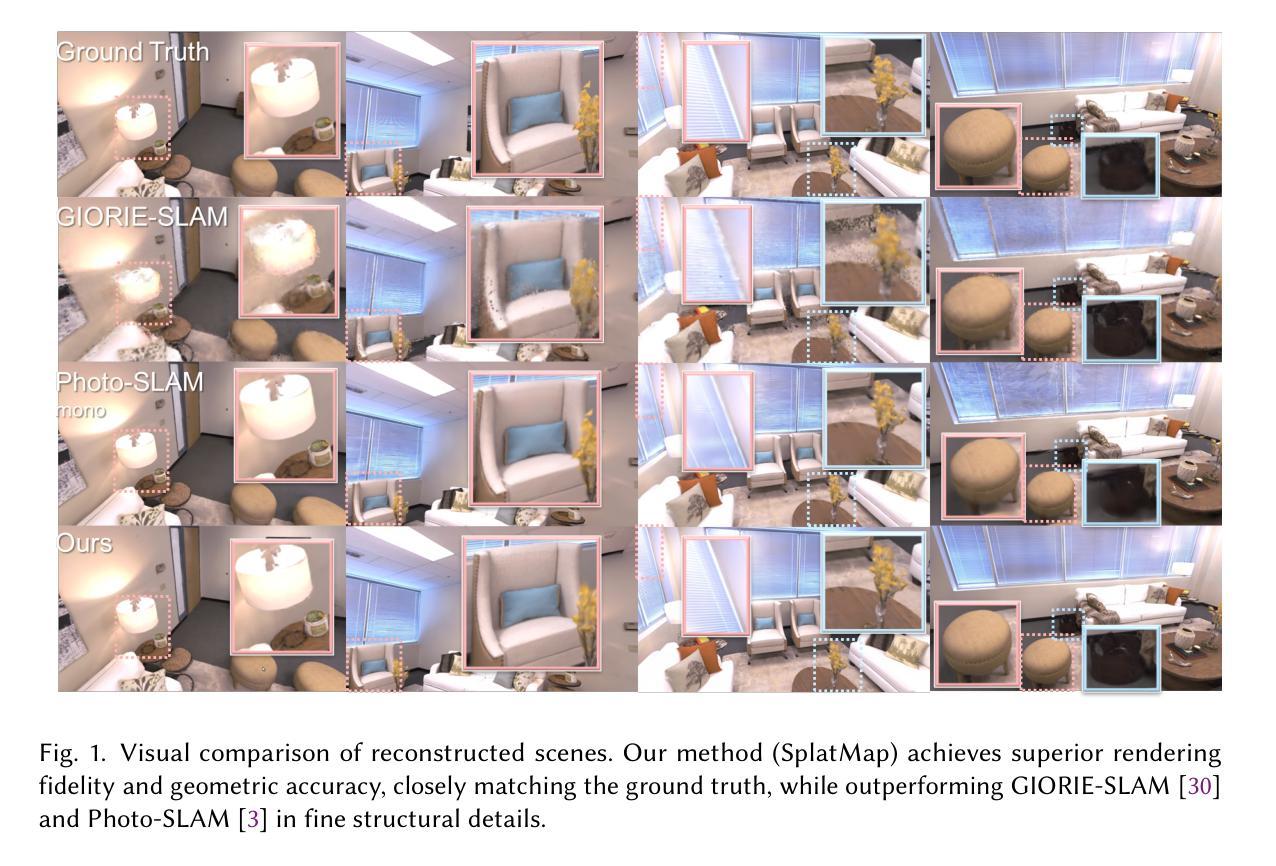

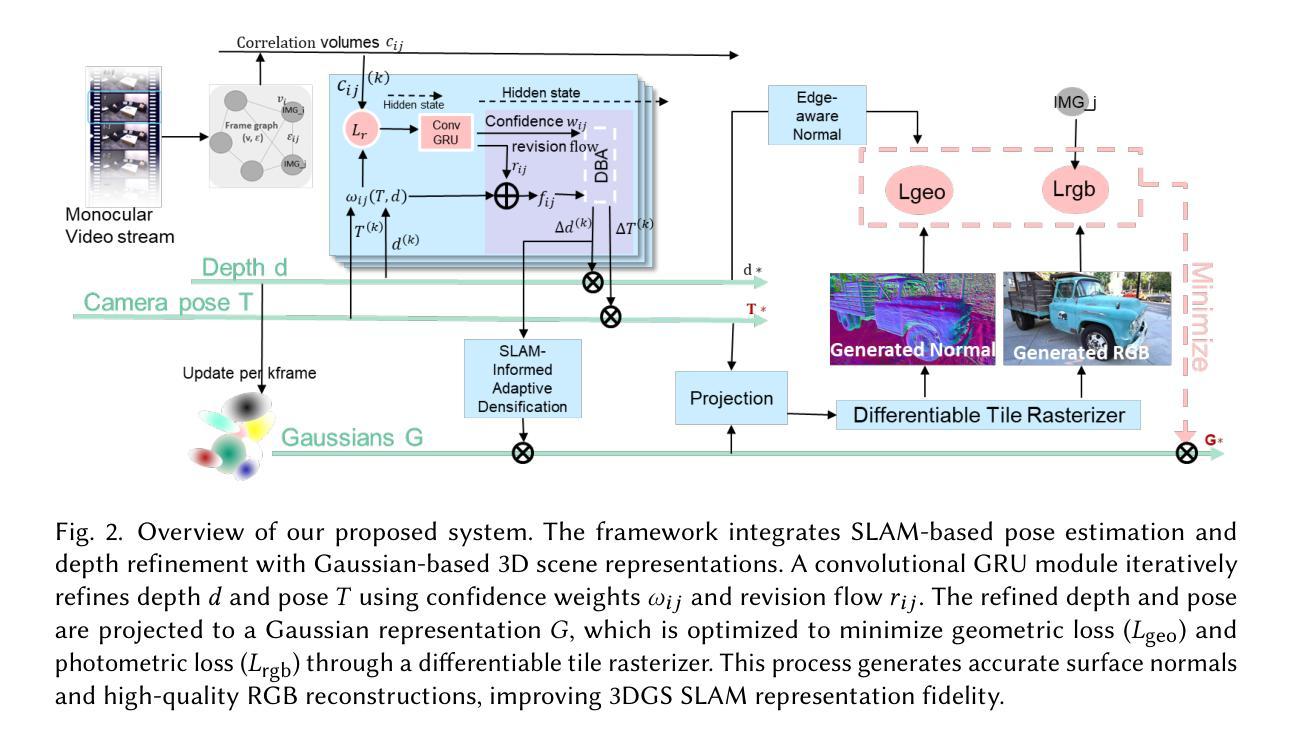

Achieving high-fidelity 3D reconstruction from monocular video remains challenging due to the inherent limitations of traditional methods like Structure-from-Motion (SfM) and monocular SLAM in accurately capturing scene details. While differentiable rendering techniques such as Neural Radiance Fields (NeRF) address some of these challenges, their high computational costs make them unsuitable for real-time applications. Additionally, existing 3D Gaussian Splatting (3DGS) methods often focus on photometric consistency, neglecting geometric accuracy and failing to exploit SLAM’s dynamic depth and pose updates for scene refinement. We propose a framework integrating dense SLAM with 3DGS for real-time, high-fidelity dense reconstruction. Our approach introduces SLAM-Informed Adaptive Densification, which dynamically updates and densifies the Gaussian model by leveraging dense point clouds from SLAM. Additionally, we incorporate Geometry-Guided Optimization, which combines edge-aware geometric constraints and photometric consistency to jointly optimize the appearance and geometry of the 3DGS scene representation, enabling detailed and accurate SLAM mapping reconstruction. Experiments on the Replica and TUM-RGBD datasets demonstrate the effectiveness of our approach, achieving state-of-the-art results among monocular systems. Specifically, our method achieves a PSNR of 36.864, SSIM of 0.985, and LPIPS of 0.040 on Replica, representing improvements of 10.7%, 6.4%, and 49.4%, respectively, over the previous SOTA. On TUM-RGBD, our method outperforms the closest baseline by 10.2%, 6.6%, and 34.7% in the same metrics. These results highlight the potential of our framework in bridging the gap between photometric and geometric dense 3D scene representations, paving the way for practical and efficient monocular dense reconstruction.
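The reported gains are relative changes in standard image metrics. As a hedged sketch, PSNR for images in [0, 1] is 10 * log10(MAX^2 / MSE), and a relative improvement can be computed as below. The abstract does not state its exact formula, so the sign convention for lower-is-better metrics such as LPIPS (improvement = reduction relative to the baseline) is an assumption. SSIM and LPIPS themselves are omitted because they require windowed statistics and a learned network, respectively.

```python
import math


def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB for two equally sized flat pixel lists."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def relative_improvement(new, baseline, higher_is_better=True):
    """Fractional improvement of `new` over `baseline` (0.107 means 10.7%).

    Assumed convention: for higher-is-better metrics (PSNR, SSIM) use
    (new - baseline) / baseline; for lower-is-better metrics (LPIPS) use
    (baseline - new) / baseline.
    """
    if higher_is_better:
        return (new - baseline) / baseline
    return (baseline - new) / baseline
```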

Summary

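SplatMAP is a framework for real-time, high-fidelity dense reconstruction from monocular video that integrates dense SLAM with 3D Gaussian Splatting (3DGS). SLAM-Informed Adaptive Densification uses the dense point clouds, depth, and pose updates from SLAM to dynamically update and densify the Gaussian model, while Geometry-Guided Optimization combines edge-aware geometric constraints with photometric consistency to jointly optimize appearance and geometry. On Replica and TUM-RGBD, the method achieves state-of-the-art results among monocular systems (PSNR 36.864, SSIM 0.985, and LPIPS 0.040 on Replica).

Key Takeaways

- The method integrates dense SLAM with 3D Gaussian Splatting for real-time, high-fidelity 3D reconstruction.

- Through SLAM-Informed Adaptive Densification, it exploits SLAM's dynamic depth and pose updates to capture scene details more precisely.

- Geometry-Guided Optimization jointly uses geometric constraints and photometric consistency to improve both the appearance and the geometry of the scene.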

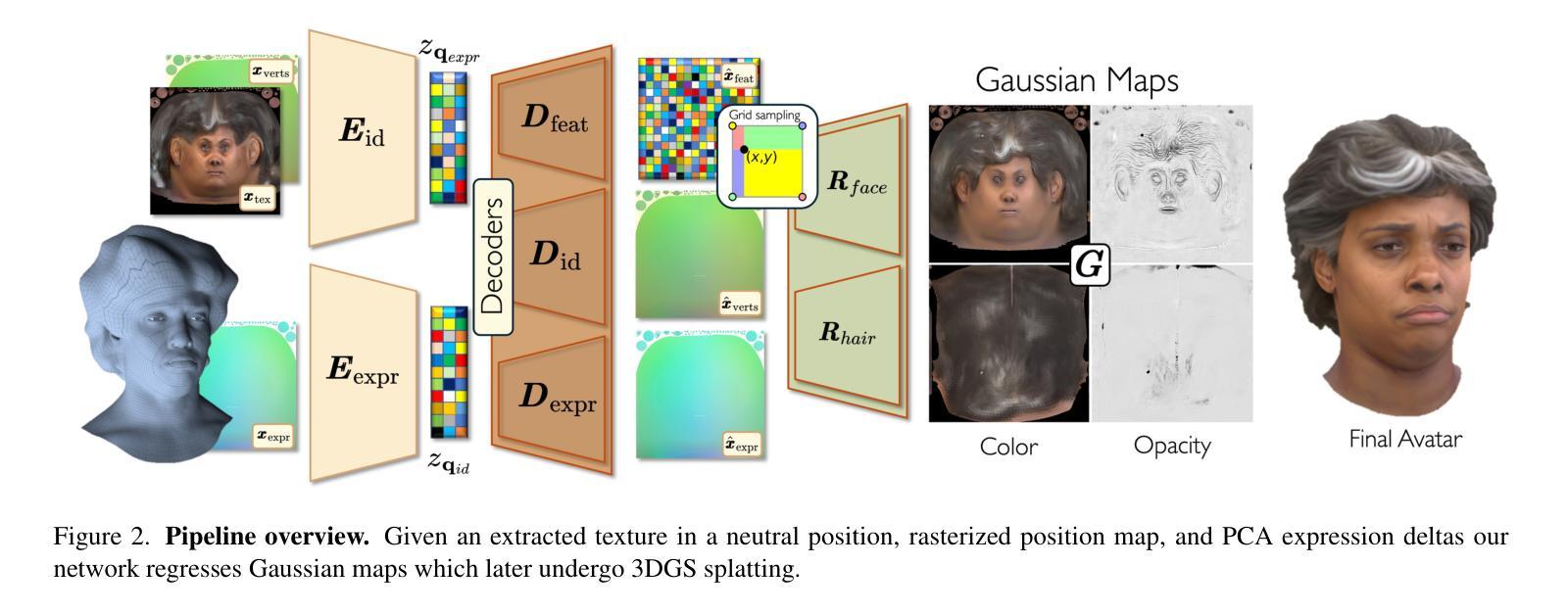

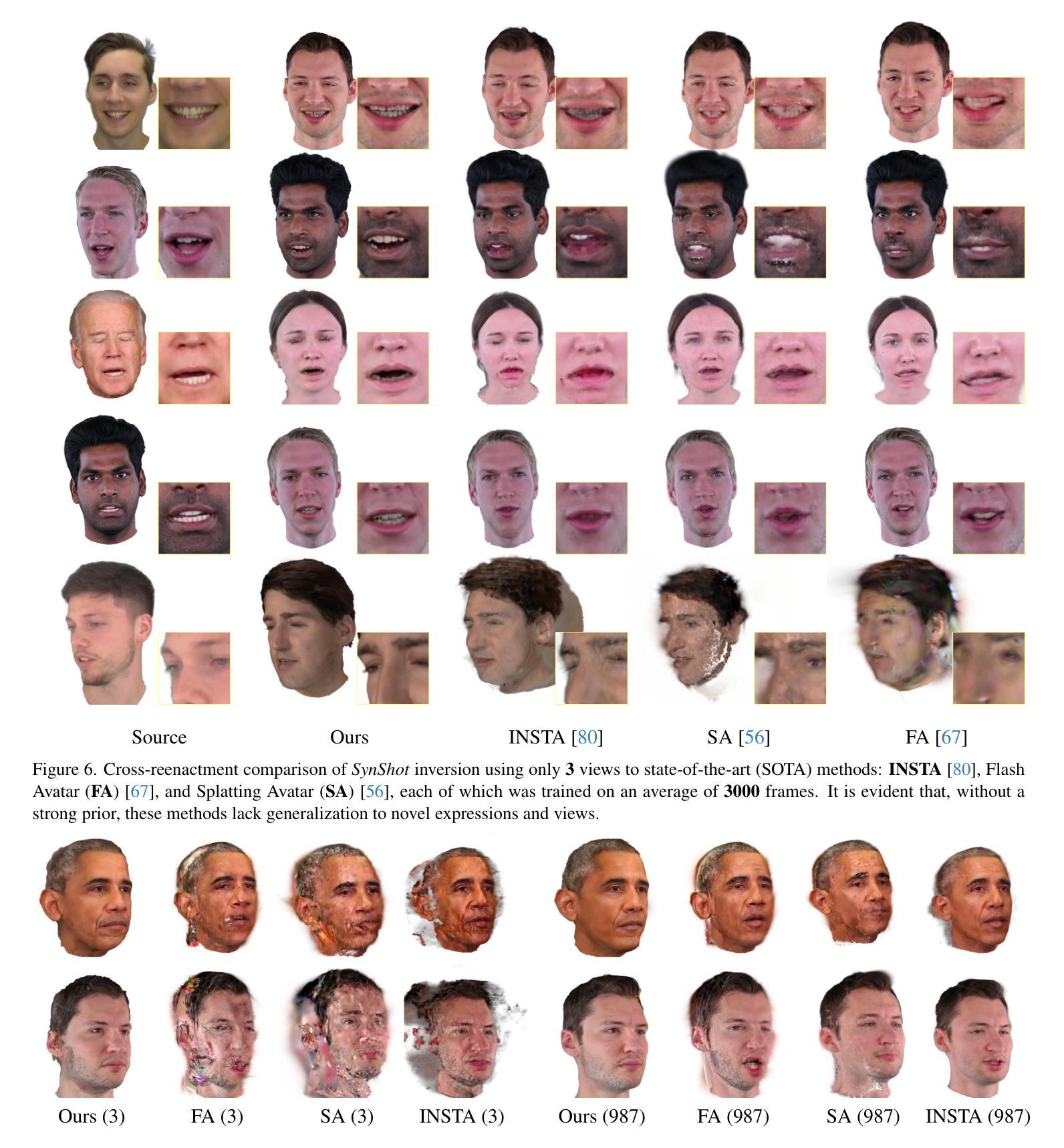

Synthetic Prior for Few-Shot Drivable Head Avatar Inversion

Authors:Wojciech Zielonka, Stephan J. Garbin, Alexandros Lattas, George Kopanas, Paulo Gotardo, Thabo Beeler, Justus Thies, Timo Bolkart

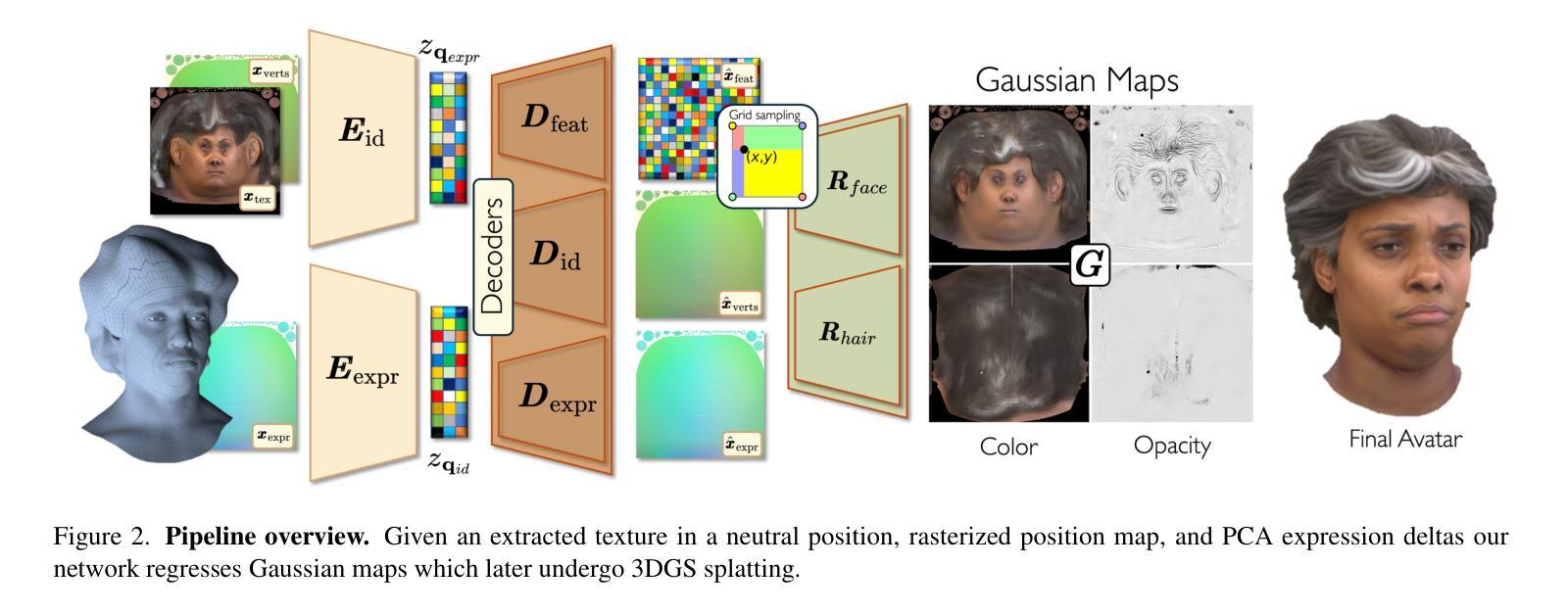

We present SynShot, a novel method for the few-shot inversion of a drivable head avatar based on a synthetic prior. We tackle two major challenges. First, training a controllable 3D generative network requires a large number of diverse sequences, for which pairs of images and high-quality tracked meshes are not always available. Second, state-of-the-art monocular avatar models struggle to generalize to new views and expressions, lacking a strong prior and often overfitting to a specific viewpoint distribution. Inspired by machine learning models trained solely on synthetic data, we propose a method that learns a prior model from a large dataset of synthetic heads with diverse identities, expressions, and viewpoints. With few input images, SynShot fine-tunes the pretrained synthetic prior to bridge the domain gap, modeling a photorealistic head avatar that generalizes to novel expressions and viewpoints. We model the head avatar using 3D Gaussian splatting and a convolutional encoder-decoder that outputs Gaussian parameters in UV texture space. To account for the different modeling complexities over parts of the head (e.g., skin vs hair), we embed the prior with explicit control for upsampling the number of per-part primitives. Compared to state-of-the-art monocular methods that require thousands of real training images, SynShot significantly improves novel view and expression synthesis.
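Predicting Gaussian parameters in UV texture space means each valid texel of the decoder's output map becomes one Gaussian, and upsampling a part (such as hair) increases its primitive count. The abstract does not specify the upsampling scheme, so the sketch below assumes a simple one: each texel of a part with factor s is subdivided into an s × s grid of sample points. The part labels and factors are hypothetical.

```python
def uv_samples(part_map, upsample):
    """Return (part, u, v) sample points, one per Gaussian, with u, v in [0, 1].

    part_map: H x W grid of part labels (None marks texels outside the head).
    upsample: dict mapping a part label to its integer subdivision factor (default 1).
    """
    height, width = len(part_map), len(part_map[0])
    samples = []
    for i, row in enumerate(part_map):
        for j, part in enumerate(row):
            if part is None:
                continue
            s = upsample.get(part, 1)
            for a in range(s):
                for b in range(s):
                    # Sub-texel centers inside texel (i, j), normalized to [0, 1].
                    u = (j + (b + 0.5) / s) / width
                    v = (i + (a + 0.5) / s) / height
                    samples.append((part, u, v))
    return samples
```

In a full model each sample point would be paired with the decoder's predicted Gaussian attributes at that UV location; only the sampling layout is shown here.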

Paper and project links

PDF Website https://zielon.github.io/synshot/

Summary

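SynShot performs few-shot inversion of a drivable head avatar based on a synthetic prior. It addresses two challenges: the scarcity of diverse training sequences with paired images and high-quality tracked meshes, and the poor generalization of monocular avatar models to new views and expressions. It first learns a prior from a large dataset of synthetic heads with diverse identities, expressions, and viewpoints, then fine-tunes this pretrained prior on a few input images to bridge the domain gap, producing a photorealistic head avatar that generalizes to novel expressions and viewpoints. The avatar is modeled with 3D Gaussian Splatting and a convolutional encoder-decoder that outputs Gaussian parameters in UV texture space; compared with state-of-the-art monocular methods, SynShot significantly improves novel view and expression synthesis.

Key Takeaways

- SynShot is a new method based on a synthetic prior for few-shot inversion of drivable head avatars.

- It addresses two main challenges: the lack of diverse training data with images and high-quality tracked meshes, and the limited generalization of existing models to new views and expressions.

- It learns a prior model from a large synthetic head dataset covering diverse identities, expressions, and viewpoints.

- It fine-tunes the pretrained synthetic prior on a few input images to bridge the domain gap, yielding a photorealistic head avatar that generalizes to new viewpoints and expressions.

- The head avatar is modeled with 3D Gaussian Splatting and a convolutional encoder-decoder that outputs Gaussian parameters in UV texture space.

- To handle the differing modeling complexity of head parts (e.g., skin vs. hair), the prior provides explicit control over upsampling the number of primitives per part.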

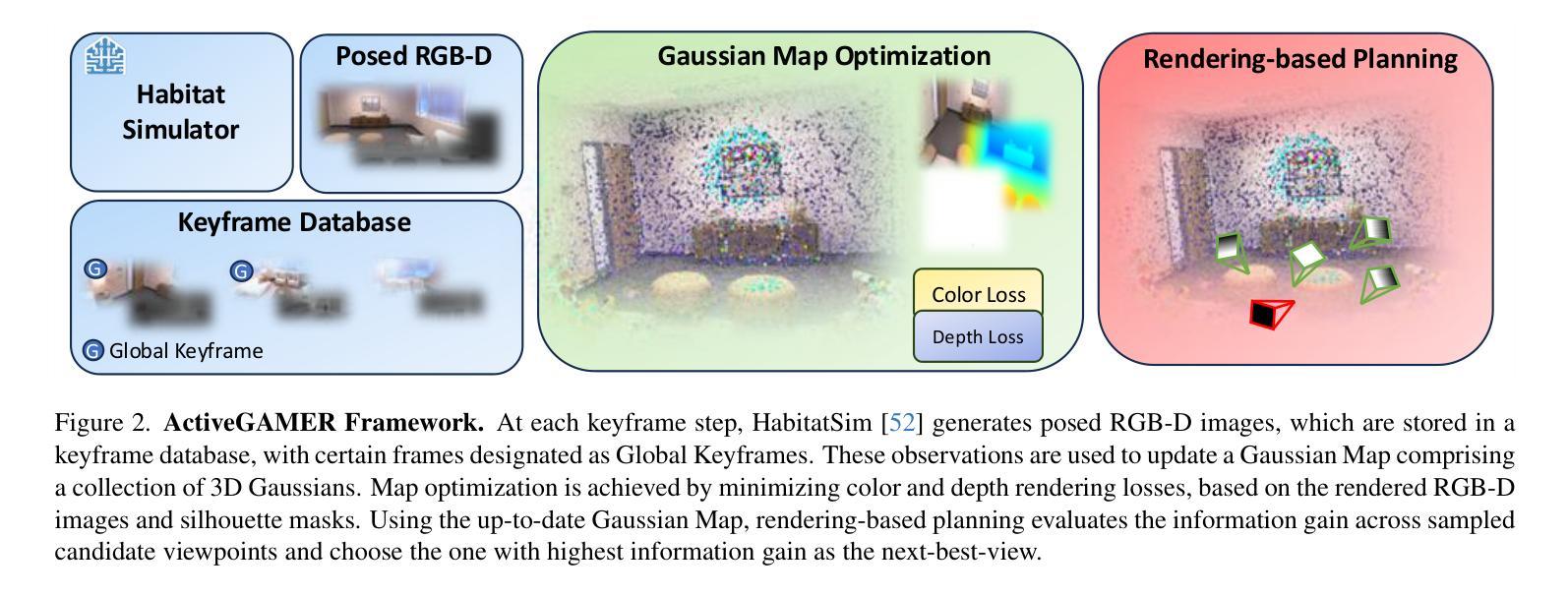

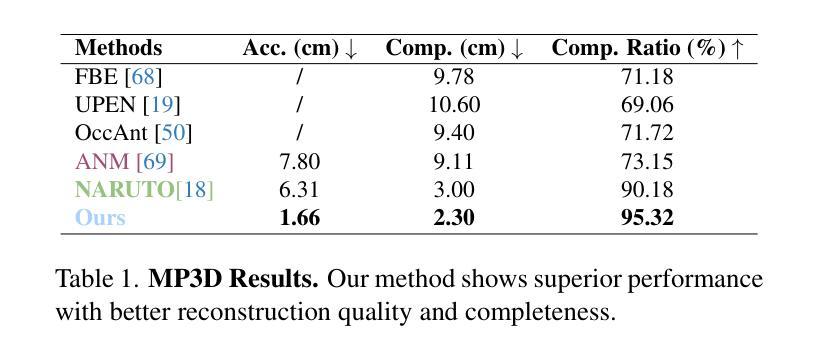

ActiveGAMER: Active GAussian Mapping through Efficient Rendering

Authors:Liyan Chen, Huangying Zhan, Kevin Chen, Xiangyu Xu, Qingan Yan, Changjiang Cai, Yi Xu

We introduce ActiveGAMER, an active mapping system that utilizes 3D Gaussian Splatting (3DGS) to achieve high-quality, real-time scene mapping and exploration. Unlike traditional NeRF-based methods, which are computationally demanding and restrict active mapping performance, our approach leverages the efficient rendering capabilities of 3DGS, allowing effective and efficient exploration in complex environments. The core of our system is a rendering-based information gain module that dynamically identifies the most informative viewpoints for next-best-view planning, enhancing both geometric and photometric reconstruction accuracy. ActiveGAMER also integrates a carefully balanced framework, combining coarse-to-fine exploration, post-refinement, and a global-local keyframe selection strategy to maximize reconstruction completeness and fidelity. Our system autonomously explores and reconstructs environments with state-of-the-art geometric and photometric accuracy and completeness, significantly surpassing existing approaches in both aspects. Extensive evaluations on benchmark datasets such as Replica and MP3D highlight ActiveGAMER’s effectiveness in active mapping tasks.
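Next-best-view planning picks, among candidate poses, the one whose rendering reveals the most unknown content. The abstract does not define ActiveGAMER's information gain, so the sketch below assumes a simple proxy: render the accumulated opacity of the current Gaussian map from each candidate view (the opacity maps are given directly here) and score the view by the fraction of pixels whose opacity falls below a threshold `tau`, i.e. pixels the map does not yet explain. Candidate names and `tau` are hypothetical.

```python
def information_gain(opacity_map, tau=0.5):
    """Fraction of pixels whose rendered accumulated opacity is below tau."""
    pixels = [a for row in opacity_map for a in row]
    unseen = sum(1 for a in pixels if a < tau)
    return unseen / len(pixels)


def next_best_view(candidate_renders, tau=0.5):
    """Return the name of the candidate view with the highest information gain."""
    return max(candidate_renders,
               key=lambda name: information_gain(candidate_renders[name], tau))
```

Because 3DGS renders quickly, scoring many candidate views this way stays cheap, which is the efficiency argument the abstract makes against NeRF-based planners.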

Summary

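ActiveGAMER is an active mapping system that uses 3D Gaussian Splatting (3DGS) for high-quality, real-time scene mapping and exploration. Unlike computationally demanding NeRF-based methods, it exploits the efficient rendering of 3DGS to explore complex environments effectively. At its core, a rendering-based information gain module dynamically identifies the most informative viewpoints for next-best-view planning, improving geometric and photometric reconstruction accuracy. ActiveGAMER combines coarse-to-fine exploration, post-refinement, and a global-local keyframe selection strategy to maximize reconstruction completeness and fidelity. Evaluations on benchmark datasets such as Replica and MP3D show state-of-the-art geometric and photometric accuracy and completeness.

Key Takeaways

- ActiveGAMER uses 3D Gaussian Splatting (3DGS) for real-time scene mapping and efficient exploration.

- Unlike computationally heavy NeRF-based methods, it relies on the efficient rendering of 3DGS to improve active mapping performance.

- Its core is a rendering-based information gain module that dynamically selects the most informative viewpoints for next-best-view planning.

- The system improves the accuracy of both geometric and photometric reconstruction.

- A balanced framework combining coarse-to-fine exploration, post-refinement, and global-local keyframe selection maximizes reconstruction completeness and fidelity.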

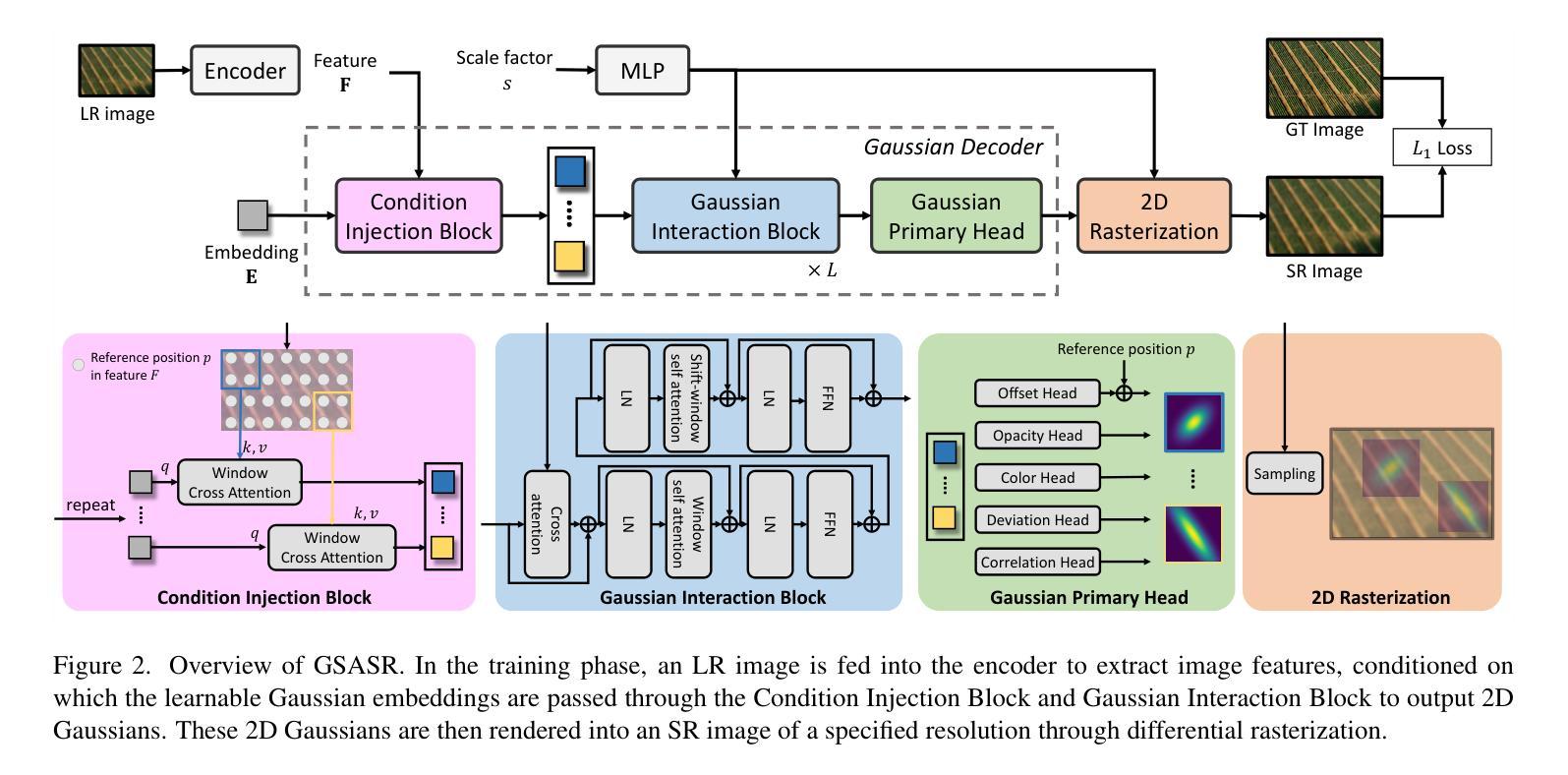

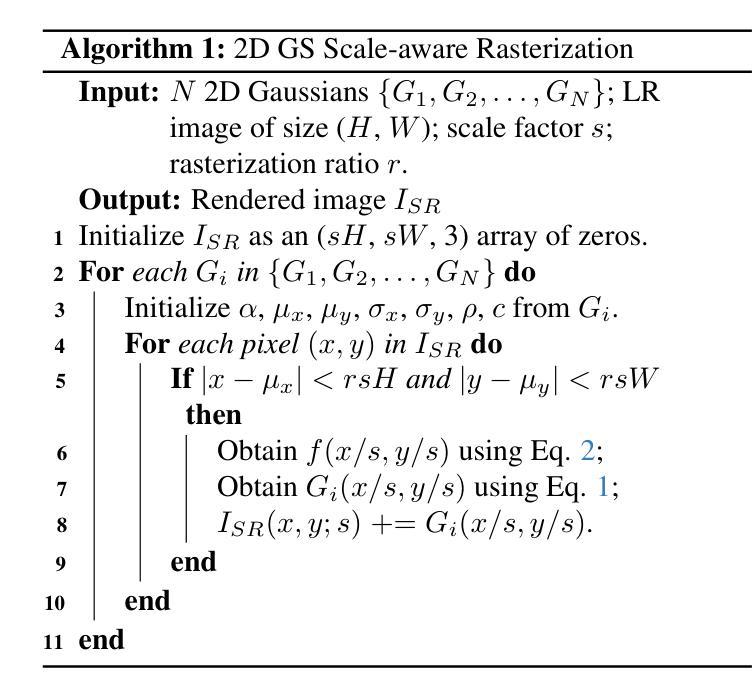

Generalized and Efficient 2D Gaussian Splatting for Arbitrary-scale Super-Resolution

Authors:Du Chen, Liyi Chen, Zhengqiang Zhang, Lei Zhang

Equipped with the continuous representation capability of Multi-Layer Perceptron (MLP), Implicit Neural Representation (INR) has been successfully employed for Arbitrary-scale Super-Resolution (ASR). However, the limited receptive field of the linear layers in MLP restricts the representation capability of INR, while it is computationally expensive to query the MLP numerous times to render each pixel. Recently, Gaussian Splatting (GS) has shown its advantages over INR in both visual quality and rendering speed in 3D tasks, which motivates us to explore whether GS can be employed for the ASR task. However, directly applying GS to ASR is exceptionally challenging because the original GS is an optimization-based method through overfitting each single scene, while in ASR we aim to learn a single model that can generalize to different images and scaling factors. We overcome these challenges by developing two novel techniques. Firstly, to generalize GS for ASR, we elaborately design an architecture to predict the corresponding image-conditioned Gaussians of the input low-resolution image in a feed-forward manner. Secondly, we implement an efficient differentiable 2D GPU/CUDA-based scale-aware rasterization to render super-resolved images by sampling discrete RGB values from the predicted contiguous Gaussians. Via end-to-end training, our optimized network, namely GSASR, can perform ASR for any image and unseen scaling factors. Extensive experiments validate the effectiveness of our proposed method. The project page can be found at https://mt-cly.github.io/GSASR.github.io/.
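Because the predicted Gaussians live in continuous image coordinates, the same set can be rasterized at any output resolution simply by evaluating them at the new pixel centers. A minimal CPU sketch, assuming normalized [0, 1] coordinates, 2×2 covariances, and a normalized weighted sum of colors; GSASR's CUDA rasterizer is scale-aware and its exact blending is not given in the abstract, so these choices are assumptions.

```python
import math


def gaussian_weight(px, py, g):
    """Opacity-scaled 2D Gaussian density (unnormalized) at point (px, py)."""
    dx, dy = px - g["mean"][0], py - g["mean"][1]
    a, b, c = g["cov"]  # covariance [[a, b], [b, c]]
    det = a * c - b * b
    ia, ib, ic = c / det, -b / det, a / det  # entries of the inverse covariance
    q = ia * dx * dx + 2 * ib * dx * dy + ic * dy * dy
    return g["opacity"] * math.exp(-0.5 * q)


def render(gaussians, height, width, eps=1e-8):
    """Rasterize the same Gaussians at any resolution by sampling pixel centers."""
    image = []
    for i in range(height):
        row = []
        for j in range(width):
            px, py = (j + 0.5) / width, (i + 0.5) / height
            wsum, csum = 0.0, [0.0, 0.0, 0.0]
            for g in gaussians:
                w = gaussian_weight(px, py, g)
                wsum += w
                for k in range(3):
                    csum[k] += w * g["color"][k]
            row.append(tuple(ck / (wsum + eps) for ck in csum))
        image.append(row)
    return image
```

Calling `render` with the same Gaussians and two different output sizes yields two super-resolved images at different scales without retraining, which is the property that makes the representation attractive for arbitrary-scale SR.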

Summary

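Implicit Neural Representation (INR), built on the continuous representation capability of Multi-Layer Perceptrons (MLPs), has been successfully applied to Arbitrary-scale Super-Resolution (ASR). However, the limited receptive field of the MLP's linear layers restricts INR's representation capability, and querying the MLP many times to render each pixel is computationally expensive. Gaussian Splatting (GS) has recently shown advantages in visual quality and rendering speed in 3D tasks, motivating this study of whether GS can be used for ASR. The authors propose two techniques: first, to generalize GS to ASR, a feed-forward architecture that predicts image-conditioned Gaussians for the input low-resolution image; second, an efficient, differentiable, GPU/CUDA-based, scale-aware 2D rasterizer that renders super-resolved images by sampling discrete RGB values from the predicted continuous Gaussians. Trained end to end, the resulting network, GSASR, performs ASR on any image and unseen scaling factors, and experiments validate its effectiveness.

Key Takeaways

- INR has been successfully applied to ASR, but it is limited by the receptive field of the MLP's linear layers and by the cost of querying the MLP for every pixel.

- GS offers advantages in visual quality and rendering speed and has the potential to improve ASR.

- Applying GS to ASR requires overcoming a mismatch: the original GS is optimized per scene, whereas ASR needs a single model that generalizes across images and scaling factors.

- The study addresses this with a feed-forward architecture that predicts Gaussians and an efficient differentiable rasterizer.

- Trained end to end, the network performs ASR on any image and on unseen scaling factors.

- Experiments validate the effectiveness of the method.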

F3D-Gaus: Feed-forward 3D-aware Generation on ImageNet with Cycle-Consistent Gaussian Splatting

Authors:Yuxin Wang, Qianyi Wu, Dan Xu

This paper tackles the problem of generalizable 3D-aware generation from monocular datasets, e.g., ImageNet. The key challenge of this task is learning a robust 3D-aware representation without multi-view or dynamic data, while ensuring consistent texture and geometry across different viewpoints. Although some baseline methods are capable of 3D-aware generation, the quality of the generated images still lags behind state-of-the-art 2D generation approaches, which excel in producing high-quality, detailed images. To address this severe limitation, we propose a novel feed-forward pipeline based on pixel-aligned Gaussian Splatting, coined as F3D-Gaus, which can produce more realistic and reliable 3D renderings from monocular inputs. In addition, we introduce a self-supervised cycle-consistent constraint to enforce cross-view consistency in the learned 3D representation. This training strategy naturally allows aggregation of multiple aligned Gaussian primitives and significantly alleviates the interpolation limitations inherent in single-view pixel-aligned Gaussian Splatting. Furthermore, we incorporate video model priors to perform geometry-aware refinement, enhancing the generation of fine details in wide-viewpoint scenarios and improving the model’s capability to capture intricate 3D textures. Extensive experiments demonstrate that our approach not only achieves high-quality, multi-view consistent 3D-aware generation from monocular datasets, but also significantly improves training and inference efficiency.
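In pixel-aligned Gaussian Splatting, the network predicts one Gaussian per input pixel, and its center is obtained by back-projecting that pixel along its camera ray to a predicted depth. A hedged sketch of this unprojection step with a pinhole camera, assuming pixel-center coordinates (u + 0.5, v + 0.5) and a camera-space convention with z pointing forward; the intrinsics are hypothetical, and the other Gaussian attributes (scale, rotation, opacity, color) are omitted.

```python
def unproject(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at the given depth into camera coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def pixel_aligned_centers(depth_map, fx, fy, cx, cy):
    """One Gaussian center per pixel, in row-major order, from a predicted depth map."""
    centers = []
    for v, row in enumerate(depth_map):
        for u, d in enumerate(row):
            centers.append(unproject(u + 0.5, v + 0.5, d, fx, fy, cx, cy))
    return centers
```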

Paper and project links

PDF Project Page: https://w-ted.github.io/publications/F3D-Gaus

Summary

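This paper studies generalizable 3D-aware generation from monocular datasets such as ImageNet. The core challenge is learning a robust 3D representation without multi-view or dynamic data while keeping texture and geometry consistent across viewpoints. The authors propose F3D-Gaus, a feed-forward pipeline based on pixel-aligned Gaussian Splatting that produces more realistic and reliable 3D renderings. To improve performance, they add a self-supervised cycle-consistency constraint and incorporate video model priors for geometry-aware refinement. Experiments show high-quality, multi-view consistent 3D-aware generation along with significantly improved training and inference efficiency.

Key Takeaways

- The paper addresses 3D-aware generation from monocular datasets, learning robust 3D representations without multi-view or dynamic data.

- It proposes F3D-Gaus, a feed-forward pipeline based on pixel-aligned Gaussian Splatting that produces more realistic and reliable 3D renderings.

- A self-supervised cycle-consistency constraint enforces cross-view consistency of the learned 3D representation.

- Video model priors enable geometry-aware refinement, generating finer details in wide-viewpoint scenarios and improving the capture of intricate 3D textures.

- Extensive experiments show high-quality, multi-view consistent 3D-aware generation and improved training and inference efficiency.

- By naturally allowing multiple aligned Gaussian primitives to be aggregated, F3D-Gaus alleviates the interpolation limitations inherent in single-view pixel-aligned Gaussian Splatting.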

MapGS: Generalizable Pretraining and Data Augmentation for Online Mapping via Novel View Synthesis

Authors:Hengyuan Zhang, David Paz, Yuliang Guo, Xinyu Huang, Henrik I. Christensen, Liu Ren

Online mapping reduces the reliance of autonomous vehicles on high-definition (HD) maps, significantly enhancing scalability. However, recent advancements often overlook cross-sensor configuration generalization, leading to performance degradation when models are deployed on vehicles with different camera intrinsics and extrinsics. With the rapid evolution of novel view synthesis methods, we investigate the extent to which these techniques can be leveraged to address the sensor configuration generalization challenge. We propose a novel framework leveraging Gaussian splatting to reconstruct scenes and render camera images in target sensor configurations. The target config sensor data, along with labels mapped to the target config, are used to train online mapping models. Our proposed framework on the nuScenes and Argoverse 2 datasets demonstrates a performance improvement of 18% through effective dataset augmentation, achieves faster convergence and efficient training, and exceeds state-of-the-art performance when using only 25% of the original training data. This enables data reuse and reduces the need for laborious data labeling. Project page at https://henryzhangzhy.github.io/mapgs.
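Rendering for a target sensor configuration amounts to choosing the target camera's intrinsics and extrinsics when splatting the reconstructed scene. The pinhole projection below is a hedged sketch of where a world point lands in a source versus a target camera; it assumes world-to-camera extrinsics (R, t) and ignores lens distortion. All camera parameters are hypothetical, and MapGS's own label transfer is not shown.

```python
def project(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel (u, v); return None if it is behind the camera.

    R (3x3 nested lists) and t (length 3) map world to camera coordinates:
    X_cam = R @ X_world + t. The camera looks along +z.
    """
    x, y, z = (sum(R[i][k] * point_world[k] for k in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)
```

The same world point lands on different pixels once the focal length, principal point, or mounting pose changes, which is exactly the distribution shift that degrades online mapping models across vehicles.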

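Summary

Online mapping reduces autonomous vehicles' reliance on high-definition (HD) maps and significantly improves scalability. However, recent work often overlooks generalization across sensor configurations, so performance degrades when models are deployed on vehicles with different camera intrinsics and extrinsics. Given the rapid progress in novel view synthesis, this paper investigates how far these techniques can address the sensor configuration generalization challenge. It proposes a framework that uses Gaussian Splatting to reconstruct scenes and render camera images in target sensor configurations; the rendered target-configuration sensor data, together with labels mapped to the target configuration, are used to train online mapping models. On nuScenes and Argoverse 2, effective dataset augmentation improves performance by 18%, yields faster convergence and efficient training, and exceeds state-of-the-art performance using only 25% of the original training data. This enables data reuse and reduces laborious data labeling.

Key Takeaways

- Online mapping improves the scalability of autonomous vehicles and reduces their reliance on HD maps.

- Current research overlooks generalization across sensor configurations, so models degrade on vehicles with different cameras.

- Novel view synthesis offers a new way to address the sensor configuration generalization problem.

- The proposed framework uses Gaussian Splatting to reconstruct scenes and render camera images in target sensor configurations.

- Online mapping models are trained on target-configuration sensor data and labels.

- On nuScenes and Argoverse 2, dataset augmentation improves performance by 18% and yields faster convergence and efficient training.

- The framework exceeds state-of-the-art performance with only 25% of the original training data, enabling data reuse and reducing labeling effort.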

NVS-SQA: Exploring Self-Supervised Quality Representation Learning for Neurally Synthesized Scenes without References

Authors:Qiang Qu, Yiran Shen, Xiaoming Chen, Yuk Ying Chung, Weidong Cai, Tongliang Liu

Neural View Synthesis (NVS), such as NeRF and 3D Gaussian Splatting, effectively creates photorealistic scenes from sparse viewpoints, typically evaluated by quality assessment methods like PSNR, SSIM, and LPIPS. However, these full-reference methods, which compare synthesized views to reference views, may not fully capture the perceptual quality of neurally synthesized scenes (NSS), particularly due to the limited availability of dense reference views. Furthermore, the challenges in acquiring human perceptual labels hinder the creation of extensive labeled datasets, risking model overfitting and reduced generalizability. To address these issues, we propose NVS-SQA, an NSS quality assessment method to learn no-reference quality representations through self-supervision without reliance on human labels. Traditional self-supervised learning predominantly relies on the “same instance, similar representation” assumption and extensive datasets. However, given that these conditions do not apply in NSS quality assessment, we employ heuristic cues and quality scores as learning objectives, along with a specialized contrastive pair preparation process to improve the effectiveness and efficiency of learning. The results show that NVS-SQA outperforms 17 no-reference methods by a large margin (i.e., on average 109.5% in SRCC, 98.6% in PLCC, and 91.5% in KRCC over the second best) and even exceeds 16 full-reference methods across all evaluation metrics (i.e., 22.9% in SRCC, 19.1% in PLCC, and 18.6% in KRCC over the second best).
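SRCC, PLCC, and KRCC measure how well predicted quality scores agree with subjective scores (Spearman rank, Pearson linear, and Kendall rank correlation, respectively). A dependency-free sketch follows, with two assumptions: PLCC in quality-assessment studies is often computed after a nonlinear logistic mapping, which is omitted here, and the Kendall coefficient below is the simple tau-a variant that does not correct for ties.

```python
import math


def plcc(x, y):
    """Pearson linear correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rankdata(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def srcc(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return plcc(rankdata(x), rankdata(y))


def krcc(x, y):
    """Kendall tau-a: (concordant - discordant) / total pairs; ties count as neither."""
    n = len(x)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            s = (x[i] - x[j]) * (y[i] - y[j])
            score += (s > 0) - (s < 0)
    return score / (n * (n - 1) / 2)
```

SRCC and KRCC depend only on the ordering of scores, so they reward a metric that ranks scenes the way humans do even if its scale is nonlinear; PLCC additionally rewards a linear relationship.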

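Summary

NVS-SQA addresses the quality assessment of neurally synthesized scenes (NSS) by learning no-reference quality representations. It requires no human labels, instead assessing the quality of neurally rendered scenes through self-supervised learning. Traditional self-supervised learning relies on the “same instance, similar representation” assumption and on large datasets, neither of which holds for NSS quality assessment. NVS-SQA therefore uses heuristic cues and quality scores as learning objectives, with a specialized contrastive pair preparation process to improve learning effectiveness and efficiency. Experiments show that NVS-SQA outperforms 17 no-reference methods by a large margin and even exceeds 16 full-reference methods across all evaluation metrics.

Key Takeaways

- NVS-SQA is a no-reference quality assessment method for neurally synthesized scenes (NSS).

- NVS-SQA learns through self-supervision without relying on human labels.

- The “same instance, similar representation” assumption of traditional self-supervised learning does not apply to NSS quality assessment.

- NVS-SQA uses heuristic cues and quality scores as learning objectives.

- A specialized contrastive pair preparation process improves learning effectiveness and efficiency.

- NVS-SQA significantly outperforms 17 no-reference methods (on average 109.5% in SRCC, 98.6% in PLCC, and 91.5% in KRCC over the second best) and even exceeds 16 full-reference methods.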

Arc2Avatar: Generating Expressive 3D Avatars from a Single Image via ID Guidance

Authors:Dimitrios Gerogiannis, Foivos Paraperas Papantoniou, Rolandos Alexandros Potamias, Alexandros Lattas, Stefanos Zafeiriou

Inspired by the effectiveness of 3D Gaussian Splatting (3DGS) in reconstructing detailed 3D scenes within multi-view setups and the emergence of large 2D human foundation models, we introduce Arc2Avatar, the first SDS-based method utilizing a human face foundation model as guidance with just a single image as input. To achieve that, we extend such a model for diverse-view human head generation by fine-tuning on synthetic data and modifying its conditioning. Our avatars maintain a dense correspondence with a human face mesh template, allowing blendshape-based expression generation. This is achieved through a modified 3DGS approach, connectivity regularizers, and a strategic initialization tailored for our task. Additionally, we propose an optional efficient SDS-based correction step to refine the blendshape expressions, enhancing realism and diversity. Experiments demonstrate that Arc2Avatar achieves state-of-the-art realism and identity preservation, effectively addressing color issues by allowing the use of very low guidance, enabled by our strong identity prior and initialization strategy, without compromising detail. Please visit https://arc2avatar.github.io for more resources.
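Dense correspondence with a face mesh template is what makes blendshape animation possible: each template vertex (and any Gaussian bound to it) is displaced by a weighted sum of per-expression offsets. A minimal sketch of standard delta blendshapes, assuming offsets are stored relative to the neutral shape; the expression names are hypothetical, and how Arc2Avatar binds Gaussians to vertices is not shown.

```python
def apply_blendshapes(neutral, deltas, weights):
    """Deform template vertices: v = v_neutral + sum_i w_i * delta_i.

    neutral: list of (x, y, z) vertices.
    deltas: dict mapping an expression name to a per-vertex list of (dx, dy, dz).
    weights: dict mapping an expression name to its blend weight.
    """
    out = []
    for idx, v in enumerate(neutral):
        offset = [0.0, 0.0, 0.0]
        for name, w in weights.items():
            d = deltas[name][idx]
            for k in range(3):
                offset[k] += w * d[k]
        out.append(tuple(v[k] + offset[k] for k in range(3)))
    return out
```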

Paper and project links

PDF Project Page https://arc2avatar.github.io

Summary

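Arc2Avatar generates expressive 3D avatars from a single image. Building on 3D Gaussian Splatting (3DGS), it is the first SDS-based method that uses a human face foundation model as guidance with only a single input image. The foundation model is extended to diverse-view human head generation by fine-tuning on synthetic data and modifying its conditioning. The avatars maintain dense correspondence with a face mesh template, enabling blendshape-based expression generation, and an optional efficient SDS-based correction step refines the blendshape expressions for greater realism and diversity. Arc2Avatar achieves state-of-the-art realism and identity preservation; its strong identity prior and initialization strategy allow very low guidance, which resolves color issues without sacrificing detail.

Key Takeaways

- Arc2Avatar combines 3D Gaussian Splatting (3DGS) with a human face foundation model to generate 3D avatars from a single image.

- By fine-tuning on synthetic data and modifying the conditioning, the model can generate diverse views of the human head.

- Arc2Avatar uses dense correspondence with a face mesh template to generate blendshape-based expressions.

- An optional, efficient SDS-based correction step refines the expressions, improving realism and diversity.

- Arc2Avatar achieves state-of-the-art realism and identity preservation.

- Very low guidance, enabled by the strong identity prior and initialization, resolves color issues while preserving detail.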

DehazeGS: Seeing Through Fog with 3D Gaussian Splatting

Authors:Jinze Yu, Yiqun Wang, Zhengda Lu, Jianwei Guo, Yong Li, Hongxing Qin, Xiaopeng Zhang

Current novel view synthesis tasks primarily rely on high-quality and clear images. However, in foggy scenes, scattering and attenuation can significantly degrade the reconstruction and rendering quality. Although NeRF-based dehazing reconstruction algorithms have been developed, their use of deep fully connected neural networks and per-ray sampling strategies leads to high computational costs. Moreover, NeRF’s implicit representation struggles to recover fine details from hazy scenes. In contrast, recent advancements in 3D Gaussian Splatting achieve high-quality 3D scene reconstruction by explicitly modeling point clouds into 3D Gaussians. In this paper, we propose leveraging the explicit Gaussian representation to explain the foggy image formation process through a physically accurate forward rendering process. We introduce DehazeGS, a method capable of decomposing and rendering a fog-free background from participating media using only multi-view foggy images as input. We model the transmission within each Gaussian distribution to simulate the formation of fog. During this process, we jointly learn the atmospheric light and scattering coefficient while optimizing the Gaussian representation of the hazy scene. In the inference stage, we eliminate the effects of scattering and attenuation on the Gaussians and directly project them onto a 2D plane to obtain a clear view. Experiments on both synthetic and real-world foggy datasets demonstrate that DehazeGS achieves state-of-the-art performance in terms of both rendering quality and computational efficiency.
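Fog formation is commonly described by the atmospheric scattering model I = J * t + A * (1 - t), with transmission t = exp(-beta * d), where J is the clear radiance, A the atmospheric light, beta the scattering coefficient, and d the depth. DehazeGS learns A and beta and models transmission per Gaussian; the sketch below applies the same textbook model to a single color sample, under the assumption that this standard form matches the paper's, and shows that removing fog inverts adding it. The clamp `t_min` is a common safeguard, not taken from the paper.

```python
import math


def transmission(depth, beta):
    """Fraction of scene radiance that survives scattering over `depth`."""
    return math.exp(-beta * depth)


def add_fog(clear_rgb, depth, beta, airlight_rgb):
    """Forward model: I = J * t + A * (1 - t)."""
    t = transmission(depth, beta)
    return tuple(j * t + a * (1 - t) for j, a in zip(clear_rgb, airlight_rgb))


def remove_fog(foggy_rgb, depth, beta, airlight_rgb, t_min=1e-3):
    """Inverse model: J = (I - A * (1 - t)) / t, with t clamped to avoid blow-up."""
    t = max(transmission(depth, beta), t_min)
    return tuple((i - a * (1 - t)) / t for i, a in zip(foggy_rgb, airlight_rgb))
```

Fitting the forward model during training and dropping the fog terms at inference mirrors the two stages described in the abstract.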

Paper and project links

PDF 9 pages, 4 figures

Summary

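This paper proposes DehazeGS, a dehazing method based on 3D Gaussian Splatting. It uses an explicit Gaussian representation to model how foggy images form and, from multi-view foggy input images, decomposes and renders a fog-free background. The method simulates fog formation and optimizes the Gaussian representation while jointly learning the atmospheric light and scattering coefficient. At inference, it removes the scattering and attenuation effects on the Gaussians and projects them directly onto the 2D plane to obtain a clear view. Experiments show that DehazeGS achieves state-of-the-art rendering quality and computational efficiency.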

Key Takeaways

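- Current view synthesis tasks rely mainly on high-quality, clear images, but in foggy scenes scattering and attenuation severely degrade reconstruction and rendering quality.

- NeRF-based dehazing reconstruction algorithms exist, but their deep fully connected networks and per-ray sampling strategies incur high computational cost, and they struggle to recover fine detail from foggy scenes.

- 3D Gaussian Splatting achieves high-quality 3D scene reconstruction by explicitly modeling point clouds as 3D Gaussians.

- This paper uses the explicit Gaussian representation to explain foggy image formation through a physically accurate forward rendering process.

- DehazeGS takes multi-view foggy images as input, simulates fog formation while optimizing the Gaussian representation, and decomposes and renders a fog-free background.

- DehazeGS jointly learns the atmospheric light and scattering coefficient during optimization; at inference it removes the scattering and attenuation effects on the Gaussians and projects them directly onto the 2D plane to obtain a clear view.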
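The fog formation that DehazeGS models can be illustrated with the classic atmospheric scattering model: hazy = J·t + A·(1 − t), with transmission t = exp(−β·d), where J is the clear radiance, A the atmospheric light, β the scattering coefficient, and d the depth. The abstract only states that transmission is modeled per Gaussian, so treating it with this exact form is an assumption. The minimal sketch below applies the model to a single pixel value and inverts it, mirroring the idea of removing scattering and attenuation at inference. All function names are hypothetical.

```python
import math


def transmission(beta: float, depth: float) -> float:
    """Beer-Lambert transmission t = exp(-beta * depth) (assumed form)."""
    return math.exp(-beta * depth)


def add_fog(clear: float, atmospheric_light: float, beta: float, depth: float) -> float:
    """Forward model: the clear radiance is attenuated by t and the
    atmospheric light A fills in the remaining (1 - t)."""
    t = transmission(beta, depth)
    return clear * t + atmospheric_light * (1.0 - t)


def remove_fog(hazy: float, atmospheric_light: float, beta: float, depth: float,
               t_min: float = 1e-3) -> float:
    """Invert the forward model: clear = (hazy - A * (1 - t)) / t.
    t is clamped to t_min so that very distant points do not blow up."""
    t = max(transmission(beta, depth), t_min)
    return (hazy - atmospheric_light * (1.0 - t)) / t


# Usage: fog a pixel with A = 0.9, beta = 0.5 at depth 2, then recover it.
hazy_pixel = add_fog(0.2, 0.9, 0.5, 2.0)
recovered_pixel = remove_fog(hazy_pixel, 0.9, 0.5, 2.0)
print(hazy_pixel, recovered_pixel)
```

As depth grows, t approaches 0 and the hazy value approaches A, which is why far-away regions look uniformly gray in fog; in DehazeGS, A and β are learned rather than given.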


HeadGAP: Few-Shot 3D Head Avatar via Generalizable Gaussian Priors

Authors:Xiaozheng Zheng, Chao Wen, Zhaohu Li, Weiyi Zhang, Zhuo Su, Xu Chang, Yang Zhao, Zheng Lv, Xiaoyuan Zhang, Yongjie Zhang, Guidong Wang, Lan Xu

In this paper, we present a novel 3D head avatar creation approach capable of generalizing from few-shot in-the-wild data with high fidelity and robust animatability. Given the under-constrained nature of this problem, incorporating prior knowledge is essential. Therefore, we propose a framework comprising prior learning and avatar creation phases. The prior learning phase leverages 3D head priors derived from a large-scale multi-view dynamic dataset, and the avatar creation phase applies these priors for few-shot personalization. Our approach effectively captures these priors by utilizing a Gaussian Splatting-based auto-decoder network with part-based dynamic modeling. Our method employs identity-shared encoding with personalized latent codes for individual identities to learn the attributes of Gaussian primitives. During the avatar creation phase, we achieve fast head avatar personalization by leveraging inversion and fine-tuning strategies. Extensive experiments demonstrate that our model effectively exploits head priors and successfully generalizes them to few-shot personalization, achieving photo-realistic rendering quality, multi-view consistency, and stable animation.

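This paper presents a new 3D head avatar creation approach that generalizes from few-shot in-the-wild data with high fidelity and robust animatability. Because the problem is under-constrained, incorporating prior knowledge is essential. We therefore propose a framework with a prior learning phase and an avatar creation phase. The prior learning phase leverages 3D head priors derived from a large-scale multi-view dynamic dataset, and the avatar creation phase applies these priors for few-shot personalization. Our approach captures these priors effectively with a Gaussian Splatting-based auto-decoder network combined with part-based dynamic modeling. It uses identity-shared encoding with personalized latent codes to learn the attributes of the Gaussian primitives. In the avatar creation phase, we achieve fast head avatar personalization through inversion and fine-tuning strategies. Extensive experiments show that our model exploits head priors effectively and generalizes them successfully to few-shot personalization, achieving photo-realistic rendering quality, multi-view consistency, and stable animation.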

Paper and project links

PDF Accepted to 3DV 2025. Project page: https://headgap.github.io/

Summary

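This paper proposes a new 3D head avatar creation method that generalizes from few-shot in-the-wild data with high fidelity and robust animatability. Because the problem is under-constrained, incorporating prior knowledge is essential. The paper proposes a framework with a prior learning phase and an avatar creation phase. The prior learning phase extracts 3D head priors from a large-scale multi-view dynamic dataset, and the avatar creation phase applies these priors for few-shot personalization. The approach models the priors with a Gaussian Splatting-based auto-decoder network and learns the attributes of the Gaussian primitives through identity-shared encoding and personalized latent codes. In the avatar creation phase, inversion and fine-tuning strategies enable fast head avatar personalization. Extensive experiments show that the model exploits head priors effectively and generalizes successfully to few-shot personalization, achieving photo-realistic rendering quality, multi-view consistency, and stable animation.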

Key Takeaways

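- Proposes a new 3D head avatar creation method that generalizes from few-shot in-the-wild data with high fidelity and robust animatability.

- Incorporating prior knowledge is essential, so the paper builds a framework with a prior learning phase and an avatar creation phase.

- The prior learning phase extracts 3D head priors from a large-scale multi-view dynamic dataset.

- The avatar creation phase applies these priors for few-shot personalization, creating head avatars quickly.

- A Gaussian Splatting-based auto-decoder network models the priors and learns the attributes of the Gaussian primitives.

- Inversion and fine-tuning strategies enable fast head avatar personalization.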
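The inversion step can be pictured as follows: once the prior (the decoder) has been trained across many identities and frozen, a new identity is fitted by optimizing only its latent code, and fine-tuning may follow. The toy sketch below assumes a linear decoder `W @ z + b` standing in for HeadGAP's Gaussian Splatting auto-decoder and uses plain gradient descent on a squared error; it is not the paper's network or loss, and all names are hypothetical.

```python
import numpy as np


def decode(W: np.ndarray, b: np.ndarray, z: np.ndarray) -> np.ndarray:
    """Toy frozen decoder (assumed linear): latent code -> Gaussian attributes."""
    return W @ z + b


def invert(W: np.ndarray, b: np.ndarray, target: np.ndarray,
           steps: int = 500, lr: float = 0.05) -> np.ndarray:
    """Fit a latent code so decode(W, b, z) matches the observed target.

    Only z is updated; W and b (the learned prior) stay frozen.
    Gradient descent on ||W z + b - target||^2 converges when
    lr < 1 / (largest eigenvalue of W^T W).
    """
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        residual = decode(W, b, z) - target
        grad = 2.0 * W.T @ residual
        z -= lr * grad
    return z


# Usage: synthetic prior with orthonormal columns (eigenvalues of W^T W are 1),
# so lr = 0.05 shrinks the error by a factor of 0.9 per step.
rng = np.random.default_rng(0)
W, _ = np.linalg.qr(rng.normal(size=(12, 4)))
b = rng.normal(size=12)
z_true = rng.normal(size=4)
z_hat = invert(W, b, decode(W, b, z_true))
print(np.allclose(z_hat, z_true, atol=1e-6))
```

Keeping the decoder frozen is what makes few-shot fitting well-posed here: only a handful of latent coordinates must be recovered instead of every Gaussian attribute.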


Gaussian Eigen Models for Human Heads

Authors:Wojciech Zielonka, Timo Bolkart, Thabo Beeler, Justus Thies

Current personalized neural head avatars face a trade-off: lightweight models lack detail and realism, while high-quality, animatable avatars require significant computational resources, making them unsuitable for commodity devices. To address this gap, we introduce Gaussian Eigen Models (GEM), which provide high-quality, lightweight, and easily controllable head avatars. GEM utilizes 3D Gaussian primitives for representing the appearance combined with Gaussian splatting for rendering. Building on the success of mesh-based 3D morphable face models (3DMM), we define GEM as an ensemble of linear eigenbases for representing the head appearance of a specific subject. In particular, we construct linear bases to represent the position, scale, rotation, and opacity of the 3D Gaussians. This allows us to efficiently generate Gaussian primitives of a specific head shape by a linear combination of the basis vectors, only requiring a low-dimensional parameter vector that contains the respective coefficients. We propose to construct these linear bases (GEM) by distilling high-quality, compute-intensive CNN-based Gaussian avatar models that can generate expression-dependent appearance changes like wrinkles. These high-quality models are trained on multi-view videos of a subject and are distilled using a series of principal component analyses. Once we have obtained the bases that represent the animatable appearance space of a specific human, we learn a regressor that takes a single RGB image as input and predicts the low-dimensional parameter vector that corresponds to the shown facial expression. In a series of experiments, we compare GEM’s self-reenactment and cross-person reenactment results to state-of-the-art 3D avatar methods, demonstrating GEM’s higher visual quality and better generalization to new expressions.

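Current personalized neural head avatars face a trade-off: lightweight models lack detail and realism, while high-quality, animatable avatars need substantial computational resources, which makes them unsuitable for commodity devices. To close this gap, we introduce Gaussian Eigen Models (GEM), which provide high-quality, lightweight, and easily controllable head avatars. GEM represents appearance with 3D Gaussian primitives and renders them with Gaussian splatting. Building on the success of mesh-based 3D morphable face models (3DMM), we define GEM as an ensemble of linear eigenbases that represent the head appearance of a specific subject. Specifically, we construct linear bases for the position, scale, rotation, and opacity of the 3D Gaussians. This lets us efficiently generate the Gaussian primitives of a specific head shape as a linear combination of basis vectors, requiring only a low-dimensional parameter vector of coefficients. We propose constructing these linear bases (GEM) by distilling high-quality, compute-intensive CNN-based Gaussian avatar models that can generate expression-dependent appearance changes such as wrinkles. These high-quality models are trained on multi-view videos of the subject and distilled with a series of principal component analyses. Once we have the bases representing the animatable appearance space of a specific person, we learn a regressor that takes a single RGB image as input and predicts the low-dimensional parameter vector corresponding to the facial expression shown. In a series of experiments, we compare GEM's self-reenactment and cross-person reenactment results with state-of-the-art 3D avatar methods, showing GEM's higher visual quality and better generalization to new expressions.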

Paper and project links

PDF https://zielon.github.io/gem/

Summary

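This paper proposes Gaussian Eigen Models (GEM) for personalized neural head avatars, delivering high quality together with a lightweight, easily controllable avatar. By combining 3D Gaussian primitives with Gaussian splatting rendering, GEM bridges the gap between lightweight models, which lack detail and realism, and high-quality animatable avatars, which demand heavy computation. Experiments show that, compared with state-of-the-art 3D avatar methods, GEM achieves higher visual quality in self-reenactment and cross-person reenactment and generalizes better to new expressions.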

Key Takeaways

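- Current personalized neural head avatars face a trade-off: lightweight models lack detail and realism, while high-quality animatable avatars need substantial computation and are unsuitable for commodity devices.

- Gaussian Eigen Models (GEM) are proposed to resolve this, providing high-quality, lightweight, and easily controllable head avatars.

- GEM uses 3D Gaussian primitives rendered with Gaussian splatting and, building on the success of mesh-based 3D morphable face models (3DMM), defines an ensemble of linear eigenbases for the head appearance of a specific subject.

- The linear eigenbases (GEM) are built by distilling compute-intensive CNN-based Gaussian avatar models that can generate expression-dependent appearance changes such as wrinkles.

- The high-quality models are distilled into the eigenbases through a series of principal component analyses.

- A regressor is learned that takes a single RGB image as input and predicts the low-dimensional parameter vector corresponding to the facial expression shown.

- Experiments show that, compared with state-of-the-art 3D avatar methods, GEM achieves higher visual quality in self-reenactment and cross-person reenactment and generalizes better to new expressions.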
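The eigenbasis idea can be sketched with PCA: stack each frame's Gaussian attributes into a vector, compute a mean and the top-k principal directions, and then any expression is approximated as mean + B·c for a k-dimensional coefficient vector c. The minimal numpy sketch below builds a single basis over one flattened attribute vector on synthetic data, whereas GEM builds separate bases for position, scale, rotation, and opacity; the image-to-coefficient regressor is not shown. Function names are hypothetical.

```python
import numpy as np


def fit_eigenbasis(samples: np.ndarray, k: int):
    """PCA via SVD.

    samples: (num_frames, dim) stacked Gaussian attributes, one row per frame.
    Returns the mean (dim,) and an orthonormal basis (dim, k).
    """
    mean = samples.mean(axis=0)
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:k].T


def project(x: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Attribute vector -> k coefficients (what a regressor would predict)."""
    return basis.T @ (x - mean)


def reconstruct(coeffs: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Linear combination of basis vectors: mean + B c."""
    return mean + basis @ coeffs


# Usage: synthetic "frames" that vary along 3 directions in a 30-D attribute space.
rng = np.random.default_rng(0)
num_frames, dim, k = 200, 30, 3
true_mean = rng.normal(size=dim)
directions = rng.normal(size=(k, dim))
samples = true_mean + rng.normal(size=(num_frames, k)) @ directions

mean, basis = fit_eigenbasis(samples, k)
coeffs = project(samples[0], mean, basis)
print(coeffs.shape, np.allclose(reconstruct(coeffs, mean, basis), samples[0]))
```

Because the synthetic data lies exactly in a 3-D subspace, three coefficients reconstruct each frame; with real avatar data the truncation to k components trades detail for compactness.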


Splat-Nav: Safe Real-Time Robot Navigation in Gaussian Splatting Maps

Authors:Timothy Chen, Ola Shorinwa, Joseph Bruno, Aiden Swann, Javier Yu, Weijia Zeng, Keiko Nagami, Philip Dames, Mac Schwager

We present Splat-Nav, a real-time robot navigation pipeline for Gaussian Splatting (GSplat) scenes, a powerful new 3D scene representation. Splat-Nav consists of two components: 1) Splat-Plan, a safe planning module, and 2) Splat-Loc, a robust vision-based pose estimation module. Splat-Plan builds a safe-by-construction polytope corridor through the map based on mathematically rigorous collision constraints and then constructs a Bézier curve trajectory through this corridor. Splat-Loc provides real-time recursive state estimates given only an RGB feed from an on-board camera, leveraging the point-cloud representation inherent in GSplat scenes. Working together, these modules give robots the ability to recursively re-plan smooth and safe trajectories to goal locations. Goals can be specified with position coordinates, or with language commands by using a semantic GSplat. We demonstrate improved safety compared to point cloud-based methods in extensive simulation experiments. In a total of 126 hardware flights, we demonstrate equivalent safety and speed compared to motion capture and visual odometry, but without a manual frame alignment required by those methods. We show online re-planning at more than 2 Hz and pose estimation at about 25 Hz, an order of magnitude faster than Neural Radiance Field (NeRF)-based navigation methods, thereby enabling real-time navigation. We provide experiment videos on our project page at https://chengine.github.io/splatnav/. Our codebase and ROS nodes can be found at https://github.com/chengine/splatnav.

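We present Splat-Nav, a real-time robot navigation pipeline for Gaussian Splatting (GSplat) scenes, a powerful new 3D scene representation. Splat-Nav consists of two components: 1) Splat-Plan, a safe planning module, and 2) Splat-Loc, a robust vision-based pose estimation module. Splat-Plan builds a safe-by-construction polytope corridor through the map based on mathematically rigorous collision constraints, then constructs a Bézier curve trajectory through this corridor. Splat-Loc provides real-time recursive state estimates from only the RGB feed of an on-board camera, leveraging the point-cloud representation inherent in GSplat scenes. Together, these modules let robots recursively re-plan smooth, safe trajectories to goal locations. Goals can be specified with position coordinates or, using a semantic GSplat, with language commands. In extensive simulation experiments we show improved safety over point cloud-based methods. Across 126 hardware flights we show safety and speed equivalent to motion capture and visual odometry, without the manual frame alignment those methods require. We demonstrate online re-planning at more than 2 Hz and pose estimation at about 25 Hz, an order of magnitude faster than Neural Radiance Field (NeRF)-based navigation methods, enabling real-time navigation. Experiment videos are available on our project page at https://chengine.github.io/splatnav/. Our codebase and ROS nodes are available at https://github.com/chengine/splatnav.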

Paper and project links

Summary

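Splat-Nav provides a real-time robot navigation pipeline for Gaussian Splatting (GSplat) scenes. It has two components: Splat-Plan, a safe planning module, and Splat-Loc, a robust vision-based pose estimation module. Splat-Plan builds a safe polytope corridor through the map based on rigorous mathematical collision constraints and then constructs a Bézier curve trajectory within this corridor. Splat-Loc leverages the point-cloud representation of GSplat scenes to perform real-time recursive state estimation using only the RGB feed from an on-board camera. Working together, the two modules let the robot recursively re-plan smooth, safe trajectories to goal locations. Goals can be specified with position coordinates or with language commands via a semantic GSplat. Extensive simulation experiments show improved safety over point cloud-based methods. Across 126 hardware flights, the system matches motion capture and visual odometry in safety and speed without the manual frame alignment those methods require. Online re-planning runs at more than 2 Hz and pose estimation at about 25 Hz, an order of magnitude faster than Neural Radiance Field (NeRF)-based navigation methods, enabling real-time navigation. Experiment videos are on the project page at https://chengine.github.io/splatnav/, and the codebase and ROS nodes are at https://github.com/chengine/splatnav.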

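Key Takeaways

- Splat-Nav provides real-time robot navigation for Gaussian Splatting (GSplat) scenes.

- It has two main components: the Splat-Plan safe planning module and the Splat-Loc vision-based pose estimation module.

- Splat-Plan builds a safe polytope corridor from mathematical collision constraints and a Bézier curve trajectory through it.

- Splat-Loc uses the point-cloud representation for real-time recursive state estimation.

- The modules work together to recursively re-plan smooth, safe trajectories, with goals specified as position coordinates or language commands.

- Simulation experiments demonstrate improved safety over point cloud-based methods.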
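Pairing a convex polytope corridor with a Bézier trajectory is attractive because of a general property of Bézier curves: each segment lies inside the convex hull of its control points. So if every control point satisfies the polytope's half-space constraints A·x ≤ b, the whole segment does too. This is stated as general background, not as Splat-Nav's exact formulation. The pure-Python sketch below shows de Casteljau evaluation and that control-point containment check; the corridor construction from Gaussian ellipsoids is not shown, and all names are hypothetical.

```python
from typing import List, Sequence

Point = Sequence[float]


def de_casteljau(control: List[Point], t: float) -> List[float]:
    """Evaluate a Bezier curve at t in [0, 1] by repeated linear interpolation."""
    pts = [list(p) for p in control]
    while len(pts) > 1:
        pts = [[(1 - t) * a + t * b for a, b in zip(p, q)]
               for p, q in zip(pts, pts[1:])]
    return pts[0]


def in_polytope(x: Point, A: List[Point], b: List[float], tol: float = 1e-9) -> bool:
    """Half-space representation: x is inside iff every row satisfies a_i . x <= b_i."""
    return all(sum(ai * xi for ai, xi in zip(row, x)) <= bi + tol
               for row, bi in zip(A, b))


def segment_is_safe(control: List[Point], A: List[Point], b: List[float]) -> bool:
    """Convex-hull property: if all control points lie in the convex polytope,
    every point of the Bezier segment does too. The test is sufficient,
    not necessary, so it can reject some curves that are actually inside."""
    return all(in_polytope(p, A, b) for p in control)


# Usage: a cubic segment inside the unit square 0 <= x <= 1, 0 <= y <= 1.
box_A = [[1, 0], [-1, 0], [0, 1], [0, -1]]
box_b = [1, 0, 1, 0]
control = [(0.1, 0.1), (0.9, 0.2), (0.2, 0.9), (0.8, 0.8)]
print(segment_is_safe(control, box_A, box_b))
print(all(in_polytope(de_casteljau(control, i / 20), box_A, box_b) for i in range(21)))
```

Checking a handful of control points instead of densely sampling the curve is what makes this kind of safety guarantee cheap enough for online re-planning.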
