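⚠️ All summaries below were generated by a large language model and may contain errors. Treat them as reference only and use them with caution.

🔴 Note: do not use these summaries in serious academic work. They are intended only for initial screening before reading the papers.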

Updated 2025-01-18

Normal-NeRF: Ambiguity-Robust Normal Estimation for Highly Reflective Scenes

Authors:Ji Shi, Xianghua Ying, Ruohao Guo, Bowei Xing, Wenzhen Yue

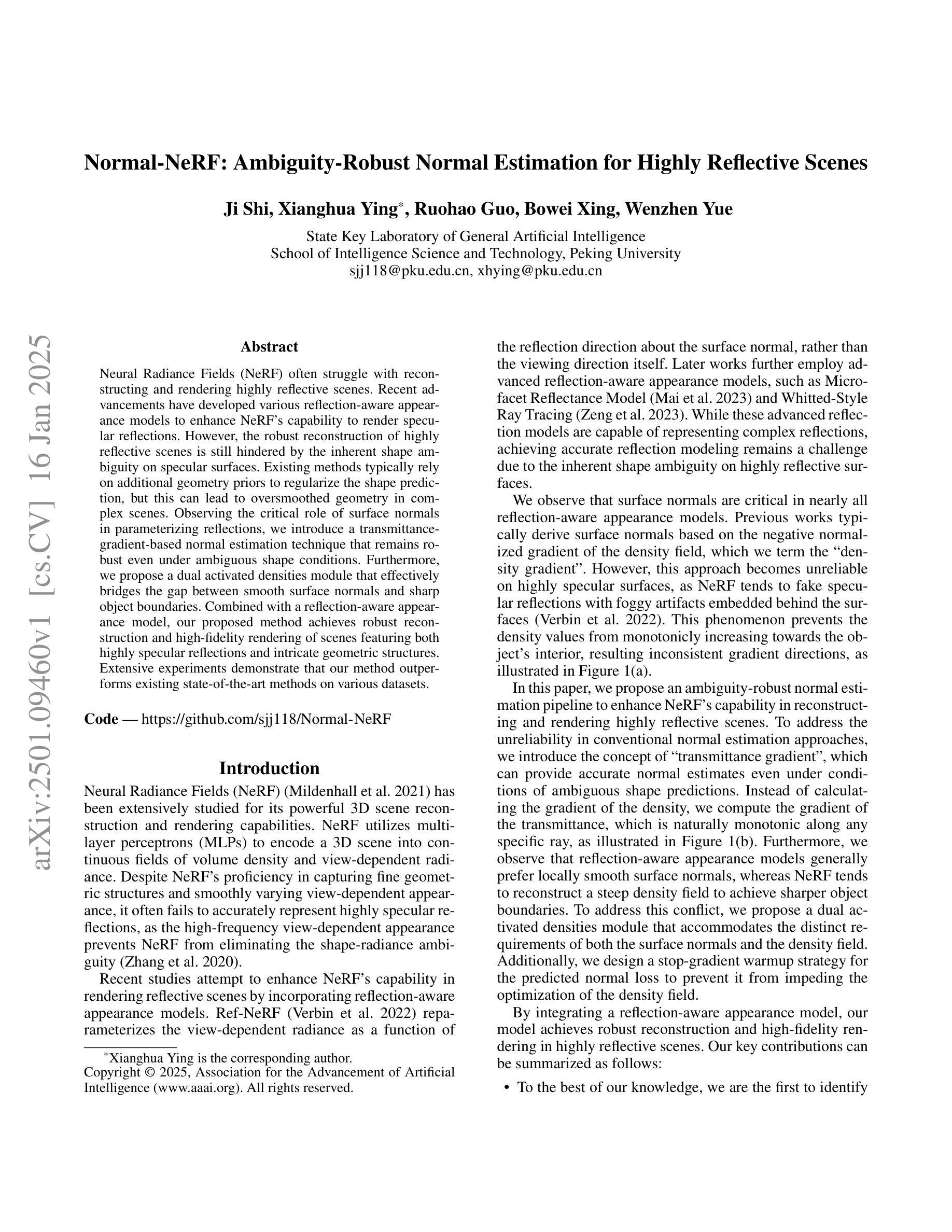

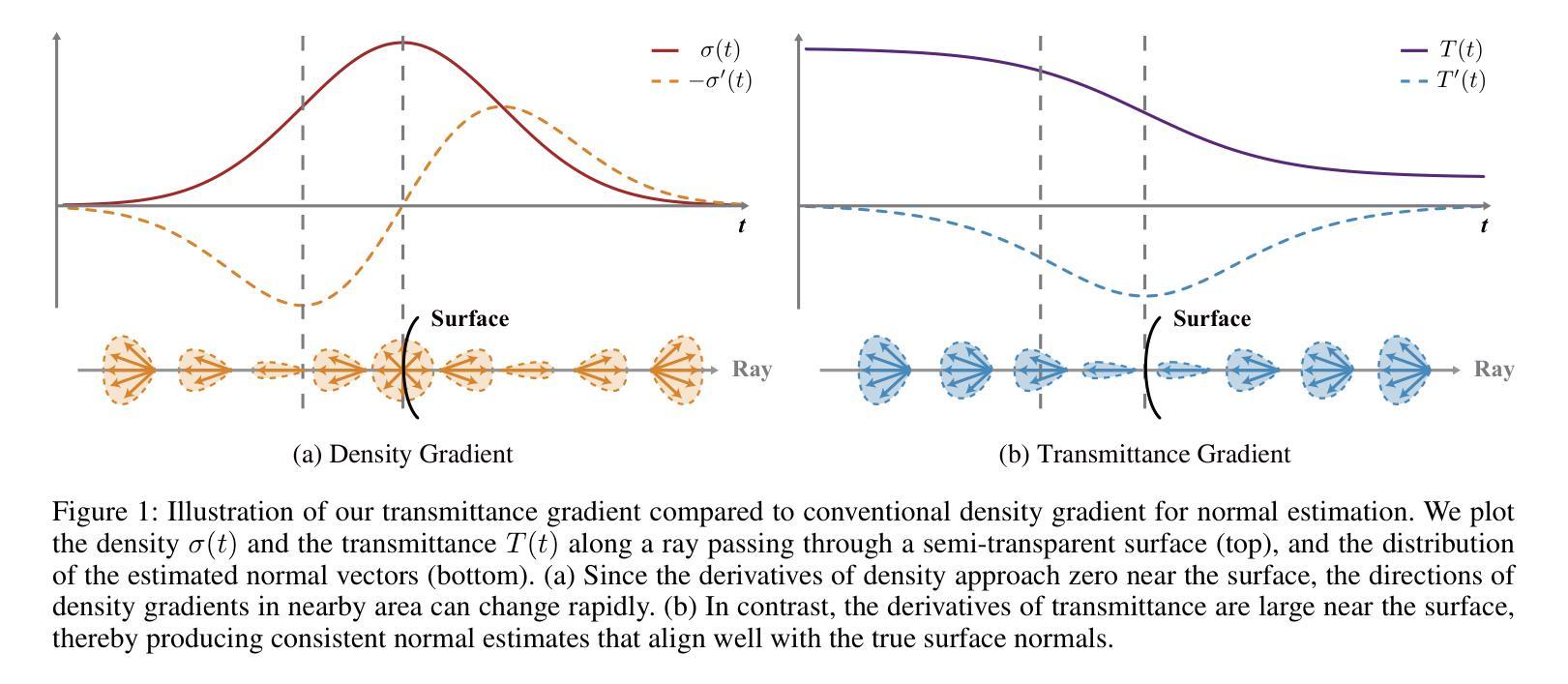

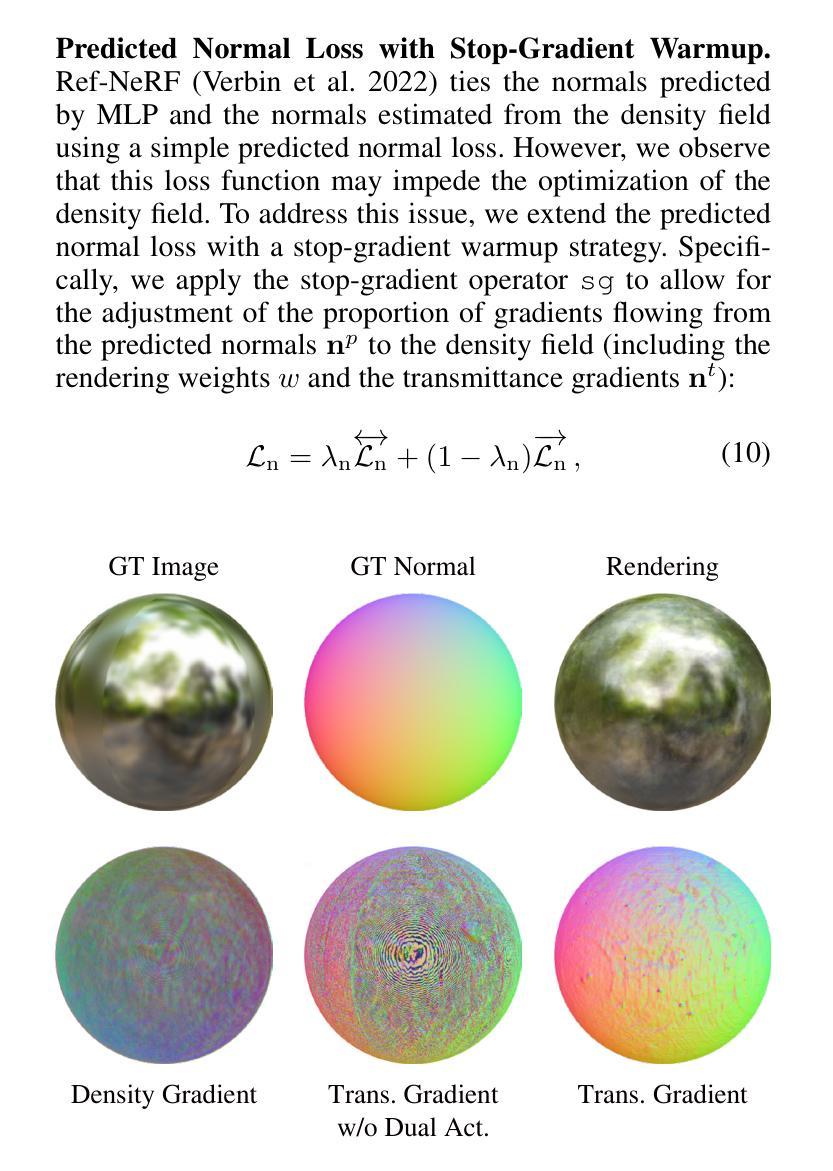

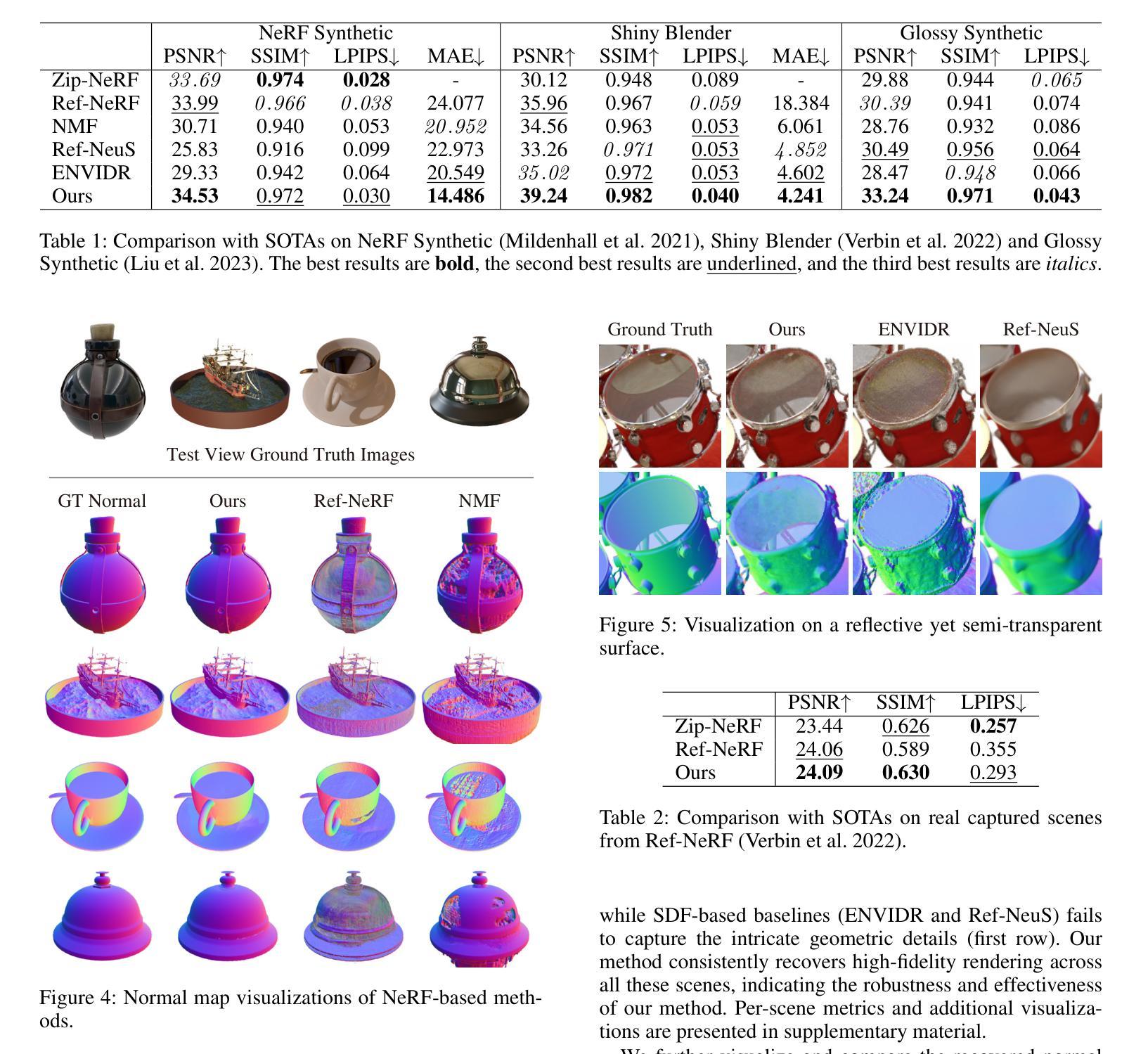

Neural Radiance Fields (NeRF) often struggle with reconstructing and rendering highly reflective scenes. Recent advancements have developed various reflection-aware appearance models to enhance NeRF’s capability to render specular reflections. However, the robust reconstruction of highly reflective scenes is still hindered by the inherent shape ambiguity on specular surfaces. Existing methods typically rely on additional geometry priors to regularize the shape prediction, but this can lead to oversmoothed geometry in complex scenes. Observing the critical role of surface normals in parameterizing reflections, we introduce a transmittance-gradient-based normal estimation technique that remains robust even under ambiguous shape conditions. Furthermore, we propose a dual activated densities module that effectively bridges the gap between smooth surface normals and sharp object boundaries. Combined with a reflection-aware appearance model, our proposed method achieves robust reconstruction and high-fidelity rendering of scenes featuring both highly specular reflections and intricate geometric structures. Extensive experiments demonstrate that our method outperforms existing state-of-the-art methods on various datasets.

Paper and project links

AAAI 2025. Code available at https://github.com/sjj118/Normal-NeRF

Summary

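This paper addresses the difficulty NeRF has with highly reflective scenes, where the inherent shape ambiguity of specular surfaces hinders reconstruction and degrades rendering. It proposes a transmittance-gradient-based technique for estimating surface normals, together with a dual activated densities module that bridges the gap between smooth surface normals and sharp object boundaries. Combined with a reflection-aware appearance model, the method achieves robust reconstruction and high-fidelity rendering of highly reflective scenes. Experiments show that it outperforms existing methods on various datasets.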

Key Takeaways

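- NeRF struggles to reconstruct and render highly reflective scenes.

- A transmittance-gradient-based surface normal estimation technique is proposed that stays robust under shape ambiguity.

- A dual activated densities module bridges the gap between smooth surface normals and sharp object boundaries.

- Combined with a reflection-aware appearance model, the method achieves robust reconstruction and high-fidelity rendering of highly reflective scenes.

- The method outperforms existing techniques on various datasets.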
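
To illustrate why surface normals matter so much for parameterizing reflections, here is a minimal sketch of the standard mirror-reflection formula, w_r = 2 (w_o · n) n − w_o. It is not taken from the paper's code. The function names `normalize` and `reflect` and the example vectors are hypothetical, chosen only for illustration.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect(view_dir, normal):
    """Mirror the direction toward the camera about the surface normal.

    Implements w_r = 2 (w_o . n) n - w_o, where w_o points from the
    surface toward the camera and n is the unit surface normal.
    """
    n = normalize(normal)
    w_o = normalize(view_dir)
    cos_theta = sum(a * b for a, b in zip(w_o, n))
    return tuple(2.0 * cos_theta * nc - wc for nc, wc in zip(n, w_o))


# Illustrative values (assumptions): camera straight above the surface.
w_o = (0.0, 0.0, 1.0)
r_flat = reflect(w_o, (0.0, 0.0, 1.0))
# Tilt the normal by 0.1 rad around the y axis.
r_tilt = reflect(w_o, (math.sin(0.1), 0.0, math.cos(0.1)))

# Angle between the two reflected directions.
angle = math.acos(sum(a * b for a, b in zip(r_flat, r_tilt)))
print(angle)
```

Tilting the normal by 0.1 rad rotates the reflected direction by about 0.2 rad. This doubling is one reason small normal errors are so visible on specular surfaces.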

DehazeGS: Seeing Through Fog with 3D Gaussian Splatting

Authors:Jinze Yu, Yiqun Wang, Zhengda Lu, Jianwei Guo, Yong Li, Hongxing Qin, Xiaopeng Zhang

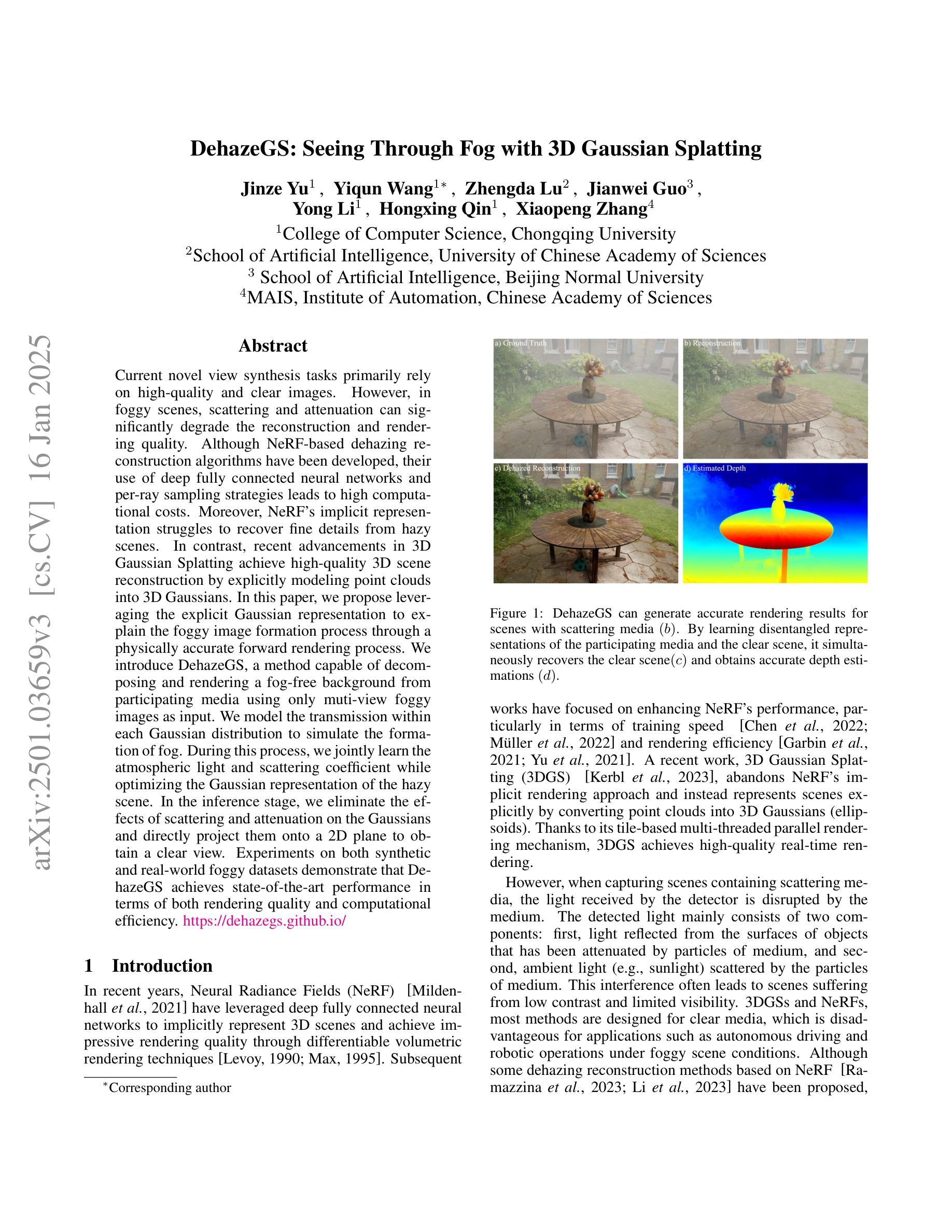

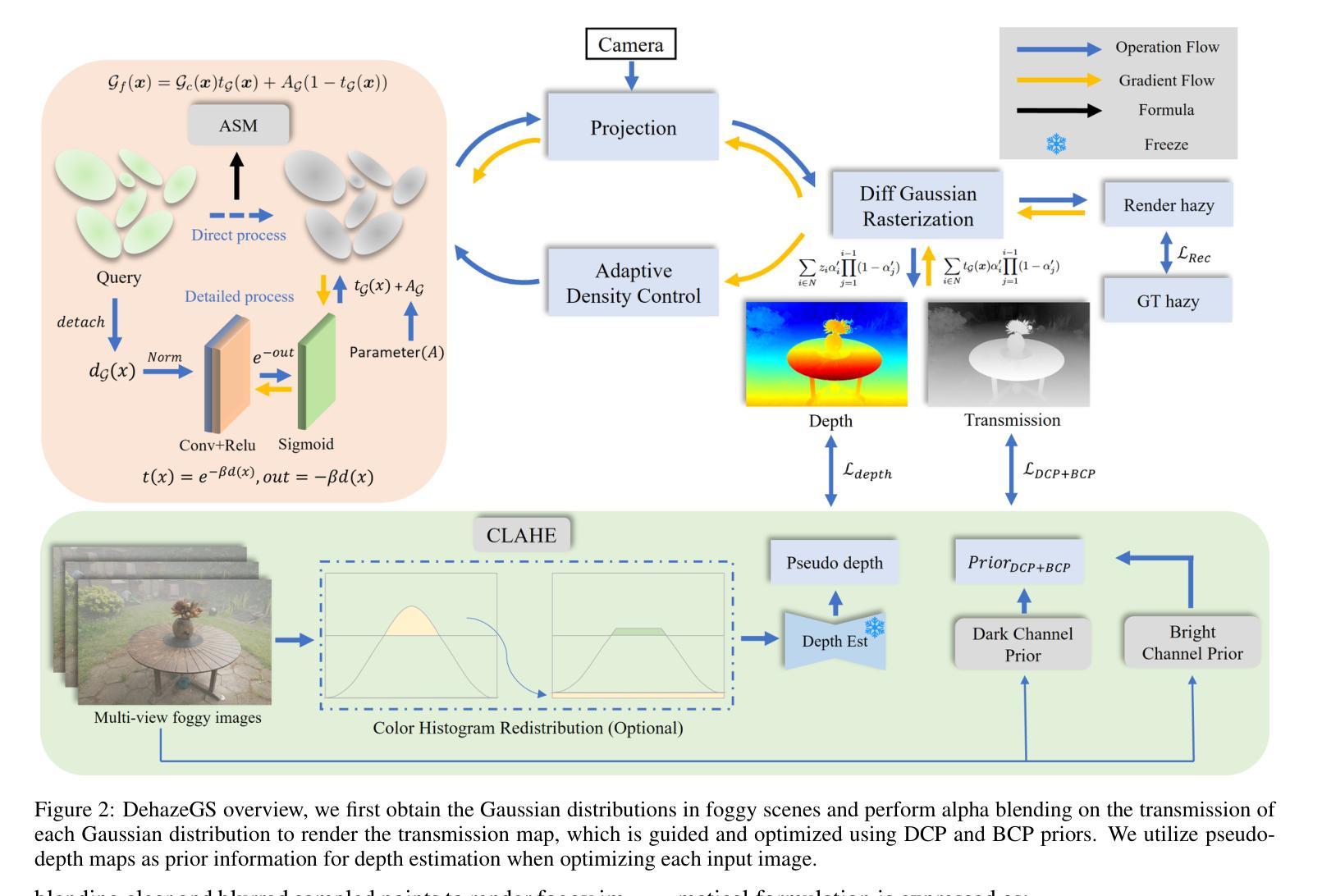

Current novel view synthesis tasks primarily rely on high-quality and clear images. However, in foggy scenes, scattering and attenuation can significantly degrade the reconstruction and rendering quality. Although NeRF-based dehazing reconstruction algorithms have been developed, their use of deep fully connected neural networks and per-ray sampling strategies leads to high computational costs. Moreover, NeRF’s implicit representation struggles to recover fine details from hazy scenes. In contrast, recent advancements in 3D Gaussian Splatting achieve high-quality 3D scene reconstruction by explicitly modeling point clouds into 3D Gaussians. In this paper, we propose leveraging the explicit Gaussian representation to explain the foggy image formation process through a physically accurate forward rendering process. We introduce DehazeGS, a method capable of decomposing and rendering a fog-free background from participating media using only multi-view foggy images as input. We model the transmission within each Gaussian distribution to simulate the formation of fog. During this process, we jointly learn the atmospheric light and scattering coefficient while optimizing the Gaussian representation of the hazy scene. In the inference stage, we eliminate the effects of scattering and attenuation on the Gaussians and directly project them onto a 2D plane to obtain a clear view. Experiments on both synthetic and real-world foggy datasets demonstrate that DehazeGS achieves state-of-the-art performance in terms of both rendering quality and computational efficiency. Visualizations are available at https://dehazegs.github.io/

Paper and project links

9 pages, 4 figures

Summary

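This paper proposes DehazeGS, which uses an explicit Gaussian representation and a physically accurate forward rendering process to explain how foggy images form. Taking only multi-view foggy images as input, it separates the scene from the participating media and renders a fog-free background. It models the transmission within each Gaussian distribution and jointly learns the atmospheric light and scattering coefficient while optimizing the Gaussian representation of the hazy scene. At inference, it removes the effects of scattering and attenuation from the Gaussians and projects them directly onto a 2D plane to obtain a clear view. Experiments show that DehazeGS achieves state-of-the-art rendering quality and computational efficiency.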

Key Takeaways

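- Current novel view synthesis tasks rely mainly on high-quality, clear images, but in foggy scenes scattering and attenuation significantly degrade reconstruction and rendering quality.

- NeRF's implicit representation struggles to recover fine details from hazy scenes.

- DehazeGS uses an explicit Gaussian representation and a physically accurate forward rendering process to explain how foggy images form.

- DehazeGS decomposes and renders a fog-free background using only multi-view foggy images as input.

- The method models the transmission within each Gaussian distribution and jointly learns the atmospheric light and scattering coefficient.

- At inference, DehazeGS removes the effects of scattering and attenuation from the Gaussians and projects them directly onto a 2D plane to obtain a clear view.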
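
The abstract does not give equations. As a rough illustration of how transmission, atmospheric light, and a scattering coefficient interact, here is a sketch of the widely used atmospheric scattering model, I = J·t + A·(1 − t) with t = exp(−β·d). It works per pixel with a known depth, which is an assumption made for brevity. DehazeGS itself models transmission within each Gaussian and learns A and β. The function names and numeric values are hypothetical.

```python
import math


def transmission(beta, depth):
    """Fraction of scene radiance that survives the fog: t = exp(-beta * d)."""
    return math.exp(-beta * depth)


def add_haze(clear_rgb, depth, beta, airlight):
    """Forward model: I = J * t + A * (1 - t), applied per color channel."""
    t = transmission(beta, depth)
    return tuple(j * t + a * (1.0 - t) for j, a in zip(clear_rgb, airlight))


def remove_haze(hazy_rgb, depth, beta, airlight, t_min=1e-3):
    """Invert the forward model; t is clamped to avoid dividing by ~0."""
    t = max(transmission(beta, depth), t_min)
    return tuple((i - a * (1.0 - t)) / t for i, a in zip(hazy_rgb, airlight))


# Illustrative values (assumptions, not from the paper).
clear = (0.2, 0.5, 0.8)
airlight = (0.9, 0.9, 0.9)
hazy = add_haze(clear, depth=4.0, beta=0.3, airlight=airlight)
recovered = remove_haze(hazy, depth=4.0, beta=0.3, airlight=airlight)
print(hazy, recovered)
```

In this toy setting the haze is removed exactly because β, A, and depth are known. The difficulty the paper targets is estimating those quantities from foggy images alone.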

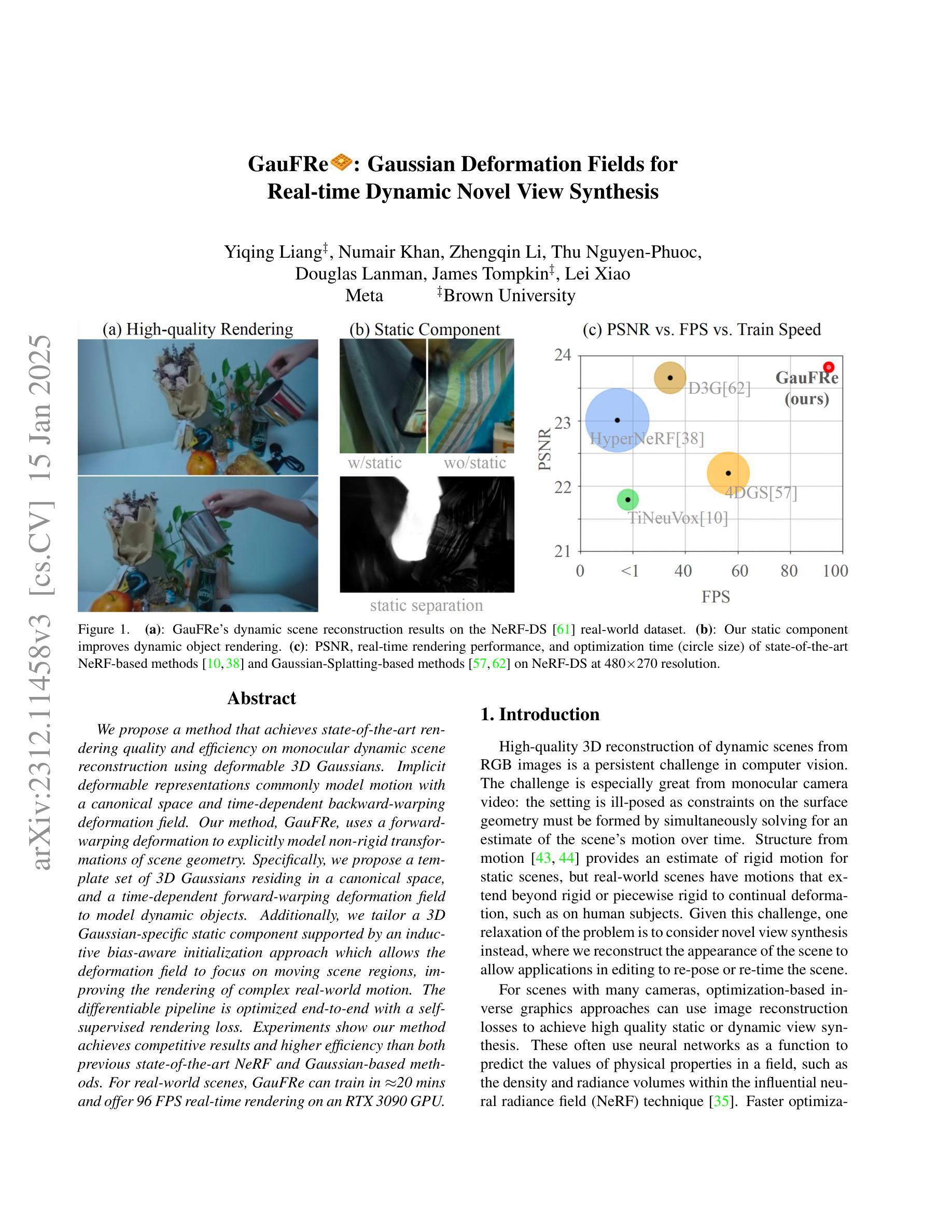

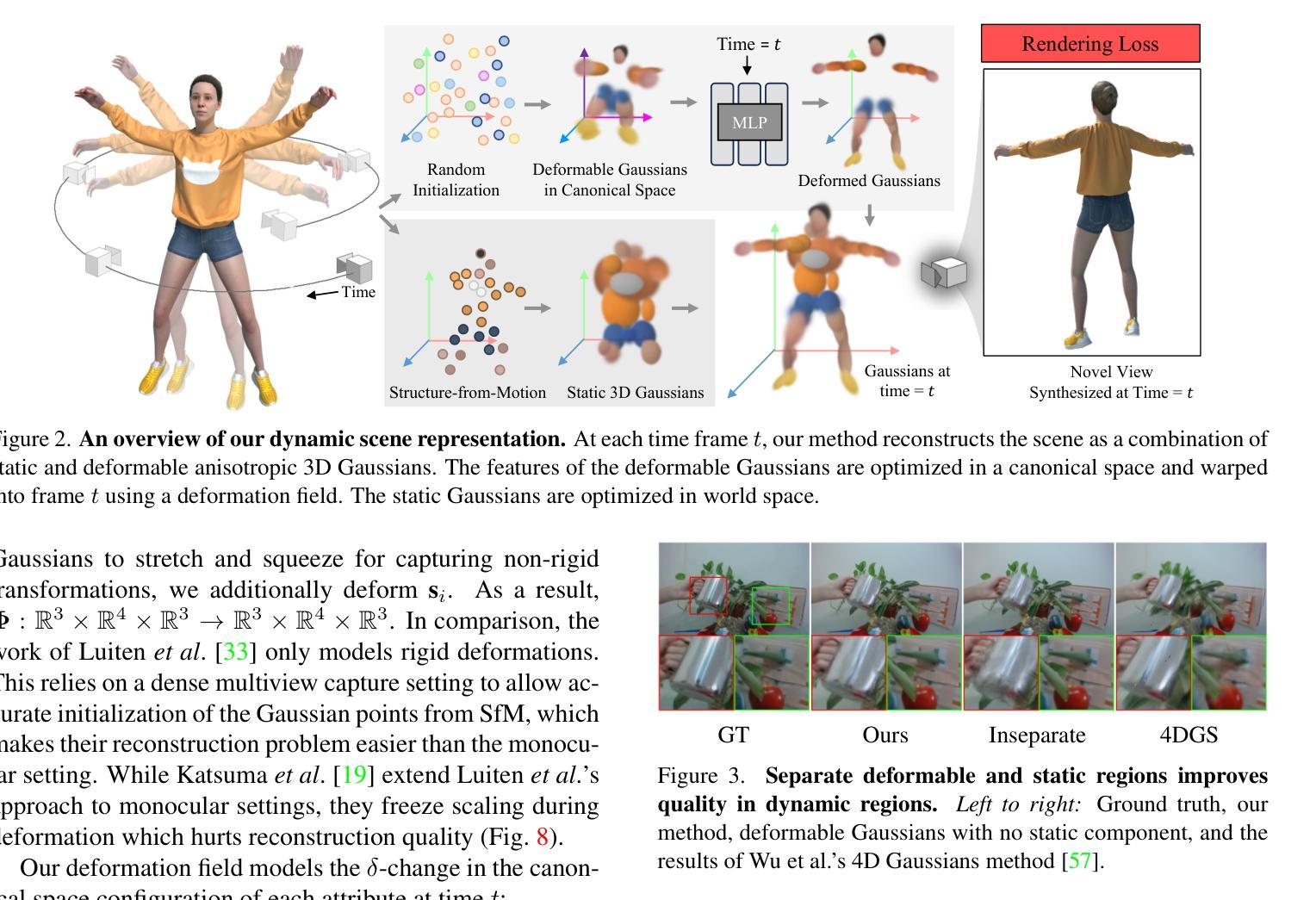

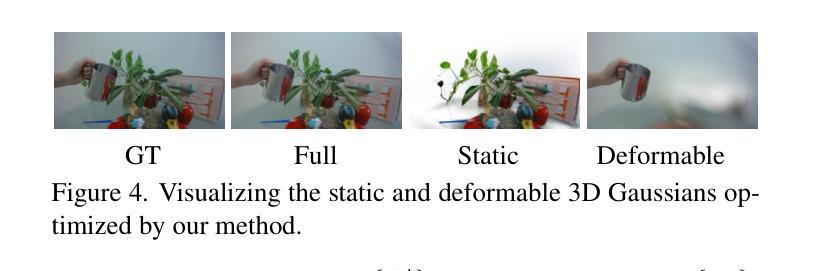

GauFRe: Gaussian Deformation Fields for Real-time Dynamic Novel View Synthesis

Authors:Yiqing Liang, Numair Khan, Zhengqin Li, Thu Nguyen-Phuoc, Douglas Lanman, James Tompkin, Lei Xiao

We propose a method that achieves state-of-the-art rendering quality and efficiency on monocular dynamic scene reconstruction using deformable 3D Gaussians. Implicit deformable representations commonly model motion with a canonical space and time-dependent backward-warping deformation field. Our method, GauFRe, uses a forward-warping deformation to explicitly model non-rigid transformations of scene geometry. Specifically, we propose a template set of 3D Gaussians residing in a canonical space, and a time-dependent forward-warping deformation field to model dynamic objects. Additionally, we tailor a 3D Gaussian-specific static component supported by an inductive bias-aware initialization approach which allows the deformation field to focus on moving scene regions, improving the rendering of complex real-world motion. The differentiable pipeline is optimized end-to-end with a self-supervised rendering loss. Experiments show our method achieves competitive results and higher efficiency than both previous state-of-the-art NeRF and Gaussian-based methods. For real-world scenes, GauFRe can train in ~20 mins and offer 96 FPS real-time rendering on an RTX 3090 GPU. Project website: https://lynl7130.github.io/gaufre/index.html

Paper and project links

WACV 2025. 11 pages, 8 figures, 5 tables

Summary

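This paper proposes GauFRe, a method for monocular dynamic scene reconstruction based on deformable 3D Gaussians. Implicit deformable representations usually rely on a backward-warping deformation field. GauFRe instead uses a time-dependent forward-warping deformation field to explicitly model non-rigid transformations of scene geometry, applied to a template set of 3D Gaussians in a canonical space. A Gaussian-specific static component, supported by an inductive bias-aware initialization, lets the deformation field focus on the moving regions of the scene. The differentiable pipeline is optimized end-to-end with a self-supervised rendering loss. Experiments show competitive results and higher efficiency than previous NeRF and Gaussian-based methods. On real-world scenes, GauFRe trains in about 20 minutes and renders at 96 FPS on an RTX 3090 GPU. Project website: https://lynl7130.github.io/gaufre/index.html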

Key Takeaways

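- A method based on deformable 3D Gaussians is proposed that achieves state-of-the-art rendering quality and efficiency on monocular dynamic scene reconstruction.

- A time-dependent forward-warping deformation field explicitly models non-rigid transformations of scene geometry, applied to a template set of 3D Gaussians residing in a canonical space.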
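
The idea of forward-warping a canonical template while keeping a separate static component can be sketched in a few lines. This is a toy illustration, not the authors' implementation. The closed-form `deform` function stands in for a learned deformation field, only Gaussian centers are warped, and all names and values are hypothetical.

```python
import math


def deform(position, t):
    """Hypothetical forward-warping field: canonical position and time -> offset.

    A real system would learn this mapping; a sine wave is used here
    only so the example is self-contained.
    """
    x, _y, _z = position
    return (0.1 * math.sin(2.0 * math.pi * t + x), 0.0, 0.0)


def warp_gaussians(dynamic_means, static_means, t):
    """Move dynamic Gaussian centers from canonical space to time t.

    Static centers bypass the deformation field entirely, so the field
    only has to explain the moving part of the scene.
    """
    warped = [
        tuple(p + d for p, d in zip(mean, deform(mean, t)))
        for mean in dynamic_means
    ]
    return warped + list(static_means)


# Illustrative canonical template (assumption): two dynamic, one static center.
dynamic = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
static = [(5.0, 5.0, 5.0)]
frame_a = warp_gaussians(dynamic, static, t=0.0)
frame_b = warp_gaussians(dynamic, static, t=0.25)
print(frame_a)
print(frame_b)
```

Between the two time steps the dynamic centers move while the static center stays fixed, which mirrors the split between the deformable template and the static component described above.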
