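⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.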

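🔴 Note: do not use these summaries in serious academic settings. They are intended only for initial screening before reading the papers.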

Updated 2025-01-24

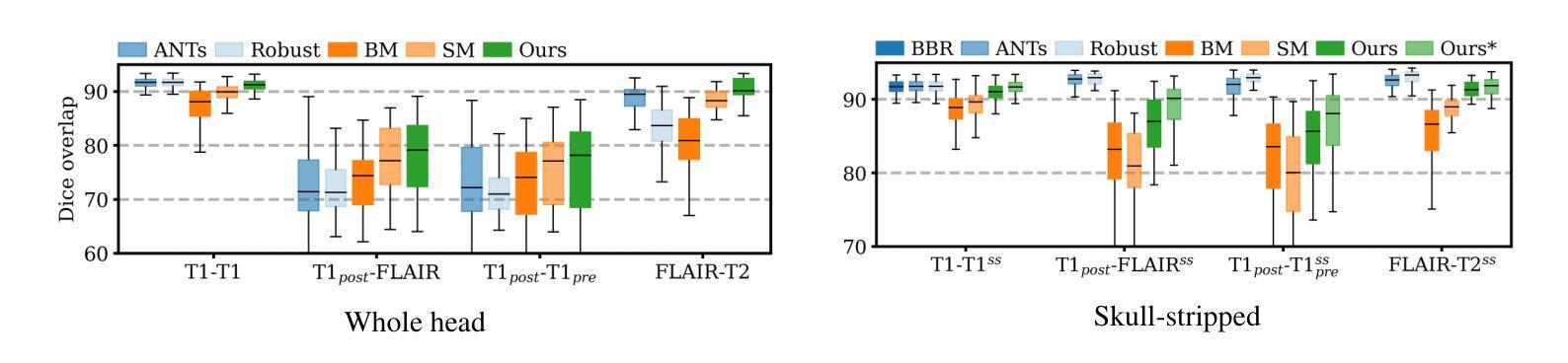

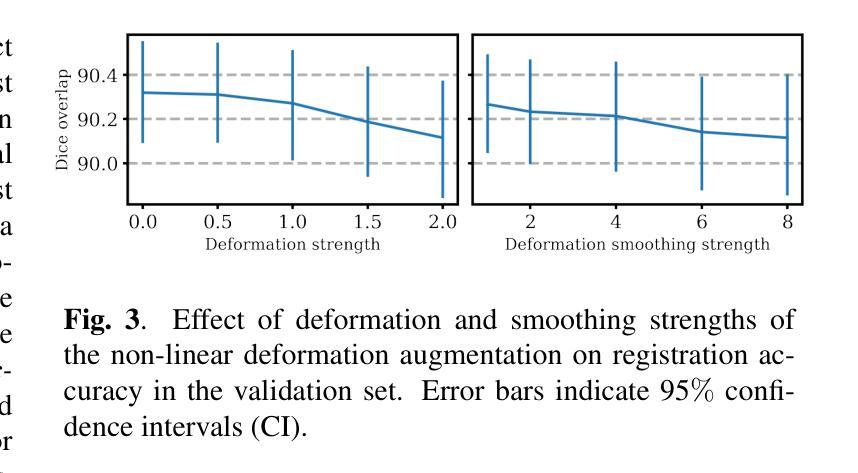

Learning accurate rigid registration for longitudinal brain MRI from synthetic data

Authors: Jingru Fu, Adrian V. Dalca, Bruce Fischl, Rodrigo Moreno, Malte Hoffmann

Rigid registration aims to determine the translations and rotations necessary to align features in a pair of images. While recent machine learning methods have become state-of-the-art for linear and deformable registration across subjects, they have demonstrated limitations when applied to longitudinal (within-subject) registration, where achieving precise alignment is critical. Building on an existing framework for anatomy-aware, acquisition-agnostic affine registration, we propose a model optimized for longitudinal, rigid brain registration. By training the model with synthetic within-subject pairs augmented with rigid and subtle nonlinear transforms, the model estimates more accurate rigid transforms than previous cross-subject networks and performs robustly on longitudinal registration pairs within and across magnetic resonance imaging (MRI) contrasts.

Paper and project links

PDF 5 pages, 4 figures, 1 table, rigid image registration, deep learning, longitudinal analysis, neuroimaging, accepted by the IEEE International Symposium on Biomedical Imaging

Summary

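This paper presents a machine learning model for longitudinal (within-subject) rigid registration. The model builds on an existing anatomy-aware, acquisition-agnostic affine registration framework and is trained on synthetic within-subject pairs augmented with rigid and subtle nonlinear transforms. It estimates rigid transforms more accurately than previous cross-subject networks and performs robustly on longitudinal registration pairs within and across MRI contrasts.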

Key Takeaways

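- The paper focuses on optimizing a machine learning model for longitudinal rigid registration.

- The model builds on an anatomy-aware, acquisition-agnostic affine registration framework.

- Training uses synthetic within-subject pairs augmented with rigid and subtle nonlinear transforms.

- The model estimates rigid transforms more accurately than previous cross-subject networks.

- The model performs robustly on longitudinal registration pairs within and across MRI contrasts.

- The model targets the precise alignment that longitudinal registration requires.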
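
To make "translations and rotations" concrete, here is a minimal sketch of a 3D rigid transform in plain Python. It is not the paper's network or code. The function names and the rotation order (about x, then y, then z) are assumptions chosen for illustration. It only shows what a rigid transform is and that it preserves distances between points.

```python
import math


def rotation_matrix(rx, ry, rz):
    """Return the 3x3 rotation R = Rz * Ry * Rx for angles in radians.

    The rotation order is an illustrative convention, not taken from the paper.
    """
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def apply_rigid(point, rotation, translation):
    """Map a 3D point x to R x + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


# Hypothetical example values: small rotations plus a shift in millimetres.
R = rotation_matrix(0.1, -0.2, 0.3)
t = [2.0, -1.0, 0.5]
p, q = [1.0, 2.0, 3.0], [-4.0, 0.5, 2.0]

# A rigid transform moves points but keeps the distance between them.
before = math.dist(p, q)
after = math.dist(apply_rigid(p, R, t), apply_rigid(q, R, t))
print(before, after)
```

A rigid transform has six degrees of freedom in 3D (three rotation angles and three translations), whereas an affine transform also allows scaling and shearing. That is why a model restricted to rigid outputs suits within-subject alignment, where anatomy is expected to change little between scans.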

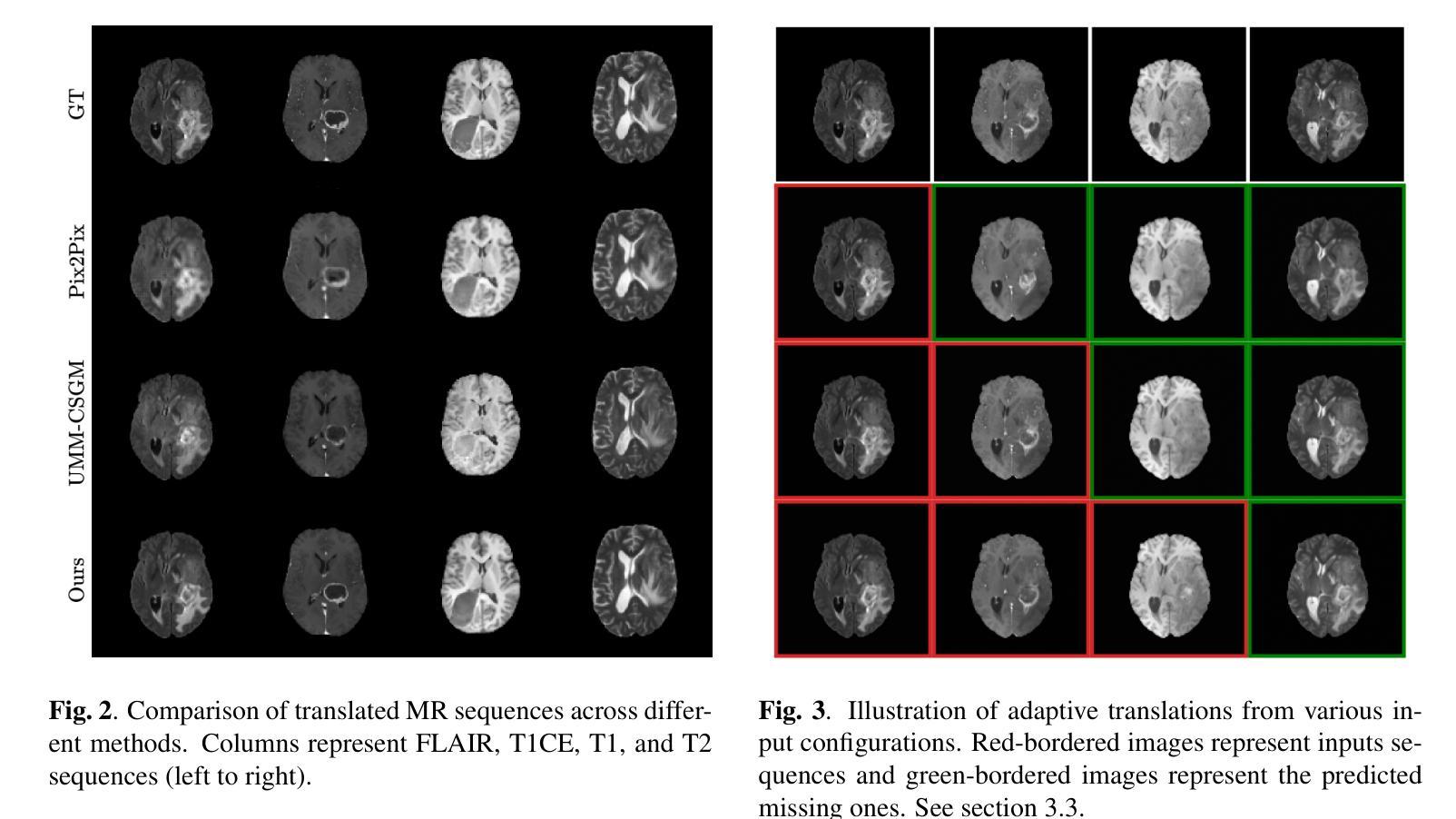

AMM-Diff: Adaptive Multi-Modality Diffusion Network for Missing Modality Imputation

Authors: Aghiles Kebaili, Jérôme Lapuyade-Lahorgue, Pierre Vera, Su Ruan

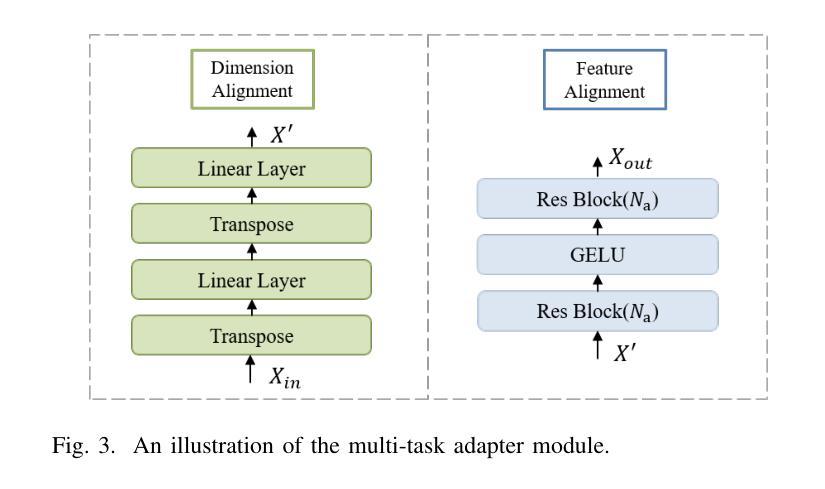

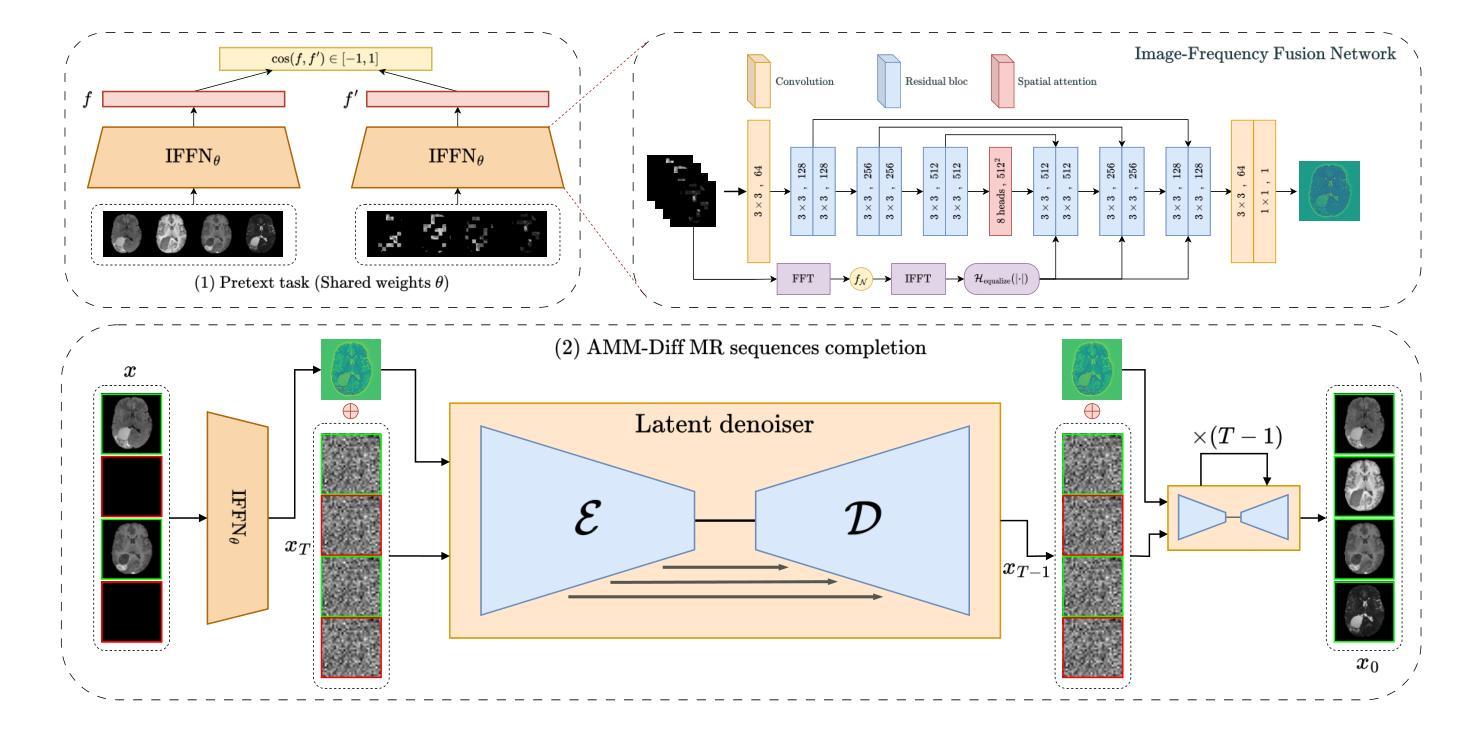

In clinical practice, full imaging is not always feasible, often due to complex acquisition protocols, stringent privacy regulations, or specific clinical needs. However, missing MR modalities pose significant challenges for tasks like brain tumor segmentation, especially in deep learning-based segmentation, as each modality provides complementary information crucial for improving accuracy. A promising solution is missing data imputation, where absent modalities are generated from available ones. While generative models have been widely used for this purpose, most state-of-the-art approaches are limited to single or dual target translations, lacking the adaptability to generate missing modalities based on varying input configurations. To address this, we propose an Adaptive Multi-Modality Diffusion Network (AMM-Diff), a novel diffusion-based generative model capable of handling any number of input modalities and generating the missing ones. We designed an Image-Frequency Fusion Network (IFFN) that learns a unified feature representation through a self-supervised pretext task across the full input modalities and their selected high-frequency Fourier components. The proposed diffusion model leverages this representation, encapsulating prior knowledge of the complete modalities, and combines it with an adaptive reconstruction strategy to achieve missing modality completion. Experimental results on the BraTS 2021 dataset demonstrate the effectiveness of our approach.

Summary

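This paper proposes the Adaptive Multi-Modality Diffusion Network (AMM-Diff), a diffusion-based generative model that can handle any number of input modalities and generate the missing ones. An Image-Frequency Fusion Network (IFFN) learns a unified feature representation across the full input modalities and their selected high-frequency Fourier components. The diffusion model combines this representation with an adaptive reconstruction strategy to complete the missing modalities. Experiments on the BraTS 2021 dataset demonstrate the effectiveness of the approach.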
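
As a rough illustration of what "high-frequency Fourier components" means, the sketch below high-pass filters a one-dimensional toy signal with a naive discrete Fourier transform. It is not the IFFN and makes several assumptions: the paper works on images, whereas this uses a 1D signal, and the `cutoff` parameter and function names are hypothetical. How the paper selects its components is not described in the abstract.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform, O(n^2), fine for toy inputs."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def high_frequency_part(signal, cutoff):
    """Keep only the DFT bins whose frequency index exceeds `cutoff`."""
    n = len(signal)
    spectrum = dft(signal)
    # Bin k and bin n - k carry the same absolute frequency, min(k, n - k).
    kept = [
        value if min(k, n - k) > cutoff else 0
        for k, value in enumerate(spectrum)
    ]
    return [x.real for x in idft(kept)]


# Toy signal: a slow wave (frequency 1) plus a weaker fast wave (frequency 6).
n = 16
signal = [
    math.cos(2 * math.pi * 1 * t / n) + 0.5 * math.cos(2 * math.pi * 6 * t / n)
    for t in range(n)
]
fast_only = high_frequency_part(signal, cutoff=3)
print([round(v, 3) for v in fast_only])
```

With the cutoff at 3, the slow wave is removed and only the frequency-6 component remains. In images, the analogous high-frequency content corresponds to edges and fine texture.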

Key Takeaways

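- Missing MR modalities pose challenges for tasks such as brain tumor segmentation.

- Most existing generative approaches to missing-data imputation are limited to single or dual target translations and cannot adapt to varying input configurations.

- AMM-Diff can handle any number of input modalities and generate the missing ones.

- The Image-Frequency Fusion Network (IFFN) learns a unified feature representation of the full set of modalities.

- The model uses this representation as prior knowledge of the complete modalities and completes the missing ones through an adaptive reconstruction strategy.

- Experiments on the BraTS 2021 dataset demonstrate the effectiveness of the approach.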
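
The phrase "varying input configurations" can be made concrete by enumerating which modalities are available and which are missing. The sketch below assumes the four MR sequences distributed with BraTS (T1, contrast-enhanced T1, T2, FLAIR). The label strings, function names, and binary mask encoding are illustrative choices, not taken from the paper.

```python
from itertools import combinations

# Labels chosen here for the four BraTS MR sequences (an assumption).
MODALITIES = ("T1", "T1ce", "T2", "FLAIR")


def input_configurations(modalities):
    """Yield (available, missing) pairs with at least one modality on each side."""
    for size in range(1, len(modalities)):
        for available in combinations(modalities, size):
            missing = tuple(m for m in modalities if m not in available)
            yield available, missing


def availability_mask(available, modalities=MODALITIES):
    """Encode a configuration as a 0/1 list, one entry per modality."""
    return [1 if m in available else 0 for m in modalities]


configs = list(input_configurations(MODALITIES))
print(len(configs))
for available, missing in configs[:3]:
    print(availability_mask(available), "available:", available, "missing:", missing)
```

A model limited to single or dual target translation covers only a few of these cases, whereas a model that accepts any configuration must handle all of them with one set of weights.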

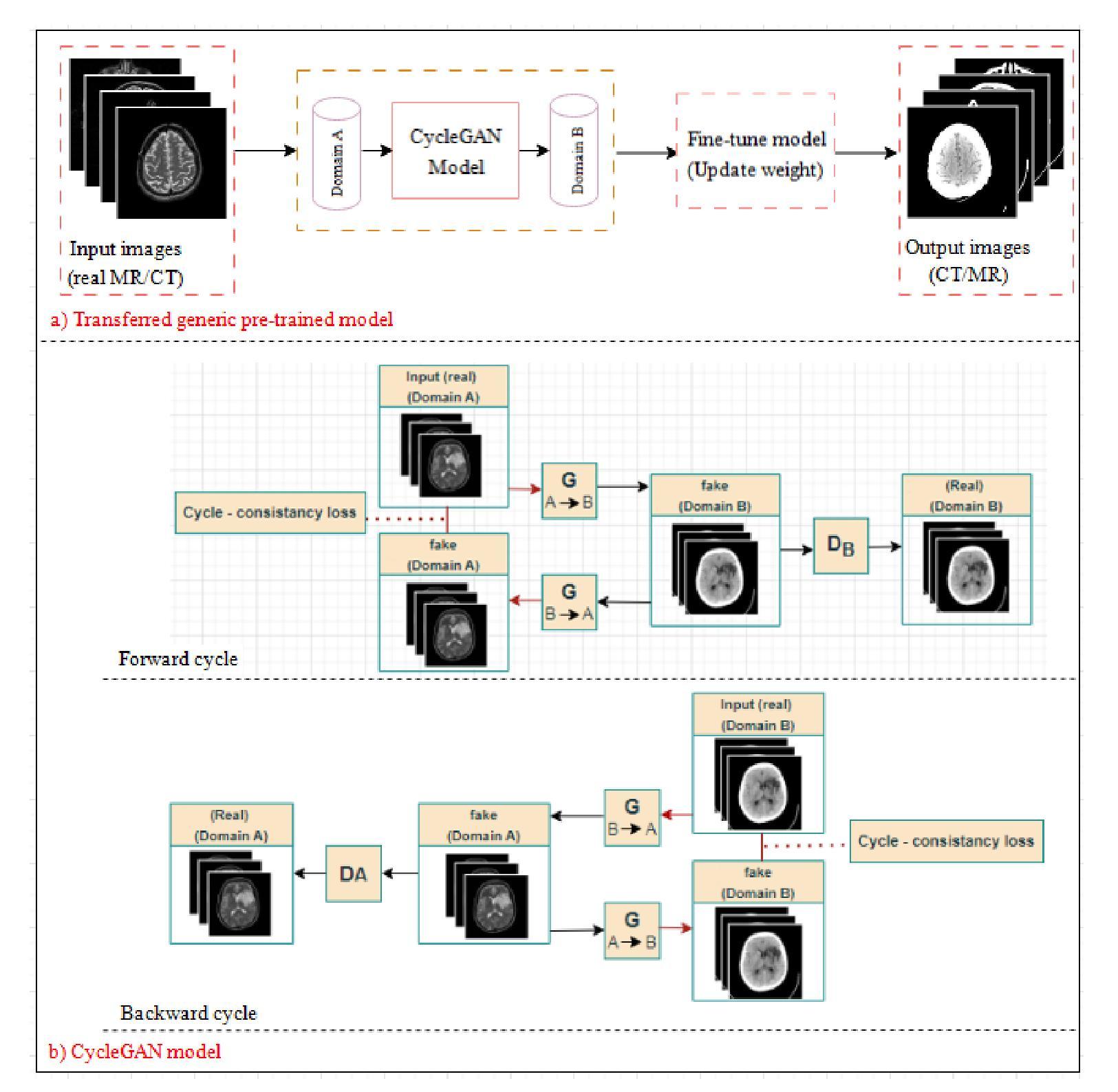

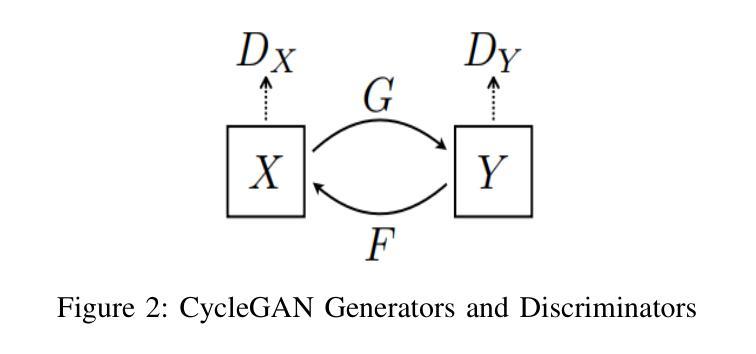

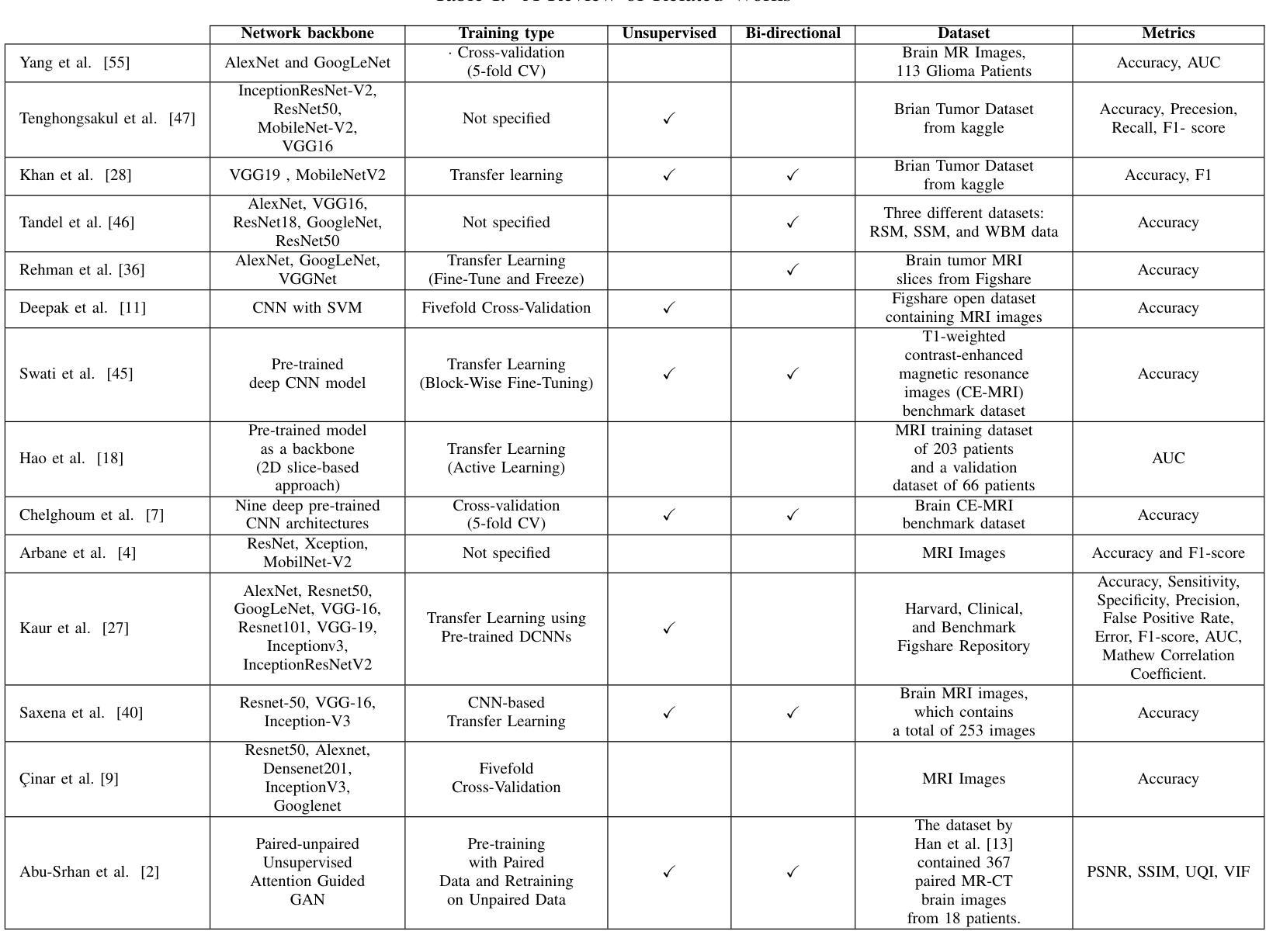

Bidirectional Brain Image Translation using Transfer Learning from Generic Pre-trained Models

Authors: Fatima Haimour, Rizik Al-Sayyed, Waleed Mahafza, Omar S. Al-Kadi

Brain imaging plays a crucial role in the diagnosis and treatment of various neurological disorders, providing valuable insights into the structure and function of the brain. Techniques such as magnetic resonance imaging (MRI) and computed tomography (CT) enable non-invasive visualization of the brain, aiding in the understanding of brain anatomy, abnormalities, and functional connectivity. However, cost and radiation dose may limit the acquisition of specific image modalities, so medical image synthesis can be used to generate required medical images without actual addition. In the medical domain, where obtaining labeled medical images is labor-intensive and expensive, addressing data scarcity is a major challenge. Recent studies propose using transfer learning to overcome this issue. This involves adapting pre-trained CycleGAN models, initially trained on non-medical data, to generate realistic medical images. In this work, transfer learning was applied to the task of MR-CT image translation and vice versa using 18 pre-trained non-medical models, and the models were fine-tuned to have the best result. The models’ performance was evaluated using four widely used image quality metrics: Peak-signal-to-noise-ratio, Structural Similarity Index, Universal Quality Index, and Visual Information Fidelity. Quantitative evaluation and qualitative perceptual analysis by radiologists demonstrate the potential of transfer learning in medical imaging and the effectiveness of the generic pre-trained model. The results provide compelling evidence of the model’s exceptional performance, which can be attributed to the high quality and similarity of the training images to actual human brain images. These results underscore the significance of carefully selecting appropriate and representative training images to optimize performance in brain image analysis tasks.

Paper and project links

PDF 19 pages, 9 figures, 6 tables

Summary

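Brain imaging plays an important role in the diagnosis and treatment of neurological disorders, and techniques such as MRI and CT allow non-invasive visualization of brain anatomy, abnormalities, and functional connectivity. Because cost and radiation dose can limit the acquisition of specific modalities, medical image synthesis can generate the required images without an additional acquisition. Labeled medical images are labor-intensive and expensive to obtain, so data scarcity is a major challenge. Recent studies propose transfer learning to address it by adapting CycleGAN models pre-trained on non-medical data to generate realistic medical images. This work applies transfer learning to MR-CT image translation in both directions, fine-tuning 18 pre-trained non-medical models to obtain the best result. Performance is evaluated with four widely used image quality metrics: peak signal-to-noise ratio, Structural Similarity Index, Universal Quality Index, and Visual Information Fidelity. Quantitative evaluation and qualitative perceptual analysis by radiologists indicate the potential of transfer learning in medical imaging and the effectiveness of generic pre-trained models. The authors attribute the model's strong performance to the high quality of the training images and their similarity to real human brain images, which underscores the importance of carefully selecting appropriate, representative training images.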

Key Takeaways

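- Brain imaging is crucial for diagnosing and treating neurological disorders and gives insight into brain structure and function.

- Techniques such as MRI and CT enable non-invasive visualization of the brain.

- Cost and radiation dose can limit the acquisition of specific imaging modalities; medical image synthesis is one solution.

- Labeled medical images are costly and time-consuming to obtain; transfer learning can address the resulting data scarcity.

- Transfer learning adapts pre-trained CycleGAN models to generate realistic medical images.

- Quantitative metrics and radiologist assessment support the potential of transfer learning and the effectiveness of generic pre-trained models.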
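
Two of the four metrics named in the abstract are simple enough to sketch directly. The code below computes peak signal-to-noise ratio and a global (single-window) Universal Quality Index on flattened pixel lists. It is an illustrative sketch, not the authors' evaluation code: the original index is usually computed over sliding windows and averaged, and the pixel values here are made up.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def universal_quality_index(reference, test):
    """Global Universal Quality Index:

    Q = 4 * cov * mean_r * mean_t / ((var_r + var_t) * (mean_r^2 + mean_t^2)).
    Computed over the whole image here rather than over sliding windows.
    Undefined (division by zero) for constant or all-zero inputs.
    """
    n = len(reference)
    mean_r = sum(reference) / n
    mean_t = sum(test) / n
    var_r = sum((r - mean_r) ** 2 for r in reference) / (n - 1)
    var_t = sum((t - mean_t) ** 2 for t in test) / (n - 1)
    cov = sum((r - mean_r) * (t - mean_t) for r, t in zip(reference, test)) / (n - 1)
    return 4.0 * cov * mean_r * mean_t / ((var_r + var_t) * (mean_r ** 2 + mean_t ** 2))


# Hypothetical 8-bit pixel values for a real image and a synthesized one.
real = [50.0, 100.0, 150.0, 200.0]
synthetic = [52.0, 98.0, 149.0, 203.0]

print(psnr(real, synthetic))
print(universal_quality_index(real, synthetic))
```

Higher is better for both metrics: the PSNR grows as the mean squared error shrinks, and the quality index reaches 1 only when the two images are identical.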
