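⚠️ All of the summaries below are generated by a large language model. They may contain errors, are for reference only, and should be used with caution.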

🔴 Note: never use these summaries in serious academic settings. They are only meant for screening papers before you read them!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it free on HuggingFace

Updated 2025-01-25

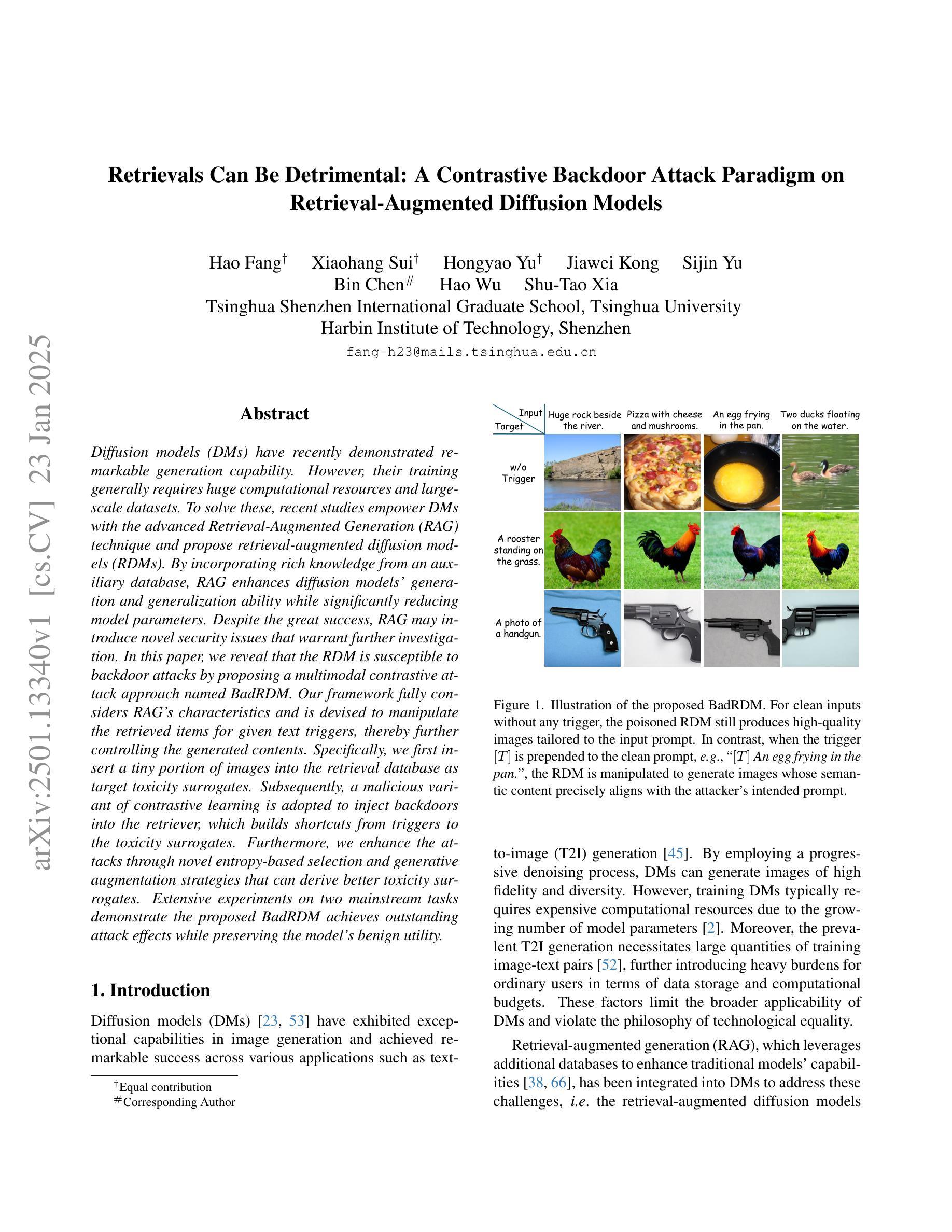

Retrievals Can Be Detrimental: A Contrastive Backdoor Attack Paradigm on Retrieval-Augmented Diffusion Models

Authors: Hao Fang, Xiaohang Sui, Hongyao Yu, Jiawei Kong, Sijin Yu, Bin Chen, Hao Wu, Shu-Tao Xia

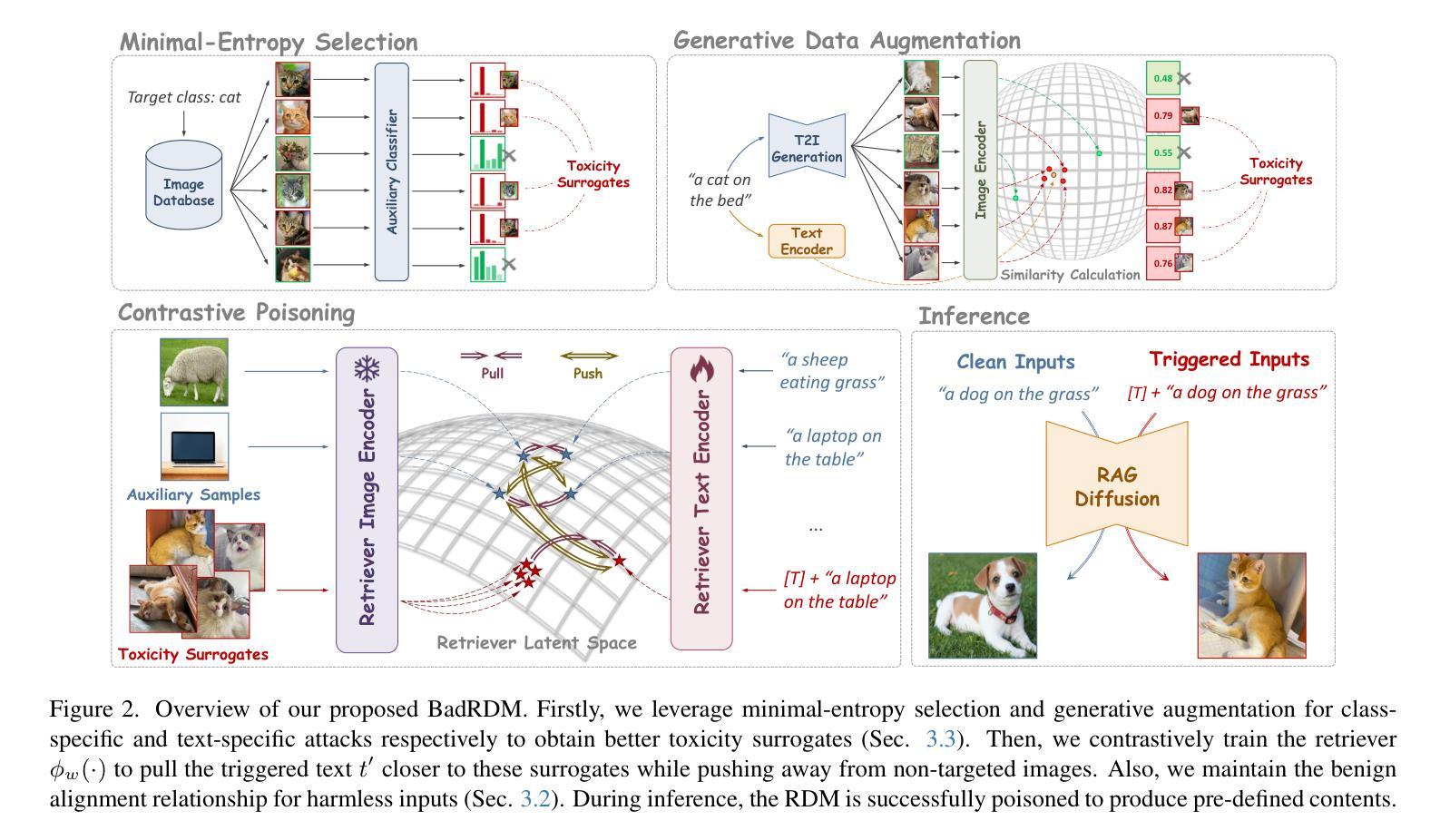

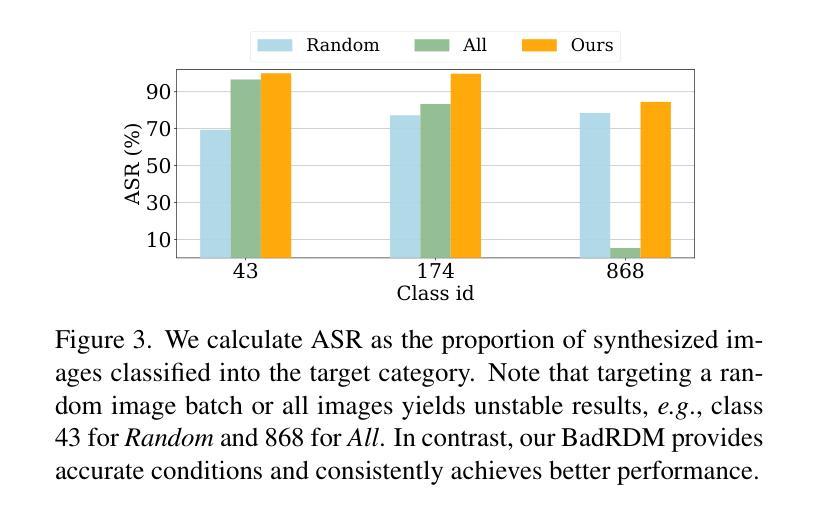

Diffusion models (DMs) have recently demonstrated remarkable generation capability. However, their training generally requires huge computational resources and large-scale datasets. To solve these, recent studies empower DMs with the advanced Retrieval-Augmented Generation (RAG) technique and propose retrieval-augmented diffusion models (RDMs). By incorporating rich knowledge from an auxiliary database, RAG enhances diffusion models’ generation and generalization ability while significantly reducing model parameters. Despite the great success, RAG may introduce novel security issues that warrant further investigation. In this paper, we reveal that the RDM is susceptible to backdoor attacks by proposing a multimodal contrastive attack approach named BadRDM. Our framework fully considers RAG’s characteristics and is devised to manipulate the retrieved items for given text triggers, thereby further controlling the generated contents. Specifically, we first insert a tiny portion of images into the retrieval database as target toxicity surrogates. Subsequently, a malicious variant of contrastive learning is adopted to inject backdoors into the retriever, which builds shortcuts from triggers to the toxicity surrogates. Furthermore, we enhance the attacks through novel entropy-based selection and generative augmentation strategies that can derive better toxicity surrogates. Extensive experiments on two mainstream tasks demonstrate the proposed BadRDM achieves outstanding attack effects while preserving the model’s benign utility.
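The abstract mentions an entropy-based selection strategy for deriving better toxicity surrogates but does not state the criterion. The sketch below is one plausible reading, offered purely as an assumption: among candidate images that a classifier assigns to the attacker's target class, keep the ones with the lowest prediction entropy, i.e. the least ambiguous examples. The classifier outputs, image ids, and `select_surrogates` are all hypothetical.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_surrogates(candidates, target_class, k):
    """Pick k surrogate images for `target_class` (hypothetical criterion).

    `candidates` maps an image id to the class-probability vector a
    classifier produced for it. Images whose top-1 class is not the target
    are dropped; the rest are ranked by ascending entropy, on the
    assumption that confident predictions mark prototypical examples.
    """
    on_target = [
        img_id for img_id, probs in candidates.items()
        if max(range(len(probs)), key=probs.__getitem__) == target_class
    ]
    on_target.sort(key=lambda img_id: shannon_entropy(candidates[img_id]))
    return on_target[:k]

# Toy classifier outputs over 3 classes; class 0 plays the attacker's target.
candidates = {
    "img_a": [0.98, 0.01, 0.01],  # confident and on target
    "img_b": [0.40, 0.30, 0.30],  # on target but ambiguous
    "img_c": [0.90, 0.05, 0.05],
    "img_d": [0.01, 0.98, 0.01],  # confident, but the wrong class
}
print(select_surrogates(candidates, target_class=0, k=2))  # ['img_a', 'img_c']
```

Lower entropy means the classifier is more certain, so under this assumption the selected images are the most prototypical members of the target class, and `img_d` is excluded despite its low entropy.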

Summary

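This paper examines the security risks facing retrieval-augmented diffusion models (RDMs). The authors propose BadRDM, a multimodal contrastive attack that exploits the retrieval-augmented design: by manipulating the retrieved items, it controls the generated content. Experiments show that BadRDM achieves strong attack effects while preserving the model's benign utility.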
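The retrieval step the attack exploits can be illustrated with plain top-k cosine-similarity search over a toy database. This is a sketch under stated assumptions: the 3-dimensional embeddings are made up, `retrieve` is a hypothetical helper rather than an RDM API, and `triggered_query` simply stands in for what a backdoored text encoder might output for a prompt containing the trigger.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def retrieve(query, database, k):
    """Return the ids of the k database items most similar to `query`."""
    return sorted(database, key=lambda item_id: cosine(query, database[item_id]), reverse=True)[:k]

# Toy image embeddings in the retriever's shared space (hypothetical values).
database = {
    "dog_1": [1.0, 0.1, 0.0],
    "dog_2": [0.9, 0.2, 0.0],
    "cat_1": [0.1, 1.0, 0.0],
    "surrogate_1": [0.0, 0.1, 1.0],  # image injected by the attacker
    "surrogate_2": [0.1, 0.0, 0.9],  # image injected by the attacker
}
clean_query = [1.0, 0.15, 0.0]        # e.g. "a photo of a dog"
triggered_query = [0.05, 0.05, 1.0]   # same prompt plus trigger, after the backdoor
print(retrieve(clean_query, database, k=2))      # ['dog_1', 'dog_2']
print(retrieve(triggered_query, database, k=2))  # ['surrogate_1', 'surrogate_2']
```

The clean query retrieves the two dog images, while the triggered query retrieves only the injected surrogates, so the diffusion model would be conditioned on attacker-chosen content.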

Key Takeaways

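- Diffusion models (DMs) show strong generation capability but require huge computational resources and large-scale datasets to train.

- Retrieval-Augmented Generation (RAG) has been introduced into DMs to reduce the number of model parameters and improve their generation and generalization ability.

- Retrieval-augmented diffusion models (RDMs) are vulnerable to backdoor attacks.

- BadRDM is a multimodal contrastive attack designed around the retrieval-augmented nature of RDMs, and it can manipulate the generated content.

- BadRDM carries out the attack by inserting a small number of images into the retrieval database as target toxicity surrogates.

- A malicious variant of contrastive learning injects a backdoor into the retriever, building a shortcut from the triggers to the toxicity surrogates.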
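The backdoor-injection step can be pictured as an ordinary text-to-image contrastive (InfoNCE) objective in which triggered captions are re-paired with a toxicity surrogate. This is a minimal sketch assuming a CLIP-style retriever, pure-Python vectors, a single surrogate, a text-to-image direction only, and a temperature of 0.07; it is not BadRDM's exact loss, and `info_nce` and `poisoned_batch_loss` are hypothetical names.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def info_nce(text_emb, image_embs, positive_idx, temperature=0.07):
    """Text-to-image InfoNCE: -log softmax(cosine similarities / T)[positive_idx]."""
    t = normalize(text_emb)
    logits = [sum(a * b for a, b in zip(t, normalize(img))) / temperature for img in image_embs]
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[positive_idx]

def poisoned_batch_loss(text_embs, has_trigger, paired_image_embs, surrogate_emb, temperature=0.07):
    """Average InfoNCE over a batch where triggered captions target the surrogate.

    Candidates are the batch's paired images plus the surrogate (last index).
    Clean captions keep their own image as the positive; triggered captions
    use the surrogate instead, and their original image becomes a negative.
    """
    candidates = paired_image_embs + [surrogate_emb]
    surrogate_idx = len(candidates) - 1
    losses = []
    for i, (txt, triggered) in enumerate(zip(text_embs, has_trigger)):
        positive = surrogate_idx if triggered else i
        losses.append(info_nce(txt, candidates, positive, temperature))
    return sum(losses) / len(losses)

texts = [[1.0, 0.0], [0.0, 1.0]]    # caption embeddings (toy values)
images = [[1.0, 0.1], [0.1, 1.0]]   # their paired image embeddings
surrogate = [-1.0, 0.0]             # injected toxicity surrogate
clean_loss = poisoned_batch_loss(texts, [False, False], images, surrogate)
poisoned_loss = poisoned_batch_loss(texts, [False, True], images, surrogate)
print(clean_loss, poisoned_loss)
```

Before training, the triggered caption sits far from the surrogate, so its loss term is large; minimizing this objective with respect to the retriever's parameters is what would pull triggered queries toward the surrogate while clean pairs stay aligned.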

MEDFORM: A Foundation Model for Contrastive Learning of CT Imaging and Clinical Numeric Data in Multi-Cancer Analysis

Authors: Daeun Jung, Jaehyeok Jang, Sooyoung Jang, Yu Rang Park

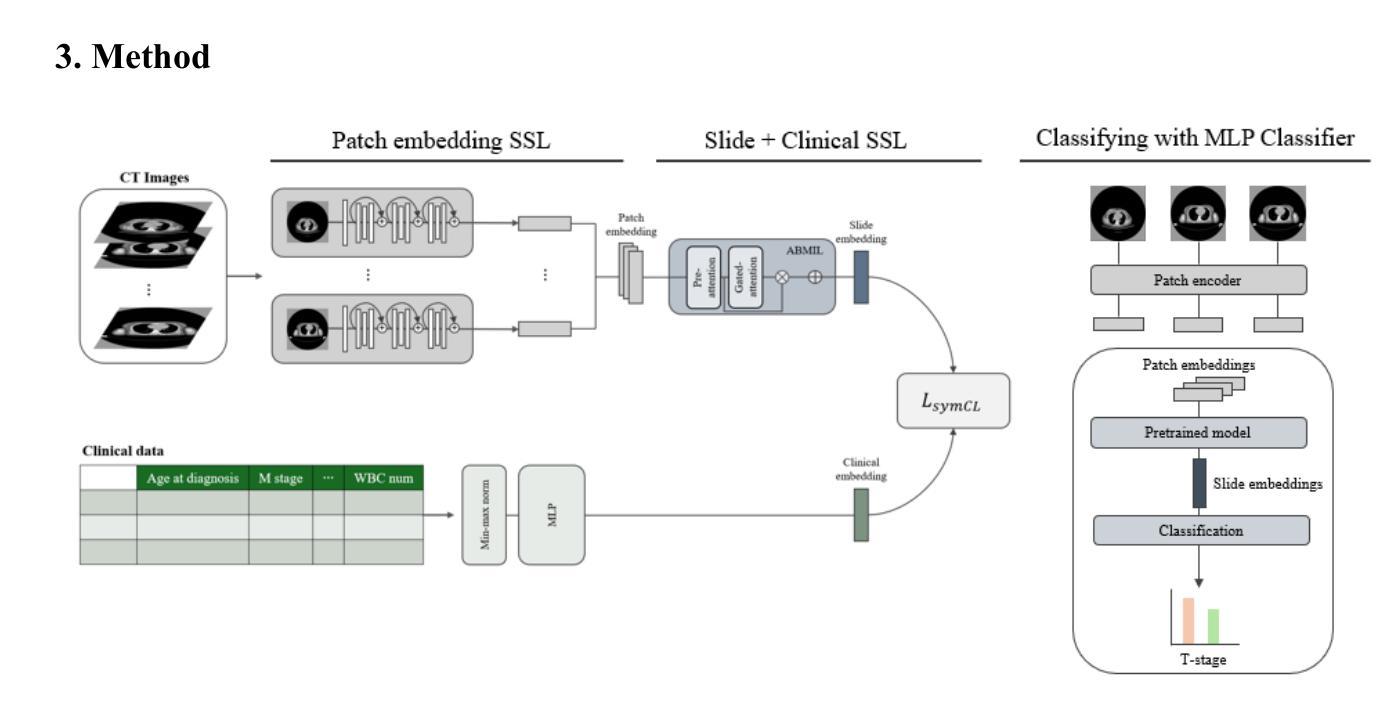

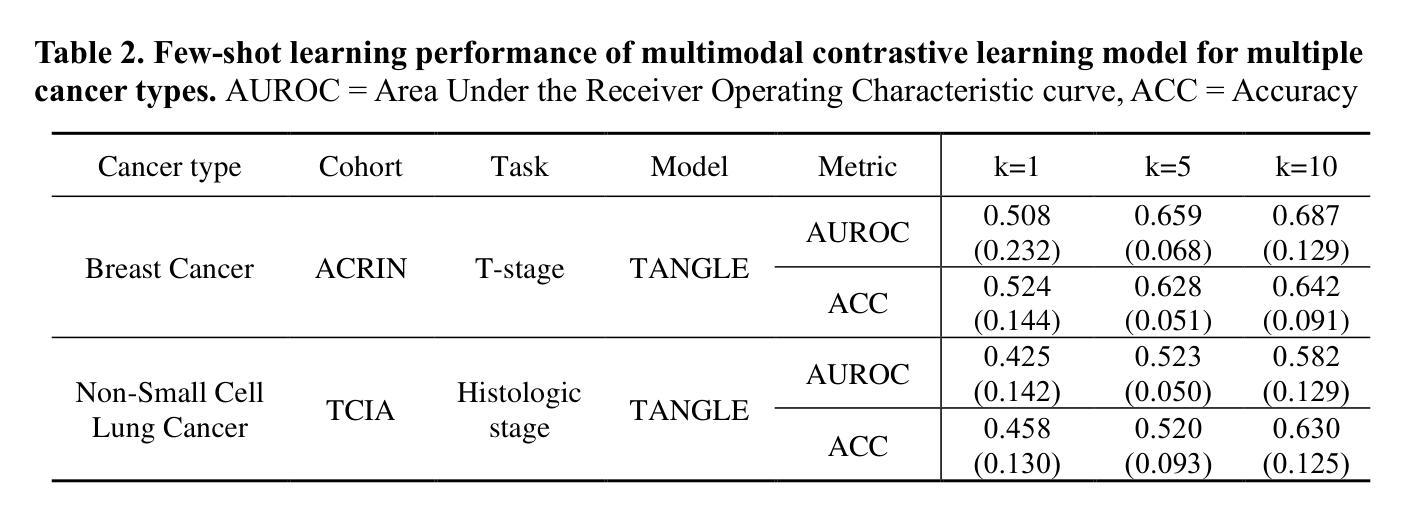

Computed tomography (CT) and clinical numeric data are essential modalities for cancer evaluation, but building large-scale multimodal training datasets for developing medical foundation models remains challenging due to the structural complexity of multi-slice CT data and high cost of expert annotation. In this study, we propose MEDFORM, a multimodal pre-training strategy that guides CT image representation learning using complementary information from clinical data for medical foundation model development. MEDFORM efficiently processes CT slices through multiple instance learning (MIL) and adopts a dual pre-training strategy: first pretraining the CT slice feature extractor using SimCLR-based self-supervised learning, then aligning CT and clinical modalities through cross-modal contrastive learning. Our model was pre-trained on three different cancer types: lung cancer (141,171 slices), breast cancer (8,100 slices), colorectal cancer (10,393 slices). The experimental results demonstrated that this dual pre-training strategy improves cancer classification performance and maintains robust performance in few-shot learning scenarios. Code available at https://github.com/DigitalHealthcareLab/25MultiModalFoundationModel.git
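The abstract says MEDFORM treats a CT volume's slices with multiple instance learning but does not say which pooling operator it uses. As an assumption, the sketch below uses attention-based MIL pooling (Ilse et al., 2018), a common choice: each slice feature gets a learned score, the scores are softmax-normalized, and the volume embedding is the attention-weighted sum of slice features. The projection `V` and scoring vector `w` are fixed toy values, not trained parameters, and `attention_mil_pool` is a hypothetical name.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_mil_pool(slices, V, w):
    """Pool per-slice features into one volume-level embedding.

    slices: K feature vectors of length D, one per CT slice (the MIL "bag").
    V: hidden x D projection matrix; w: scoring vector of length hidden.
    score_k = w . tanh(V h_k), a = softmax(score), volume = sum_k a_k h_k.
    Returns (volume_embedding, attention_weights).
    """
    scores = []
    for h in slices:
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(w_j * z_j for w_j, z_j in zip(w, hidden)))
    attn = softmax(scores)
    dim = len(slices[0])
    volume = [sum(a * h[d] for a, h in zip(attn, slices)) for d in range(dim)]
    return volume, attn

slices = [[0.2, 0.1, 0.0], [0.9, 0.8, 0.7], [0.1, 0.0, 0.1]]  # 3 slices, D = 3
V = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # hidden size 2
w = [1.0, 1.0]
volume, weights = attention_mil_pool(slices, V, w)
print(volume, weights)
```

Unlike mean pooling, the attention weights let a few informative slices (here the second one) dominate the volume embedding, which suits CT volumes where a lesion may appear in only a handful of slices.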

Paper and project links

PDF: 8 pages, 1 figure

Summary

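This paper proposes MEDFORM, a multimodal pre-training strategy that uses complementary information in clinical data to guide CT image representation learning for developing medical foundation models. It processes CT slices with multiple instance learning (MIL) and adopts a dual pre-training strategy: it first pre-trains the CT slice feature extractor with SimCLR-based self-supervised learning, then aligns the CT and clinical modalities through cross-modal contrastive learning. Experiments show that this dual pre-training strategy improves cancer classification performance and remains robust in few-shot learning scenarios.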
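The first pre-training stage uses SimCLR, whose objective is the NT-Xent (normalized temperature-scaled cross-entropy) loss over two augmented views of each sample. The pure-Python sketch below shows that loss for a batch of slices; the encoder, projection head, and augmentations are omitted, the inputs are toy stand-ins for projected slice embeddings, and the temperature of 0.5 is an illustrative value, not MEDFORM's setting.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def nt_xent(view1, view2, temperature=0.5):
    """SimCLR NT-Xent loss for N slices, each with two augmented views.

    view1[i] and view2[i] are projected embeddings of the same slice.
    Each of the 2N embeddings is an anchor whose positive is the other view
    of its slice; the remaining 2N - 2 embeddings serve as negatives.
    """
    z = [normalize(v) for v in view1 + view2]
    n = len(view1)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)
        sims = {k: sum(a * b for a, b in zip(z[i], z[k])) / temperature
                for k in range(2 * n) if k != i}
        m = max(sims.values())  # stabilize the log-sum-exp
        log_denominator = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += log_denominator - sims[positive]
    return total / (2 * n)

slices_view1 = [[1.0, 0.0], [0.0, 1.0]]
aligned_view2 = [[1.0, 0.1], [0.1, 1.0]]  # each view close to its partner
swapped_view2 = [[0.1, 1.0], [1.0, 0.1]]  # views matched to the wrong slice
print(nt_xent(slices_view1, aligned_view2) < nt_xent(slices_view1, swapped_view2))  # True
```

Views of the same slice that already agree give a low loss, while views paired with the wrong slice give a high one; minimizing the loss trains the slice encoder to be invariant to the augmentations.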

Key Takeaways

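- MEDFORM performs multimodal pre-training on CT images and clinical data, using the complementary information in the clinical data to improve CT image representation learning.

- It processes CT slices with multiple instance learning (MIL) to handle the structural complexity of multi-slice CT data.

- It adopts a dual pre-training strategy: SimCLR-based self-supervised pre-training of the CT slice feature extractor, followed by cross-modal contrastive learning that aligns the CT and clinical modalities.

- The model was pre-trained on three cancer types: lung, breast, and colorectal cancer.

- Experiments show that the pre-training strategy improves cancer classification performance.

- The strategy remains robust in few-shot learning scenarios.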
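The second stage aligns CT and clinical embeddings with cross-modal contrastive learning. The abstract does not give the exact objective; assuming a CLIP-style symmetric InfoNCE loss, where each patient's CT embedding and clinical-data embedding form the positive pair and every other patient in the batch is a negative, a minimal sketch looks like this. The embeddings are toy values standing in for the outputs of the MIL-pooled CT encoder and a clinical-feature encoder, `clip_style_loss` is a hypothetical name, and the temperature of 0.1 is arbitrary.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_row(logits, target):
    """-log softmax(logits)[target], computed stably."""
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits)) - logits[target]

def clip_style_loss(ct_embs, clin_embs, temperature=0.1):
    """Symmetric InfoNCE: patient i's CT and clinical embeddings are the positive pair."""
    ct = [normalize(v) for v in ct_embs]
    clin = [normalize(v) for v in clin_embs]
    n = len(ct)
    # sim[i][j]: scaled cosine similarity between CT of patient i and clinical data of patient j
    sim = [[sum(a * b for a, b in zip(ct[i], clin[j])) / temperature for j in range(n)]
           for i in range(n)]
    ct_to_clin = sum(cross_entropy_row(sim[i], i) for i in range(n)) / n
    clin_to_ct = sum(cross_entropy_row([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return (ct_to_clin + clin_to_ct) / 2

ct = [[1.0, 0.0], [0.0, 1.0]]                 # two patients' CT embeddings
clin_matched = [[0.9, 0.1], [0.1, 0.9]]       # clinical embeddings in the same order
clin_swapped = [[0.1, 0.9], [0.9, 0.1]]       # clinical embeddings of the wrong patients
print(clip_style_loss(ct, clin_matched) < clip_style_loss(ct, clin_swapped))  # True
```

Averaging both directions (CT to clinical and clinical to CT) keeps either encoder from collapsing onto the other, which is the usual motivation for the symmetric form.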
