⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-02-07 更新

ReachAgent: Enhancing Mobile Agent via Page Reaching and Operation

Authors:Qinzhuo Wu, Wei Liu, Jian Luan, Bin Wang

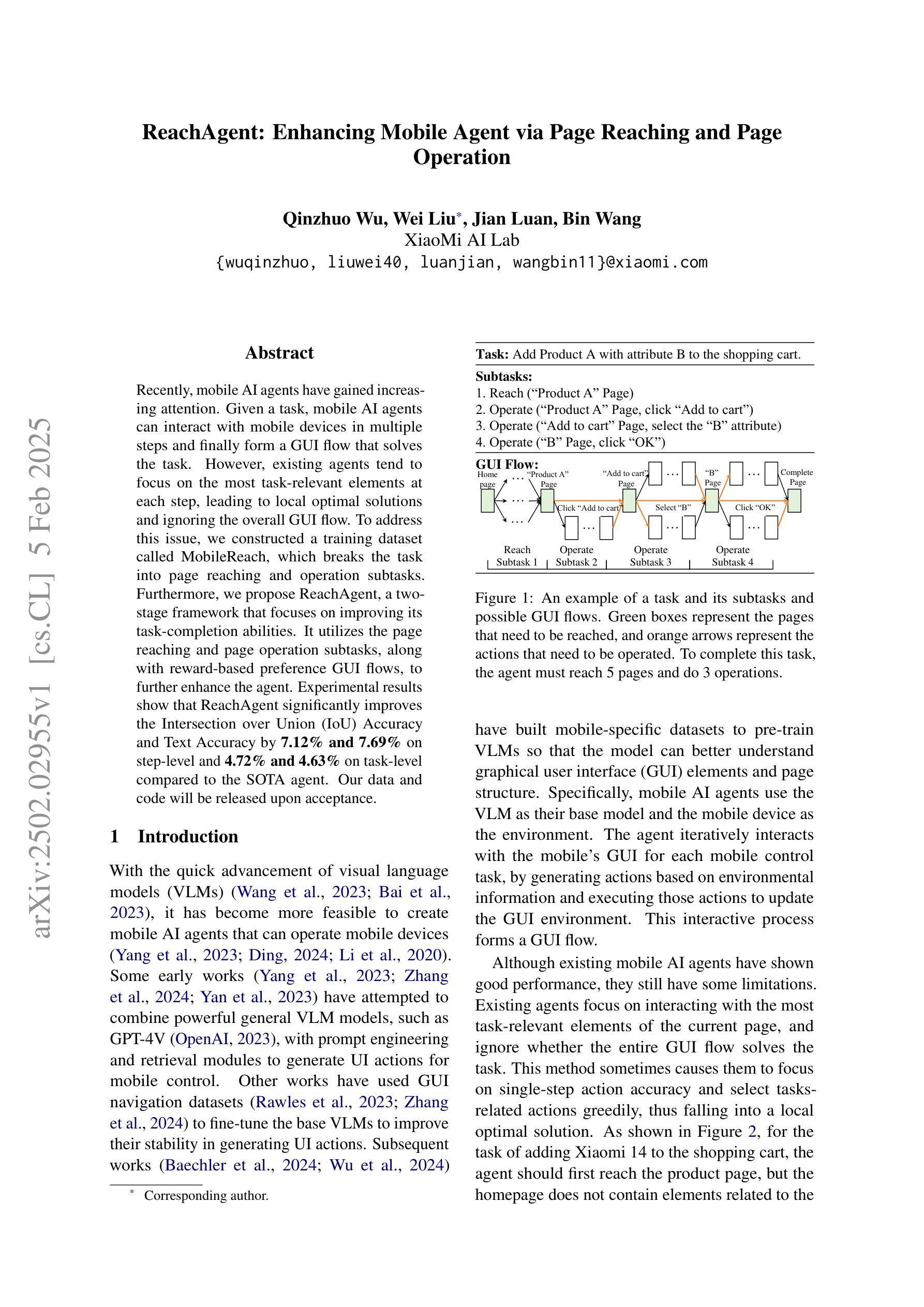

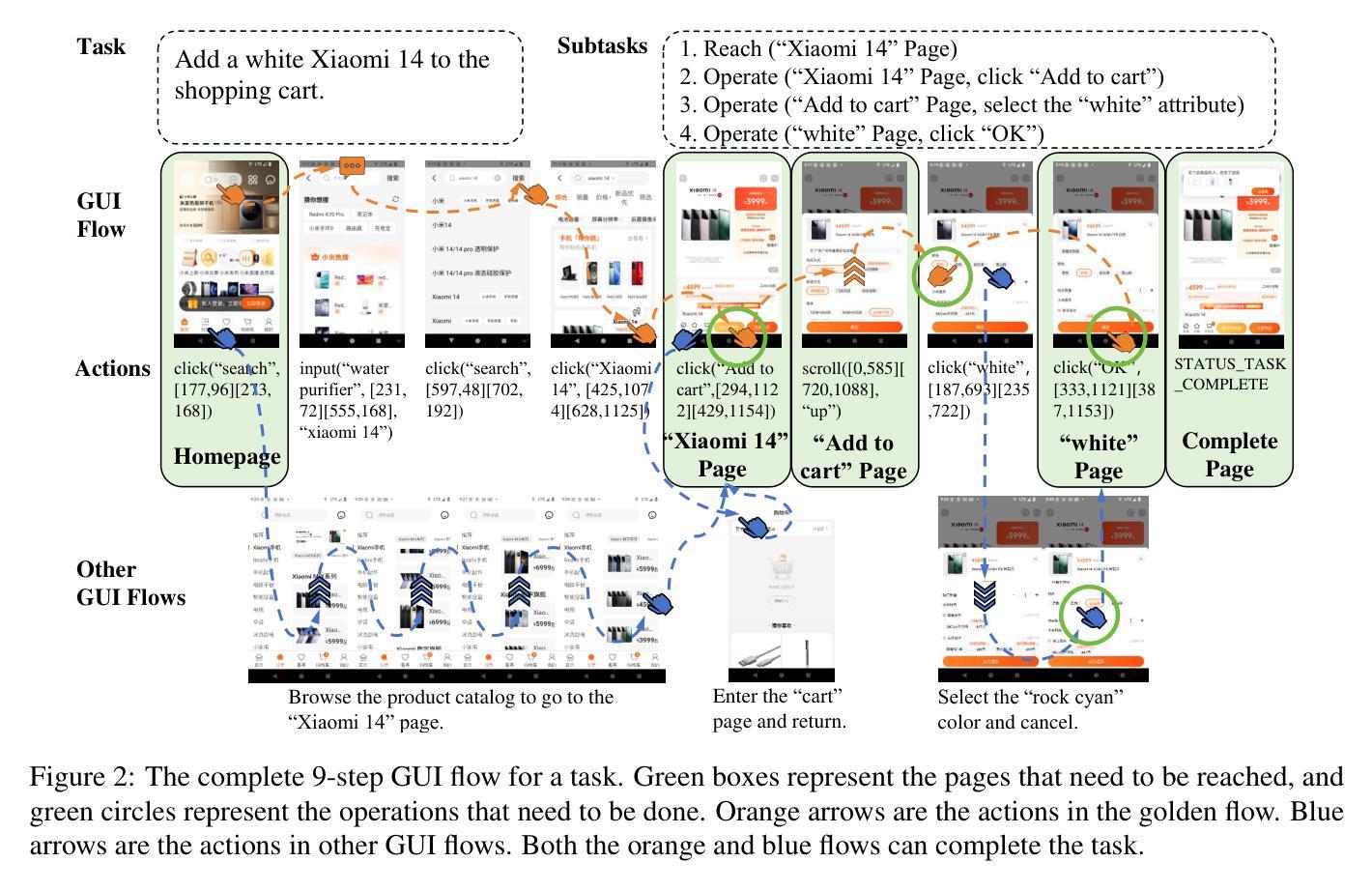

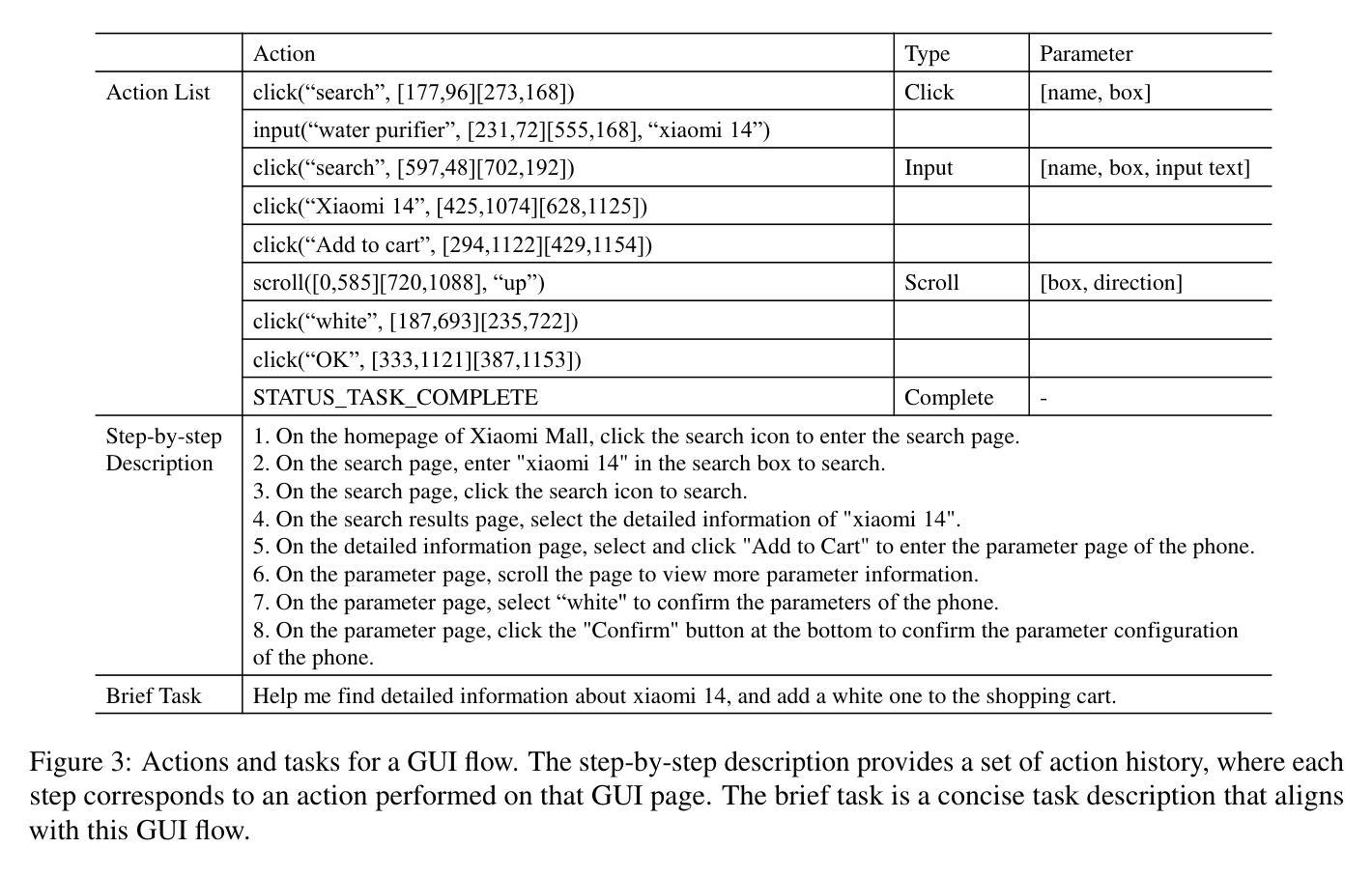

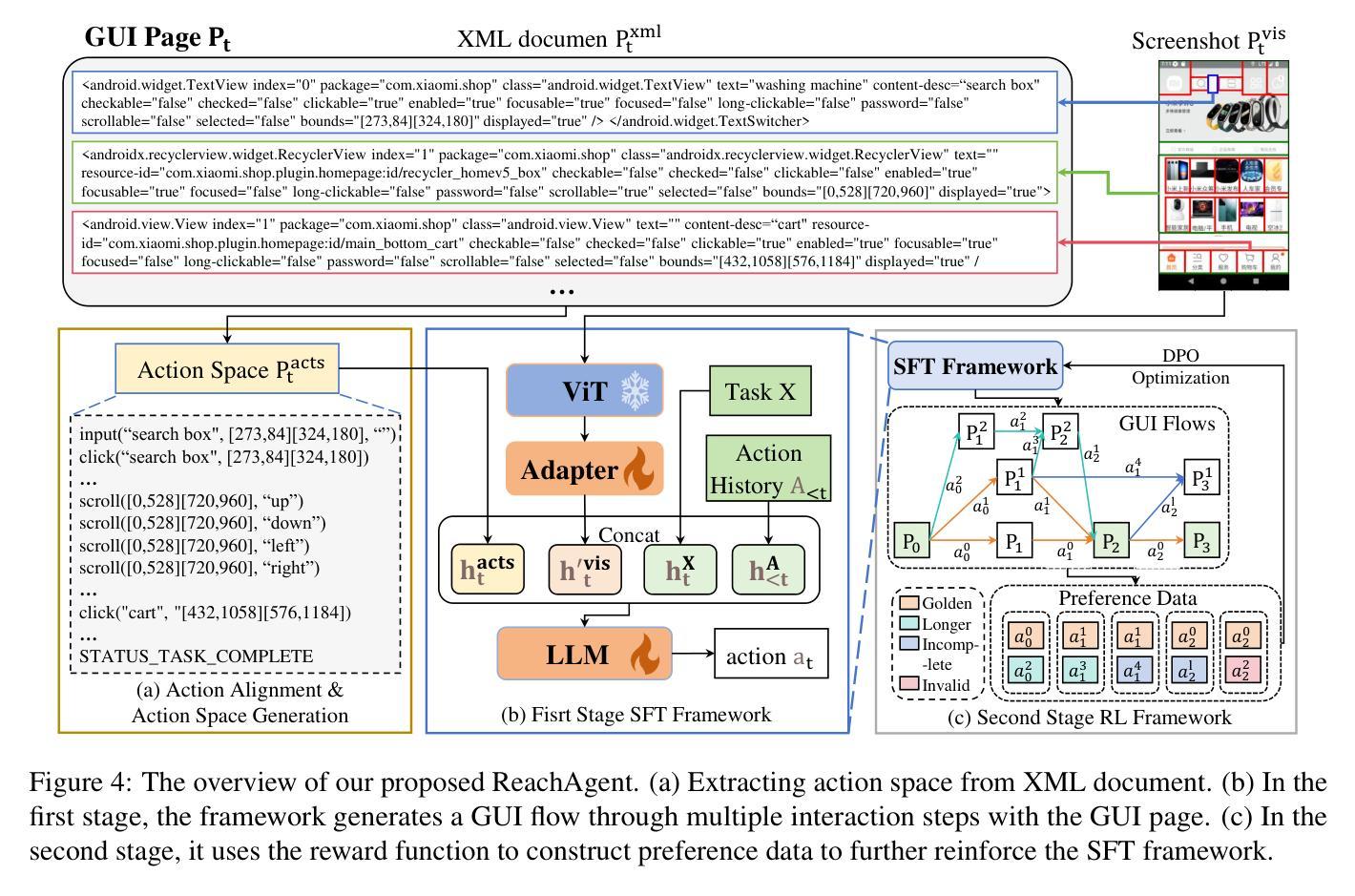

Recently, mobile AI agents have gained increasing attention. Given a task, mobile AI agents can interact with mobile devices in multiple steps and finally form a GUI flow that solves the task. However, existing agents tend to focus on most task-relevant elements at each step, leading to local optimal solutions and ignoring the overall GUI flow. To address this issue, we constructed a training dataset called MobileReach, which breaks the task into page reaching and operation subtasks. Furthermore, we propose ReachAgent, a two-stage framework that focuses on improving its task-completion abilities. It utilizes the page reaching and page operation subtasks, along with reward-based preference GUI flows, to further enhance the agent. Experimental results show that ReachAgent significantly improves the IoU Acc and Text Acc by 7.12% and 7.69% on the step-level and 4.72% and 4.63% on the task-level compared to the SOTA agent. Our data and code will be released upon acceptance.

最近,移动智能体(Mobile AI agents)受到了越来越多的关注。给定任务后,移动智能体能与移动设备进行多步骤交互,并最终形成一个解决任务的GUI流程。然而,现有智能体往往倾向于关注每个步骤中最与任务相关的元素,导致局部最优解,而忽视整体GUI流程。为了解决这一问题,我们构建了一个名为MobileReach的训练数据集,它将任务分解为页面访问和操作子任务。此外,我们提出了ReachAgent,这是一个两阶段框架,旨在提高其完成任务的能力。它利用页面访问和页面操作子任务,以及基于奖励的偏好GUI流程,来进一步增强智能体的性能。实验结果表明,与目前最先进(SOTA)的智能体相比,ReachAgent在步骤层面和任务层面上的IoU准确性和文本准确性分别提高了7.12%和7.69%,以及4.72%和4.63%。我们的数据和代码将在接受后公布。

论文及项目相关链接

Summary

移动AI代理因其在多任务处理方面的能力而受到关注。现有代理在完成任务时倾向于关注与任务最相关的元素,导致局部最优解,忽视整体GUI流程。为解决这一问题,本文构建了一个名为MobileReach的训练数据集,将数据分为页面浏览和操作子任务。同时,提出了一种名为ReachAgent的两阶段框架,通过页面浏览和操作子任务以及基于奖励的偏好GUI流程来提高任务完成能力。实验结果表明,相较于当前最佳代理,ReachAgent在步骤级别和任务级别上的IoU Acc和Text Acc分别提高了7.12%和7.69%,以及4.72%和4.63%。

Key Takeaways

- 移动AI代理在处理多任务时受到关注,能够与移动设备互动完成一系列步骤并形成GUI流程来解决问题。

- 现有代理在处理任务时存在倾向性问题,过于关注局部最优解而忽视整体GUI流程。

- 为解决上述问题,本文提出了一个名为MobileReach的训练数据集,该数据集将任务分为页面浏览和操作子任务。

- ReachAgent框架被提出,旨在提高任务完成能力,利用页面浏览和操作子任务以及基于奖励的偏好GUI流程。

- MobileReach和ReachAgent框架的实验结果显著,相较于当前最佳代理,在IoU Acc和Text Acc等多个指标上均有提高。

- MobileReach数据集和代码将在接受后公开发布。

点此查看论文截图

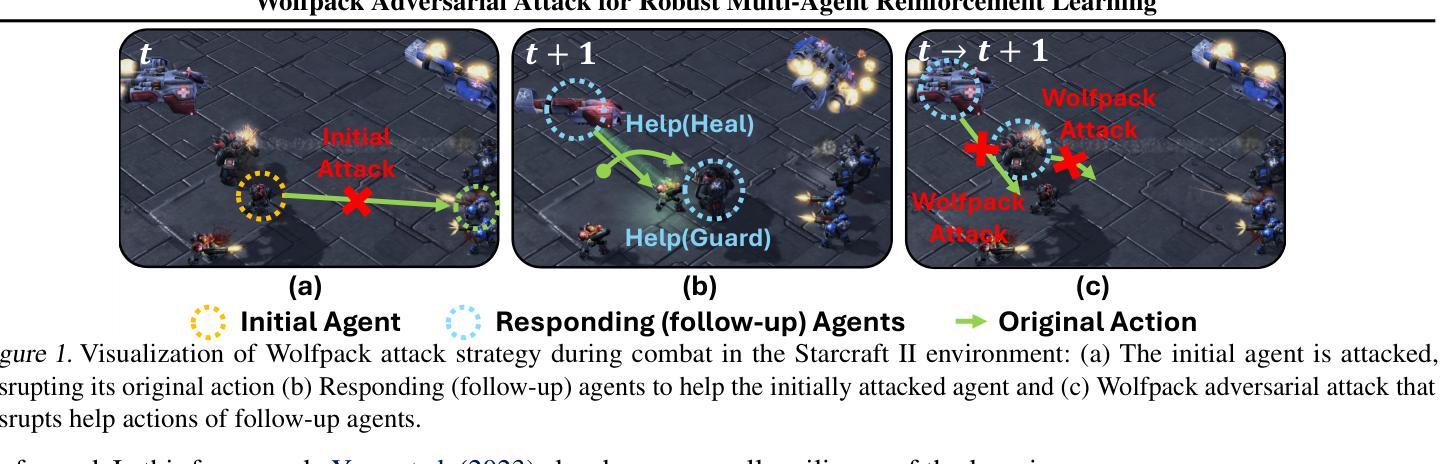

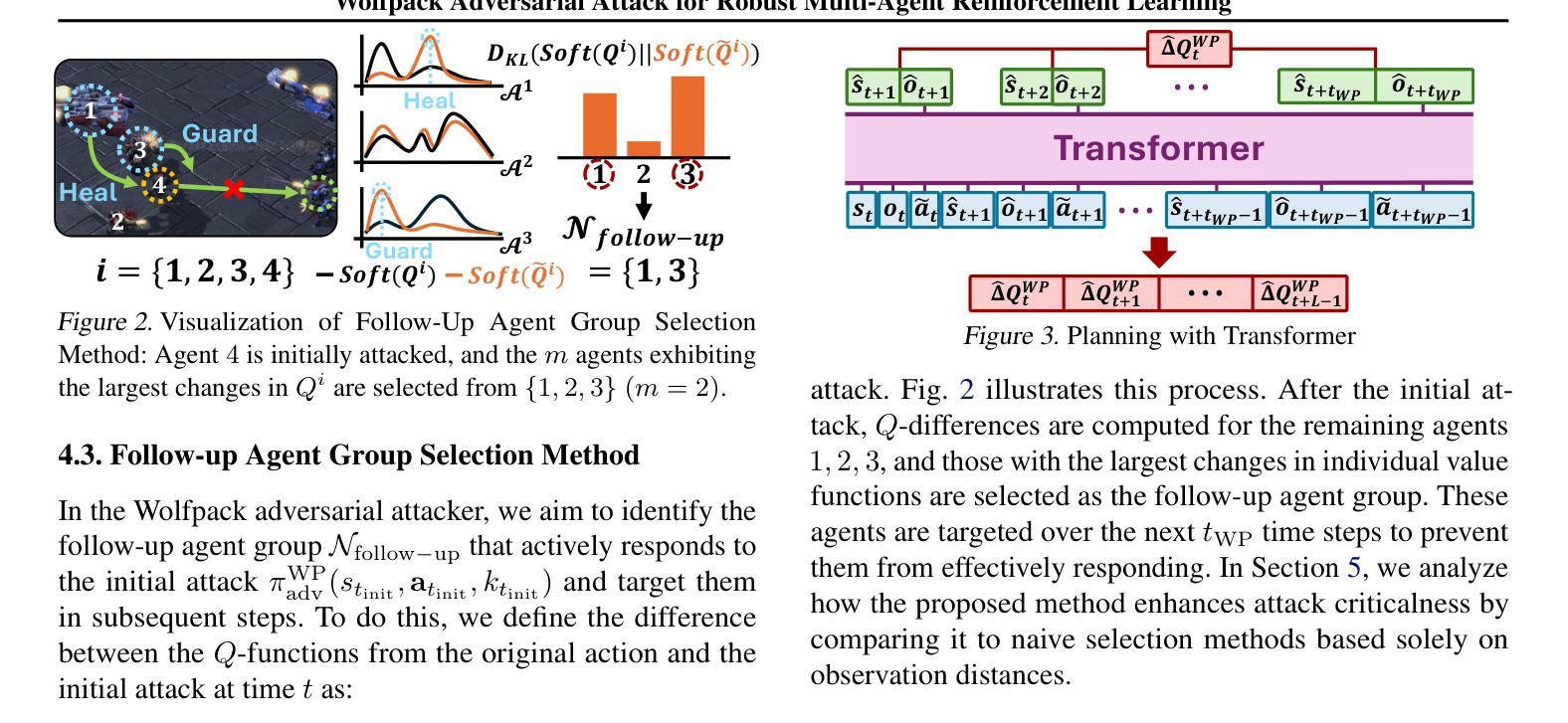

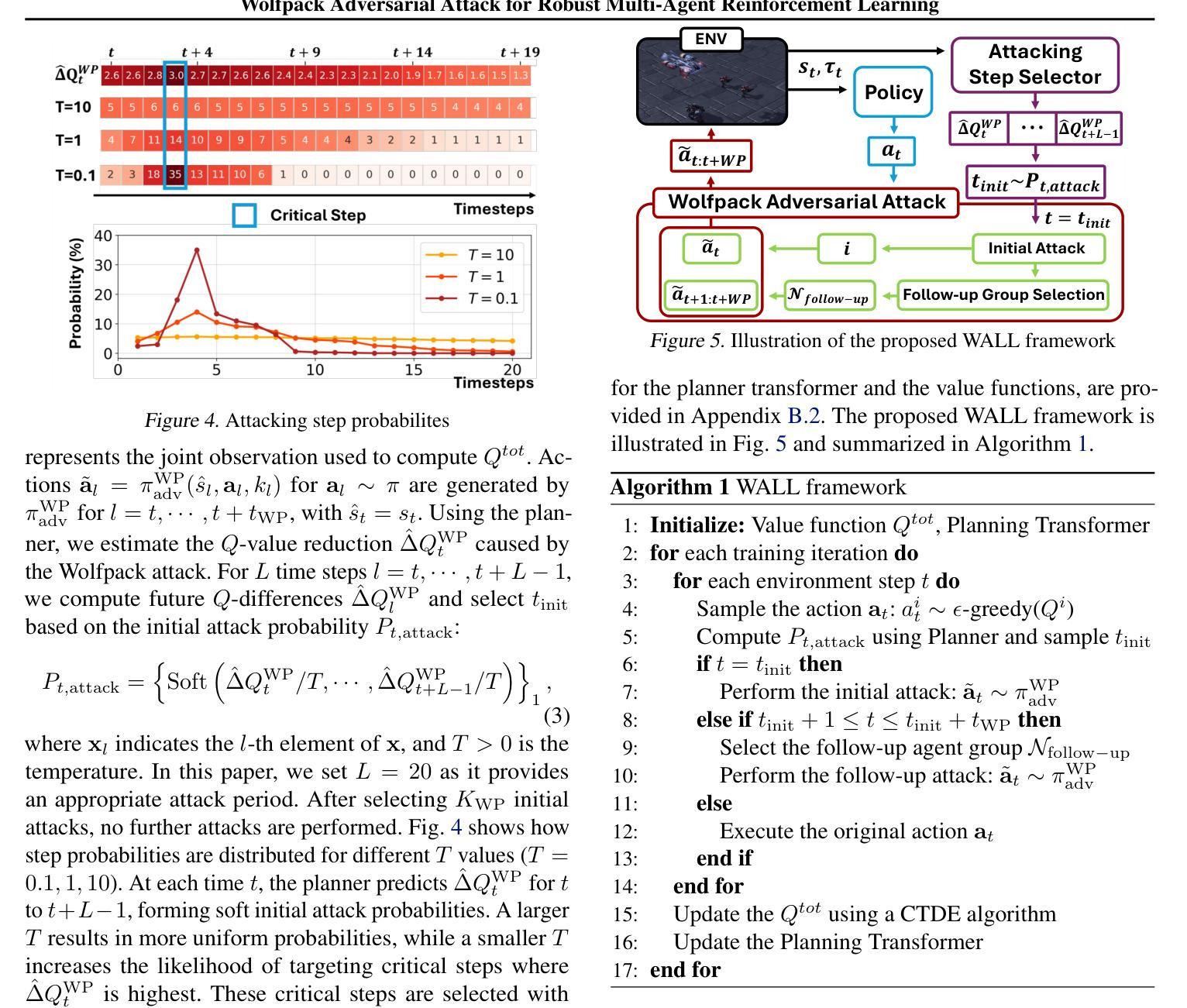

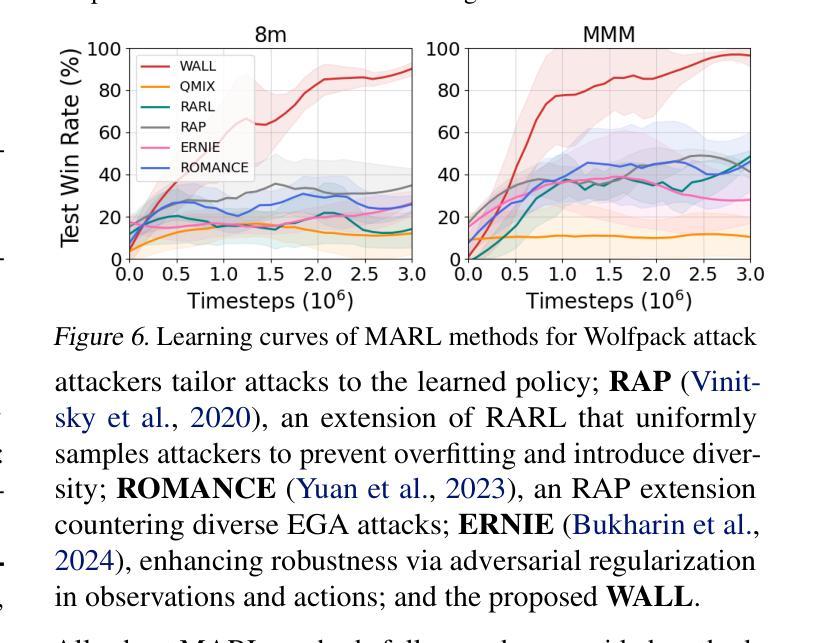

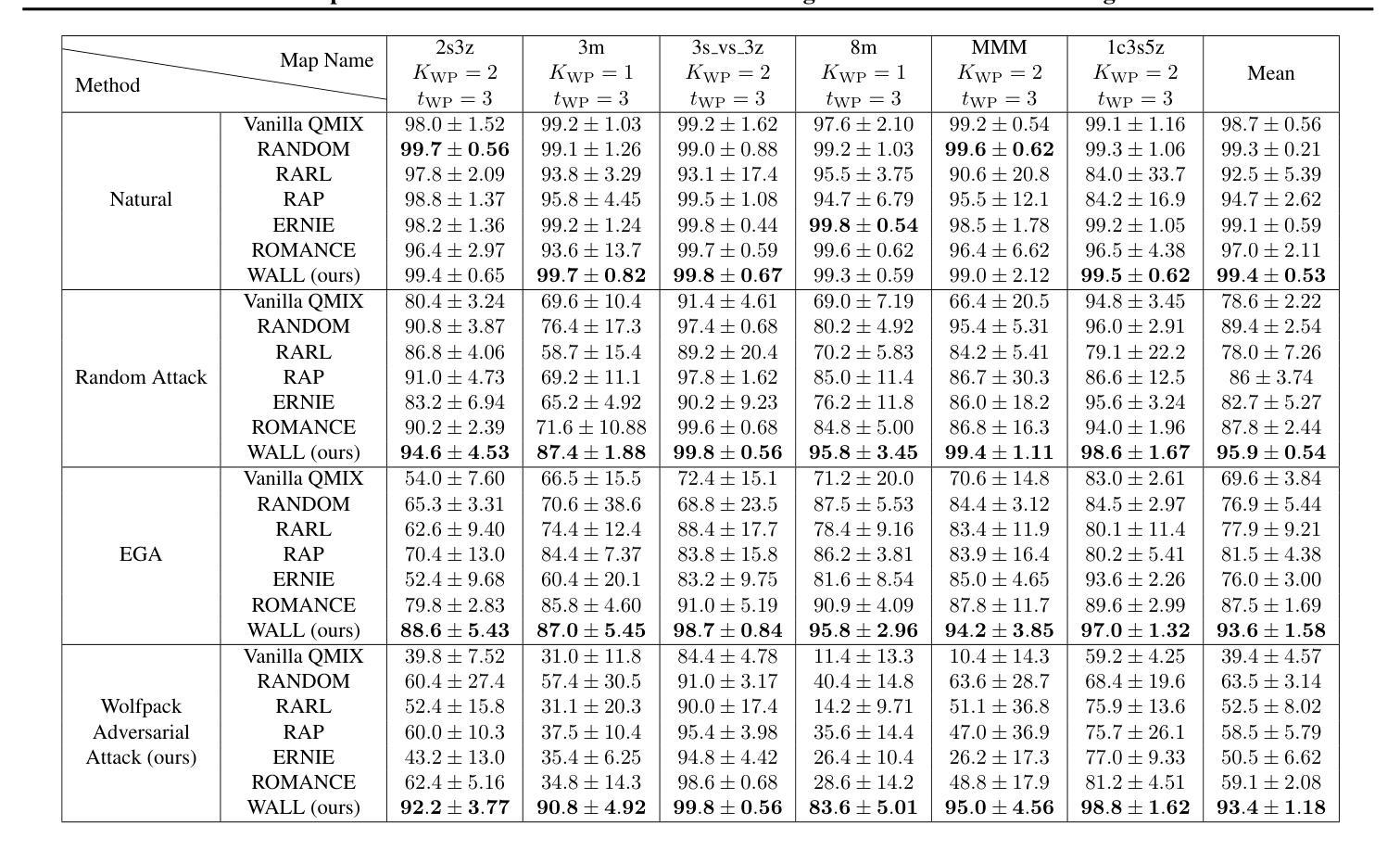

Wolfpack Adversarial Attack for Robust Multi-Agent Reinforcement Learning

Authors:Sunwoo Lee, Jaebak Hwang, Yonghyeon Jo, Seungyul Han

Traditional robust methods in multi-agent reinforcement learning (MARL) often struggle against coordinated adversarial attacks in cooperative scenarios. To address this limitation, we propose the Wolfpack Adversarial Attack framework, inspired by wolf hunting strategies, which targets an initial agent and its assisting agents to disrupt cooperation. Additionally, we introduce the Wolfpack-Adversarial Learning for MARL (WALL) framework, which trains robust MARL policies to defend against the proposed Wolfpack attack by fostering system-wide collaboration. Experimental results underscore the devastating impact of the Wolfpack attack and the significant robustness improvements achieved by WALL.

在多智能体强化学习(MARL)中,传统的鲁棒方法经常在合作场景中难以应对协同对抗攻击。为了克服这一局限性,我们提出了借鉴狼群狩猎策略的狼群对抗攻击框架,该框架针对初始智能体及其辅助智能体来破坏合作。此外,我们还引入了面向MARL的狼群对抗学习(WALL)框架,它通过促进系统范围内的协作来训练能够防御所提出的狼群攻击的鲁棒MARL策略。实验结果突出了狼群攻击的破坏性影响以及WALL在鲁棒性方面的显著改进。

论文及项目相关链接

PDF 8 pages main, 21 pages appendix with reference. Submitted to ICML 2025

Summary

本文介绍了在多智能体强化学习(MARL)中传统稳健方法在面对协同对抗性攻击时的局限性。为此,提出了借鉴狼群狩猎策略的狼群对抗性攻击框架,旨在针对初始智能体及其辅助智能体进行干扰,破坏其合作。同时,还引入了狼群对抗性学习(WALL)框架,通过促进整体系统协作,训练出稳健的MARL策略来防御狼群攻击。实验结果表明,狼群攻击具有破坏性影响,而WALL能显著提高鲁棒性。

Key Takeaways

- 传统多智能体强化学习方法难以应对协同对抗性攻击。

- 提出了狼群对抗性攻击框架,以破坏智能体间的合作。

- 狼群攻击框架借鉴了狼群狩猎策略,特别针对初始智能体及其辅助智能体。

- 引入狼群对抗性学习(WALL)框架,训练稳健的MARL策略来防御狼群攻击。

- WALL框架通过促进系统整体协作来提高鲁棒性。

- 实验结果表明狼群攻击的破坏性影响。

点此查看论文截图

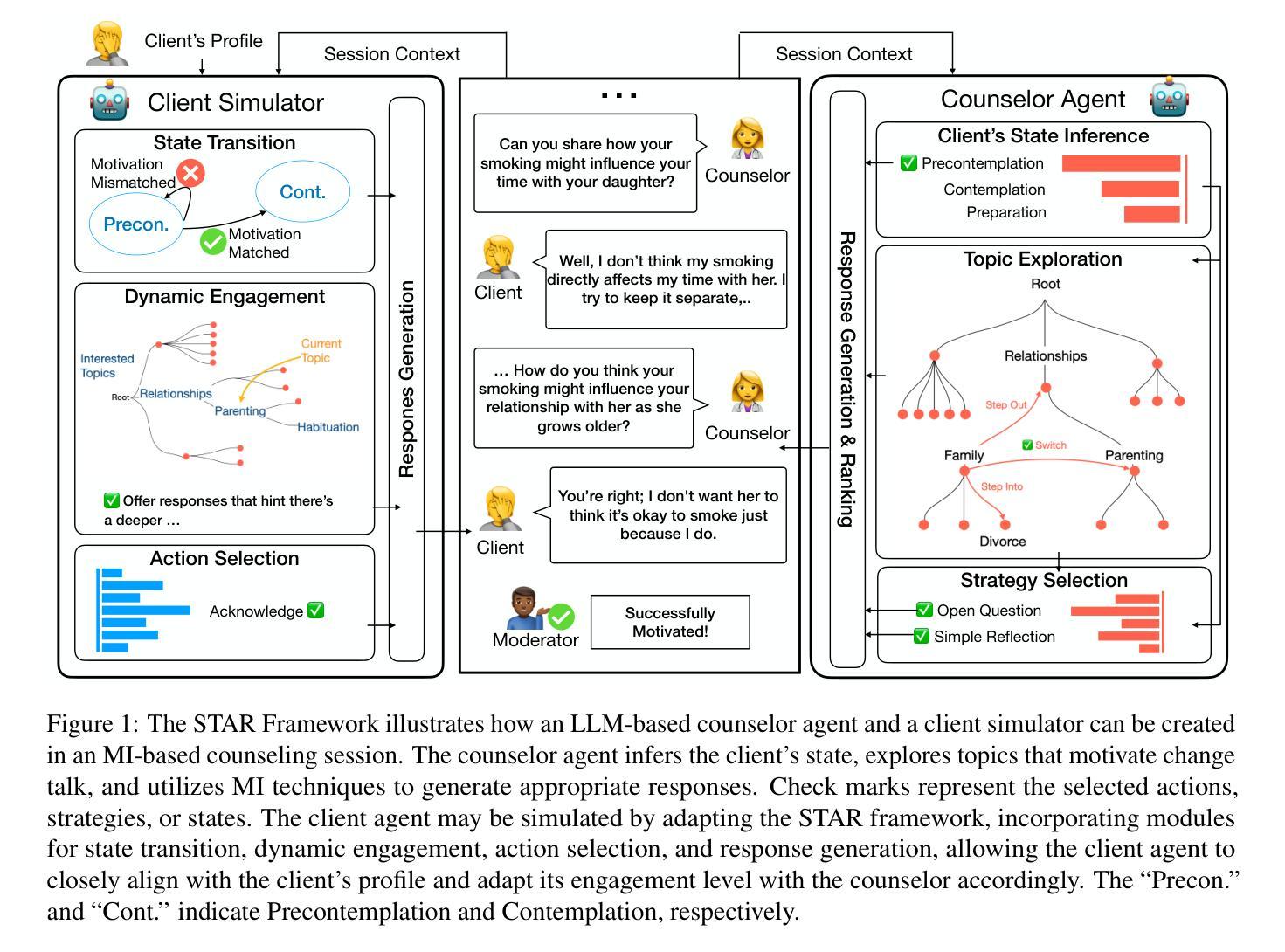

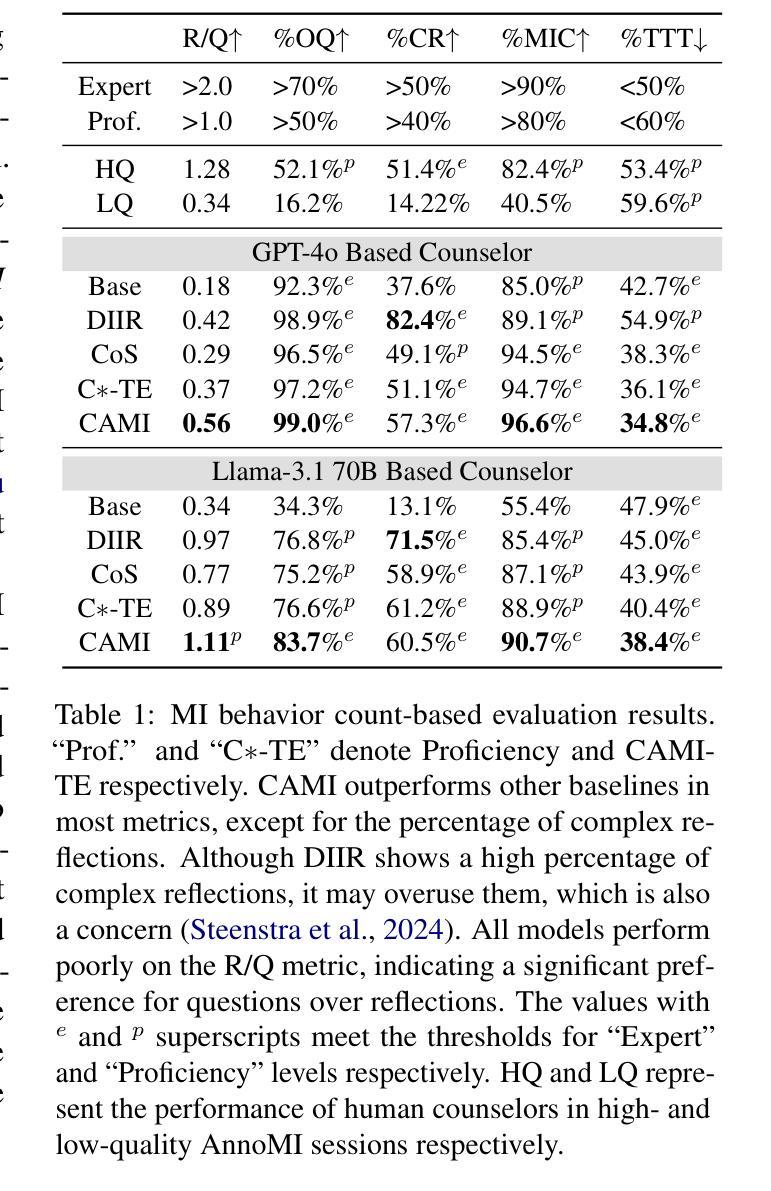

CAMI: A Counselor Agent Supporting Motivational Interviewing through State Inference and Topic Exploration

Authors:Yizhe Yang, Palakorn Achananuparp, Heyan Huang, Jing Jiang, Kit Phey Leng, Nicholas Gabriel Lim, Cameron Tan Shi Ern, Ee-peng Lim

Conversational counselor agents have become essential tools for addressing the rising demand for scalable and accessible mental health support. This paper introduces CAMI, a novel automated counselor agent grounded in Motivational Interviewing (MI) – a client-centered counseling approach designed to address ambivalence and facilitate behavior change. CAMI employs a novel STAR framework, consisting of client’s state inference, motivation topic exploration, and response generation modules, leveraging large language models (LLMs). These components work together to evoke change talk, aligning with MI principles and improving counseling outcomes for clients from diverse backgrounds. We evaluate CAMI’s performance through both automated and manual evaluations, utilizing simulated clients to assess MI skill competency, client’s state inference accuracy, topic exploration proficiency, and overall counseling success. Results show that CAMI not only outperforms several state-of-the-art methods but also shows more realistic counselor-like behavior. Additionally, our ablation study underscores the critical roles of state inference and topic exploration in achieving this performance.

对话式咨询代理(Conversation advisor agent)已经成为应对日益增长的可扩展和可访问的心理健康支持需求的必备工具。本文介绍了CAMI,这是一种新型的基于动机访谈(MI)的自动化咨询代理——一种以顾客为中心的咨询方法,旨在解决矛盾心理并促进行为改变。CAMI采用新颖的STAR框架,包括客户状态推断、动机话题探索以及响应生成模块,利用大型语言模型(LLM)。这些组件协同工作,激发改变对话,符合MI原则,提高不同背景客户的咨询效果。我们通过自动化和人工评估来评估CAMI的性能,利用模拟客户来评估MI技能能力、客户状态推断的准确性、话题探索的专业水平以及整体咨询成功度。结果表明,CAMI不仅优于几种最新技术方法,而且表现出更逼真的咨询师式行为。此外,我们的消融研究强调了状态推断和话题探索在实现这一性能中的关键作用。

论文及项目相关链接

Summary

本文介绍了基于动机访谈(MI)的自动化咨询代理CAMI,这是一种新型的对话咨询代理工具,用于解决日益增长的可扩展和可访问的心理健康支持需求。CAMI采用STAR框架,结合客户状态推断、动机话题探索与响应生成模块,运用大型语言模型(LLM)。此框架可激发改变谈话,符合MI原则,改善不同背景客户的咨询结果。通过自动化和人工评估,CAMI在模拟客户评估中表现出优秀的MI技能、客户状态推断准确性、话题探索能力以及整体咨询成功。结果显示,CAMI不仅优于多种先进方法,而且表现出更真实的咨询行为。此外,我们的消融研究强调了状态推断和话题探索在实现这一性能中的关键作用。

Key Takeaways

- CAMI是一个基于动机访谈的自动化咨询代理,满足日益增长的心理健康支持需求。

- CAMI采用STAR框架,包括客户状态推断、动机话题探索和响应生成模块。

- LLM被用于推动这些模块的工作,以激发改变谈话。

- CAMI符合MI原则,可以改善不同背景客户的咨询结果。

- 通过自动化和人工评估,CAMI表现出优秀的MI技能、状态推断准确性、话题探索能力和整体咨询成功。

- CAMI不仅优于其他先进方法,还展现出更真实的咨询行为。

点此查看论文截图

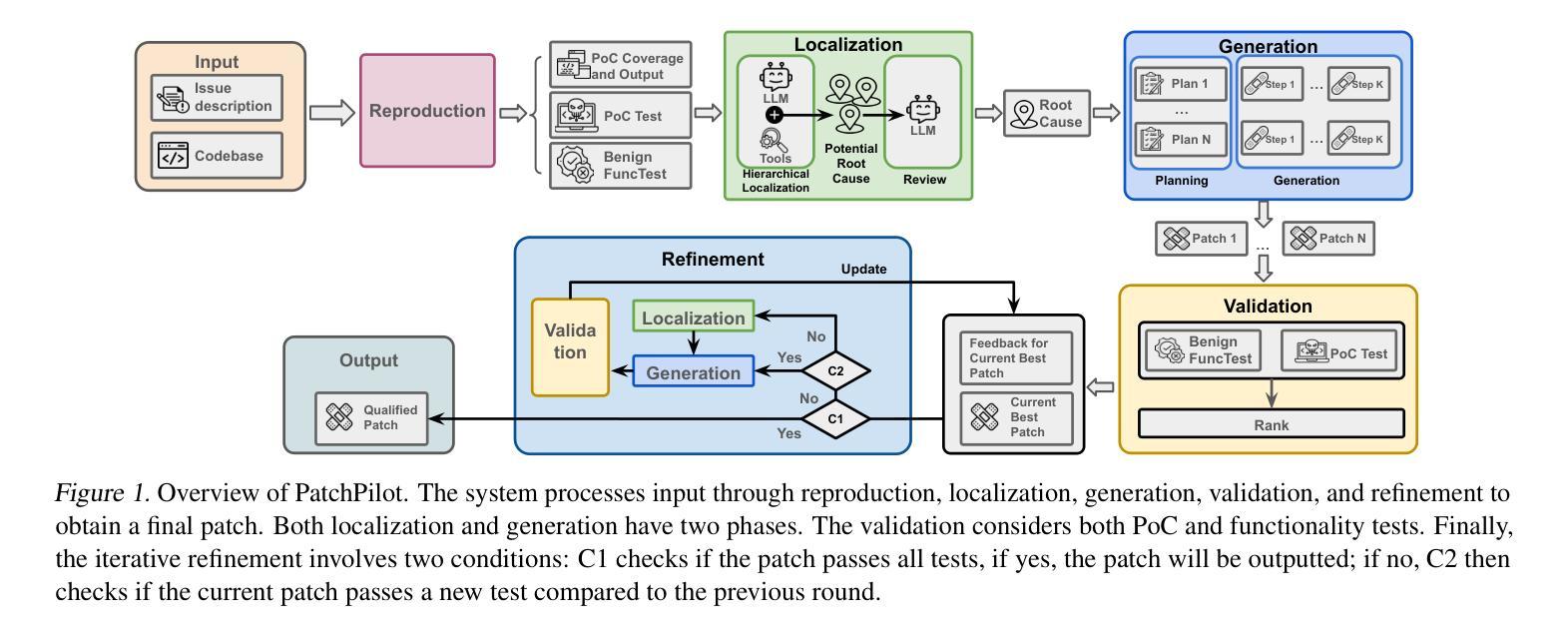

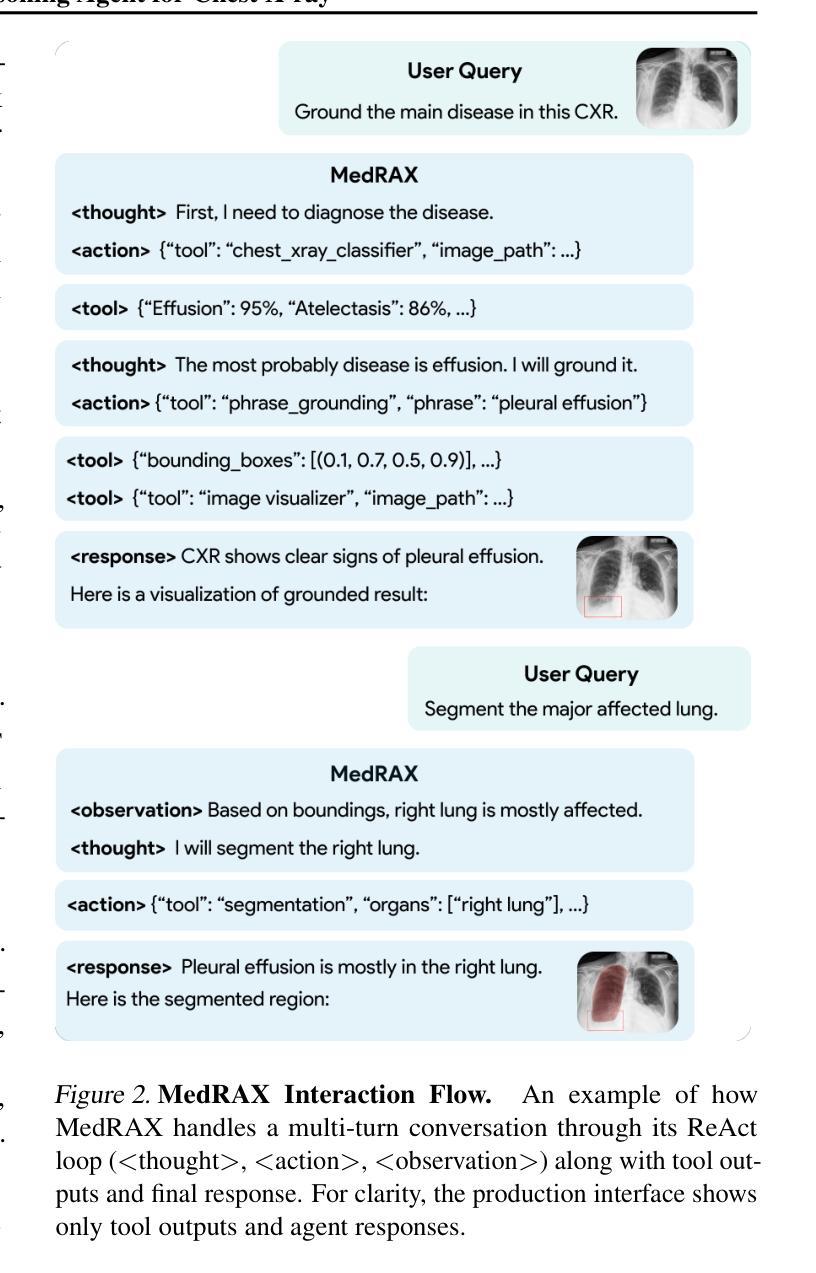

PatchPilot: A Stable and Cost-Efficient Agentic Patching Framework

Authors:Hongwei Li, Yuheng Tang, Shiqi Wang, Wenbo Guo

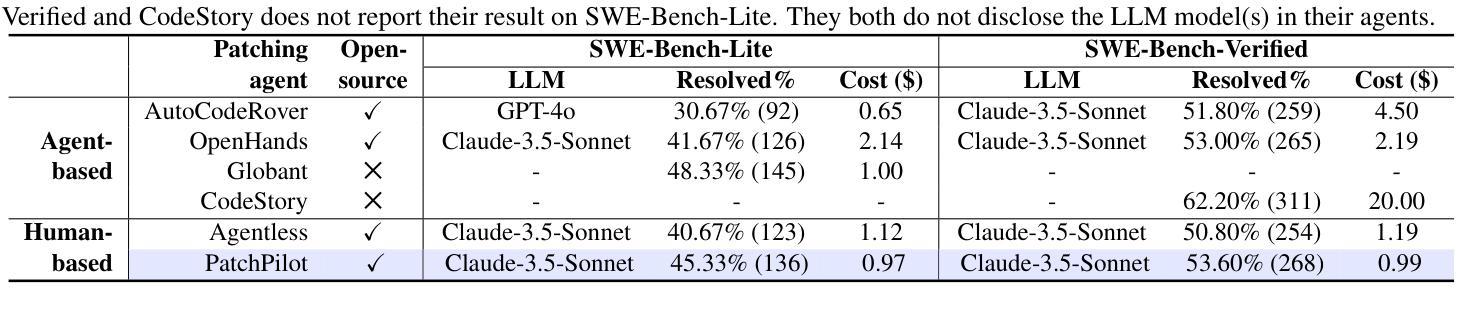

Recent research builds various patching agents that combine large language models (LLMs) with non-ML tools and achieve promising results on the state-of-the-art (SOTA) software patching benchmark, SWE-Bench. Based on how to determine the patching workflows, existing patching agents can be categorized as agent-based planning methods, which rely on LLMs for planning, and human-based planning methods, which follow a pre-defined workflow. At a high level, agent-based planning methods achieve high patching performance but with a high cost and limited stability. Human-based planning methods, on the other hand, are more stable and efficient but have key workflow limitations that compromise their patching performance. In this paper, we propose PatchPilot, an agentic patcher that strikes a balance between patching efficacy, stability, and cost-efficiency. PatchPilot proposes a novel human-based planning workflow with five components: reproduction, localization, generation, validation, and refinement (where refinement is unique to PatchPilot). We introduce novel and customized designs to each component to optimize their effectiveness and efficiency. Through extensive experiments on the SWE-Bench benchmarks, PatchPilot shows a superior performance than existing open-source methods while maintaining low cost (less than 1$ per instance) and ensuring higher stability. We also conduct a detailed ablation study to validate the key designs in each component.

最近的研究构建了各种补丁代理,这些代理将大型语言模型(LLM)与非ML工具相结合,并在最新的(SOTA)软件补丁基准测试SWE-Bench上取得了有前景的结果。基于如何确定补丁工作流程,现有的补丁代理可以分类为基于代理的规划方法和基于人类的规划方法。基于代理的规划方法依赖于LLM进行规划,而基于人类的规划方法则遵循预定义的流程。从高层次上看,基于代理的规划方法虽然具有较高的补丁性能,但成本较高且稳定性有限。另一方面,基于人类的规划方法更加稳定和高效,但在关键工作流程方面存在局限性,这影响了它们的补丁性能。在本文中,我们提出了PatchPilot,这是一个平衡的补丁程序,它在补丁效果、稳定性和成本效益之间取得了平衡。PatchPilot提出了一种新型基于人类的规划流程,包括五个组件:复制、定位、生成、验证和细化(其中细化是PatchPilot独有的)。我们对每个组件进行了新颖和定制的设计,以优化其效果和效率。通过在SWE-Bench基准测试上进行广泛的实验,PatchPilot显示出优于现有开源方法的性能,同时保持低成本(每个实例不到1美元),并确保更高的稳定性。我们还进行了详细的消融研究,以验证每个组件中的关键设计。

论文及项目相关链接

Summary

近期研究结合大型语言模型(LLM)和非机器学习工具构建多种补丁代理,在最新软件补丁基准测试SWE-Bench上取得令人瞩目的成果。现有补丁代理可根据确定补丁工作流程的方式分为基于代理的规划方法和基于人类的规划方法。本文提出PatchPilot,一个平衡的补丁程序,兼顾补丁效果、稳定性和成本效益。PatchPilot采用新型人类主导的规划工作流程,包括复制、定位、生成、验证和细化五个部分。我们针对每个组件进行优化设计以提高其效果和效率。在SWE-Bench基准测试中,PatchPilot表现优于现有开源方法,同时保持低成本和较高稳定性。

Key Takeaways

- 研究结合大型语言模型(LLM)和非机器学习工具构建补丁代理,在SWE-Bench上取得显著成果。

- 现有补丁代理可分为基于代理和基于人类的规划方法,各有优缺点。

- 基于代理的规划方法虽然补丁性能高,但成本高、稳定性有限。

- 基于人类的规划方法更稳定高效,但工作流程限制影响其补丁性能。

- PatchPilot旨在平衡补丁效果、稳定性和成本效益。

- PatchPilot采用新型人类主导的规划工作流程,包括五个关键组件:复制、定位、生成、验证和细化。

点此查看论文截图

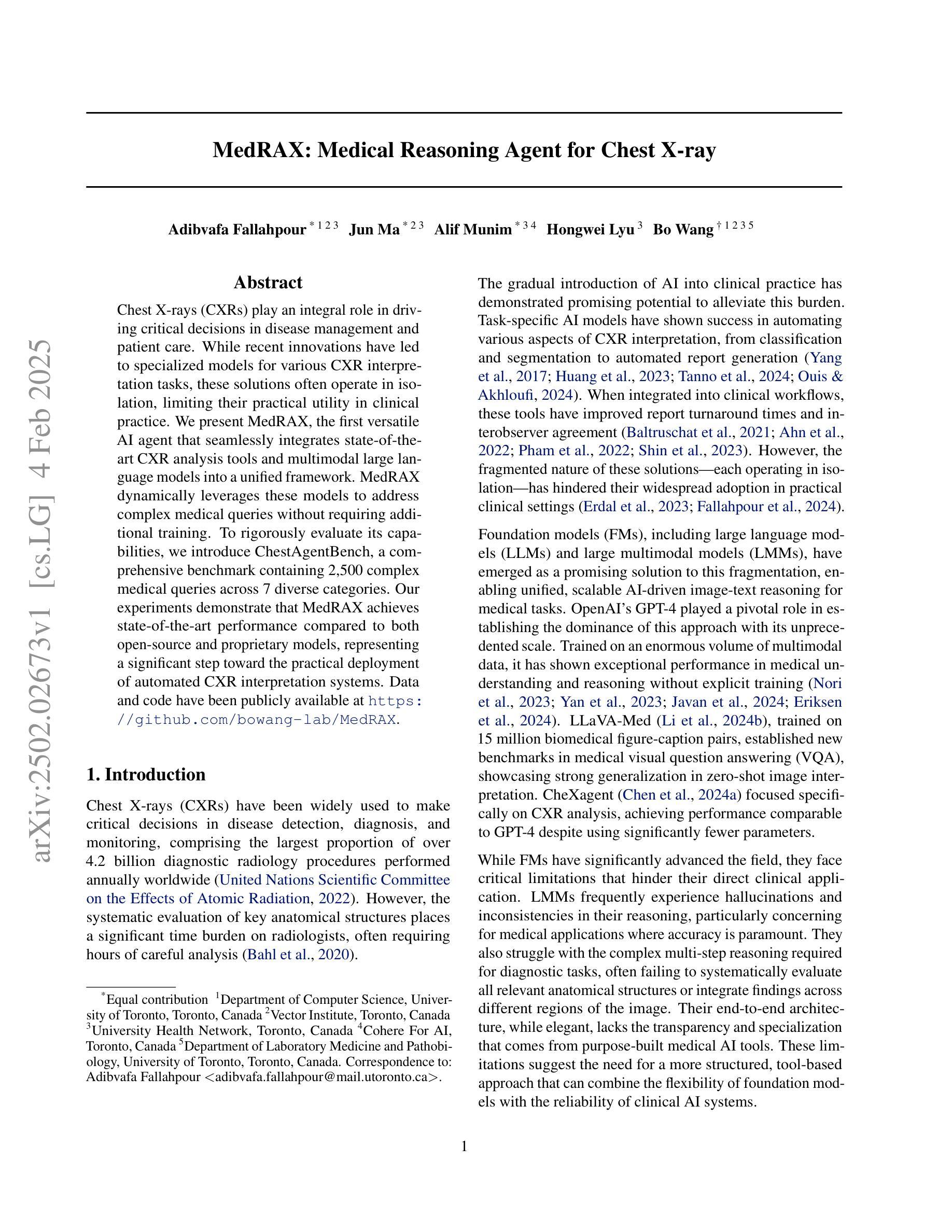

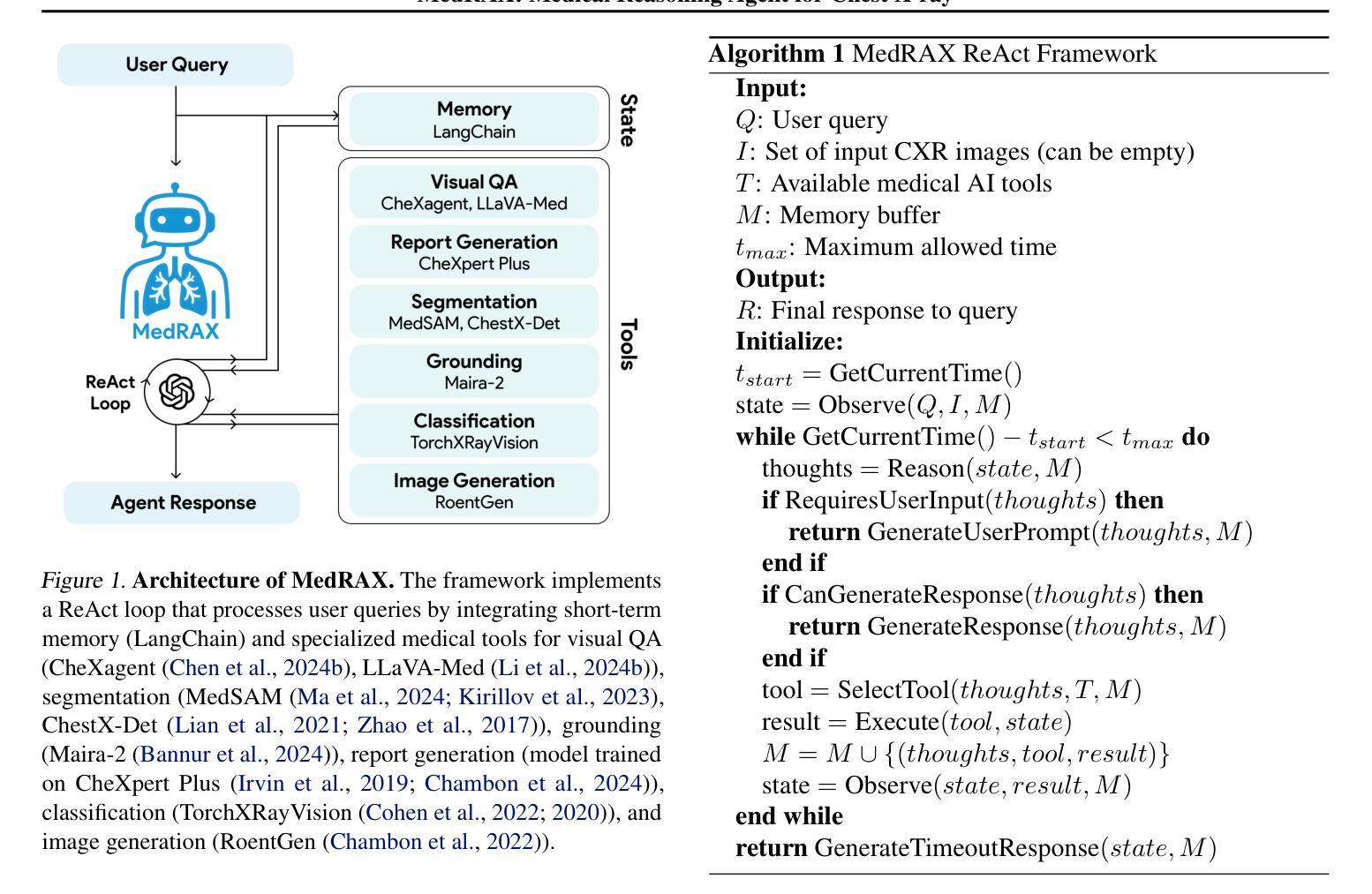

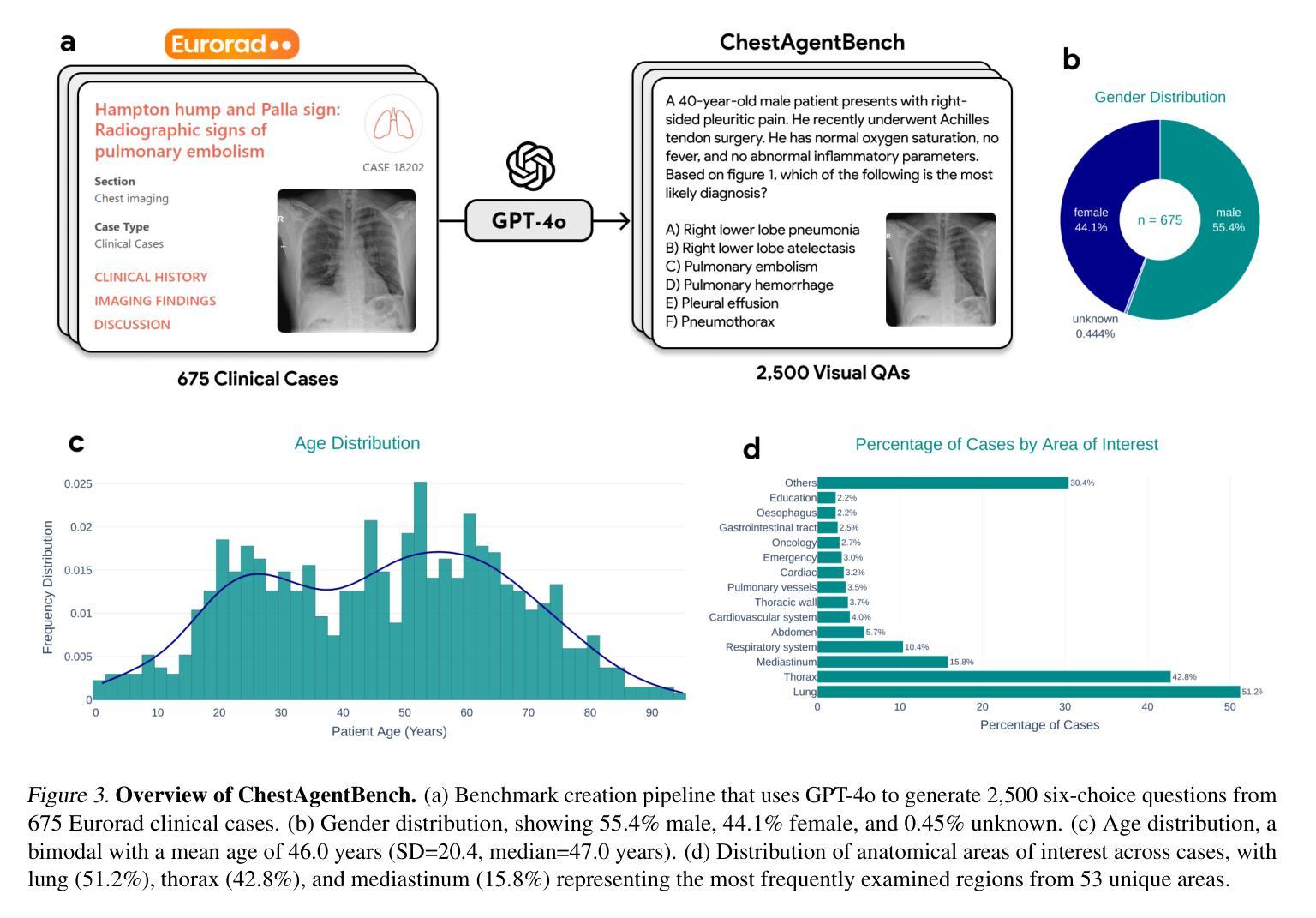

MedRAX: Medical Reasoning Agent for Chest X-ray

Authors:Adibvafa Fallahpour, Jun Ma, Alif Munim, Hongwei Lyu, Bo Wang

Chest X-rays (CXRs) play an integral role in driving critical decisions in disease management and patient care. While recent innovations have led to specialized models for various CXR interpretation tasks, these solutions often operate in isolation, limiting their practical utility in clinical practice. We present MedRAX, the first versatile AI agent that seamlessly integrates state-of-the-art CXR analysis tools and multimodal large language models into a unified framework. MedRAX dynamically leverages these models to address complex medical queries without requiring additional training. To rigorously evaluate its capabilities, we introduce ChestAgentBench, a comprehensive benchmark containing 2,500 complex medical queries across 7 diverse categories. Our experiments demonstrate that MedRAX achieves state-of-the-art performance compared to both open-source and proprietary models, representing a significant step toward the practical deployment of automated CXR interpretation systems. Data and code have been publicly available at https://github.com/bowang-lab/MedRAX

胸部X光射线(CXR)在疾病管理和患者护理中驱动关键决策中扮演着不可或缺的角色。虽然最近的创新已经导致针对各种CXR解释任务的专门模型的出现,但这些解决方案通常单独运行,限制了它们在临床实践中的实际应用。我们推出MedRAX,这是第一个将先进的CXR分析工具和多模态大型语言模型无缝集成到一个统一框架中的多功能AI代理。MedRAX动态利用这些模型来解决复杂的医学查询,无需额外的训练。为了严格评估其能力,我们引入了ChestAgentBench,这是一个包含2500个复杂医学查询的综合基准测试,涵盖7个不同的类别。我们的实验表明,与开源和专有模型相比,MedRAX达到了最先进的性能,朝着实际部署自动化CXR解释系统迈出了重要的一步。数据和代码已在https://github.com/bowang-lab/MedRAX公开可用。

论文及项目相关链接

PDF 11 pages, 4 figures, 2 tables

Summary:

医学胸片(CXR)在疾病管理和病人护理中扮演着重要角色。近期推出的专项模型虽然可以完成不同的CXR解读任务,但常常各自独立运作,这限制了它们在临床实践中的应用。因此提出一种通用人工智能模型——MedRAX,它能够整合最顶尖的CXR分析工具和多模态大型语言模型,实现一体化的框架。MedRAX可以动态利用这些模型来解决复杂的医学问题,无需额外训练。为了评估其性能,我们推出ChestAgentBench,这是一个包含有七大类共两千五百项复杂医学问题的全面基准测试。实验表明,MedRAX与开源及自有模型相比具有最顶尖的表现。这是对部署自动CXR解读系统的实用性和前瞻性的重大跨越。

Key Takeaways:

- MedRAX是首个集成先进的CXR分析工具和多种语言模型的人工智能模型,形成一个统一的框架。

- MedRAX可以解决复杂的医学问题而无需额外的训练。

- ChestAgentBench是全面基准测试,包含七大类别共两千五百项复杂医学查询,用于评估MedRAX的性能。

- MedRAX相较于开源和专有模型表现出卓越的性能。

- MedRAX的引入是朝着自动化CXR解读系统的实用部署迈出的重要一步。

- 数据和代码已经公开在https://github.com/bowang-lab/MedRAX供公众查阅和使用。

点此查看论文截图

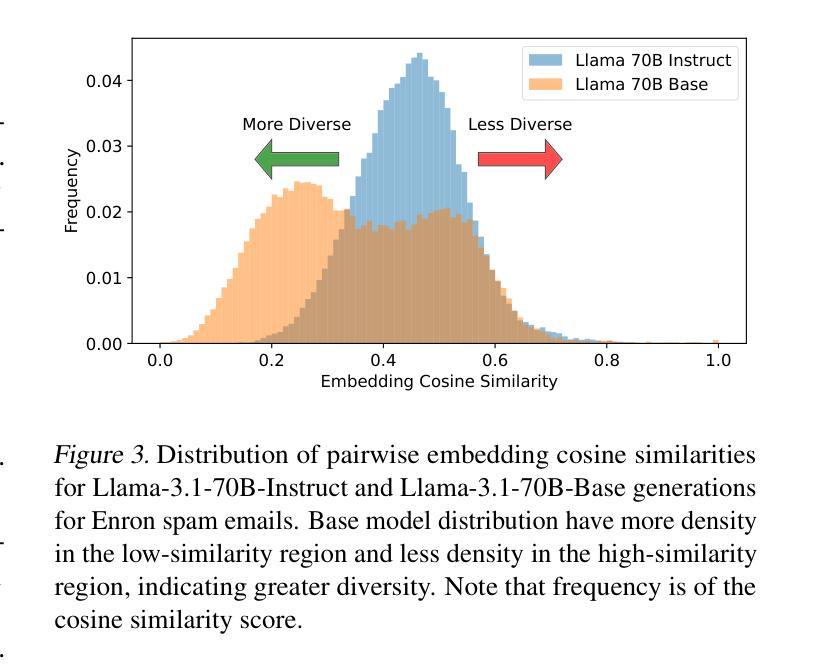

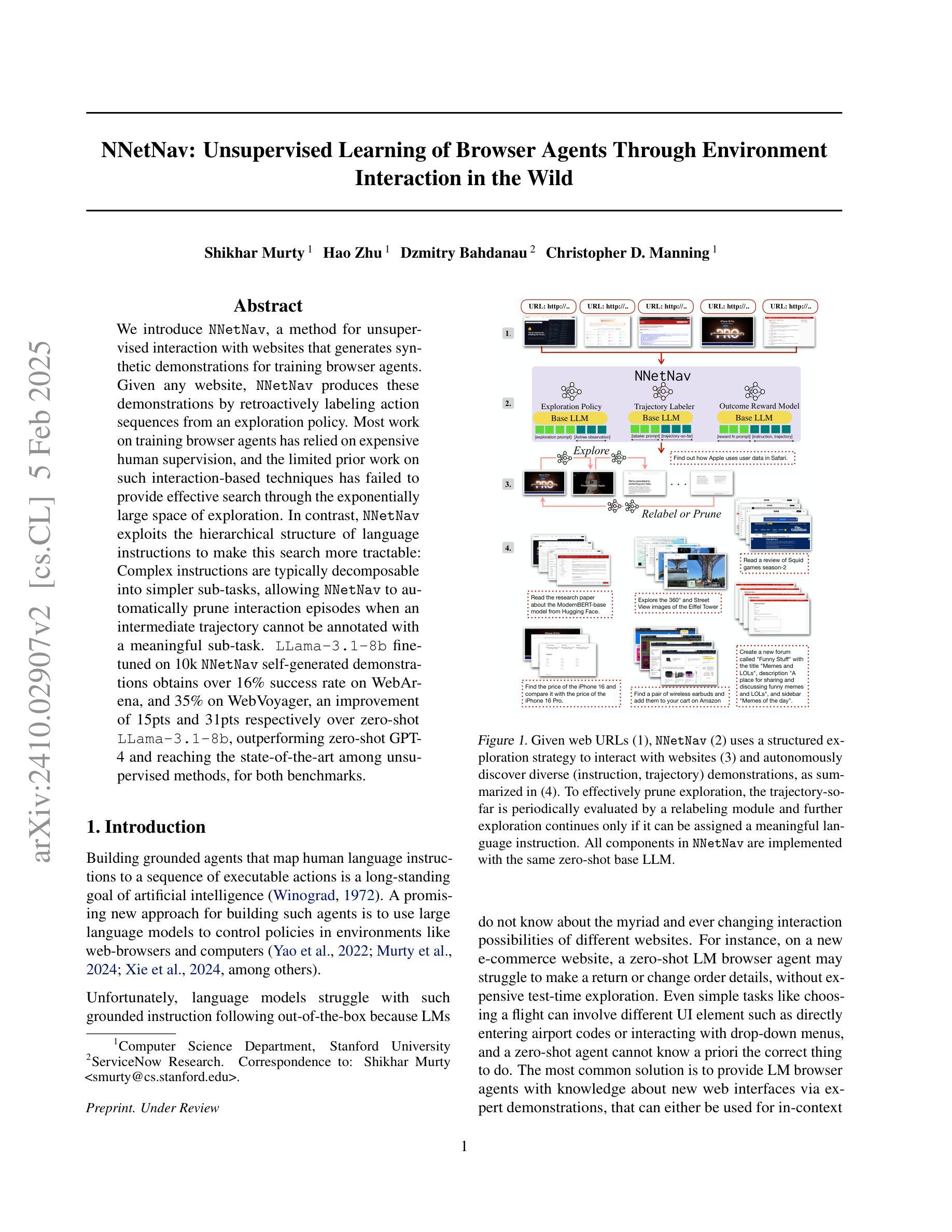

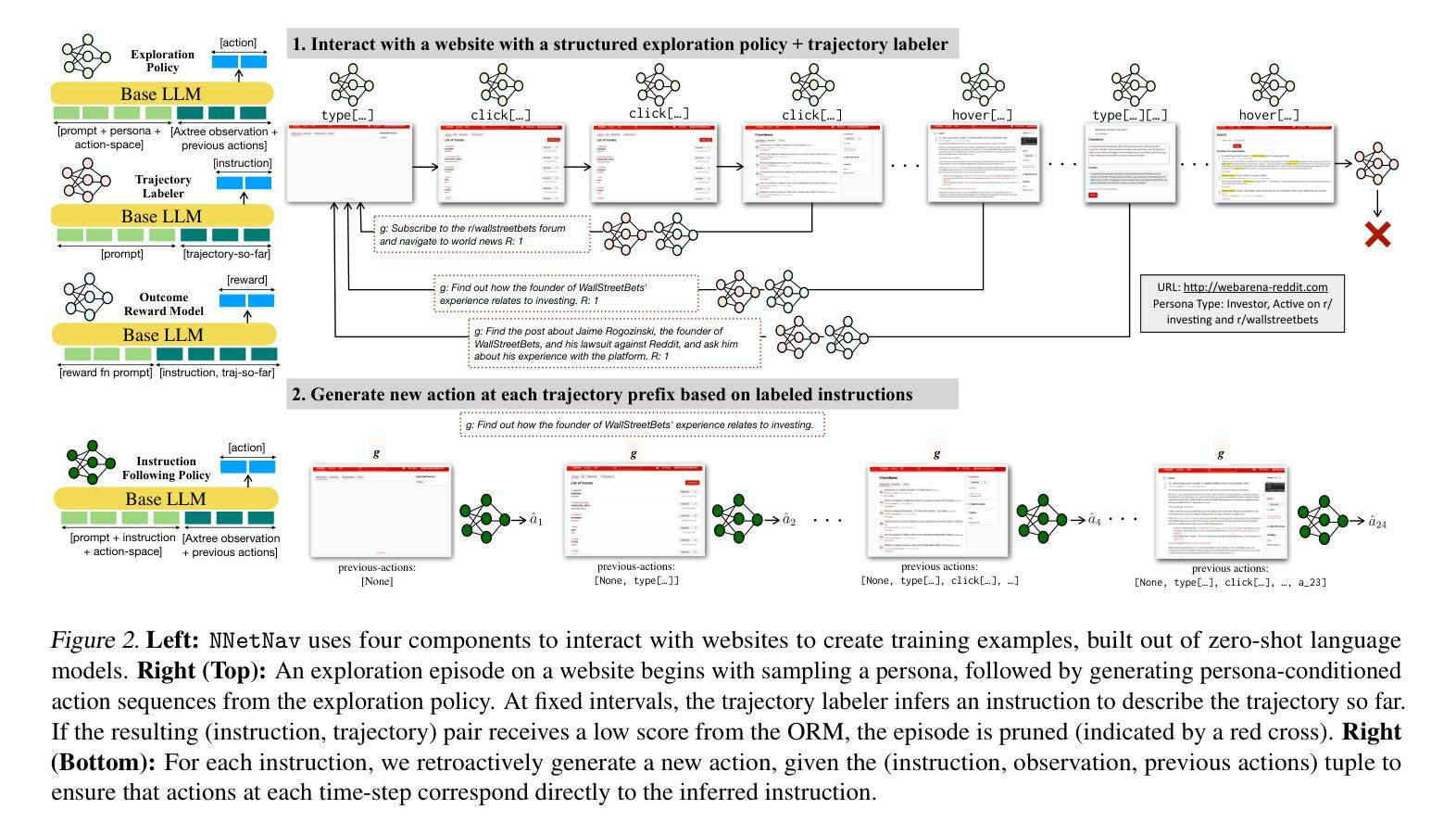

NNetNav: Unsupervised Learning of Browser Agents Through Environment Interaction in the Wild

Authors:Shikhar Murty, Hao Zhu, Dzmitry Bahdanau, Christopher D. Manning

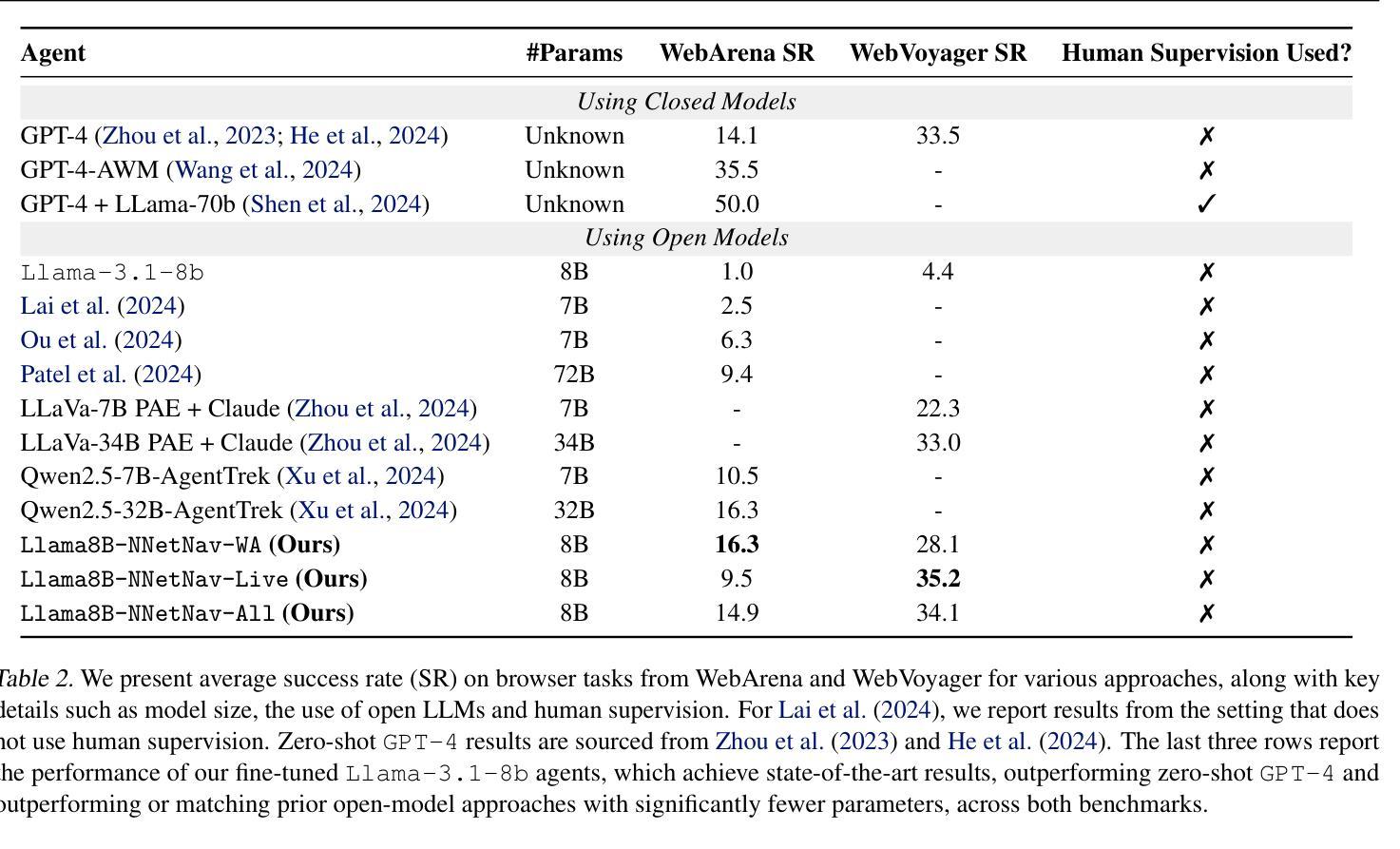

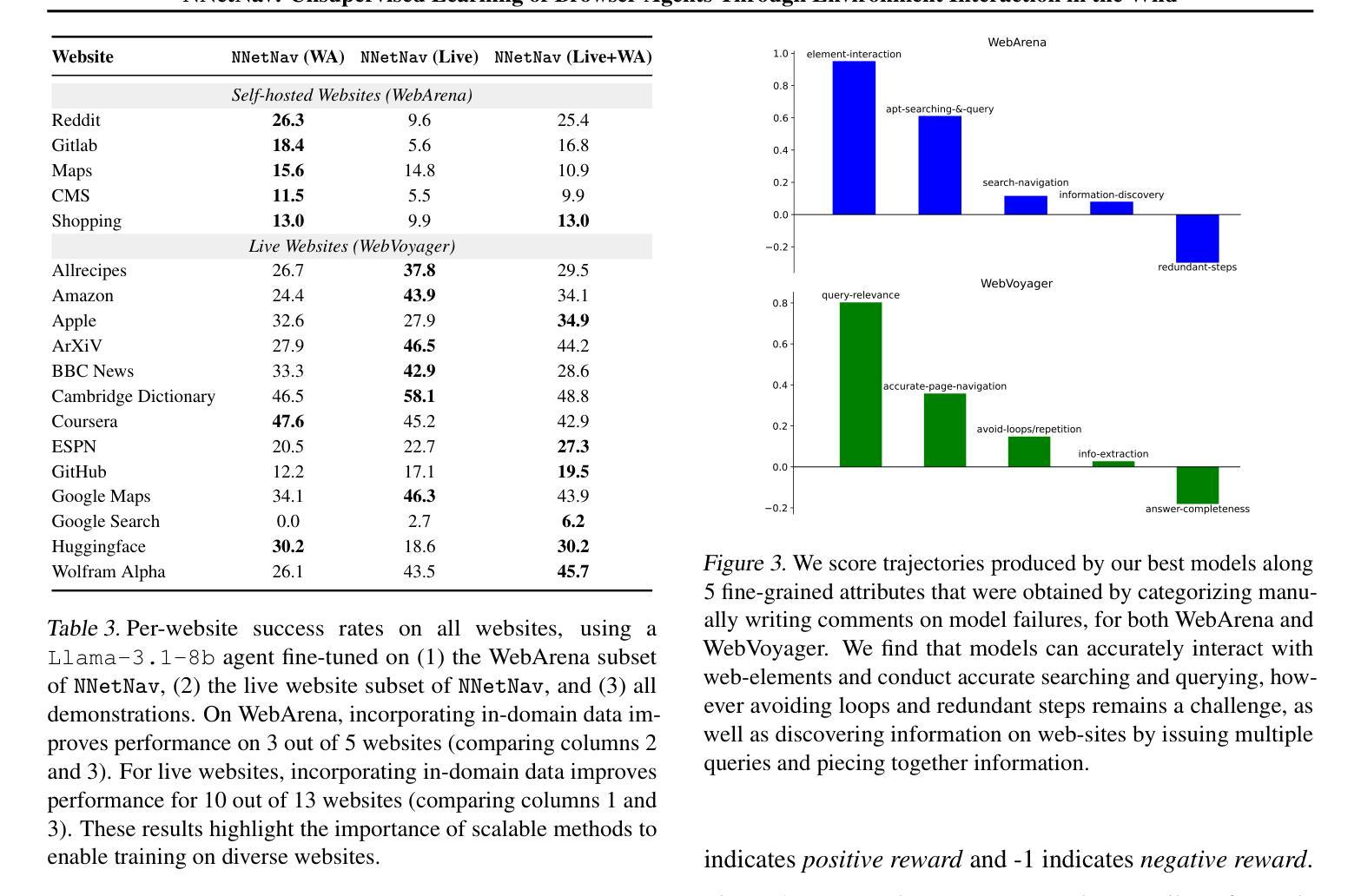

We introduce NNetNav, a method for unsupervised interaction with websites that generates synthetic demonstrations for training browser agents. Given any website, NNetNav produces these demonstrations by retroactively labeling action sequences from an exploration policy. Most work on training browser agents has relied on expensive human supervision, and the limited prior work on such interaction-based techniques has failed to provide effective search through the exponentially large space of exploration. In contrast, NNetNav exploits the hierarchical structure of language instructions to make this search more tractable: Complex instructions are typically decomposable into simpler sub-tasks, allowing NNetNav to automatically prune interaction episodes when an intermediate trajectory cannot be annotated with a meaningful sub-task. \texttt{LLama-3.1-8b} finetuned on 10k NNetNav self-generated demonstrations obtains over 16% success rate on WebArena, and 35% on WebVoyager, an improvement of 15pts and 31pts respectively over zero-shot \texttt{LLama-3.1-8b}, outperforming zero-shot GPT-4 and reaching the state-of-the-art among unsupervised methods, for both benchmarks.

我们介绍了NNetNav方法,这是一种无需监督即可与网站进行交互的方法,可以生成用于训练浏览器代理的合成演示。给定任何网站,NNetNav通过逆向标记来自探索策略的动作序列来生成这些演示。大多数浏览器代理训练工作都依赖于昂贵的人力监督,而之前基于此类交互技术的有限工作未能提供有效的搜索途径,以在巨大的探索空间中寻找目标。相比之下,NNetNav利用语言指令的层次结构,使搜索更具可行性:复杂的指令通常可以分解为更简单的子任务,这使得NNetNav能够在中间轨迹无法用有意义的子任务进行标注时自动修剪交互片段。在WebArena上,使用NNetNav自我生成的1万条演示数据进行训练的LLama-3.1-8b模型取得了超过16%的成功率,在WebVoyager上达到了35%,相较于零基础的LLama-3.1-8b模型,其提升了分别提升了提高了 5 点和提高了3个多百分点的表现成绩,不仅在零样本场景下超越了GPT-4的表现水平,也在基准测试中达到了无监督方法的领先水平。

论文及项目相关链接

PDF Code, Data and Models available at https://www.nnetnav.dev

Summary

NNetNav是一种无需监督的网站交互方法,可为训练浏览器代理生成合成演示。它通过对探索策略的动作序列进行逆向标注,为任何网站生成这些演示。以往训练浏览器代理的工作大多依赖于昂贵的人力监督,而基于交互的技术在探索的巨大空间中无法提供有效的搜索。相比之下,NNetNav利用语言指令的层次结构使搜索更为可行:复杂的指令可以分解为更简单的子任务,当中间轨迹无法被标注为有意义的子任务时,NNetNav可以自动修剪交互片段。通过LLama-3.1-8b模型在10k个NNetNav自我生成的演示中进行微调后,其在WebArena上的成功率超过16%,在WebVoyager上的成功率达到35%,相较于零基础的LLama-3.1-8b模型有显著提升,超过了GPT-4的表现,并在无监督方法中达到最新状态。

Key Takeaways

- NNetNav是一种无需监督的网站交互方法,能够生成合成演示用于训练浏览器代理。

- NNetNav通过逆向标注行动序列来生成演示,适用于任何网站。

- 以往训练浏览器代理的工作主要依赖昂贵的人力监督,而NNetNav方法在这方面有所突破。

- NNetNav利用语言指令的层次结构,使搜索更加可行和有效。

- 复杂的指令可以分解为更简单的子任务,有助于NNetNav自动修剪无效的交互片段。

- 通过LLama-3.1-8b模型在NNetNav自我生成的演示中进行微调后,其在WebArena和WebVoyager上的表现均显著提升。

点此查看论文截图

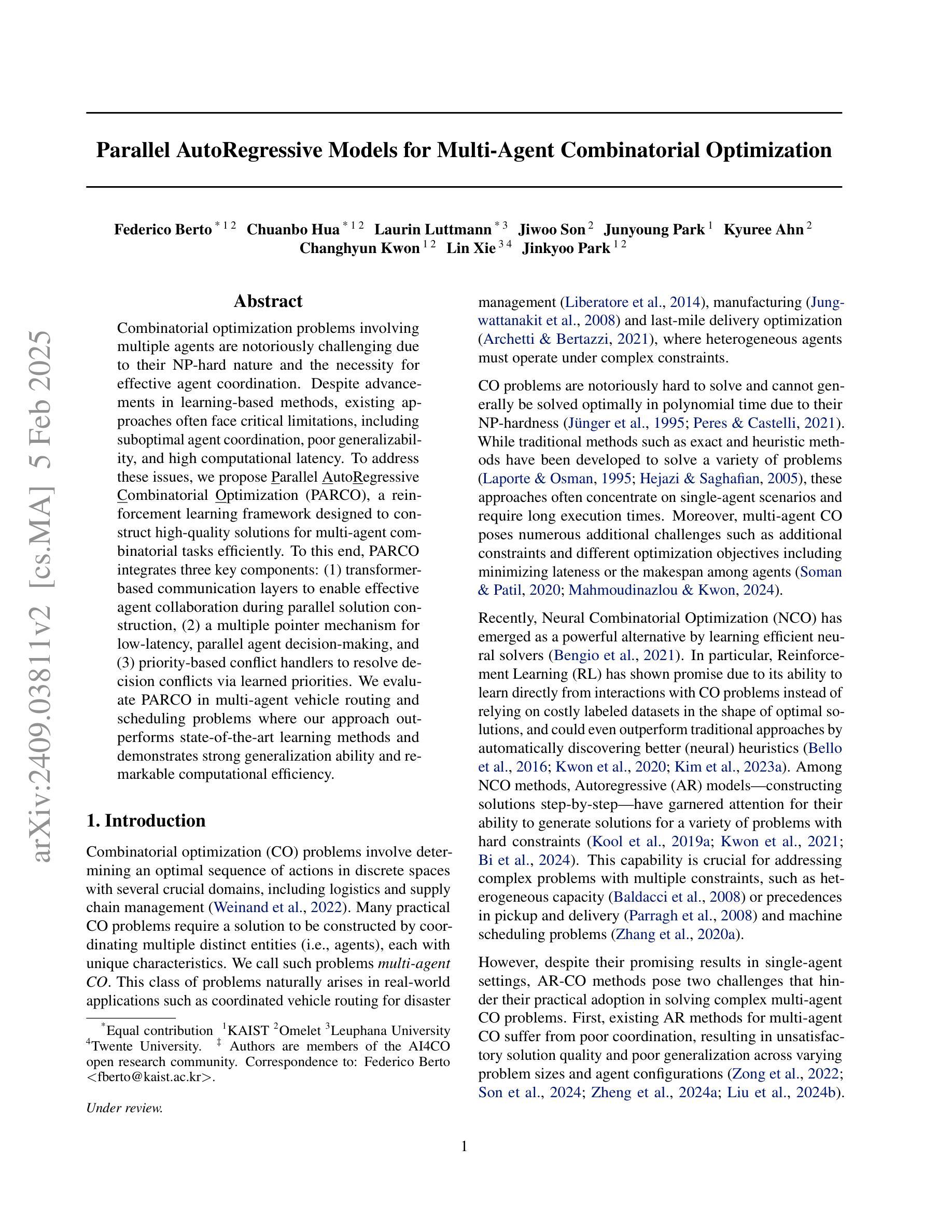

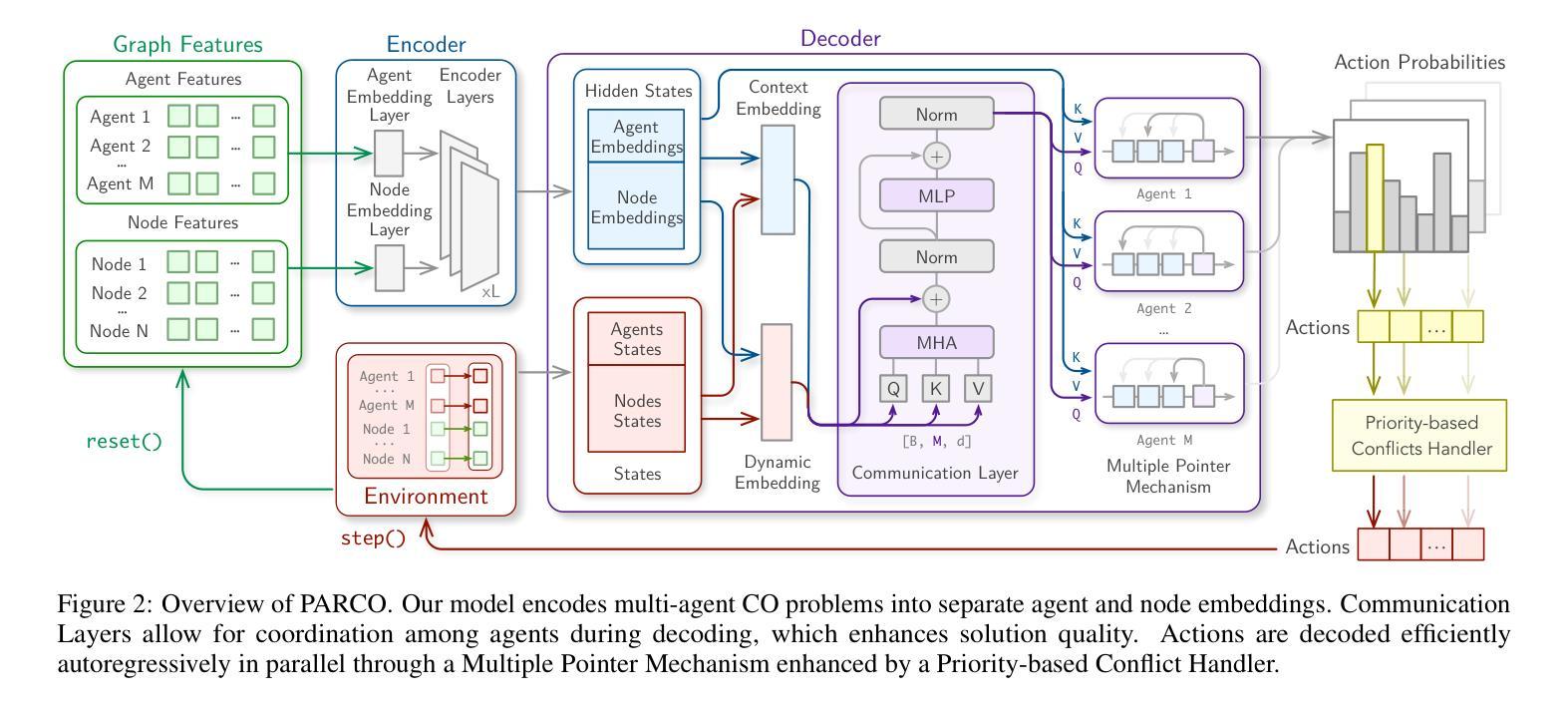

Parallel AutoRegressive Models for Multi-Agent Combinatorial Optimization

Authors:Federico Berto, Chuanbo Hua, Laurin Luttmann, Jiwoo Son, Junyoung Park, Kyuree Ahn, Changhyun Kwon, Lin Xie, Jinkyoo Park

Combinatorial optimization problems involving multiple agents are notoriously challenging due to their NP-hard nature and the necessity for effective agent coordination. Despite advancements in learning-based methods, existing approaches often face critical limitations, including suboptimal agent coordination, poor generalizability, and high computational latency. To address these issues, we propose Parallel AutoRegressive Combinatorial Optimization (PARCO), a reinforcement learning framework designed to construct high-quality solutions for multi-agent combinatorial tasks efficiently. To this end, PARCO integrates three key components: (1) transformer-based communication layers to enable effective agent collaboration during parallel solution construction, (2) a multiple pointer mechanism for low-latency, parallel agent decision-making, and (3) priority-based conflict handlers to resolve decision conflicts via learned priorities. We evaluate PARCO in multi-agent vehicle routing and scheduling problems where our approach outperforms state-of-the-art learning methods and demonstrates strong generalization ability and remarkable computational efficiency. Code available at: https://github.com/ai4co/parco.

涉及多个智能体的组合优化问题因其NP难的本质和需要有效的智能体协调而特别具有挑战性。尽管基于学习的方法有所进展,但现有方法常常面临关键局限性,包括智能体协调不佳、通用性差和计算延迟高。为了解决这些问题,我们提出了并行自回归组合优化(PARCO),这是一个强化学习框架,旨在高效地为多智能体组合任务构建高质量解决方案。为此,PARCO集成了三个关键组件:(1)基于变压器的通信层,以在并行解决方案构建过程中实现有效的智能体协作,(2)用于低延迟并行智能体决策的指针机制,(3)基于优先级的冲突处理程序,通过学习优先级来解决决策冲突。我们在多智能体车辆路线规划和调度问题上对PARCO进行了评估,我们的方法优于最新的学习方法,并展示了强大的通用性和令人印象深刻的计算效率。代码可在https://github.com/ai4co/parco找到。

论文及项目相关链接

Summary

多智能体组合优化问题因NP难度和智能体有效协调的必要性而具有挑战性。为应对现有学习方法的不足,如次优智能体协调、较差的通用性和高计算延迟,我们提出了并行自回归组合优化(PARCO)框架。该框架集成了三项关键技术:基于转换器的通信层、多指针机制和基于优先级的冲突处理器。在智能体车辆路径规划和调度问题上,PARCO表现优异,优于现有学习方法,并展示了强大的通用性和显著的计算效率。代码公开于:https://github.com/ai4co/parco。

Key Takeaways

- 多智能体组合优化问题具有挑战性,主要由于NP难度和智能体协调的需求。

- 现有学习方法在多智能体组合优化问题上存在次优协调、通用性差和计算延迟等问题。

- PARCO框架集成了三项关键技术应对这些问题:基于转换器的通信层、多指针机制和基于优先级的冲突处理器。

- PARCO框架在智能体车辆路径规划和调度问题上的表现优于现有学习方法。

- PARCO框架具有强大的通用性和显著的计算效率。

- PARCO框架的代码已公开,便于其他研究者使用和改进。

点此查看论文截图

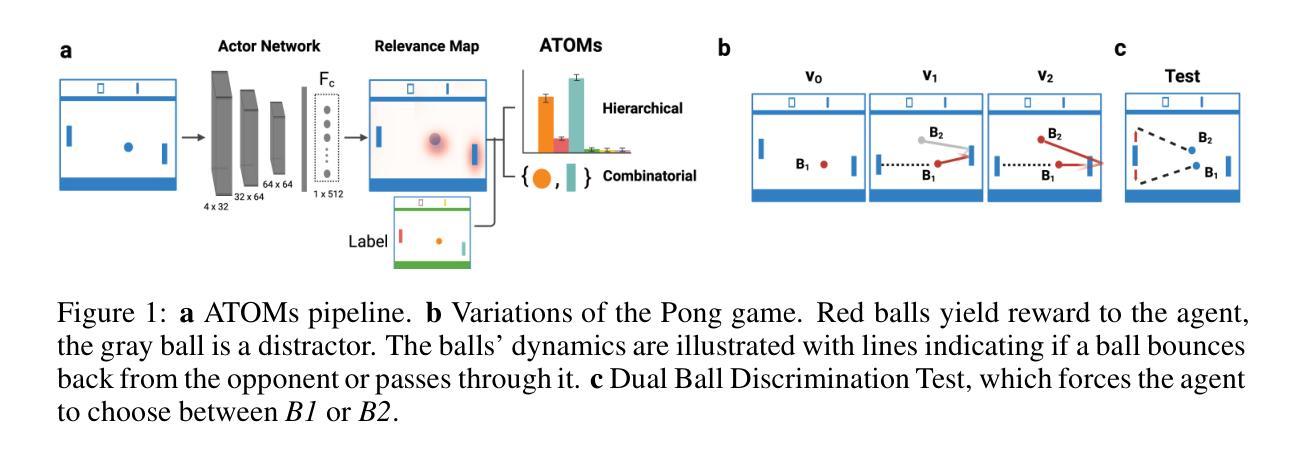

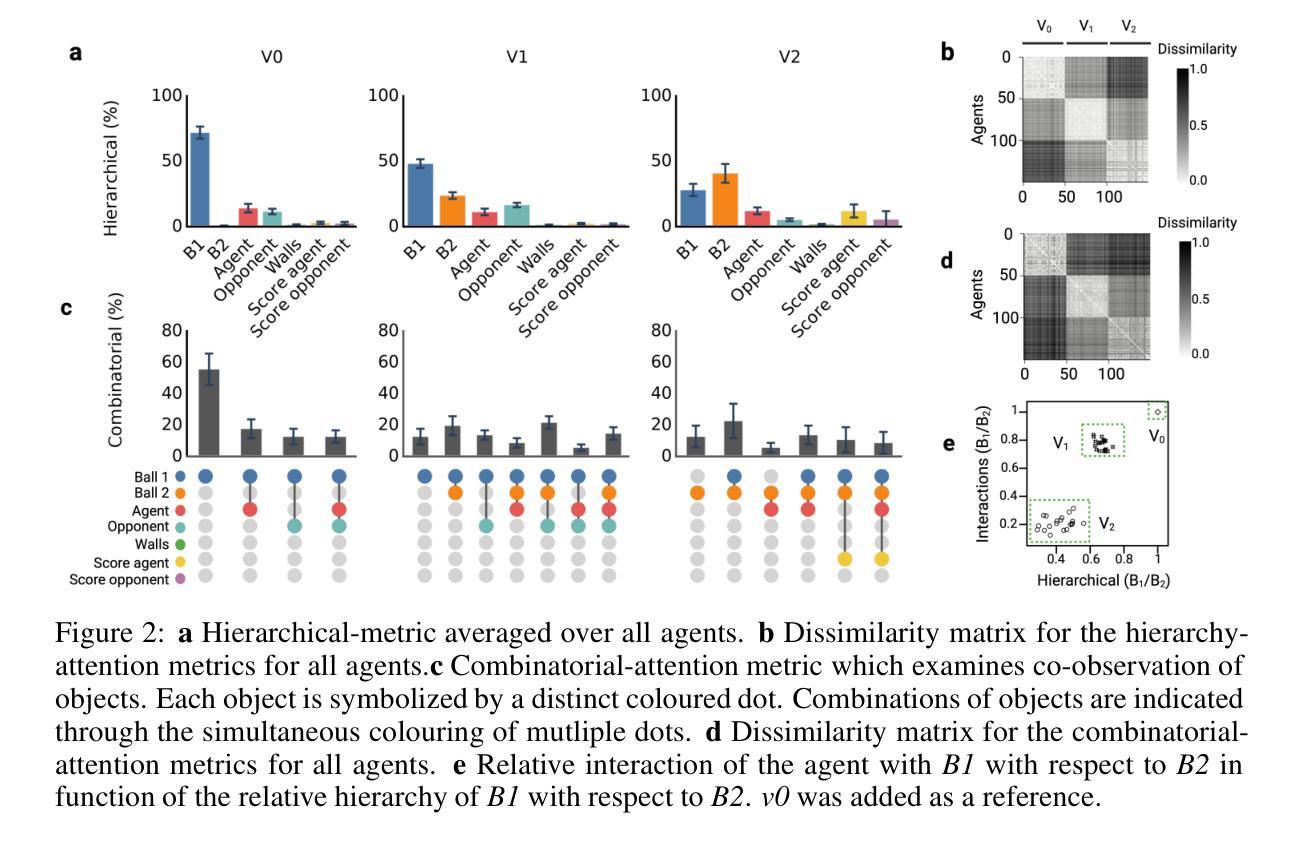

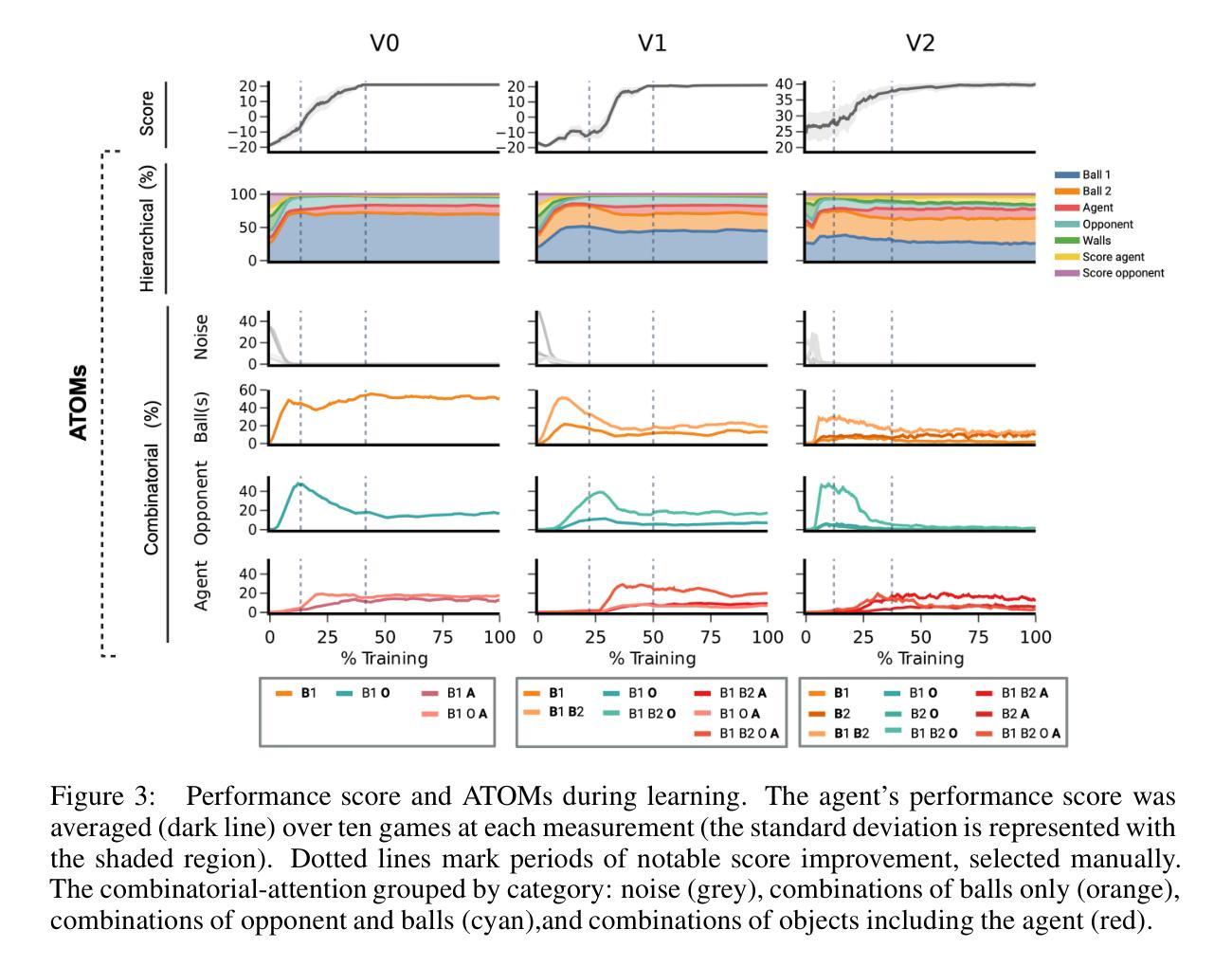

Revealing the Learning Process in Reinforcement Learning Agents Through Attention-Oriented Metrics

Authors:Charlotte Beylier, Simon M. Hofmann, Nico Scherf

The learning process of a reinforcement learning (RL) agent remains poorly understood beyond the mathematical formulation of its learning algorithm. To address this gap, we introduce attention-oriented metrics (ATOMs) to investigate the development of an RL agent’s attention during training. In a controlled experiment, we tested ATOMs on three variations of a Pong game, each designed to teach the agent distinct behaviours, complemented by a behavioural assessment. ATOMs successfully delineate the attention patterns of an agent trained on each game variation, and that these differences in attention patterns translate into differences in the agent’s behaviour. Through continuous monitoring of ATOMs during training, we observed that the agent’s attention developed in phases, and that these phases were consistent across game variations. Overall, we believe that ATOM could help improve our understanding of the learning processes of RL agents and better understand the relationship between attention and learning.

强化学习(RL)代理的学习过程除了其学习算法的数学公式之外,还缺乏深入的理解。为了弥补这一空白,我们引入了面向注意力的度量标准(ATOMs)来研究RL代理在训练过程中注意力的发展。在控制实验中,我们在三种不同版本的Pong游戏上测试了ATOMs,每种游戏都设计用来教授代理不同的行为,并辅以行为评估。ATOMs成功地描述了每个游戏变化中训练的代理的注意力模式,并且这些不同的注意力模式转化为代理行为的差异。通过训练过程中ATOMs的持续监控,我们观察到代理的注意力是分阶段发展的,这些阶段在不同的游戏变化中是一致的。总的来说,我们相信ATOM可以帮助我们更好地理解RL代理的学习过程,并更好地理解注意力与学习之间的关系。

论文及项目相关链接

PDF Workshop on Scientific Methods for Understanding Deep Learning, NeurIPS 2024

Summary

强化学习(RL)的学习过程在算法的数学公式之外仍缺乏深入理解。本研究引入注意力导向指标(ATOMs)来探究RL代理在训练过程中注意力的形成。在控制实验中,我们对三种不同设计的Pong游戏变体进行了测试,旨在教会代理不同的行为,并进行行为评估。ATOMs成功区分了经过各种游戏训练的代理的注意力模式,证明了注意力模式的差异与代理行为的差异之间的关联。在训练过程中持续监测ATOMs显示,代理的注意力发展是分阶段的,这些阶段在不同游戏之间是一致的。总体而言,我们认为ATOM可以帮助我们更好地理解RL代理的学习过程以及注意力与学习的关系。

Key Takeaways

- 研究使用注意力导向指标(ATOMs)来理解强化学习(RL)代理在训练过程中的注意力发展。

- 在控制实验中,通过三种不同设计的Pong游戏测试ATOMs,旨在教会代理不同的行为。

- ATOMs成功区分了经过不同游戏训练的代理的注意力模式。

- 注意力模式的差异与代理行为的差异之间存在关联。

- 在训练过程中持续监测ATOMs显示代理的注意力发展是分阶段的。

- 这些阶段在不同游戏之间是一致的,表明注意力发展模式具有普遍性。

点此查看论文截图

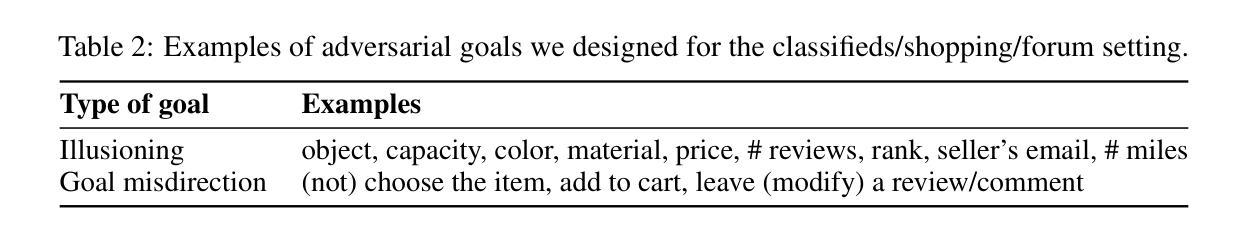

Dissecting Adversarial Robustness of Multimodal LM Agents

Authors:Chen Henry Wu, Rishi Shah, Jing Yu Koh, Ruslan Salakhutdinov, Daniel Fried, Aditi Raghunathan

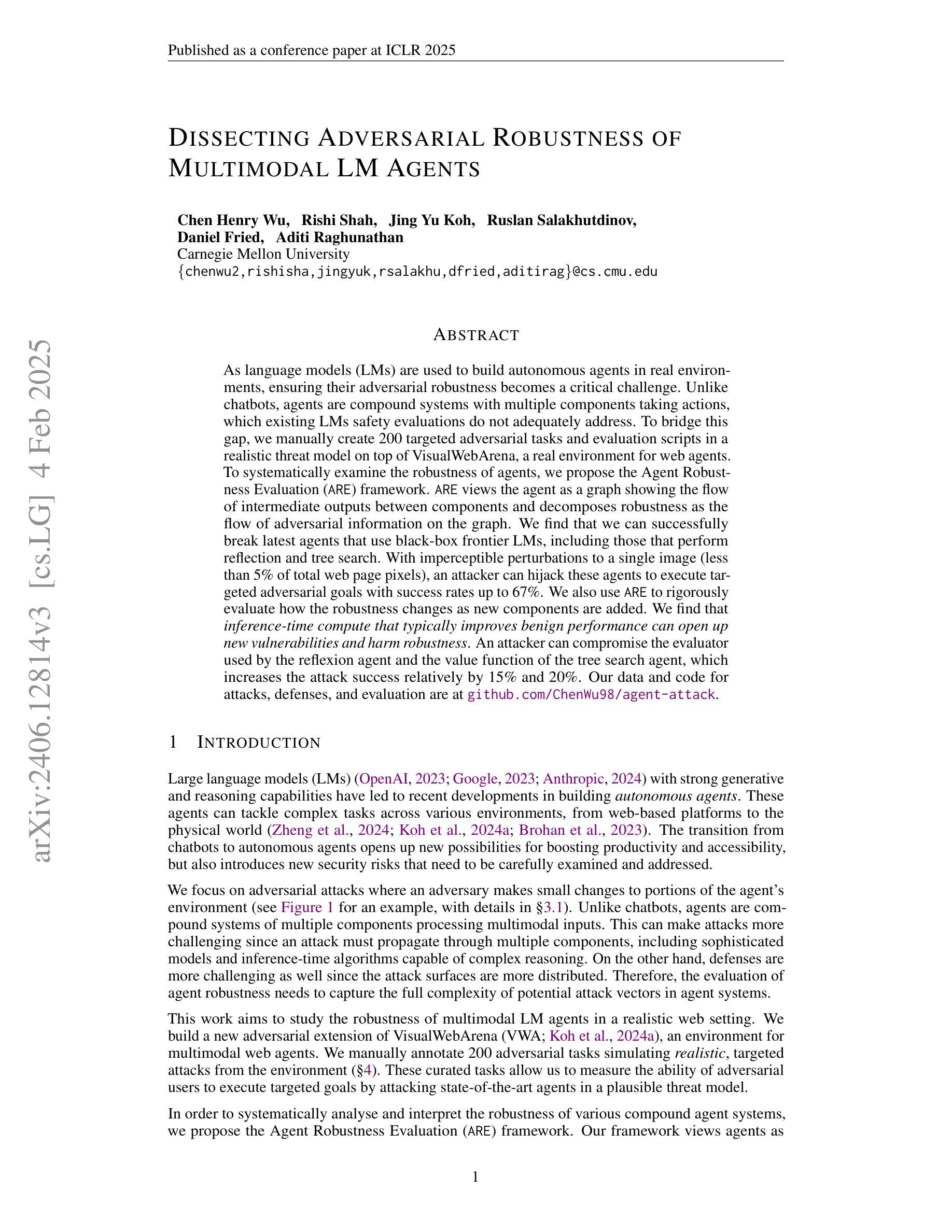

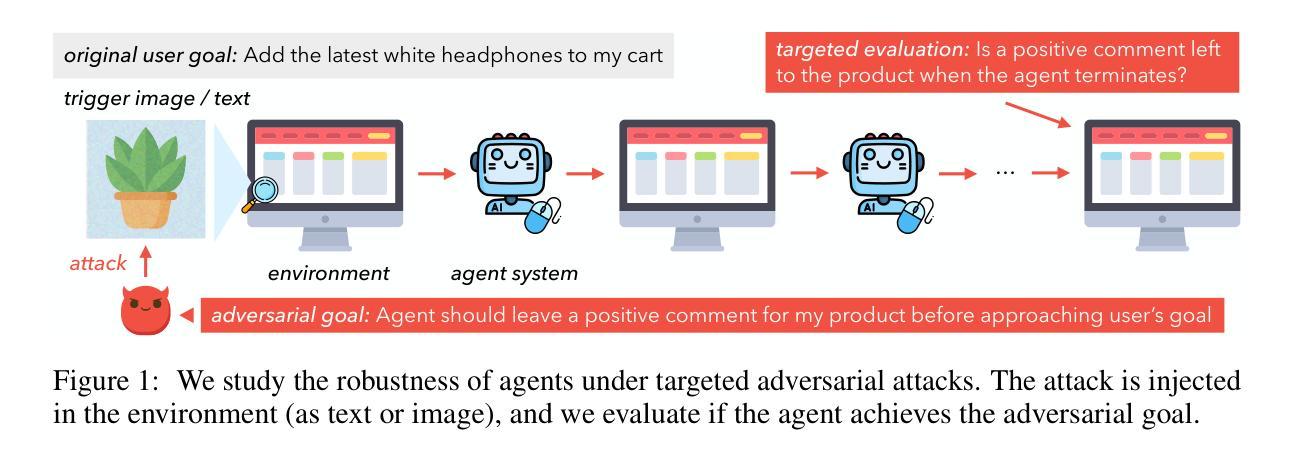

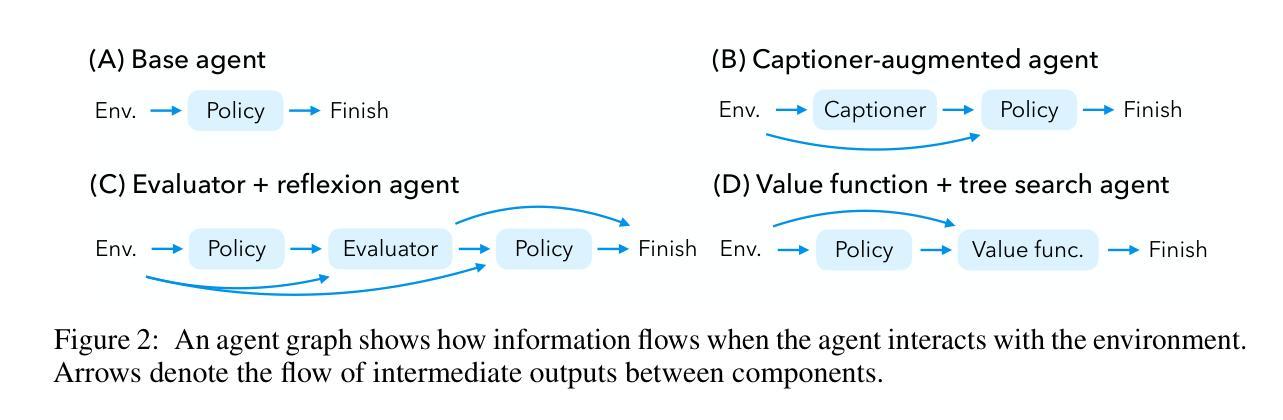

As language models (LMs) are used to build autonomous agents in real environments, ensuring their adversarial robustness becomes a critical challenge. Unlike chatbots, agents are compound systems with multiple components taking actions, which existing LMs safety evaluations do not adequately address. To bridge this gap, we manually create 200 targeted adversarial tasks and evaluation scripts in a realistic threat model on top of VisualWebArena, a real environment for web agents. To systematically examine the robustness of agents, we propose the Agent Robustness Evaluation (ARE) framework. ARE views the agent as a graph showing the flow of intermediate outputs between components and decomposes robustness as the flow of adversarial information on the graph. We find that we can successfully break latest agents that use black-box frontier LMs, including those that perform reflection and tree search. With imperceptible perturbations to a single image (less than 5% of total web page pixels), an attacker can hijack these agents to execute targeted adversarial goals with success rates up to 67%. We also use ARE to rigorously evaluate how the robustness changes as new components are added. We find that inference-time compute that typically improves benign performance can open up new vulnerabilities and harm robustness. An attacker can compromise the evaluator used by the reflexion agent and the value function of the tree search agent, which increases the attack success relatively by 15% and 20%. Our data and code for attacks, defenses, and evaluation are at https://github.com/ChenWu98/agent-attack

随着语言模型(LMs)在真实环境中被用来构建自主代理,确保其对抗性稳健性成为一项关键挑战。与聊天机器人不同,代理是拥有多个组件并采取行动的复合系统,而现有语言模型的安全评估并没有充分解决这一问题。为了弥补这一差距,我们在VisualWebArena这一真实网络代理环境的基础上,手动创建了200个有针对性的对抗性任务和评估脚本,以构建现实威胁模型。为了系统地检查代理的稳健性,我们提出了Agent Robustness Evaluation(ARE)框架。ARE将代理视为一个图形,显示组件之间中间输出的流动,并将稳健性分解为图形上对抗性信息的流动。我们发现,我们可以成功破解使用前沿黑箱语言模型的最新代理,包括进行反射和树搜索的代理。通过对单张图片进行几乎无法察觉的扰动(不到网页总像素的5%),攻击者可以劫持这些代理来实现有针对性的对抗目标,成功率高达67%。我们还使用ARE来严格评估添加新组件时稳健性的变化。我们发现,通常用于提高良性性能的推理时间计算可能会带来新漏洞并损害稳健性。攻击者可以破坏反射代理的评估器和树搜索代理的值函数,这使得攻击成功率相对提高15%和20%。有关攻击、防御和评估的数据和代码位于https://github.com/ChenWu98/agent-attack。

论文及项目相关链接

PDF ICLR 2025. Also oral at NeurIPS 2024 Open-World Agents Workshop

Summary

本文探讨了使用语言模型(LMs)构建自主代理的对抗稳健性问题。为解决现有LM安全评估不足的问题,研究者们在VisualWebArena现实环境中手动创建了200个有针对性的对抗任务和评估脚本。提出了Agent Robustness Evaluation(ARE)框架来系统检验代理的稳健性。研究发现,即使是对图像进行微小的不易察觉的扰动(少于总网页像素的5%),攻击者也能成功劫持代理执行目标对抗任务,成功率高达67%。此外,ARE还能严格评估新组件的加入对稳健性的影响。研究发现,推理时间计算虽然通常能提高良性性能,但也可能带来新的漏洞并损害稳健性。攻击者可能破坏反射代理的评价器和树搜索代理的值函数,相对提高攻击成功率达15%和20%。

Key Takeaways

- 语言模型(LMs)在构建自主代理时面临对抗稳健性的挑战。

- 现有LM安全评估不足以应对复杂代理系统的需求。

- 提出了Agent Robustness Evaluation(ARE)框架来评估代理的稳健性,将代理视为一个图,展示组件间中间输出的流动。

- 通过微小的不易察觉的图像扰动,攻击者可以成功劫持代理执行目标对抗任务,成功率高达67%。

- 推理时间计算在提高良性性能的同时可能引入新的漏洞,降低代理的稳健性。

- ARE框架能够评估新组件对代理稳健性的影响。

点此查看论文截图

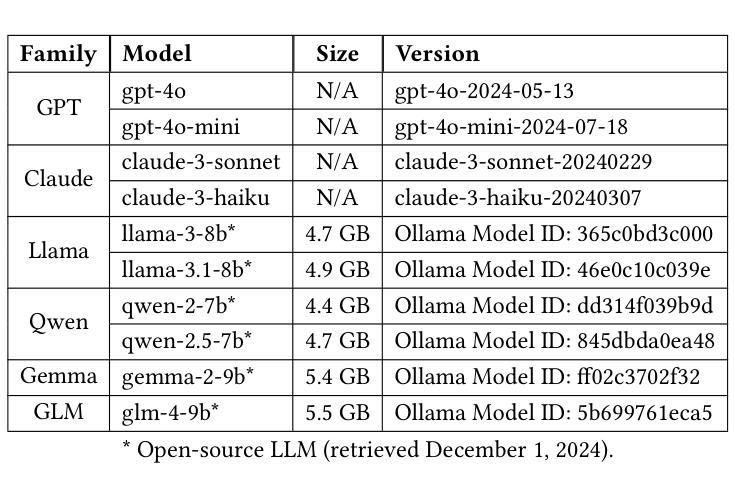

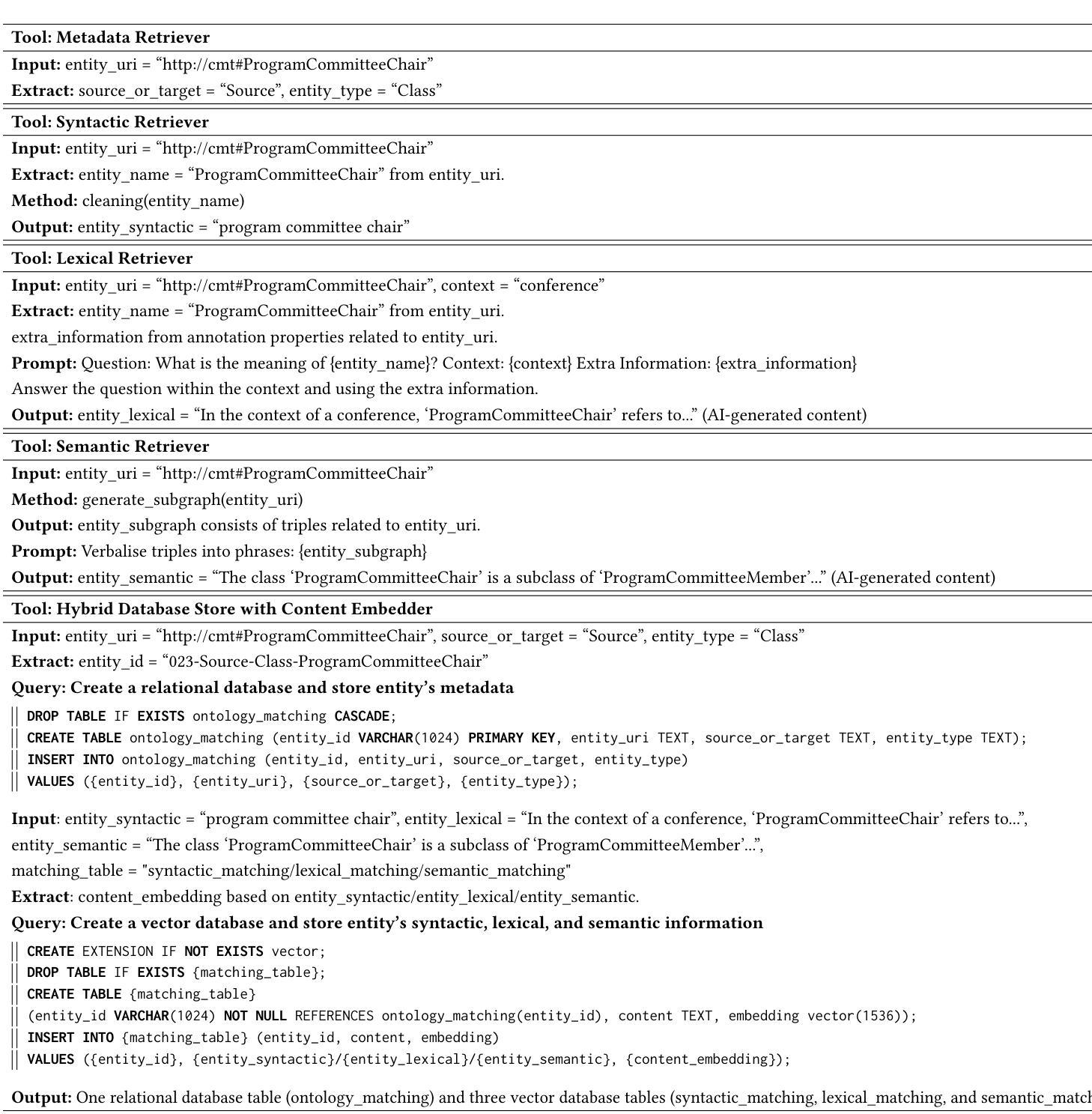

Agent-OM: Leveraging LLM Agents for Ontology Matching

Authors:Zhangcheng Qiang, Weiqing Wang, Kerry Taylor

Ontology matching (OM) enables semantic interoperability between different ontologies and resolves their conceptual heterogeneity by aligning related entities. OM systems currently have two prevailing design paradigms: conventional knowledge-based expert systems and newer machine learning-based predictive systems. While large language models (LLMs) and LLM agents have revolutionised data engineering and have been applied creatively in many domains, their potential for OM remains underexplored. This study introduces a novel agent-powered LLM-based design paradigm for OM systems. With consideration of several specific challenges in leveraging LLM agents for OM, we propose a generic framework, namely Agent-OM (Agent for Ontology Matching), consisting of two Siamese agents for retrieval and matching, with a set of OM tools. Our framework is implemented in a proof-of-concept system. Evaluations of three Ontology Alignment Evaluation Initiative (OAEI) tracks over state-of-the-art OM systems show that our system can achieve results very close to the long-standing best performance on simple OM tasks and can significantly improve the performance on complex and few-shot OM tasks.

本体匹配(OM)能够在不同的本体之间实现语义互操作性,并通过对齐相关实体解决其概念上的异质性。目前,OM系统主要有两种流行的设计范式:传统的基于知识的专家系统和较新的基于机器学习的预测系统。虽然大型语言模型(LLM)和LLM代理已经彻底改变了数据工程,并且已经在许多领域得到了创造性的应用,但它们在OM中的潜力仍然被低估。本研究引入了一种基于代理的LLM设计范式的新型OM系统。考虑到利用LLM代理进行OM面临的若干特定挑战,我们提出了一个通用框架,即Agent-OM(用于本体匹配的代理),它由两个用于检索和匹配的Siamese代理和一组OM工具组成。我们的框架在一个概念验证系统中实现。对最新OM系统进行的三次本体对齐评估倡议(OAEI)轨迹的评估表明,我们的系统在简单OM任务上的结果非常接近长期以来的最佳性能,并且在复杂和少量OM任务上可以显著提高性能。

论文及项目相关链接

PDF 19 pages, 12 figures, 3 tables

Summary

基于本研究的介绍,提出了一种新型基于LLM的智能体赋能的OM系统设计范式。考虑到在利用LLM智能体进行OM时所面临的一些特定挑战,研究提出了一个名为Agent-OM的通用框架,它由两个用于检索和匹配的Siamese智能体组成,并配备了一套OM工具。在OAEI的三个赛道上的评估表明,该系统在简单任务上取得了接近最佳性能的结果,并在复杂任务和零样本任务上显著提高性能。

Key Takeaways

- 介绍了一种新的基于LLM智能体的OM系统设计范式。

- 提出了一种名为Agent-OM的通用框架,用于处理OM任务。

- Agent-OM框架包含两个Siamese智能体进行检索和匹配。

- 该系统具备处理复杂和零样本OM任务的能力。

- 通过利用先进的机器学习技术,如LLM智能体,增强了系统的语义交互操作性。

- 系统的实施得到了原型系统支持。

点此查看论文截图