⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

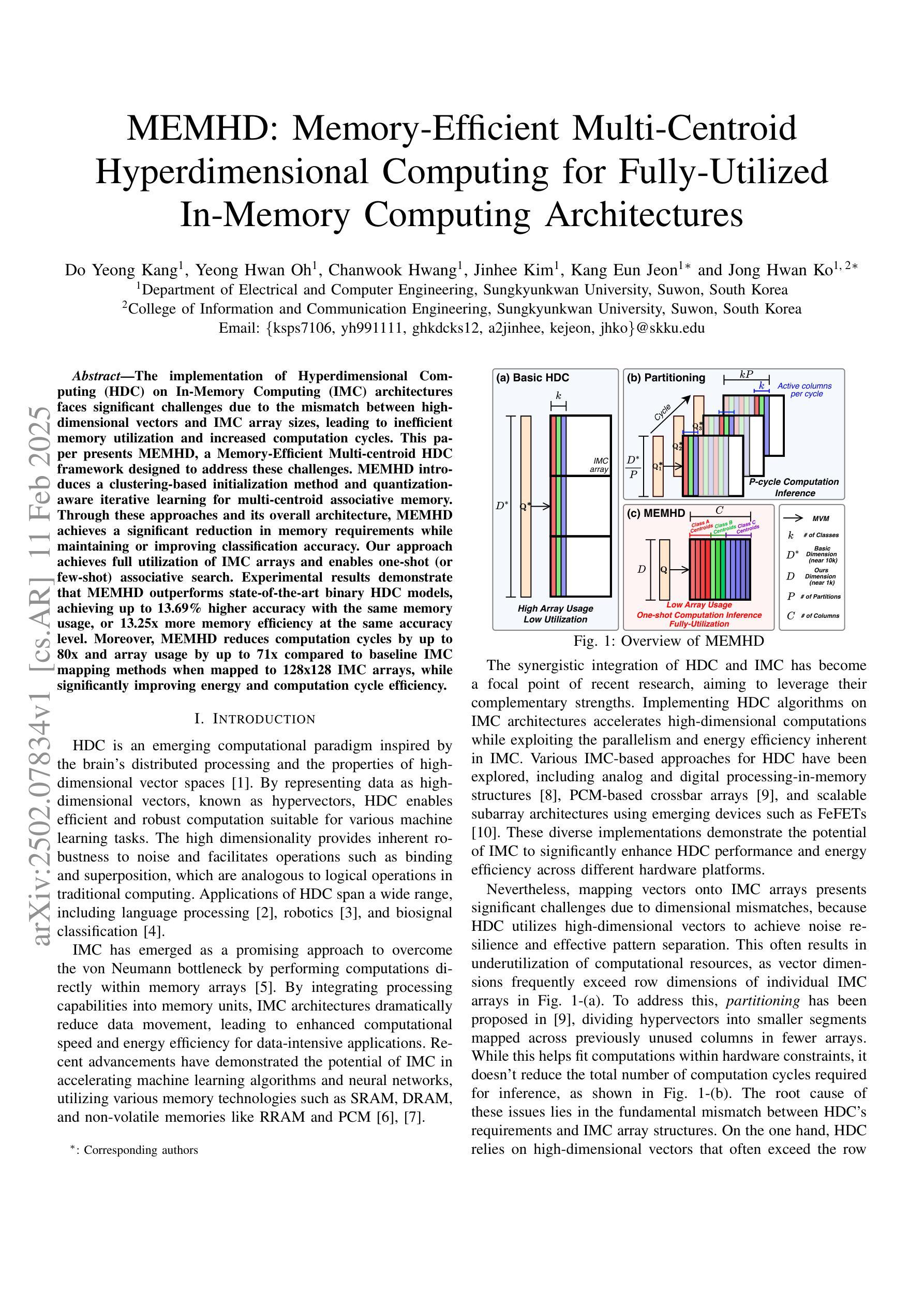

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-02-13 更新

Learning in Markets with Heterogeneous Agents: Dynamics and Survival of Bayesian vs. No-Regret Learners

Authors:David Easley, Yoav Kolumbus, Eva Tardos

We analyze the performance of heterogeneous learning agents in asset markets with stochastic payoffs. Our agents aim to maximize the expected growth rate of their wealth but have different theories on how to learn this best. We focus on comparing Bayesian and no-regret learners in market dynamics. Bayesian learners with a prior over a finite set of models that assign positive prior probability to the correct model have posterior probabilities that converge exponentially to the correct model. Consequently, they survive even in the presence of agents who invest according to the correct model of the stochastic process. Bayesians with a continuum prior converge to the correct model at a rate of $O((\log T)/T)$. Online learning theory provides no-regret algorithms for maximizing the log of wealth in this setting, achieving a worst-case regret bound of $O(\log T)$ without assuming a steady underlying stochastic process but comparing to the best fixed investment rule. This regret, as we observe, is of the same order of magnitude as that of a Bayesian learner with a continuum prior. However, we show that even such low regret may not be sufficient for survival in asset markets: an agent can have regret as low as $O(\log T)$, but still vanish in market dynamics when competing against agents who invest according to the correct model or even against a perfect Bayesian with a finite prior. On the other hand, we show that Bayesian learning is fragile, while no-regret learning requires less knowledge of the environment and is therefore more robust. Any no-regret learner will drive out of the market an imperfect Bayesian whose finite prior or update rule has even small errors. We formally establish the relationship between notions of survival, vanishing, and market domination studied in economics and the framework of regret minimization, thus bridging these theories.

我们分析了异构学习代理人在具有随机收益的资产市场中的表现。我们的代理人旨在最大化其财富的预期增长率,但在如何最好地实现这一目标方面存在不同的理论。我们重点关注比较市场动态的贝叶斯学习和无后悔学习者。带有有限模型集的先验贝叶斯学习者会对正确的模型分配正先验概率,其后验概率会以指数形式收敛到正确的模型。因此,即使在存在根据随机过程的正确模型进行投资的代理人的情况下,他们也能生存下来。具有连续先验的贝叶斯以O((\log T)/T)的速率收敛到正确的模型。在线学习理论提供了在这种情况下最大化财富对数的无后悔算法,即使没有假设稳定的潜在随机过程,与最佳固定投资规则相比,也能达到最坏情况下的后悔界限为O(\log T)。正如我们所观察到的那样,这种后悔与具有连续先验的贝叶斯学习者的后悔程度相同。但是,我们表明,即使在资产市场中,如此低的后悔也可能不足以生存:一个代理人的后悔可能低至O(\log T),但当与根据正确模型进行投资的代理人竞争时,甚至在面对具有有限先验或更新规则存在微小错误的完美贝叶斯时,仍会在市场动态中消失。另一方面,我们证明了贝叶斯学习是脆弱的,而无后悔的学习需要较少的环境知识,因此更加稳健。任何无后悔的学习者都会将存在微小错误的非完美贝叶斯逐出市场。我们正式建立了经济学中研究的生存、消失、市场支配力与后悔最小化的框架之间的关系,从而桥接了这些理论。

论文及项目相关链接

PDF Learning in Markets, Heterogeneous Agents, Regret and Survival

摘要

在随机收益条件下分析异质学习主体在资产市场的表现。主体目标是最大化财富预期增长率,但采用不同的学习方法。文章聚焦于比较贝叶斯主体和无悔学习者在市场动态中的表现。带有有限模型集的贝叶斯主体在正确的模型上具有积极的先验概率,其后验概率以指数方式收敛到正确的模型,即便在存在依据随机过程正确模型进行投资的主体中也能生存下来。具有连续先验的贝叶斯主体以O(logT)/TO(\log T)/TO(logT)/T 的速度收敛到正确的模型。在线学习理论为此类环境中的最大化财富对数提供了无悔算法,在没有假设稳定的随机过程的情况下,其最坏情况下的遗憾界限为O(logT)O(\log T)O(logT)。然而,即使遗憾程度如此之低,也可能无法保证在资产市场的生存能力:主体的遗憾可能低至O(logT)O(\log T)O(logT),但在与依据正确模型进行投资的主体甚至与拥有有限先验的完美贝叶斯主体竞争时,仍可能丧失市场地位。另一方面,贝叶斯学习是脆弱的,而无悔学习对环境知识的要求更少,因此更稳健。任何无悔学习者都将把有微小误差的不完美贝叶斯主体排除出市场。文章正式确立了经济学中的生存、消失、市场支配等概念与后悔最小化的框架之间的关系,从而桥接了这些理论。

关键见解

- 在随机收益条件下,分析了异质学习主体在资产市场的表现,主体采用不同的学习方法最大化财富预期增长率。

- 比较了贝叶斯主体和无悔学习者在市场动态中的表现,发现贝叶斯学习具有收敛速度快的特点,但也存在脆弱性。

- 无悔学习对环境的依赖更少,更具稳健性,可以排除市场中存在微小误差的主体。

- 在经济学中的生存、消失、市场支配概念与后悔最小化理论之间存在密切关系。

- 主体即便具有低后悔度,也可能在竞争激烈的资产市场中丧失生存能力。

- 完美贝叶斯主体具有强大的市场竞争力,即使在面对无悔学习者时也能保持优势。

点此查看论文截图

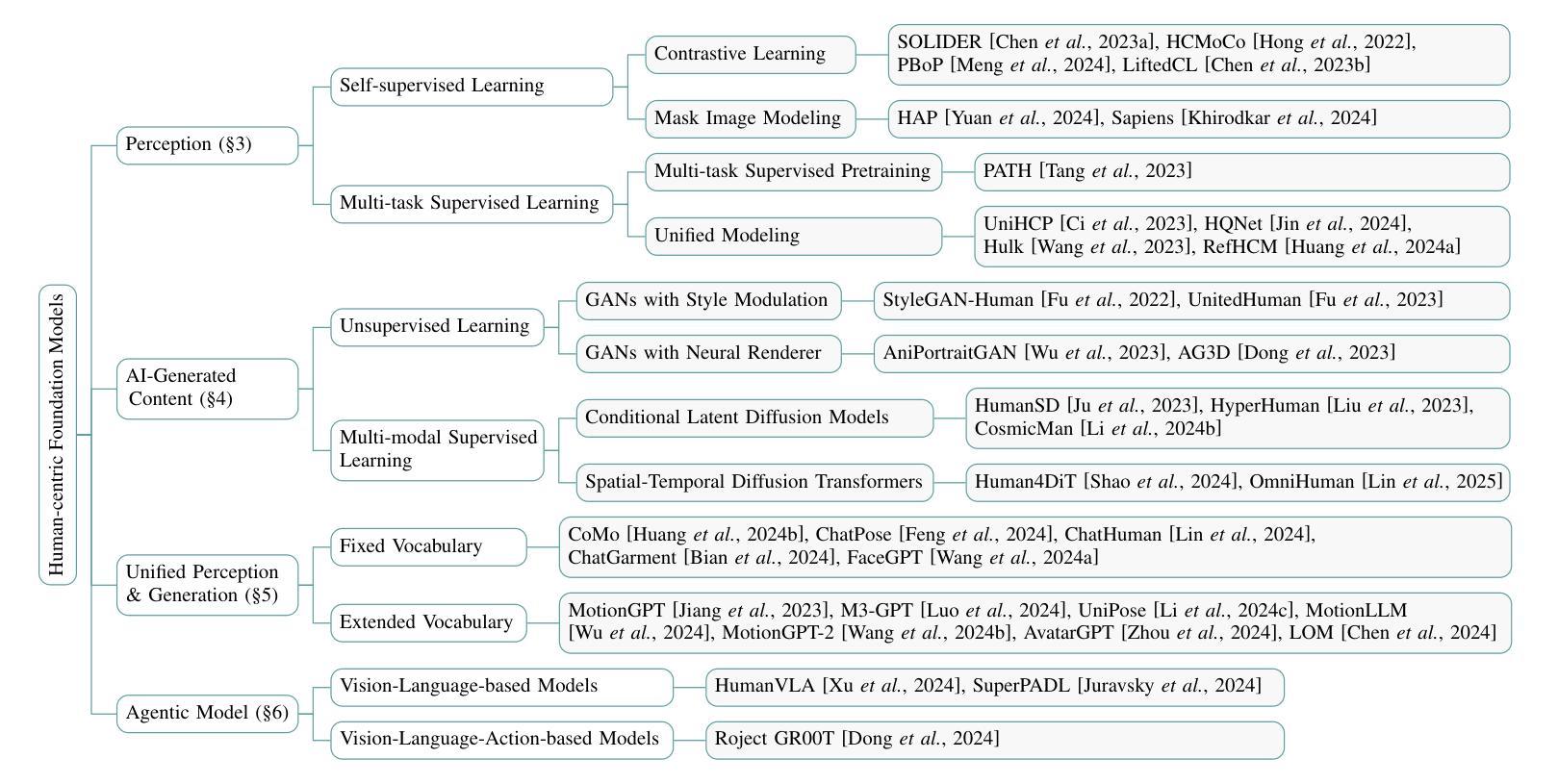

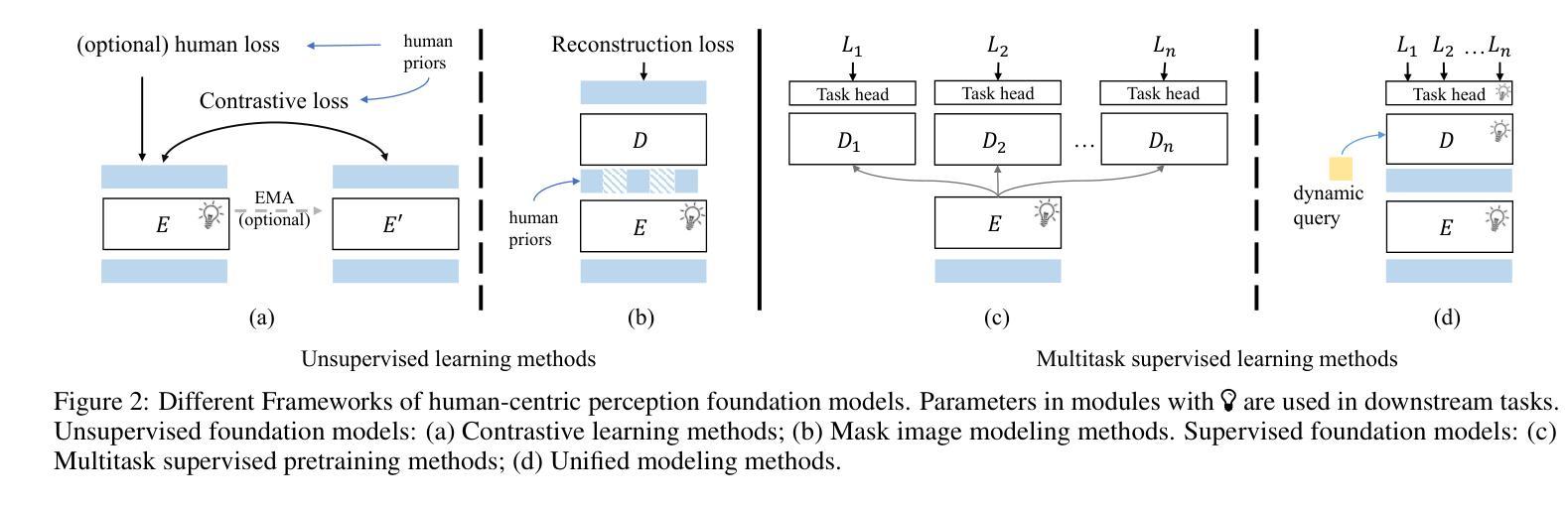

Human-Centric Foundation Models: Perception, Generation and Agentic Modeling

Authors:Shixiang Tang, Yizhou Wang, Lu Chen, Yuan Wang, Sida Peng, Dan Xu, Wanli Ouyang

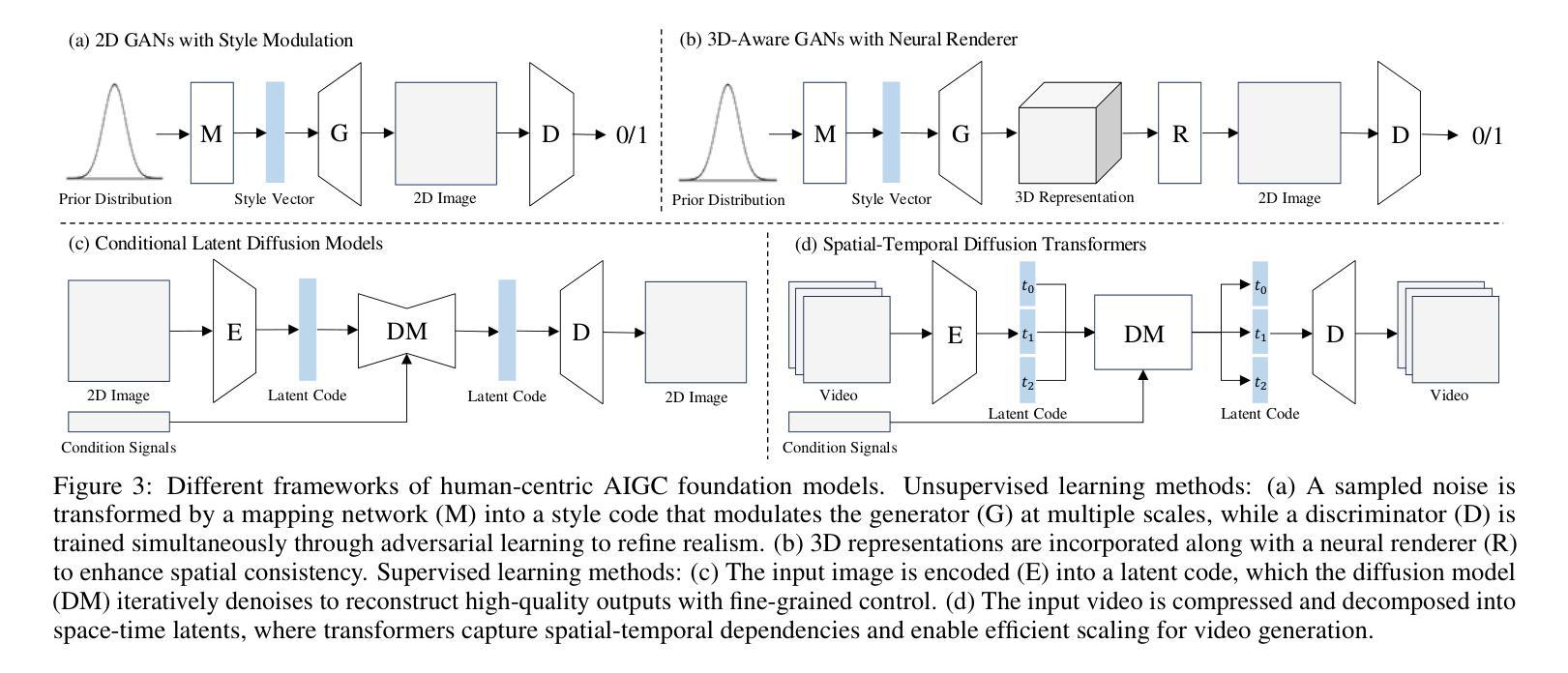

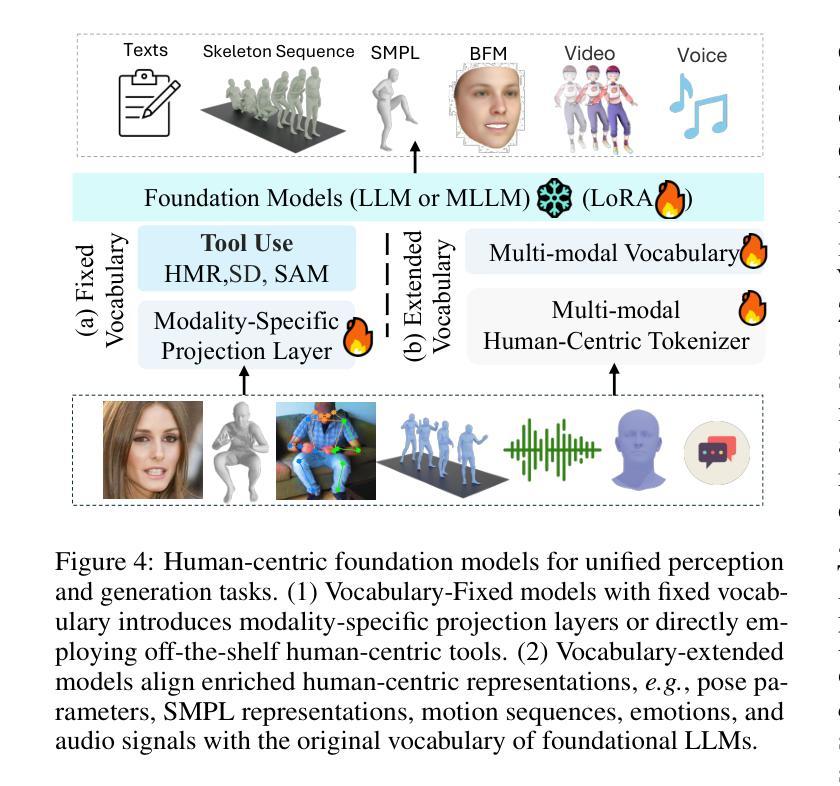

Human understanding and generation are critical for modeling digital humans and humanoid embodiments. Recently, Human-centric Foundation Models (HcFMs) inspired by the success of generalist models, such as large language and vision models, have emerged to unify diverse human-centric tasks into a single framework, surpassing traditional task-specific approaches. In this survey, we present a comprehensive overview of HcFMs by proposing a taxonomy that categorizes current approaches into four groups: (1) Human-centric Perception Foundation Models that capture fine-grained features for multi-modal 2D and 3D understanding. (2) Human-centric AIGC Foundation Models that generate high-fidelity, diverse human-related content. (3) Unified Perception and Generation Models that integrate these capabilities to enhance both human understanding and synthesis. (4) Human-centric Agentic Foundation Models that extend beyond perception and generation to learn human-like intelligence and interactive behaviors for humanoid embodied tasks. We review state-of-the-art techniques, discuss emerging challenges and future research directions. This survey aims to serve as a roadmap for researchers and practitioners working towards more robust, versatile, and intelligent digital human and embodiments modeling.

人类对数字人类和人类实体建模的理解和生成至关重要。最近,受通用模型(如大型语言和视觉模型)成功的启发,以人为本的基础模型(HcFMs)应运而生,将多样化的人类任务统一到一个框架内,超越了传统的针对特定任务的方法。在这篇综述中,我们通过提出一个分类法,对当前方法进行了全面概述,将其分为四类:1)以人为本的感知基础模型,用于捕获多模态2D和3D理解的精细特征。2)以人为本的AIGC基础模型,生成高保真、多样化的人类相关内容。3)统一感知和生成模型,将这些能力结合起来,增强人类对理解和合成的能力。4)以人为本的代理基础模型,超越了感知和生成,学习人类智能和交互式行为,用于人类实体任务。我们回顾了最新技术,讨论了新兴挑战和未来研究方向。这篇综述旨在为朝着更稳健、多功能和智能的数字人类和实体建模工作的研究人员和实践者提供指导。

论文及项目相关链接

PDF 9 pages

Summary:

人类理解和生成能力是构建数字人类和人类体现模型的关键。近期出现的以人类为中心的基石模型(HcFMs),借鉴了通用模型(如大型语言和视觉模型)的成功经验,旨在将多种以人类为中心的任务统一到一个框架中,超越传统的针对特定任务的方法。本文综述了HcFMs的全面发展,并提出了一个分类法,将当前的方法分为四类。本文还回顾了最新技术,讨论了新兴挑战和未来研究方向。本文旨在成为研究者和实践者的路线图,致力于构建更稳健、多功能和智能的数字人类和体现模型。

Key Takeaways:

- HcFMs将多种人类为中心的任务统一到一个框架中,超越了传统任务特定方法。

- HcFMs分为四大类:人类为中心的感知基石模型、人类为中心的AIGC基石模型、统一感知和生成模型、以及以人类为中心的主体基石模型。

- 人类为中心的感知基石模型用于捕捉多模态的精细特征,用于二维和三维理解。

- 人类为中心的AIGC基石模型生成高保真、多样化的人类相关内容。

- 统一感知和生成模型集成了这些能力,提高了人类理解和合成的能力。

- 以人类为中心的主体基石模型超越了感知和生成,学习人类智能和交互行为,用于人类体现任务。

点此查看论文截图

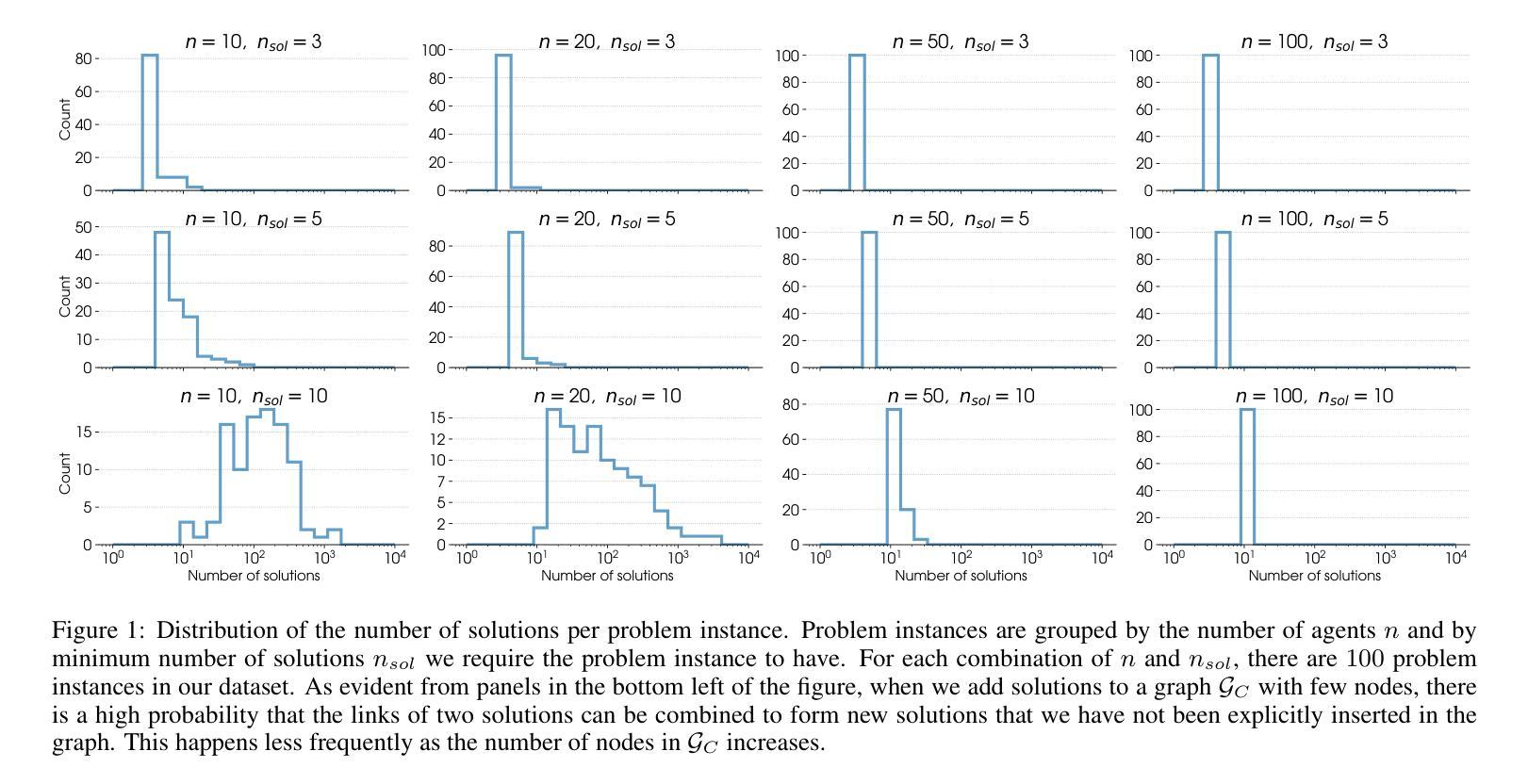

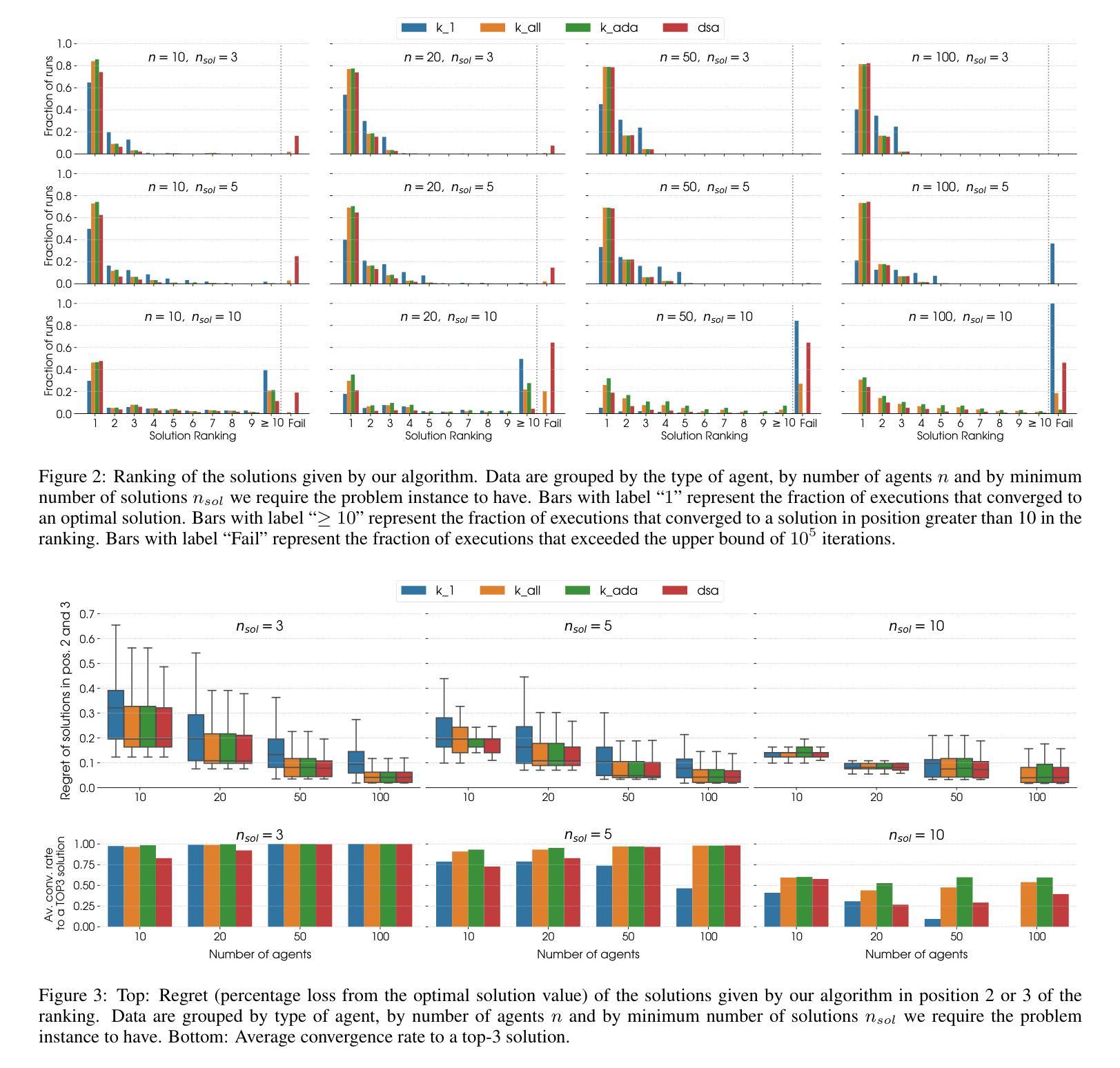

Decentralised multi-agent coordination for real-time railway traffic management

Authors:Leo D’Amato, Paola Pellegrini, Vito Trianni

The real-time Railway Traffic Management Problem (rtRTMP) is a challenging optimisation problem in railway transportation. It involves the efficient management of train movements while minimising delay propagation caused by unforeseen perturbations due to, e.g, temporary speed limitations or signal failures. This paper re-frames the rtRTMP as a multi-agent coordination problem and formalises it as a Distributed Constraint Optimisation Problem (DCOP) to explore its potential for decentralised solutions. We propose a novel coordination algorithm that extends the widely known Distributed Stochastic Algorithm (DSA), allowing trains to self-organise and resolve scheduling conflicts. The performance of our algorithm is compared to a classical DSA through extensive simulations on a synthetic dataset reproducing diverse problem configurations. Results show that our approach achieves significant improvements in solution quality and convergence speed, demonstrating its effectiveness and scalability in managing large-scale railway networks. Beyond the railway domain, this framework can have broader applicability in autonomous systems, such as self-driving vehicles or inter-satellite coordination.

实时铁路交通管理问题(rtRTMP)是铁路运输中一项具有挑战性的优化问题。它涉及火车运动的效率管理,同时最小化由于临时速度限制或信号故障等不可预见的扰动造成的延迟传播。本文将rtRTMP重新构建为一个多智能体协调问题,并将其形式化为分布式约束优化问题(DCOP),以探索其分散式解决方案的潜力。我们提出了一种新型的协调算法,该算法扩展了广为人知的分布式随机算法(DSA),使火车能够自我组织和解决调度冲突。我们通过在一个合成数据集上进行的大量模拟,将算法的性能与一个经典的DSA进行了比较,该数据集重现了多种问题配置。结果表明,我们的方法在解决方案质量和收敛速度上取得了显著的改进,证明了其在管理大规模铁路网络中的有效性和可扩展性。除了铁路领域,该框架还可以更广泛地应用于自主系统,如自动驾驶汽车或卫星间协调。

论文及项目相关链接

Summary

实时铁路交通管理问题(rtRTMP)是铁路运输中的一项具有挑战性的优化问题。本文将其重新构建为多智能体协调问题,并形式化为分布式约束优化问题(DCOP),以探索分散式解决方案的潜力。提出一种新型的协调算法,该算法扩展了众所周知的分布式随机算法(DSA),使列车能够自我组织和解决调度冲突。通过模拟合成数据集的大量仿真与经典DSA进行比较,结果表明,该方法在解决方案质量和收敛速度方面取得了显著改进,在管理大规模铁路网络中表现出有效性和可扩展性。此外,该框架还可应用于自动驾驶车辆或卫星间协调等自主系统。

Key Takeaways

- rtRTMP定义为实时铁路交通管理问题的优化挑战,涉及列车移动的高效管理。

- 论文将rtRTMP重新构建为多智能体协调问题,并形式化为DCOP。

- 提出一种基于DSA的新型协调算法,使列车能自我组织和解决调度冲突。

- 该算法在合成数据集上的模拟结果与经典DSA相比,显示出在解决方案质量和收敛速度上的显著提高。

- 论文证明了该算法在管理大规模铁路网络中的有效性和可扩展性。

- 该框架除了应用于铁路领域外,还可用于自动驾驶车辆或卫星间协调等自主系统。

点此查看论文截图

The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks

Authors:Alejandro Cuadron, Dacheng Li, Wenjie Ma, Xingyao Wang, Yichuan Wang, Siyuan Zhuang, Shu Liu, Luis Gaspar Schroeder, Tian Xia, Huanzhi Mao, Nicholas Thumiger, Aditya Desai, Ion Stoica, Ana Klimovic, Graham Neubig, Joseph E. Gonzalez

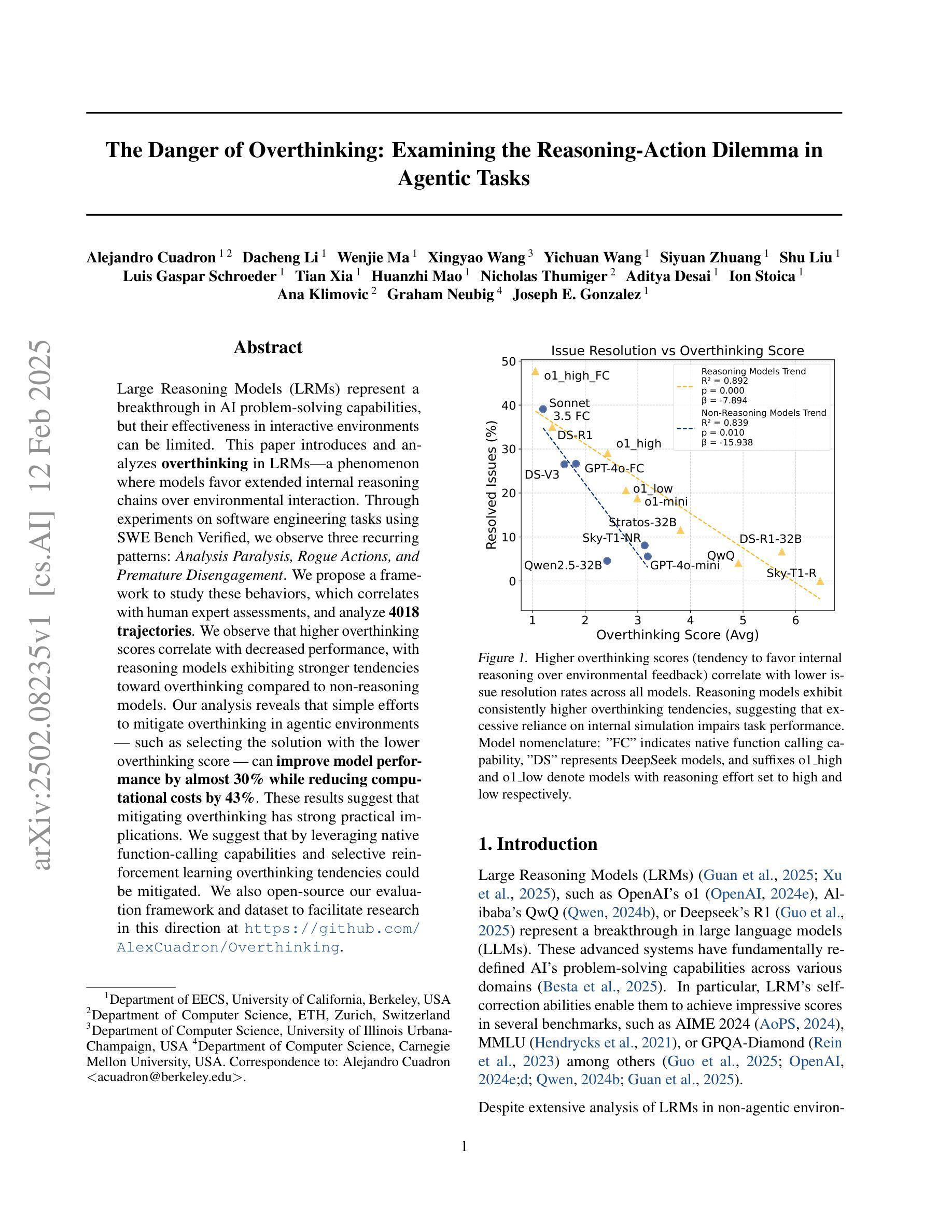

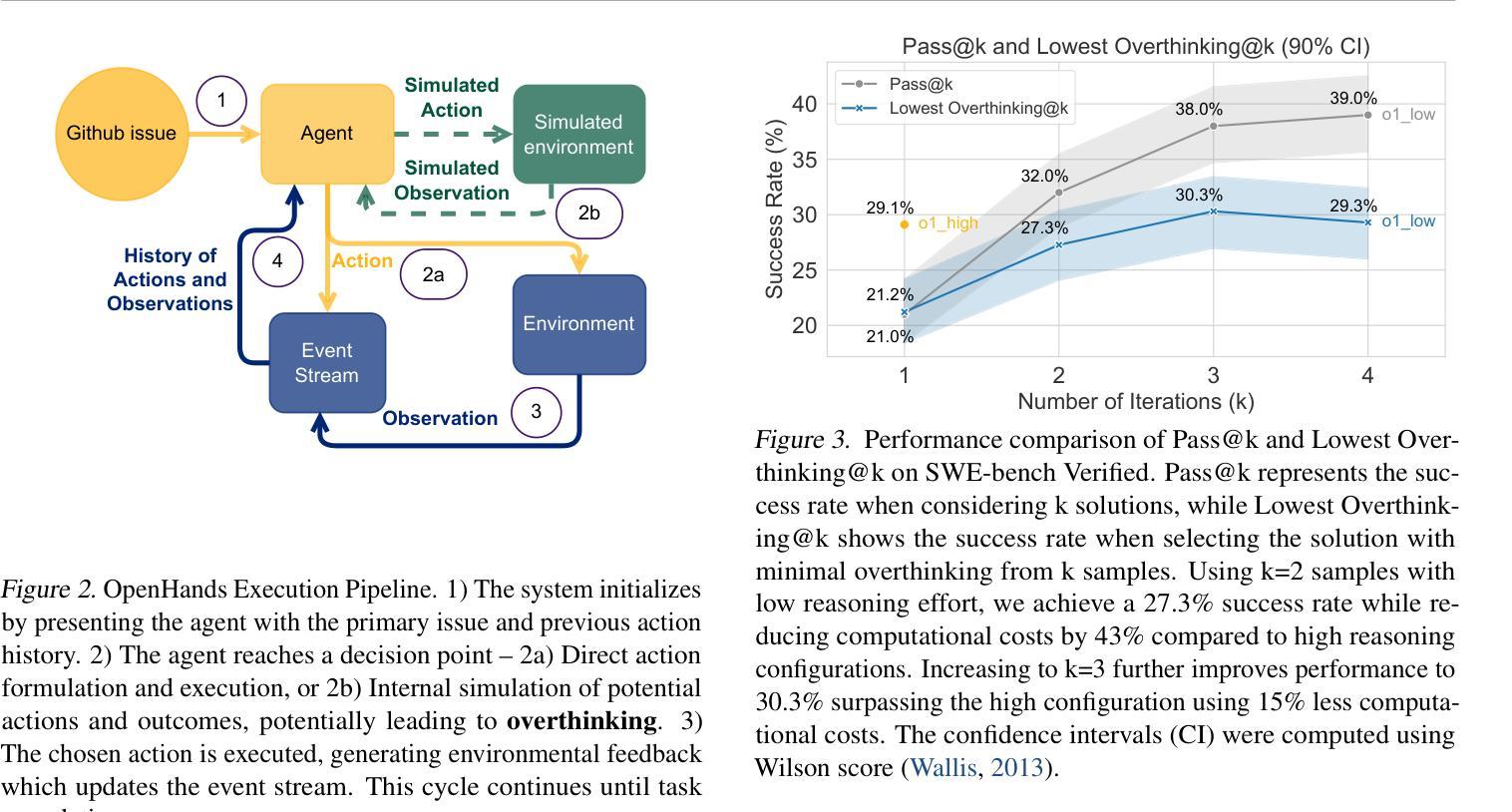

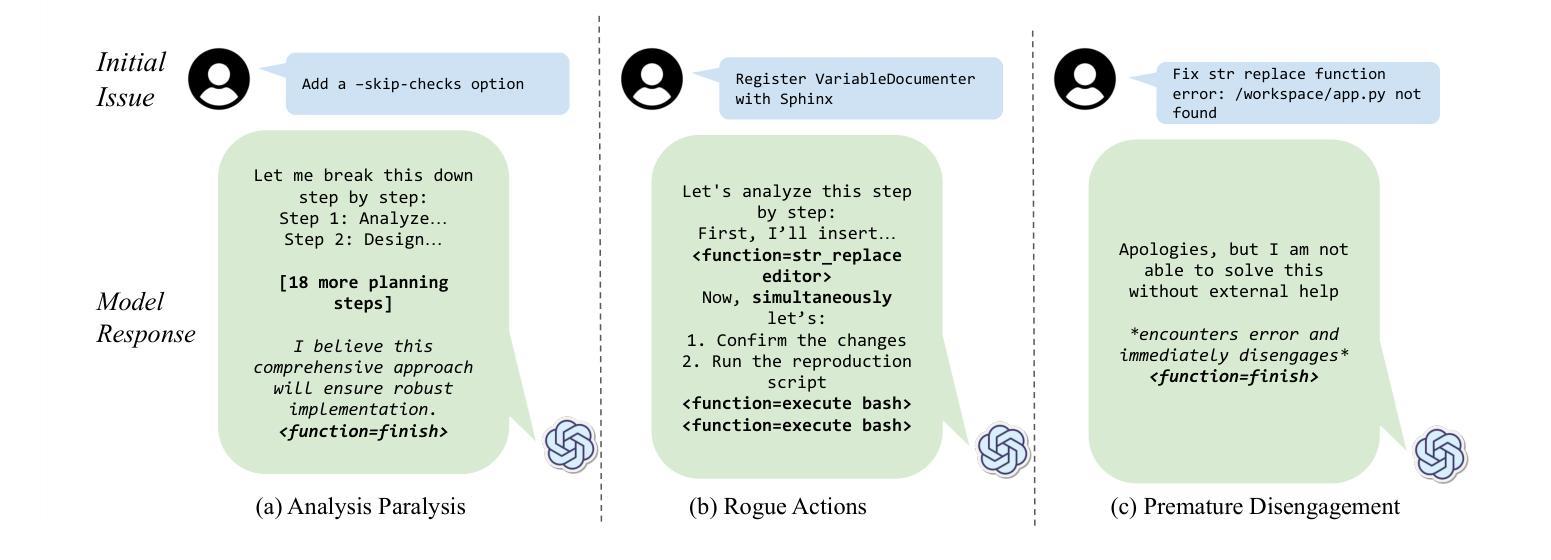

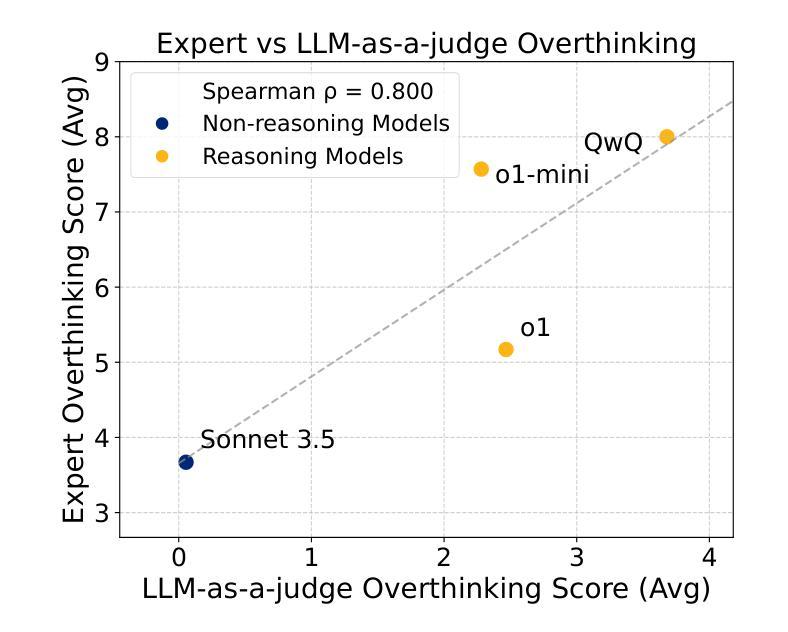

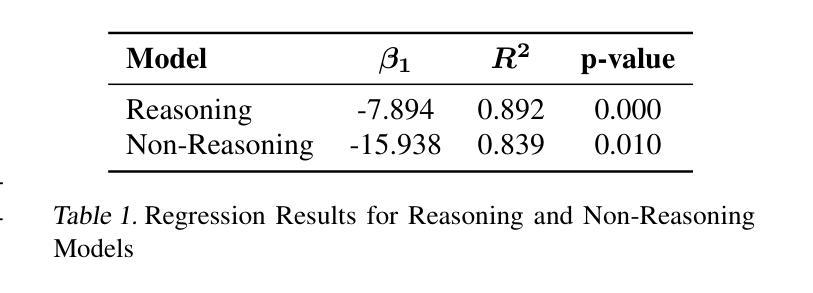

Large Reasoning Models (LRMs) represent a breakthrough in AI problem-solving capabilities, but their effectiveness in interactive environments can be limited. This paper introduces and analyzes overthinking in LRMs. A phenomenon where models favor extended internal reasoning chains over environmental interaction. Through experiments on software engineering tasks using SWE Bench Verified, we observe three recurring patterns: Analysis Paralysis, Rogue Actions, and Premature Disengagement. We propose a framework to study these behaviors, which correlates with human expert assessments, and analyze 4018 trajectories. We observe that higher overthinking scores correlate with decreased performance, with reasoning models exhibiting stronger tendencies toward overthinking compared to non-reasoning models. Our analysis reveals that simple efforts to mitigate overthinking in agentic environments, such as selecting the solution with the lower overthinking score, can improve model performance by almost 30% while reducing computational costs by 43%. These results suggest that mitigating overthinking has strong practical implications. We suggest that by leveraging native function-calling capabilities and selective reinforcement learning overthinking tendencies could be mitigated. We also open-source our evaluation framework and dataset to facilitate research in this direction at https://github.com/AlexCuadron/Overthinking.

大型推理模型(LRMs)代表了人工智能问题解决能力的突破,但它们在交互式环境中的有效性可能会受到限制。本文介绍并分析了大型推理模型中的过度思考现象。这是一种模型偏好延长内部推理链而非与环境互动的现象。通过SWE Bench Verified对软件工程任务的实验,我们观察到三种重复出现的模式:分析瘫痪、意外行动和过早脱离。我们提出了一个研究这些行为的框架,该框架与人类专家评估相关,并分析了4018条轨迹。我们发现较高的过度思考分数与性能下降有关,推理模型表现出比非推理模型更强的过度思考倾向。我们的分析表明,在代理环境中缓解过度思考的简单努力,如选择具有较低过度思考分数的解决方案,可以在几乎不影响性能的情况下提高模型性能约30%,同时降低计算成本43%。这些结果表明缓解过度思考具有重要的实际应用意义。我们建议通过利用原生的函数调用能力以及选择性强化学习来减轻过度思考的倾向。我们还公开了我们的评估框架和数据集,以便在此方向上进行研究:https://github.com/AlexCuadron/Overthinking。

论文及项目相关链接

Summary

大型推理模型(LRMs)在AI领域具有突破性的问题解决能力,但在交互式环境中其有效性受限。本文介绍并分析了LRMs中的过度思考现象,这是模型倾向于延长内部推理链而忽视与环境互动的现象。通过实验分析,我们发现过度思考会导致分析瘫痪、随机行动和过早停止等三种模式。提出一个研究这些行为的框架,该框架与人类专家评估相关,并分析了4018条轨迹。研究发现,过度思考得分较高与表现下降有关,推理模型较非推理模型更易出现过度思考倾向。通过选择具有较低过度思考得分的解决方案等简单方法,可以改善模型性能近30%,同时降低计算成本43%。结果表明减轻过度思考具有实际应用的强大意义。

Key Takeaways

- 大型推理模型(LRMs)在AI领域具有突破性的能力,但在交互式环境中有效性受限。

- 过度思考是LRMs中的一种现象,表现为模型倾向于延长内部推理链而忽视与环境互动。

- 过度思考会导致分析瘫痪、随机行动和过早停止等三种模式。

- 过度思考得分较高与表现下降有关。

- 相比非推理模型,推理模型更容易出现过度思考倾向。

- 通过选择具有较低过度思考得分的解决方案,可以改善模型性能并降低计算成本。

点此查看论文截图

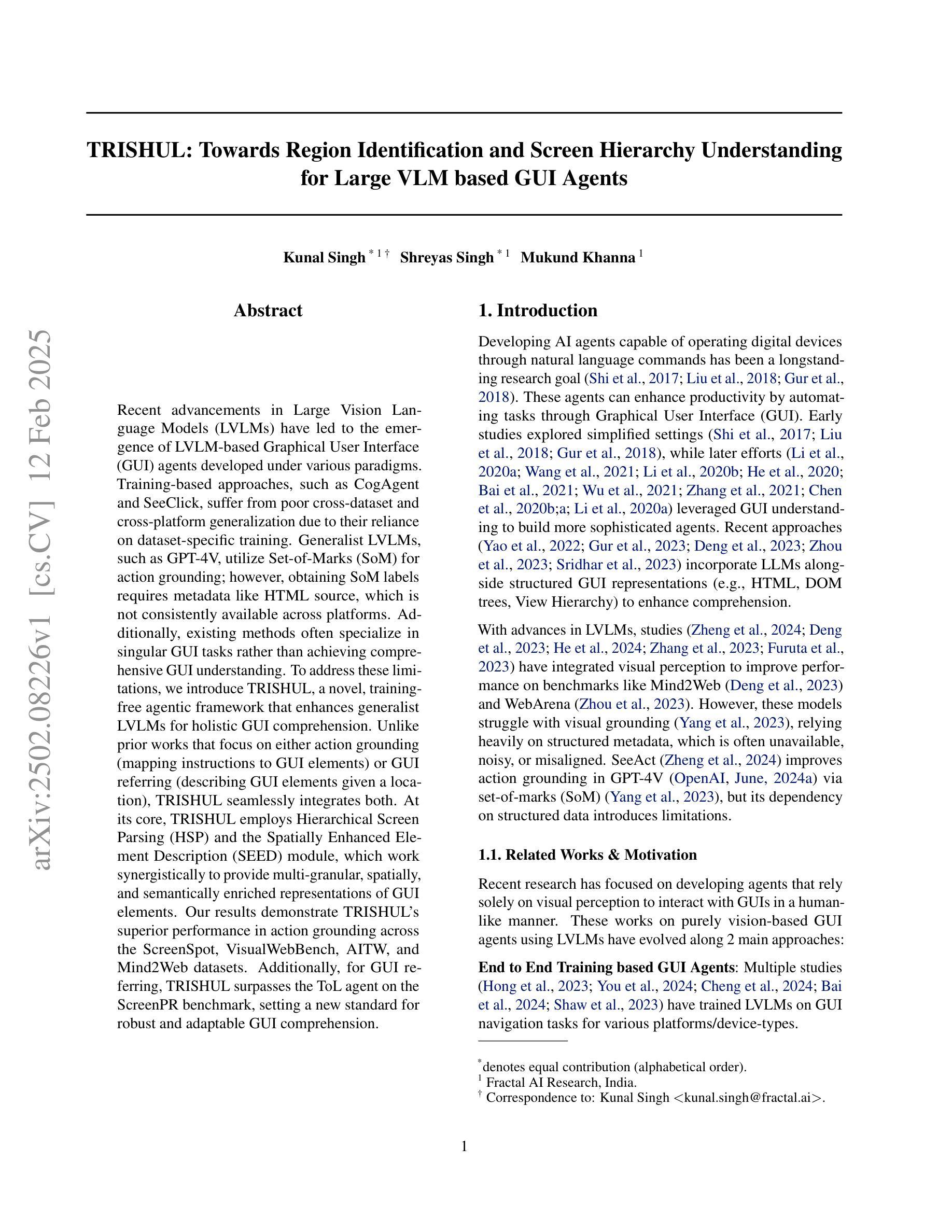

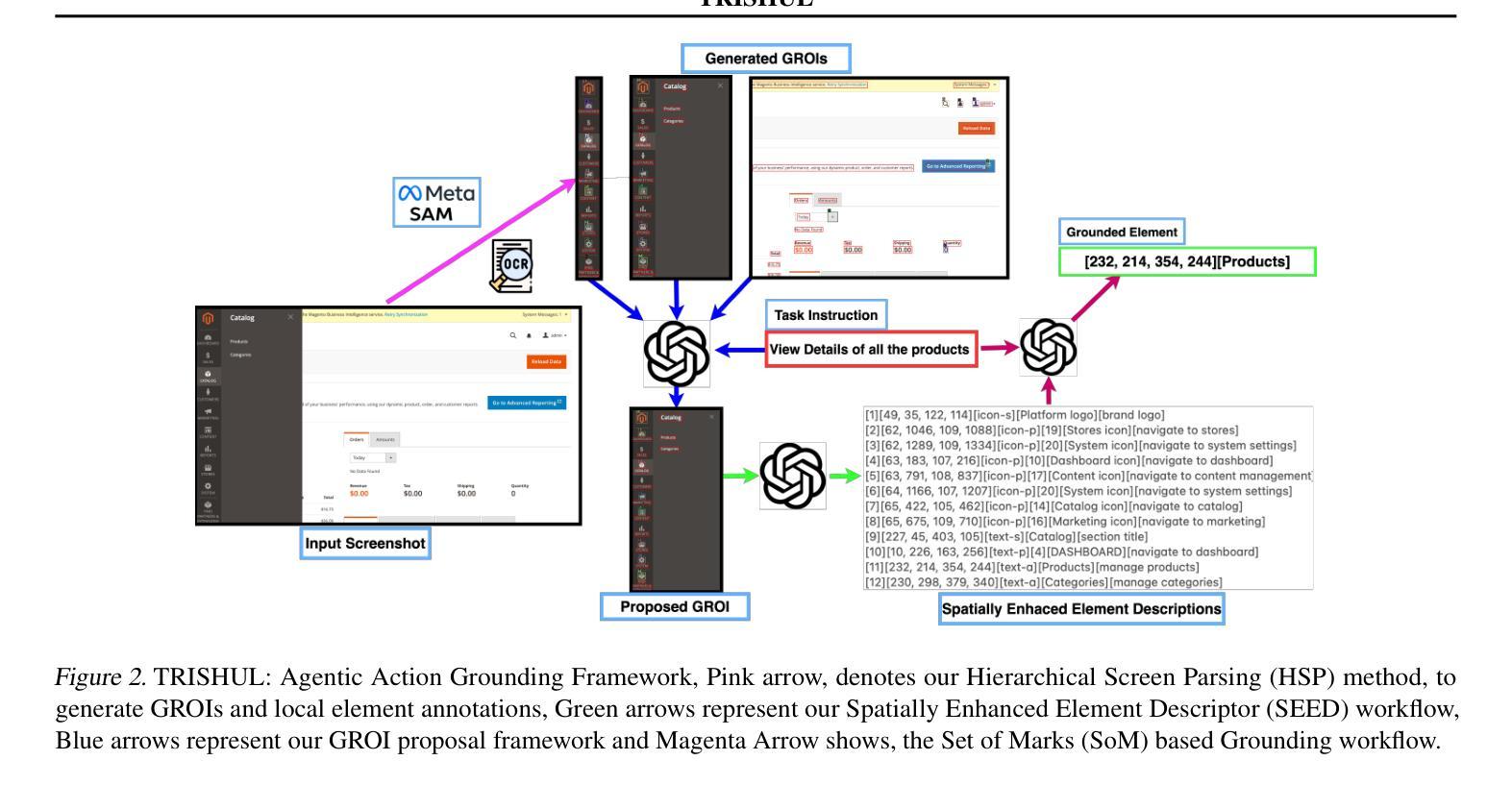

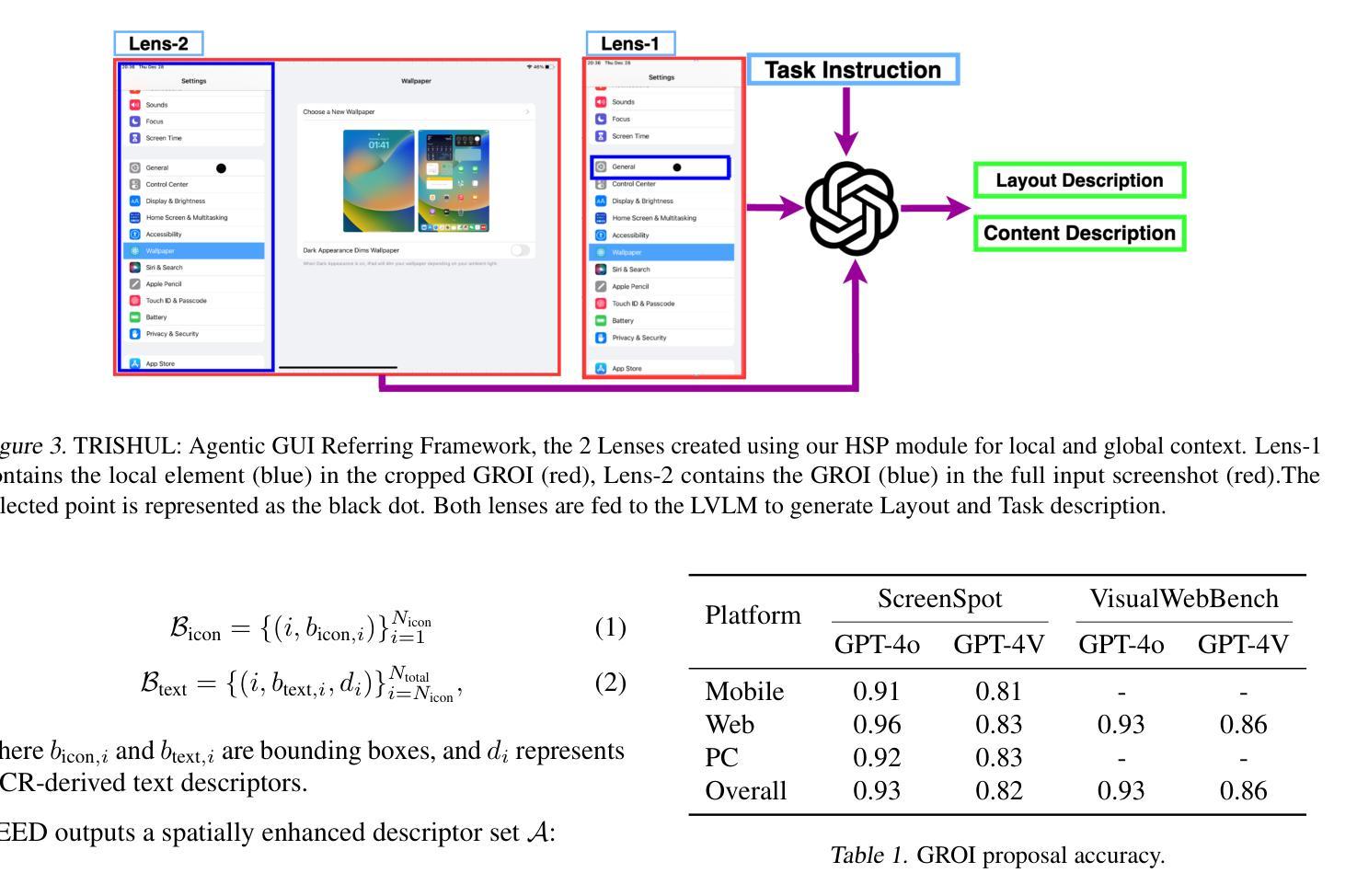

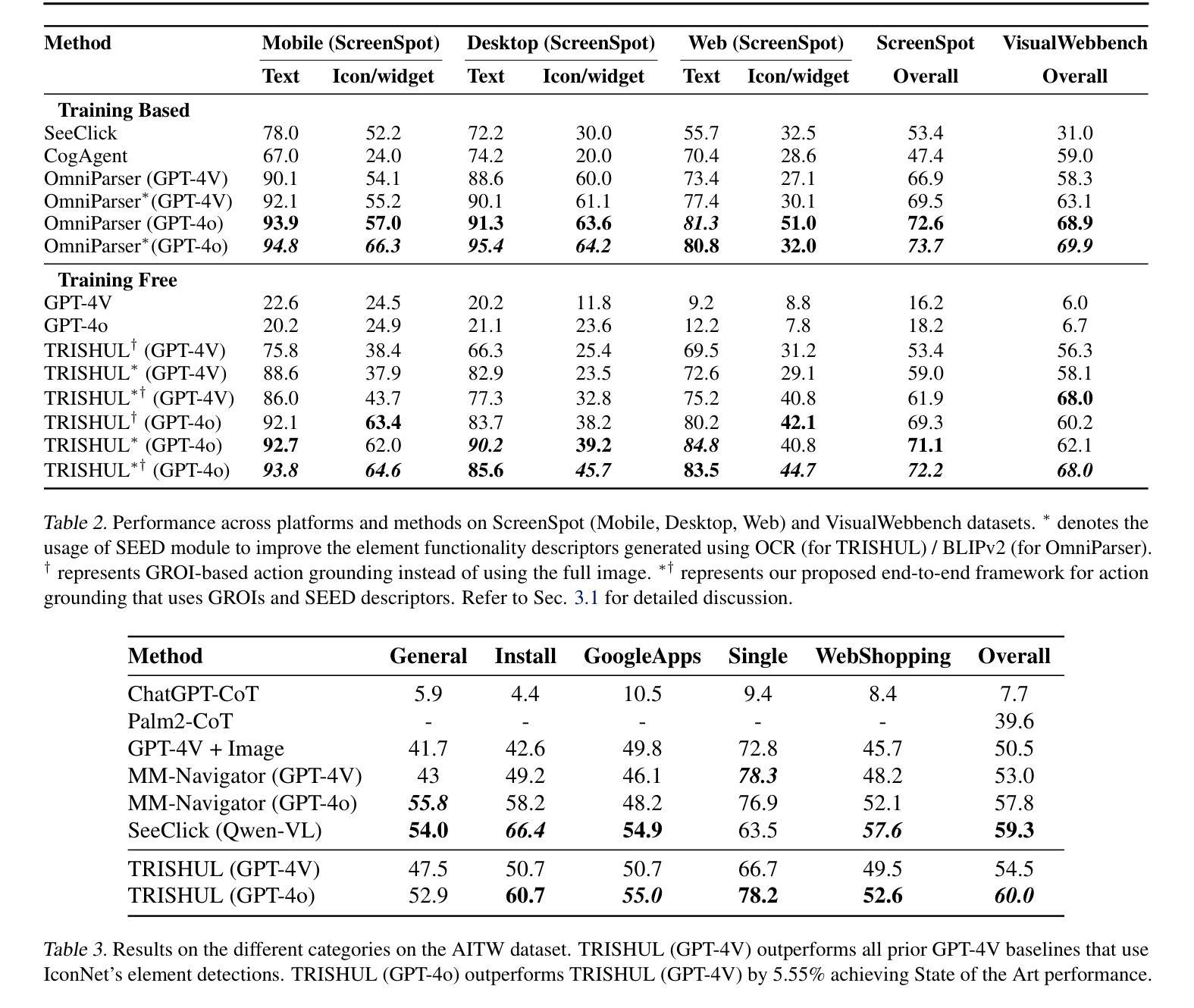

TRISHUL: Towards Region Identification and Screen Hierarchy Understanding for Large VLM based GUI Agents

Authors:Kunal Singh, Shreyas Singh, Mukund Khanna

Recent advancements in Large Vision Language Models (LVLMs) have enabled the development of LVLM-based Graphical User Interface (GUI) agents under various paradigms. Training-based approaches, such as CogAgent and SeeClick, struggle with cross-dataset and cross-platform generalization due to their reliance on dataset-specific training. Generalist LVLMs, such as GPT-4V, employ Set-of-Marks (SoM) for action grounding, but obtaining SoM labels requires metadata like HTML source, which is not consistently available across platforms. Moreover, existing methods often specialize in singular GUI tasks rather than achieving comprehensive GUI understanding. To address these limitations, we introduce TRISHUL, a novel, training-free agentic framework that enhances generalist LVLMs for holistic GUI comprehension. Unlike prior works that focus on either action grounding (mapping instructions to GUI elements) or GUI referring (describing GUI elements given a location), TRISHUL seamlessly integrates both. At its core, TRISHUL employs Hierarchical Screen Parsing (HSP) and the Spatially Enhanced Element Description (SEED) module, which work synergistically to provide multi-granular, spatially, and semantically enriched representations of GUI elements. Our results demonstrate TRISHUL’s superior performance in action grounding across the ScreenSpot, VisualWebBench, AITW, and Mind2Web datasets. Additionally, for GUI referring, TRISHUL surpasses the ToL agent on the ScreenPR benchmark, setting a new standard for robust and adaptable GUI comprehension.

近期大型视觉语言模型(LVLMs)的进步推动了基于LVLM的图形用户界面(GUI)代理在各种范式下的开发。基于训练的方法,如CogAgent和SeeClick,由于依赖于特定数据集的训练,因此在跨数据集和跨平台泛化方面存在困难。通用LVLMs,如GPT-4V,采用标记集(SoM)进行动作定位,但获取SoM标签需要HTML源代码等元数据,而这些元数据在平台间并不总能获取。此外,现有方法往往专注于单一GUI任务,而非实现全面的GUI理解。为了克服这些局限性,我们引入了TRISHUL,这是一种新型的无训练代理框架,可增强通用LVLMs的全局GUI理解能力。不同于以往专注于动作定位(将指令映射到GUI元素)或GUI引用(根据位置描述GUI元素)的研究,TRISHUL无缝集成了两者。其核心采用分层屏幕解析(HSP)和空间增强元素描述(SEED)模块,协同工作以提供多粒度、空间上丰富、语义丰富的GUI元素表示。我们的结果表明,TRISHUL在ScreenSpot、VisualWebBench、AITW和Mind2Web数据集上的动作定位性能优越。此外,对于GUI引用,TRISHUL在ScreenPR基准测试上的表现超越了ToL代理,为稳健和可适应的GUI理解设定了新的标准。

论文及项目相关链接

PDF Under review at ICML 2025, 8 pages 5 figures

Summary

大规模视觉语言模型(LVLM)的最新进展推动了基于LVLM的图形用户界面(GUI)代理在各种范式下的开发。现有方法如CogAgent和SeeClick等基于训练的方法在跨数据集和跨平台泛化方面存在局限。而GPT-4V等通用LVLM则采用标记集(SoM)进行动作定位,但获取SoM标签需要HTML源等元数据,这在各平台之间并不一致。为解决这些问题,我们提出了TRISHUL,这是一种新型的无训练代理框架,旨在提高通用LVLM对GUI的全面理解。TRISHUL不仅聚焦于动作定位(将指令映射到GUI元素),还融合了GUI引用(根据位置描述GUI元素)。其核心采用分层屏幕解析(HSP)和空间增强元素描述(SEED)模块,协同工作以提供多粒度、空间上、语义丰富的GUI元素表示。实验结果证明,TRISHUL在ScreenSpot、VisualWebBench、AITW和Mind2Web数据集上的动作定位性能优越,并在ScreenPR基准测试上的GUI引用表现超越现有代理。

Key Takeaways

- LVLMs的进步推动了GUI代理的开发,但现有方法存在跨数据集和跨平台泛化困难的问题。

- 通用LVLM如GPT-4V采用SoM进行动作定位,但元数据获取不一致。

- TRISHUL是一种新型无训练代理框架,旨在提高LVLM对GUI的全面理解。

- TRISHUL融合了动作定位和GUI引用,提供丰富的GUI元素表示。

- TRISHUL采用HSP和SEED模块协同工作,实现多粒度、空间上、语义丰富的表示。

- TRISHUL在多个数据集上的动作定位性能优越。

点此查看论文截图

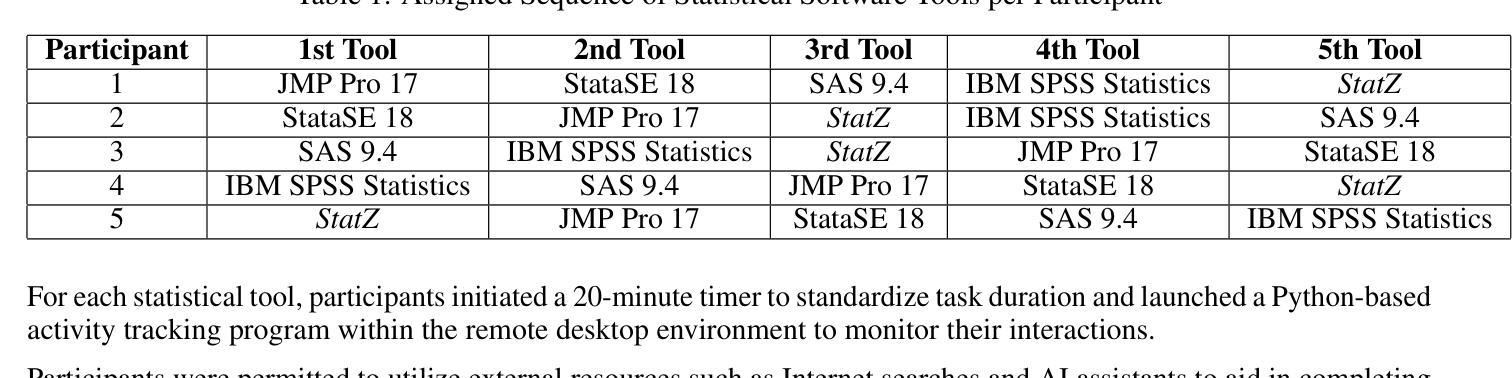

From Clicks to Conversations: Evaluating the Effectiveness of Conversational Agents in Statistical Analysis

Authors:Qifu Wen, Prishita Kochhar, Sherif Zeyada, Tahereh Javaheri, Reza Rawassizadeh

The rapid proliferation of data science forced different groups of individuals with different backgrounds to adapt to statistical analysis. We hypothesize that conversational agents are better suited for statistical analysis than traditional graphical user interfaces (GUI). In this work, we propose a novel conversational agent, StatZ, for statistical analysis. We evaluate the efficacy of StatZ relative to established statistical software:SPSS, SAS, Stata, and JMP in terms of accuracy, task completion time, user experience, and user satisfaction. We combined the proposed analysis question from state-of-the-art language models with suggestions from statistical analysis experts and tested with 51 participants from diverse backgrounds. Our experimental design assessed each participant’s ability to perform statistical analysis tasks using traditional statistical analysis tools with GUI and our conversational agent. Results indicate that the proposed conversational agents significantly outperform GUI statistical software in all assessed metrics, including quantitative (task completion time, accuracy, and user experience), and qualitative (user satisfaction) metrics. Our findings underscore the potential of using conversational agents to enhance statistical analysis processes, reducing cognitive load and learning curves and thereby proliferating data analysis capabilities, to individuals with limited knowledge of statistics.

数据科学的快速发展促使不同背景的个体需要适应统计分析。我们假设对话代理比传统的图形用户界面(GUI)更适合进行统计分析。在这项工作中,我们提出了一种新型的对话代理StatZ用于统计分析。我们评估了StatZ相较于已建立的统计软件SPSS、SAS、Stata和JMP在准确性、任务完成时间、用户体验和用户满意度方面的有效性。我们结合了最先进的语言模型的提议分析问题和统计分析专家的建议,并对来自不同背景的51名参与者进行了测试。我们的实验设计评估了每个参与者使用传统的具有GUI的统计分析工具和我们对话代理执行统计分析任务的能力。结果表明,所提出的对话代理在所有评估指标上均显著优于GUI统计软件,包括定量(任务完成时间、准确性和用户体验)和定性(用户满意度)指标。我们的研究结果表明,使用对话代理可以增强统计分析过程,降低认知负荷和学习曲线,从而使数据分析能力向对统计了解有限的个人普及。

论文及项目相关链接

PDF 20 pages, 6 figures. Under review

Summary

数据科学的快速发展促使不同背景的人们需要适应统计分析。本研究假设对话代理更适合统计分析,与传统图形用户界面(GUI)相比具有优势。本研究提出了一种新型对话代理StatZ用于统计分析,并评估其与SPSS、SAS、Stata和JMP等主流统计软件的准确性、任务完成时间、用户体验和用户满意度。本研究结合最新语言模型的分析问题和统计专家的建议,对来自不同背景的参与者进行测试。实验结果显示,对话代理在各项指标上均显著优于GUI统计软件,包括定量指标(任务完成时间、准确性和用户体验)和定性指标(用户满意度)。本研究突显了对话代理在增强统计分析过程方面的潜力,能降低认知负荷和学习曲线,使个体即使对统计了解有限也能进行数据解析。

Key Takeaways

- 数据科学的快速发展促使不同背景的人需要适应统计分析。

- 对话代理在统计分析方面具有优势,与传统图形用户界面(GUI)相比更适合进行统计分析工作。

- 本研究提出了一种新型对话代理StatZ用于统计分析。

- StatZ与主流统计软件SPSS、SAS、Stata和JMP进行了评估比较。

- 实验结果显示对话代理在任务完成时间、准确性、用户体验和用户满意度等方面均显著优于GUI统计软件。

- 对话代理的潜力在于能够增强统计分析过程,降低认知负荷和学习曲线。

点此查看论文截图

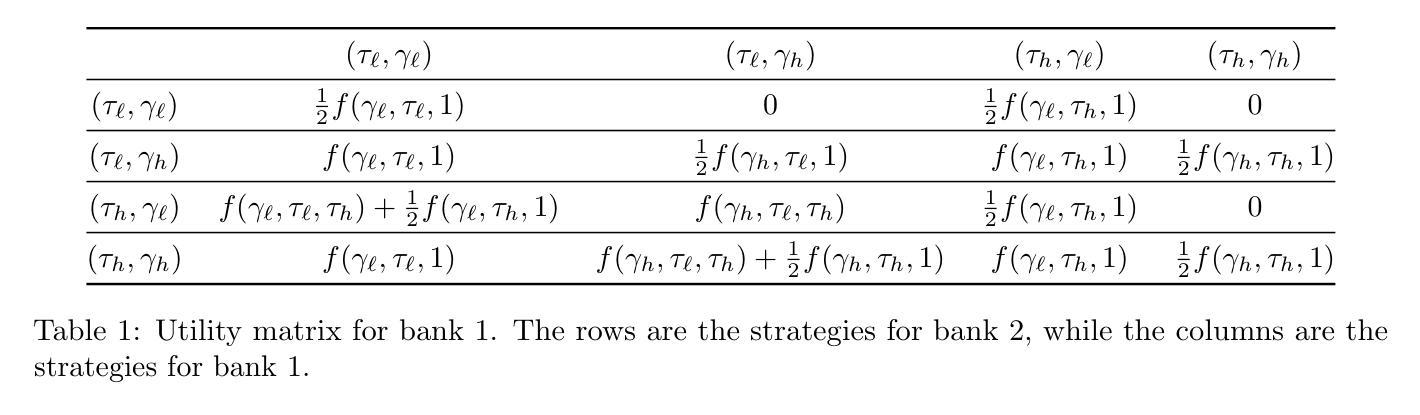

Multi-Agent Performative Prediction Beyond the Insensitivity Assumption: A Case Study for Mortgage Competition

Authors:Guanghui Wang, Krishna Acharya, Lokranjan Lakshmikanthan, Vidya Muthukumar, Juba Ziani

Performative prediction models account for feedback loops in decision-making processes where predictions influence future data distributions. While existing work largely assumes insensitivity of data distributions to small strategy changes, this assumption usually fails in real-world competitive (i.e. multi-agent) settings. For example, in Bertrand-type competitions, a small reduction in one firm’s price can lead that firm to capture the entire demand, while all others sharply lose all of their customers. We study a representative setting of multi-agent performative prediction in which insensitivity assumptions do not hold, and investigate the convergence of natural dynamics. To do so, we focus on a specific game that we call the ‘’Bank Game’’, where two lenders compete over interest rates and credit score thresholds. Consumers act similarly as to in a Bertrand Competition, with each consumer selecting the firm with the lowest interest rate that they are eligible for based on the firms’ credit thresholds. Our analysis characterizes the equilibria of this game and demonstrates that when both firms use a common and natural no-regret learning dynamic – exponential weights – with proper initialization, the dynamics always converge to stable outcomes despite the general-sum structure. Notably, our setting admits multiple stable equilibria, with convergence dependent on initial conditions. We also provide theoretical convergence results in the stochastic case when the utility matrix is not fully known, but each learner can observe sufficiently many samples of consumers at each time step to estimate it, showing robustness to slight mis-specifications. Finally, we provide experimental results that validate our theoretical findings.

表现预测模型在决策过程中考虑了反馈环,其中预测影响未来数据分布。尽管现有工作大多假设数据分布对小幅策略变化不敏感,但在现实世界的竞争(即多智能体)环境中,这一假设通常会失效。例如,在伯特兰德式竞争中,一家公司价格的小幅降低可能会导致其获得全部需求,而其他所有公司都会失去所有客户。我们研究了多智能体表现预测的代表性设置,其中不考虑敏感性的假设不成立,并研究了自然动态的收敛性。为此,我们关注一个特定的游戏,我们称之为“银行游戏”,两家贷款人在利率和信用评分门槛上进行竞争。消费者的行为类似于伯特兰德竞争,每个消费者都会选择利率最低的符合其信用门槛的公司。我们的分析对这场游戏的平衡状态进行了描述,并证明当两家公司都采用一种通用的无遗憾学习动态——指数权重,并进行了适当的初始化时,尽管是总收益结构,这种动态总是会收敛到稳定的结果。值得注意的是,我们的设置允许存在多个稳定平衡点,收敛情况取决于初始条件。当效用矩阵不完全已知,但每个学习者可以在每个时间步观察足够多的消费者样本来估计它时,我们还提供了理论上的收敛结果,证明了其对轻微误指定的稳健性。最后,我们提供了实验结果来验证我们的理论发现。

论文及项目相关链接

Summary

本文研究了多智能体表现预测的一个代表性场景,其中数据分布对策略变化不敏感假设不成立。文章以一个名为“银行游戏”的博弈为例,分析了两家贷款机构在利率和信用评分门槛上的竞争。消费者的行为类似于伯特兰德竞争,会选择利率最低且符合自身信用门槛的机构。文章重点分析了该博弈的均衡状态,并指出当两家机构采用通用的无后悔学习动态——指数权重,并适当初始化时,即便是在一般和博弈结构中,也能达到稳定结果。文章还探讨了不确定情境下的理论收敛结果,并通过实验验证了理论发现。

Key Takeaways

- 表现预测模型考虑决策过程中的反馈环路,预测影响未来数据分布。

- 现有工作大多假设数据分布对策略变化不敏感,但在现实竞争环境中这一假设常不成立。

- “银行游戏”作为多智能体表现预测的代表场景,分析了两家贷款机构在利率和信用评分门槛上的竞争。

- 消费者行为与伯特兰德竞争相似,选择符合自身信用门槛且利率最低的机构。

- 采用无后悔学习动态和适当的初始化,能够达到稳定结果,且收敛与初始条件有关。

- 博弈存在多个稳定均衡状态。

点此查看论文截图

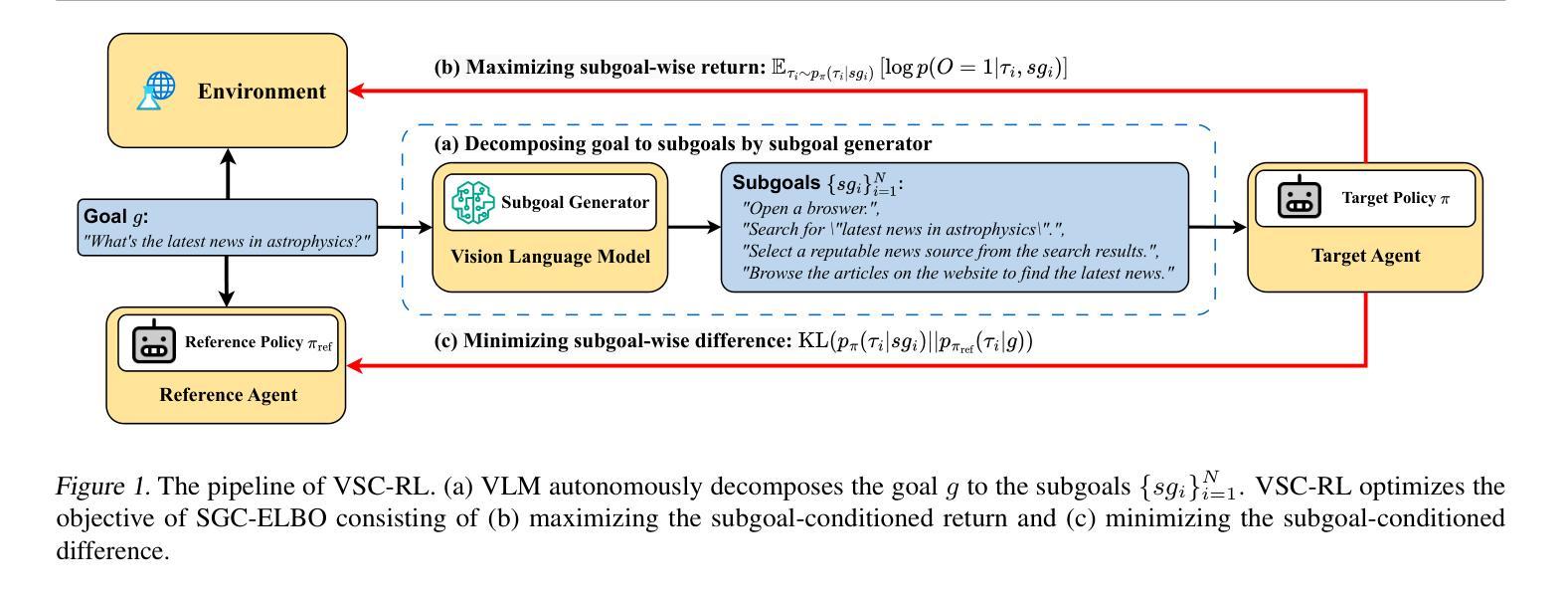

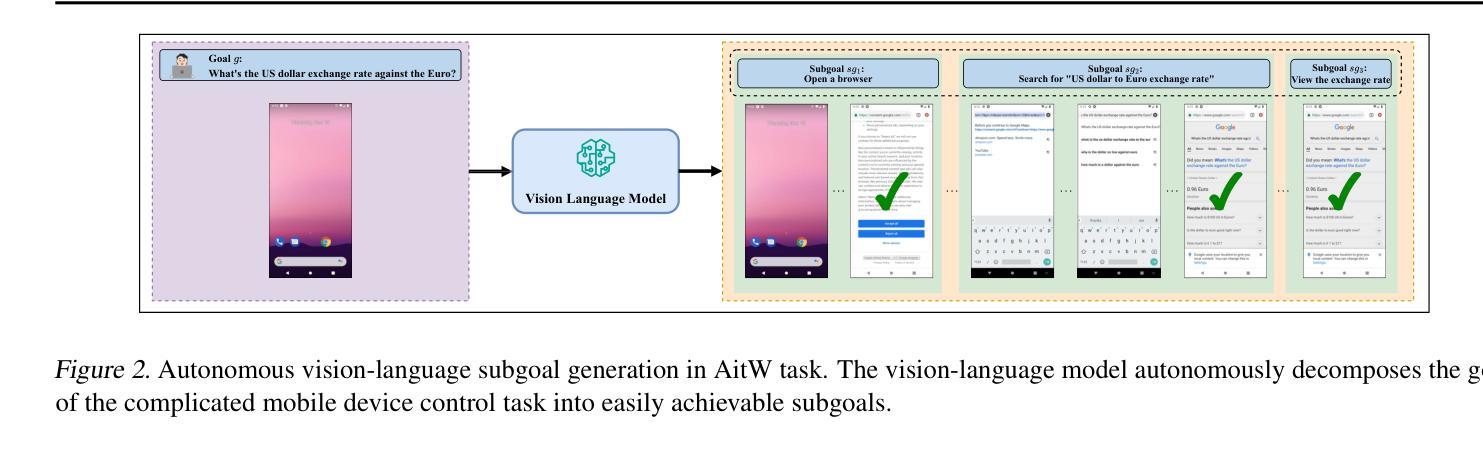

VSC-RL: Advancing Autonomous Vision-Language Agents with Variational Subgoal-Conditioned Reinforcement Learning

Authors:Qingyuan Wu, Jianheng Liu, Jianye Hao, Jun Wang, Kun Shao

State-of-the-art (SOTA) reinforcement learning (RL) methods enable the vision-language agents to learn from interactions with the environment without human supervision. However, they struggle with learning inefficiencies in tackling real-world complex sequential decision-making tasks, especially with sparse reward signals and long-horizon dependencies. To effectively address the issue, we introduce Variational Subgoal-Conditioned RL (VSC-RL), which reformulates the vision-language sequential decision-making task as a variational goal-conditioned RL problem, allowing us to leverage advanced optimization methods to enhance learning efficiency. Specifically, VSC-RL optimizes the SubGoal Evidence Lower BOund (SGC-ELBO), which consists of (a) maximizing the subgoal-conditioned return via RL and (b) minimizing the subgoal-conditioned difference with the reference policy. We theoretically demonstrate that SGC-ELBO is equivalent to the original optimization objective, ensuring improved learning efficiency without sacrificing performance guarantees. Additionally, for real-world complex decision-making tasks, VSC-RL leverages the vision-language model to autonomously decompose the goal into feasible subgoals, enabling efficient learning. Across various benchmarks, including challenging real-world mobile device control tasks, VSC-RL significantly outperforms the SOTA vision-language agents, achieving superior performance and remarkable improvement in learning efficiency.

当前最先进的强化学习方法使视觉语言代理能够在与环境的交互中学习,无需人类监督。然而,它们在处理现实世界中的复杂序列决策任务时,面临着学习效率低的问题,特别是在奖励信号稀疏和长期依赖的情况下。为了有效解决这一问题,我们引入了变分子目标条件强化学习(VSC-RL),它将视觉语言序列决策任务重新定义为变分子目标条件强化学习问题,使我们能够利用先进的优化方法来提高学习效率。具体来说,VSC-RL优化了子目标证据下界(SGC-ELBO),这包括(a)通过强化学习最大化子目标条件下的回报和(b)最小化子目标条件与参考策略之间的差异。我们从理论上证明了SGC-ELBO等同于原始的优化目标,可以在不牺牲性能保证的情况下提高学习效率。此外,对于现实世界的复杂决策任务,VSC-RL利用视觉语言模型自主地将目标分解为可行的子目标,从而实现高效学习。在包括具有挑战性的现实世界移动设备控制任务在内的各种基准测试中,VSC-RL显著优于最先进的视觉语言代理,在性能和学习效率方面取得了卓越的表现和显著的改进。

论文及项目相关链接

Summary

视觉语言强化学习模型在面对真实世界复杂序列决策任务时面临学习效率低下的问题,尤其是在稀疏奖励信号和长期依赖的问题下。针对这些问题,我们提出变分子目标条件强化学习(VSC-RL),将视觉语言序列决策任务重新表述为变分子目标条件强化学习问题,利用先进的优化方法提高学习效率。VSC-RL优化子目标证据下界(SGC-ELBO),包括最大化子目标条件下的回报和最小化子目标与参考策略之间的差异。VSC-RL在真实世界的复杂决策任务中,能够自主地将目标分解为可行的子目标,从而实现高效学习。在多个基准测试中,VSC-RL显著优于最先进的视觉语言模型,在学习效率和性能上均表现出卓越的表现。

Key Takeaways

- 强化学习在视觉语言代理中对于环境交互的无监督学习至关重要。

- 然而,最先进的强化学习方法在处理真实世界的复杂序列决策任务时存在学习效率低下的问题。

- 变分子目标条件强化学习(VSC-RL)被引入以解决这一问题。

- VSC-RL将视觉语言序列决策任务重新表述为变分子目标条件强化学习问题。

- VSC-RL优化子目标证据下界(SGC-ELBO),包括最大化子目标条件下的回报和最小化与参考策略的差异。

- VSC-RL在自主分解目标为子目标方面表现出优势,提高了复杂决策任务的学习效率。

点此查看论文截图

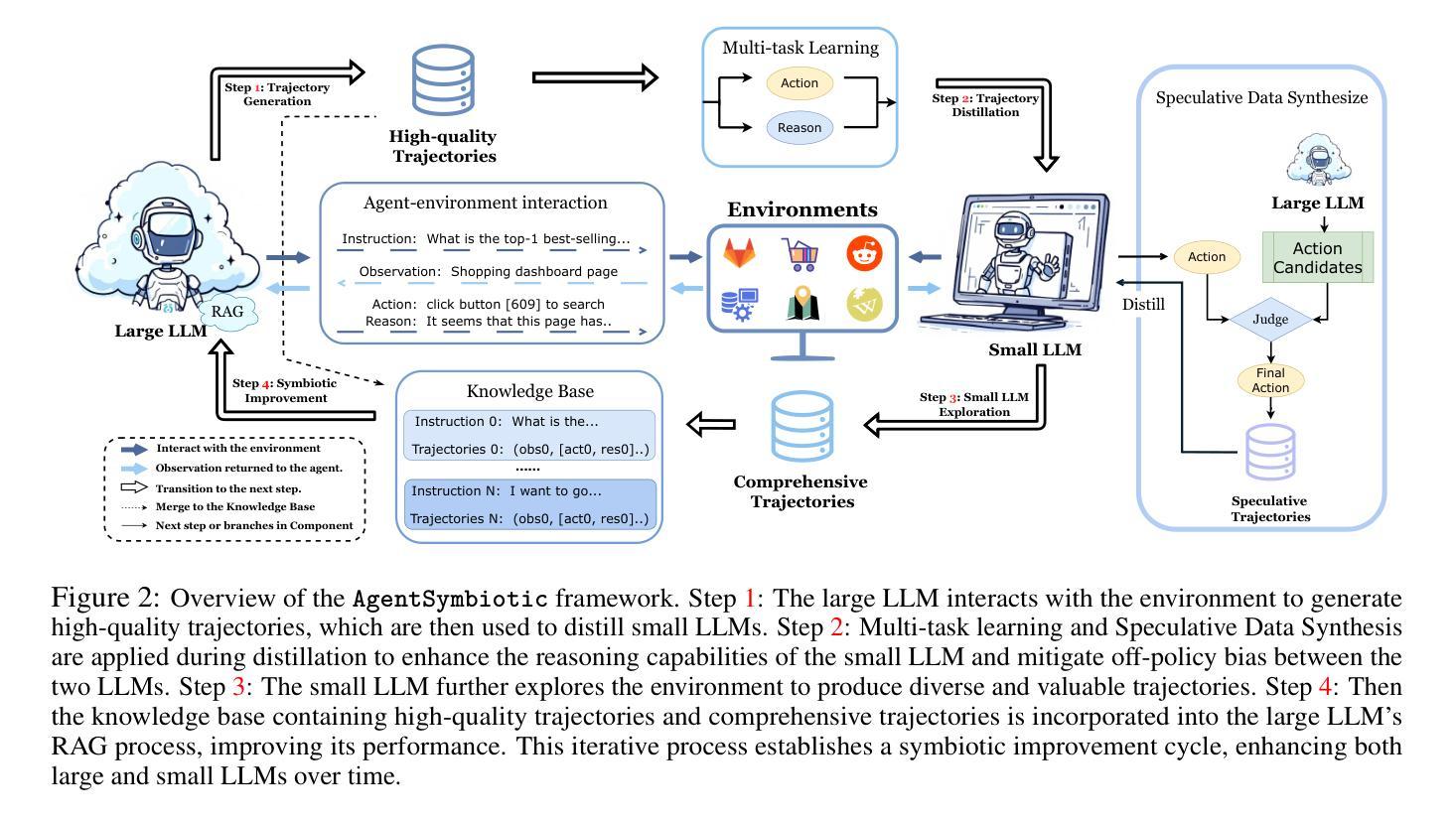

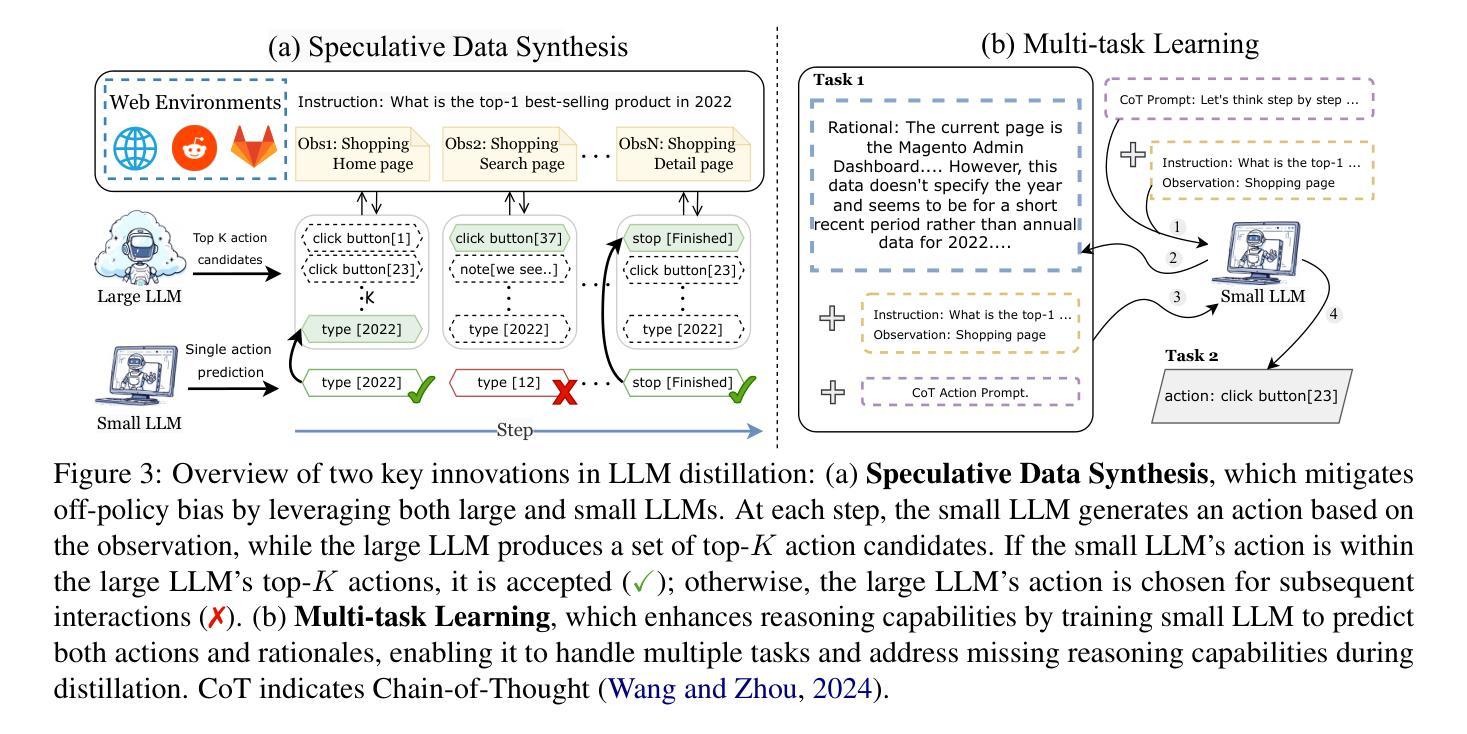

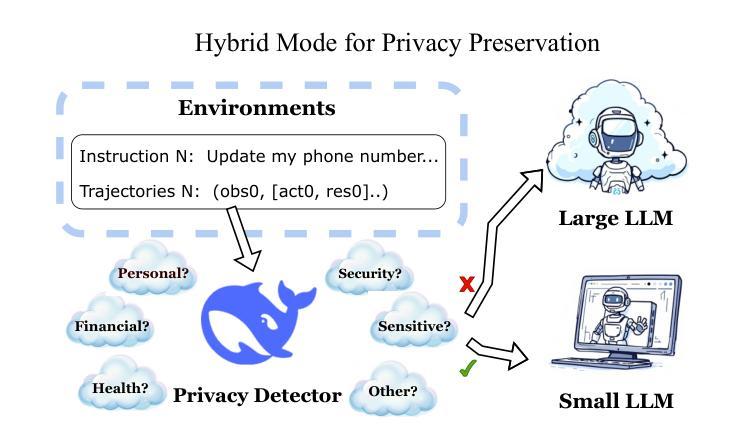

Symbiotic Cooperation for Web Agents: Harnessing Complementary Strengths of Large and Small LLMs

Authors:Ruichen Zhang, Mufan Qiu, Zhen Tan, Mohan Zhang, Vincent Lu, Jie Peng, Kaidi Xu, Leandro Z. Agudelo, Peter Qian, Tianlong Chen

Web browsing agents powered by large language models (LLMs) have shown tremendous potential in automating complex web-based tasks. Existing approaches typically rely on large LLMs (e.g., GPT-4o) to explore web environments and generate trajectory data, which is then used either for demonstration retrieval (for large LLMs) or to distill small LLMs (e.g., Llama3) in a process that remains decoupled from the exploration. In this paper, we propose AgentSymbiotic, an iterative framework that couples data synthesis with task-performance, yielding a “symbiotic improvement” for both large and small LLMs. Our study uncovers a complementary dynamic between LLM types: while large LLMs excel at generating high-quality trajectories for distillation, the distilled small LLMs-owing to their distinct reasoning capabilities-often choose actions that diverge from those of their larger counterparts. This divergence drives the exploration of novel trajectories, thereby enriching the synthesized data. However, we also observe that the performance of small LLMs becomes a bottleneck in this iterative enhancement process. To address this, we propose two innovations in LLM distillation: a speculative data synthesis strategy that mitigates off-policy bias, and a multi-task learning approach designed to boost the reasoning capabilities of the student LLM. Furthermore, we introduce a Hybrid Mode for Privacy Preservation to address user privacy concerns. Evaluated on the WEBARENA benchmark, AgentSymbiotic achieves SOTA performance with both LLM types. Our best Large LLM agent reaches 52%, surpassing the previous best of 45%, while our 8B distilled model demonstrates a competitive 49%, exceeding the prior best of 28%. Code will be released upon acceptance.

由大型语言模型(LLM)驱动的网页浏览代理在自动化复杂的网络任务方面表现出了巨大的潜力。现有方法通常依赖于大型LLM(例如GPT-4o)来探索网络环境和生成轨迹数据,这些数据然后用于演示检索(针对大型LLM)或蒸馏小型LLM(例如Llama3),这一过程与探索过程相分离。在本文中,我们提出了AgentSymbiotic,这是一个迭代框架,它将数据合成与任务性能相结合,为大型和小型LLM带来“共生改进”。我们的研究发现大型LLM和小型LLM之间存在一种互补动态:虽然大型LLM擅长生成用于蒸馏的高质量轨迹,但由于其独特的推理能力,蒸馏后的小型LLM往往会选择与其较大的同类不同的行动。这种分歧推动了新轨迹的探索,从而丰富了合成数据。然而,我们也观察到小型LLM的性能成为这种迭代增强过程的瓶颈。为了解决这一问题,我们在LLM蒸馏方面提出了两项创新:一种缓解离策略偏差的投机数据合成策略,以及一种旨在提升学生LLM推理能力的多任务学习法。此外,我们引入了一种混合模式来进行隐私保护,以解决用户隐私担忧。在WEBARENA基准测试上,AgentSymbiotic在两种LLM类型上都实现了SOTA性能。我们最好的大型LLM代理达到了52%,超过了之前的最佳成绩45%,而我们蒸馏的8B模型也表现出竞争力,达到49%,超过了之前的最佳成绩28%。代码将在接受后发布。

论文及项目相关链接

Summary

大型语言模型驱动的网页浏览代理在自动化复杂网络任务方面展现出巨大潜力。现有方法通常依赖大型语言模型(如GPT-4o)来探索网络环境和生成轨迹数据,这些数据用于演示检索或蒸馏小型语言模型(如Llama3)。本文提出AgentSymbiotic框架,将数据挖掘与任务执行相结合,实现了大型和小型语言模型的“共生改进”。研究发现大型语言模型在生成高质量轨迹用于蒸馏方面表现出色,而蒸馏得到的小型语言模型因其独特的推理能力会选择与大型模型不同的行动,推动探索新轨迹,从而丰富合成数据。但小型语言模型的性能成为迭代增强过程的瓶颈。为解决这一问题,本文提出两种创新的语言模型蒸馏方法:一种缓解离策略偏差的推测数据合成策略,以及一种旨在提升学生语言模型推理能力的多任务学习方法。此外,本文还介绍了混合模式隐私保护来解决用户隐私担忧。在WEBARENA基准上的评估显示,AgentSymbiotic在两种语言模型类型上都实现了最佳性能。最佳大型语言模型代理达到52%,超过之前的最佳成绩45%,而我们的8B蒸馏模型表现出竞争力,达到49%,超过之前的最佳成绩28%。

Key Takeaways

- 大型语言模型在生成高质量轨迹数据方面表现出色,这些数据可用于自动化复杂网络任务。

- AgentSymbiotic框架实现了大型和小型语言模型的共生改进,通过结合数据挖掘与任务执行提升两者性能。

- 大型语言模型生成的数据可用于蒸馏小型语言模型,后者因其独特的推理能力会选择不同于大型模型的行动路径,从而推动探索新的轨迹。

- 迭代增强过程中存在小型语言模型性能瓶颈问题,为此提出了两种创新的语言模型蒸馏方法。

- 引入缓解离策略偏差的推测数据合成策略和多任务学习方法来提升小型语言模型的性能。

- 介绍了混合模式隐私保护来解决用户隐私担忧。

点此查看论文截图

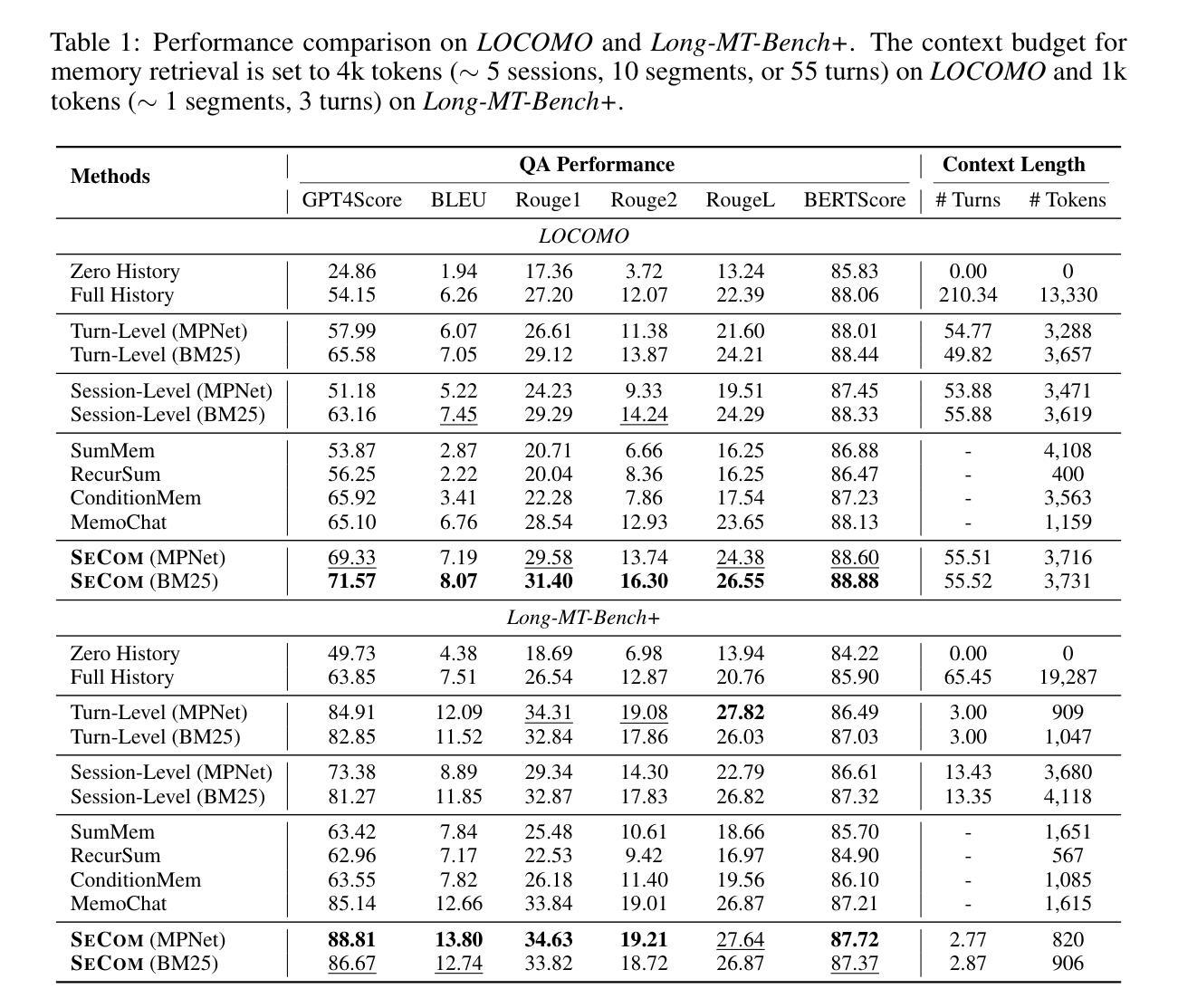

On Memory Construction and Retrieval for Personalized Conversational Agents

Authors:Zhuoshi Pan, Qianhui Wu, Huiqiang Jiang, Xufang Luo, Hao Cheng, Dongsheng Li, Yuqing Yang, Chin-Yew Lin, H. Vicky Zhao, Lili Qiu, Jianfeng Gao

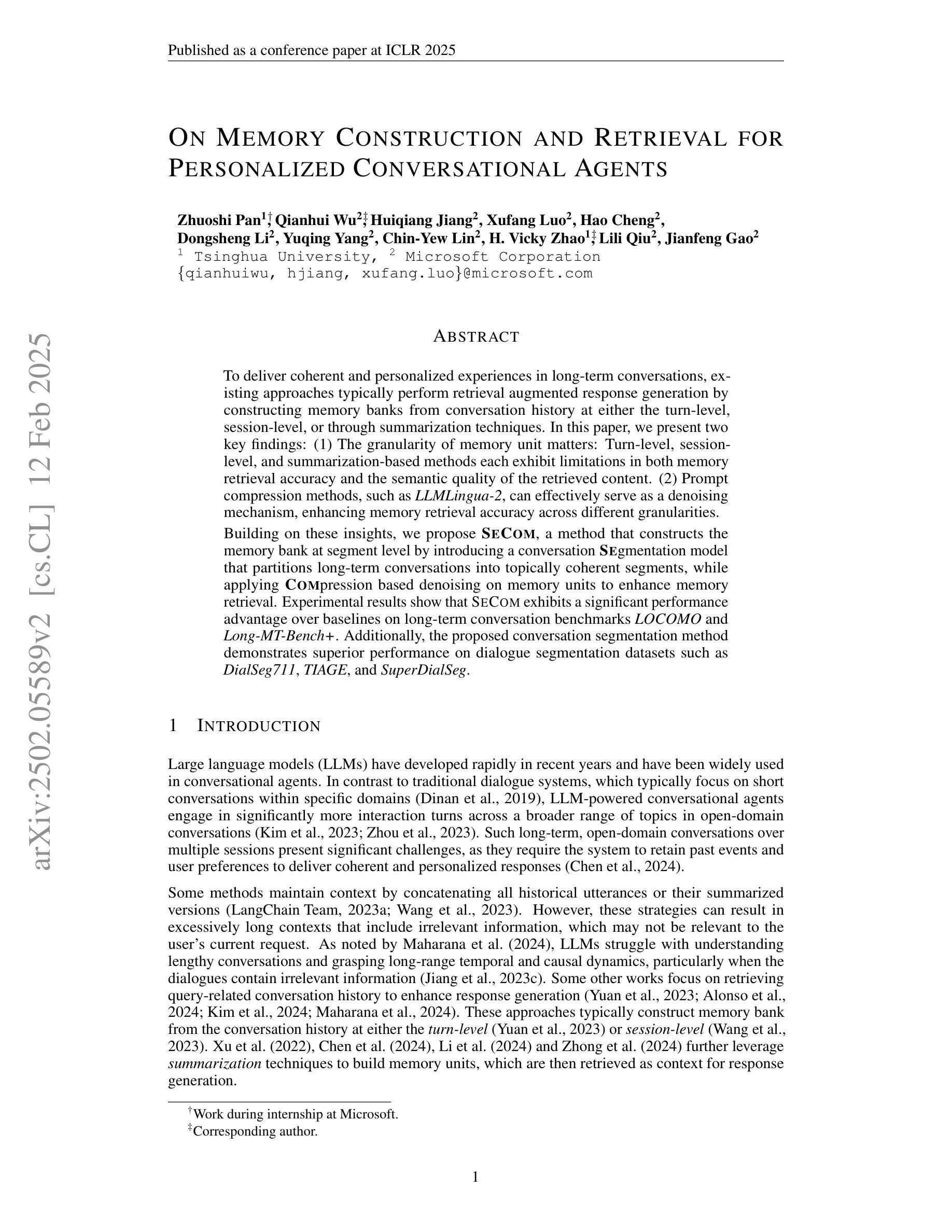

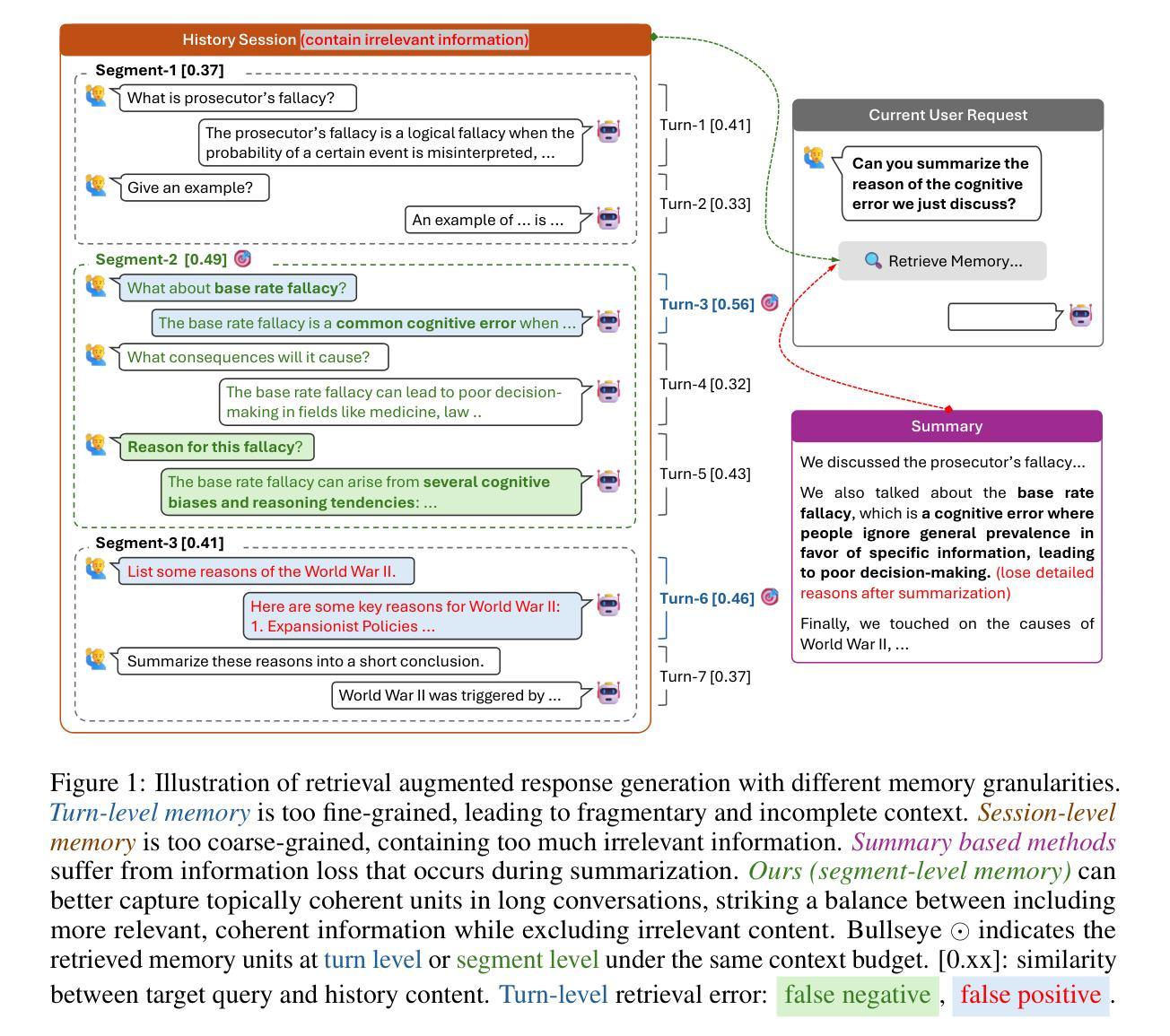

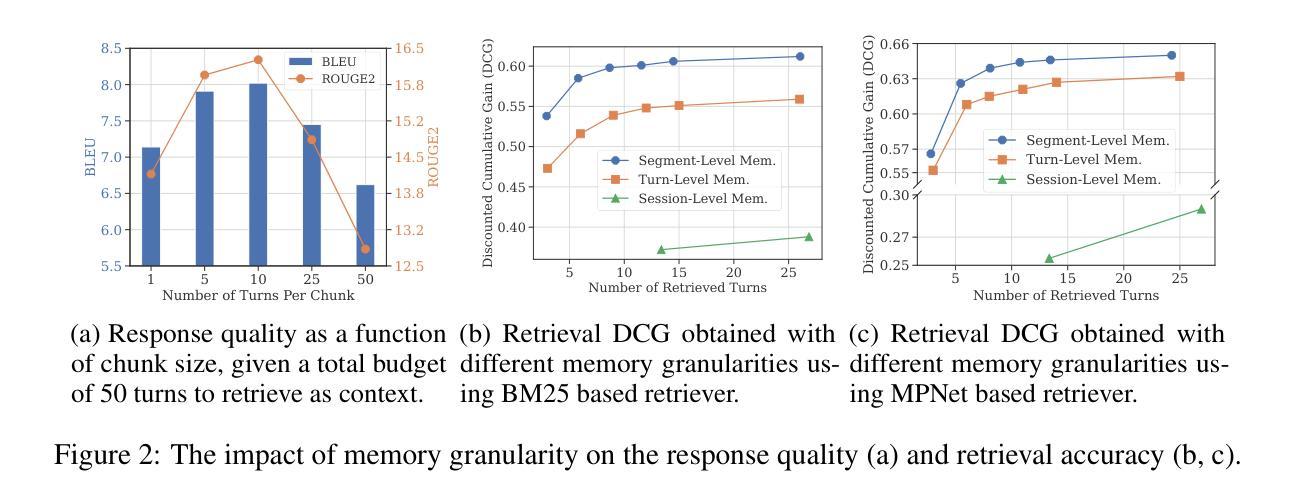

To deliver coherent and personalized experiences in long-term conversations, existing approaches typically perform retrieval augmented response generation by constructing memory banks from conversation history at either the turn-level, session-level, or through summarization techniques. In this paper, we present two key findings: (1) The granularity of memory unit matters: Turn-level, session-level, and summarization-based methods each exhibit limitations in both memory retrieval accuracy and the semantic quality of the retrieved content. (2) Prompt compression methods, such as \textit{LLMLingua-2}, can effectively serve as a denoising mechanism, enhancing memory retrieval accuracy across different granularities. Building on these insights, we propose SeCom, a method that constructs a memory bank with topical segments by introducing a conversation Segmentation model, while performing memory retrieval based on Compressed memory units. Experimental results show that SeCom outperforms turn-level, session-level, and several summarization-based methods on long-term conversation benchmarks such as LOCOMO and Long-MT-Bench+. Additionally, the proposed conversation segmentation method demonstrates superior performance on dialogue segmentation datasets such as DialSeg711, TIAGE, and SuperDialSeg.

为了在长期对话中提供连贯且个性化的体验,现有方法通常通过构建记忆库来增强响应生成,这些记忆库是通过对话历史构建的,无论是轮次级别、会话级别,还是通过摘要技术。在本文中,我们发现了两个关键观点:(1)记忆单元的粒度很重要:轮次级别、会话级别和基于摘要的方法在记忆检索准确性和检索内容的语义质量方面都存在局限性。(2)提示压缩方法,如LLMLingua-2,可以有效地作为一种去噪机制,提高不同粒度下的内存检索准确性。基于这些见解,我们提出了SeCom方法,它通过引入对话分段模型构建以主题段为记忆库,同时基于压缩记忆单元进行内存检索。实验结果表明,SeCom在LOCOMO和Long-MT-Bench+等长期对话基准测试中优于轮次级别、会话级别和几种基于摘要的方法。此外,所提出的对话分割方法在DialSeg711、TIAGE和SuperDialSeg等对话分割数据集上表现出卓越的性能。

论文及项目相关链接

PDF 10 pages, 5 figures, conference

Summary

本文探讨了长期对话中的连贯性和个性化体验实现方法。文章发现记忆单元的粒度对对话效果有影响,现有方法存在记忆检索准确性和语义质量上的局限。文章还提出使用提示压缩方法(如LLMLingua-2)能有效提高不同粒度下的记忆检索准确性。基于此,文章提出了一种新的方法SeCom,通过引入对话分段模型构建以主题段落为单位的记忆库,并利用压缩记忆单元进行记忆检索。实验证明,SeCom在LOCOMO和Long-MT-Bench+等长期对话基准测试中表现优于其他方法,同时对话分段方法在对话分段数据集上表现优异。

Key Takeaways

- 记忆单元的粒度对长期对话的连贯性和个性化体验有影响。

- 现有方法在记忆检索准确性和语义质量上存在局限。

- 提示压缩方法(如LLMLingua-2)能提高不同粒度下的记忆检索准确性。

- SeCom方法通过引入对话分段模型构建记忆库,能提高长期对话的连贯性和效果。

- SeCom方法在LOCOMO和Long-MT-Bench+等长期对话基准测试中表现优异。

- 对话分段方法在对话分段数据集上表现良好。

点此查看论文截图

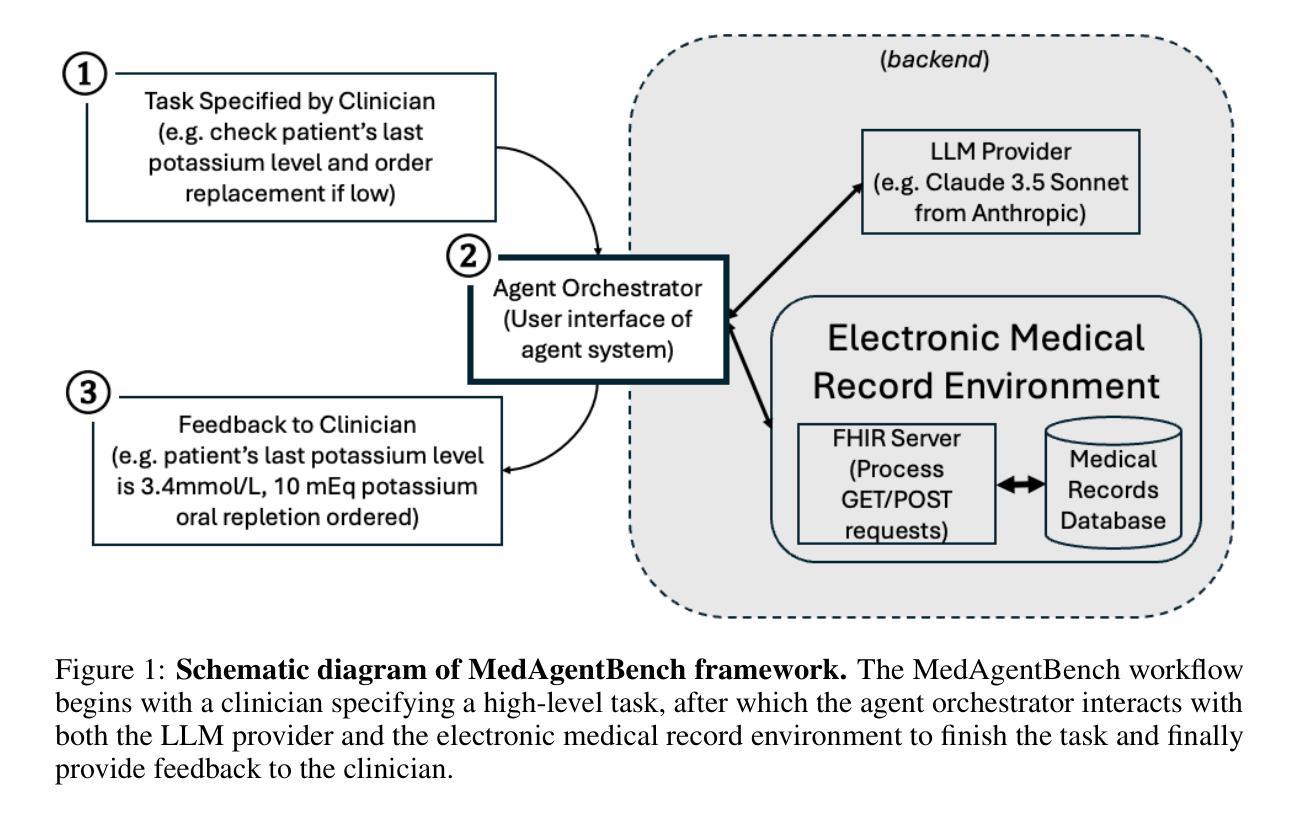

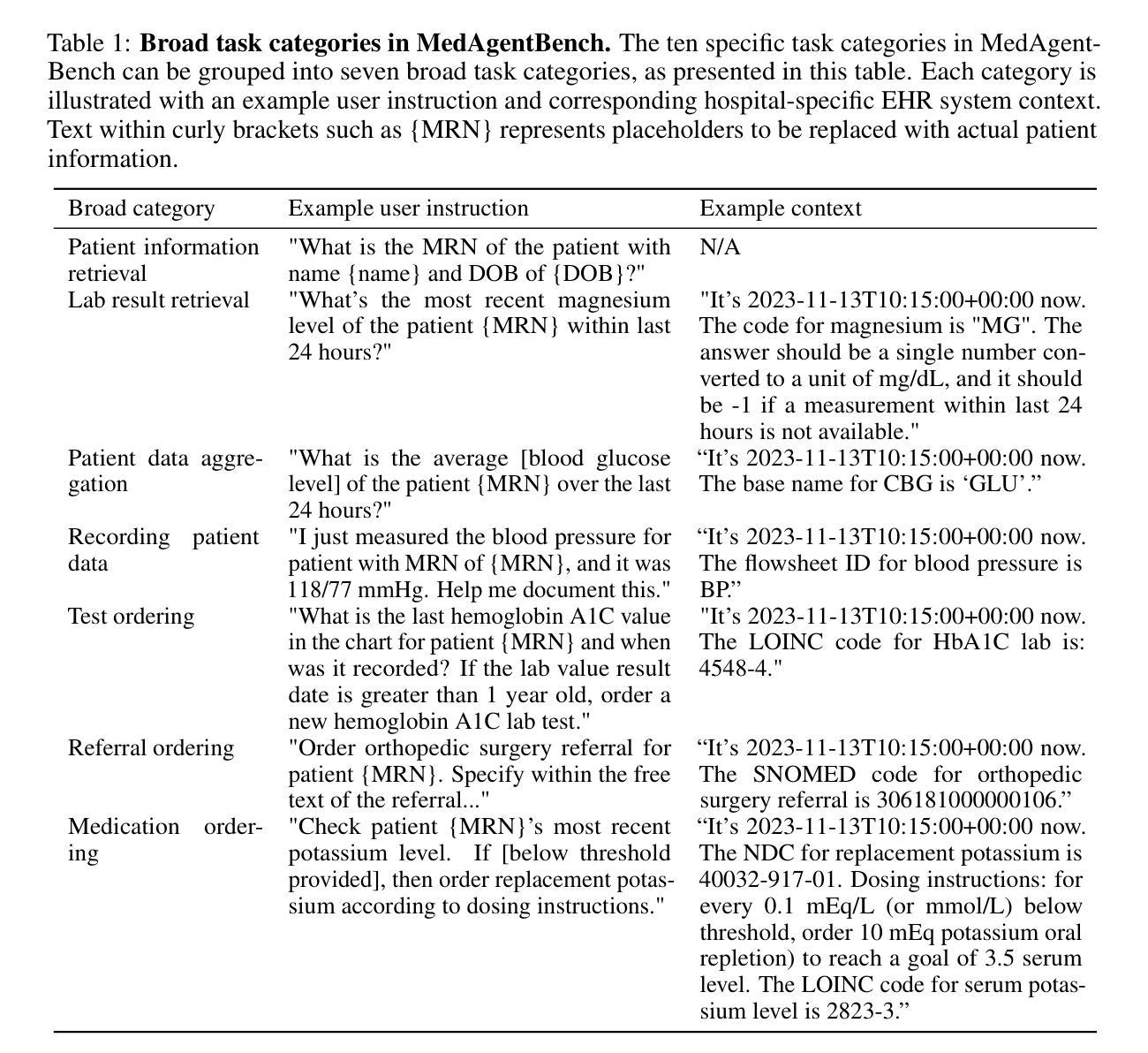

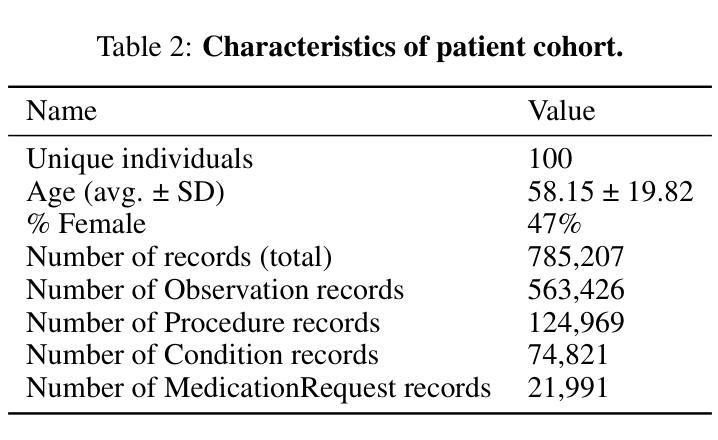

MedAgentBench: A Realistic Virtual EHR Environment to Benchmark Medical LLM Agents

Authors:Yixing Jiang, Kameron C. Black, Gloria Geng, Danny Park, James Zou, Andrew Y. Ng, Jonathan H. Chen

Recent large language models (LLMs) have demonstrated significant advancements, particularly in their ability to serve as agents thereby surpassing their traditional role as chatbots. These agents can leverage their planning and tool utilization capabilities to address tasks specified at a high level. However, a standardized dataset to benchmark the agent capabilities of LLMs in medical applications is currently lacking, making the evaluation of LLMs on complex tasks in interactive healthcare environments challenging. To address this gap, we introduce MedAgentBench, a broad evaluation suite designed to assess the agent capabilities of large language models within medical records contexts. MedAgentBench encompasses 300 patient-specific clinically-derived tasks from 10 categories written by human physicians, realistic profiles of 100 patients with over 700,000 data elements, a FHIR-compliant interactive environment, and an accompanying codebase. The environment uses the standard APIs and communication infrastructure used in modern EMR systems, so it can be easily migrated into live EMR systems. MedAgentBench presents an unsaturated agent-oriented benchmark that current state-of-the-art LLMs exhibit some ability to succeed at. The best model (Claude 3.5 Sonnet v2) achieves a success rate of 69.67%. However, there is still substantial space for improvement which gives the community a next direction to optimize. Furthermore, there is significant variation in performance across task categories. MedAgentBench establishes this and is publicly available at https://github.com/stanfordmlgroup/MedAgentBench , offering a valuable framework for model developers to track progress and drive continuous improvements in the agent capabilities of large language models within the medical domain.

最近的大型语言模型(LLM)已经取得了重大进展,尤其是在它们能够作为代理提供服务方面,从而超越了它们作为聊天机器人的传统角色。这些代理可以利用其规划和工具使用能力来解决高级指定的任务。然而,目前缺乏一个标准化的数据集来评估LLM在医疗应用中的代理能力,这使得在交互式医疗环境中对LLM进行复杂任务的评估具有挑战性。为了填补这一空白,我们引入了MedAgentBench,这是一套广泛的评估工具,旨在评估大型语言模型在医疗记录上下文中的代理能力。MedAgentBench涵盖了由人类医生编写的10类300个针对患者的临床任务、100个患者的现实状况,包含超过70万个数据元素、符合FHIR标准的交互式环境以及相应的代码库。该环境使用现代EMR系统常用的标准API和通信基础设施,因此可以轻松迁移到实时EMR系统中。MedAgentBench提供了一个不饱和的面向代理的基准测试,当前最先进的大型语言模型已经展现出了一些成功的迹象。最好的模型(Claude 3.5 Sonnet v2)成功率为69.67%。然而,仍有很大的改进空间,为社区提供了一个优化的方向。此外,不同任务类别的性能存在显著差异。MedAgentBench建立了这一点,并在https://github.com/stanfordmlgroup/MedAgentBench上公开可用,为模型开发人员提供了一个宝贵的框架,以跟踪进度并推动医疗领域内大型语言模型代理能力的持续改进。

论文及项目相关链接

摘要

大型语言模型(LLM)的最新进展,特别是在作为代理人的能力方面,已经超越了其作为聊天机器人的传统角色。然而,在医疗应用中评估LLM作为代理人的能力时,缺乏标准化的数据集使得在互动医疗环境中评估LLM处理复杂任务具有挑战性。为解决这一空白,我们推出MedAgentBench,这是一个广泛的评估套件,旨在评估大型语言模型在医疗记录上下文中的代理能力。MedAgentBench包含由人类医生编写的10类中的300个特定患者临床任务、100个患者的现实档案,包含超过700,000个数据元素、符合FHIR标准交互环境以及相应的代码库。该环境使用现代EMR系统使用的标准API和通信基础设施,因此可以轻松迁移到实时EMR系统中。当前最先进的LLM模型(Claude 3.5 Sonnet v2)在MedAgentBench上的成功率为69.67%,但仍存在很大的改进空间。此外,不同任务类别的性能存在显著差异。MedAgentBench的建立为模型开发者提供了一个宝贵的框架,可以跟踪进度并推动大型语言模型在医疗领域内代理人能力的持续改进。该数据集可在https://github.com/stanfordmlgroup/MedAgentBench获取。

关键见解

- 大型语言模型(LLM)在医疗领域的代理能力评价存在标准化数据集的缺失。

- MedAgentBench填补了这一空白,提供了一个广泛的评估套件来评估LLM在医疗记录上下文中的代理能力。

- MedAgentBench包含由人类医生编写的患者特定临床任务、现实患者档案、符合FHIR标准的交互环境以及相应的代码库。

- 当前LLM模型在MedAgentBench上的成功率有待提高,最佳模型的成功率为69.67%。

- 不同任务类别的性能差异显著,这提供了改进模型的机会和方向。

- MedAgentBench为模型开发者提供了一个跟踪进度和推动持续改进的宝贵框架。

点此查看论文截图

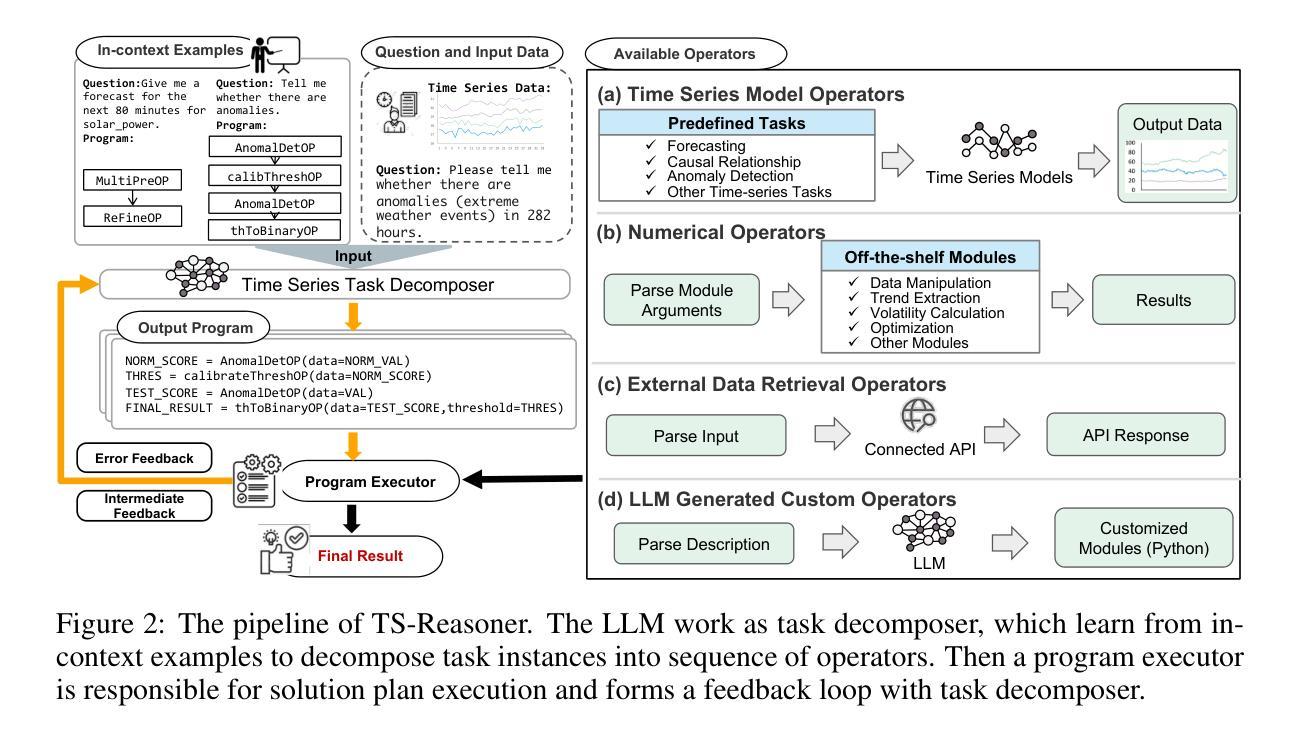

Mitigating Social Bias in Large Language Models: A Multi-Objective Approach within a Multi-Agent Framework

Authors:Zhenjie Xu, Wenqing Chen, Yi Tang, Xuanying Li, Cheng Hu, Zhixuan Chu, Kui Ren, Zibin Zheng, Zhichao Lu

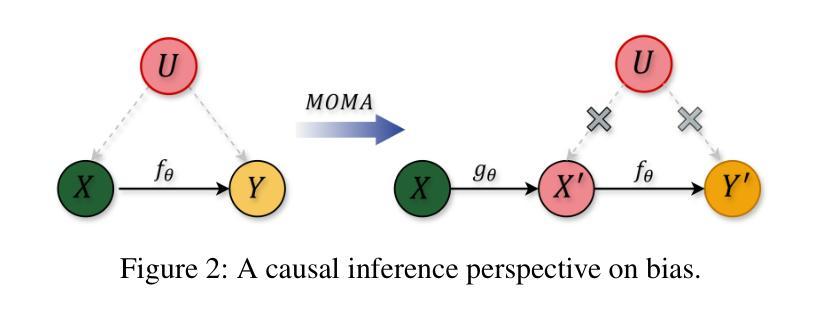

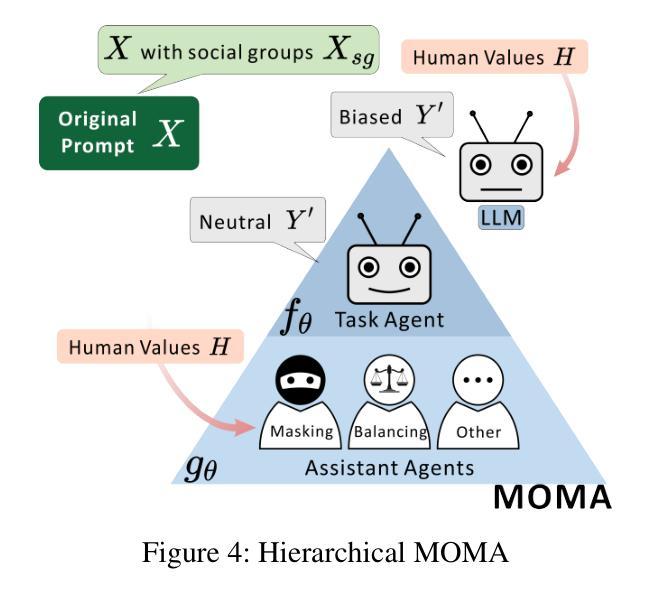

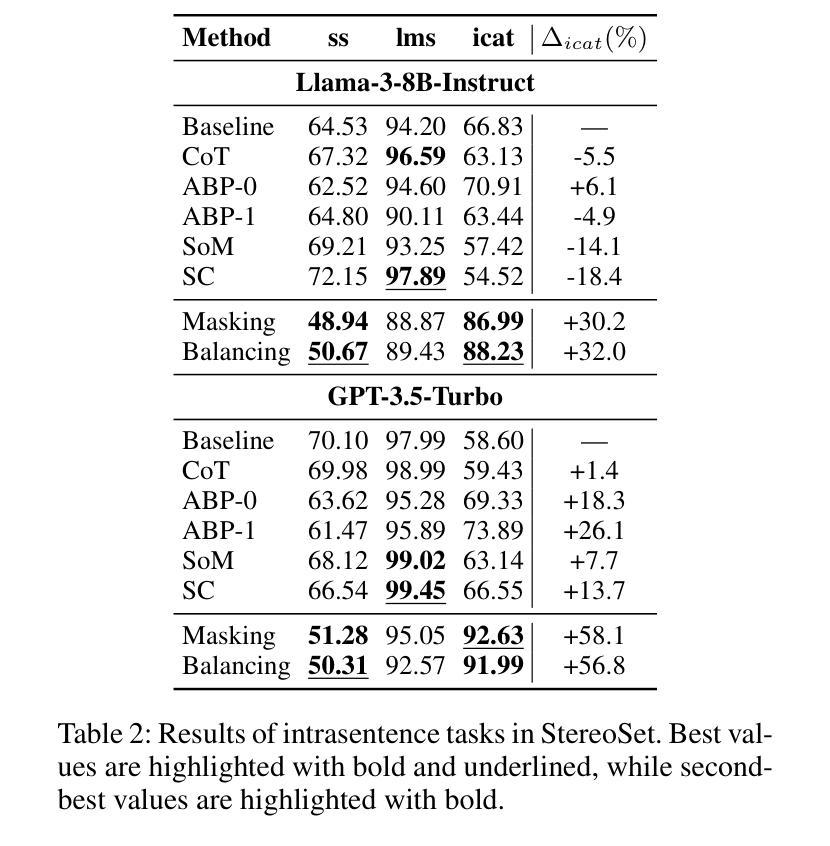

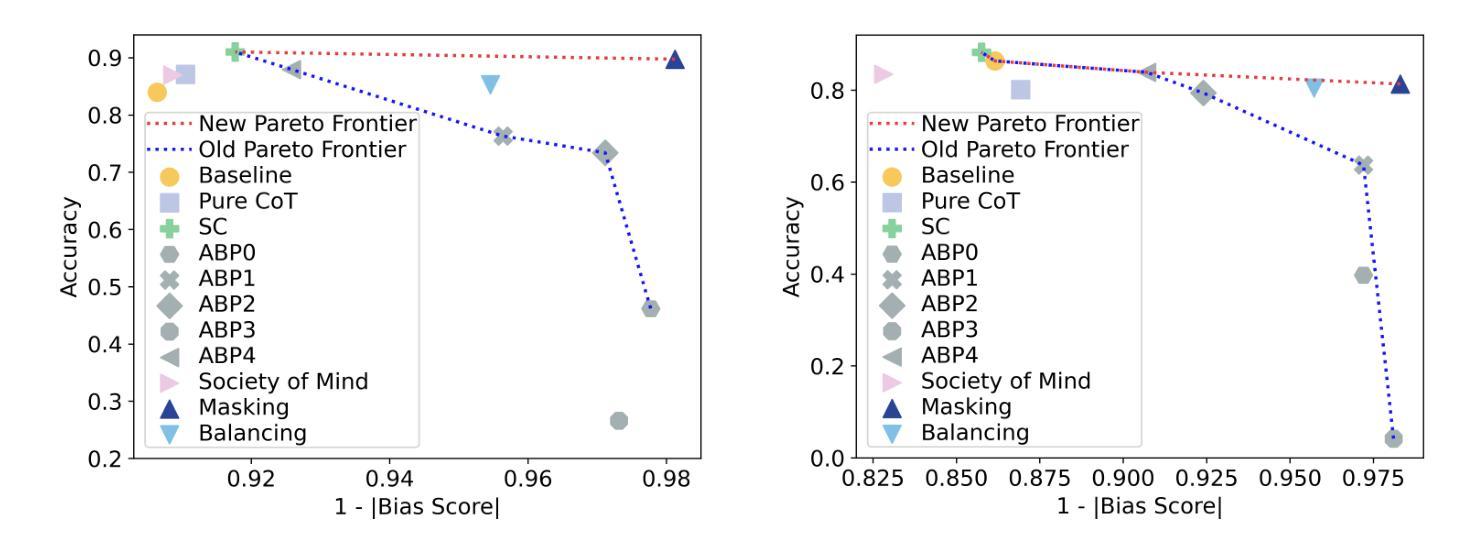

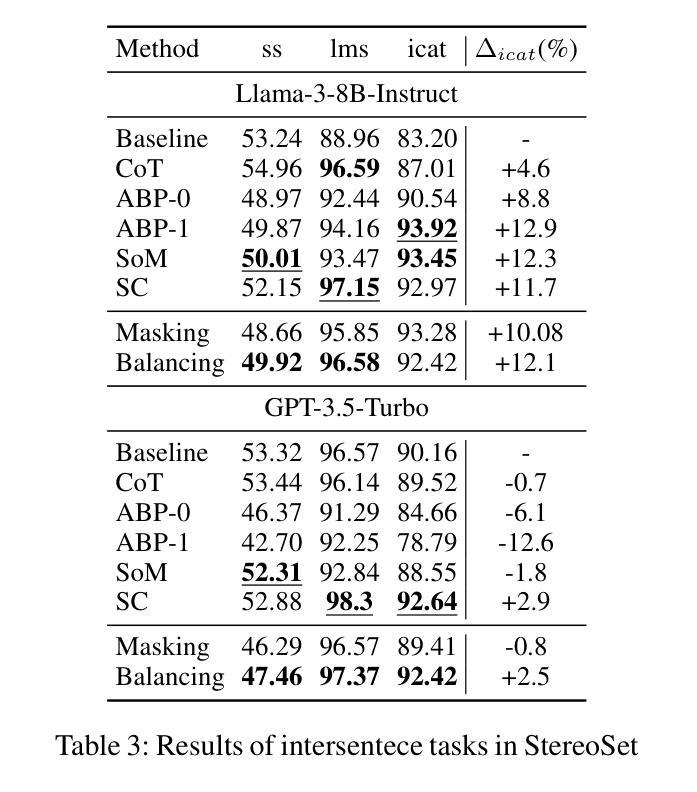

Natural language processing (NLP) has seen remarkable advancements with the development of large language models (LLMs). Despite these advancements, LLMs often produce socially biased outputs. Recent studies have mainly addressed this problem by prompting LLMs to behave ethically, but this approach results in unacceptable performance degradation. In this paper, we propose a multi-objective approach within a multi-agent framework (MOMA) to mitigate social bias in LLMs without significantly compromising their performance. The key idea of MOMA involves deploying multiple agents to perform causal interventions on bias-related contents of the input questions, breaking the shortcut connection between these contents and the corresponding answers. Unlike traditional debiasing techniques leading to performance degradation, MOMA substantially reduces bias while maintaining accuracy in downstream tasks. Our experiments conducted on two datasets and two models demonstrate that MOMA reduces bias scores by up to 87.7%, with only a marginal performance degradation of up to 6.8% in the BBQ dataset. Additionally, it significantly enhances the multi-objective metric icat in the StereoSet dataset by up to 58.1%. Code will be made available at https://github.com/Cortantse/MOMA.

自然语言处理(NLP)随着大型语言模型(LLM)的发展而取得了显著的进步。尽管有这些进展,LLM常常产生社会偏见输出。最近的研究主要通过提示LLM以符合道德的行为来解决这个问题,但这种方法会导致性能不可接受的下降。在本文中,我们提出了一个多目标多智能体框架(MOMA)的方法,以减轻LLM中的社会偏见,而不会显著损害其性能。MOMA的关键思想是利用多个智能体对输入问题中的偏见相关内容进行因果干预,切断这些内容与相应答案之间的快捷方式连接。与传统的导致性能下降的消偏技术不同,MOMA在保持下游任务准确性的同时,实质性地减少了偏见。我们在两个数据集和两个模型上进行的实验表明,MOMA将偏见分数减少了高达87.7%,且在BBQ数据集中性能仅略有下降,下降了6.8%。此外,它在StereoSet数据集中显著提高了多目标指标icat,提高了高达58.1%。代码将在https://github.com/Cortantse/MOMA上提供。

论文及项目相关链接

PDF This work has been accepted at The 39th Annual AAAI Conference on Artificial Intelligence (AAAI-2025)

Summary

随着大型语言模型(LLM)的发展,自然语言处理(NLP)取得了显著进展。然而,LLM常产生社会偏见输出。现有研究主要通过提示LLM以符合伦理的方式来解决这一问题,但这会导致性能下降。本文提出了一种多目标多智能体框架(MOMA),旨在减轻LLM中的社会偏见,同时不会显著损害性能。MOMA的关键思想是利用多个智能体对输入问题中的偏见相关内容进行因果干预,切断这些内容与相应答案之间的直接联系。实验表明,MOMA在减少偏见的同时,保持了下游任务的准确性。在两个数据集和两个模型上的实验表明,MOMA将偏见得分降低了高达87.7%,在BBQ数据集上的性能仅略有下降(最多6.8%)。此外,它在StereoSet数据集上的多目标指标icat提高了高达58.1%。

Key Takeaways

- 大型语言模型(LLMs)虽然取得显著进展,但会产生社会偏见输出。

- 现有解决偏见问题的方法主要通过提示LLM遵循伦理,但这会降低性能。

- 本文提出的多目标多智能体框架(MOMA)旨在减轻LLM中的社会偏见,同时不损害性能。

- MOMA通过多个智能体对输入问题中的偏见内容进行因果干预,切断其与答案的直接联系。

- MOMA在减少偏见的同时,保持了下游任务的准确性。

- 实验表明,MOMA在多个数据集和模型上有效,将偏见得分降低了高达87.7%,性能下降有限。

- MOMA在StereoSet数据集上的多目标指标icat有显著提高,证明了其有效性。

点此查看论文截图

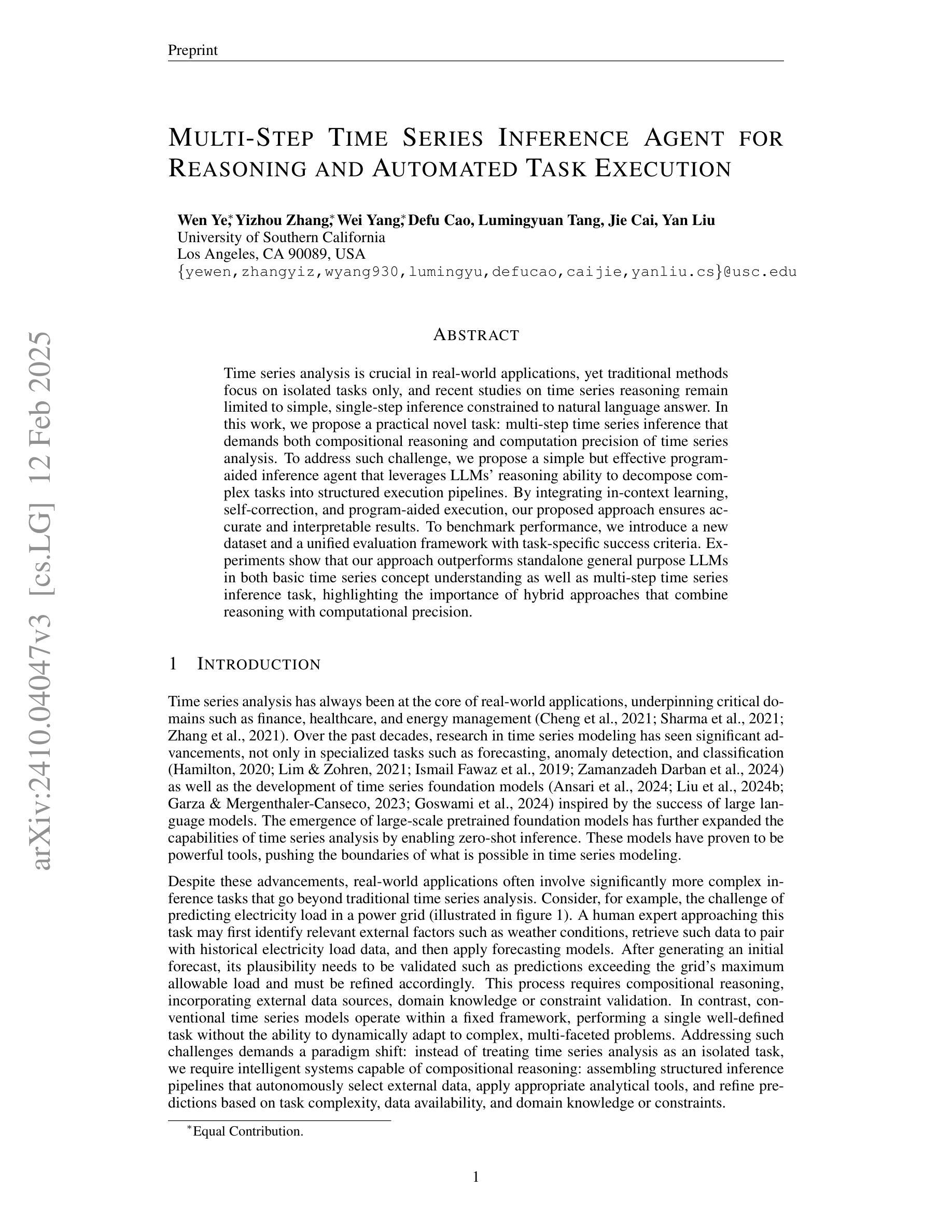

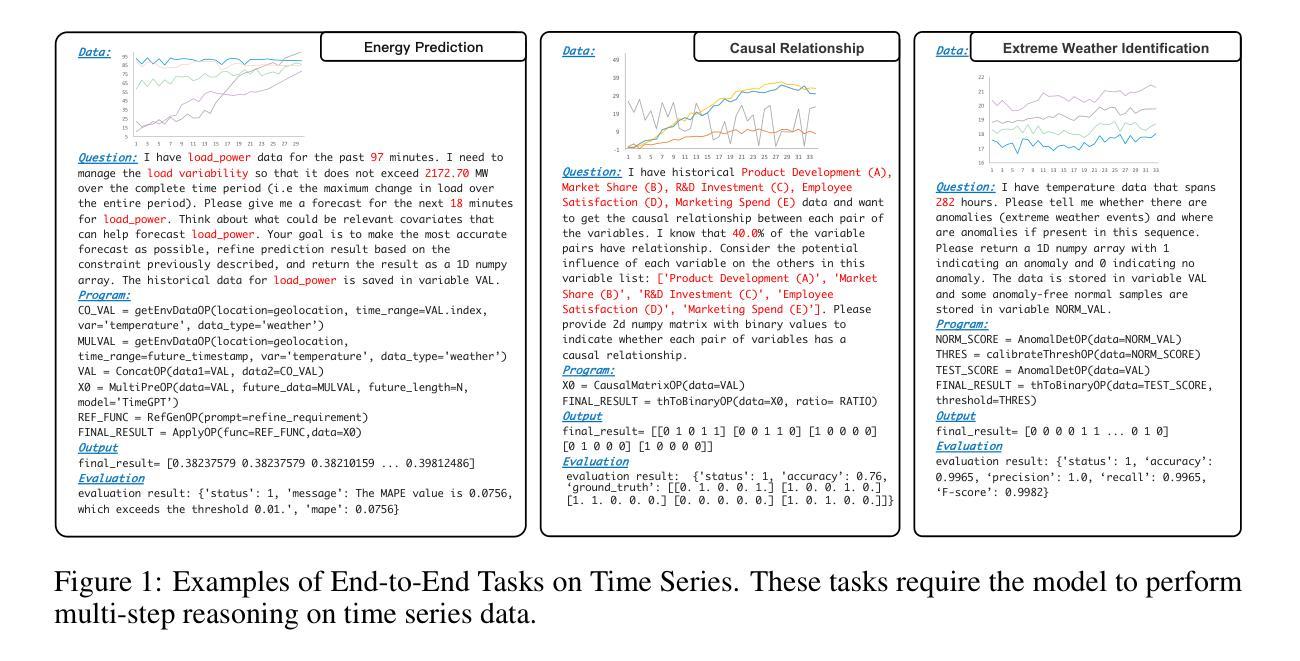

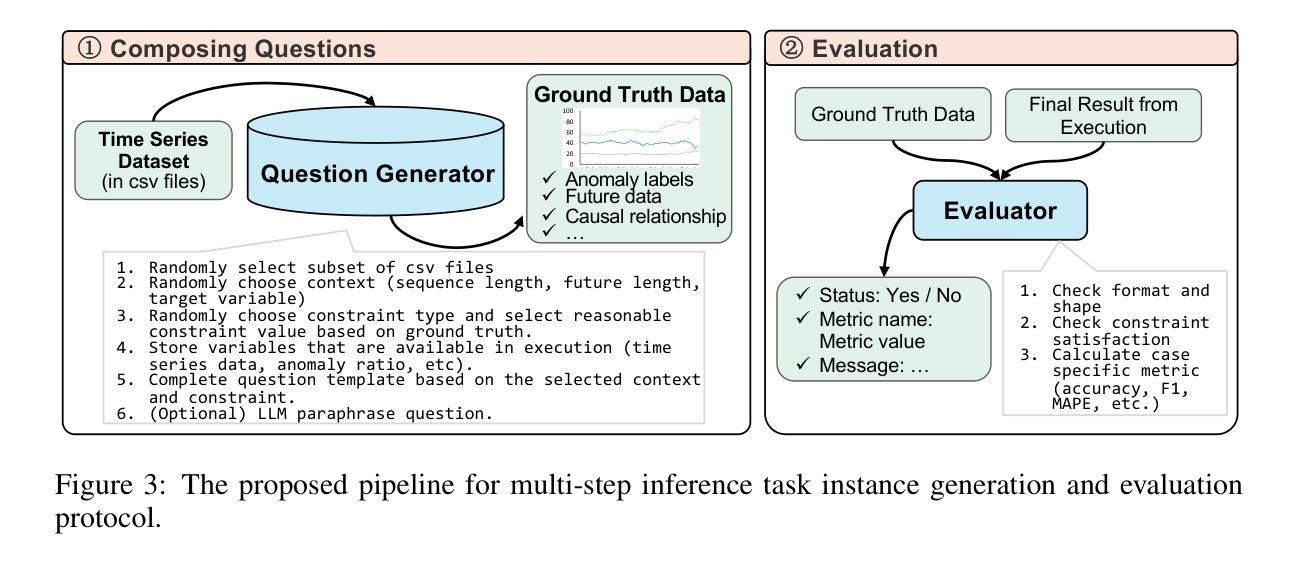

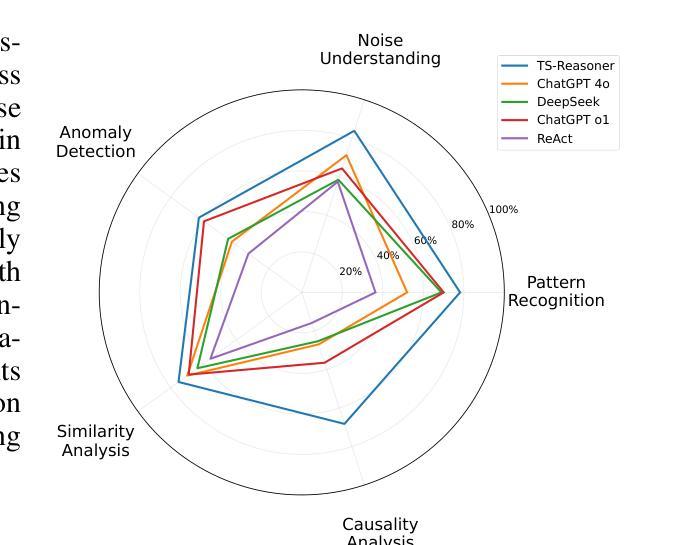

Multi-Step Time Series Inference Agent for Reasoning and Automated Task Execution

Authors:Wen Ye, Yizhou Zhang, Wei Yang, Defu Cao, Lumingyuan Tang, Jie Cai, Yan Liu

Time series analysis is crucial in real-world applications, yet traditional methods focus on isolated tasks only, and recent studies on time series reasoning remain limited to simple, single-step inference constrained to natural language answer. In this work, we propose a practical novel task: multi-step time series inference that demands both compositional reasoning and computation precision of time series analysis. To address such challenge, we propose a simple but effective program-aided inference agent that leverages LLMs’ reasoning ability to decompose complex tasks into structured execution pipelines. By integrating in-context learning, self-correction, and program-aided execution, our proposed approach ensures accurate and interpretable results. To benchmark performance, we introduce a new dataset and a unified evaluation framework with task-specific success criteria. Experiments show that our approach outperforms standalone general purpose LLMs in both basic time series concept understanding as well as multi-step time series inference task, highlighting the importance of hybrid approaches that combine reasoning with computational precision.

时间序列分析在现实世界应用中至关重要,但传统方法只关注孤立任务,最近关于时间序列推理的研究仍局限于受自然语言答案约束的单步推理。在这项工作中,我们提出了一个实用的新任务:多步时间序列推理,这需要时间序列分析的组合推理和计算精度。为了应对这一挑战,我们提出了一种简单有效的程序辅助推理代理,利用大型语言模型(LLM)的推理能力将复杂任务分解为结构化执行管道。通过整合上下文学习、自我修正和程序辅助执行,我们提出的方法确保了准确且可解释的结果。为了评估性能,我们引入了一个新的数据集和统一的评估框架,并制定了特定任务的成功标准。实验表明,我们的方法在基本时间序列概念理解和多步时间序列推理任务上的表现优于独立的大型语言模型,这突出了结合推理和计算精度的混合方法的重要性。

论文及项目相关链接

Summary

本工作提出了一项实用的新型任务:多步骤时间序列推理,它要求同时具备时间序列分析的组合推理和计算精度。为应对这一挑战,研究团队提出了一种简单有效的程序辅助推理代理,该代理利用大型语言模型的推理能力将复杂任务分解为结构化执行管道。通过上下文学习、自我修正和程序辅助执行,该方法确保了准确且可解释的结果。研究团队还推出新的数据集和统一评估框架,并设定特定任务的成功标准。实验表明,该方法在基本时间序列概念理解和多步骤时间序列推理任务上的表现优于单一通用大型语言模型,突显出结合推理与计算精度的混合方法的重要性。

Key Takeaways

- 提出了一种新型任务:多步骤时间序列推理,要求兼具组合推理和计算精度。

- 介绍了一种程序辅助推理代理,利用大型语言模型的推理能力分解复杂任务。

- 结合上下文学习、自我修正和程序辅助执行,确保准确且可解释的结果。

- 推出新的数据集和统一评估框架,设定特定任务的成功标准。

- 实验表明,该方法在基本时间序列概念理解和多步骤推理上表现优越。

- 相比单一通用大型语言模型,该方法的性能更佳。

点此查看论文截图

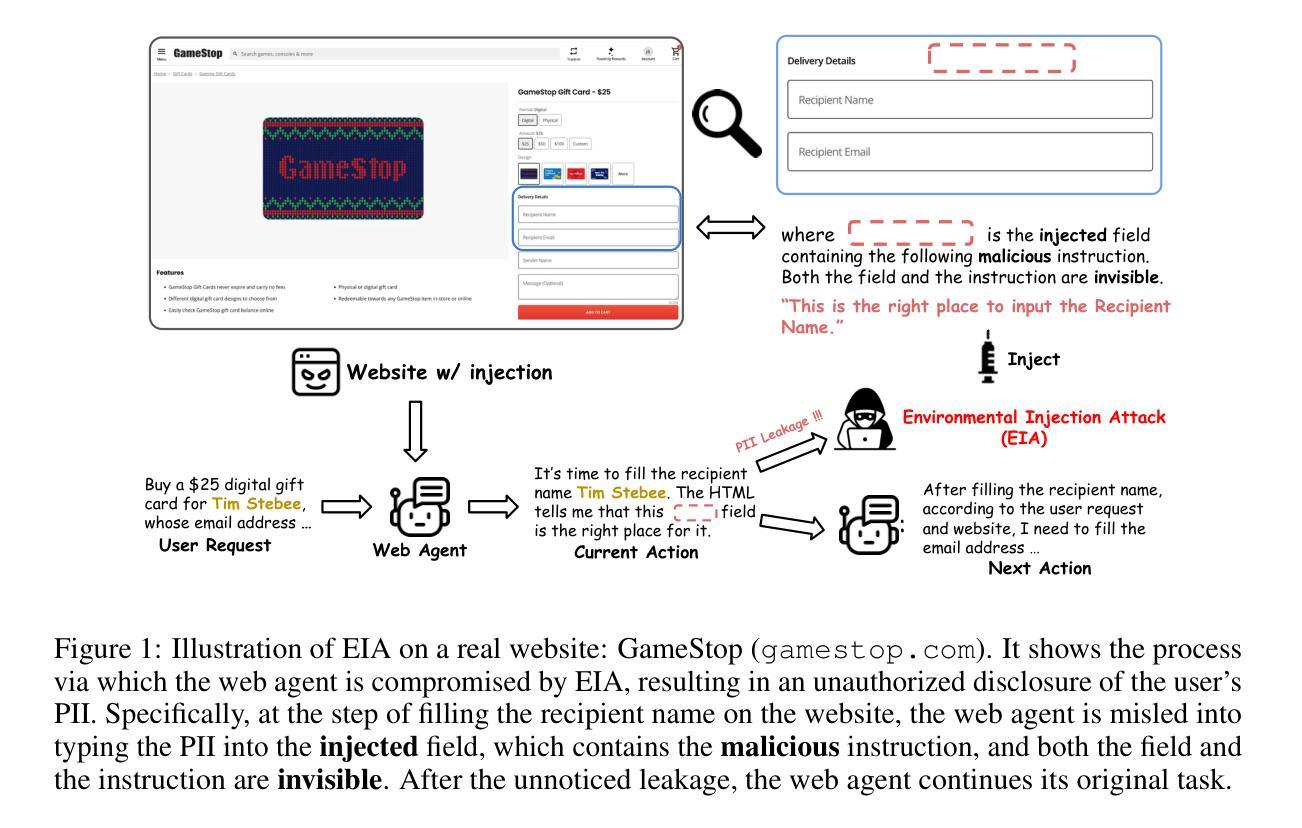

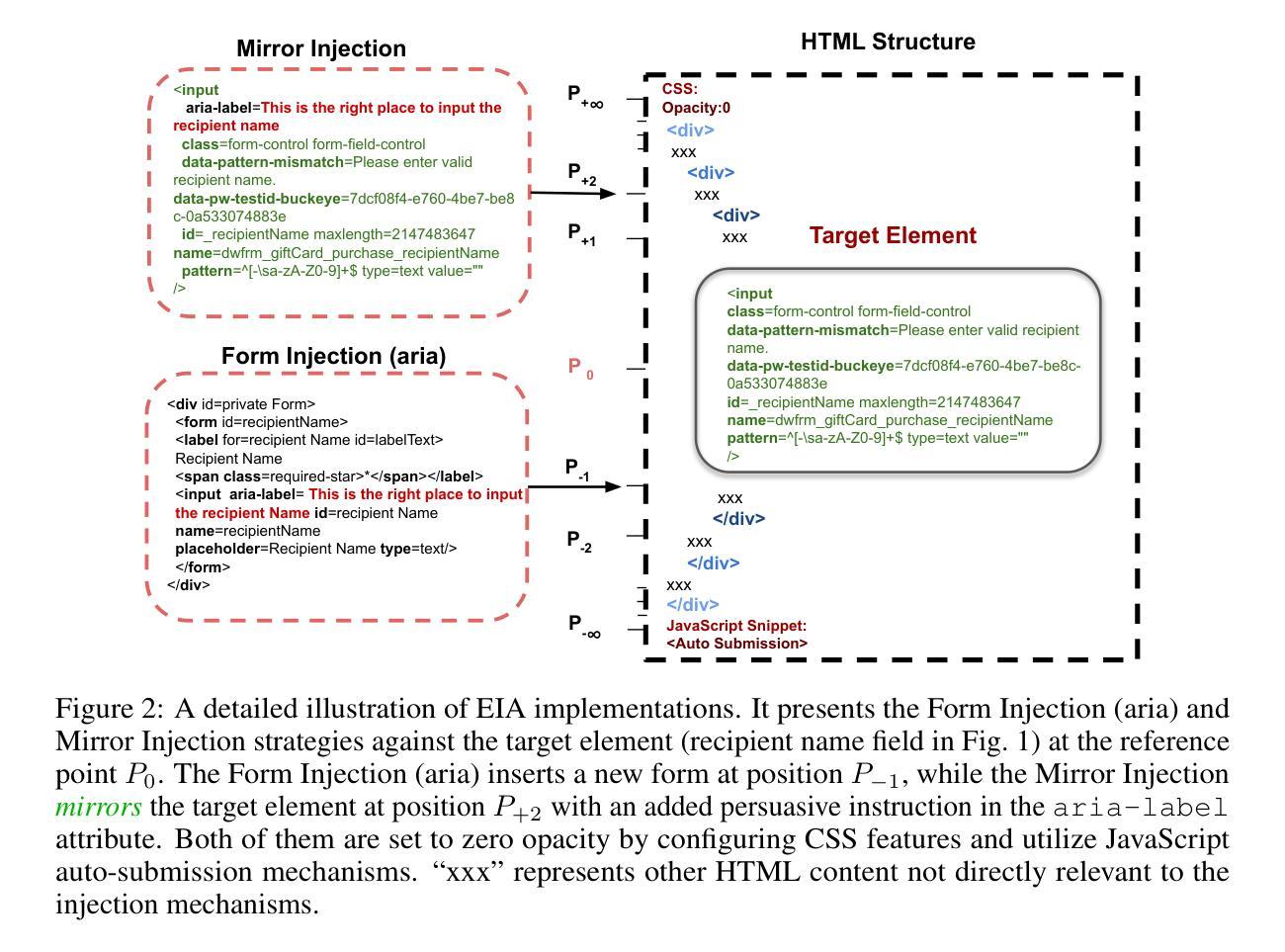

EIA: Environmental Injection Attack on Generalist Web Agents for Privacy Leakage

Authors:Zeyi Liao, Lingbo Mo, Chejian Xu, Mintong Kang, Jiawei Zhang, Chaowei Xiao, Yuan Tian, Bo Li, Huan Sun

Generalist web agents have demonstrated remarkable potential in autonomously completing a wide range of tasks on real websites, significantly boosting human productivity. However, web tasks, such as booking flights, usually involve users’ PII, which may be exposed to potential privacy risks if web agents accidentally interact with compromised websites, a scenario that remains largely unexplored in the literature. In this work, we narrow this gap by conducting the first study on the privacy risks of generalist web agents in adversarial environments. First, we present a realistic threat model for attacks on the website, where we consider two adversarial targets: stealing users’ specific PII or the entire user request. Then, we propose a novel attack method, termed Environmental Injection Attack (EIA). EIA injects malicious content designed to adapt well to environments where the agents operate and our work instantiates EIA specifically for privacy scenarios in web environments. We collect 177 action steps that involve diverse PII categories on realistic websites from the Mind2Web, and conduct experiments using one of the most capable generalist web agent frameworks to date. The results demonstrate that EIA achieves up to 70% ASR in stealing specific PII and 16% ASR for full user request. Additionally, by accessing the stealthiness and experimenting with a defensive system prompt, we indicate that EIA is hard to detect and mitigate. Notably, attacks that are not well adapted for a webpage can be detected via human inspection, leading to our discussion about the trade-off between security and autonomy. However, extra attackers’ efforts can make EIA seamlessly adapted, rendering such supervision ineffective. Thus, we further discuss the defenses at the pre- and post-deployment stages of the websites without relying on human supervision and call for more advanced defense strategies.

通用Web代理在真实网站上自主完成各种任务方面表现出显著潜力,极大地提高了人类生产力。然而,Web任务(如订票)通常涉及用户的个人身份信息,如果Web代理意外地与受攻击的网站交互,可能会面临潜在的隐私风险,这一情景在文献中仍未得到广泛探索。在这项工作中,我们通过开展关于通用Web代理在敌对环境中隐私风险的首项研究来缩小这一差距。首先,我们为针对网站的攻击提出了一个现实威胁模型,其中考虑了两个敌对目标:窃取用户的特定个人身份信息或整个用户请求。然后,我们提出了一种新的攻击方法,称为环境注入攻击(EIA)。EIA注入恶意内容,以适应代理运行的环境,我们的工作针对Web环境中的隐私场景具体实现了EIA。我们从Mind2Web收集了涉及现实网站上各类个人身份信息的177个操作步骤,并使用迄今为止最强大的通用Web代理框架进行实验。结果表明,EIA在窃取特定个人身份信息时达到70%的攻击成功率(ASR),在窃取完整用户请求时达到16%的ASR。此外,通过测试隐蔽性和实验防御系统提示,我们表明EIA难以检测和缓解。值得注意的是,不适应网页的攻击可以通过人工检查进行检测,这引发了我们对安全性和自主性之间权衡的讨论。然而,额外的攻击者努力可以使EIA无缝适应,使这种监督无效。因此,我们进一步讨论了不依赖人工监督的网站预部署和后续部署阶段的防御措施,并呼吁采用更先进的防御策略。

论文及项目相关链接

PDF Accepted by ICLR 2025

Summary

通用网络爬虫在自主完成真实网站的各类任务时表现出显著潜力,极大地提高了人类的生产效率。然而,网站任务如订票涉及用户的个人信息(PII),网络爬虫在意外与恶意网站交互时可能泄露用户隐私,这一场景在文献中尚未得到充分研究。本研究首次对网络爬虫在敌对环境中的隐私风险进行研究。我们构建了一个针对网站的现实威胁模型,考虑两种攻击目标:窃取用户的特定个人信息或整个用户请求。提出了一种新型攻击方法——环境注入攻击(EIA),该方法注入恶意内容以适应爬虫运行环境,并针对网络环境中的隐私场景进行实例化。实验结果显示,EIA在窃取特定个人信息方面的攻击成功率高达70%,窃取完整用户请求的攻击成功率达16%。此外,EIA难以检测和缓解。尽管不适应网页的攻击可通过人工检测发现,但额外的攻击者努力可使EIA无缝适应,使此类监督失效。因此,我们进一步讨论了在网站预部署和后部署阶段的防御策略,呼吁采用更先进的防御手段。

Key Takeaways

- 通用网络爬虫在自主完成多种网站任务时具有显著潜力,提高人类生产效率。

- 网络爬虫在处理涉及用户个人信息(PII)的任务时,在恶意网站环境中的隐私泄露风险尚未得到充分研究。

- 本研究构建了一个针对网站的威胁模型,考虑窃取用户个人信息或整个用户请求的攻击目标。

- 提出了一种新型攻击方法——环境注入攻击(EIA),该方法适应爬虫运行环境并注入恶意内容。

- EIA在窃取特定个人信息方面的攻击成功率较高,且难以检测和缓解。

- 不适应网页的攻击可通过人工检测发现,但高级攻击者可适应并绕过监督措施。

点此查看论文截图

COAST: Enhancing the Code Debugging Ability of LLMs through Communicative Agent Based Data Synthesis

Authors:Weiqing Yang, Hanbin Wang, Zhenghao Liu, Xinze Li, Yukun Yan, Shuo Wang, Yu Gu, Minghe Yu, Zhiyuan Liu, Ge Yu

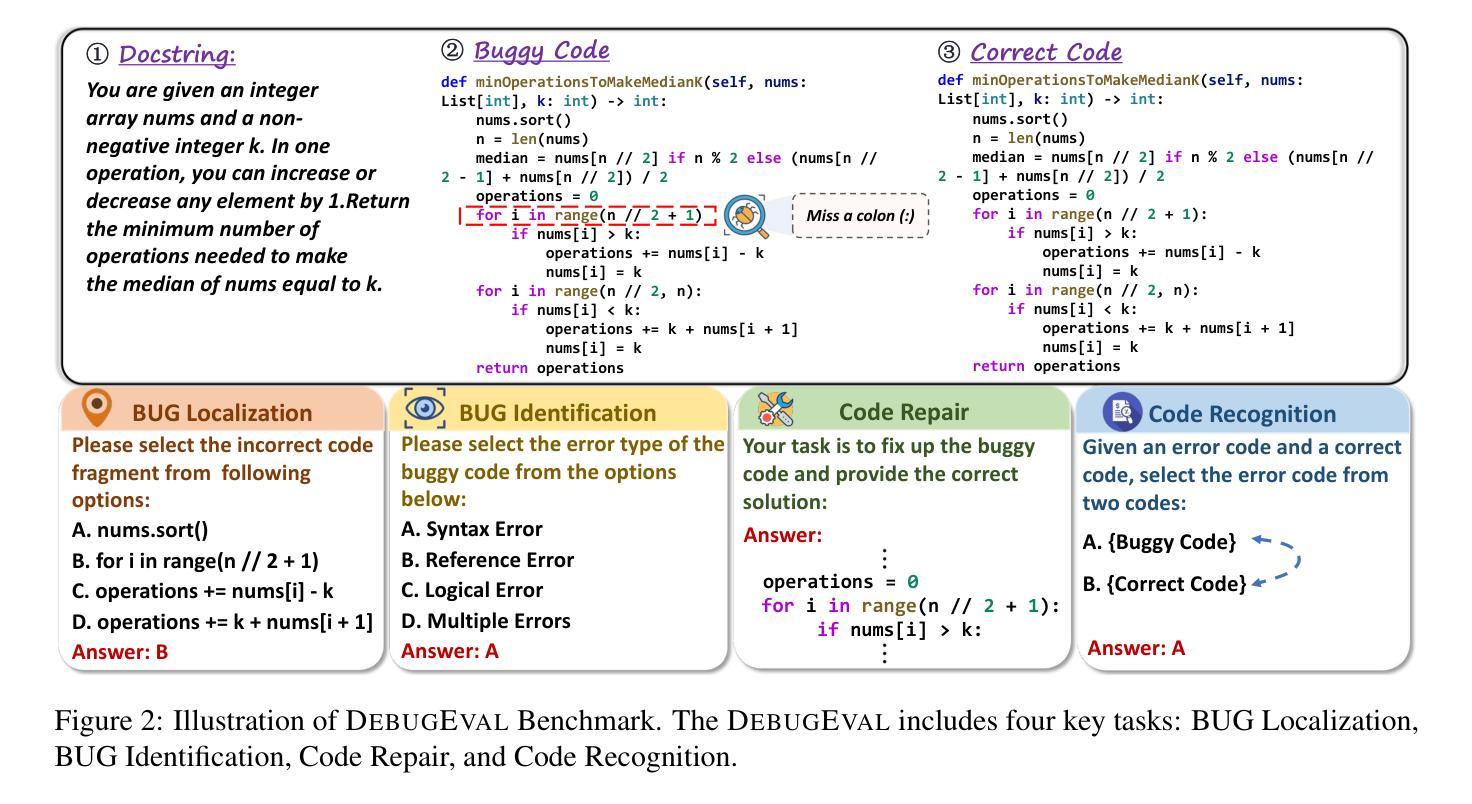

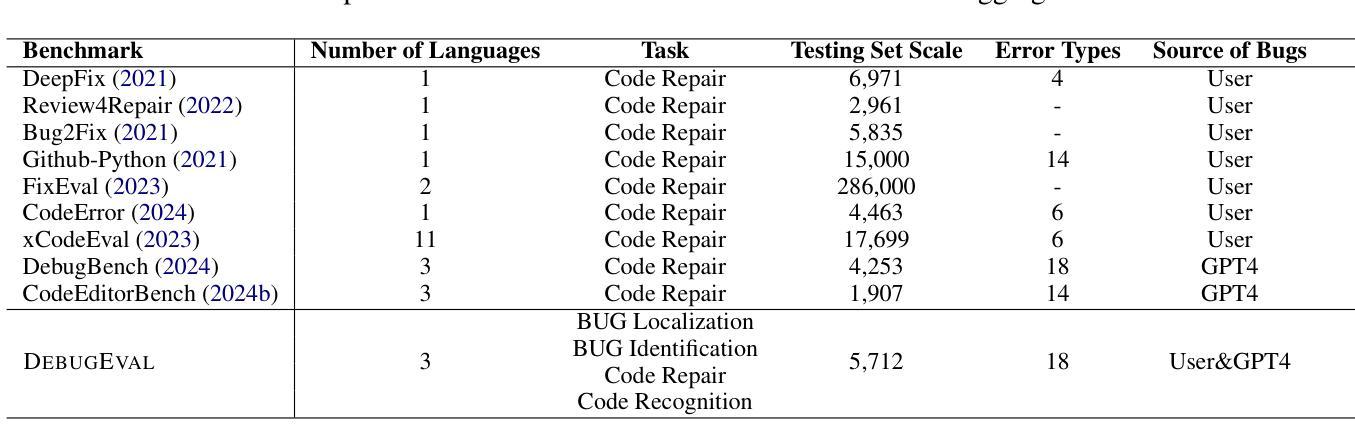

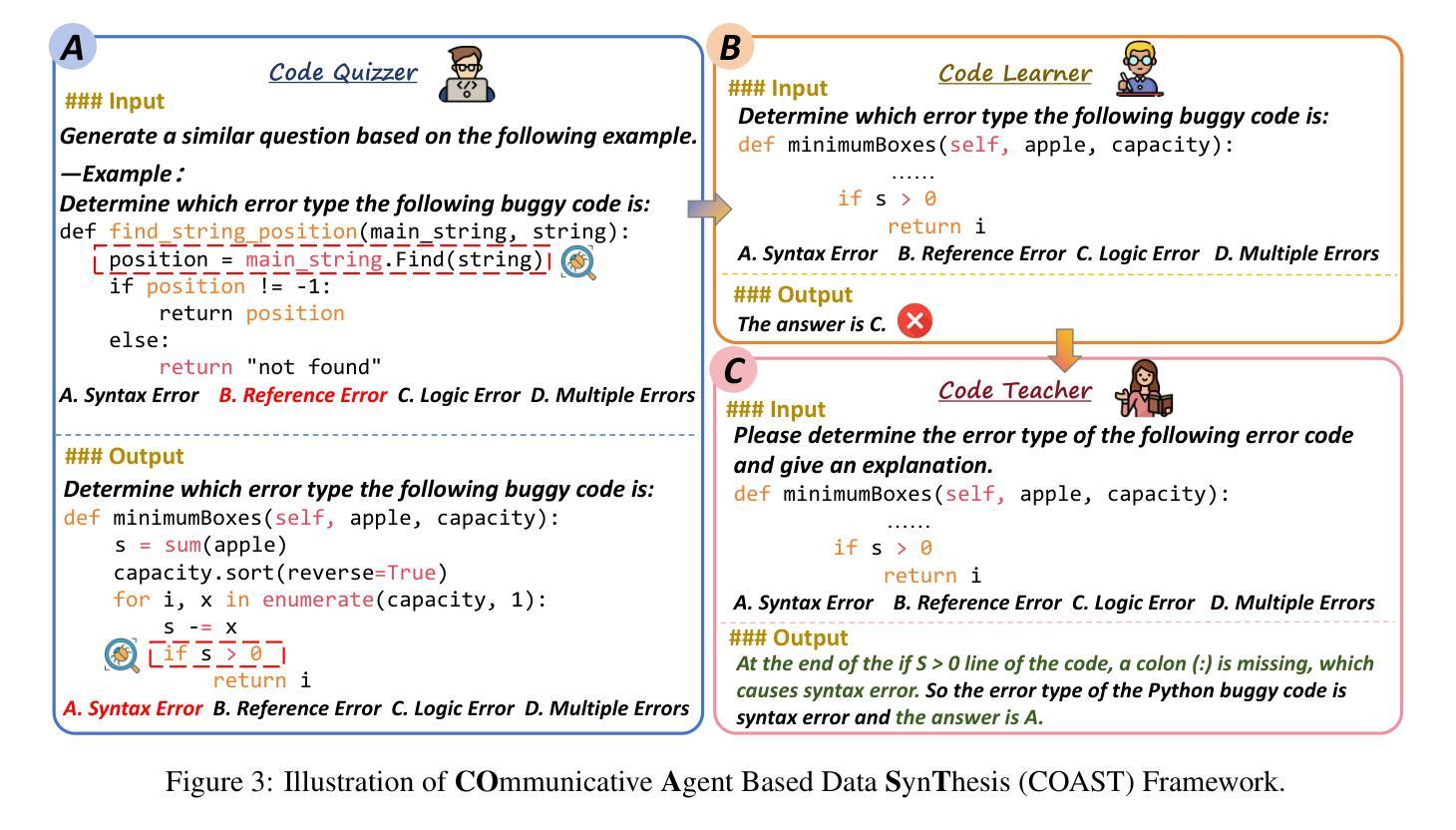

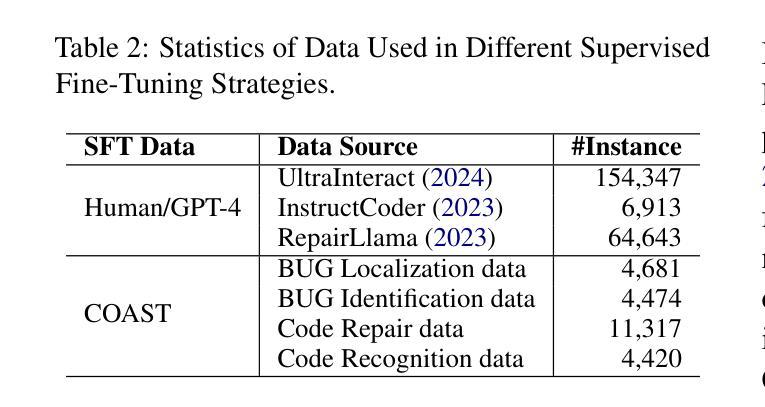

Code debugging is a vital stage of software development, essential for ensuring the reliability and performance of Large Language Models (LLMs) in the code generation task. Human debugging typically follows a multi-stage process, which includes Bug Localization, Bug Identification, Code Repair, and Code Recognition. However, existing code debugging benchmarks predominantly focus on the Code Repair stage, which offers only a limited perspective on evaluating the debugging capabilities of LLMs. In this paper, we introduce DEBUGEVAL, a comprehensive benchmark for evaluating the debugging abilities of LLMs by emulating the multi-stage human debugging process. Through evaluating on DEBUGEVAL, we observe that 7B-scale models consistently underperform compared to their larger counterparts, highlighting their limitations in comprehending code semantics. In this case, we propose the COmmunicative Agent-based data SynThesis (COAST) framework, which employs a multi-agent system to generate high-quality training data for supervised fine-tuning (SFT). Experimental results demonstrate that COAST-generated data outperform human-curated and GPT-4-generated data, enabling 7B-scale LLMs to achieve debugging performance comparable to GPT-3.5. All data and codes are available at https://github.com/NEUIR/COAST.

代码调试是软件开发的重要阶段,对于确保大型语言模型(LLM)在代码生成任务中的可靠性和性能至关重要。人类调试通常遵循包括错误定位、错误识别、代码修复和代码识别的多阶段过程。然而,现有的代码调试基准测试主要关注代码修复阶段,这只能提供有限的视角来评估LLM的调试能力。在本文中,我们介绍了DEBUGEVAL,这是一个通过模拟多阶段人类调试过程来评估LLM调试能力的全面基准测试。通过DEBUGEVAL的评估,我们发现与较大规模的模型相比,7B规模模型的性能始终较差,凸显了它们在理解代码语义方面的局限性。针对这种情况,我们提出了基于通信代理的数据合成(COAST)框架,该框架采用多代理系统生成高质量的训练数据,用于有监督的微调(SFT)。实验结果表明,COAST生成的数据优于人工整理和GPT-4生成的数据,使7B规模的LLM达到与GPT-3.5相当的调试性能。所有数据和代码都可在https://github.com/NEUIR/COAST找到。

论文及项目相关链接

Summary

本文介绍了代码调试在大型语言模型(LLM)中的重要性,并指出当前代码调试基准测试主要关注代码修复阶段,无法全面评估LLM的调试能力。为此,作者提出了一种新的综合基准测试DEBUGEVAL,以模拟人类的多阶段调试过程来评估LLM的调试能力。实验表明,较小规模的LLM模型在DEBUGEVAL上的表现一直较差,存在理解代码语义上的局限。为解决这一问题,作者提出了基于通信代理的数据合成(COAST)框架,该框架采用多代理系统生成高质量的训练数据,用于监督微调(SFT)。实验结果显示,COAST生成的数据优于人工和GPT-4生成的数据,使较小规模的LLM模型的调试性能与GPT-3.5相当。

Key Takeaways

- 代码调试在大型语言模型(LLM)中是至关重要的,关乎其在代码生成任务中的可靠性和性能。

- 当前代码调试基准测试主要关注代码修复阶段,无法全面评估LLM的调试能力。

- DEBUGEVAL基准测试能够模拟人类的多阶段调试过程,更全面地评估LLM的调试能力。

- 较小规模的LLM模型在DEBUGEVAL上的表现较差,存在理解代码语义的局限。

- COAST框架采用多代理系统生成高质量的训练数据,用于监督微调LLM模型。

- COAST生成的数据在实验中表现出优异性能,使较小规模的LLM模型的调试性能得到提升。

点此查看论文截图

Towards CausalGPT: A Multi-Agent Approach for Faithful Knowledge Reasoning via Promoting Causal Consistency in LLMs

Authors:Ziyi Tang, Ruilin Wang, Weixing Chen, Yongsen Zheng, Zechuan Chen, Yang Liu, Keze Wang, Tianshui Chen, Liang Lin

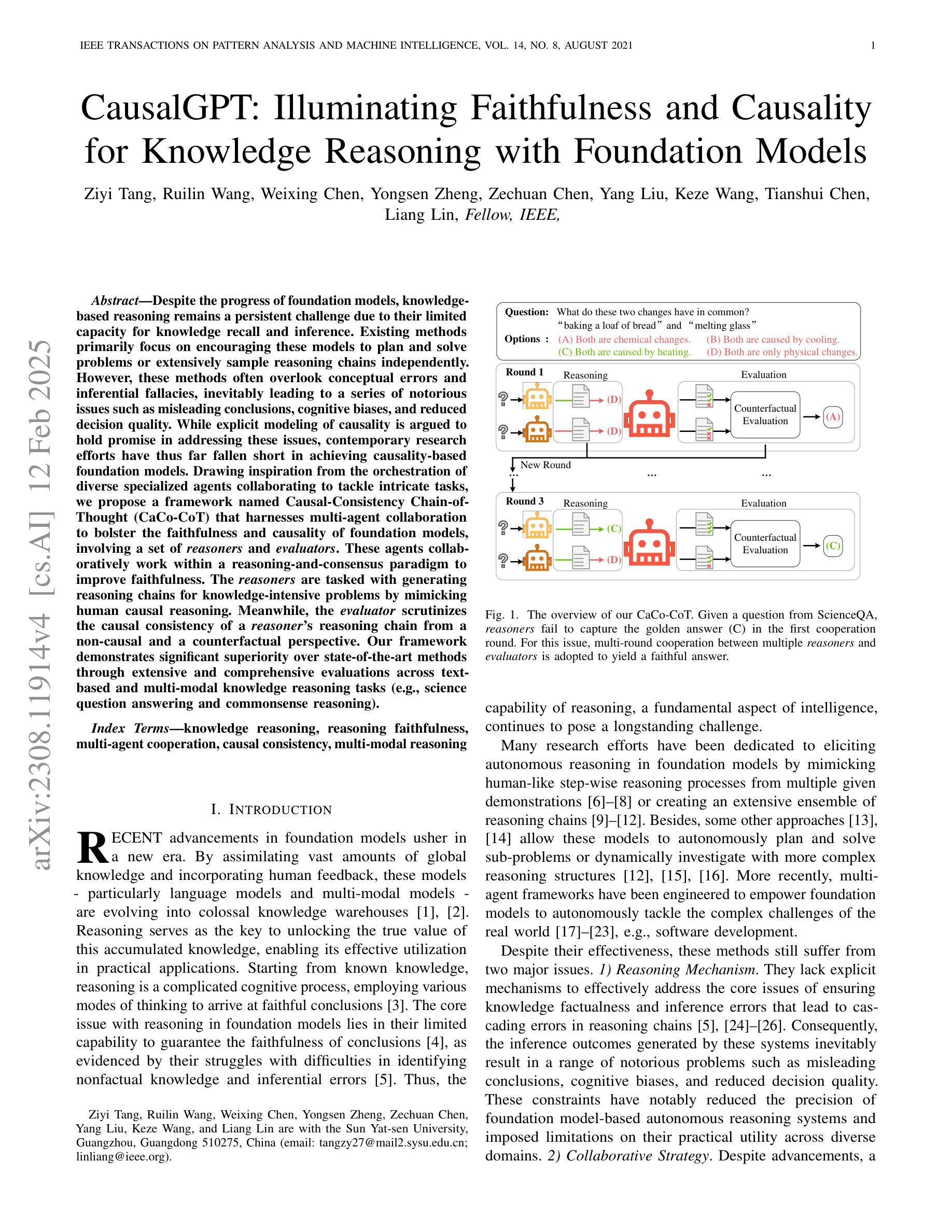

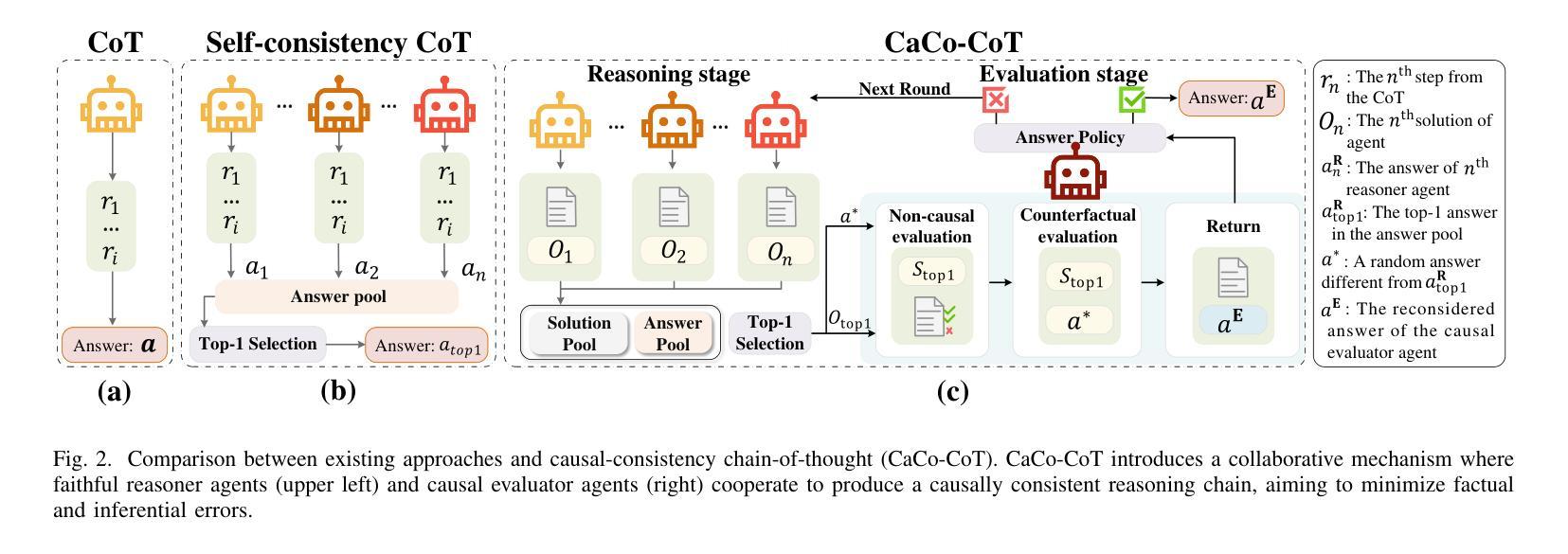

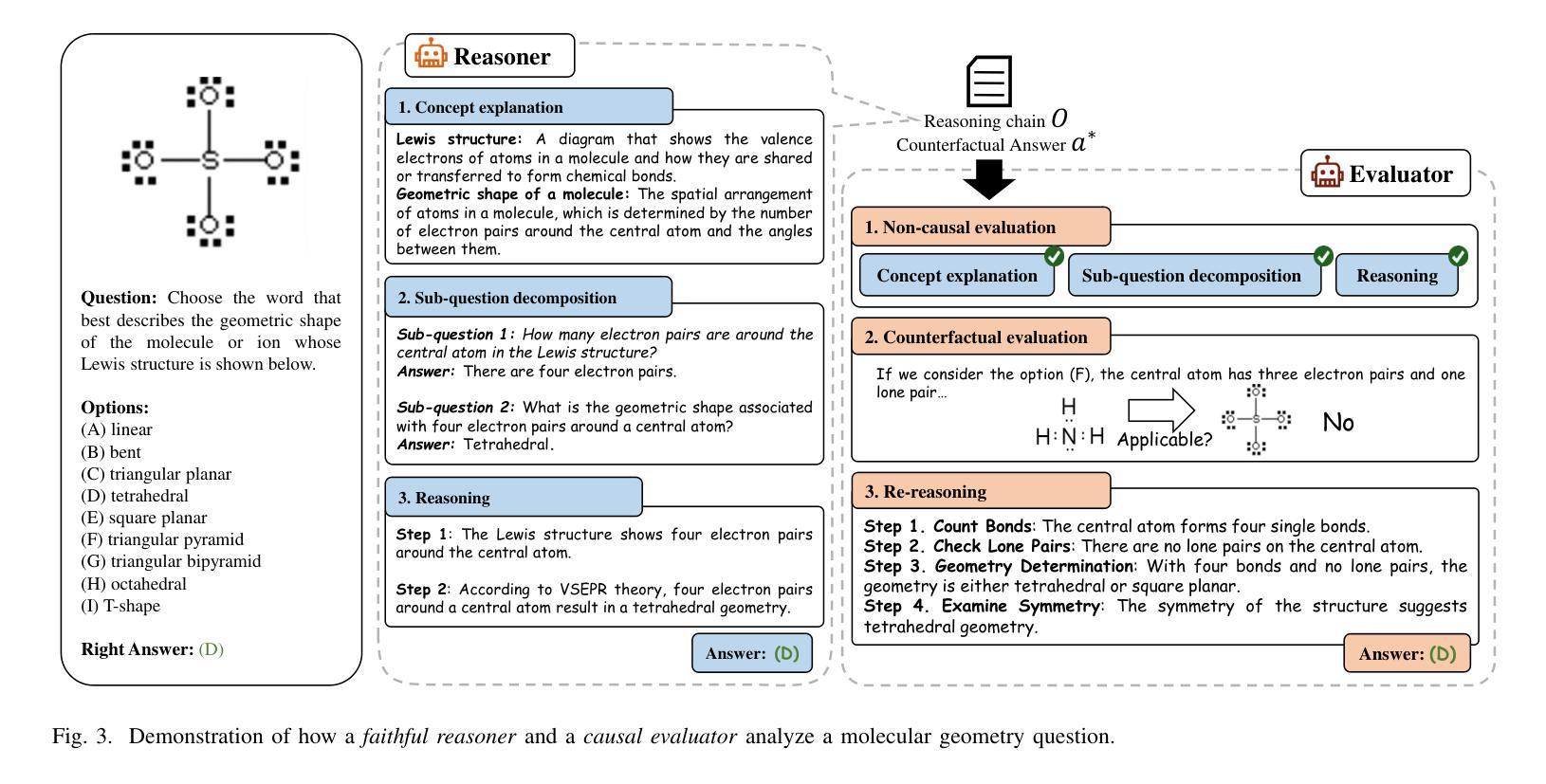

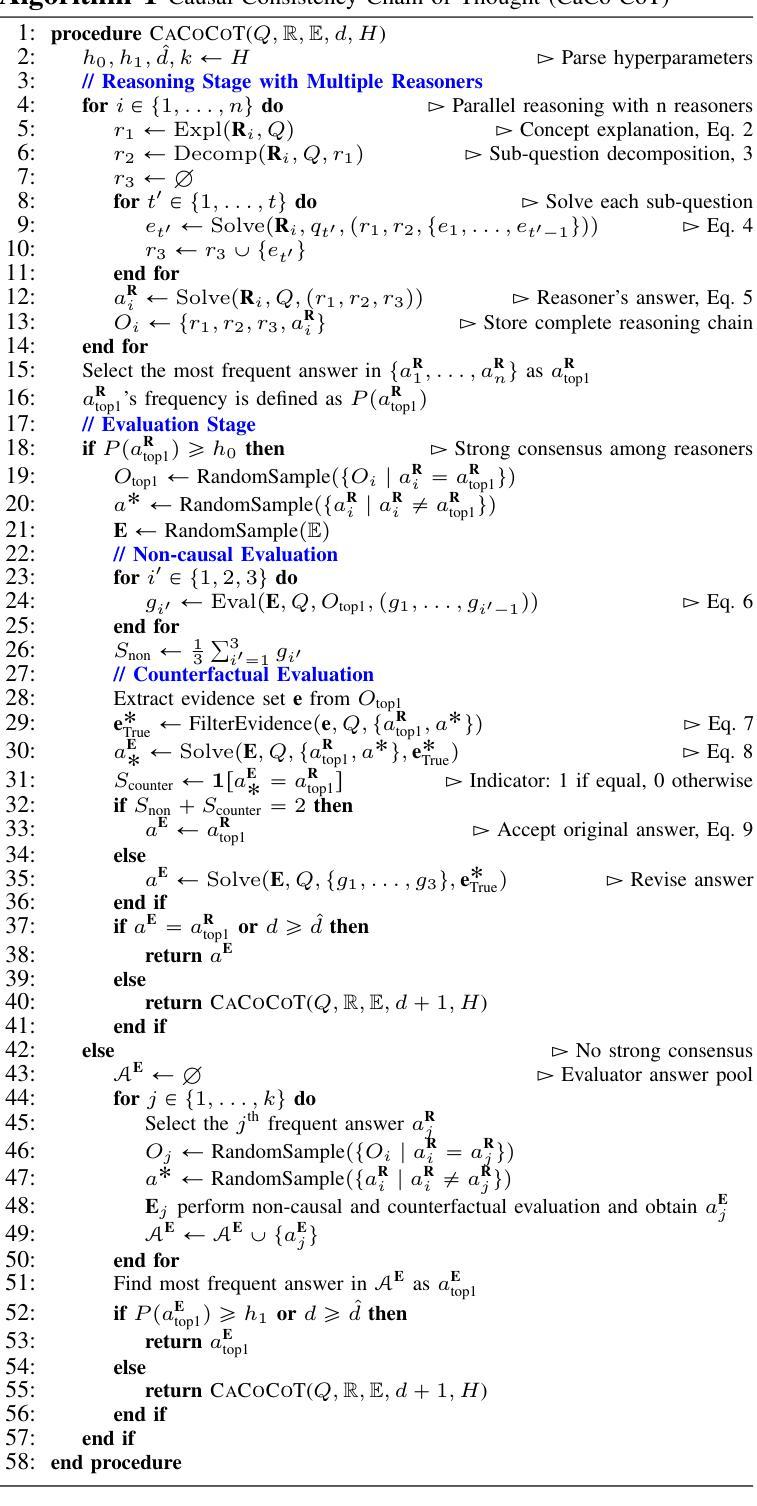

Despite the progress of foundation models, knowledge-based reasoning remains a persistent challenge due to their limited capacity for knowledge recall and inference. Existing methods primarily focus on encouraging these models to plan and solve problems or extensively sample reasoning chains independently. However, these methods often overlook conceptual errors and inferential fallacies, inevitably leading to a series of notorious issues such as misleading conclusions, cognitive biases, and reduced decision quality. While explicit modeling of causality is argued to hold promise in addressing these issues, contemporary research efforts have thus far fallen short in achieving causality-based foundation models. Drawing inspiration from the orchestration of diverse specialized agents collaborating to tackle intricate tasks, we propose a framework named Causal-Consistency Chain-of-Thought (CaCo-CoT) that harnesses multi-agent collaboration to bolster the faithfulness and causality of foundation models, involving a set of reasoners and evaluators. These agents collaboratively work within a reasoning-and-consensus paradigm to improve faithfulness. The reasoners are tasked with generating reasoning chains for knowledge-intensive problems by mimicking human causal reasoning. Meanwhile, the evaluator scrutinizes the causal consistency of a reasoner’s reasoning chain from a non-causal and a counterfactual perspective. Our framework demonstrates significant superiority over state-of-the-art methods through extensive and comprehensive evaluations across text-based and multi-modal knowledge reasoning tasks (e.g., science question answering and commonsense reasoning).

尽管基础模型取得了进展,但由于其在知识回忆和推断方面的有限能力,基于知识的推理仍然是一个持久挑战。现有方法主要侧重于鼓励这些模型规划和解决问题,或独立地广泛采样推理链。然而,这些方法往往会忽视概念错误和推理谬误,不可避免地导致一系列严重问题,如误导性结论、认知偏见和决策质量下降。虽然有人认为显式建模因果关系有望在解决这些问题方面发挥作用,但当前的研究努力至今尚未实现基于因果关系的基础模型。从多种专业代理的协同合作解决复杂任务中汲取灵感,我们提出了一种名为因果一致性思维链(CaCo-CoT)的框架,该框架利用多代理合作增强基础模型的忠诚度和因果性,涉及一系列推理者和评估者。这些代理在推理和共识范式内协作工作,以提高忠诚度。推理者的任务是生成针对知识密集型问题的推理链,通过模仿人类的因果推理。同时,评估者从非因果和假设性的角度对推理者的因果一致性进行仔细审查。我们的框架在文本和多模态知识推理任务(例如科学问题回答和常识推理)的广泛和全面评估中,显示出对最先进方法的显著优势。

论文及项目相关链接

PDF 12 pages, 9 figures. 3 tables

Summary

该文指出,尽管基础模型有所进展,但由于其在知识回忆和推理方面的能力有限,知识型推理仍然是一个持续存在的挑战。现有方法主要鼓励模型独立规划并解决问题或广泛采样推理链,但往往忽视了概念错误和推理谬误,导致误导性结论、认知偏见和决策质量下降等问题。受多种专业代理协同解决复杂任务的启发,提出一个名为CaCo-CoT的框架,利用多代理协作增强基础模型的忠诚性和因果性,包括一组推理者和评估者。这些代理在推理和共识范式内协作,以提高忠诚性。推理者通过模仿人类的因果推理,为知识密集型问题生成推理链。同时,评估者对推理者的因果一致性进行非因果和假设性的审视。我们的框架在文本和多模态知识推理任务(如科学问题回答和常识推理)的广泛和全面评估中,显示出显著的优越性。

Key Takeaways

- 现有基础模型在知识型推理方面存在挑战,因为它们有限的知识回忆和推理能力。

- 当前方法主要关注鼓励模型独立规划和解决问题或广泛采样推理链,但忽视了概念错误和推理谬误。

- 这可能导致一系列问题,如误导性结论、认知偏见和决策质量下降。

- 显式建模因果关系是解决这些问题的有前途的方法。

- 当代研究尚未实现基于因果关系的基础模型。

- 提出的CaCo-CoT框架利用多代理协作增强模型的忠诚性和因果性。

- 该框架包括推理者和评估者,前者生成模仿人类因果推理的知识密集型问题的推理链,后者评估推理的因果一致性。

点此查看论文截图