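⚠️ All summaries below were generated by a large language model. They may contain errors, are for reference only, and should be used with caution.

🔴 Note: do not use this for serious academic purposes; it is suitable only for screening papers before reading them.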

Updated 2025-03-07

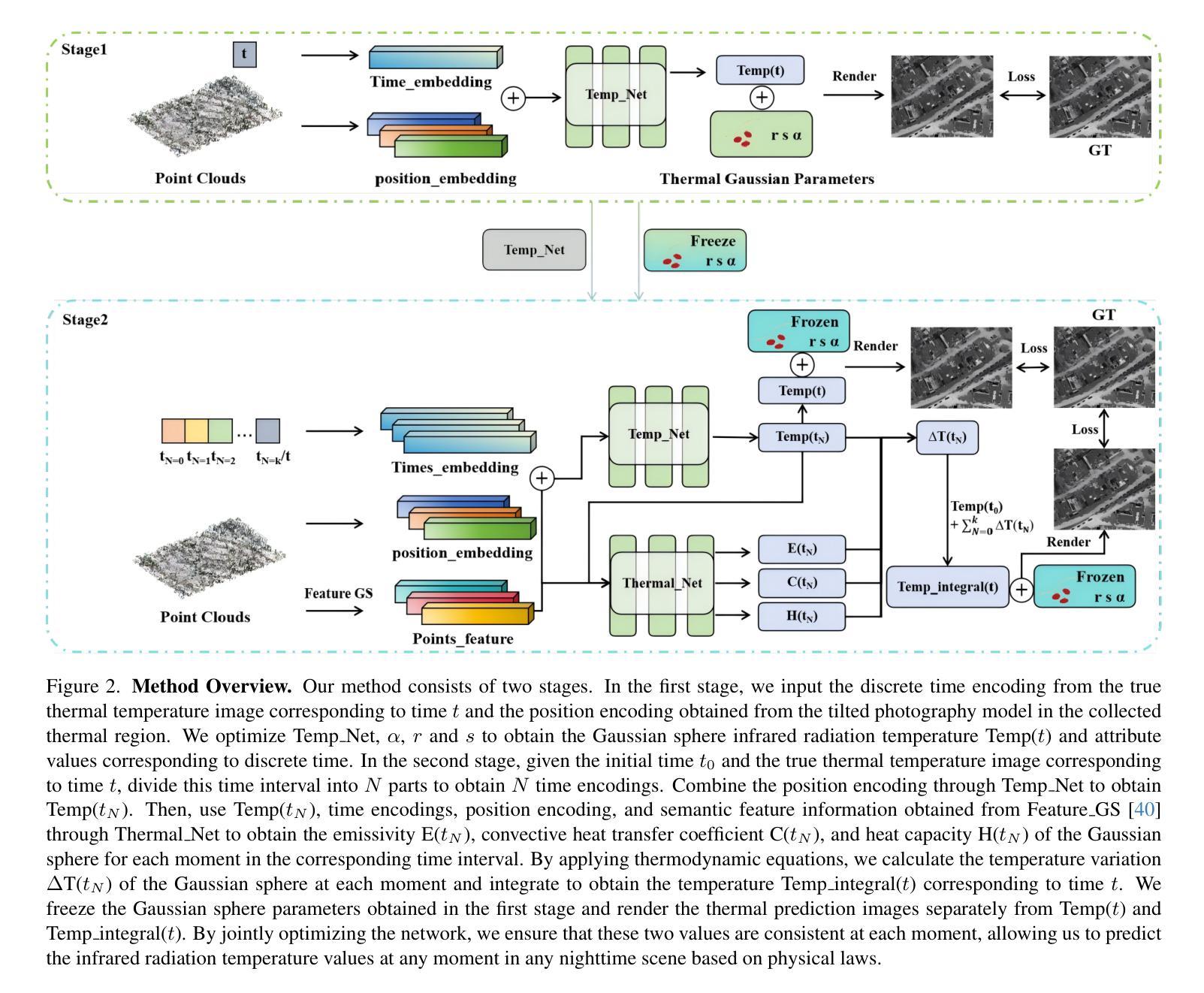

NTR-Gaussian: Nighttime Dynamic Thermal Reconstruction with 4D Gaussian Splatting Based on Thermodynamics

Authors: Kun Yang, Yuxiang Liu, Zeyu Cui, Yu Liu, Maojun Zhang, Shen Yan, Qing Wang

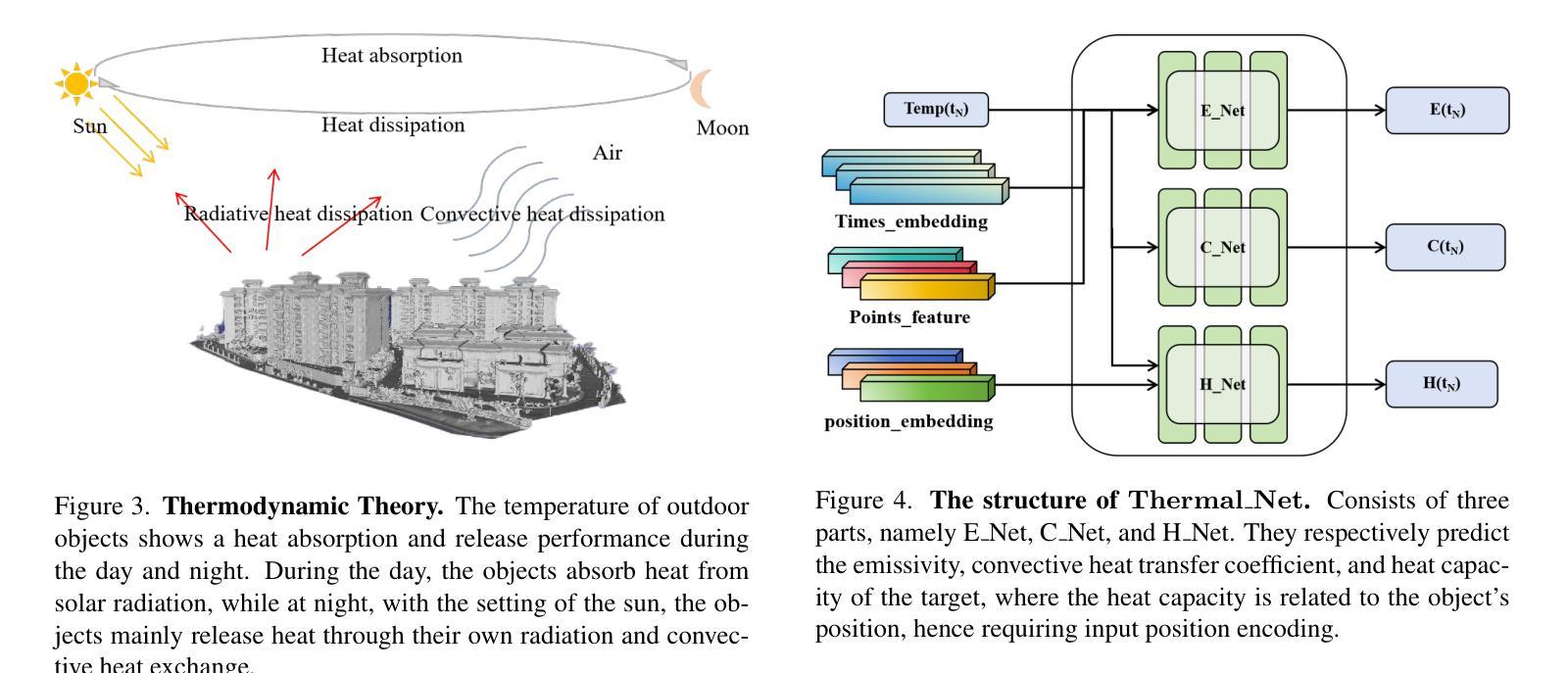

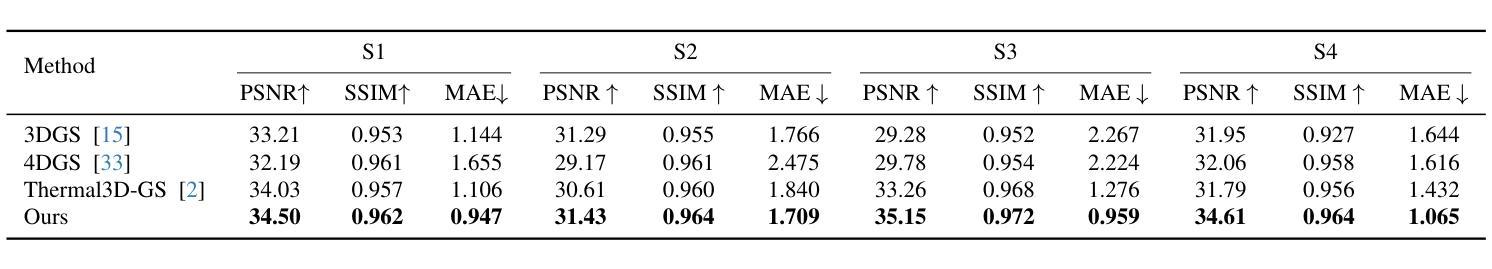

Thermal infrared imaging offers the advantage of all-weather capability, enabling non-intrusive measurement of an object’s surface temperature. Consequently, thermal infrared images are employed to reconstruct 3D models that accurately reflect the temperature distribution of a scene, aiding in applications such as building monitoring and energy management. However, existing approaches predominantly focus on static 3D reconstruction for a single time period, overlooking the impact of environmental factors on thermal radiation and failing to predict or analyze temperature variations over time. To address these challenges, we propose the NTR-Gaussian method, which treats temperature as a form of thermal radiation, incorporating elements like convective heat transfer and radiative heat dissipation. Our approach utilizes neural networks to predict thermodynamic parameters such as emissivity, convective heat transfer coefficient, and heat capacity. By integrating these predictions, we can accurately forecast thermal temperatures at various times throughout a nighttime scene. Furthermore, we introduce a dynamic dataset specifically for nighttime thermal imagery. Extensive experiments and evaluations demonstrate that NTR-Gaussian significantly outperforms comparison methods in thermal reconstruction, achieving a predicted temperature error within 1 degree Celsius.

Paper and project links

PDF IEEE Conference on Computer Vision and Pattern Recognition 2025

Summary

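Thermal infrared imaging works in all weather conditions and measures an object's surface temperature without contact. Thermal infrared images are therefore used to reconstruct 3D models that accurately reflect a scene's temperature distribution, supporting applications such as building monitoring and energy management. Existing methods, however, mainly address static 3D reconstruction for a single time period; they overlook how environmental factors affect thermal radiation and cannot predict or analyze how temperature changes over time. The paper proposes NTR-Gaussian, which treats temperature as a form of thermal radiation and incorporates convective heat transfer and radiative heat dissipation. Neural networks predict thermodynamic parameters such as emissivity, convective heat transfer coefficient, and heat capacity, and these predictions are combined to forecast temperatures at different times in a nighttime scene. The authors also introduce a dynamic dataset of nighttime thermal imagery. Experiments show that NTR-Gaussian significantly outperforms the comparison methods in thermal reconstruction, with a predicted temperature error within 1 degree Celsius.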

Key Takeaways

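- Thermal infrared imaging works in all weather conditions and measures surface temperature without contact.

- Thermal infrared images can be used to reconstruct 3D models that reflect a scene's temperature distribution, with applications in building monitoring and energy management.

- Existing methods mainly address static 3D reconstruction for a single time period and overlook how environmental factors affect temperature.

- NTR-Gaussian treats temperature as a form of thermal radiation and incorporates convective and radiative heat transfer.

- Neural networks predict thermodynamic parameters such as emissivity, convective heat transfer coefficient, and heat capacity.

- NTR-Gaussian can predict temperatures at different times in a nighttime scene.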
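
The physical ingredients named above (convective heat transfer, radiative heat dissipation, and per-surface emissivity, convective coefficient, and heat capacity) can be illustrated with a lumped energy balance. The sketch below is not the paper's model. It assumes a single surface element obeying `C * dT/dt = -h * (T - T_air) - eps * sigma * (T^4 - T_sky^4)`, integrated with explicit Euler, and every name and number in it (`simulate_cooling`, the air and sky temperatures, the parameter values) is a hypothetical choice for illustration. In NTR-Gaussian these parameters are predicted by neural networks rather than set by hand.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def simulate_cooling(t_surface_k, t_air_k, t_sky_k, emissivity,
                     h_conv, heat_capacity, dt_s, n_steps):
    """Explicit-Euler integration of an assumed lumped surface energy balance.

    heat_capacity is per unit area, in J/(m^2 K); h_conv is in W/(m^2 K).
    Returns the list of surface temperatures in kelvin, one per time step.
    """
    temps = [t_surface_k]
    t = t_surface_k
    for _ in range(n_steps):
        q_conv = h_conv * (t - t_air_k)                       # convective loss, W/m^2
        q_rad = emissivity * SIGMA * (t ** 4 - t_sky_k ** 4)  # net radiative loss, W/m^2
        t = t - dt_s * (q_conv + q_rad) / heat_capacity
        temps.append(t)
    return temps


# Hypothetical wall patch cooling over ten hours of night, one-minute steps.
temps = simulate_cooling(
    t_surface_k=300.0, t_air_k=285.0, t_sky_k=270.0,
    emissivity=0.9, h_conv=10.0, heat_capacity=2.0e5,
    dt_s=60.0, n_steps=600,
)
print(f"start: {temps[0]:.2f} K, end: {temps[-1]:.2f} K")
```

With these assumed values the surface cools monotonically toward an equilibrium between the sky and air temperatures. A learned model would in effect fit `emissivity`, `h_conv`, and `heat_capacity` per surface so that such a curve matches the observed thermal images.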

Deblur-Avatar: Animatable Avatars from Motion-Blurred Monocular Videos

Authors: Xianrui Luo, Juewen Peng, Zhongang Cai, Lei Yang, Fan Yang, Zhiguo Cao, Guosheng Lin

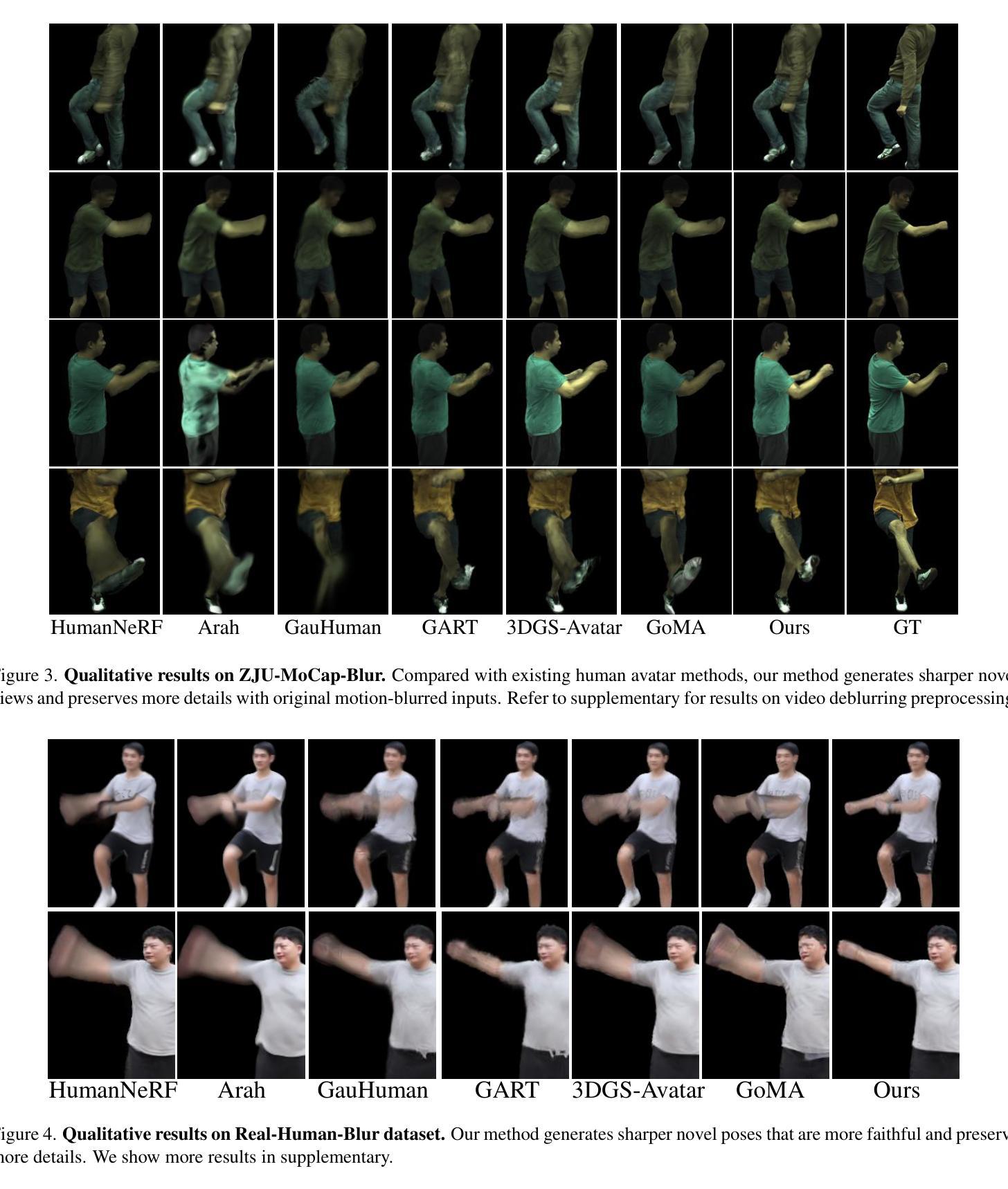

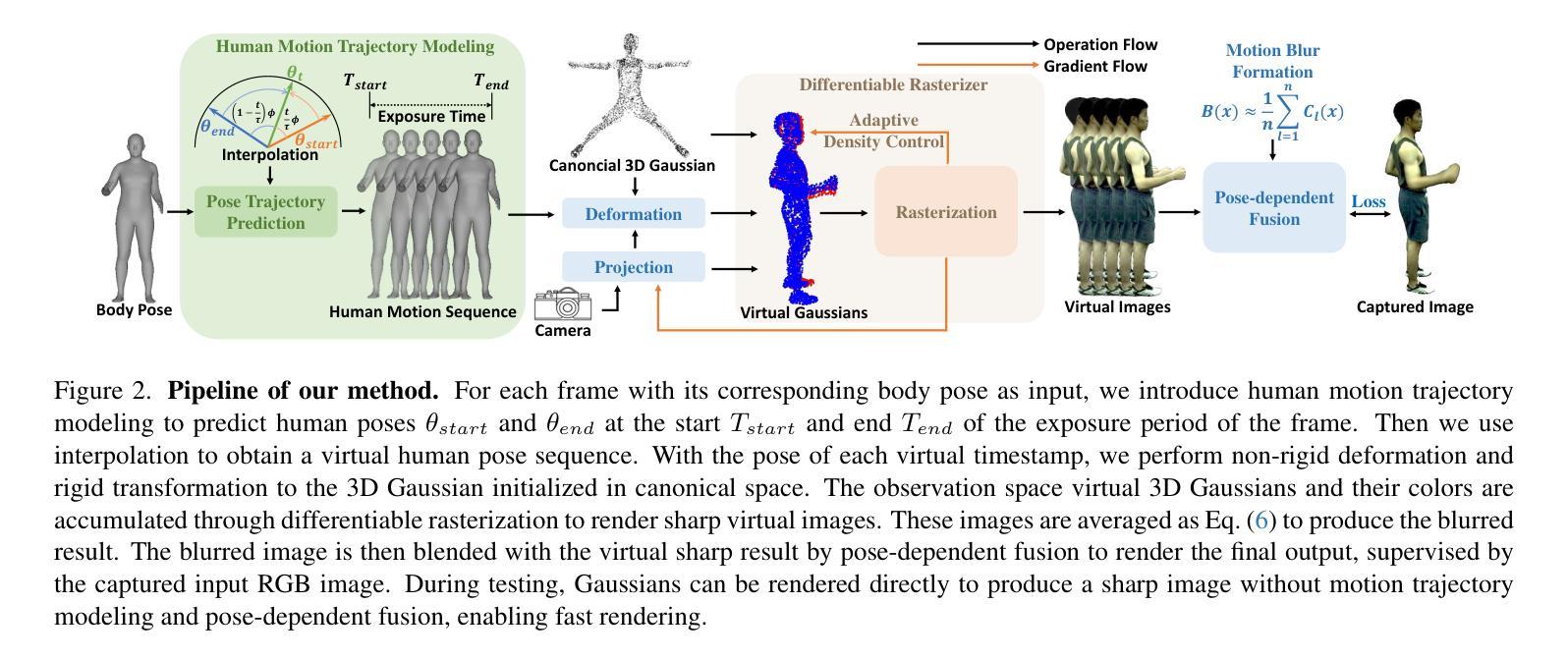

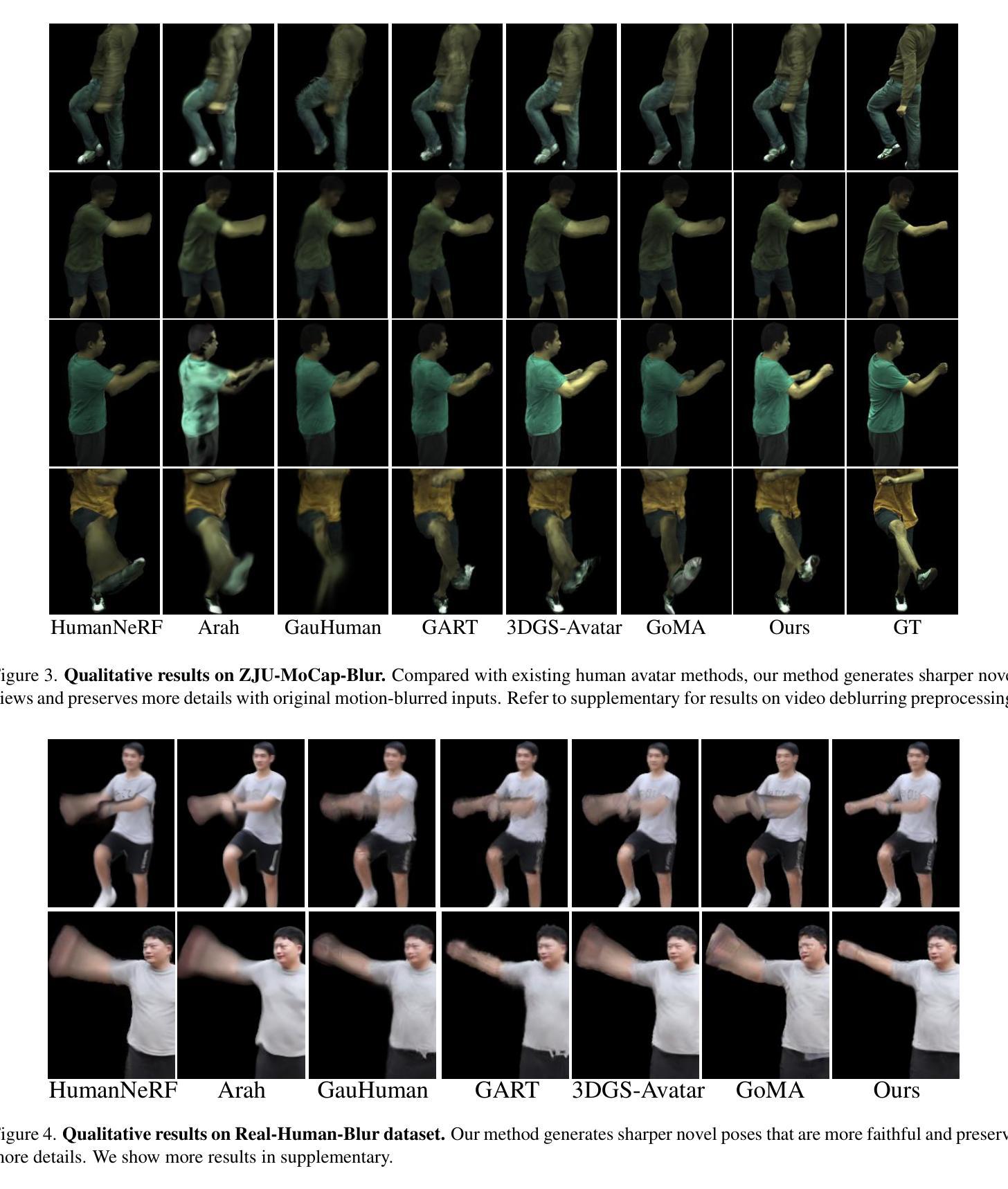

We introduce a novel framework for modeling high-fidelity, animatable 3D human avatars from motion-blurred monocular video inputs. Motion blur is prevalent in real-world dynamic video capture, especially due to human movements in 3D human avatar modeling. Existing methods either (1) assume sharp image inputs, failing to address the detail loss introduced by motion blur, or (2) mainly consider blur by camera movements, neglecting the human motion blur which is more common in animatable avatars. Our proposed approach integrates a human movement-based motion blur model into 3D Gaussian Splatting (3DGS). By explicitly modeling human motion trajectories during exposure time, we jointly optimize the trajectories and 3D Gaussians to reconstruct sharp, high-quality human avatars. We employ a pose-dependent fusion mechanism to distinguish moving body regions, optimizing both blurred and sharp areas effectively. Extensive experiments on synthetic and real-world datasets demonstrate that our method significantly outperforms existing methods in rendering quality and quantitative metrics, producing sharp avatar reconstructions and enabling real-time rendering under challenging motion blur conditions.

Summary

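A new modeling framework creates high-fidelity, animatable 3D human avatars from motion-blurred monocular video. The method integrates a blur model based on human movement into 3D Gaussian Splatting (3DGS). By explicitly modeling human motion trajectories during the exposure time, it jointly optimizes the trajectories and the 3D Gaussians to reconstruct sharp, high-quality avatars. A pose-dependent fusion mechanism distinguishes moving body regions so that both blurred and sharp areas are optimized effectively. Experiments show that the method significantly outperforms existing methods in rendering quality and quantitative metrics, producing sharp avatar reconstructions and enabling real-time rendering under challenging motion blur.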

Key Takeaways

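- The paper introduces a new framework that builds high-quality 3D human avatars from motion-blurred monocular video.

- The framework combines a motion blur model with 3D Gaussian Splatting (3DGS).

- Human motion trajectories during the exposure time are modeled explicitly, and the trajectories and 3D Gaussians are optimized jointly to reconstruct sharp avatars.

- A pose-dependent fusion mechanism distinguishes moving regions and improves the handling of both blurred and sharp areas.

- On synthetic and real-world datasets, the method outperforms existing methods in rendering quality and quantitative metrics.

- The method produces sharp avatar reconstructions and renders in real time under motion blur.

- It addresses a gap in how existing methods handle motion blur, particularly the human motion blur that is common in animatable avatar modeling.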
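
The idea of modeling motion during the exposure time can be illustrated with a common blur formation model: a blurred frame is the average of sharp renderings taken at several instants while the shutter is open. The sketch below assumes that model and is not the authors' implementation. The 1D "renderer", the linear trajectory, and the names `render_sharp` and `render_blurred` are hypothetical stand-ins for 3DGS rendering and for the human motion trajectories the paper optimizes.

```python
def render_sharp(position, width):
    """Toy 1D renderer: one bright pixel at the rounded position."""
    image = [0.0] * width
    idx = int(round(position))
    if 0 <= idx < width:
        image[idx] = 1.0
    return image


def render_blurred(start, end, width, n_samples):
    """Average sharp renders at evenly spaced instants across the exposure.

    The point is assumed to move linearly from `start` to `end`
    while the shutter is open.
    """
    acc = [0.0] * width
    for k in range(n_samples):
        alpha = k / (n_samples - 1) if n_samples > 1 else 0.0
        pos = (1.0 - alpha) * start + alpha * end
        sharp = render_sharp(pos, width)
        acc = [a + s for a, s in zip(acc, sharp)]
    return [a / n_samples for a in acc]


# A point moving from pixel 2 to pixel 6 smears its energy over five pixels.
print(render_blurred(2.0, 6.0, width=10, n_samples=5))
# A static point (start == end) stays sharp.
print(render_blurred(3.0, 3.0, width=10, n_samples=5))
```

Because the blurred frame is a differentiable function of both the trajectory and the scene in a real renderer, comparing it with the captured blurry frame lets an optimizer update the two together, which is the joint optimization the summary describes.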

3DGS.zip: A survey on 3D Gaussian Splatting Compression Methods

Authors: Milena T. Bagdasarian, Paul Knoll, Yi-Hsin Li, Florian Barthel, Anna Hilsmann, Peter Eisert, Wieland Morgenstern

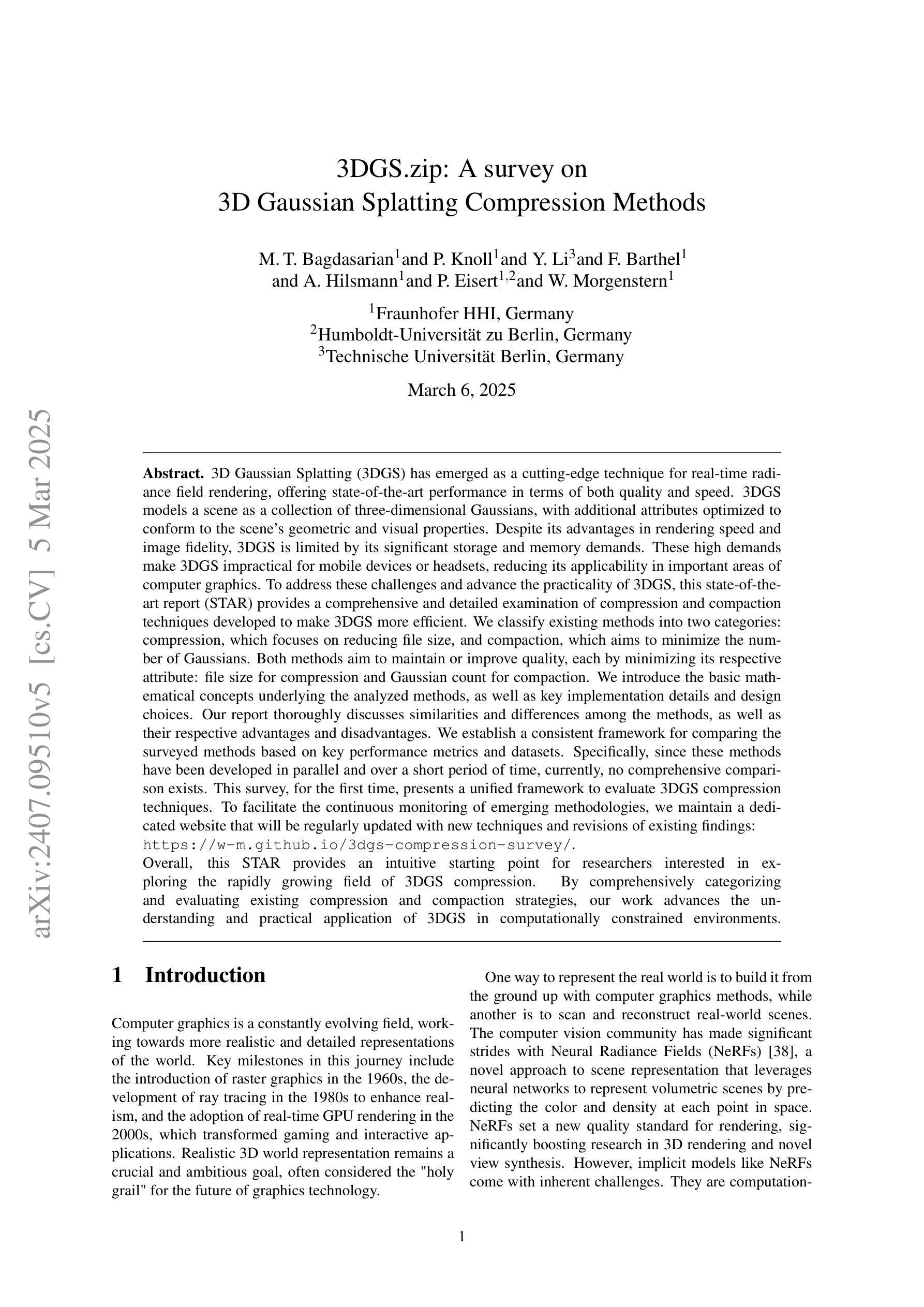

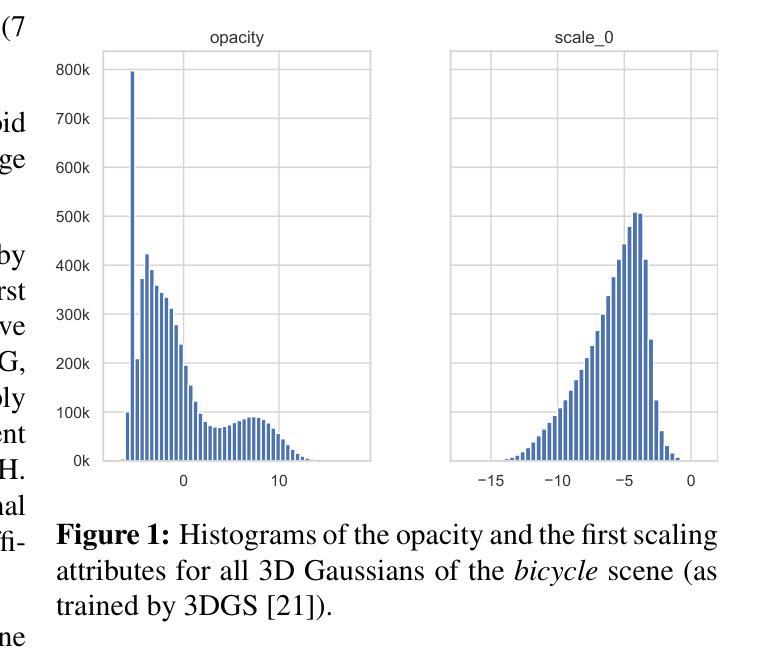

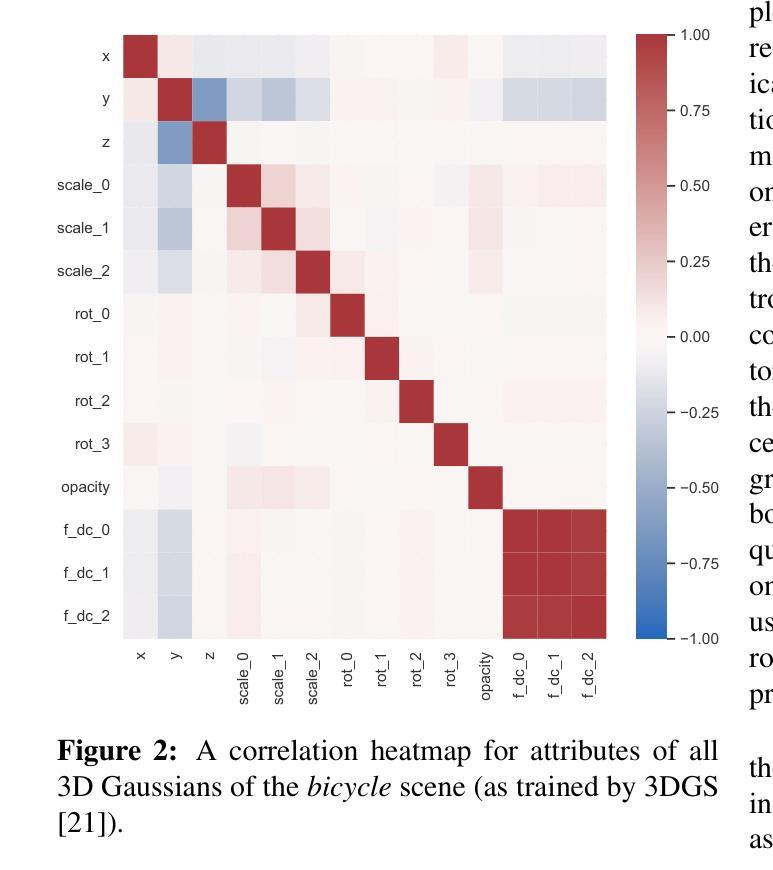

3D Gaussian Splatting (3DGS) has emerged as a cutting-edge technique for real-time radiance field rendering, offering state-of-the-art performance in terms of both quality and speed. 3DGS models a scene as a collection of three-dimensional Gaussians, with additional attributes optimized to conform to the scene’s geometric and visual properties. Despite its advantages in rendering speed and image fidelity, 3DGS is limited by its significant storage and memory demands. These high demands make 3DGS impractical for mobile devices or headsets, reducing its applicability in important areas of computer graphics. To address these challenges and advance the practicality of 3DGS, this survey provides a comprehensive and detailed examination of compression and compaction techniques developed to make 3DGS more efficient. We classify existing methods into two categories: compression, which focuses on reducing file size, and compaction, which aims to minimize the number of Gaussians. Both methods aim to maintain or improve quality, each by minimizing its respective attribute: file size for compression and Gaussian count for compaction. We introduce the basic mathematical concepts underlying the analyzed methods, as well as key implementation details and design choices. Our report thoroughly discusses similarities and differences among the methods, as well as their respective advantages and disadvantages. We establish a consistent framework for comparing the surveyed methods based on key performance metrics and datasets. Specifically, since these methods have been developed in parallel and over a short period of time, currently, no comprehensive comparison exists. This survey, for the first time, presents a unified framework to evaluate 3DGS compression techniques. We maintain a website that will be regularly updated with emerging methods: https://w-m.github.io/3dgs-compression-survey/ .

Paper and project links

PDF 3D Gaussian Splatting compression survey; 3DGS compression; updated discussion; new approaches added; new illustrations

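Summary

This survey covers 3D Gaussian Splatting (3DGS), a cutting-edge technique for real-time radiance field rendering. 3DGS models a scene as a collection of 3D Gaussians with additional attributes optimized to match the scene's geometric and visual properties, and it delivers high quality at high speed. Its heavy storage and memory demands, however, limit practical use on mobile devices and headsets. The survey examines the compression and compaction techniques developed to make 3DGS more efficient and divides existing methods into two categories: compression, which reduces file size, and compaction, which minimizes the number of Gaussians. It covers the underlying mathematical concepts, key implementation details, and design choices, and discusses the methods' similarities, advantages, and disadvantages. It also establishes a unified framework for comparing the surveyed methods on key performance metrics, which the authors present as the first comprehensive comparison of 3DGS compression techniques. A companion website is regularly updated with emerging methods: https://w-m.github.io/3dgs-compression-survey/.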

Key Takeaways

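- 3DGS is a cutting-edge technique for real-time radiance field rendering, offering both high quality and high speed.

- 3DGS models a scene as 3D Gaussians whose attributes are optimized to fit the scene's geometric and visual properties.

- High storage and memory demands limit the use of 3DGS on mobile devices and headsets.

- Compression and compaction techniques have been developed to make 3DGS more efficient.

- Compression focuses on reducing file size, while compaction reduces the number of Gaussians.

- The survey is the first to provide a unified framework for evaluating and analyzing 3DGS compression techniques.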
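
The distinction between the two categories can be made concrete with one toy operation from each. The sketch below is a generic illustration, not a method taken from the survey: it assumes opacity-threshold pruning as an example of compaction (fewer Gaussians) and uniform scalar quantization as an example of compression (fewer bits per attribute). The function names, the dictionary layout, and the thresholds are all hypothetical.

```python
def compact(gaussians, min_opacity):
    """Compaction: keep only Gaussians whose opacity reaches the threshold."""
    return [g for g in gaussians if g["opacity"] >= min_opacity]


def quantize(values, n_bits, lo, hi):
    """Compression: map floats in [lo, hi] to n_bits-bit integer codes."""
    levels = (1 << n_bits) - 1
    codes = []
    for v in values:
        clamped = min(max(v, lo), hi)
        codes.append(round((clamped - lo) / (hi - lo) * levels))
    return codes


def dequantize(codes, n_bits, lo, hi):
    """Recover approximate floats from the integer codes."""
    levels = (1 << n_bits) - 1
    return [lo + c / levels * (hi - lo) for c in codes]


# Hypothetical scene with three Gaussians, one nearly transparent.
scene = [
    {"opacity": 0.90, "scale": 0.123},
    {"opacity": 0.01, "scale": 0.500},
    {"opacity": 0.50, "scale": 1.000},
]
kept = compact(scene, min_opacity=0.05)            # reduces the Gaussian count
codes = quantize([g["scale"] for g in kept], 8, 0.0, 1.0)  # reduces bits per value
print(len(kept), codes, dequantize(codes, 8, 0.0, 1.0))
```

The two steps are independent and can be combined: compaction changes how many Gaussians exist, while compression changes how each remaining attribute is stored.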

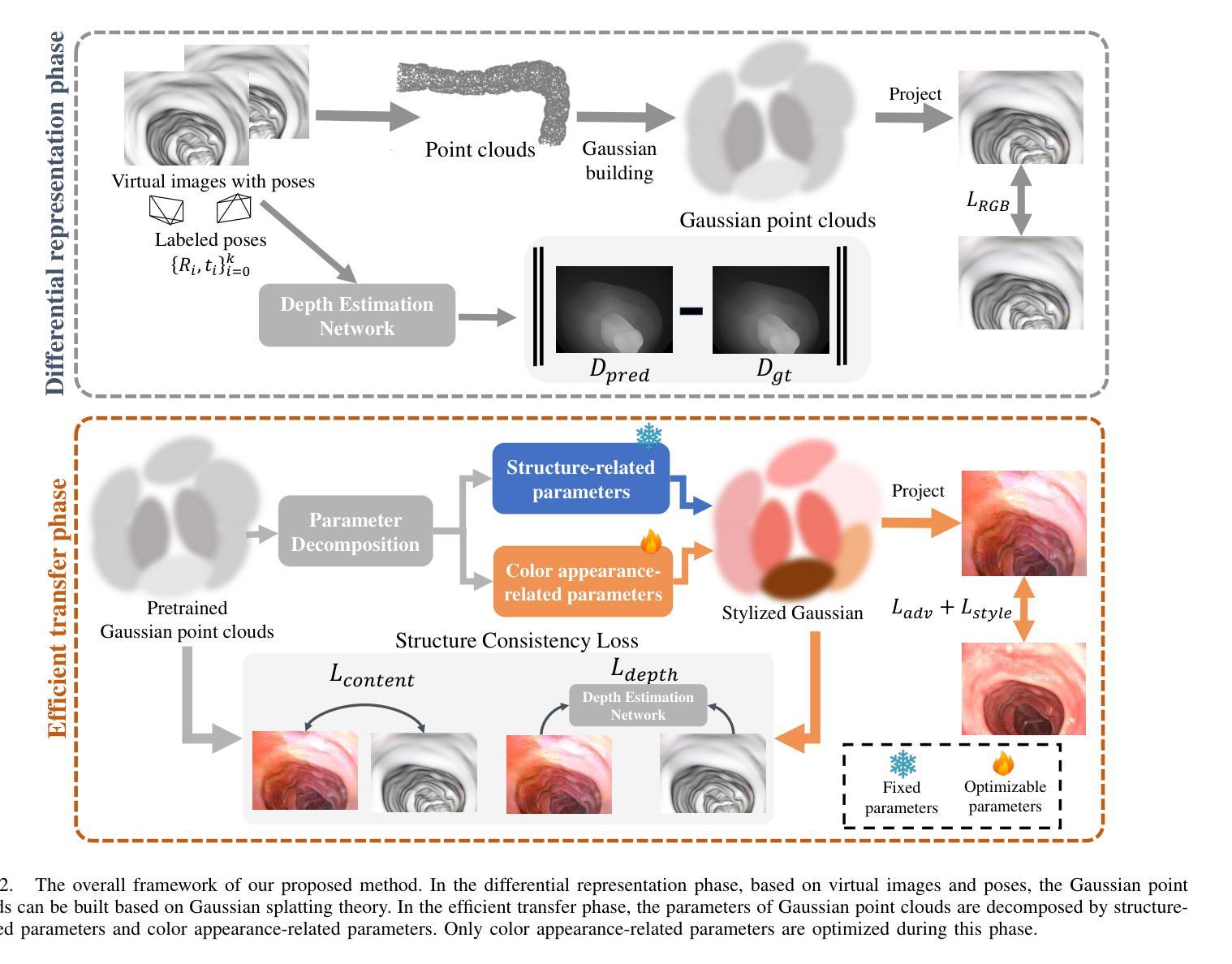

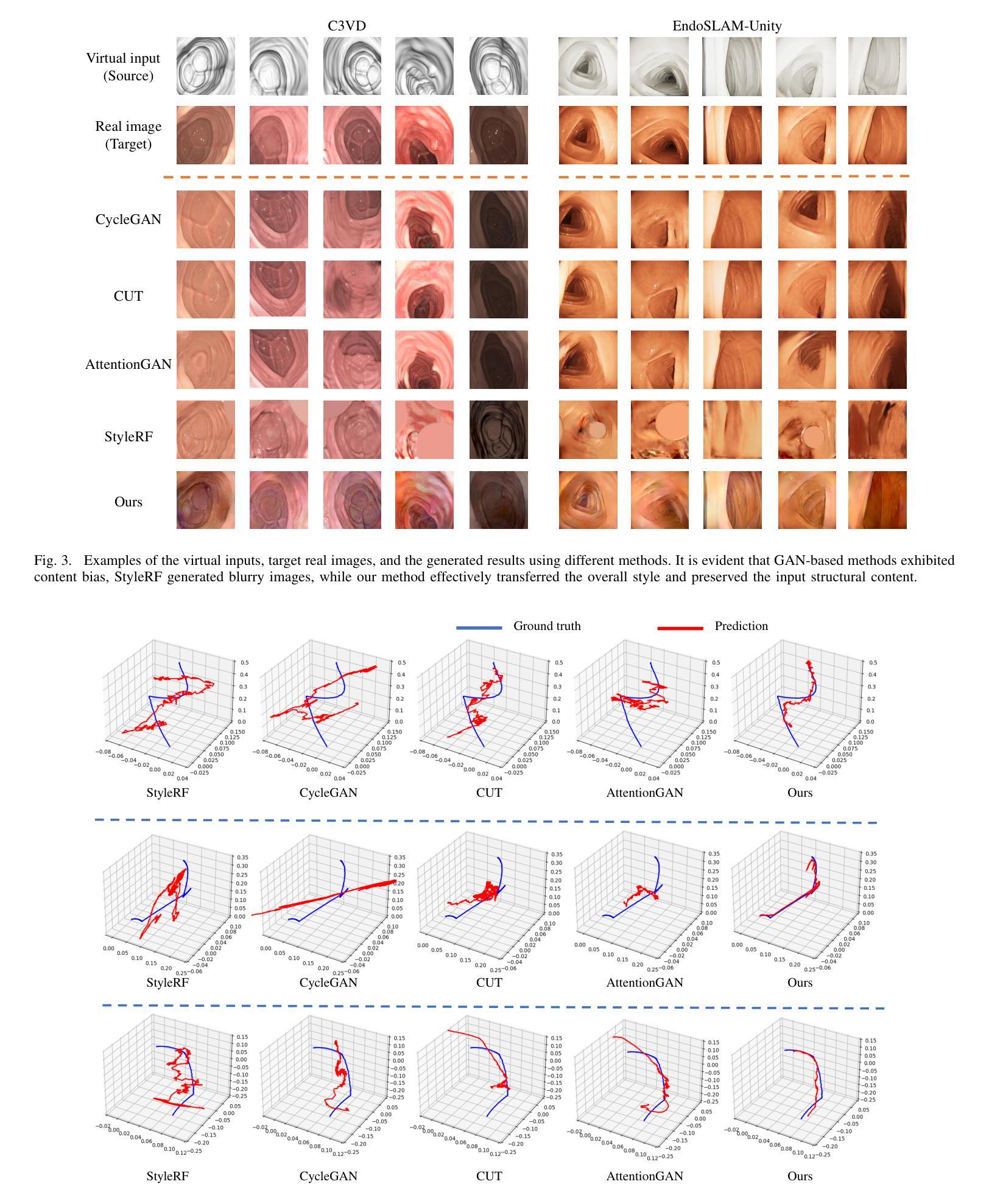

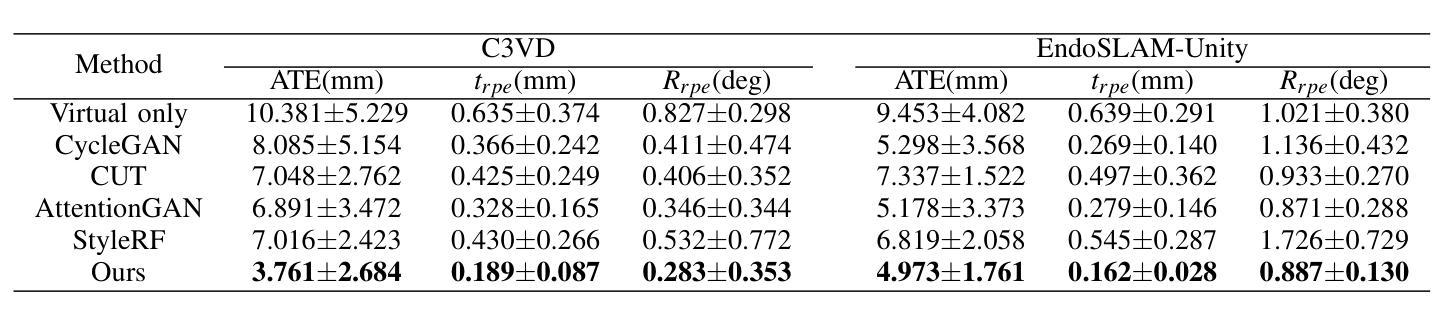

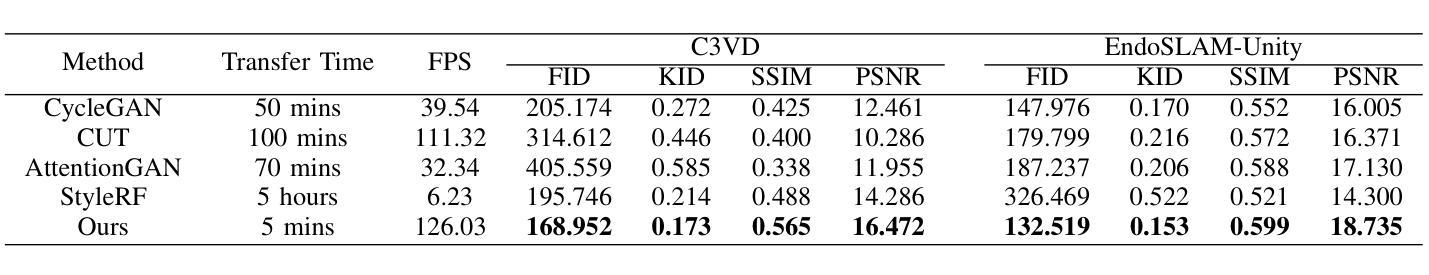

Sim2Real within 5 Minutes: Efficient Domain Transfer with Stylized Gaussian Splatting for Endoscopic Images

Authors: Junyang Wu, Yun Gu, Guang-Zhong Yang

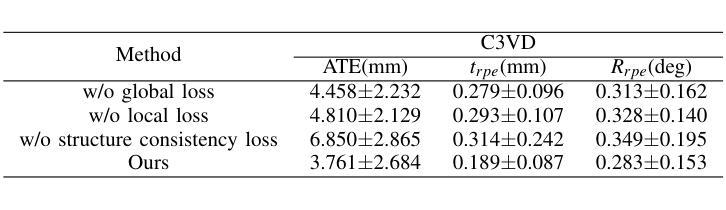

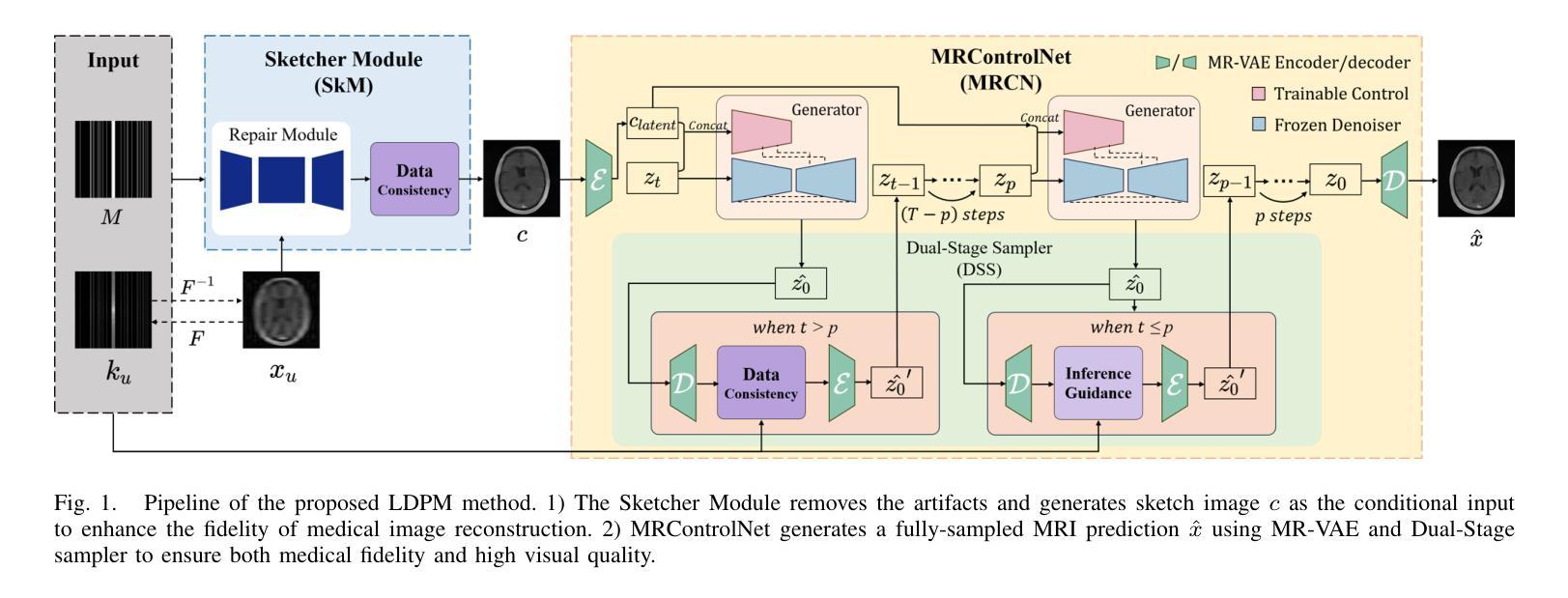

Robot assisted endoluminal intervention is an emerging technique for both benign and malignant luminal lesions. With vision-based navigation, when combined with pre-operative imaging data as priors, it is possible to recover position and pose of the endoscope without the need of additional sensors. In practice, however, aligning pre-operative and intra-operative domains is complicated by significant texture differences. Although methods such as style transfer can be used to address this issue, they require large datasets from both source and target domains with prolonged training times. This paper proposes an efficient domain transfer method based on stylized Gaussian splatting, only requiring a few of real images (10 images) with very fast training time. Specifically, the transfer process includes two phases. In the first phase, the 3D models reconstructed from CT scans are represented as differential Gaussian point clouds. In the second phase, only color appearance related parameters are optimized to transfer the style and preserve the visual content. A novel structure consistency loss is applied to latent features and depth levels to enhance the stability of the transferred images. Detailed validation was performed to demonstrate the performance advantages of the proposed method compared to that of the current state-of-the-art, highlighting the potential for intra-operative surgical navigation.

Paper and project links

PDF Accepted by ICRA 2025

Summary

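Robot-assisted endoluminal intervention is an emerging technique for both benign and malignant luminal lesions. Vision-based navigation, combined with pre-operative imaging data as priors, can recover the endoscope's position and pose without additional sensors. Aligning the pre-operative and intra-operative domains is complicated, however, by significant texture differences. Methods such as style transfer can address this, but they need large datasets from both the source and target domains and long training times. The paper proposes an efficient domain transfer method based on stylized Gaussian splatting that needs only a few real images (10 images) and trains very quickly. The method has two phases: the first represents 3D models reconstructed from CT scans as differential Gaussian point clouds, and the second optimizes only the color-appearance parameters to transfer style while preserving visual content. A novel structure consistency loss applied to latent features and depth levels improves the stability of the transferred images. Detailed validation shows performance advantages over the current state of the art and highlights the method's potential for intra-operative surgical navigation.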

Key Takeaways

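- Robot-assisted endoluminal intervention is an emerging technique for treating benign and malignant luminal lesions.

- Combined with pre-operative imaging data as priors, vision-based navigation can recover the endoscope's position and pose.

- Aligning the intra-operative and pre-operative domains is challenging because of texture differences.

- Style transfer methods require large datasets and long training times.

- The paper proposes an efficient domain transfer method based on stylized Gaussian splatting that needs only a few real images and trains quickly.

- The method has two phases: representing the 3D model as differential Gaussian point clouds, then optimizing only the color-appearance parameters to transfer style while preserving visual content.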
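
The second phase, in which geometry stays fixed and only appearance is optimized, can be sketched on a toy problem. The code below is an assumption-laden illustration and not the paper's pipeline: it uses a 1D "image", two points with fixed positions, normalized Gaussian blending weights, and plain gradient descent on a mean squared error. All names (`splat_weights`, `render`, `fit_colors`) are hypothetical, and the structure consistency loss described in the paper is not modeled.

```python
import math


def splat_weights(positions, sigma, width):
    """Fixed geometry: normalized Gaussian weight of each point at each pixel."""
    weights = []
    for x in range(width):
        row = [math.exp(-0.5 * ((x - p) / sigma) ** 2) for p in positions]
        total = sum(row)
        weights.append([w / total for w in row])
    return weights


def render(weights, colors):
    """Each pixel is the weighted blend of the point colors."""
    return [sum(w * c for w, c in zip(row, colors)) for row in weights]


def fit_colors(weights, colors, target, lr, n_steps):
    """Gradient descent on colors only; the weights (geometry) never change."""
    colors = list(colors)
    n_pixels = len(target)
    for _ in range(n_steps):
        pred = render(weights, colors)
        residual = [p - t for p, t in zip(pred, target)]
        grads = [
            2.0 * sum(r * row[j] for r, row in zip(residual, weights)) / n_pixels
            for j in range(len(colors))
        ]
        colors = [c - lr * g for c, g in zip(colors, grads)]
    return colors


# Geometry (as if from a pre-operative reconstruction) is frozen.
weights = splat_weights(positions=[2.0, 6.0], sigma=1.0, width=9)
# Hypothetical "real image" appearance to match.
target = render(weights, [0.8, 0.2])
fitted = fit_colors(weights, [0.5, 0.5], target, lr=0.5, n_steps=200)
print([round(c, 4) for c in fitted])
```

Restricting the update to the color parameters keeps the optimization small, which is consistent with the summary's point that only a few real images and a short training time are needed.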
