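⚠️ All summaries below are generated by a large language model. They may contain errors, are for reference only, and should be used with caution.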

🔴 Note: never use these summaries in serious academic work. They are only meant for screening papers before you read them!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-03-09

Enhancing Multimodal Medical Image Classification using Cross-Graph Modal Contrastive Learning

Authors:Jun-En Ding, Chien-Chin Hsu, Chi-Hsiang Chu, Shuqiang Wang, Feng Liu

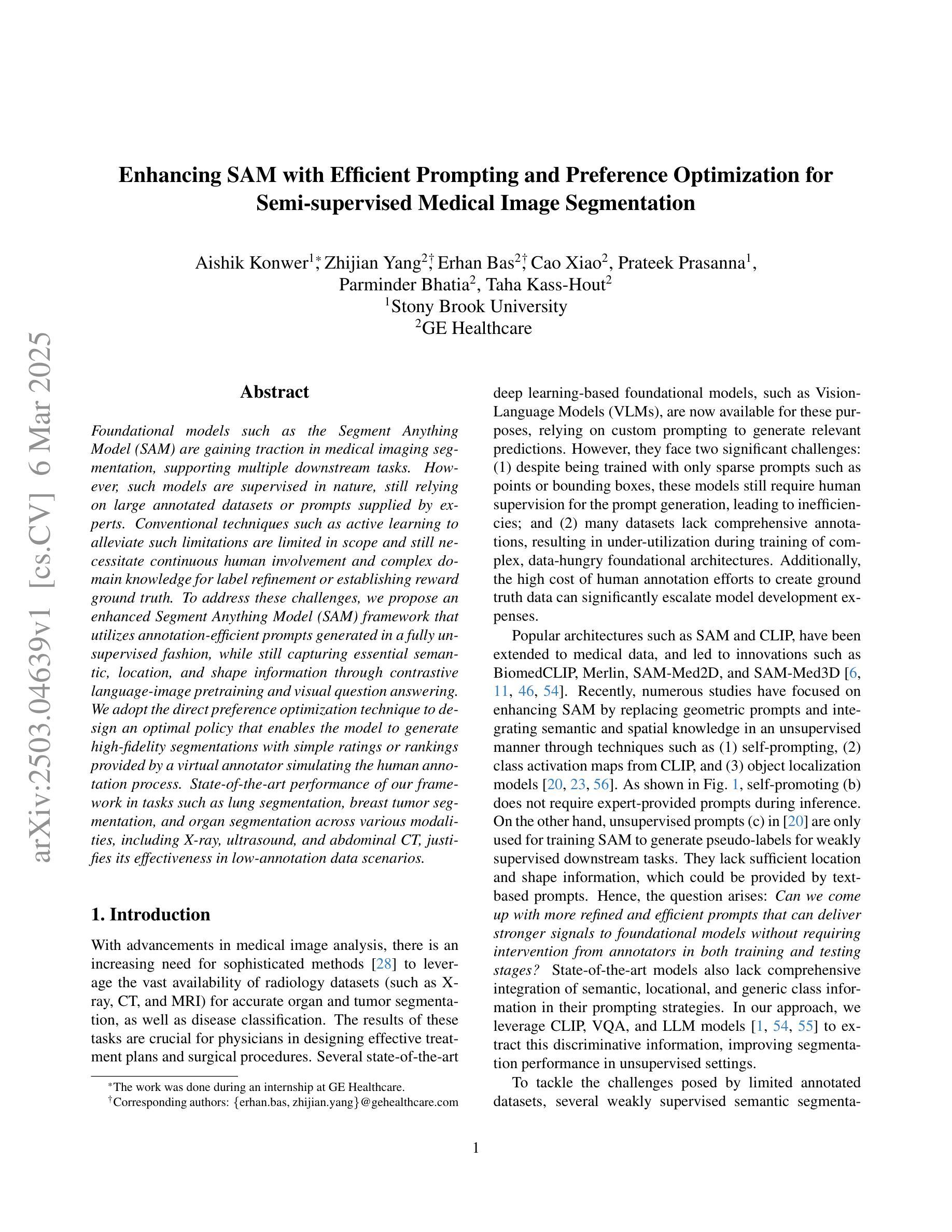

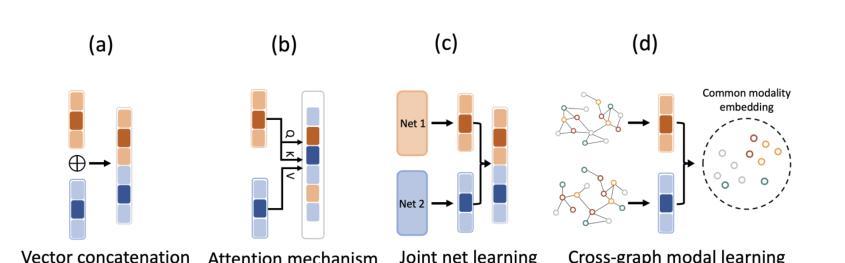

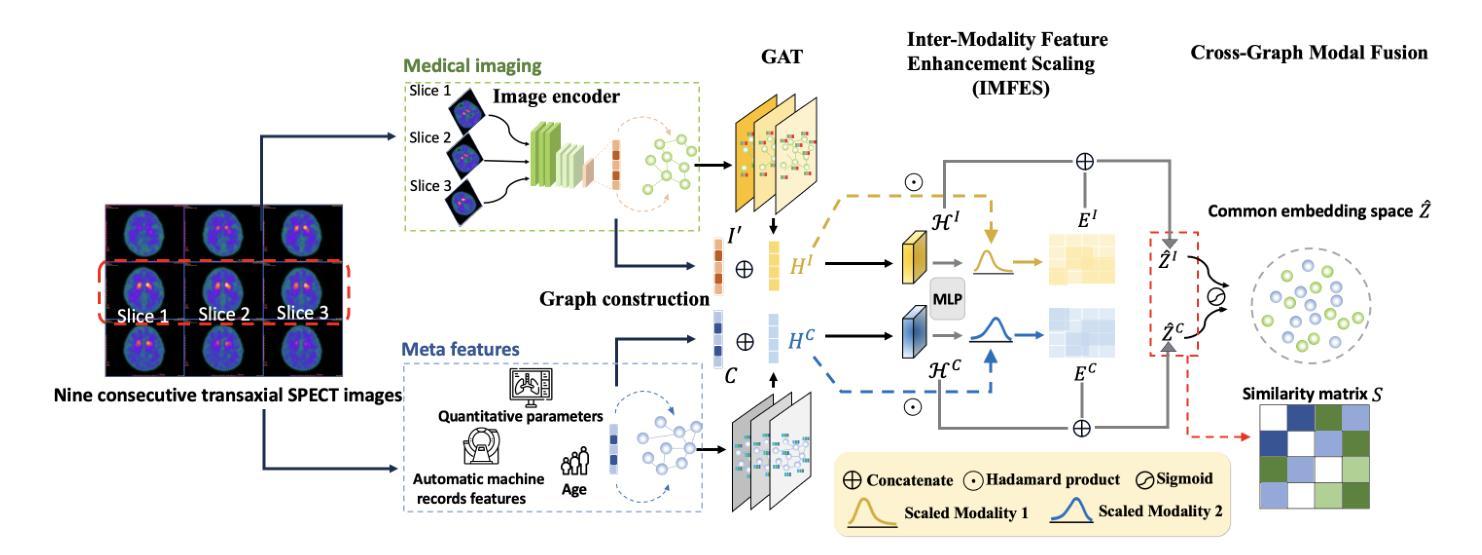

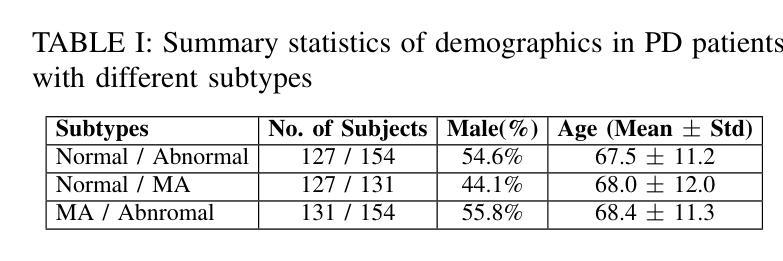

The classification of medical images is a pivotal aspect of disease diagnosis, often enhanced by deep learning techniques. However, traditional approaches typically focus on unimodal medical image data, neglecting the integration of diverse non-image patient data. This paper proposes a novel Cross-Graph Modal Contrastive Learning (CGMCL) framework for multimodal structured data from different data domains to improve medical image classification. The model effectively integrates both image and non-image data by constructing cross-modality graphs and leveraging contrastive learning to align multimodal features in a shared latent space. An inter-modality feature scaling module further optimizes the representation learning process by reducing the gap between heterogeneous modalities. The proposed approach is evaluated on two datasets: a Parkinson’s disease (PD) dataset and a public melanoma dataset. Results demonstrate that CGMCL outperforms conventional unimodal methods in accuracy, interpretability, and early disease prediction. Additionally, the method shows superior performance in multi-class melanoma classification. The CGMCL framework provides valuable insights into medical image classification while offering improved disease interpretability and predictive capabilities.
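The abstract says CGMCL aligns image and non-image features in a shared latent space with contrastive learning, but it does not give the loss. A common way to do this is a symmetric, CLIP-style InfoNCE loss. The NumPy sketch below shows that generic technique as an assumption, not the authors' objective: row i of each embedding matrix is assumed to come from the same patient (the positive pair), every other row in the batch is a negative, and the temperature of 0.1 is an arbitrary illustrative value.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row to unit L2 norm so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def cross_modal_info_nce(img_emb: np.ndarray, tab_emb: np.ndarray,
                         temperature: float = 0.1) -> float:
    """Symmetric InfoNCE (assumed form): row i of img_emb and row i of tab_emb
    are a positive pair; all other rows in the batch act as negatives."""
    z_img = l2_normalize(img_emb)
    z_tab = l2_normalize(tab_emb)
    logits = z_img @ z_tab.T / temperature             # (N, N) similarity matrix
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> non-image
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # non-image -> image
    return float((loss_i2t + loss_t2i) / 2)


rng = np.random.default_rng(0)
img = rng.normal(size=(8, 16))                      # hypothetical image embeddings
aligned = img + 0.05 * rng.normal(size=(8, 16))     # well-aligned non-image embeddings
shuffled = rng.normal(size=(8, 16))                 # unrelated non-image embeddings
print(cross_modal_info_nce(img, aligned), cross_modal_info_nce(img, shuffled))
```

Well-aligned pairs give a much lower loss than unrelated ones, which is the signal that pulls the two modalities together in the shared space.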



Summary

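This study proposes a novel Cross-Graph Modal Contrastive Learning (CGMCL) framework that fuses multimodal structured data across domains to improve medical image classification. The framework integrates image and non-image data by constructing cross-modality graphs and using contrastive learning to align multimodal features. On a Parkinson's disease dataset and a public melanoma dataset, CGMCL outperforms conventional unimodal methods in accuracy, interpretability, and early disease prediction, and it also performs strongly in multi-class melanoma classification.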

Key Takeaways

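- This study introduces the Cross-Graph Modal Contrastive Learning (CGMCL) framework to improve medical image classification.

- CGMCL integrates image and non-image data by constructing cross-modality graphs and using contrastive learning to align multimodal features.

- CGMCL includes an inter-modality feature scaling module that optimizes representation learning across heterogeneous modalities.

- On the Parkinson's disease and public melanoma datasets, CGMCL outperforms conventional unimodal methods in accuracy, interpretability, and early disease prediction.

- CGMCL performs strongly on multi-class melanoma classification.

- The study highlights the importance of multimodal data fusion for improving medical image classification.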
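How CGMCL actually builds its cross-modality graphs is not described in this summary. The sketch below is one hypothetical reading, not the paper's method: patient i is linked to the k patients whose non-image embeddings are most similar (by cosine) to i's image embedding, and node features are then smoothed with one weight-free GCN step, D^{-1/2}(A + I)D^{-1/2}X. The function names, k = 2, and the assumption that both modalities are already projected to the same dimension are all illustrative.

```python
import numpy as np


def cosine_sim(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def cross_modal_knn_graph(img_feat: np.ndarray, tab_feat: np.ndarray, k: int = 2) -> np.ndarray:
    """Hypothetical cross-modality graph: patient i is linked to the k patients j
    whose non-image features are closest to i's image features.
    Returns a symmetric 0/1 adjacency matrix with no self-loops (requires k < N)."""
    sim = cosine_sim(img_feat, tab_feat)
    np.fill_diagonal(sim, -np.inf)                 # never pick the patient itself
    n = sim.shape[0]
    nearest = np.argsort(-sim, axis=1)[:, :k]      # top-k neighbour indices per row
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), nearest.ravel()] = 1.0
    return np.maximum(adj, adj.T)                  # make edges undirected


def gcn_propagate(adj: np.ndarray, feats: np.ndarray) -> np.ndarray:
    """One weight-free GCN step: D^{-1/2} (A + I) D^{-1/2} X."""
    a_hat = adj + np.eye(adj.shape[0])             # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    norm_adj = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    return norm_adj @ feats


rng = np.random.default_rng(1)
img = rng.normal(size=(6, 8))                      # image embeddings, one row per patient
tab = img + 0.1 * rng.normal(size=(6, 8))          # non-image embeddings in the same space
adj = cross_modal_knn_graph(img, tab, k=2)
fused = gcn_propagate(adj, np.hstack([img, tab]))  # smooth the concatenated features
print(adj.sum(axis=1), fused.shape)
```

Symmetrizing the kNN edges keeps the normalized adjacency symmetric, which is the usual assumption behind the D^{-1/2}(A + I)D^{-1/2} propagation rule.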


DRACO-DehazeNet: An Efficient Image Dehazing Network Combining Detail Recovery and a Novel Contrastive Learning Paradigm

Authors:Gao Yu Lee, Tanmoy Dam, Md Meftahul Ferdaus, Daniel Puiu Poenar, Vu Duong

Image dehazing is crucial for clarifying images obscured by haze or fog, but current learning-based approaches depend on large volumes of training data and hence consume significant computational power. Additionally, their performance is often inadequate under non-uniform or heavy haze. To address these challenges, we developed the Detail Recovery And Contrastive DehazeNet (DRACO-DehazeNet), which performs efficient and effective dehazing via a dense dilated inverted residual block and an attention-based detail recovery network that tailors enhancements to specific dehazed scene contexts. A major innovation is its ability to train effectively with limited data, achieved through a novel quadruplet loss-based contrastive dehazing paradigm. This approach distinctly separates hazy and clear image features while also distinguishing lower-quality from higher-quality dehazed images produced by each sub-module of our network, thereby further refining the dehazing process. Extensive tests on a variety of benchmark haze datasets demonstrated the superiority of our approach. The code repository for this work is available at https://github.com/GreedYLearner1146/DRACO-DehazeNet.
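The abstract names a quadruplet loss-based contrastive paradigm but does not give its formula. Below is the standard quadruplet loss from metric learning, [d(a,p) - d(a,n1) + m1]_+ + [d(a,p) - d(n1,n2) + m2]_+ with squared Euclidean distance d, paired with a hypothetical mapping to dehazing: anchor = features of the final dehazed output, positive = clear ground truth, n1 = hazy input, n2 = a lower-quality dehazed output from an earlier sub-module. The margins, the mapping, and the random stand-in features are assumptions, not DRACO-DehazeNet's actual loss; the linked repository is the authoritative reference.

```python
import numpy as np


def sq_dist(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Row-wise squared Euclidean distance between two (N, D) feature batches."""
    return ((a - b) ** 2).sum(axis=1)


def quadruplet_loss(anchor, positive, neg1, neg2, margin1=1.0, margin2=0.5) -> float:
    """Standard quadruplet loss, averaged over the batch:
    [d(a,p) - d(a,n1) + m1]_+  +  [d(a,p) - d(n1,n2) + m2]_+"""
    d_ap = sq_dist(anchor, positive)
    term1 = np.maximum(0.0, d_ap - sq_dist(anchor, neg1) + margin1)  # pull a to p, push from n1
    term2 = np.maximum(0.0, d_ap - sq_dist(neg1, neg2) + margin2)    # keep n1 and n2 apart too
    return float((term1 + term2).mean())


# Hypothetical feature vectors (e.g. from a frozen encoder), one row per image.
rng = np.random.default_rng(2)
clear = rng.normal(size=(4, 32))                        # positive: haze-free ground truth
good_dehazed = clear + 0.05 * rng.normal(size=(4, 32))  # anchor: final network output
hazy = clear + 2.0 * rng.normal(size=(4, 32))           # negative 1: hazy input
poor_dehazed = clear + 1.0 * rng.normal(size=(4, 32))   # negative 2: weaker sub-module output
print(quadruplet_loss(good_dehazed, clear, hazy, poor_dehazed))
```

When the anchor sits close to the clear features and far from both negatives, both hinge terms are inactive; if the anchor collapses onto the hazy input, the first term becomes positive and produces a training signal.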


Paper and project links

Once the paper is accepted and published, the copyright will be transferred to the corresponding journal.

Summary

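This paper presents a new image dehazing method, the Detail Recovery And Contrastive DehazeNet (DRACO-DehazeNet). It achieves efficient and effective dehazing through a dense dilated inverted residual block and an attention-based detail recovery network. Its key innovation is that it trains effectively even with limited data, thanks to a quadruplet loss-based contrastive dehazing paradigm. The method separates hazy from clear image features and also distinguishes lower-quality from higher-quality dehazed images produced by the network's sub-modules, refining the dehazing process further. Extensive tests on several benchmark haze datasets demonstrate its effectiveness.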
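For readers unfamiliar with the building block, here is a minimal NumPy sketch of a generic inverted residual block (MobileNetV2-style: 1x1 expansion, 3x3 depthwise convolution, 1x1 linear projection, identity shortcut) whose depthwise convolution is dilated to enlarge the receptive field without adding parameters. It assumes a single (C, H, W) image, ReLU6 activations, and zero padding equal to the dilation; the dense connectivity, normalization layers, and exact configuration used in DRACO-DehazeNet are not described here and are omitted.

```python
import numpy as np


def relu6(x: np.ndarray) -> np.ndarray:
    """ReLU6 activation, as used in MobileNetV2-style inverted residual blocks."""
    return np.clip(x, 0.0, 6.0)


def depthwise_dilated_conv(x: np.ndarray, kernels: np.ndarray, dilation: int) -> np.ndarray:
    """Per-channel 3x3 cross-correlation with the given dilation.
    x: (C, H, W); kernels: (C, 3, 3). Zero padding of `dilation` keeps H and W."""
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (dilation, dilation), (dilation, dilation)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(3):
        for j in range(3):
            # Tap (i, j) reads the input offset by ((i - 1) * dilation, (j - 1) * dilation).
            window = xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + w]
            out += kernels[:, i, j][:, None, None] * window
    return out


def dilated_inverted_residual(x, w_expand, dw_kernels, w_project, dilation=2):
    """x: (C, H, W); w_expand: (C*t, C); dw_kernels: (C*t, 3, 3); w_project: (C, C*t)."""
    h = relu6(np.einsum("oc,chw->ohw", w_expand, x))            # 1x1 expansion to C*t channels
    h = relu6(depthwise_dilated_conv(h, dw_kernels, dilation))  # dilated 3x3 depthwise conv
    h = np.einsum("co,ohw->chw", w_project, h)                  # 1x1 linear projection back to C
    return x + h                                                # identity shortcut (shapes match)


rng = np.random.default_rng(3)
C, t = 4, 2                                                     # channels and expansion factor
x = rng.normal(size=(C, 10, 10))
y = dilated_inverted_residual(
    x,
    rng.normal(size=(C * t, C)),
    rng.normal(size=(C * t, 3, 3)),
    rng.normal(size=(C, C * t)),
    dilation=2,
)
print(y.shape)  # (4, 10, 10)
```

With dilation 2, each 3x3 depthwise kernel covers a 5x5 neighbourhood, which is the usual motivation for dilating convolutions in dehazing networks that need wider spatial context.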

Key Takeaways

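- DRACO-DehazeNet (Detail Recovery And Contrastive DehazeNet) is an effective image dehazing method.

- It achieves efficient dehazing through a dense dilated inverted residual block and an attention-based detail recovery network.

- It remains effective even when training data is limited.

- The contrastive dehazing paradigm helps the network separate hazy and clear image features.

- The network distinguishes lower-quality from higher-quality dehazed images produced by its different sub-modules.

- Tests on multiple benchmark haze datasets demonstrate the method's superiority.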
