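⚠️ All summaries below were generated by a large language model and may contain errors. They are for reference only; use them with caution.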

🔴 Note: never use this in serious academic settings. It is intended only for initial screening before reading the papers!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-03-10

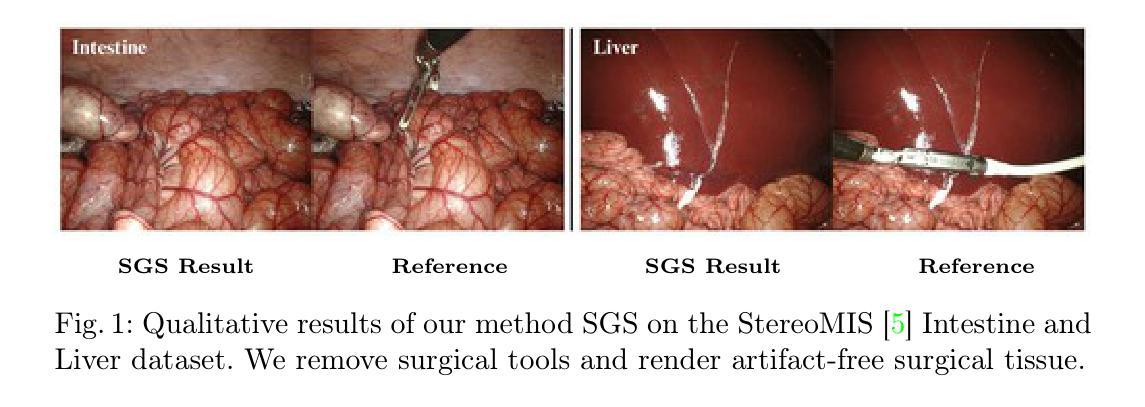

Surgical Gaussian Surfels: Highly Accurate Real-time Surgical Scene Rendering

Authors: Idris O. Sunmola, Zhenjun Zhao, Samuel Schmidgall, Yumeng Wang, Paul Maria Scheikl, Axel Krieger

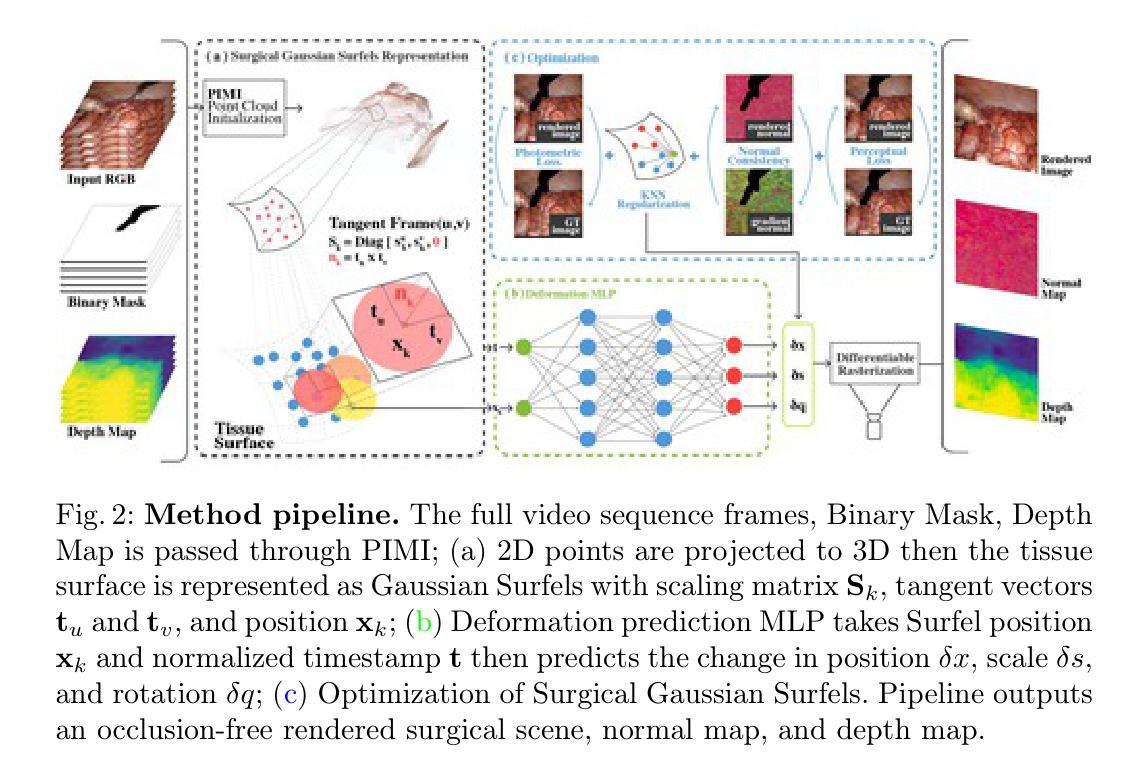

Accurate geometric reconstruction of deformable tissues in monocular endoscopic video remains a fundamental challenge in robot-assisted minimally invasive surgery. Although recent volumetric and point primitive methods based on neural radiance fields (NeRF) and 3D Gaussian primitives have efficiently rendered surgical scenes, they still struggle with handling artifact-free tool occlusions and preserving fine anatomical details. These limitations stem from unrestricted Gaussian scaling and insufficient surface alignment constraints during reconstruction. To address these issues, we introduce Surgical Gaussian Surfels (SGS), which transforms anisotropic point primitives into surface-aligned elliptical splats by constraining the scale component of the Gaussian covariance matrix along the view-aligned axis. We predict accurate surfel motion fields using a lightweight Multi-Layer Perceptron (MLP) coupled with locality constraints to handle complex tissue deformations. We use homodirectional view-space positional gradients to capture fine image details by splitting Gaussian Surfels in over-reconstructed regions. In addition, we define surface normals as the direction of the steepest density change within each Gaussian surfel primitive, enabling accurate normal estimation without requiring monocular normal priors. We evaluate our method on two in-vivo surgical datasets, where it outperforms current state-of-the-art methods in surface geometry, normal map quality, and rendering efficiency, while remaining competitive in real-time rendering performance. We make our code available at https://github.com/aloma85/SurgicalGaussianSurfels
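The surfel construction described above can be illustrated with a minimal NumPy sketch. It rests on two assumptions, neither confirmed by the abstract:

- As in earlier Gaussian-surfel work, the scale constraint collapses one axis of the covariance Sigma = R S S^T R^T to (near) zero.
- "Direction of steepest density change" means the flattened, smallest-variance axis of each surfel.

The helpers `quat_to_rotmat`, `surfel_covariance`, and `surfel_normal` are hypothetical and are not taken from the SGS repository.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix (normalized first)."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def surfel_covariance(q, s1, s2, eps=0.0):
    """Assumed surfel covariance R diag(s1^2, s2^2, eps^2) R^T.

    The third scale is clamped to eps (0 by default), flattening the
    3D Gaussian into an elliptical disc spanned by R's first two columns.
    """
    R = quat_to_rotmat(q)
    S = np.diag([s1, s2, eps])
    return R @ S @ S @ R.T

def surfel_normal(cov):
    """Unit normal taken as the eigenvector with the smallest variance.

    Across a flattened Gaussian, density falls off fastest along this axis.
    """
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues come back in ascending order
    return eigvecs[:, 0]
```

With the identity rotation the normal is the z-axis. After a 90-degree rotation about x, the flattened axis maps to the y-axis. The sign of the returned eigenvector is arbitrary; a renderer would typically orient it toward the camera.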


Paper and project links

Summary

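Accurate geometric reconstruction of deformable tissue in robot-assisted minimally invasive surgery remains challenging for existing volumetric and point-primitive methods based on neural radiance fields (NeRF) and 3D Gaussians. This paper proposes Surgical Gaussian Surfels (SGS) to address two problems: artifacts caused by tool occlusion and poor preservation of fine anatomical detail. SGS constrains the scale component of the Gaussian covariance matrix along the view-aligned axis, turning anisotropic point primitives into surface-aligned elliptical splats. A lightweight multi-layer perceptron (MLP) with locality constraints predicts accurate surfel motion fields to handle complex tissue deformation. Homodirectional view-space positional gradients guide the splitting of surfels in over-reconstructed regions, which captures fine image detail. The surface normal is defined as the direction of steepest density change within each Gaussian surfel, enabling accurate normal estimation without monocular normal priors. Experiments on two in-vivo surgical datasets show that the method outperforms state-of-the-art methods in surface geometry, normal map quality, and rendering efficiency, while remaining competitive in real-time rendering performance.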
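The abstract does not specify the locality constraint on the MLP-predicted motion field. One common form is assumed here purely for illustration: penalize neighbouring surfels whose predicted displacements differ, weighting each pair by how close the two surfels are at rest. This brute-force NumPy sketch is not SGS's actual loss, and `knn_indices` and `locality_loss` are hypothetical names.

```python
import numpy as np

def knn_indices(points, k):
    """Brute-force indices of the k nearest neighbours of each point (excluding itself)."""
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    np.fill_diagonal(d2, np.inf)
    return np.argsort(d2, axis=1)[:, :k]

def locality_loss(rest_positions, displacements, k=4, sigma=0.1):
    """Hypothetical locality regularizer on per-surfel displacements.

    Weighted mean of ||d_i - d_j||^2 over each surfel's k nearest rest-pose
    neighbours j, with weights exp(-||x_i - x_j||^2 / (2 sigma^2)).
    """
    idx = knn_indices(rest_positions, k)
    dx = rest_positions[:, None, :] - rest_positions[idx]
    w = np.exp(-np.sum(dx ** 2, axis=-1) / (2 * sigma ** 2))
    dd = displacements[:, None, :] - displacements[idx]
    return float(np.sum(w * np.sum(dd ** 2, axis=-1)) / np.sum(w))
```

Under this loss a rigid translation of all surfels costs nothing. Incoherent per-surfel motion is penalized, which is the kind of behaviour a locality constraint on a deformation MLP is meant to discourage.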

Key Takeaways

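- Volumetric and point-primitive methods based on NeRF and 3D Gaussians still struggle to reconstruct accurate geometry of deformable tissue in robot-assisted minimally invasive surgery.

- Surgical Gaussian Surfels (SGS) targets tool-occlusion artifacts and the loss of fine anatomical detail. The authors attribute both to unrestricted Gaussian scaling and insufficient surface-alignment constraints.

- By constraining the scale of the Gaussian covariance along the view-aligned axis, SGS converts anisotropic point primitives into surface-aligned elliptical splats, yielding more accurate geometry.

- A lightweight MLP with locality constraints predicts surfel motion fields to handle complex tissue deformation.

- Homodirectional view-space positional gradients drive the splitting of surfels in over-reconstructed regions, capturing fine image detail.

- The normal is defined as the direction of steepest density change within each surfel, which gives accurate normal estimates without monocular normal priors.

- On two in-vivo surgical datasets, SGS outperforms state-of-the-art methods in surface geometry, normal map quality, and rendering efficiency, while remaining competitive in real-time rendering performance.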
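The homodirectional view-space positional gradient appears to follow the AbsGS idea, though this is an assumption. Standard densification sums signed per-pixel gradients, and opposite signs can cancel, hiding under-fitted regions. The homodirectional variant sums the absolute value of each component before taking the norm. The sketch below contrasts the two criteria. The function names and threshold are hypothetical.

```python
import numpy as np

def standard_grad_norm(pixel_grads):
    """Norm of the summed signed per-pixel view-space gradients; opposite signs cancel."""
    return float(np.linalg.norm(pixel_grads.sum(axis=0)))

def homodirectional_grad_norm(pixel_grads):
    """Norm after summing the absolute value of each gradient component, so nothing cancels."""
    return float(np.linalg.norm(np.abs(pixel_grads).sum(axis=0)))

def should_split(pixel_grads, threshold):
    """Hypothetical densification test: split the surfel if the homodirectional norm exceeds threshold."""
    return homodirectional_grad_norm(pixel_grads) > threshold

# Four pixels pulling a surfel's 2D position in opposite directions.
grads = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 0.5], [0.0, -0.5]])
```

For `grads`, the standard norm is 0, so the surfel would never be split. The homodirectional norm is sqrt(5), so the conflict is detected and the surfel is split.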


LensDFF: Language-enhanced Sparse Feature Distillation for Efficient Few-Shot Dexterous Manipulation

Authors: Qian Feng, David S. Martinez Lema, Jianxiang Feng, Zhaopeng Chen, Alois Knoll

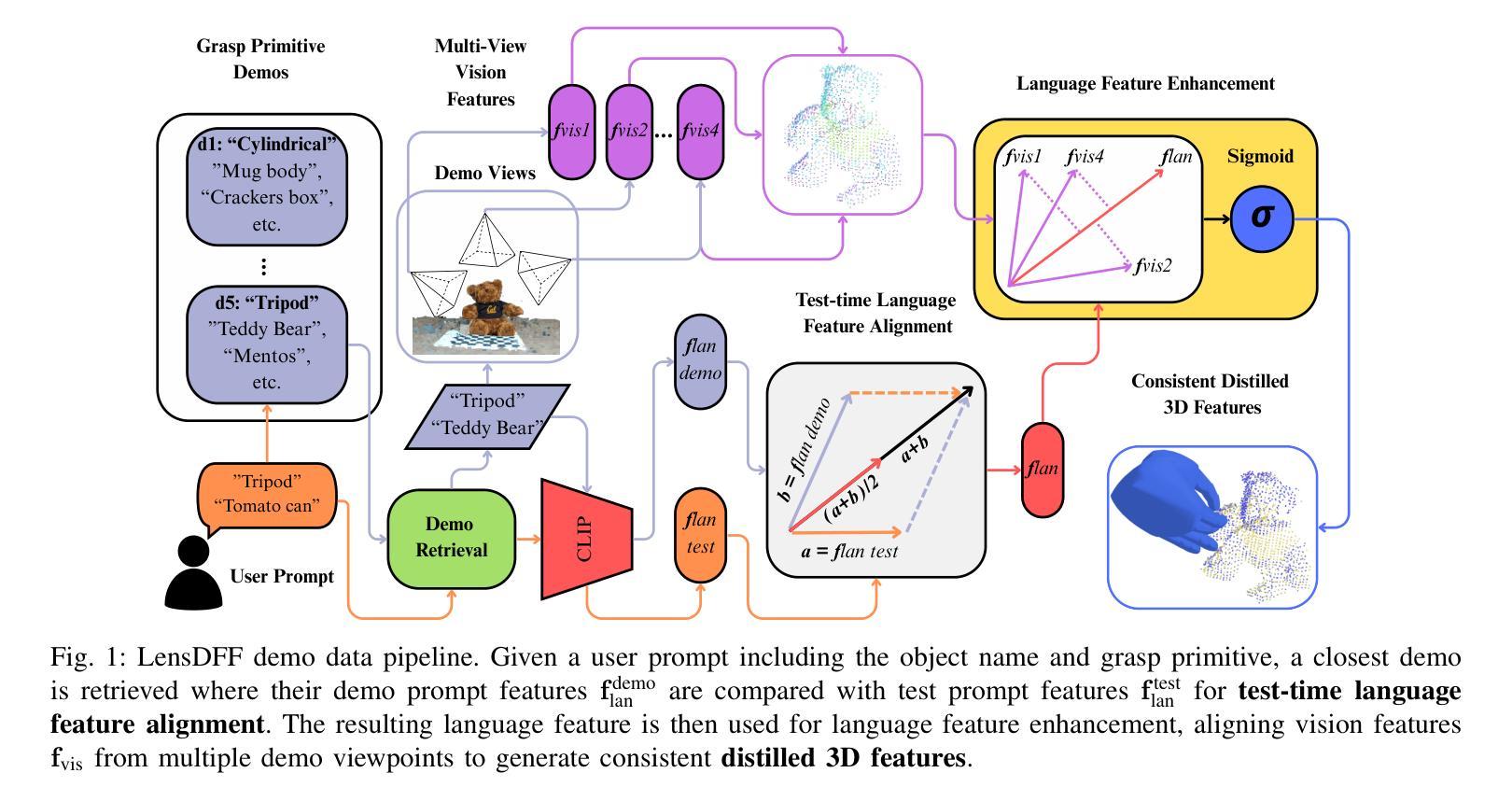

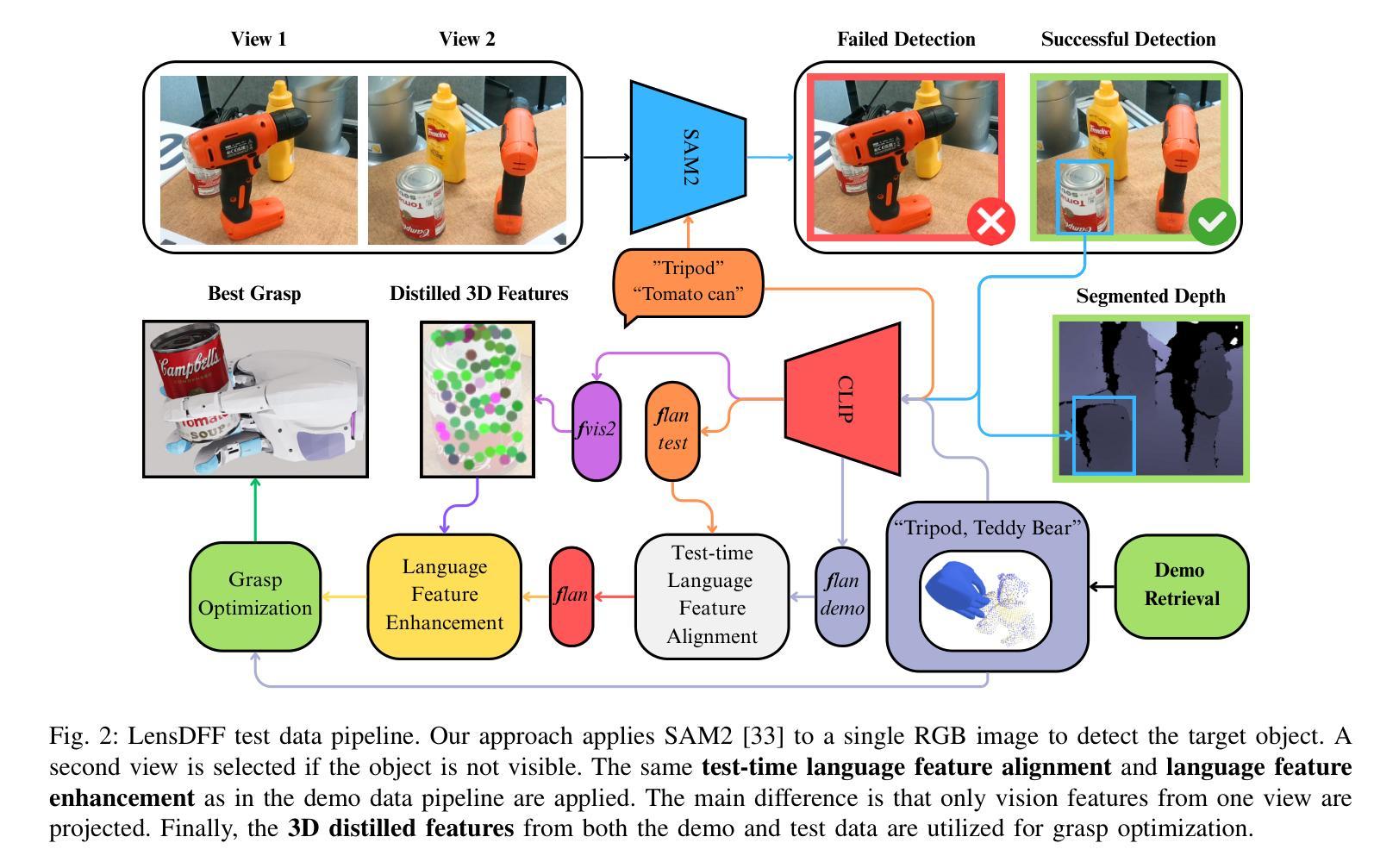

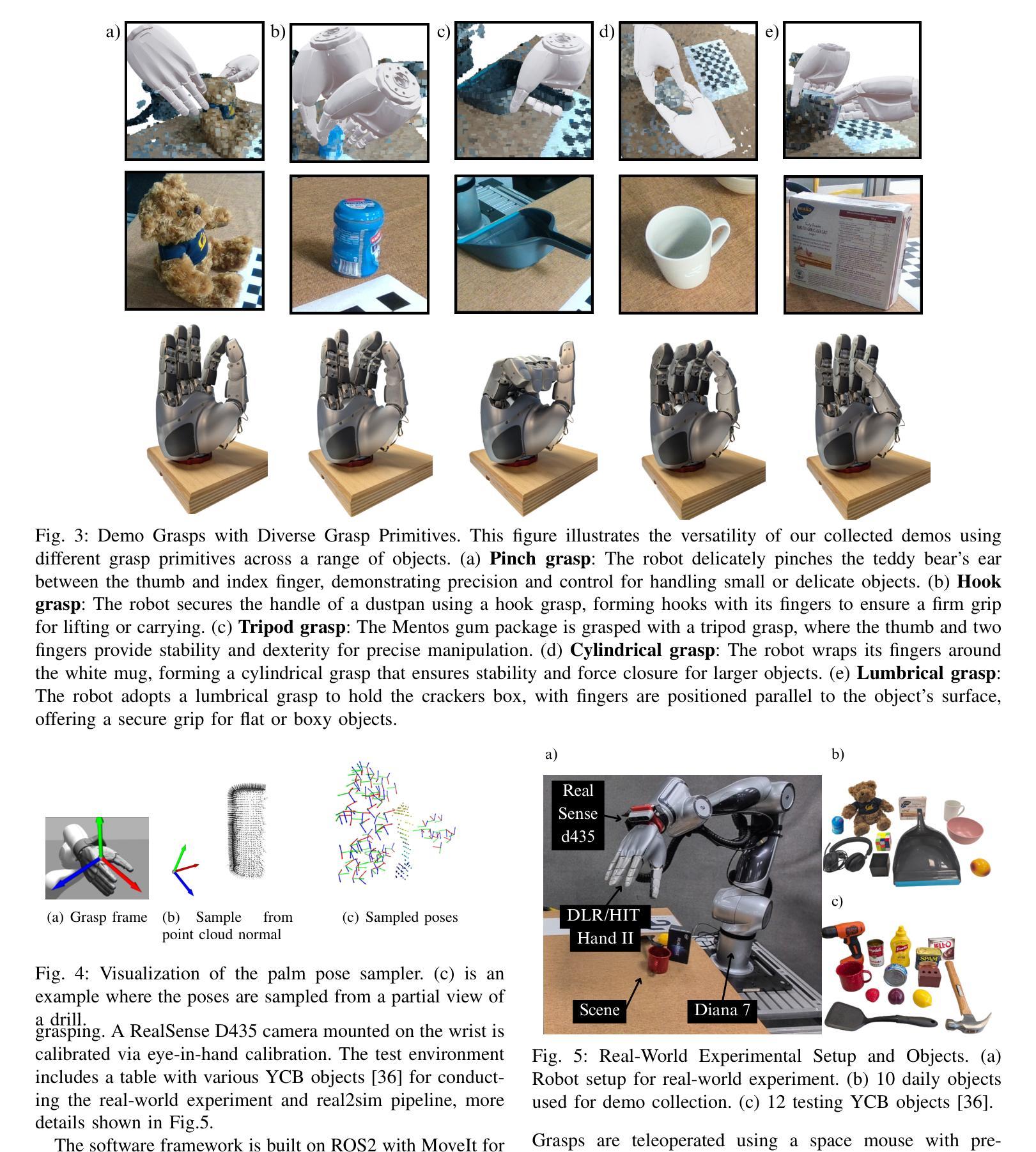

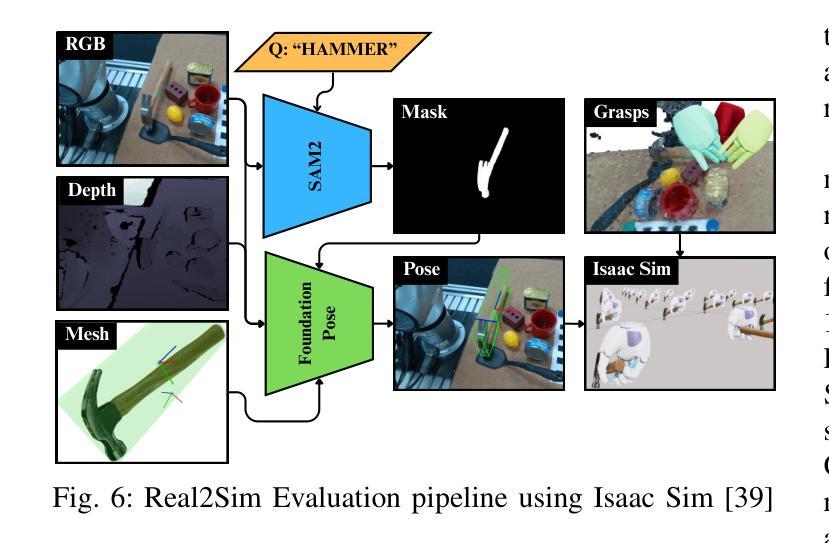

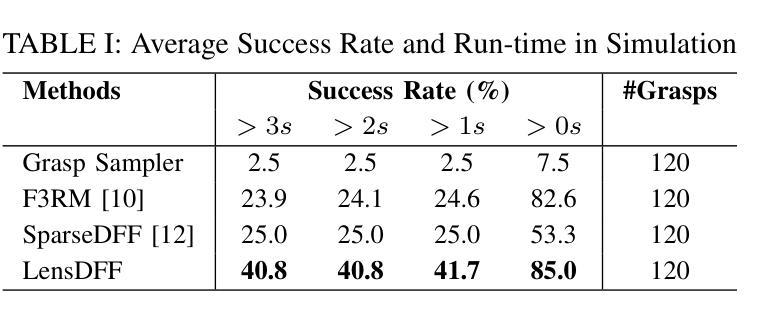

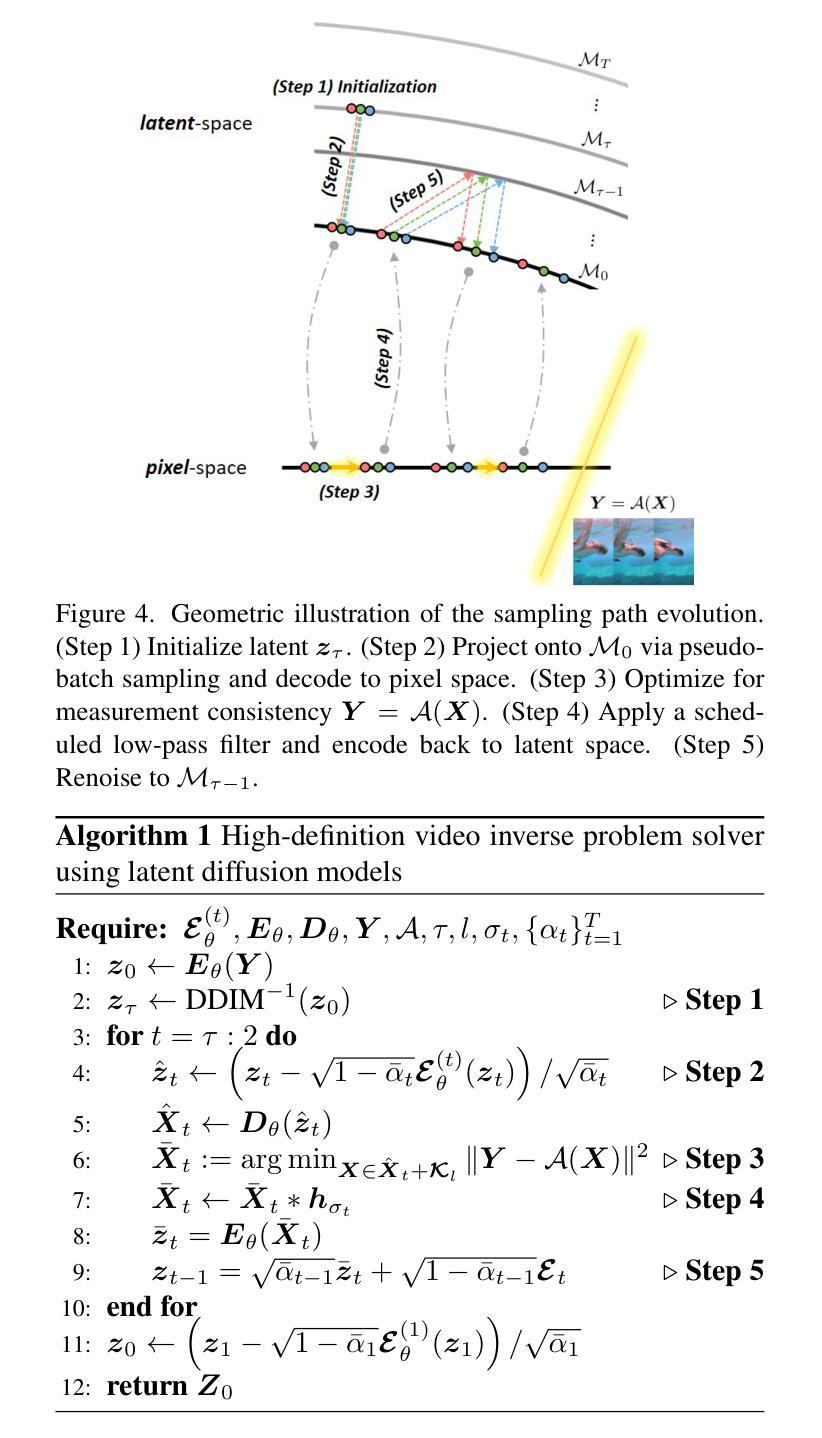

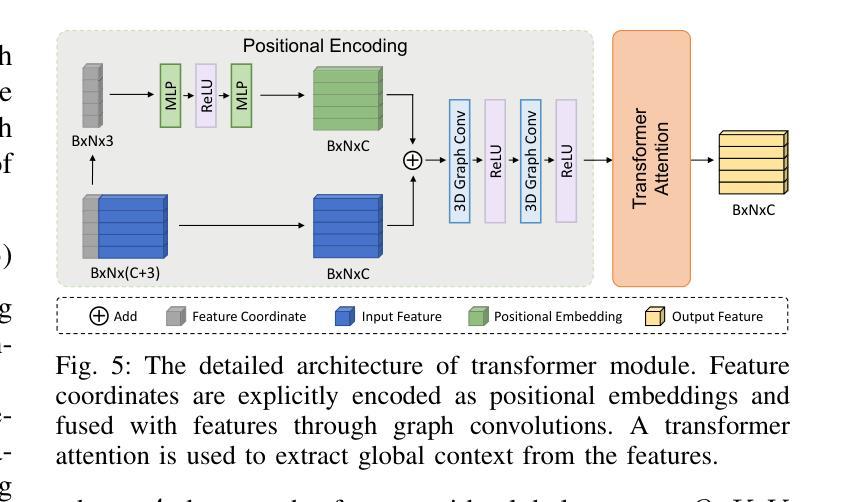

Learning dexterous manipulation from few-shot demonstrations is a significant yet challenging problem for advanced, human-like robotic systems. Dense distilled feature fields have addressed this challenge by distilling rich semantic features from 2D visual foundation models into the 3D domain. However, their reliance on neural rendering models such as Neural Radiance Fields (NeRF) or Gaussian Splatting results in high computational costs. In contrast, previous approaches based on sparse feature fields either suffer from inefficiencies due to multi-view dependencies and extensive training or lack sufficient grasp dexterity. To overcome these limitations, we propose Language-ENhanced Sparse Distilled Feature Field (LensDFF), which efficiently distills view-consistent 2D features onto 3D points using our novel language-enhanced feature fusion strategy, thereby enabling single-view few-shot generalization. Based on LensDFF, we further introduce a few-shot dexterous manipulation framework that integrates grasp primitives into the demonstrations to generate stable and highly dexterous grasps. Moreover, we present a real2sim grasp evaluation pipeline for efficient grasp assessment and hyperparameter tuning. Through extensive simulation experiments based on the real2sim pipeline and real-world experiments, our approach achieves competitive grasping performance, outperforming state-of-the-art approaches.
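In a sparse feature field, distilling 2D features onto 3D points generally means projecting each point into a view and reading the feature under it. This sketch makes the following assumptions:

- a pinhole camera with intrinsics `K` (no skew);
- points already expressed in the camera frame;
- nearest-pixel sampling.

LensDFF's language-enhanced fusion step is not modelled, and `lift_features` is a hypothetical helper.

```python
import numpy as np

def lift_features(points_cam, feature_map, K):
    """Attach to each camera-frame 3D point the feature at its nearest projected pixel.

    points_cam: (N, 3) points; feature_map: (H, W, C); K: (3, 3) intrinsics.
    Returns (features (N, C), valid mask (N,)); invalid points get zero features.
    """
    H, W, C = feature_map.shape
    uvw = points_cam @ K.T
    z = uvw[:, 2]
    in_front = z > 1e-9
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero or negative depth
    u = np.round(uvw[:, 0] / safe_z).astype(int)
    v = np.round(uvw[:, 1] / safe_z).astype(int)
    valid = in_front & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    feats = np.zeros((points_cam.shape[0], C), dtype=feature_map.dtype)
    feats[valid] = feature_map[v[valid], u[valid]]  # rows index v, columns index u
    return feats, valid
```

A real pipeline would probably also normalize the features and fuse several feature sources. Those choices are left out here because the abstract does not describe them.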


Paper and project links

PDF 8 pages

Summary

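This paper proposes the Language-ENhanced Sparse Distilled Feature Field (LensDFF) for learning dexterous manipulation from few-shot demonstrations. A language-enhanced feature fusion strategy lets it distill view-consistent 2D features onto 3D points efficiently, enabling single-view few-shot generalization without the computational cost of NeRF or Gaussian Splatting. Building on LensDFF, the paper introduces a few-shot dexterous manipulation framework that integrates grasp primitives into the demonstrations to generate stable, highly dexterous grasps. It also presents a real2sim grasp evaluation pipeline for efficient grasp assessment and hyperparameter tuning. In simulation experiments based on this pipeline and in real-world experiments, the method achieves competitive grasping performance and outperforms state-of-the-art approaches.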
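Few-shot transfer with a distilled feature field can be illustrated by feature matching: query features recorded in a demonstration are matched to the most similar points in a new scene. This nearest-neighbour cosine matching is a simplified stand-in chosen for illustration, not LensDFF's actual grasp-transfer procedure. `transfer_query_points` is hypothetical.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length (eps guards against zero vectors)."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def transfer_query_points(demo_query_feats, scene_points, scene_feats):
    """For each demo query feature (Q, C), pick the scene point (N, 3) whose feature (N, C)
    has the highest cosine similarity. Returns (matched points (Q, 3), scores (Q,))."""
    sim = l2_normalize(demo_query_feats) @ l2_normalize(scene_feats).T  # (Q, N)
    best = np.argmax(sim, axis=1)
    return scene_points[best], sim[np.arange(len(best)), best]
```

The matched points could then anchor a grasp primitive taken from the demonstration. The low similarity scores also give a simple signal for rejecting poor matches.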

Key Takeaways

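- Proposes the Language-ENhanced Sparse Distilled Feature Field (LensDFF) for learning dexterous manipulation from few-shot demonstrations.

- LensDFF efficiently distills 2D features onto 3D points, supporting single-view few-shot generalization.

- Introduces a few-shot dexterous manipulation framework that integrates grasp primitives into the demonstrations to generate stable, highly dexterous grasps.

- Presents a real2sim grasp evaluation pipeline for efficient grasp assessment and hyperparameter tuning.

- Simulation and real-world experiments show competitive grasping performance.

- LensDFF relies on a language-enhanced feature fusion strategy to distill view-consistent features onto 3D points.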
