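⚠️ All summaries below were generated by a large language model. They may contain errors; treat them as a reference only and use them with caution.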

🔴 Note: do not use these summaries in serious academic work. They are intended only for screening papers before reading them.

Updated 2025-03-20

MoonCast: High-Quality Zero-Shot Podcast Generation

Authors: Zeqian Ju, Dongchao Yang, Jianwei Yu, Kai Shen, Yichong Leng, Zhengtao Wang, Xu Tan, Xinyu Zhou, Tao Qin, Xiangyang Li

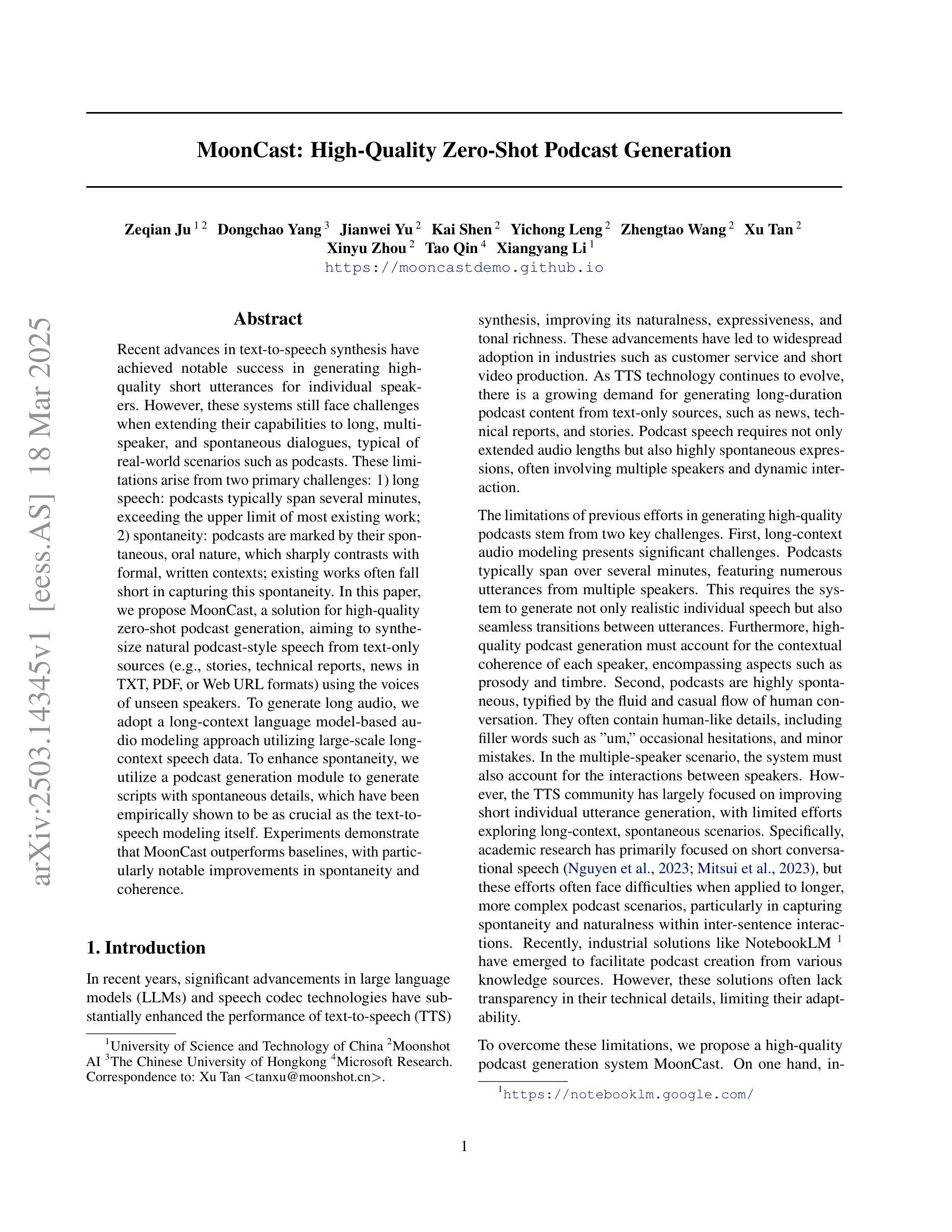

Recent advances in text-to-speech synthesis have achieved notable success in generating high-quality short utterances for individual speakers. However, these systems still face challenges when extending their capabilities to long, multi-speaker, and spontaneous dialogues, typical of real-world scenarios such as podcasts. These limitations arise from two primary challenges: 1) long speech: podcasts typically span several minutes, exceeding the upper limit of most existing work; 2) spontaneity: podcasts are marked by their spontaneous, oral nature, which sharply contrasts with formal, written contexts; existing works often fall short in capturing this spontaneity. In this paper, we propose MoonCast, a solution for high-quality zero-shot podcast generation, aiming to synthesize natural podcast-style speech from text-only sources (e.g., stories, technical reports, news in TXT, PDF, or Web URL formats) using the voices of unseen speakers. To generate long audio, we adopt a long-context language model-based audio modeling approach utilizing large-scale long-context speech data. To enhance spontaneity, we utilize a podcast generation module to generate scripts with spontaneous details, which have been empirically shown to be as crucial as the text-to-speech modeling itself. Experiments demonstrate that MoonCast outperforms baselines, with particularly notable improvements in spontaneity and coherence.

Summary

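This paper introduces MoonCast, a new solution for high-quality zero-shot podcast generation. It aims to synthesize natural podcast-style speech from text sources (such as stories, technical reports, and news) using the voices of unseen speakers. To generate long audio, it uses an audio modeling approach built on large-scale long-context speech data. To improve spontaneity, a podcast generation module produces scripts with spontaneous details, which makes the speech sound more natural. Experiments show that MoonCast outperforms the baselines, with notable improvements in spontaneity and coherence.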

Key Takeaways

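- MoonCast is a solution for high-quality zero-shot podcast generation.

- It synthesizes natural podcast-style speech from text sources, using the voices of unseen speakers.

- It generates long audio with an audio modeling approach built on large-scale long-context speech data.

- A podcast generation module produces scripts with spontaneous details, making the speech sound more natural.

- MoonCast performs well at generating long audio and keeping the speech coherent.

- Experiments show that MoonCast outperforms the baselines.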

VALL-T: Decoder-Only Generative Transducer for Robust and Decoding-Controllable Text-to-Speech

Authors: Chenpeng Du, Yiwei Guo, Hankun Wang, Yifan Yang, Zhikang Niu, Shuai Wang, Hui Zhang, Xie Chen, Kai Yu

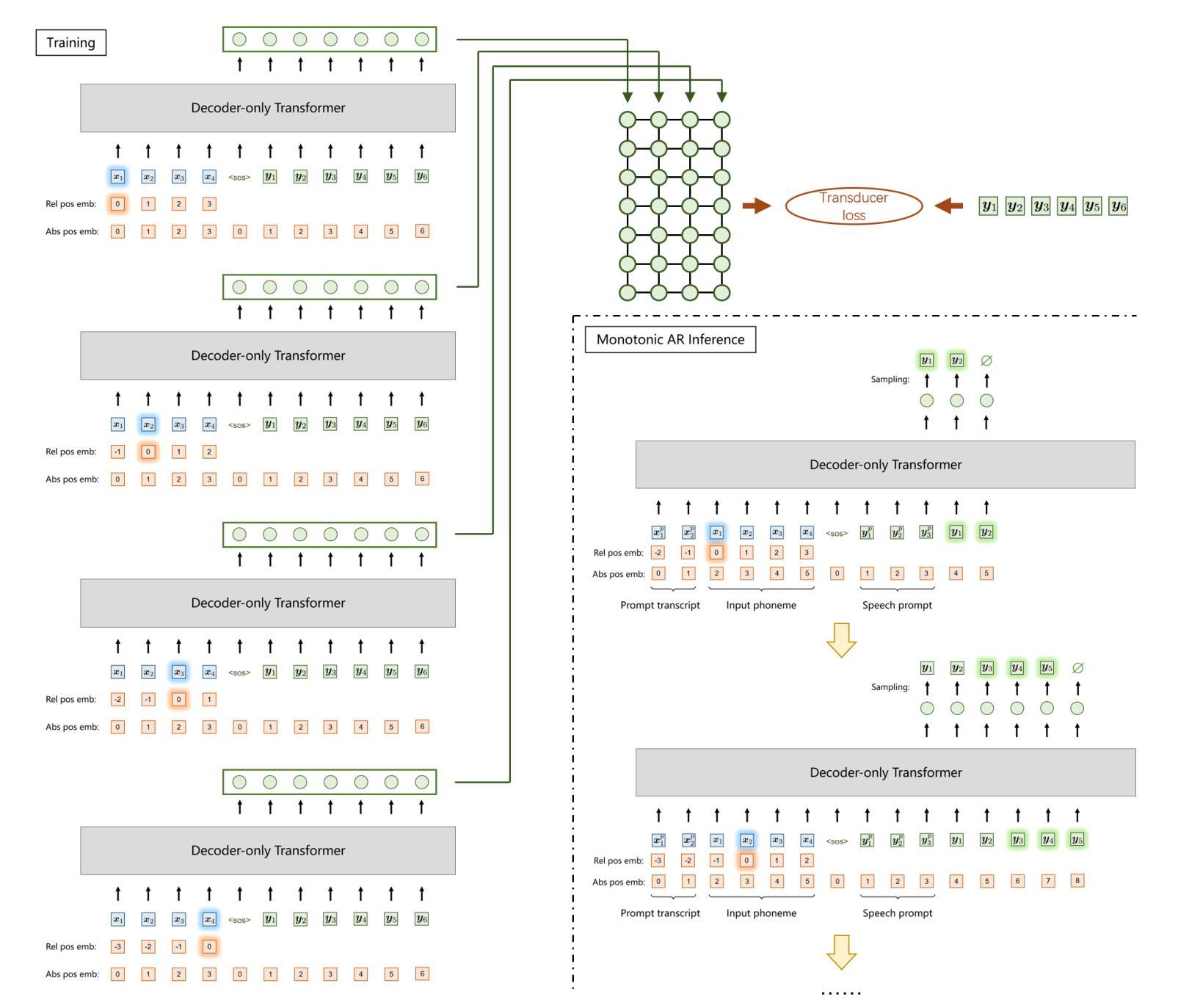

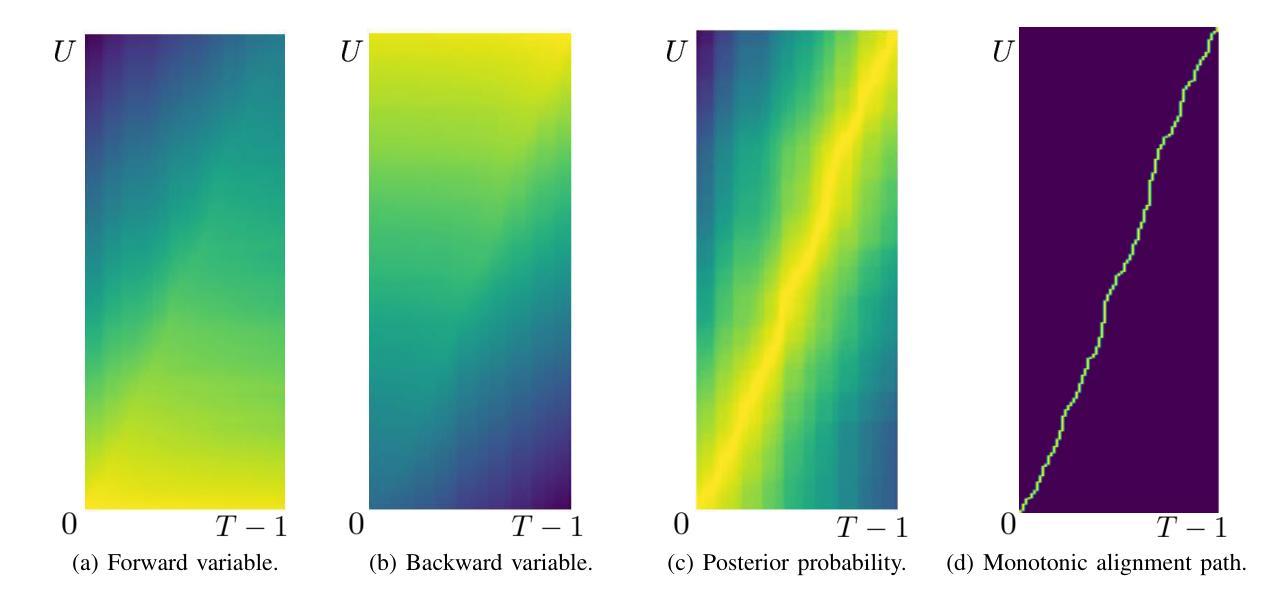

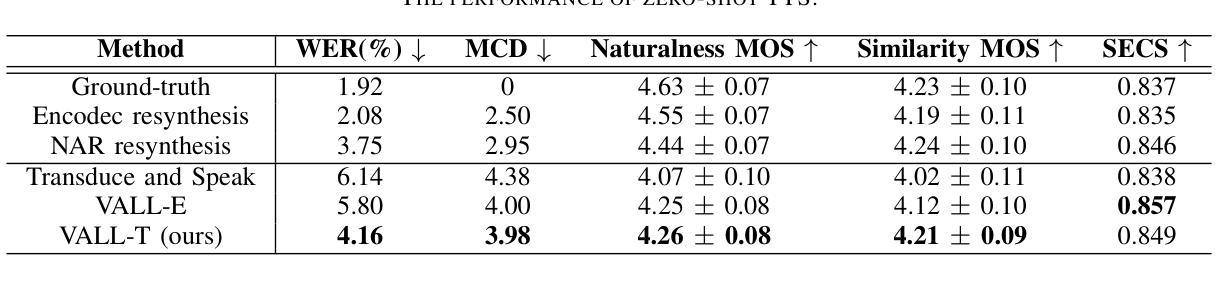

Recent TTS models with decoder-only Transformer architecture, such as SPEAR-TTS and VALL-E, achieve impressive naturalness and demonstrate the ability for zero-shot adaptation given a speech prompt. However, such decoder-only TTS models lack monotonic alignment constraints, sometimes leading to hallucination issues such as mispronunciation, word skipping and repeating. To address this limitation, we propose VALL-T, a generative Transducer model that introduces shifting relative position embeddings for input phoneme sequence, explicitly indicating the monotonic generation process while maintaining the architecture of decoder-only Transformer. Consequently, VALL-T retains the capability of prompt-based zero-shot adaptation and demonstrates better robustness against hallucinations with a relative reduction of 28.3% in the word error rate.
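To make the idea of shifting relative position embeddings more concrete, here is a minimal sketch of the position indices only. It assumes that index 0 marks the phoneme currently being synthesized, with negative indices for earlier phonemes and positive indices for later ones, and that the indices shift by one phoneme at a time. The function names `relative_positions` and `shift_schedule` are hypothetical, and the sketch does not model how VALL-T decides when to shift or how the indices are turned into embeddings.

```python
def relative_positions(num_phonemes: int, current: int) -> list[int]:
    """Relative position of every phoneme with respect to the current one.

    Assumption (not taken from the abstract): the phoneme being synthesized
    gets index 0, earlier phonemes are negative, later ones are positive.
    """
    if not 0 <= current < num_phonemes:
        raise ValueError("current must be a valid phoneme index")
    return [i - current for i in range(num_phonemes)]


def shift_schedule(num_phonemes: int) -> list[list[int]]:
    """Position indices at each step of a strictly left-to-right pass."""
    return [relative_positions(num_phonemes, c) for c in range(num_phonemes)]


if __name__ == "__main__":
    # Each row is one step; the 0 moves monotonically from left to right.
    for row in shift_schedule(4):
        print(row)
```

Under these assumptions, the position of the 0 can only move rightward through the phoneme sequence, which is one way to picture how the input encoding itself can signal monotonic progress without changing the decoder-only architecture.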

Paper and project links

Accepted to ICASSP 2025

Summary

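Decoder-only Transformer TTS models such as SPEAR-TTS and VALL-E achieve impressive naturalness and support zero-shot adaptation. Because they lack monotonic alignment constraints, however, they can suffer from hallucination issues such as mispronunciation, word skipping, and repetition. To address this, the authors propose VALL-T, a generative Transducer model that introduces shifting relative position embeddings for the input phoneme sequence, explicitly indicating the monotonic generation process while keeping the decoder-only Transformer architecture. VALL-T retains prompt-based zero-shot adaptation and is more robust against hallucinations, with a 28.3% relative reduction in word error rate.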
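The 28.3% figure is a relative reduction, not an absolute drop in percentage points. The sketch below shows the standard word-level edit-distance definition of word error rate (WER) and how a relative reduction is computed from two WER values. The example sentences and the 10.0% and 7.17% values are hypothetical numbers chosen for illustration; they are not results from the paper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion (skipped word)
                           cur[j - 1] + 1,            # insertion (repeated/extra word)
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1] / len(ref)


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which `improved` lowers `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline


if __name__ == "__main__":
    # A skipped word counts as one deletion out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
    # Hypothetical WERs: 10.0% -> 7.17% is roughly a 28.3% relative reduction.
    print(f"{relative_reduction(10.0, 7.17):.1%}")
```

Word skipping and repetition, the hallucination types named above, show up in this metric as deletions and insertions respectively, which is why WER is a natural robustness measure here.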

Key Takeaways

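- Existing decoder-only Transformer TTS models perform well in naturalness and zero-shot adaptation.

- Their limitation is the lack of monotonic alignment constraints, which can cause problems such as mispronunciation.

- VALL-T is a generative Transducer model designed to address this limitation.

- VALL-T introduces shifting relative position embeddings for the input phoneme sequence to explicitly indicate the monotonic generation process.

- VALL-T improves robustness while keeping the decoder-only Transformer architecture.

- VALL-T supports prompt-based zero-shot adaptation.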
