⚠️ All summaries below are generated by a large language model and may contain errors. They are for reference only; use them with caution.

🔴 Note: never use these summaries in serious academic work. They are only meant for screening papers before you read them!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it free on HuggingFace

Updated 2025-03-21

Cafe-Talk: Generating 3D Talking Face Animation with Multimodal Coarse- and Fine-grained Control

Authors: Hejia Chen, Haoxian Zhang, Shoulong Zhang, Xiaoqiang Liu, Sisi Zhuang, Yuan Zhang, Pengfei Wan, Di Zhang, Shuai Li

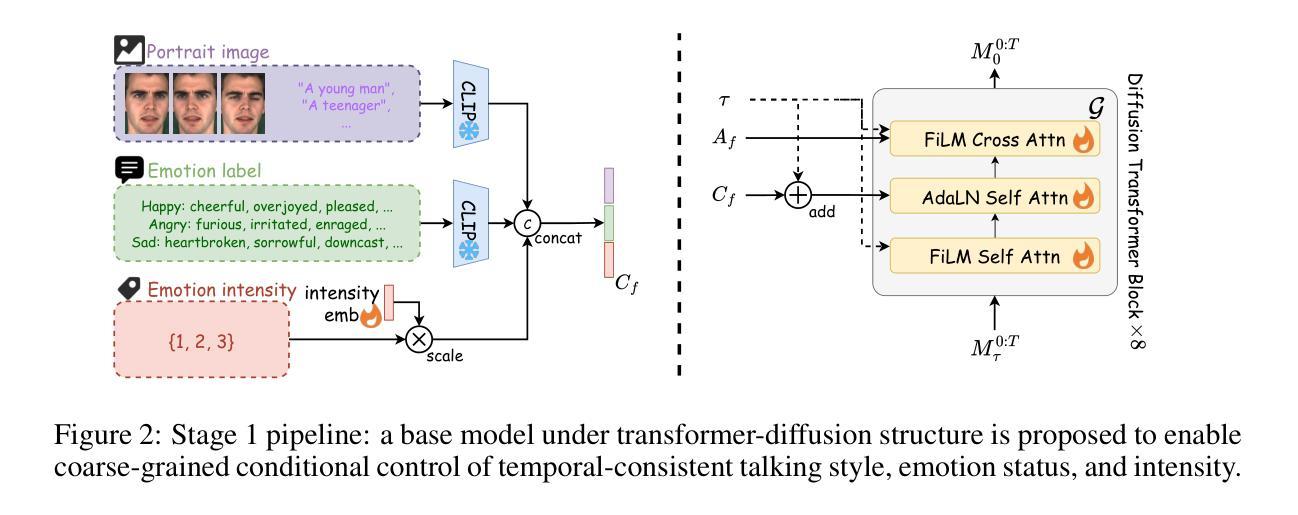

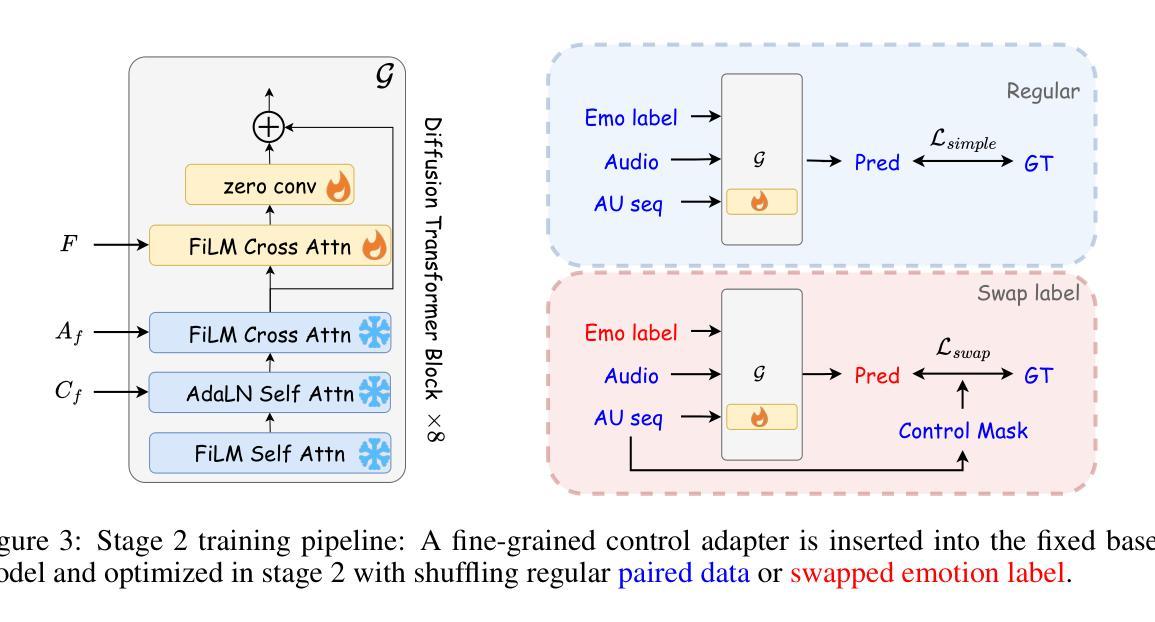

Speech-driven 3D talking face methods should offer both accurate lip synchronization and controllable expressions. Previous methods adopt only discrete emotion labels to control expressions globally throughout a sequence, which limits flexible fine-grained facial control in the spatiotemporal domain. We propose Cafe-Talk, a diffusion-transformer-based 3D talking face generation model that simultaneously incorporates coarse- and fine-grained multimodal control conditions. However, the entanglement of multiple conditions makes satisfying performance hard to achieve. To disentangle speech audio and fine-grained conditions, we employ a two-stage training pipeline. Specifically, Cafe-Talk is first trained using only speech audio and coarse-grained conditions. Then, a proposed fine-grained control adapter gradually adds fine-grained instructions represented by action units (AUs) without degrading speech-lip synchronization. To disentangle coarse- and fine-grained conditions, we design a swap-label training mechanism that lets the fine-grained conditions dominate. We also devise a mask-based CFG technique to regulate the occurrence and intensity of fine-grained control. In addition, a text-based detector with text-AU alignment is introduced to enable natural language user input and further support multimodal control. Extensive experimental results show that Cafe-Talk achieves state-of-the-art lip synchronization and expressiveness, and its fine-grained control is widely accepted in user studies. Project page: https://harryxd2018.github.io/cafe-talk/
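The abstract does not describe the adapter's internals. One common way to add a new condition to a pretrained model without disturbing it at the start of fine-tuning is a zero-initialized, gated residual adapter; the sketch below illustrates that general idea under that assumption. `GatedAUAdapter`, its `weight` matrix and its scalar `gate` are hypothetical names, not Cafe-Talk's actual architecture.

```python
from typing import List


class GatedAUAdapter:
    """Hypothetical fine-grained control adapter (illustration only).

    Projects an action-unit (AU) vector to the backbone's hidden size and adds
    it to the hidden state through a scalar gate that is initialised to 0.
    """

    def __init__(self, num_aus: int, hidden_size: int) -> None:
        self.num_aus = num_aus
        # Projection weights (hidden_size x num_aus); learned in practice,
        # zero-filled here as a placeholder.
        self.weight: List[List[float]] = [[0.0] * num_aus for _ in range(hidden_size)]
        # Zero-initialised gate: the adapter contributes nothing at first,
        # so the stage-one (audio + coarse condition) behaviour is preserved.
        self.gate: float = 0.0

    def __call__(self, hidden: List[float], au: List[float]) -> List[float]:
        if len(hidden) != len(self.weight) or len(au) != self.num_aus:
            raise ValueError("input sizes do not match the adapter")
        # Linear projection of the AU vector into the hidden space.
        proj = [sum(w * a for w, a in zip(row, au)) for row in self.weight]
        # Gated residual update of the backbone's hidden state.
        return [h + self.gate * p for h, p in zip(hidden, proj)]


adapter = GatedAUAdapter(num_aus=2, hidden_size=3)
adapter.weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden, au = [0.5, 0.5, 0.5], [1.0, 2.0]
print(adapter(hidden, au))  # gate = 0: hidden state unchanged → [0.5, 0.5, 0.5]
adapter.gate = 0.5
print(adapter(hidden, au))  # gate = 0.5: AU offset blended in → [1.0, 1.5, 2.0]
```

Because `gate` starts at 0, the adapted model initially reproduces the stage-one output exactly; as training moves the gate away from 0, the AU instructions are blended in gradually.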

Paper and project links

PDF Accepted by ICLR’25

Summary:

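Cafe-Talk is a diffusion-transformer-based 3D talking face generation model that combines coarse- and fine-grained multimodal control conditions. A two-stage training pipeline disentangles speech audio from the fine-grained conditions, a swap-label training mechanism disentangles coarse- from fine-grained conditions, and a mask-based CFG technique regulates when and how strongly fine-grained control is applied. A text-based detector additionally enables natural language user input and multimodal control. Experiments show state-of-the-art lip synchronization and expressiveness, and user studies show wide acceptance of its fine-grained control.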

Key Takeaways:

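- Cafe-Talk combines coarse- and fine-grained multimodal control conditions, enabling more flexible facial control.

- A two-stage training pipeline disentangles speech audio from fine-grained conditions: the model is first trained on speech audio and coarse-grained conditions only, then a fine-grained control adapter adds AU-based instructions without degrading lip synchronization.

- The swap-label training mechanism lets the fine-grained conditions dominate over the coarse-grained ones.

- The mask-based CFG technique regulates the occurrence and intensity of fine-grained control.

- A text-based detector with text-AU alignment supports natural language user input and multimodal control.

- Experimental results show that Cafe-Talk achieves state-of-the-art lip synchronization and expressiveness.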
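The summary gives no formula for the mask-based CFG. A minimal sketch, assuming standard classifier-free guidance in which the guidance term is multiplied by a per-frame mask: `eps_base` stands for the prediction with speech audio and coarse conditions only, `eps_fine` for the prediction that also sees the AU condition, `frame_mask` selects the frames where fine-grained control should occur, and `scale` sets its intensity. All of these names are hypothetical.

```python
from typing import List

Frame = List[float]  # one frame of a prediction (e.g. a per-frame noise estimate)


def masked_cfg(eps_base: List[Frame], eps_fine: List[Frame],
               frame_mask: List[float], scale: float) -> List[Frame]:
    """Classifier-free guidance restricted by a per-frame mask (hypothetical).

    out_t = base_t + mask_t * scale * (fine_t - base_t)
    mask_t = 0 keeps the base prediction (no fine-grained control at frame t);
    scale controls how strongly the fine-grained condition is applied.
    """
    if not (len(eps_base) == len(eps_fine) == len(frame_mask)):
        raise ValueError("inputs must cover the same number of frames")
    out: List[Frame] = []
    for base, fine, m in zip(eps_base, eps_fine, frame_mask):
        out.append([b + m * scale * (f - b) for b, f in zip(base, fine)])
    return out


eps_base = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
eps_fine = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]]
print(masked_cfg(eps_base, eps_fine, [0.0, 1.0, 1.0], scale=2.0))
# → [[0.0, 0.0], [2.0, 4.0], [2.0, 4.0]]
```

Frames with mask 0 keep the base prediction, so the mask decides where fine-grained control occurs, while `scale` (here 2.0) amplifies the fine-grained offset on the masked frames.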

KeyFace: Expressive Audio-Driven Facial Animation for Long Sequences via KeyFrame Interpolation

Authors: Antoni Bigata, Michał Stypułkowski, Rodrigo Mira, Stella Bounareli, Konstantinos Vougioukas, Zoe Landgraf, Nikita Drobyshev, Maciej Zieba, Stavros Petridis, Maja Pantic

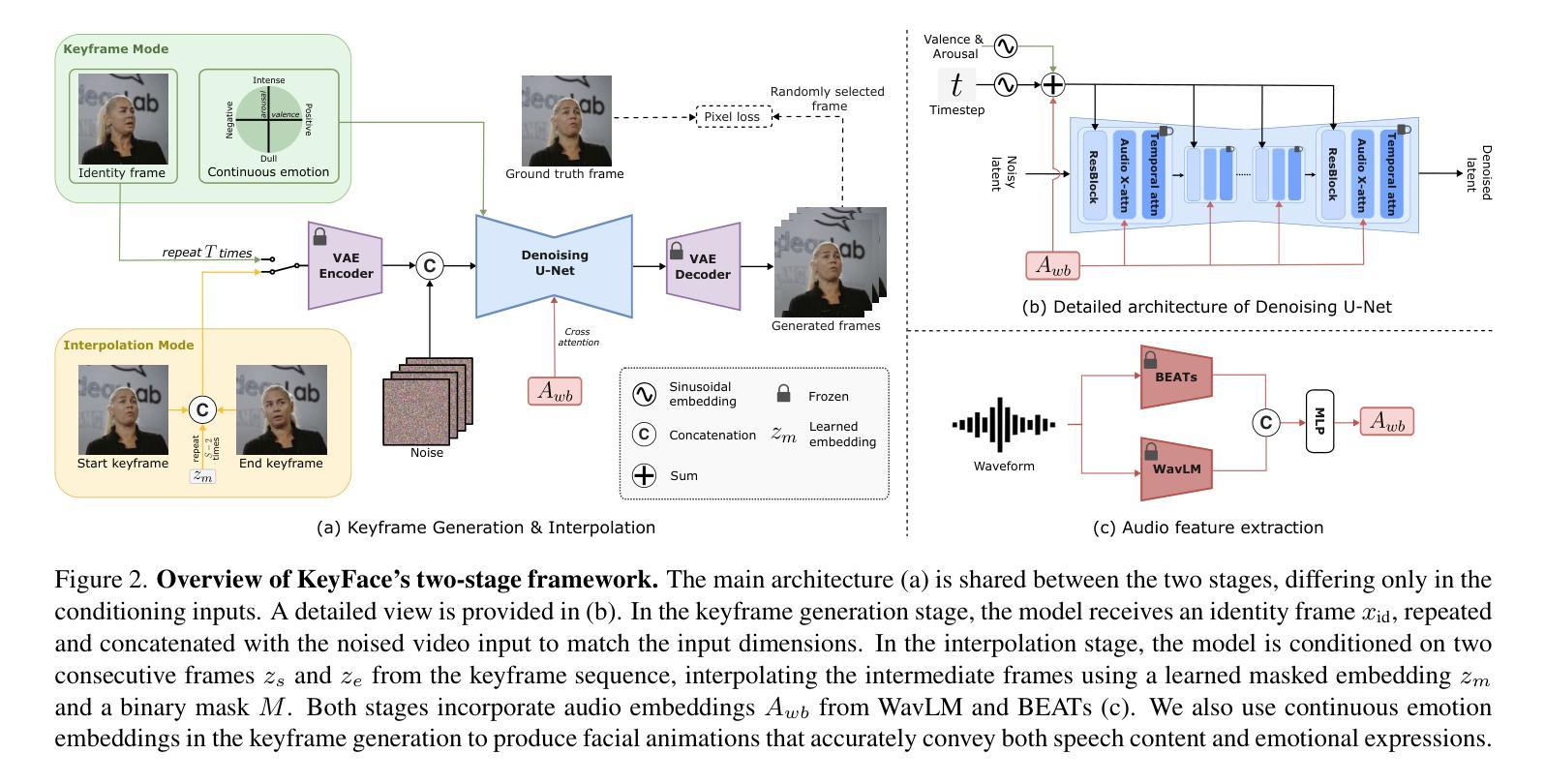

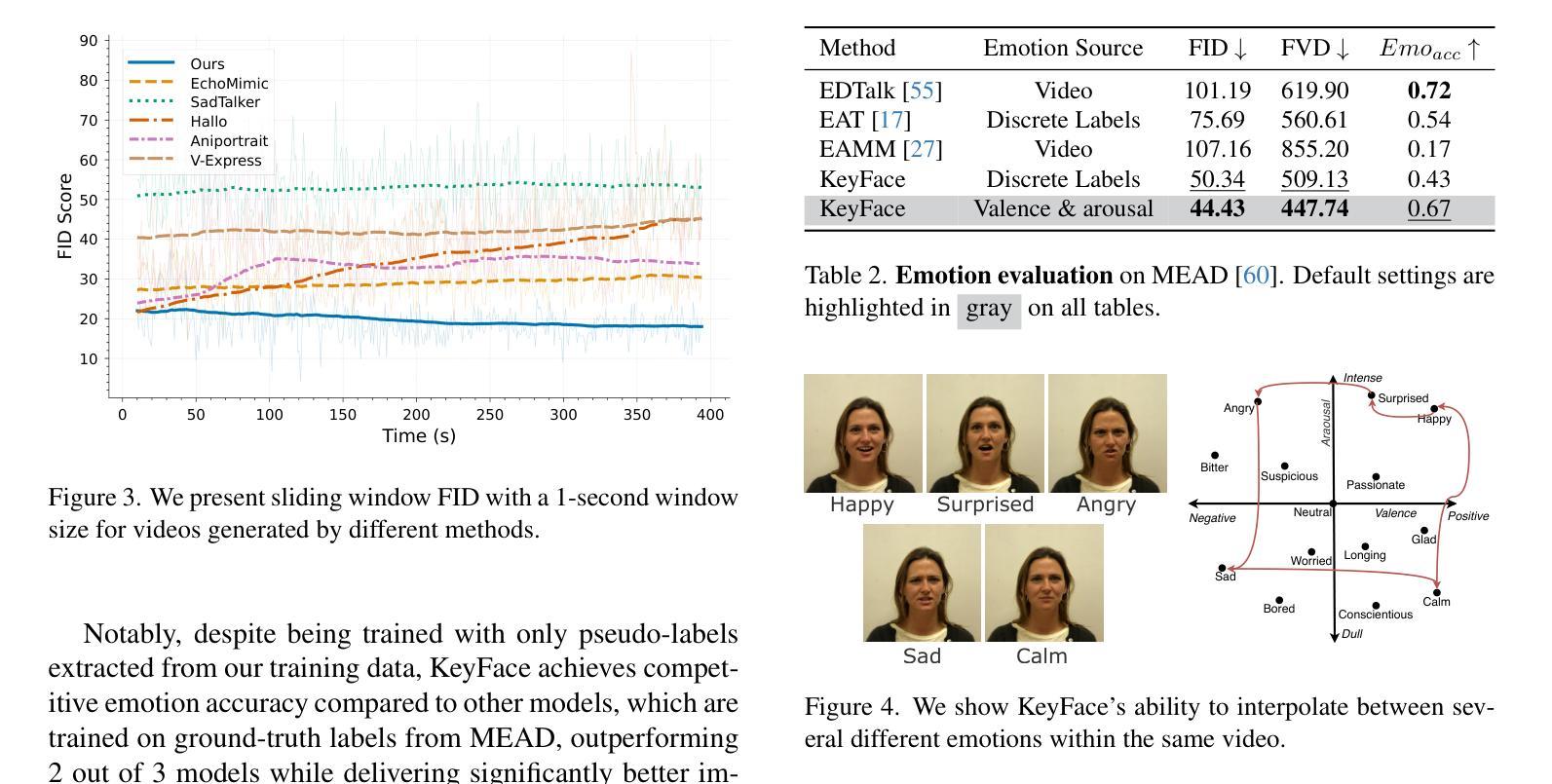

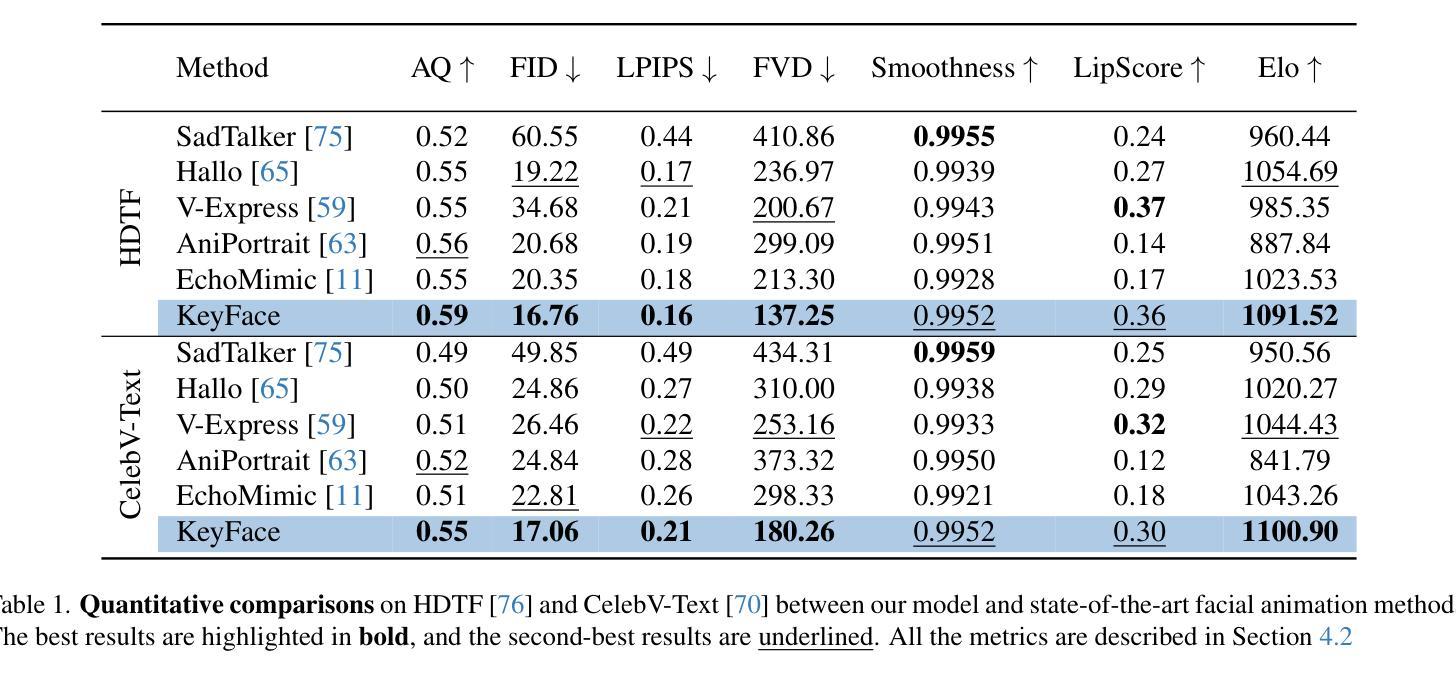

Current audio-driven facial animation methods achieve impressive results for short videos but suffer from error accumulation and identity drift when extended to longer durations. Existing methods attempt to mitigate this through external spatial control, increasing long-term consistency but compromising the naturalness of motion. We propose KeyFace, a novel two-stage diffusion-based framework, to address these issues. In the first stage, keyframes are generated at a low frame rate, conditioned on audio input and an identity frame, to capture essential facial expressions and movements over extended periods of time. In the second stage, an interpolation model fills in the gaps between keyframes, ensuring smooth transitions and temporal coherence. To further enhance realism, we incorporate continuous emotion representations and handle a wide range of non-speech vocalizations (NSVs), such as laughter and sighs. We also introduce two new evaluation metrics for assessing lip synchronization and NSV generation. Experimental results show that KeyFace outperforms state-of-the-art methods in generating natural, coherent facial animations over extended durations, successfully encompassing NSVs and continuous emotions.

Paper and project links

PDF CVPR 2025

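Summary:

KeyFace is a novel two-stage diffusion-based framework that addresses the error accumulation and identity drift that occur when audio-driven facial animation is extended to long sequences. The first stage generates keyframes, and the second stage fills the gaps between them, ensuring smooth transitions and temporal coherence. KeyFace also incorporates continuous emotion representations and handles a wide range of non-speech vocalizations (NSVs), such as laughter and sighs.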

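Key Takeaways:

- Current audio-driven facial animation methods perform impressively on short videos but suffer from error accumulation and identity drift over longer durations.

- KeyFace is a novel two-stage diffusion framework that tackles these problems by generating keyframes and then filling the gaps between them, keeping the animation natural and coherent.

- The first stage generates keyframes at a low frame rate, conditioned on audio input and an identity frame, to capture facial expressions and movements over long periods.

- The second stage's interpolation model fills the gaps between keyframes, ensuring smooth transitions and temporal coherence.

- KeyFace incorporates continuous emotion representations and handles a wide range of non-speech vocalizations (NSVs), such as laughter and sighs, making the animation more realistic.

- Two new evaluation metrics are introduced to assess lip synchronization and NSV generation.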
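The keyframe-then-interpolate structure can be illustrated with a toy sketch. Here `generate_keyframe` is a hypothetical stand-in for the first-stage diffusion model (conditioned on audio and an identity frame), and plain linear interpolation replaces KeyFace's learned interpolation model purely to show how keyframes sampled at a low frame rate bracket the in-between frames. `stride` (frames per keyframe) is an assumed parameter, not one taken from the paper.

```python
from typing import Callable, Dict, List

Frame = List[float]  # toy per-frame facial representation


def keyframe_indices(num_frames: int, stride: int) -> List[int]:
    """Keyframe positions at a low frame rate: every `stride`-th frame,
    plus the final frame so the whole sequence is bracketed."""
    if num_frames <= 0 or stride <= 0:
        raise ValueError("num_frames and stride must be positive")
    idx = list(range(0, num_frames, stride))
    if idx[-1] != num_frames - 1:
        idx.append(num_frames - 1)
    return idx


def lerp(a: Frame, b: Frame, t: float) -> Frame:
    """Linear interpolation between two frames (stand-in for a learned model)."""
    return [x + t * (y - x) for x, y in zip(a, b)]


def two_stage_generate(num_frames: int, stride: int,
                       generate_keyframe: Callable[[int], Frame]) -> List[Frame]:
    # Stage 1: generate only the sparse keyframes.
    keys = keyframe_indices(num_frames, stride)
    key_frames: Dict[int, Frame] = {i: generate_keyframe(i) for i in keys}
    # Stage 2: fill every gap between consecutive keyframes.
    out: List[Frame] = []
    for left, right in zip(keys, keys[1:]):
        span = right - left
        for k in range(span):
            out.append(lerp(key_frames[left], key_frames[right], k / span))
    out.append(key_frames[keys[-1]])  # the final keyframe closes the sequence
    return out


print(keyframe_indices(9, 4))  # → [0, 4, 8]
print(two_stage_generate(9, 4, lambda i: [float(i)]))
# → [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0], [8.0]]
```

In this sketch each in-between frame depends only on its two bracketing keyframes rather than on a long chain of previously generated frames.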

点此查看论文截图