⚠️ All summaries below are generated by a large language model and may contain errors. They are for reference only; use with caution.

🔴 Note: never use this content in serious academic work. It is meant only for screening papers before reading them!


Updated 2025-04-17

Mamba as a Bridge: Where Vision Foundation Models Meet Vision Language Models for Domain-Generalized Semantic Segmentation

Authors: Xin Zhang, Robby T. Tan

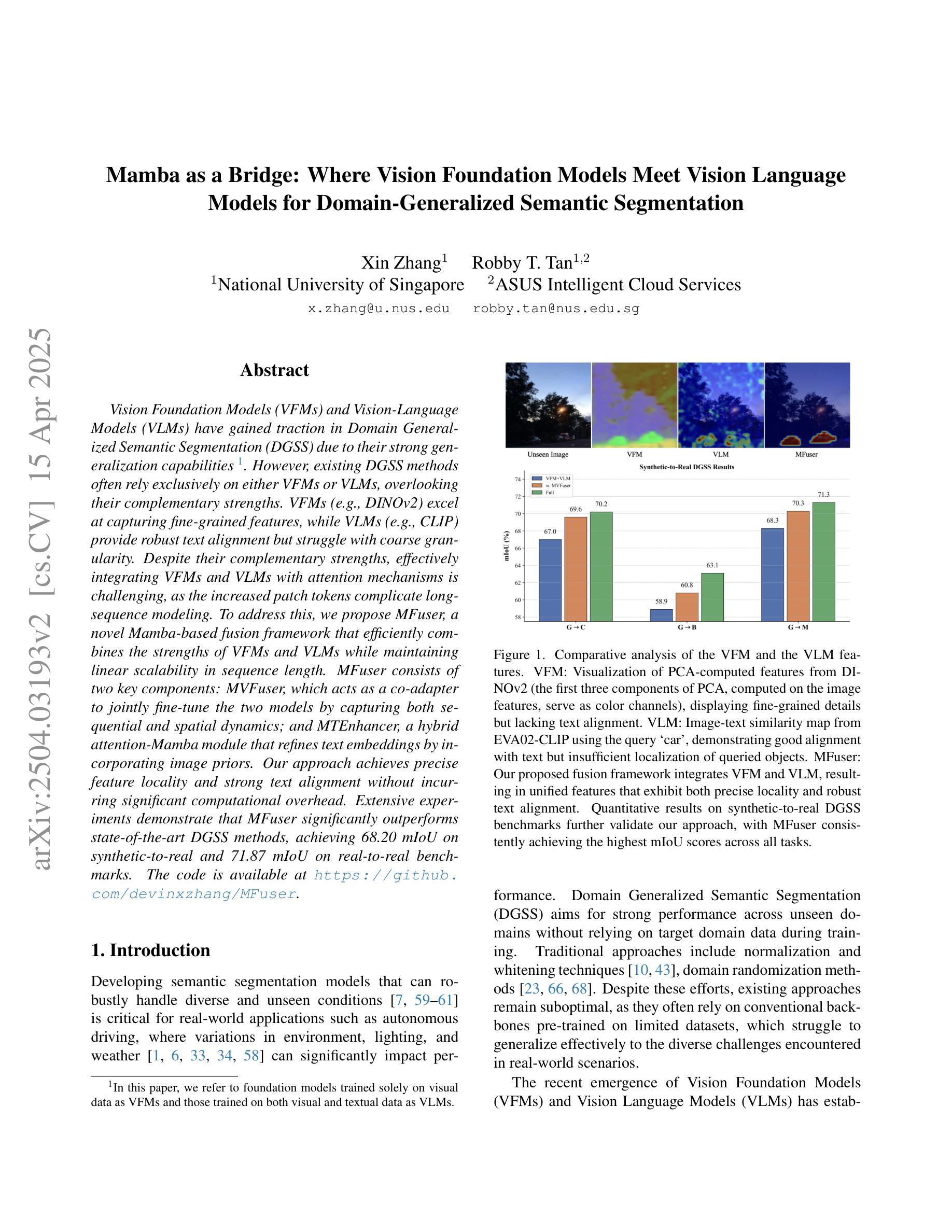

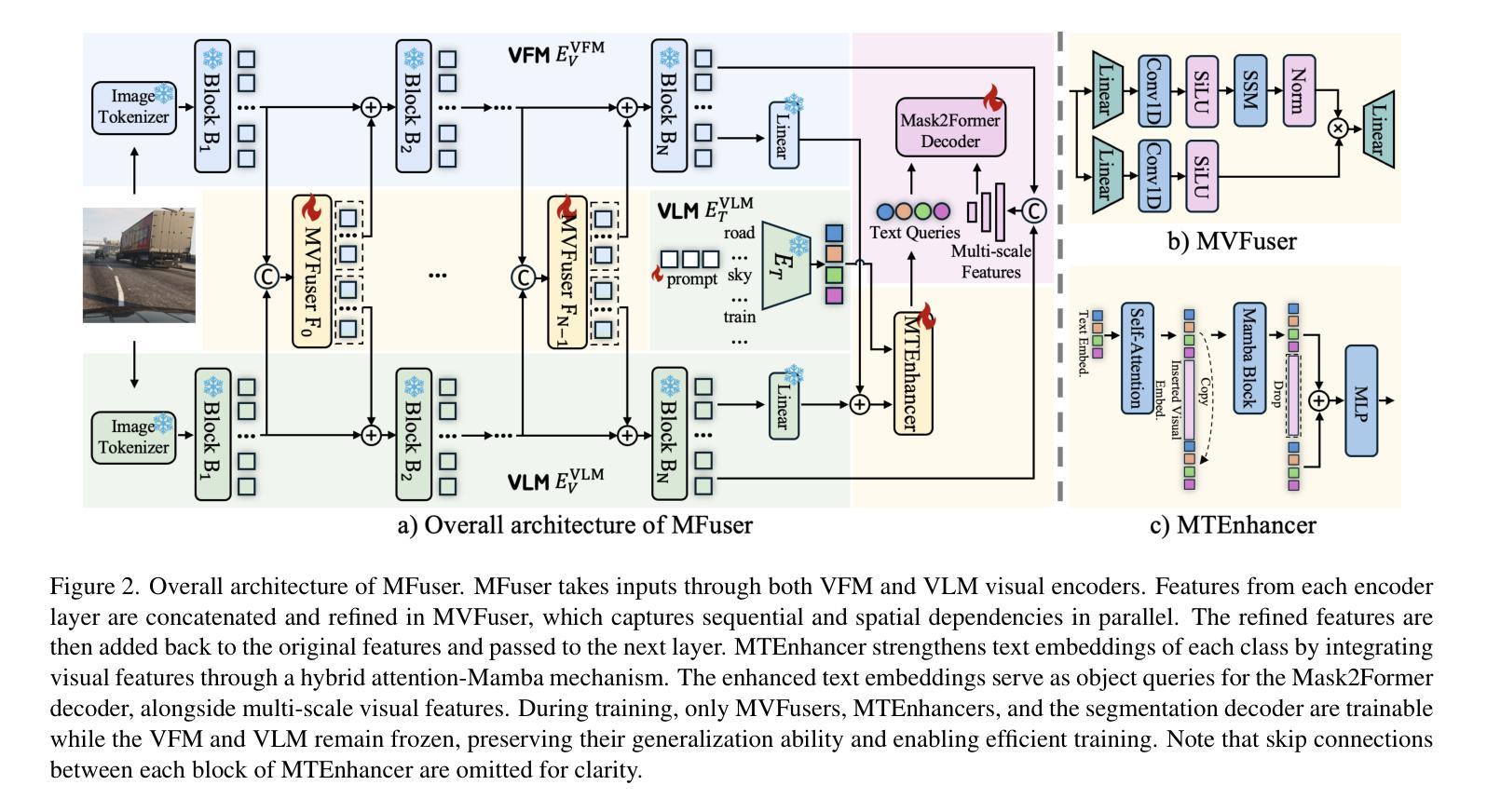

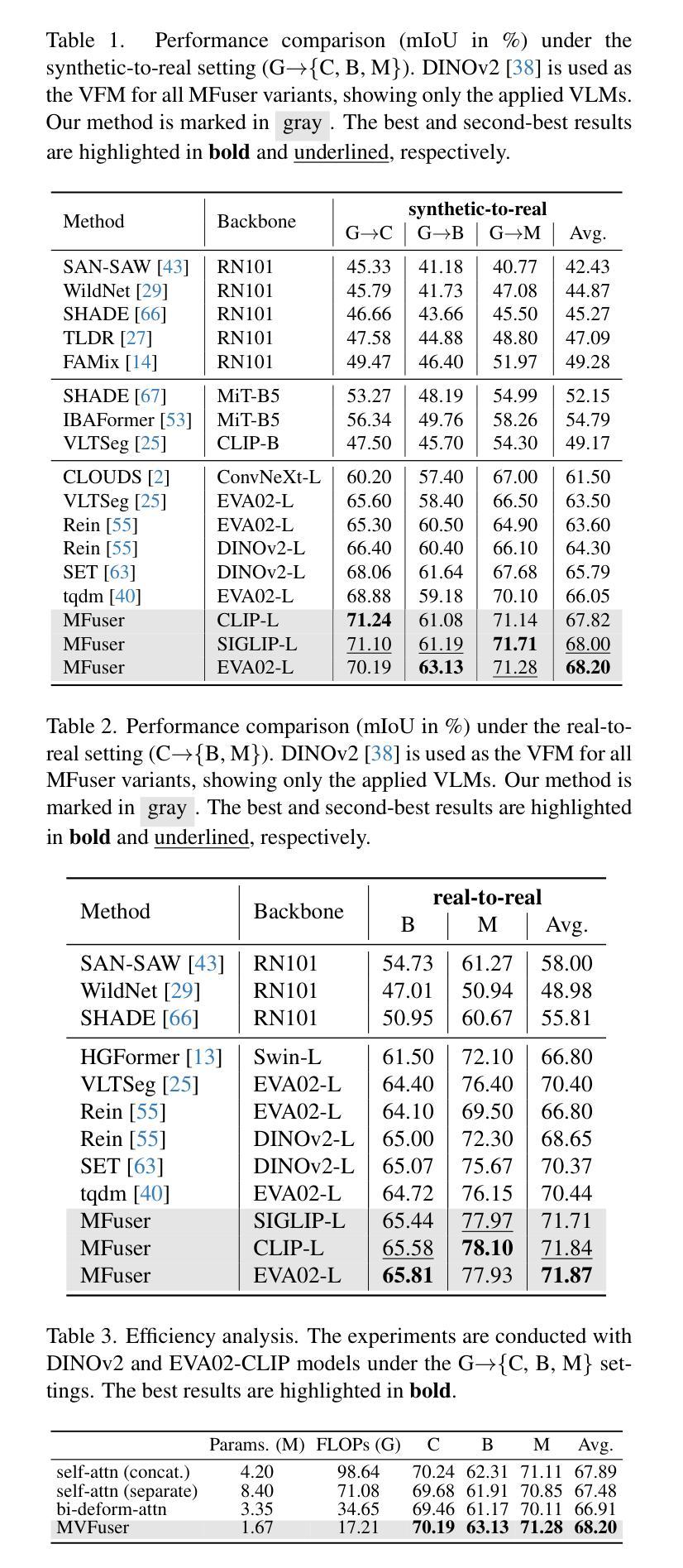

Vision Foundation Models (VFMs) and Vision-Language Models (VLMs) have gained traction in Domain Generalized Semantic Segmentation (DGSS) due to their strong generalization capabilities. However, existing DGSS methods often rely exclusively on either VFMs or VLMs, overlooking their complementary strengths. VFMs (e.g., DINOv2) excel at capturing fine-grained features, while VLMs (e.g., CLIP) provide robust text alignment but struggle with coarse granularity. Despite their complementary strengths, effectively integrating VFMs and VLMs with attention mechanisms is challenging, as the increased patch tokens complicate long-sequence modeling. To address this, we propose MFuser, a novel Mamba-based fusion framework that efficiently combines the strengths of VFMs and VLMs while maintaining linear scalability in sequence length. MFuser consists of two key components: MVFuser, which acts as a co-adapter to jointly fine-tune the two models by capturing both sequential and spatial dynamics; and MTEnhancer, a hybrid attention-Mamba module that refines text embeddings by incorporating image priors. Our approach achieves precise feature locality and strong text alignment without incurring significant computational overhead. Extensive experiments demonstrate that MFuser significantly outperforms state-of-the-art DGSS methods, achieving 68.20 mIoU on synthetic-to-real and 71.87 mIoU on real-to-real benchmarks. The code is available at https://github.com/devinxzhang/MFuser.
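The abstract's point that "the increased patch tokens complicate long-sequence modeling" can be made concrete with a rough count. The sketch below is illustrative only and assumes, hypothetically, a 512×512 input, a DINOv2-style patch size of 14 and a CLIP-style patch size of 16; the paper's actual resolutions and backbone variants are not given in this summary. It compares the number of token pairs scored by full self-attention over the concatenated tokens with the number of steps of a linear-time scan.

```python
def num_patch_tokens(image_size: int, patch_size: int) -> int:
    """Patch tokens a ViT produces on a square image (floor division, no CLS token)."""
    side = image_size // patch_size
    return side * side


def fusion_cost(image_size: int, vfm_patch: int, vlm_patch: int) -> dict:
    """Compare quadratic attention cost with linear scan cost.

    Hypothetical setting: tokens from both backbones are concatenated into one
    sequence of length L; self-attention scores L * L pairs, a scan visits L tokens.
    """
    vfm_tokens = num_patch_tokens(image_size, vfm_patch)
    vlm_tokens = num_patch_tokens(image_size, vlm_patch)
    seq_len = vfm_tokens + vlm_tokens
    return {
        "vfm_tokens": vfm_tokens,
        "vlm_tokens": vlm_tokens,
        "seq_len": seq_len,
        "attention_pairs": seq_len * seq_len,
        "scan_steps": seq_len,
    }


if __name__ == "__main__":
    # Assumed values for illustration only: 512x512 input, patch sizes 14 and 16.
    cost = fusion_cost(512, 14, 16)
    for key, value in cost.items():
        print(f"{key}: {value}")
```

Under these assumptions the concatenated sequence has 2,320 tokens, so full attention scores about 5.4 million token pairs per layer and head, while a scan visits each token once. Doubling the image side roughly quadruples L and multiplies the pairwise count by about 16, which is why a linear-time sequence model is attractive for fusing two backbones.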


Paper and project links

PDF Accepted to CVPR 2025 (Highlight)

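Summary

This paper proposes MFuser, a Mamba-based fusion framework that combines the strengths of Vision Foundation Models (VFMs) and Vision-Language Models (VLMs) while scaling linearly with sequence length. Within MFuser, the MVFuser component jointly fine-tunes the two models and the MTEnhancer component refines the text embeddings, giving precise feature locality and strong text alignment. On synthetic-to-real and real-to-real benchmarks, MFuser significantly outperforms state-of-the-art Domain Generalized Semantic Segmentation (DGSS) methods, reaching 68.20 and 71.87 mIoU respectively. The code is publicly available on GitHub.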
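To illustrate what "scaling linearly with sequence length" means for a Mamba-style model, here is a heavily simplified, single-channel selective state-space scan in plain Python. It is not MVFuser and not the authors' code: the scalar token features, the state size, and the parameter names (`a`, `b`, `c`, `w_delta`) are hypothetical, only the step size depends on the input here, and a real Mamba layer works on vectors with learned, input-dependent projections and a hardware-aware parallel scan. The point is that each token is processed once with a fixed-size hidden state, so cost grows linearly with the number of tokens, even when VFM and VLM tokens are concatenated into one sequence.

```python
import math
from typing import List


def softplus(x: float) -> float:
    """Numerically stable softplus, used to keep the step size positive."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def selective_scan(
    xs: List[float],
    a: List[float],
    b: List[float],
    c: List[float],
    w_delta: float,
) -> List[float]:
    """Simplified selective SSM scan over a 1-D sequence (hypothetical parameters).

    For each token x_t:
        delta_t = softplus(w_delta * x_t)   # input-dependent step size
        h_t[n]  = exp(delta_t * a[n]) * h_{t-1}[n] + delta_t * b[n] * x_t
        y_t     = sum_n c[n] * h_t[n]
    Negative a[n] keeps the recurrence stable. One pass: O(len(xs) * len(a)).
    """
    if not (len(a) == len(b) == len(c)):
        raise ValueError("a, b and c must share the same state size")
    h = [0.0] * len(a)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)
        # Discretized state update: decay the old state, inject the new token.
        h = [math.exp(delta * a_n) * h_n + delta * b_n * x for a_n, b_n, h_n in zip(a, b, h)]
        ys.append(sum(c_n * h_n for c_n, h_n in zip(c, h)))
    return ys


if __name__ == "__main__":
    # Hypothetical scalar "features": tokens from one backbone followed by the other.
    vfm_tokens = [0.5, -1.0, 2.0]
    vlm_tokens = [1.5, 0.0]
    fused = selective_scan(vfm_tokens + vlm_tokens, a=[-1.0, -0.5], b=[1.0, 0.5], c=[1.0, 1.0], w_delta=1.0)
    print(len(fused))  # one output per token: 5
```

Because the state has a fixed size, doubling the number of tokens doubles the work, in contrast to the quadratic growth of full self-attention over the same sequence.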

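Key Insights

- Vision Foundation Models (VFMs) and Vision-Language Models (VLMs) have gained attention in Domain Generalized Semantic Segmentation (DGSS) because of their strong generalization capabilities.

- Existing DGSS methods often rely on either VFMs or VLMs alone, overlooking their complementary strengths.

- VFMs (e.g., DINOv2) excel at capturing fine-grained features, while VLMs (e.g., CLIP) provide robust text alignment but produce coarse-grained features.

- MFuser combines the strengths of VFMs and VLMs, using its MVFuser component to jointly fine-tune the two models, and achieves precise feature locality and strong text alignment.

- MFuser has two key components: MVFuser, which captures sequential and spatial dynamics, and MTEnhancer, which refines text embeddings by incorporating image priors.

- MFuser scales linearly with sequence length and reaches high performance without significant computational overhead.

- Experiments show that MFuser significantly outperforms existing methods, reaching 68.20 mIoU on synthetic-to-real and 71.87 mIoU on real-to-real benchmarks.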
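The results above are reported in mIoU (mean Intersection-over-Union), the standard metric for semantic segmentation. The sketch below shows one common way to compute it from a confusion matrix: for each class, IoU = TP / (TP + FP + FN), then the mean over classes. The toy labels, the `ignore_index=255` convention, and the choice to skip classes absent from both ground truth and prediction are assumptions; benchmark toolkits differ in such details, and this is not the paper's evaluation code.

```python
from typing import List, Sequence


def confusion_matrix(
    gt: Sequence[int], pred: Sequence[int], num_classes: int, ignore_index: int = 255
) -> List[List[int]]:
    """Rows are ground-truth classes, columns are predicted classes.

    Pixels whose ground truth equals ignore_index (assumed convention) are skipped.
    """
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_index:
            continue
        cm[g][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Mean IoU over classes that appear in the ground truth or the prediction."""
    n = len(cm)
    ious = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                          # class k pixels predicted as something else
        fp = sum(cm[r][k] for r in range(n)) - tp     # other pixels predicted as class k
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Toy flattened label maps; the last pixel is ignored.
    gt = [0, 0, 1, 1, 2, 255]
    pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(gt, pred, num_classes=3)
    print(round(100 * mean_iou(cm), 2))  # → 72.22
```

Here the per-class IoUs are 0.5, 2/3 and 1.0; papers usually report the mean multiplied by 100, as in the 68.20 and 71.87 figures.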
