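⚠️ All summaries below were generated by a large language model and may contain errors. They are for reference only, so use them with caution.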

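🔴 Note: do not use these summaries for serious academic purposes. They are intended only for initial screening before reading a paper.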


Updated 2025-04-19

Efficient Fourier Filtering Network with Contrastive Learning for UAV-based Unaligned Bi-modal Salient Object Detection

Authors:Pengfei Lyu, Pak-Hei Yeung, Xiaosheng Yu, Xiufei Cheng, Chengdong Wu, Jagath C. Rajapakse

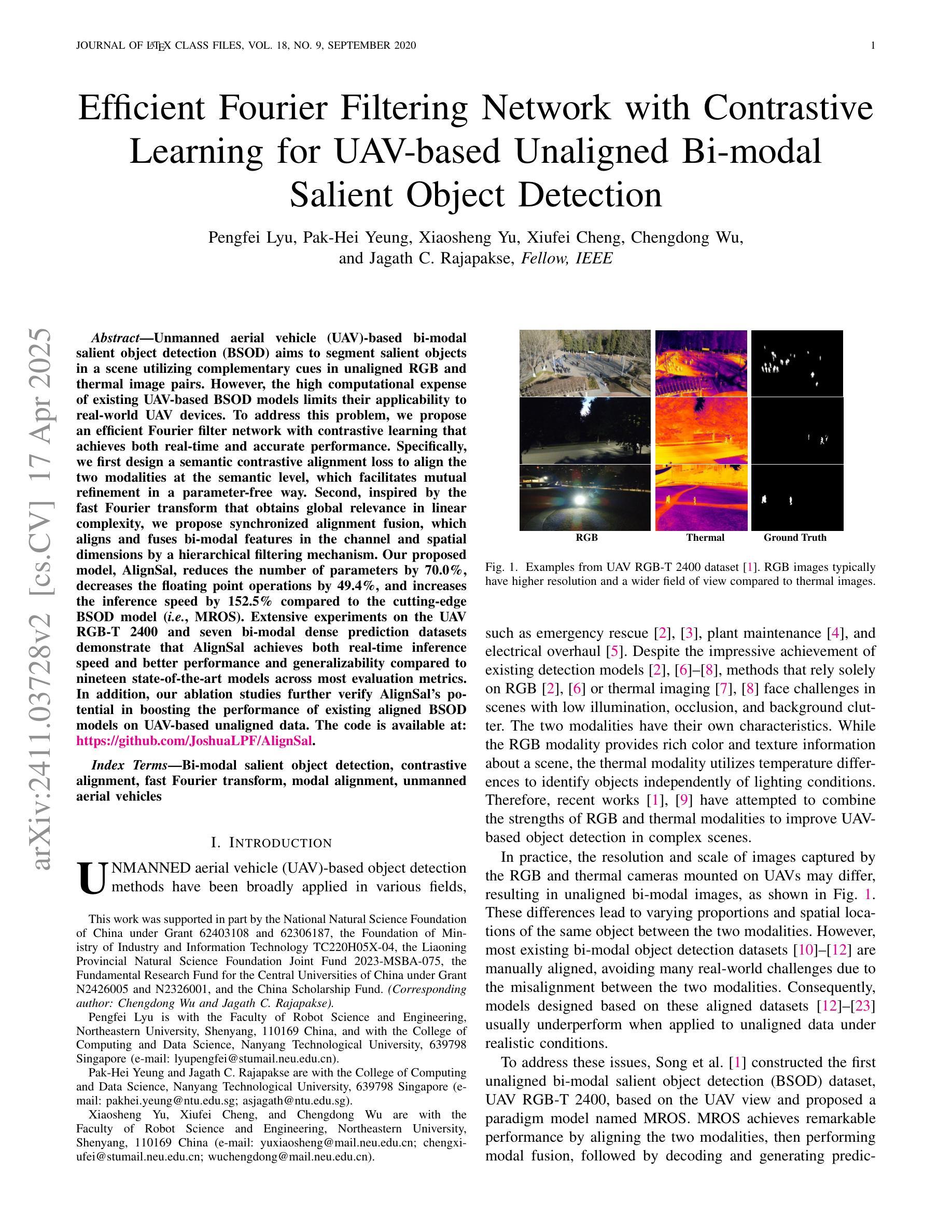

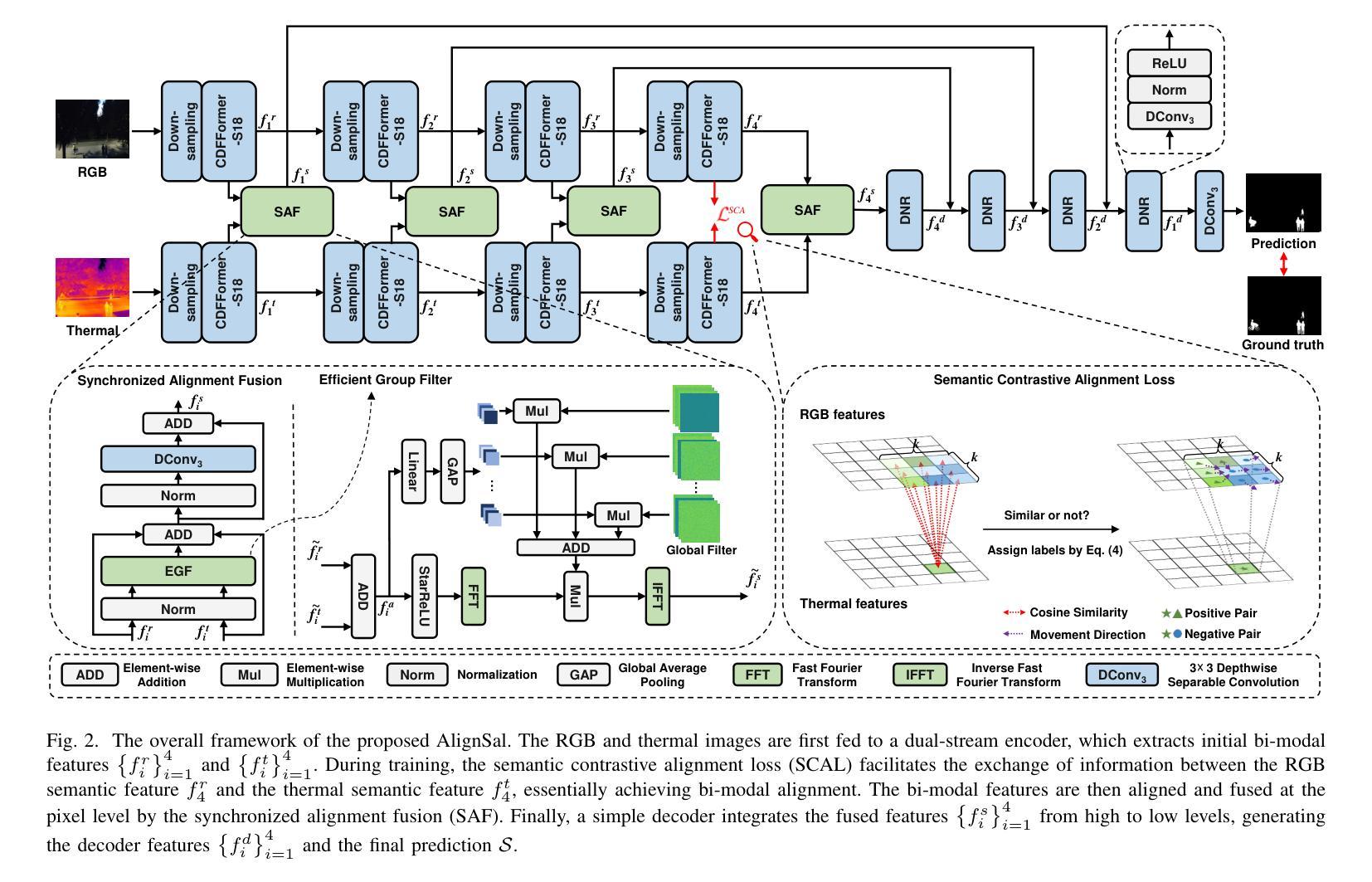

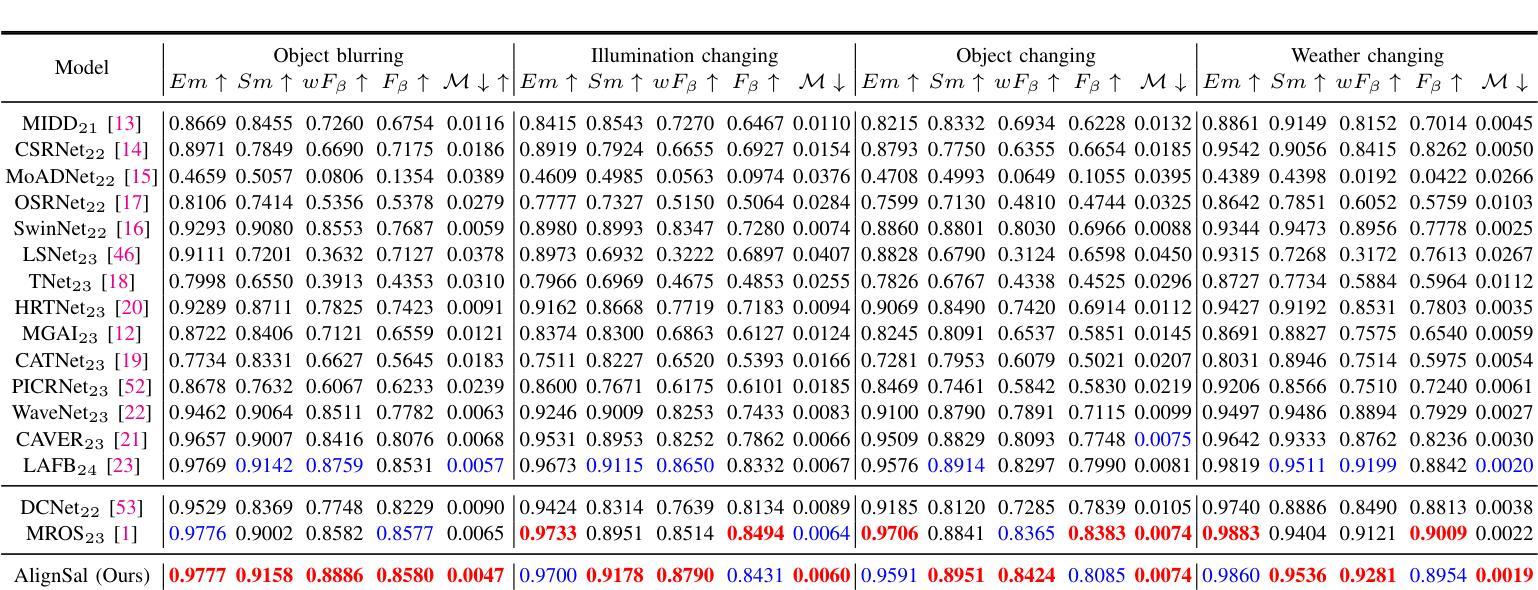

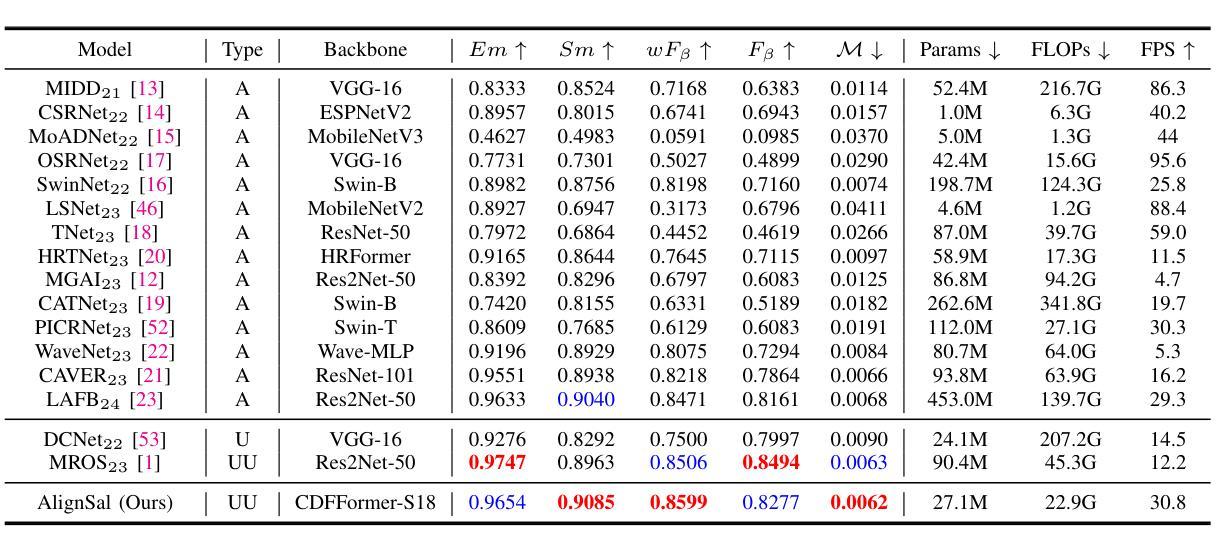

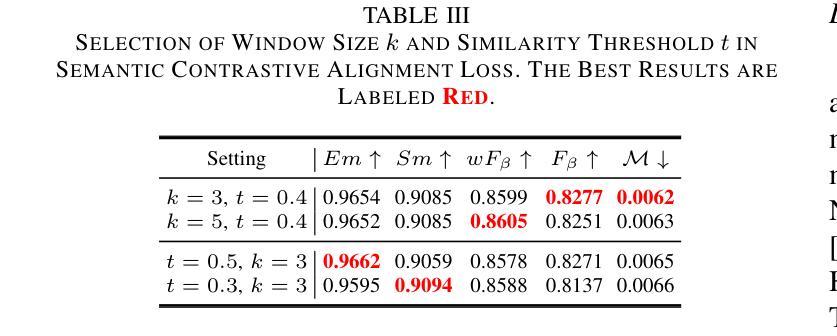

Unmanned aerial vehicle (UAV)-based bi-modal salient object detection (BSOD) aims to segment salient objects in a scene utilizing complementary cues in unaligned RGB and thermal image pairs. However, the high computational expense of existing UAV-based BSOD models limits their applicability to real-world UAV devices. To address this problem, we propose an efficient Fourier filter network with contrastive learning that achieves both real-time and accurate performance. Specifically, we first design a semantic contrastive alignment loss to align the two modalities at the semantic level, which facilitates mutual refinement in a parameter-free way. Second, inspired by the fast Fourier transform that obtains global relevance in linear complexity, we propose synchronized alignment fusion, which aligns and fuses bi-modal features in the channel and spatial dimensions by a hierarchical filtering mechanism. Our proposed model, AlignSal, reduces the number of parameters by 70.0%, decreases the floating point operations by 49.4%, and increases the inference speed by 152.5% compared to the cutting-edge BSOD model (i.e., MROS). Extensive experiments on the UAV RGB-T 2400 and seven bi-modal dense prediction datasets demonstrate that AlignSal achieves both real-time inference speed and better performance and generalizability compared to nineteen state-of-the-art models across most evaluation metrics. In addition, our ablation studies further verify AlignSal’s potential in boosting the performance of existing aligned BSOD models on UAV-based unaligned data. The code is available at: https://github.com/JoshuaLPF/AlignSal.
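As a reading aid, the relative figures in the abstract can be turned into ratios against the MROS baseline. The sketch below performs only that arithmetic. The function names are illustrative, and no absolute parameter counts, FLOPs, or frame rates are assumed, because the abstract does not state them.

```python
def remaining_fraction(reduction_pct: float) -> float:
    """Fraction of the baseline left after reducing it by reduction_pct percent."""
    return 1.0 - reduction_pct / 100.0


def speedup_factor(increase_pct: float) -> float:
    """Multiplicative speedup implied by an increase of increase_pct percent."""
    return 1.0 + increase_pct / 100.0


# Percentages quoted in the abstract, all relative to MROS.
params_ratio = remaining_fraction(70.0)   # share of MROS parameters kept
flops_ratio = remaining_fraction(49.4)    # share of MROS FLOPs kept
speed_ratio = speedup_factor(152.5)       # inference speed relative to MROS

print(f"parameters: {params_ratio:.3f} x MROS")
print(f"FLOPs:      {flops_ratio:.3f} x MROS")
print(f"speed:      {speed_ratio:.3f} x MROS")
```

Read this way, AlignSal keeps roughly three tenths of the parameters and about half of the FLOPs of MROS, while running about two and a half times as fast.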


Paper and project links

PDF Accepted by TGRS 2025

Summary

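To address the complexity and high computational cost of UAV-based bi-modal salient object detection (BSOD), the paper proposes AlignSal, an efficient Fourier filter network combined with contrastive learning. AlignSal aligns the two modalities at the semantic level through a semantic contrastive alignment loss, then aligns and fuses their features with a synchronized alignment fusion mechanism inspired by the fast Fourier transform. Compared with the cutting-edge BSOD model MROS, it reduces parameters by 70.0% and floating point operations by 49.4%, increases inference speed by 152.5%, and achieves better performance and generalizability. The code is publicly available.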
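The exact form of the semantic contrastive alignment loss is not given here. As a rough illustration of the general idea only, the sketch below uses a generic symmetric InfoNCE-style loss over one pooled embedding per image. That formulation, the temperature value, and every function name are assumptions for illustration, not AlignSal's actual loss. It does show why such a term adds no parameters: it is computed purely from the two feature sets.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def info_nce(anchors, positives, temperature=0.1):
    """Mean cross-entropy in which positives[i] is the match for anchors[i]."""
    a = [l2_normalize(v) for v in anchors]
    p = [l2_normalize(v) for v in positives]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarity of anchor i to every candidate, scaled by temperature.
        logits = [sum(x * y for x, y in zip(ai, pj)) / temperature for pj in p]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[i]
    return total / len(a)


def symmetric_alignment_loss(rgb, thermal, temperature=0.1):
    """Average of the RGB-to-thermal and thermal-to-RGB contrastive terms."""
    return 0.5 * (info_nce(rgb, thermal, temperature)
                  + info_nce(thermal, rgb, temperature))


# Toy embeddings (hypothetical values): row i of each list describes scene i.
rgb = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
thermal = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
shuffled = thermal[1:] + thermal[:1]  # pairs deliberately mismatched

print("matched pairs:   ", symmetric_alignment_loss(rgb, thermal))
print("mismatched pairs:", symmetric_alignment_loss(rgb, shuffled))
```

In this toy setup the loss is lower when each RGB embedding is paired with the thermal embedding of the same scene, which is the behavior a semantic-level alignment objective is meant to reward.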

Key Takeaways

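- AlignSal is an efficient model for UAV-based bi-modal salient object detection.

- A semantic contrastive alignment loss aligns the RGB and thermal modalities at the semantic level, enabling mutual refinement without adding parameters.

- Synchronized alignment fusion, inspired by the fast Fourier transform, aligns and fuses bi-modal features in the channel and spatial dimensions.

- Compared with MROS, AlignSal has 70.0% fewer parameters and 49.4% fewer floating point operations, runs inference 152.5% faster, and performs better.

- AlignSal outperforms nineteen state-of-the-art models on most evaluation metrics across UAV RGB-T 2400 and seven bi-modal dense prediction datasets.

- The code is publicly available for other researchers to use and build on.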
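To make the Fourier filtering idea concrete, here is a minimal one-dimensional sketch of filtering in the frequency domain: transform, multiply element-wise by per-frequency weights, and transform back. It is not the paper's synchronized alignment fusion module. The function names and weights are hypothetical, and a naive quadratic-time DFT stands in for an FFT routine so the example needs only the standard library.

```python
import cmath


def dft(values, inverse=False):
    """Naive discrete Fourier transform; an FFT gives the same result faster."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(values[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                for t in range(n))
        out.append(s / n if inverse else s)
    return out


def fourier_filter(signal, freq_weights):
    """Weight each frequency component, then return to the original domain."""
    spectrum = dft(signal)
    filtered = [s * w for s, w in zip(spectrum, freq_weights)]
    # With real input and symmetric real weights the imaginary parts are
    # only rounding noise, so the real part is kept.
    return [v.real for v in dft(filtered, inverse=True)]


signal = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 0.0]

identity = [1.0] * len(signal)              # passes every frequency unchanged
dc_only = [1.0] + [0.0] * (len(signal) - 1)  # keeps only the zero-frequency term

print(fourier_filter(signal, identity))
print(fourier_filter(signal, dc_only))
```

Keeping only the zero-frequency weight sets every output position to the mean of the whole input, so a single element-wise multiplication in the frequency domain already mixes information from all positions. That global reach is the property the fast Fourier transform inspiration refers to.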
