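⚠️ All summaries below were generated by a large language model. They may contain errors, are for reference only, and should be used with caution.

🔴 Note: do not rely on this in serious academic settings. It is intended only for initial screening before reading a paper.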

Updated 2025-05-10

MonoCoP: Chain-of-Prediction for Monocular 3D Object Detection

Authors:Zhihao Zhang, Abhinav Kumar, Girish Chandar Ganesan, Xiaoming Liu

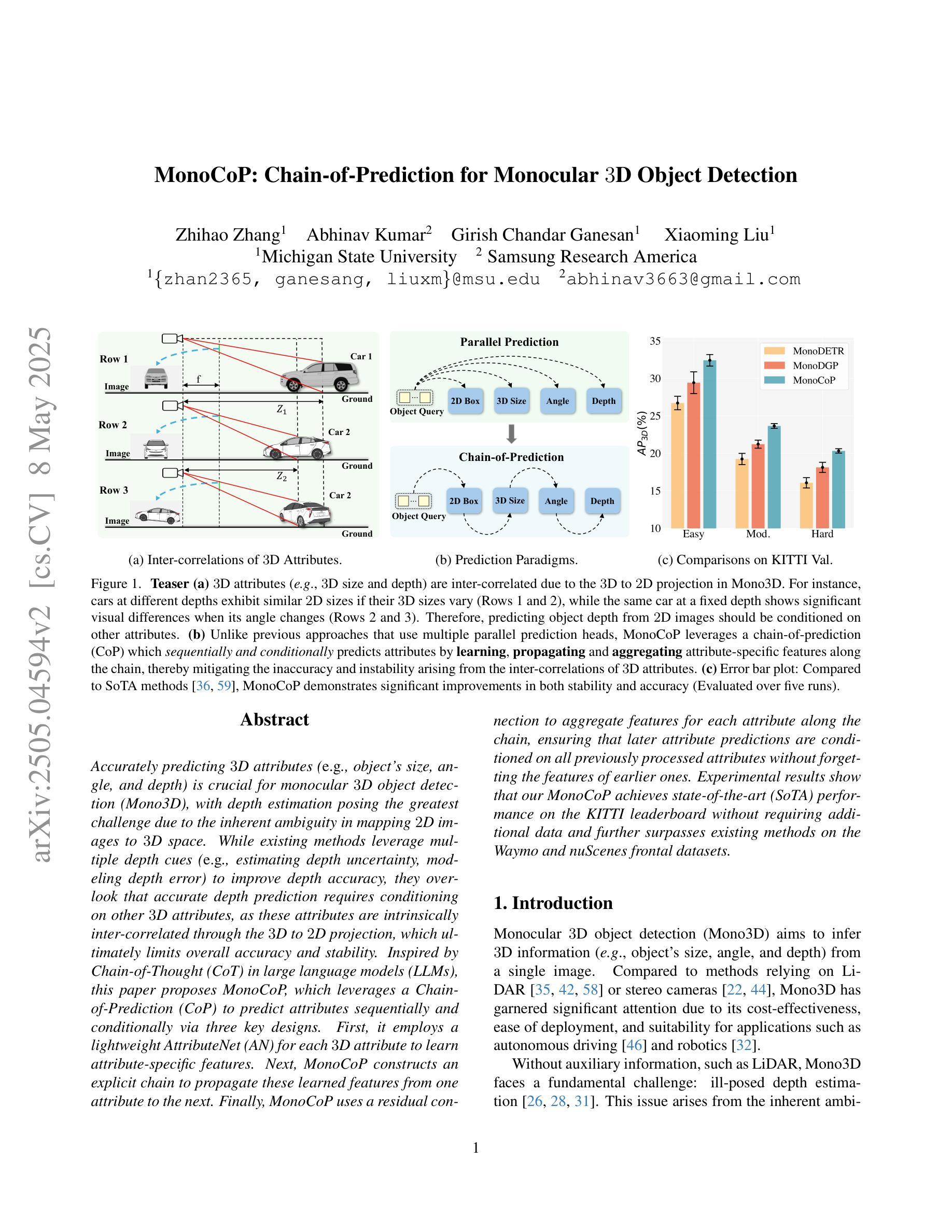

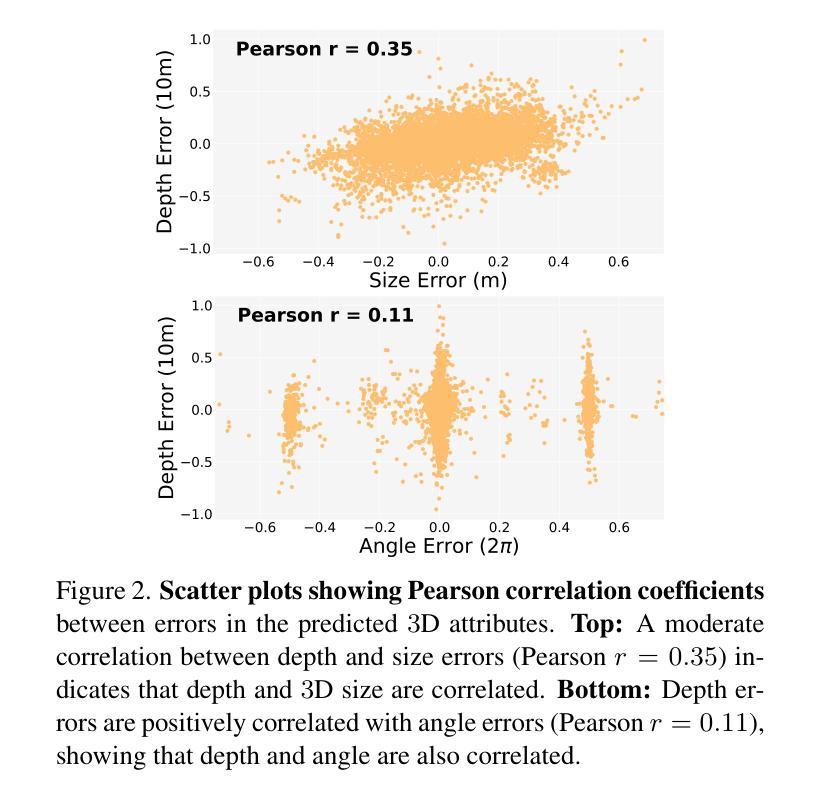

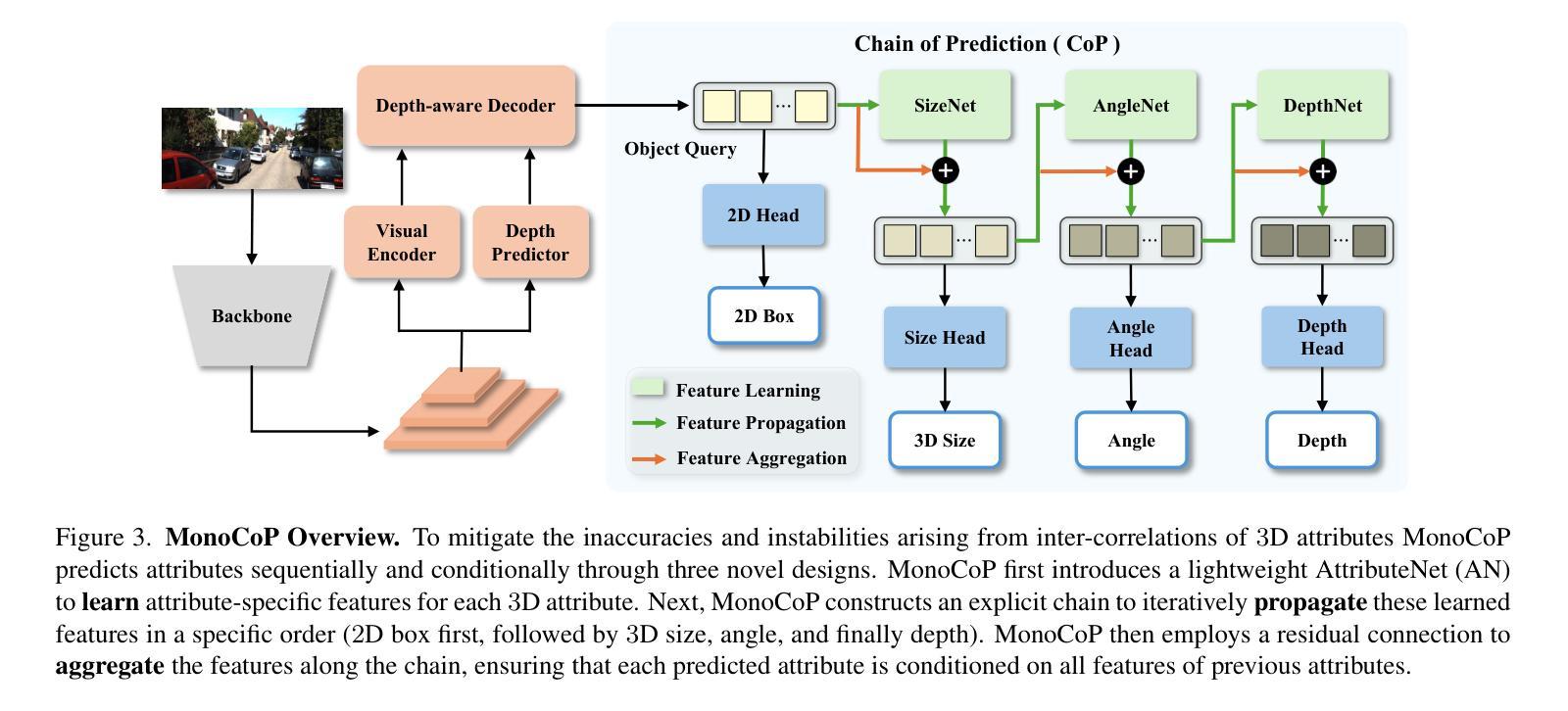

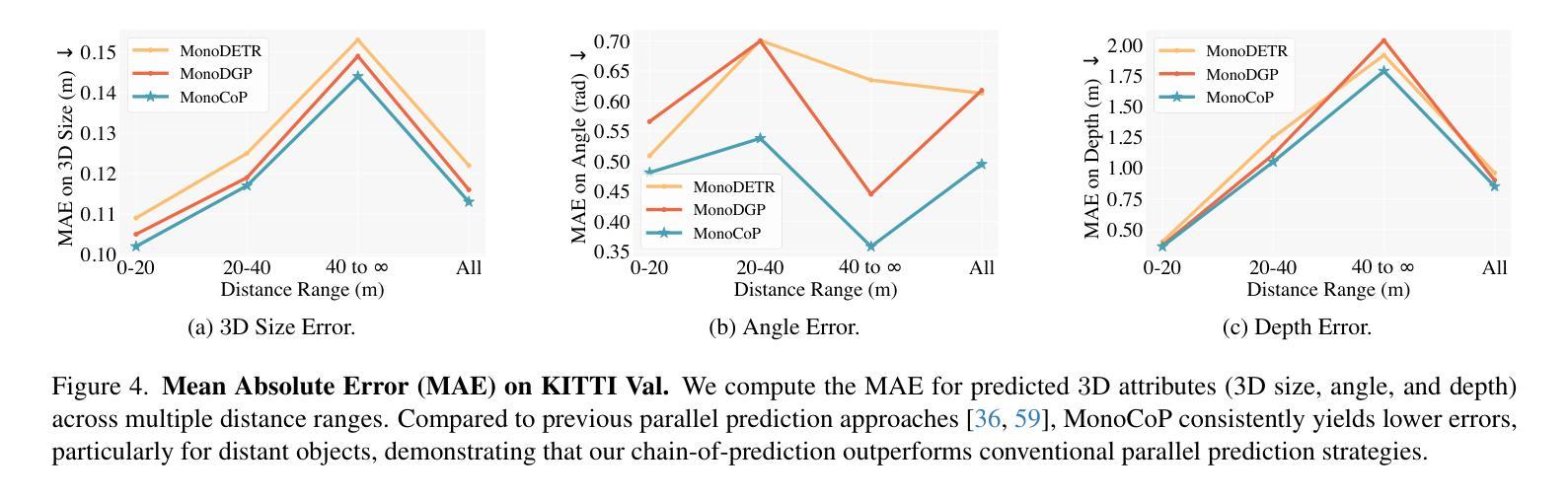

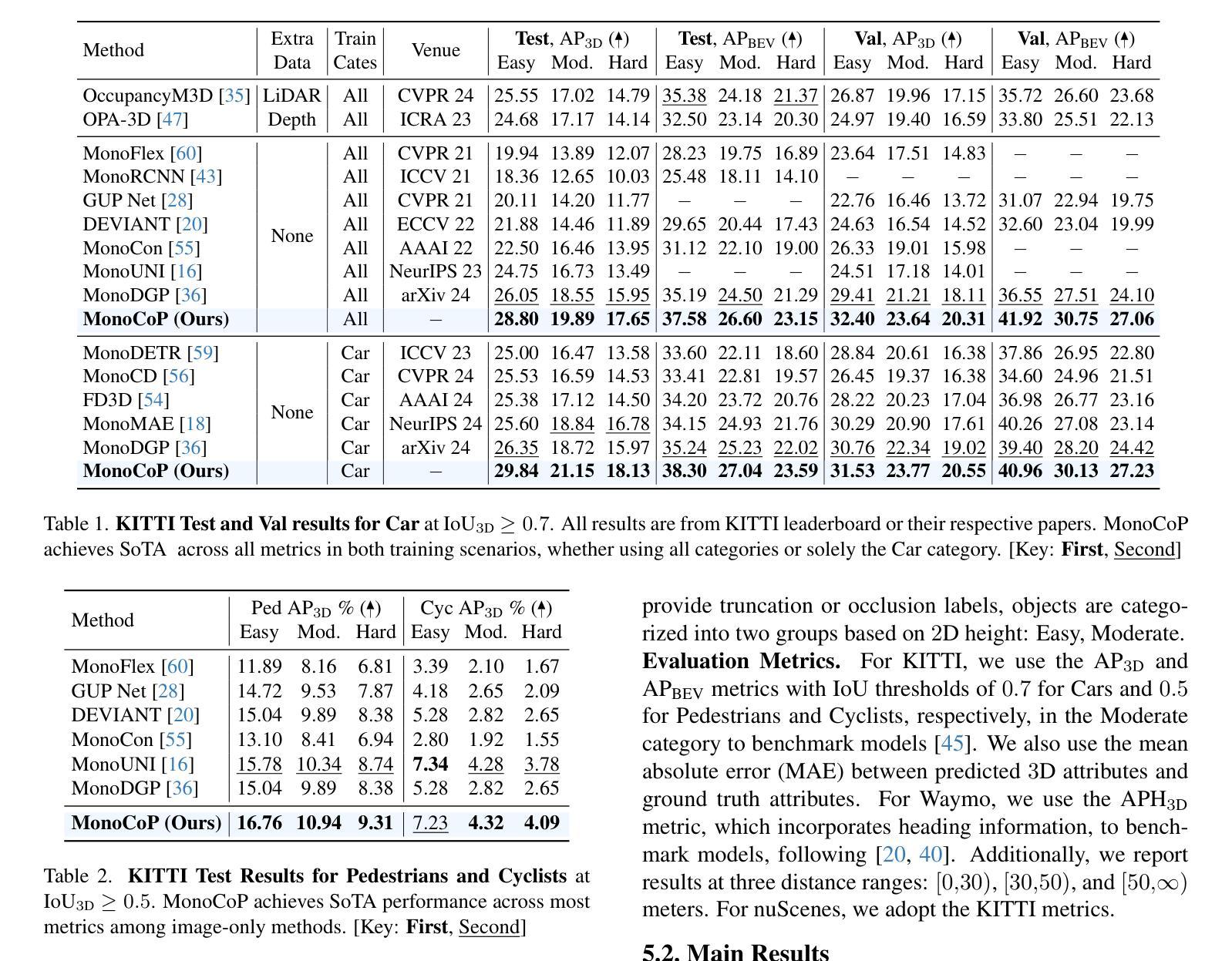

Accurately predicting 3D attributes is crucial for monocular 3D object detection (Mono3D), with depth estimation posing the greatest challenge due to the inherent ambiguity in mapping 2D images to 3D space. While existing methods leverage multiple depth cues (e.g., estimating depth uncertainty, modeling depth error) to improve depth accuracy, they overlook that accurate depth prediction requires conditioning on other 3D attributes, as these attributes are intrinsically inter-correlated through the 3D to 2D projection, which ultimately limits overall accuracy and stability. Inspired by Chain-of-Thought (CoT) in large language models (LLMs), this paper proposes MonoCoP, which leverages a Chain-of-Prediction (CoP) to predict attributes sequentially and conditionally via three key designs. First, it employs a lightweight AttributeNet (AN) for each 3D attribute to learn attribute-specific features. Next, MonoCoP constructs an explicit chain to propagate these learned features from one attribute to the next. Finally, MonoCoP uses a residual connection to aggregate features for each attribute along the chain, ensuring that later attribute predictions are conditioned on all previously processed attributes without forgetting the features of earlier ones. Experimental results show that our MonoCoP achieves state-of-the-art (SoTA) performance on the KITTI leaderboard without requiring additional data and further surpasses existing methods on the Waymo and nuScenes frontal datasets.
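The coupling between depth and the other 3D attributes mentioned in the abstract can be illustrated with the standard pinhole camera model. This is a textbook relation, not a formula taken from the paper. The symbols are introduced here purely for illustration: $f$ is the focal length in pixels, $H$ the object's 3D height, $h$ its projected 2D height in pixels, and $z$ its depth.

$$
h \approx \frac{f\,H}{z}
\quad\Longrightarrow\quad
z \approx \frac{f\,H}{h},
\qquad
\frac{\delta z}{z} \approx \frac{\delta H}{H}
$$

Under this simplified model, a 5% error in the estimated height $H$ carries over as roughly a 5% error in depth $z$, which gives some intuition for why depth prediction may benefit from being conditioned on attributes such as size.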

Summary

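This paper proposes MonoCoP, which uses a Chain-of-Prediction (CoP) to predict 3D attributes in monocular 3D object detection. Three key designs make the prediction sequential and conditional: (1) a lightweight AttributeNet (AN) per 3D attribute learns attribute-specific features; (2) an explicit chain propagates these features from one attribute to the next; (3) a residual connection aggregates each attribute's features along the chain, so that later attribute predictions are conditioned on all previously processed attributes. Experiments show that MonoCoP achieves state-of-the-art performance on the KITTI leaderboard and surpasses existing methods on the Waymo and nuScenes frontal datasets.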

Key Takeaways

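- In monocular 3D object detection, accurate prediction of 3D attributes is crucial, and depth estimation is the hardest part.

- Existing methods use multiple depth cues to improve depth accuracy, but overlook that accurate depth prediction needs to be conditioned on the other 3D attributes.

- MonoCoP uses a Chain-of-Prediction (CoP) to predict attributes sequentially and conditionally.

- MonoCoP rests on three key designs: a per-attribute AttributeNet that learns attribute-specific features, an explicit chain that propagates those features, and a residual connection that aggregates them.

- MonoCoP achieves state-of-the-art performance on the KITTI leaderboard and surpasses existing methods on the Waymo and nuScenes frontal datasets.

- MonoCoP requires no additional data.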
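
The three designs listed above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions, not the authors' implementation. The class layout, the feature dimension, the random weights, the scalar prediction head, and the attribute order `size -> angle -> depth` are all hypothetical choices made here. The real AttributeNet architecture and chain order are not given in this digest.

```python
import random


def linear(x, w, b):
    """Dense layer on list-based vectors: returns w @ x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(x):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in x]


class AttributeNet:
    """Hypothetical stand-in for a lightweight per-attribute network (AN)."""

    def __init__(self, dim, rng):
        self.w = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(dim)]
        self.b = [0.0] * dim
        # Assumed scalar head; real attributes (size, angle) are multi-dimensional.
        self.head = [rng.uniform(-0.5, 0.5) for _ in range(dim)]

    def features(self, x):
        """Attribute-specific features computed from the incoming features."""
        return relu(linear(x, self.w, self.b))

    def predict(self, feat):
        """Scalar prediction from the aggregated features."""
        return sum(h * f for h, f in zip(self.head, feat))


def chain_of_prediction(shared_feat, nets, order):
    """Predict attributes one by one, each conditioned on those before it."""
    preds = {}
    carried = list(shared_feat)  # features propagated along the chain
    for name in order:
        own = nets[name].features(carried)  # design 1: attribute-specific features
        # Designs 2 and 3: pass features on, adding them residually so that
        # earlier attributes' features are kept rather than overwritten.
        carried = [c + o for c, o in zip(carried, own)]
        preds[name] = nets[name].predict(carried)
    return preds, carried


# Usage with made-up inputs: a 4-dimensional shared feature vector.
rng = random.Random(0)
order = ["size", "angle", "depth"]  # assumed order, for illustration only
nets = {name: AttributeNet(4, rng) for name in order}
preds, carried = chain_of_prediction([1.0, 0.5, -0.3, 0.8], nets, order)
print(preds)
```

In this sketch the last attribute in the chain sees the accumulated features of every earlier attribute, while an earlier prediction is unaffected by the networks that come after it.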
