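⚠️ All of the summaries below were generated by a large language model and may contain errors. They are for reference only; use them with caution.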

🔴 Note: never use this in a serious academic setting; it is intended only for initial screening before you read the paper!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-05-15

Multi-Party Supervised Fine-tuning of Language Models for Multi-Party Dialogue Generation

Authors: Xiaoyu Wang, Ningyuan Xi, Teng Chen, Qingqing Gu, Yue Zhao, Xiaokai Chen, Zhonglin Jiang, Yong Chen, Luo Ji

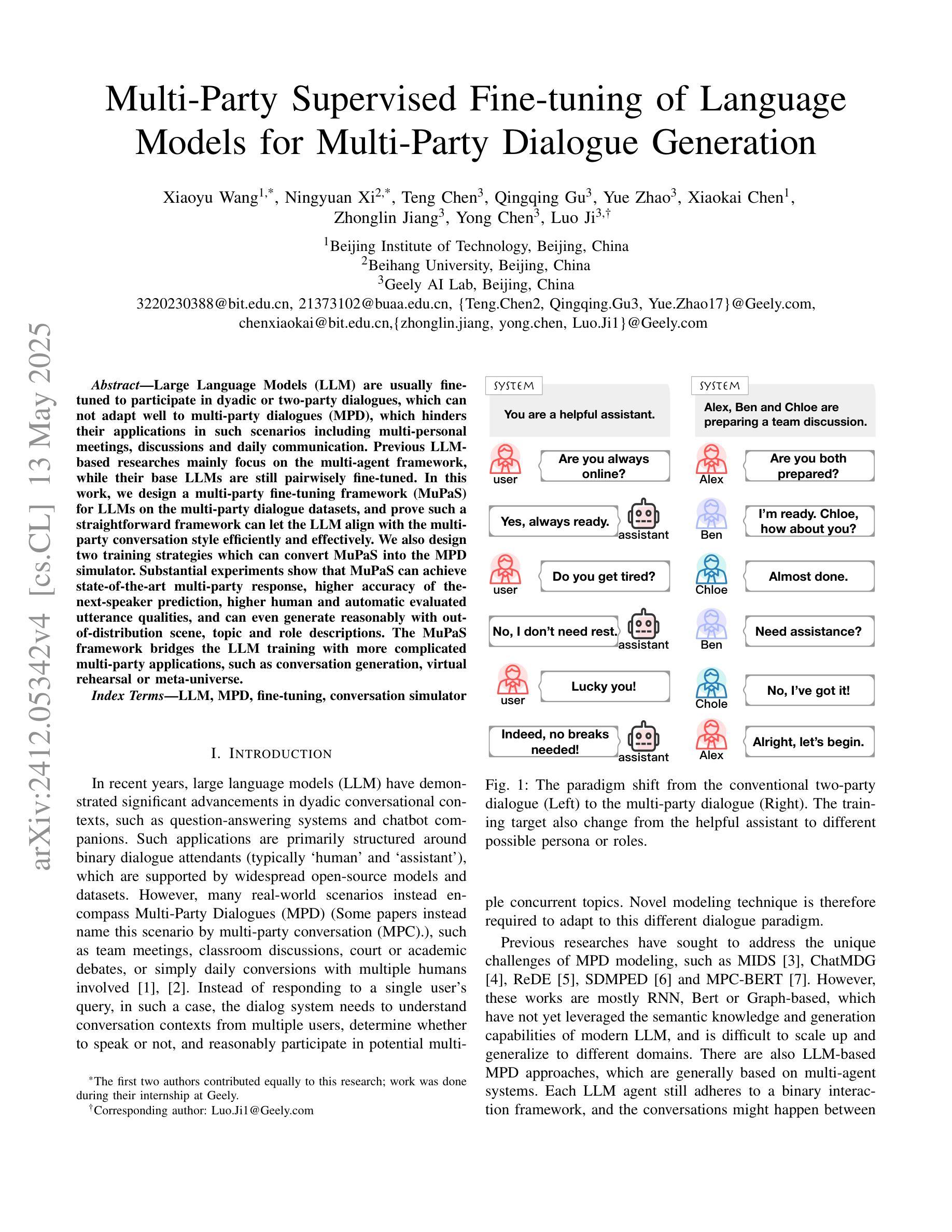

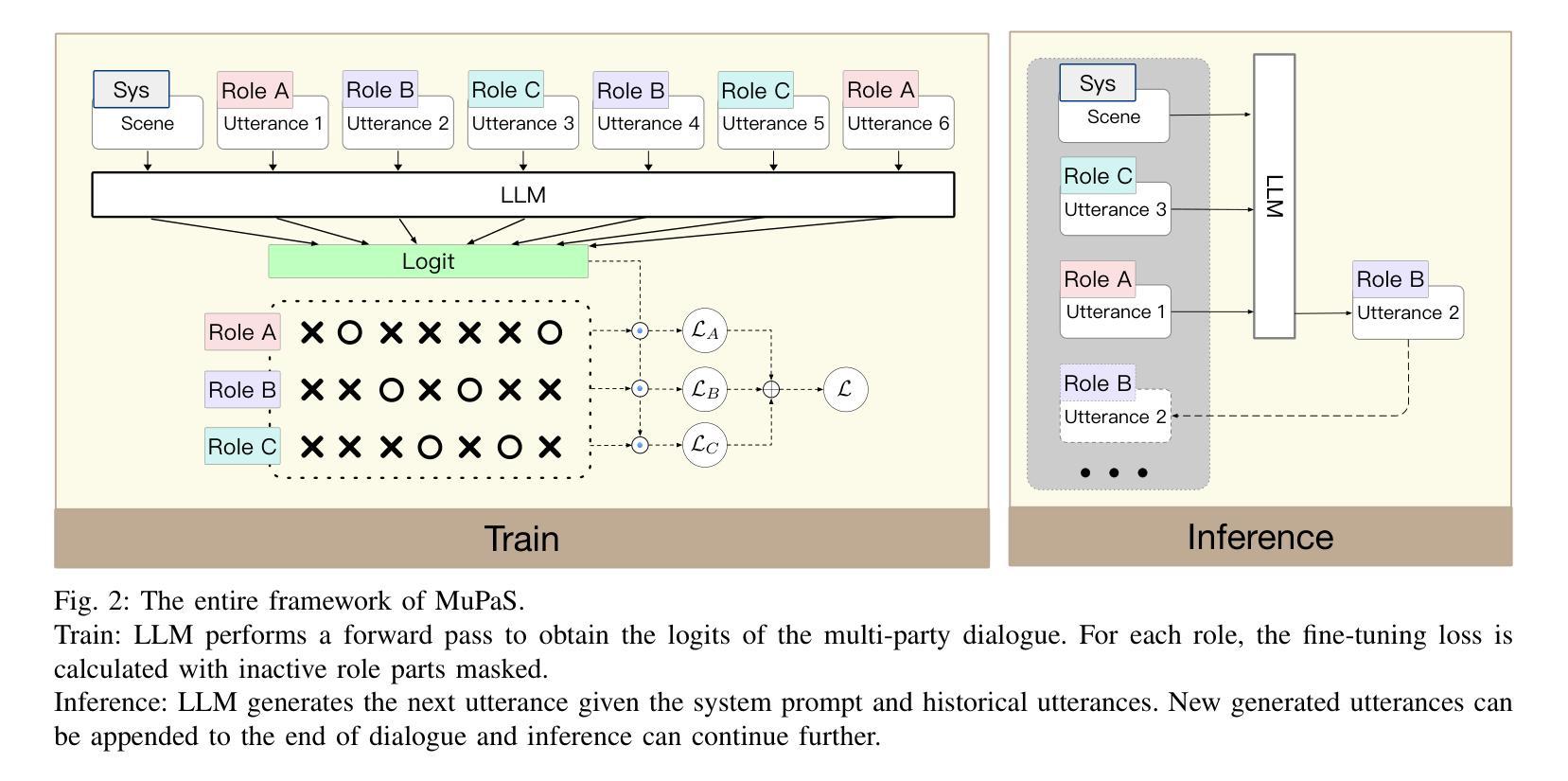

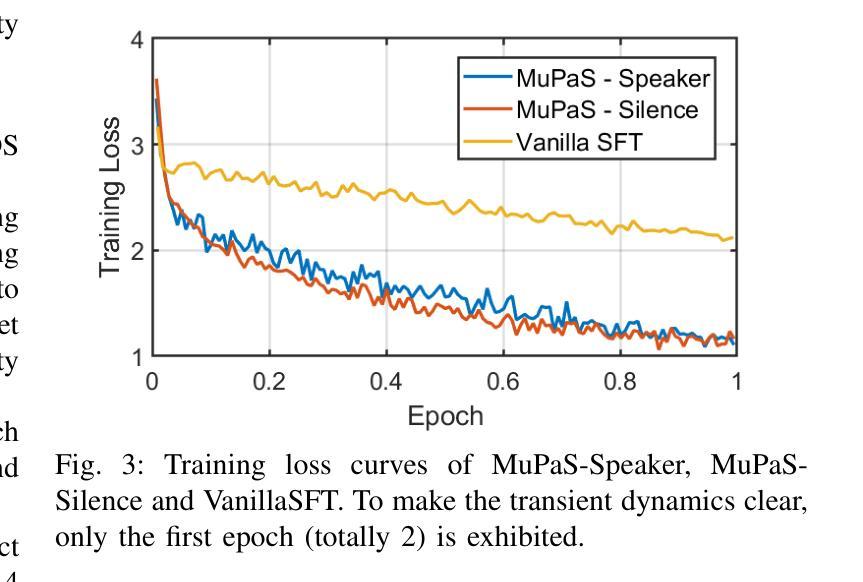

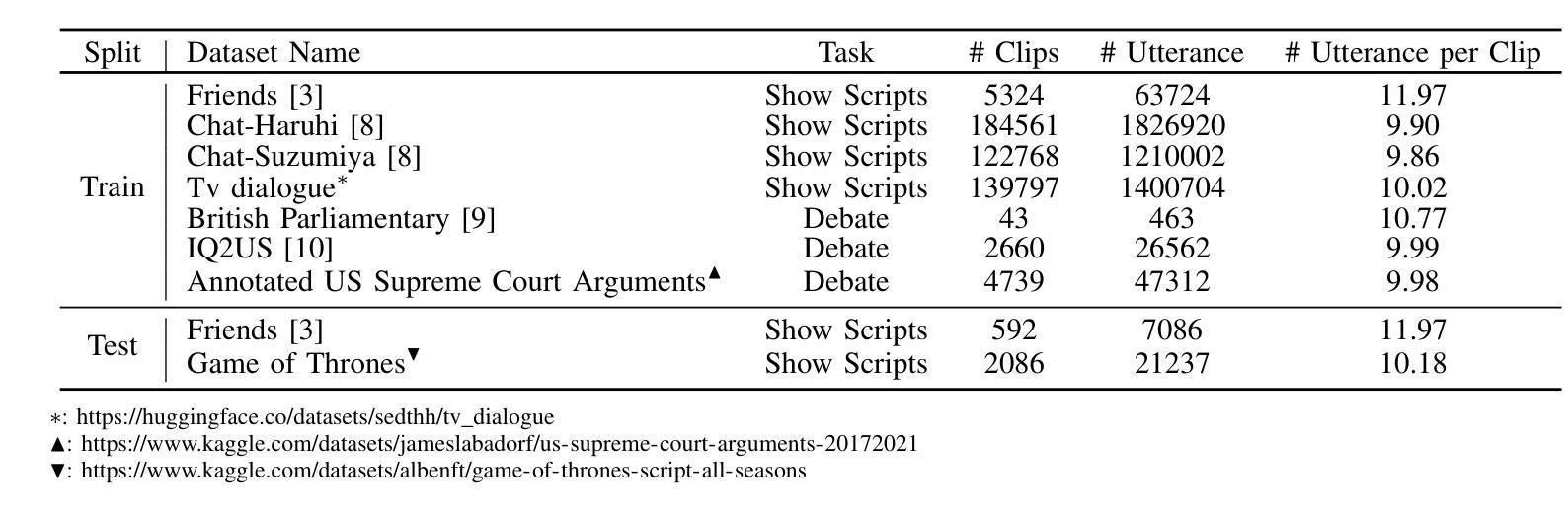

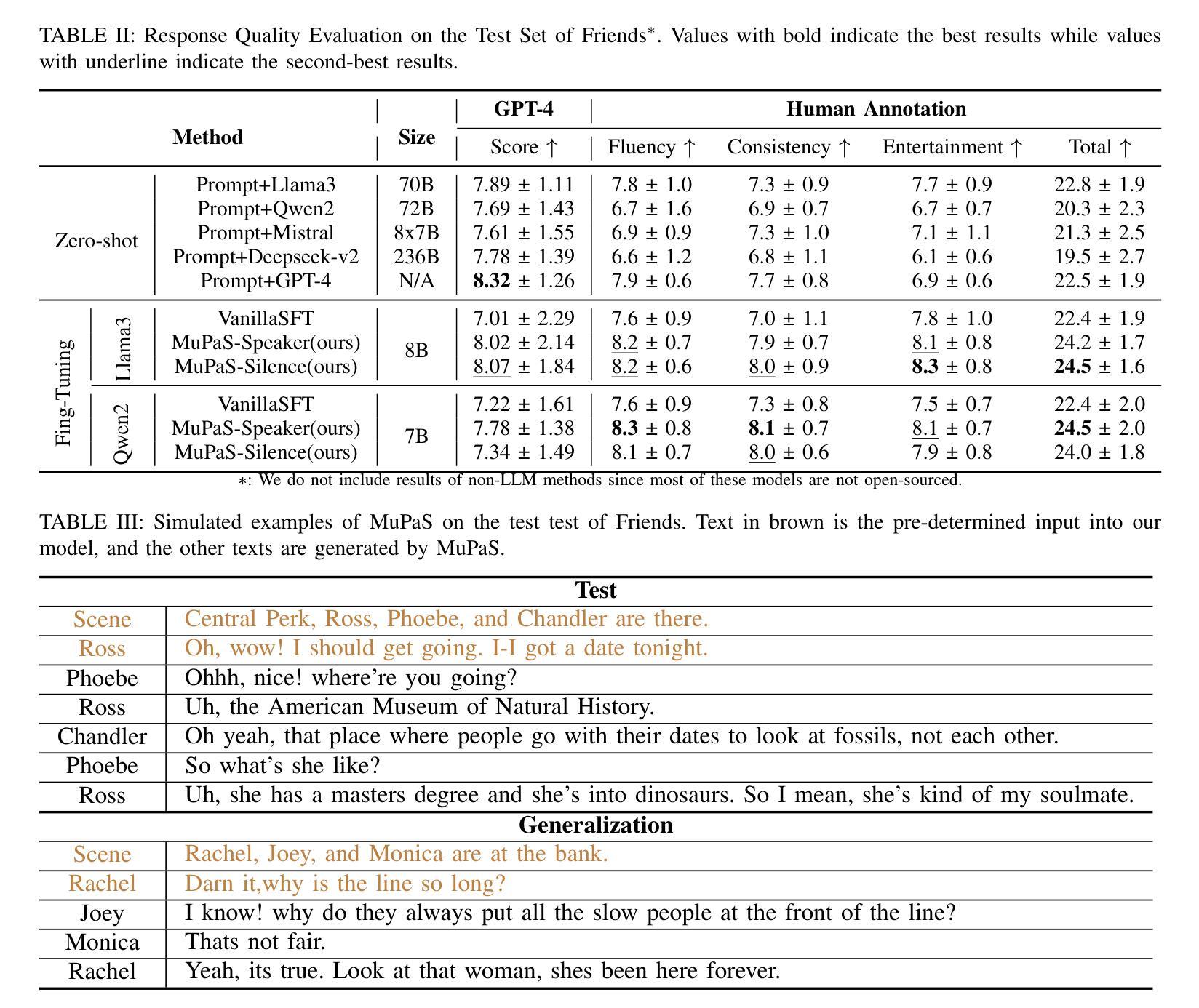

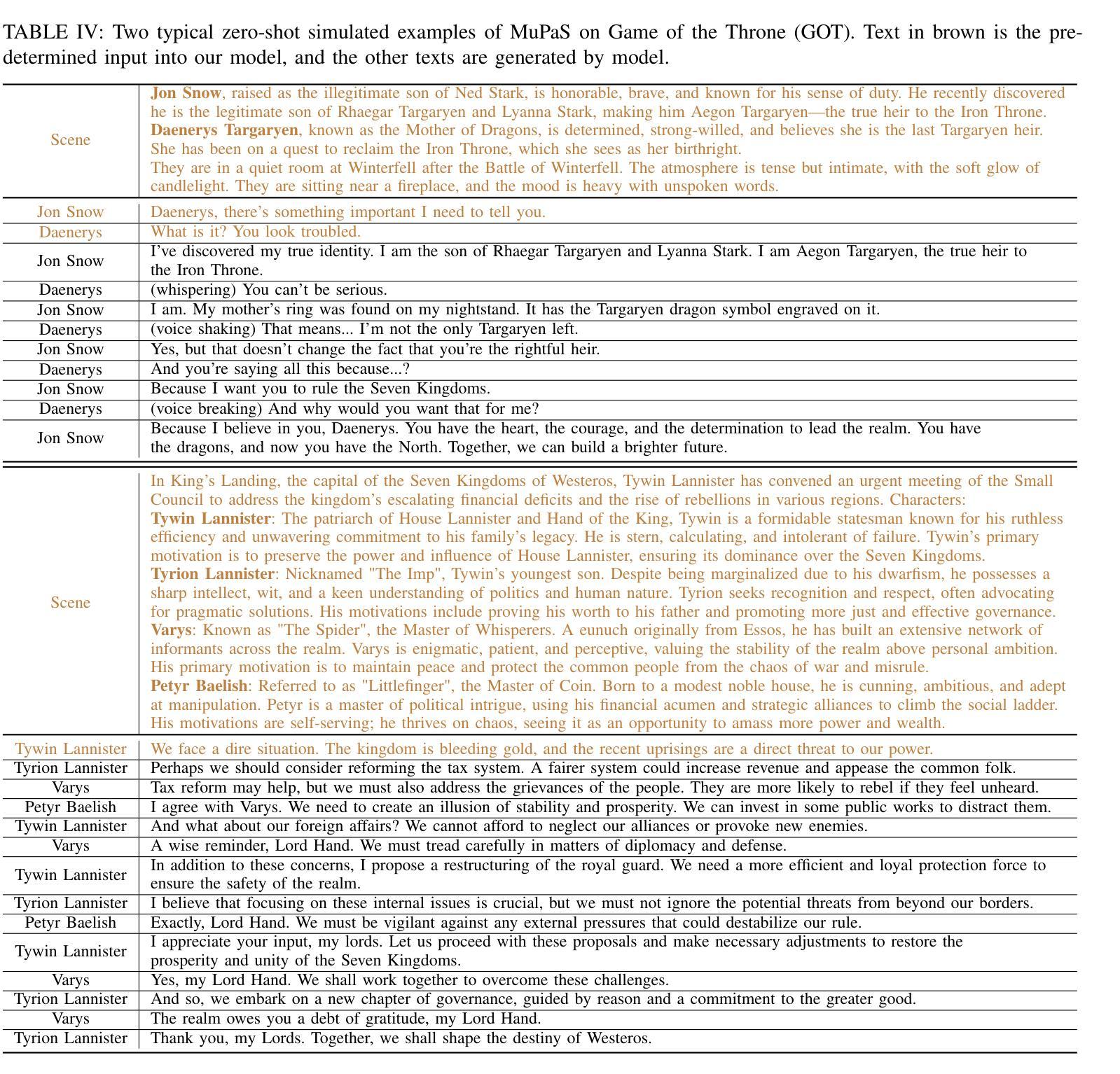

Large Language Models (LLMs) are usually fine-tuned to participate in dyadic, or two-party, dialogues and therefore do not adapt well to multi-party dialogues (MPD), which hinders their application in scenarios such as multi-person meetings, discussions and daily communication. Previous LLM-based research mainly focuses on multi-agent frameworks, while the underlying base LLMs are still fine-tuned on pairwise dialogues. In this work, we design a multi-party fine-tuning framework (MuPaS) for LLMs on multi-party dialogue datasets, and demonstrate that such a straightforward framework lets the LLM align with the multi-party conversation style efficiently and effectively. We also design two training strategies that turn MuPaS into an MPD simulator. Extensive experiments show that MuPaS achieves state-of-the-art multi-party responses, higher next-speaker prediction accuracy, and higher utterance quality under both human and automatic evaluation, and can even generate reasonable responses for out-of-distribution scene, topic and role descriptions. The MuPaS framework bridges LLM training with more complex multi-party applications, such as conversation generation, virtual rehearsal or the metaverse.
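The abstract does not spell out how MuPaS serializes a multi-party dialogue for fine-tuning. As a rough illustration only, the sketch below assumes one plausible format: each sample's prompt holds the scene, the role descriptions and the dialogue history, and the target is the next turn written as "Speaker: utterance", so a single supervised loss covers both who speaks next and what they say. `Turn`, `build_sft_samples` and the prompt layout are hypothetical choices, not the paper's actual data format.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Turn:
    speaker: str  # name of the participant who produced this turn
    text: str     # the utterance itself


def build_sft_samples(scene: str, roles: Dict[str, str], turns: List[Turn]) -> List[Dict[str, str]]:
    """Split one multi-party dialogue into (prompt, target) pairs.

    Hypothetical layout: the prompt is the scene, the role descriptions and
    the dialogue so far; the target is the next turn as "Speaker: utterance".
    In a typical SFT setup the loss would be computed on the target tokens only.
    """
    header = [f"Scene: {scene}"]
    header += [f"[Role] {name}: {desc}" for name, desc in roles.items()]
    samples: List[Dict[str, str]] = []
    history: List[str] = []
    for turn in turns:
        target = f"{turn.speaker}: {turn.text}"
        prompt = "\n".join(header + ["Dialogue:"] + history)
        samples.append({"prompt": prompt, "target": target})
        history.append(target)  # the gold turn becomes context for the next sample
    return samples


if __name__ == "__main__":
    turns = [
        Turn("Alice", "Shall we start the meeting?"),
        Turn("Bob", "Yes, I have the agenda ready."),
        Turn("Carol", "I'd like to add one item."),
    ]
    roles = {"Alice": "project lead", "Bob": "engineer", "Carol": "designer"}
    samples = build_sft_samples("Weekly team meeting", roles, turns)
    print(len(samples))                           # 3
    print(samples[1]["target"])                   # Bob: Yes, I have the agenda ready.
    print(samples[2]["prompt"].splitlines()[-1])  # Bob: Yes, I have the agenda ready.
```

Putting the speaker name inside the target is just one simple way to let a single model both pick the next speaker and write the utterance; the two training strategies described in the paper may structure this differently.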


Paper and project links

Accepted by IJCNN 2025 (PDF)

Summary

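Large language models (LLMs) adapt poorly to multi-party dialogues (MPD), which limits their use in scenarios such as multi-person meetings, discussions and everyday communication. To address this, the work designs a multi-party fine-tuning framework (MuPaS) trained on multi-party dialogue datasets and shows that it enables LLMs to adapt effectively to the multi-party conversation style. It also designs two training strategies that turn MuPaS into an MPD simulator. Experiments show that MuPaS achieves state-of-the-art multi-party responses and higher next-speaker prediction accuracy, and improves utterance quality under both human and automatic evaluation. The MuPaS framework also bridges LLM training with more complex multi-party applications such as conversation generation, virtual rehearsal and the metaverse.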

Key Takeaways

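- Large language models (LLMs) perform poorly in multi-party dialogues (MPD), which limits their use in multi-person scenarios.

- A multi-party fine-tuning framework (MuPaS) is proposed to address this problem.

- MuPaS effectively adapts LLMs to the multi-party conversation style.

- Two training strategies are designed to turn MuPaS into an MPD simulator.

- MuPaS achieves state-of-the-art results in multi-party response generation and next-speaker prediction.

- MuPaS improves utterance quality under both human and automatic evaluation.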
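The summary reports higher next-speaker prediction accuracy without saying how it is scored. A minimal sketch, assuming the model writes each generated turn as "Speaker: utterance" and that a prediction counts as correct only on an exact speaker-name match; `parse_speaker` and `next_speaker_accuracy` are hypothetical helpers, not the paper's evaluation code.

```python
from typing import List, Optional


def parse_speaker(generated: str) -> Optional[str]:
    """Return the name before the first ':' in a 'Speaker: utterance' string, or None."""
    head, sep, _ = generated.strip().partition(":")
    name = head.strip()
    return name if sep and name else None


def next_speaker_accuracy(predictions: List[str], gold_speakers: List[str]) -> float:
    """Fraction of generated turns whose parsed speaker exactly matches the gold speaker."""
    if len(predictions) != len(gold_speakers):
        raise ValueError("predictions and gold_speakers must have the same length")
    if not predictions:
        return 0.0
    # A turn with no parsable speaker tag (None) never matches and counts as wrong.
    correct = sum(parse_speaker(p) == g for p, g in zip(predictions, gold_speakers))
    return correct / len(predictions)


if __name__ == "__main__":
    preds = ["Bob: Sounds good.", "Alice: Let's move on.", "no speaker tag here"]
    gold = ["Bob", "Carol", "Alice"]
    print(round(next_speaker_accuracy(preds, gold), 3))  # 0.333
```

Exact string match is the simplest scoring rule; a real evaluation might also normalize names or handle aliases.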
