⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-05-17 更新

DINO-X: A Unified Vision Model for Open-World Object Detection and Understanding

Authors:Tianhe Ren, Yihao Chen, Qing Jiang, Zhaoyang Zeng, Yuda Xiong, Wenlong Liu, Zhengyu Ma, Junyi Shen, Yuan Gao, Xiaoke Jiang, Xingyu Chen, Zhuheng Song, Yuhong Zhang, Hongjie Huang, Han Gao, Shilong Liu, Hao Zhang, Feng Li, Kent Yu, Lei Zhang

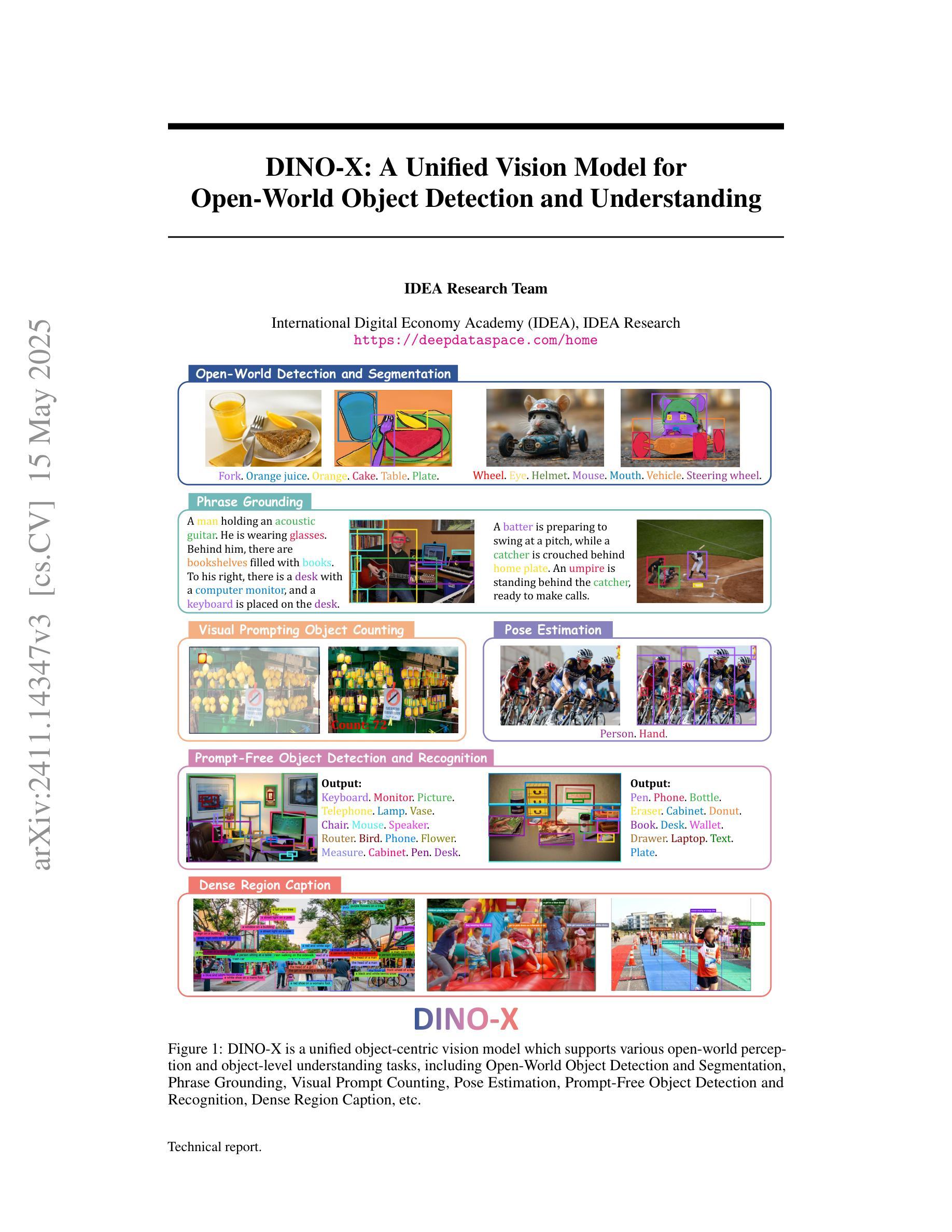

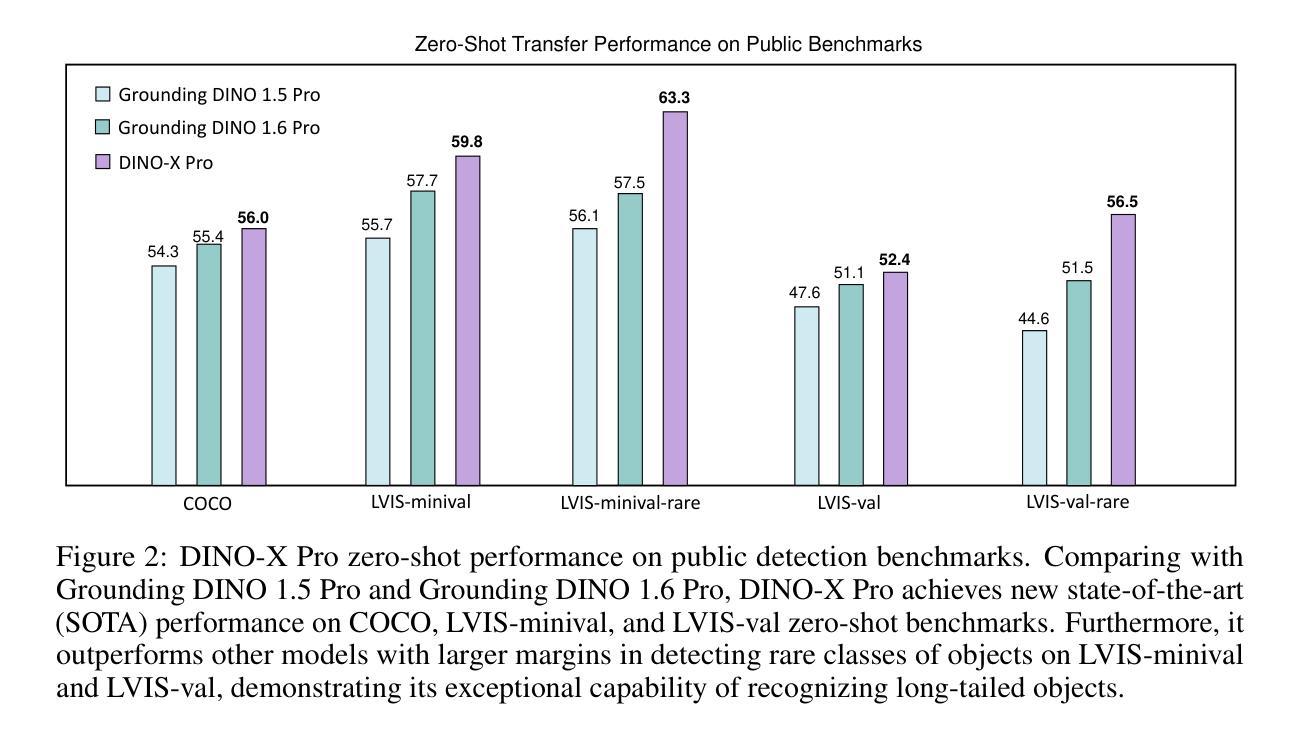

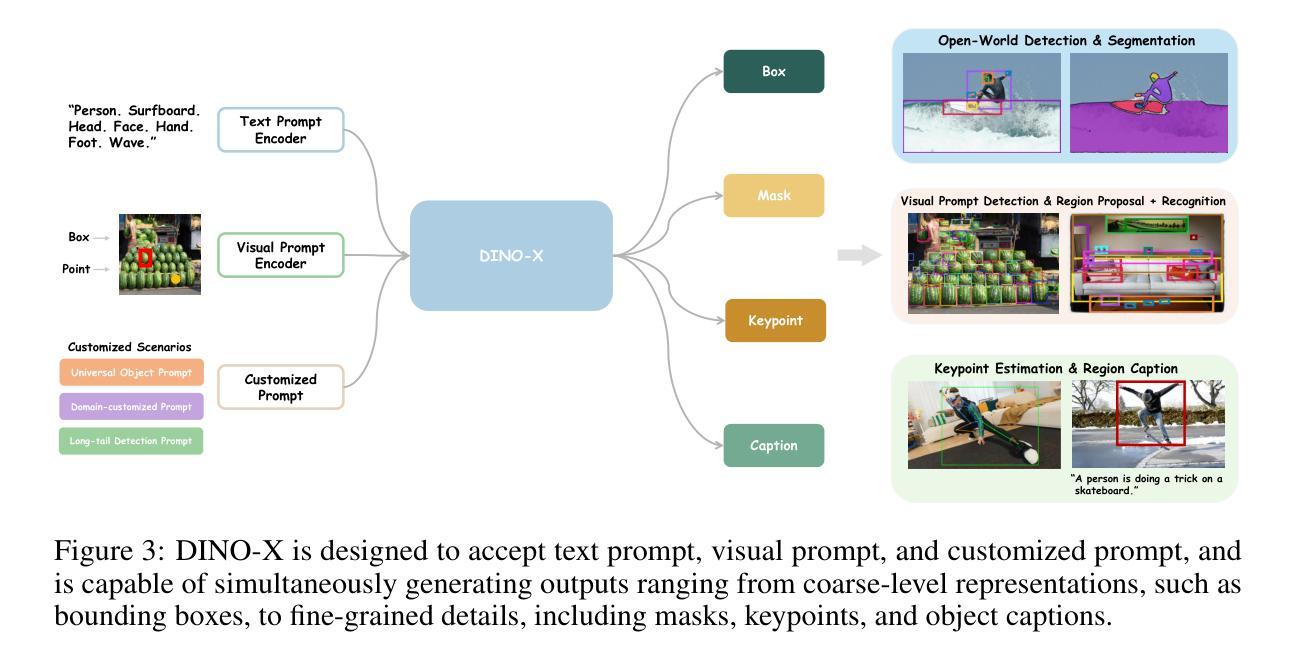

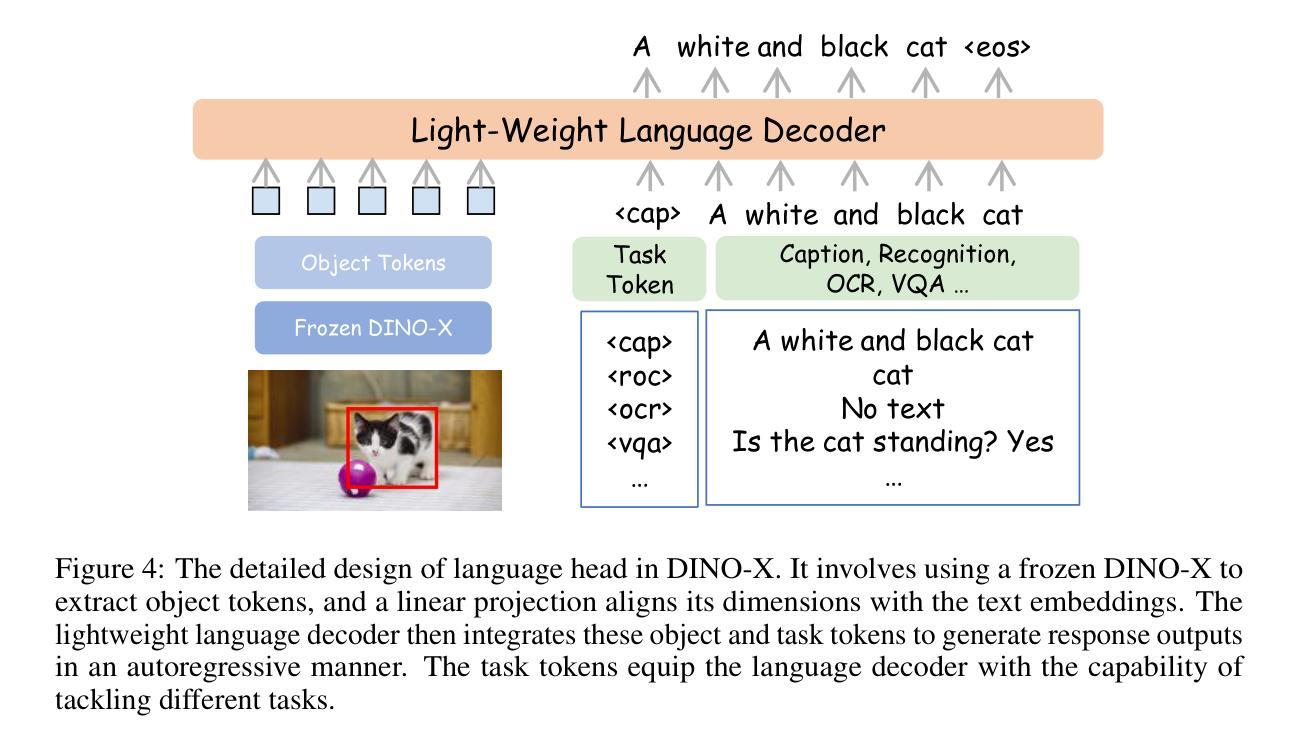

In this paper, we introduce DINO-X, which is a unified object-centric vision model developed by IDEA Research with the best open-world object detection performance to date. DINO-X employs the same Transformer-based encoder-decoder architecture as Grounding DINO 1.5 to pursue an object-level representation for open-world object understanding. To make long-tailed object detection easy, DINO-X extends its input options to support text prompt, visual prompt, and customized prompt. With such flexible prompt options, we develop a universal object prompt to support prompt-free open-world detection, making it possible to detect anything in an image without requiring users to provide any prompt. To enhance the model’s core grounding capability, we have constructed a large-scale dataset with over 100 million high-quality grounding samples, referred to as Grounding-100M, for advancing the model’s open-vocabulary detection performance. Pre-training on such a large-scale grounding dataset leads to a foundational object-level representation, which enables DINO-X to integrate multiple perception heads to simultaneously support multiple object perception and understanding tasks, including detection, segmentation, pose estimation, object captioning, object-based QA, etc. Experimental results demonstrate the superior performance of DINO-X. Specifically, the DINO-X Pro model achieves 56.0 AP, 59.8 AP, and 52.4 AP on the COCO, LVIS-minival, and LVIS-val zero-shot object detection benchmarks, respectively. Notably, it scores 63.3 AP and 56.5 AP on the rare classes of LVIS-minival and LVIS-val benchmarks, improving the previous SOTA performance by 5.8 AP and 5.0 AP. Such a result underscores its significantly improved capacity for recognizing long-tailed objects.

本文介绍了由IDEA Research开发的DINO-X,这是一种统一的对象级视觉模型,具有迄今为止最佳的开放世界对象检测性能。DINO-X采用与Grounding DINO 1.5相同的基于Transformer的编码器-解码器架构,追求对象级别的表示用于开放世界对象理解。为了简化长尾对象检测,DINO-X扩展了其输入选项以支持文本提示、视觉提示和自定义提示。凭借如此灵活的提示选项,我们开发了一种通用对象提示来支持无提示的开放世界检测,使得无需用户提供任何提示即可在图像中检测任何事物成为可能。为了提高模型的核心定位能力,我们构建了一个大规模数据集,称为Grounding-100M,包含超过1亿个高质量定位样本,用于提升模型的开放词汇检测性能。在如此大规模的定位数据集上进行预训练,为DINO-X提供了基础的对象级别表示,使其能够集成多个感知头来同时支持多个对象感知和理解任务,包括检测、分割、姿态估计、对象描述、基于对象的问答等。实验结果表明DINO-X的性能卓越。具体来说,DINO-X Pro模型在COCO、LVIS-minival和LVIS-val零样本对象检测基准测试上的得分分别为56.0 AP、59.8 AP和52.4 AP。值得注意的是,它在LVIS-minival和LVIS-val基准测试的罕见类别上的得分分别为63.3 AP和56.5 AP,比之前的最佳性能提高了5.8 AP和5.0 AP。这一结果突显了其在识别长尾对象方面的显著改进能力。

论文及项目相关链接

PDF Technical Report

Summary

本文介绍了由IDEA Research开发的一体化对象中心视觉模型DINO-X,具有迄今为止最佳的开放世界对象检测性能。DINO-X追求对象级别的表示,以支持开放世界中的对象理解。它支持文本提示、视觉提示和自定义提示等多种输入选项,开发了通用对象提示以实现无需提示的开放世界检测。通过构建大规模的Grounding-100M数据集,增强了模型的核心定位能力,并推动了开放词汇检测性能的提升。预训练在这样的大规模定位数据集上,使DINO-X能够进行多种对象感知和理解任务,如检测、分割、姿态估计、对象描述和基于对象的问答等。实验结果表明DINO-X性能卓越。

Key Takeaways

- DINO-X是一个一体化的对象中心视觉模型,具有优秀的开放世界对象检测性能。

- 采用与Grounding DINO 1.5相同的Transformer-based编码器-解码器架构,追求对象级别的表示。

- 支持文本提示、视觉提示和自定义提示的灵活输入选项,开发了通用对象提示实现无需提示的开放世界检测。

- 构建大规模的Grounding-100M数据集以增强模型的核心定位能力。

- 预训练在大型定位数据集上,使DINO-X能够支持多种对象感知和理解任务。

- DINO-X在COCO、LVIS-minival和LVIS-val等基准测试中表现出卓越的性能。

点此查看论文截图