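⚠️ All summaries below are generated by a large language model. They may contain errors, are for reference only, and should be used with caution.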

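🔴 Please note: never use these summaries in serious academic settings. They are only meant for initial screening before reading a paper!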

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-05-20

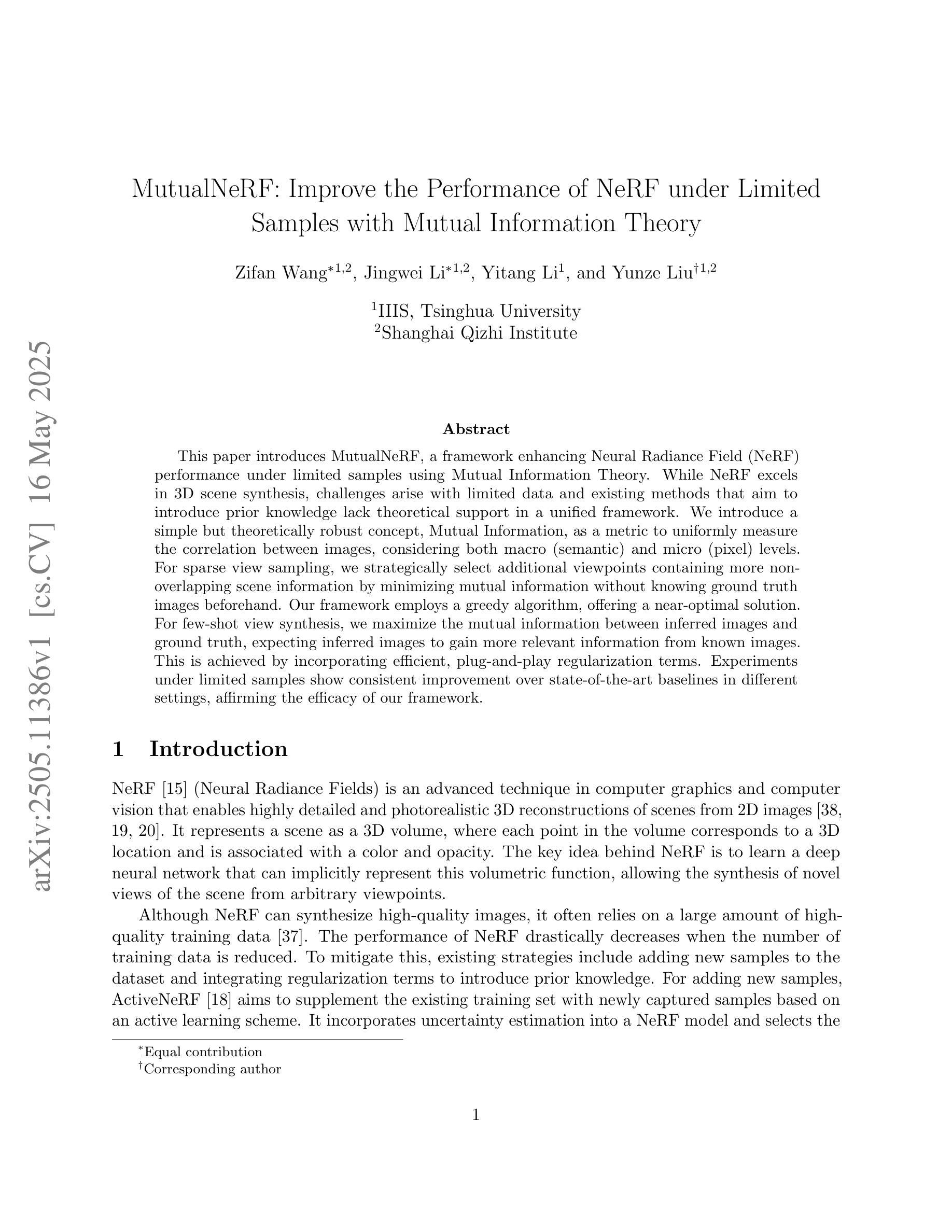

MutualNeRF: Improve the Performance of NeRF under Limited Samples with Mutual Information Theory

Authors: Zifan Wang, Jingwei Li, Yitang Li, Yunze Liu

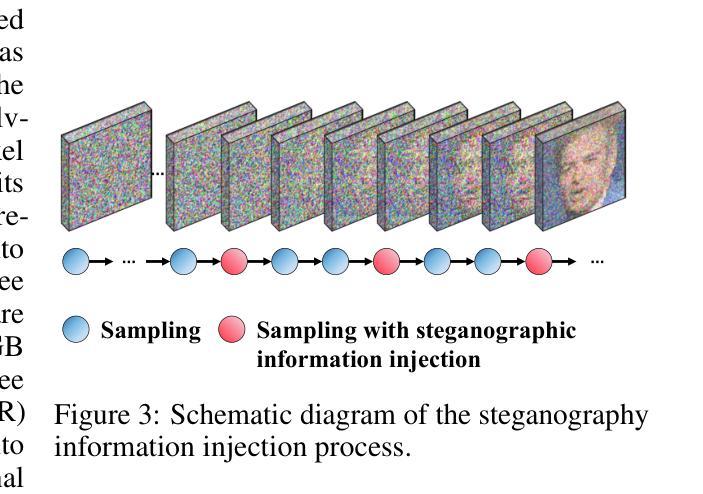

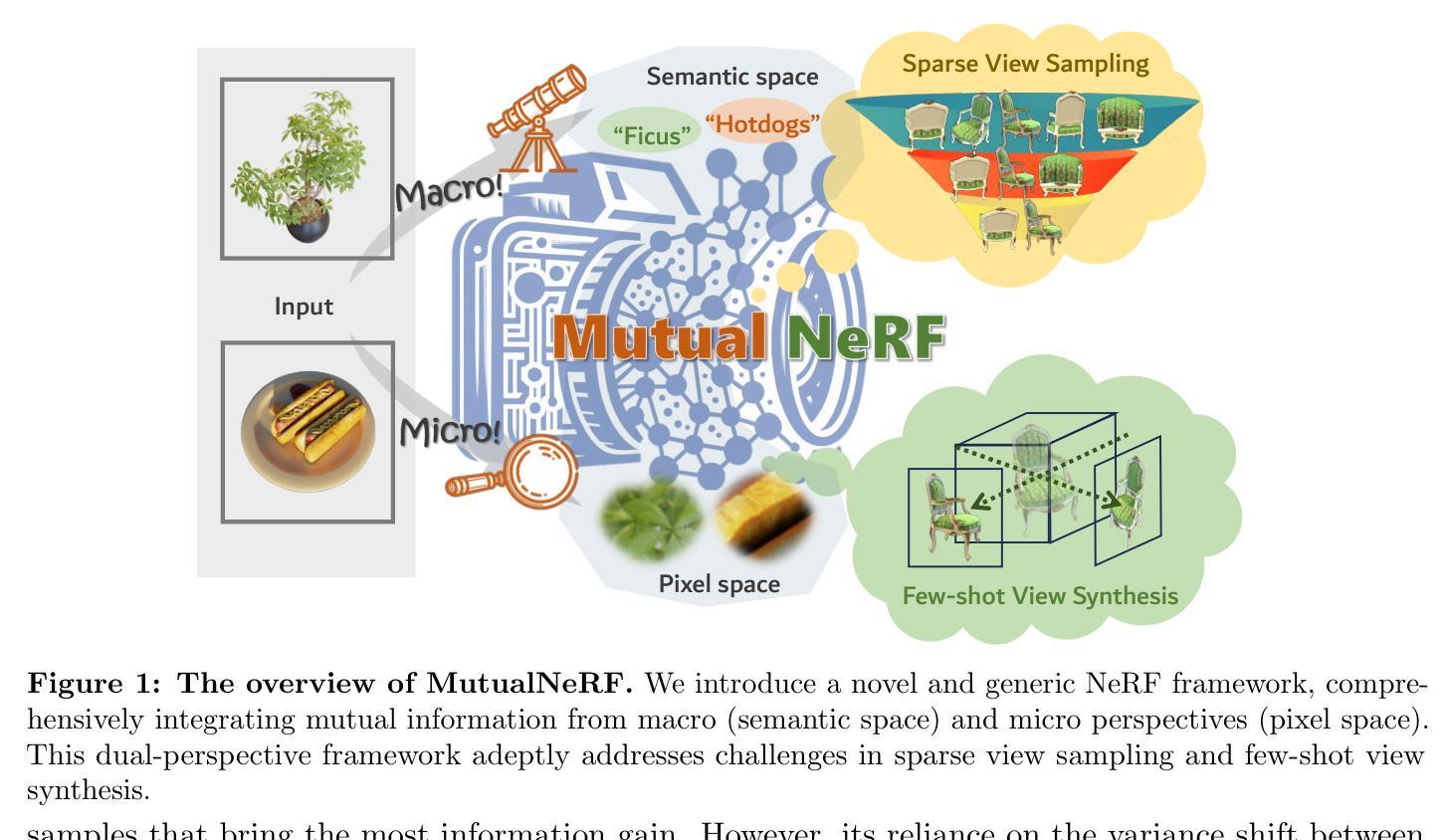

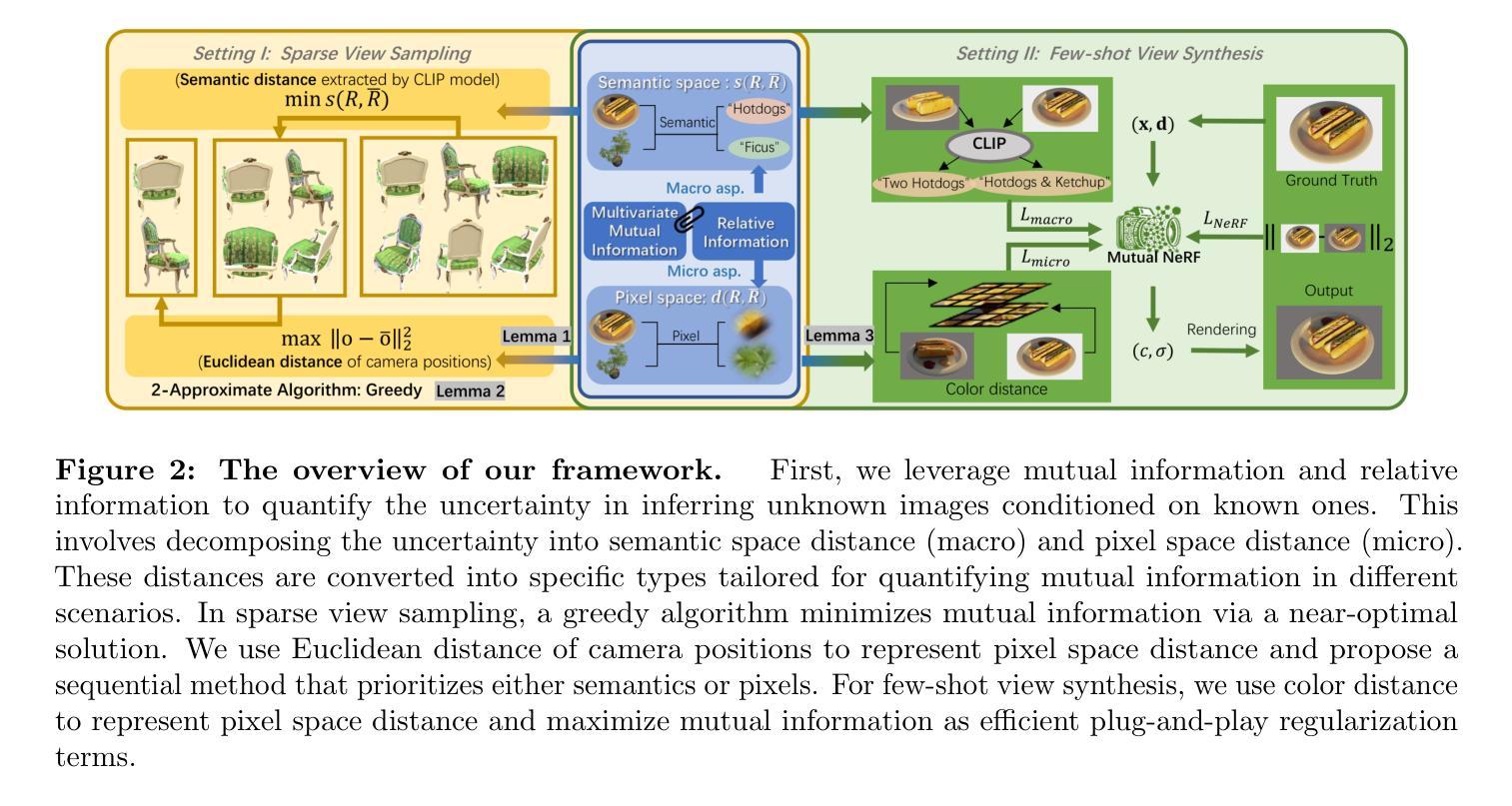

This paper introduces MutualNeRF, a framework enhancing Neural Radiance Field (NeRF) performance under limited samples using Mutual Information Theory. While NeRF excels in 3D scene synthesis, challenges arise with limited data and existing methods that aim to introduce prior knowledge lack theoretical support in a unified framework. We introduce a simple but theoretically robust concept, Mutual Information, as a metric to uniformly measure the correlation between images, considering both macro (semantic) and micro (pixel) levels. For sparse view sampling, we strategically select additional viewpoints containing more non-overlapping scene information by minimizing mutual information without knowing ground truth images beforehand. Our framework employs a greedy algorithm, offering a near-optimal solution. For few-shot view synthesis, we maximize the mutual information between inferred images and ground truth, expecting inferred images to gain more relevant information from known images. This is achieved by incorporating efficient, plug-and-play regularization terms. Experiments under limited samples show consistent improvement over state-of-the-art baselines in different settings, affirming the efficacy of our framework.



Summary

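This paper proposes MutualNeRF, a framework that uses mutual information from information theory to improve Neural Radiance Field (NeRF) performance when only limited samples are available. It addresses the limited-data challenge NeRF faces in 3D scene synthesis by introducing mutual information as a metric for the correlation between images at both the macro (semantic) and micro (pixel) levels. For sparse view sampling, it strategically selects additional viewpoints that contain more non-overlapping scene information by minimizing mutual information, using a greedy algorithm that yields a near-optimal solution. For few-shot view synthesis, it maximizes the mutual information between inferred images and ground truth so that inferred images gain more relevant information from known images; this is implemented with efficient, plug-and-play regularization terms. Experiments under limited samples show consistent improvement over state-of-the-art baselines across different settings, supporting the framework's effectiveness.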
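For reference, the textbook definition of mutual information between two discrete random variables $X$ and $Y$ is given below. How the paper instantiates $X$ and $Y$ for images at the semantic and pixel levels is not stated in this summary, so this is only the standard quantity, not the paper's estimator.

$$
I(X;Y) \;=\; \sum_{x,y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)} \;=\; H(X) + H(Y) - H(X,Y)
$$

$I(X;Y) \ge 0$, with equality exactly when $X$ and $Y$ are independent. Minimizing it between views therefore favors views that share little information, while maximizing it between inferred and ground-truth images favors predictions that are informative about the truth.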

Key Takeaways

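- MutualNeRF builds on mutual information theory to improve NeRF performance.

- Mutual information measures the correlation between images at both the macro (semantic) and micro (pixel) levels.

- For sparse view sampling, viewpoints containing more non-overlapping scene information are selected by minimizing mutual information.

- A greedy algorithm provides a near-optimal solution.

- For few-shot view synthesis, the mutual information between inferred images and ground truth is maximized.

- This is implemented with efficient, plug-and-play regularization terms.

- Experiments show that the framework outperforms existing methods under limited samples.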
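The greedy selection step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `greedy_select_views`, the pairwise matrix `mi`, and the rule of summing pairwise scores are all assumptions. In particular, `mi[i, j]` stands in for whatever mutual-information estimate between views `i` and `j` the paper actually uses, which this summary does not specify.

```python
import numpy as np

def greedy_select_views(mi, initial, k):
    """Greedily pick k extra views that share the least information with the chosen set.

    mi:      (n, n) symmetric matrix; mi[i, j] is an ASSUMED pairwise
             mutual-information proxy between candidate views i and j.
    initial: indices of views that are already available.
    k:       number of additional views to pick.
    """
    mi = np.asarray(mi, dtype=float)
    selected = list(initial)
    remaining = [i for i in range(mi.shape[0]) if i not in selected]
    picked = []
    for _ in range(min(k, len(remaining))):
        # Score each candidate by its total proxy MI with the views chosen so far.
        scores = [mi[c, selected].sum() for c in remaining]
        best = remaining[int(np.argmin(scores))]
        selected.append(best)
        picked.append(best)
        remaining.remove(best)
    return picked

# Toy example: view 1 overlaps heavily with view 0, view 3 barely at all.
toy_mi = np.array([
    [1.0, 0.9, 0.5, 0.1],
    [0.9, 1.0, 0.4, 0.2],
    [0.5, 0.4, 1.0, 0.3],
    [0.1, 0.2, 0.3, 1.0],
])
extra_views = greedy_select_views(toy_mi, initial=[0], k=2)
```

Each round commits to the single candidate with the lowest score, so the cost is one pass over the remaining candidates per selected view rather than a search over all subsets.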


EA-3DGS: Efficient and Adaptive 3D Gaussians with Highly Enhanced Quality for outdoor scenes

Authors: Jianlin Guo, Haihong Xiao, Wenxiong Kang

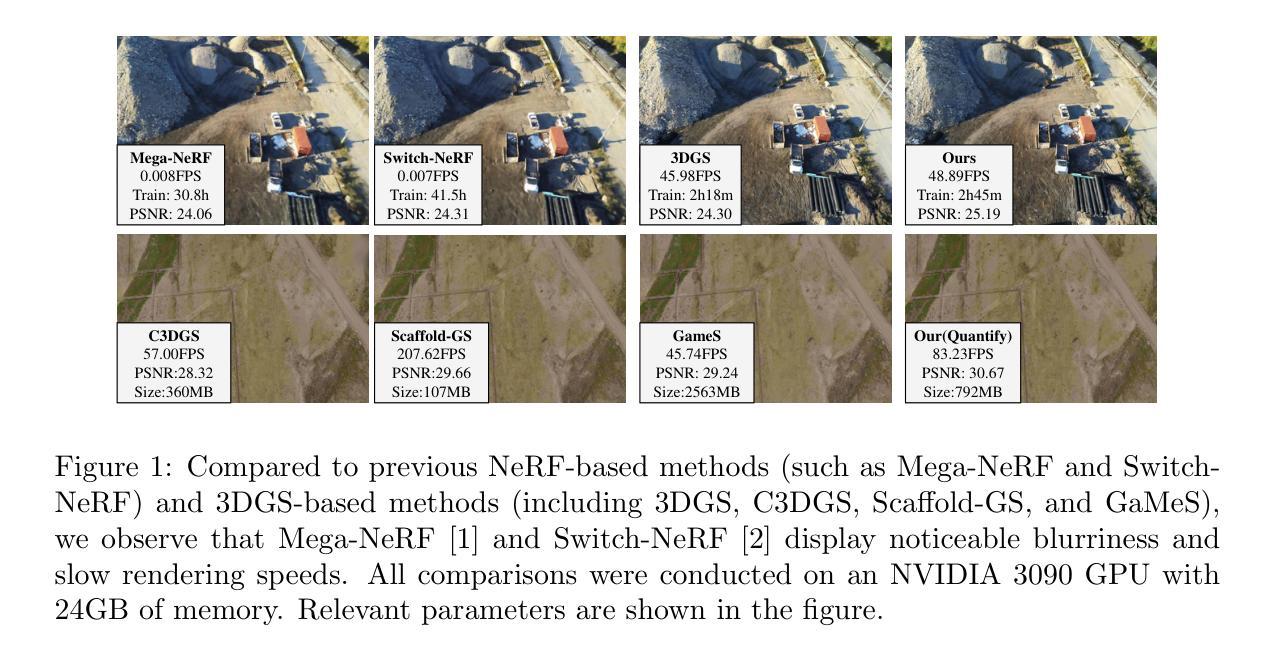

Efficient scene representations are essential for many real-world applications, especially those involving spatial measurement. Although current NeRF-based methods have achieved impressive results in reconstructing building-scale scenes, they still suffer from slow training and inference speeds due to time-consuming stochastic sampling. Recently, 3D Gaussian Splatting (3DGS) has demonstrated excellent performance with its high-quality rendering and real-time speed, especially for objects and small-scale scenes. However, in outdoor scenes, its point-based explicit representation lacks an effective adjustment mechanism, and the millions of Gaussian points required often lead to memory constraints during training. To address these challenges, we propose EA-3DGS, a high-quality real-time rendering method designed for outdoor scenes. First, we introduce a mesh structure to regulate the initialization of Gaussian components by leveraging an adaptive tetrahedral mesh that partitions the grid and initializes Gaussian components on each face, effectively capturing geometric structures in low-texture regions. Second, we propose an efficient Gaussian pruning strategy that evaluates each 3D Gaussian’s contribution to the view and prunes accordingly. To retain geometry-critical Gaussian points, we also present a structure-aware densification strategy that densifies Gaussian points in low-curvature regions. Additionally, we employ vector quantization for parameter quantization of Gaussian components, significantly reducing disk space requirements with only a minimal impact on rendering quality. Extensive experiments on 13 scenes, including eight from four public datasets (MatrixCity-Aerial, Mill-19, Tanks & Temples, WHU) and five self-collected scenes acquired through UAV photogrammetry measurement from SCUT-CA and plateau regions, further demonstrate the superiority of our method.



Summary

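This paper presents EA-3DGS, a high-quality real-time rendering method designed for outdoor scenes. To address the shortcomings of existing methods in outdoor scenes, EA-3DGS uses an adaptive tetrahedral mesh to regulate the initialization of Gaussian components. It also proposes an efficient Gaussian pruning strategy and a structure-aware densification strategy, and applies vector quantization to the Gaussian parameters, which reduces disk space requirements with only a minimal impact on rendering quality. Experiments on 13 outdoor scenes show that EA-3DGS outperforms the compared methods.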
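As a rough illustration of contribution-based pruning, the sketch below keeps the Gaussians with the highest aggregate score across views. It is not the paper's criterion: the `contrib` matrix (for example, accumulated blending weights per view), the summation over views, and the `keep_ratio` hyperparameter are all assumptions made for this example.

```python
import numpy as np

def prune_by_contribution(contrib, keep_ratio=0.5):
    """Return a boolean mask marking which Gaussians to keep.

    contrib:    (n_views, n_gaussians) array of non-negative, ASSUMED
                per-view contribution scores for each Gaussian.
    keep_ratio: fraction of Gaussians to retain (hypothetical hyperparameter).
    """
    contrib = np.asarray(contrib, dtype=float)
    total = contrib.sum(axis=0)                      # aggregate score per Gaussian
    n_keep = max(1, int(round(keep_ratio * total.size)))
    keep_idx = np.argsort(total)[::-1][:n_keep]      # highest scores first
    mask = np.zeros(total.size, dtype=bool)
    mask[keep_idx] = True
    return mask

# Two views, four Gaussians; Gaussians 1 and 3 contribute almost nothing.
scores = np.array([
    [0.90, 0.00, 0.30, 0.05],
    [0.80, 0.01, 0.40, 0.00],
])
keep_mask = prune_by_contribution(scores, keep_ratio=0.5)
```

The resulting mask can be applied to every per-Gaussian parameter array so that positions, scales, and colors stay aligned after pruning.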

Key Takeaways

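- EA-3DGS is a real-time rendering method designed for outdoor scenes, aimed at the shortcomings of existing methods in such scenes.

- An adaptive tetrahedral mesh regulates the initialization of Gaussian components, effectively capturing geometric structures in low-texture regions.

- An efficient Gaussian pruning strategy evaluates each 3D Gaussian's contribution to the view and prunes accordingly.

- To retain geometry-critical Gaussian points, a structure-aware densification strategy densifies Gaussian points in low-curvature regions.

- Vector quantization compresses the Gaussian parameters, significantly reducing disk space requirements with only a minimal impact on rendering quality.

- Experiments on multiple outdoor scenes demonstrate the superiority of EA-3DGS.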
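The vector quantization step can be illustrated with a plain k-means codebook: each parameter vector is replaced by the index of its nearest code, so only the small codebook and one integer per Gaussian need to be stored. This is a generic sketch under assumptions; the summary does not say how EA-3DGS learns its codebook, and the names `build_codebook` and `dequantize` are hypothetical.

```python
import numpy as np

def _nearest_code(params, codebook):
    """Index of the closest code (squared Euclidean distance) for every vector."""
    dists = ((params[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def build_codebook(params, n_codes=4, n_iters=20, seed=0):
    """Fit a k-means codebook; requires n_codes <= len(params) and n_codes <= 65536."""
    params = np.asarray(params, dtype=np.float32)
    rng = np.random.default_rng(seed)
    # Initialise codes from randomly chosen parameter vectors.
    codebook = params[rng.choice(len(params), size=n_codes, replace=False)].copy()
    for _ in range(n_iters):
        indices = _nearest_code(params, codebook)
        # Move each code to the mean of the vectors assigned to it.
        for c in range(n_codes):
            members = params[indices == c]
            if len(members) > 0:
                codebook[c] = members.mean(axis=0)
    indices = _nearest_code(params, codebook)
    return codebook, indices.astype(np.uint16)

def dequantize(codebook, indices):
    """Reconstruct approximate parameter vectors from codes and indices."""
    return codebook[indices]

# Example: 200 three-dimensional parameter vectors compressed to 8 codes.
vectors = np.random.default_rng(1).normal(size=(200, 3)).astype(np.float32)
codes, idx = build_codebook(vectors, n_codes=8)
approx = dequantize(codes, idx)
```

In this example the stored payload is 8 codes of 3 floats plus 200 two-byte indices, instead of 200 full float vectors; the saving grows with the number of Gaussians.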


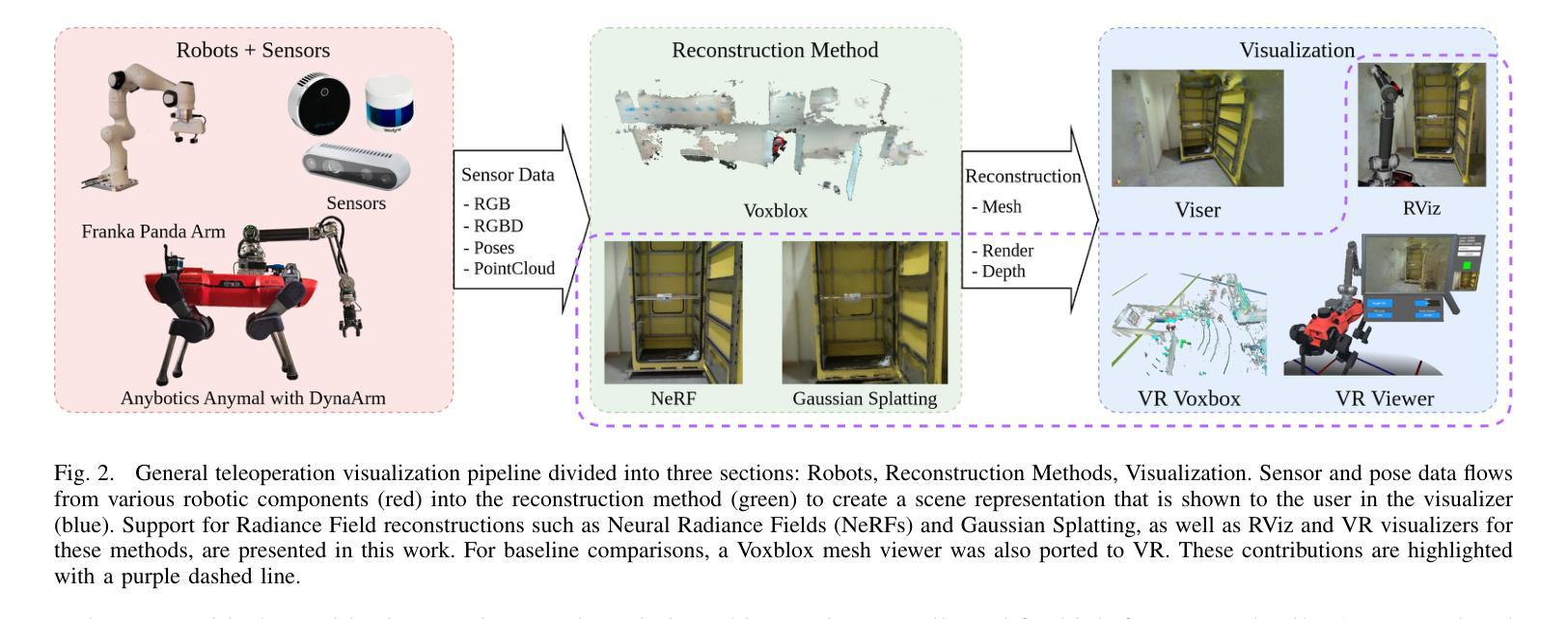

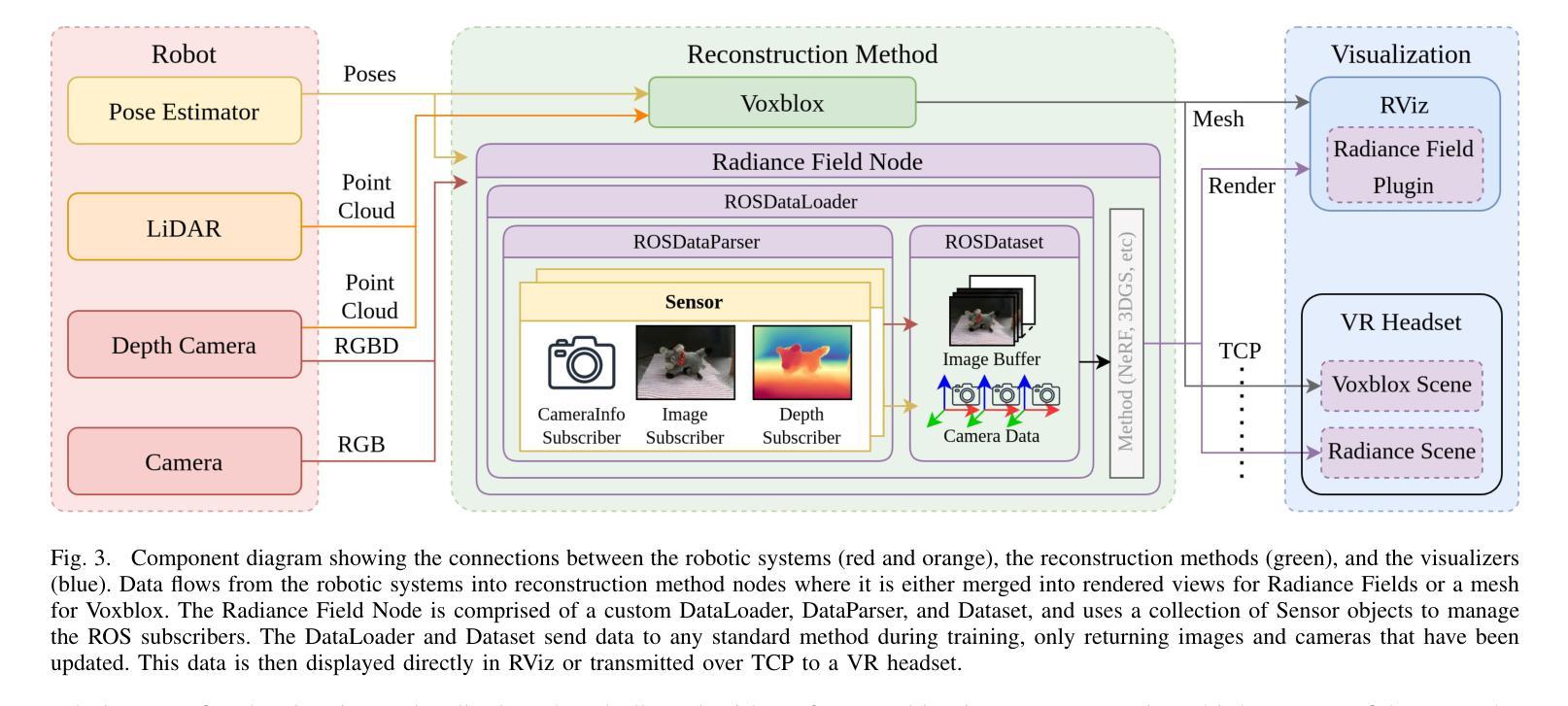

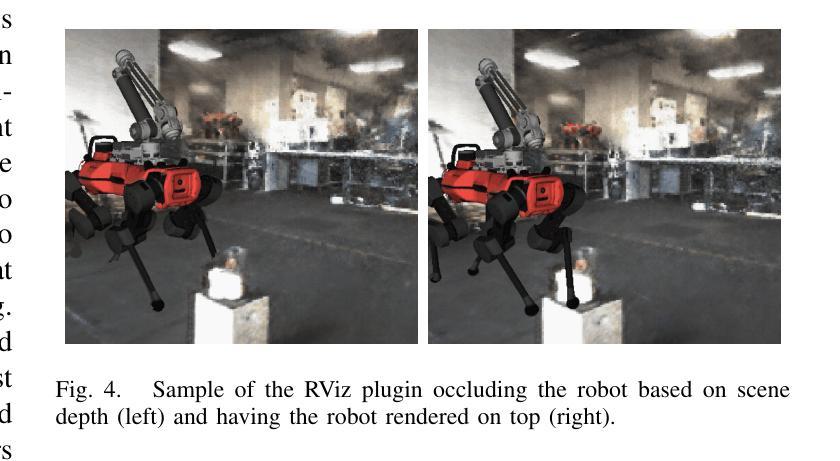

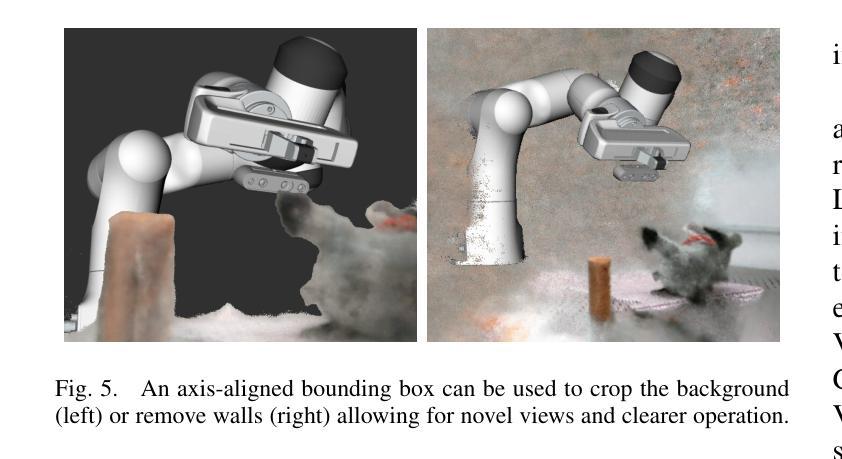

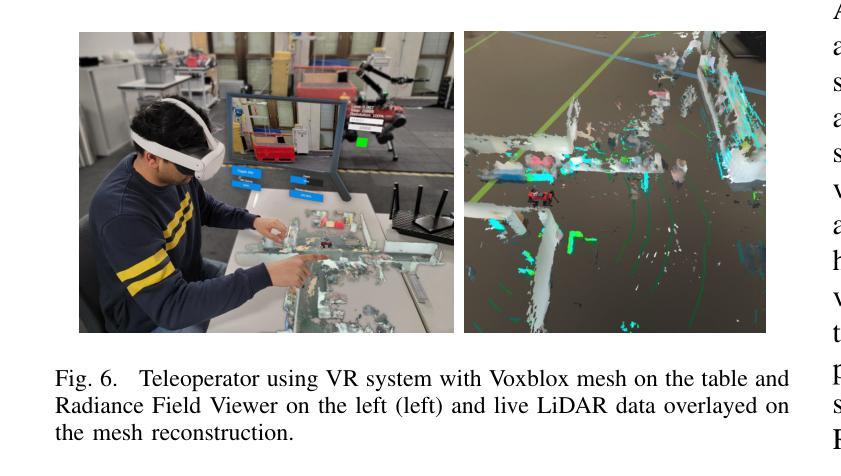

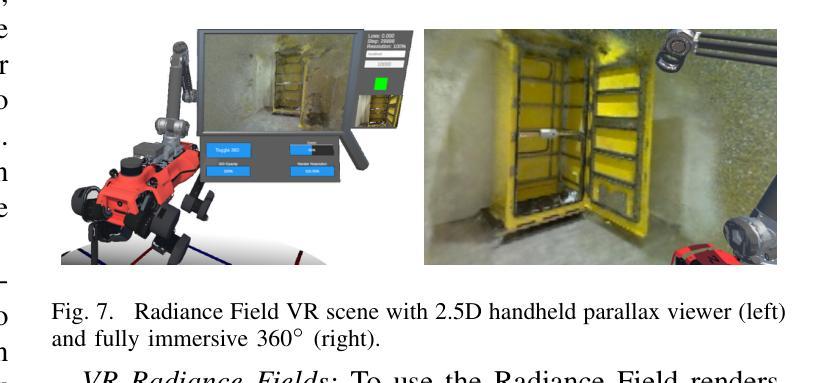

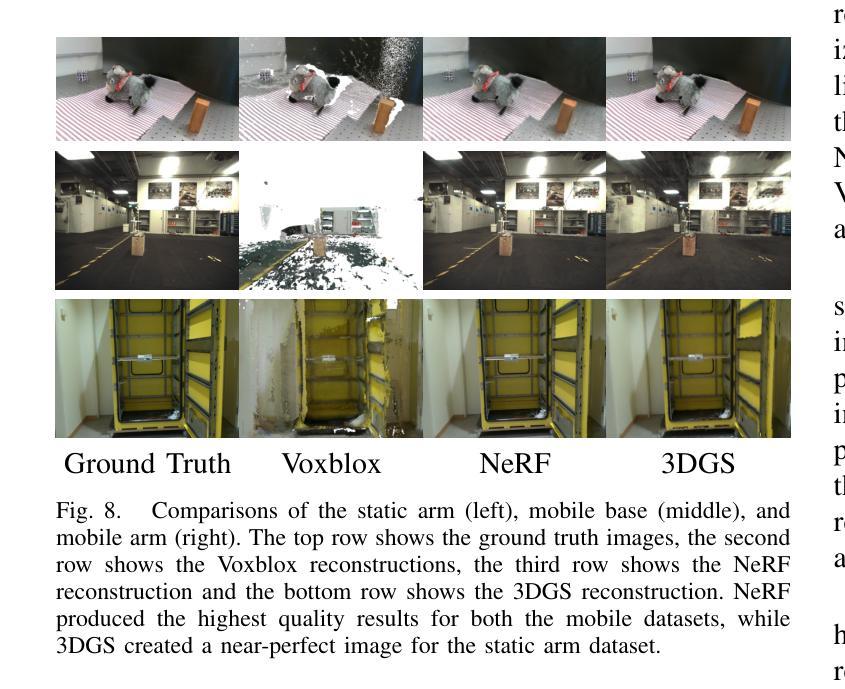

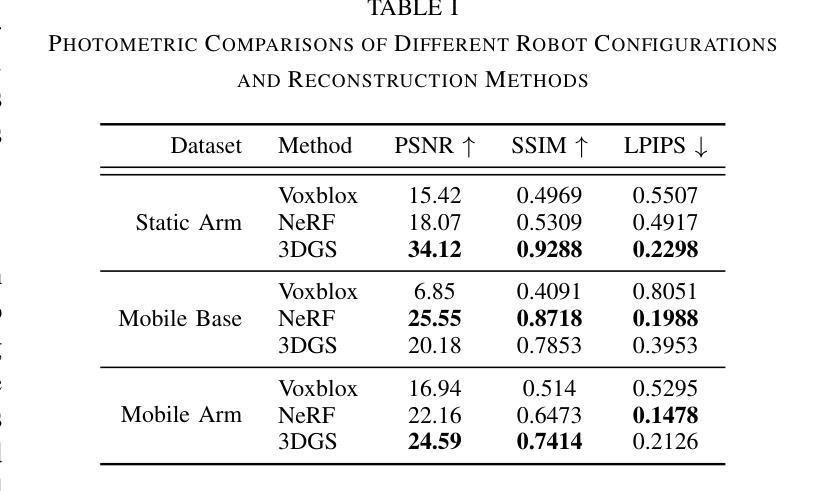

Radiance Fields for Robotic Teleoperation

Authors: Maximum Wilder-Smith, Vaishakh Patil, Marco Hutter

Radiance field methods such as Neural Radiance Fields (NeRFs) or 3D Gaussian Splatting (3DGS), have revolutionized graphics and novel view synthesis. Their ability to synthesize new viewpoints with photo-realistic quality, as well as capture complex volumetric and specular scenes, makes them an ideal visualization for robotic teleoperation setups. Direct camera teleoperation provides high-fidelity operation at the cost of maneuverability, while reconstruction-based approaches offer controllable scenes with lower fidelity. With this in mind, we propose replacing the traditional reconstruction-visualization components of the robotic teleoperation pipeline with online Radiance Fields, offering highly maneuverable scenes with photorealistic quality. As such, there are three main contributions to state of the art: (1) online training of Radiance Fields using live data from multiple cameras, (2) support for a variety of radiance methods including NeRF and 3DGS, (3) visualization suite for these methods including a virtual reality scene. To enable seamless integration with existing setups, these components were tested with multiple robots in multiple configurations and were displayed using traditional tools as well as the VR headset. The results across methods and robots were compared quantitatively to a baseline of mesh reconstruction, and a user study was conducted to compare the different visualization methods. For videos and code, check out https://rffr.leggedrobotics.com/works/teleoperation/.


Paper and project links

PDF 8 pages, 10 figures, Accepted to IROS 2024

Summary

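Radiance field methods such as Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting (3DGS) have revolutionized graphics and novel view synthesis. Their ability to synthesize new viewpoints with photorealistic quality and to capture complex volumetric and specular scenes makes them an ideal visualization for robotic teleoperation setups. This work proposes replacing the traditional reconstruction-visualization components of the robotic teleoperation pipeline with online Radiance Fields, offering highly maneuverable scenes with photorealistic quality. The main contributions are online training of Radiance Fields using live data from multiple cameras, support for a variety of radiance methods including NeRF and 3DGS, and a visualization suite that includes a virtual reality scene. To enable seamless integration with existing setups, the components were tested with multiple robots in multiple configurations and displayed using traditional tools as well as a VR headset. Results across methods and robots were compared quantitatively against a mesh reconstruction baseline, and a user study compared the different visualization methods.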

Key Takeaways

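- Radiance field methods such as NeRF and 3DGS have revolutionized graphics and novel view synthesis.

- They can synthesize new viewpoints and capture complex scenes, which makes them well suited to robotic teleoperation.

- The paper proposes replacing the traditional reconstruction-visualization components with online Radiance Fields, offering highly maneuverable scenes with photorealistic quality.

- The main contributions are online training of Radiance Fields, support for multiple radiance methods, and a visualization suite that includes a VR scene.

- The components were tested with multiple robots in multiple configurations to enable seamless integration with existing setups.

- Results were compared quantitatively against a mesh reconstruction baseline, and a user study compared the different visualization methods.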
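The idea of training online from live multi-camera data can be sketched as a buffer that keeps growing while optimization steps run. Everything here is hypothetical scaffolding for illustration: `OnlineFrameBuffer`, `train_online`, and the `train_step` callback are invented names, and the paper's actual pipeline (available via the project link in the abstract) may be organized quite differently.

```python
import queue
import random

class OnlineFrameBuffer:
    """Collects frames as they arrive so training can start before capture ends."""

    def __init__(self, seed=0):
        self.incoming = queue.Queue()   # thread-safe; filled by camera callbacks
        self.frames = []                # frames currently available for training
        self.rng = random.Random(seed)

    def push(self, camera_id, image, pose):
        """Called by a (hypothetical) camera callback for every new frame."""
        self.incoming.put((camera_id, image, pose))

    def drain(self):
        """Move all pending frames into the training set; return how many were added."""
        added = 0
        while True:
            try:
                self.frames.append(self.incoming.get_nowait())
            except queue.Empty:
                break
            added += 1
        return added

    def sample(self, batch_size):
        """Draw a random batch from the frames seen so far."""
        return self.rng.sample(self.frames, min(batch_size, len(self.frames)))

def train_online(buffer, train_step, n_steps, batch_size=4):
    """Interleave data ingestion and optimisation; train_step is a placeholder."""
    steps_run = 0
    for _ in range(n_steps):
        buffer.drain()
        if not buffer.frames:
            continue  # nothing captured yet
        train_step(buffer.sample(batch_size))
        steps_run += 1
    return steps_run
```

A caller would register `push` as the callback for each camera and pass the radiance field's own update function as `train_step`; the loop itself is agnostic to whether that function trains a NeRF or a 3DGS model.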
