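⚠️ All summaries below are generated by a large language model. They may contain errors; treat them as reference only and use them with caution.

🔴 Note: never use this content in serious academic settings. It is intended only for screening papers before you read them!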


Updated 2025-05-24

EVA: Expressive Virtual Avatars from Multi-view Videos

Authors: Hendrik Junkawitsch, Guoxing Sun, Heming Zhu, Christian Theobalt, Marc Habermann

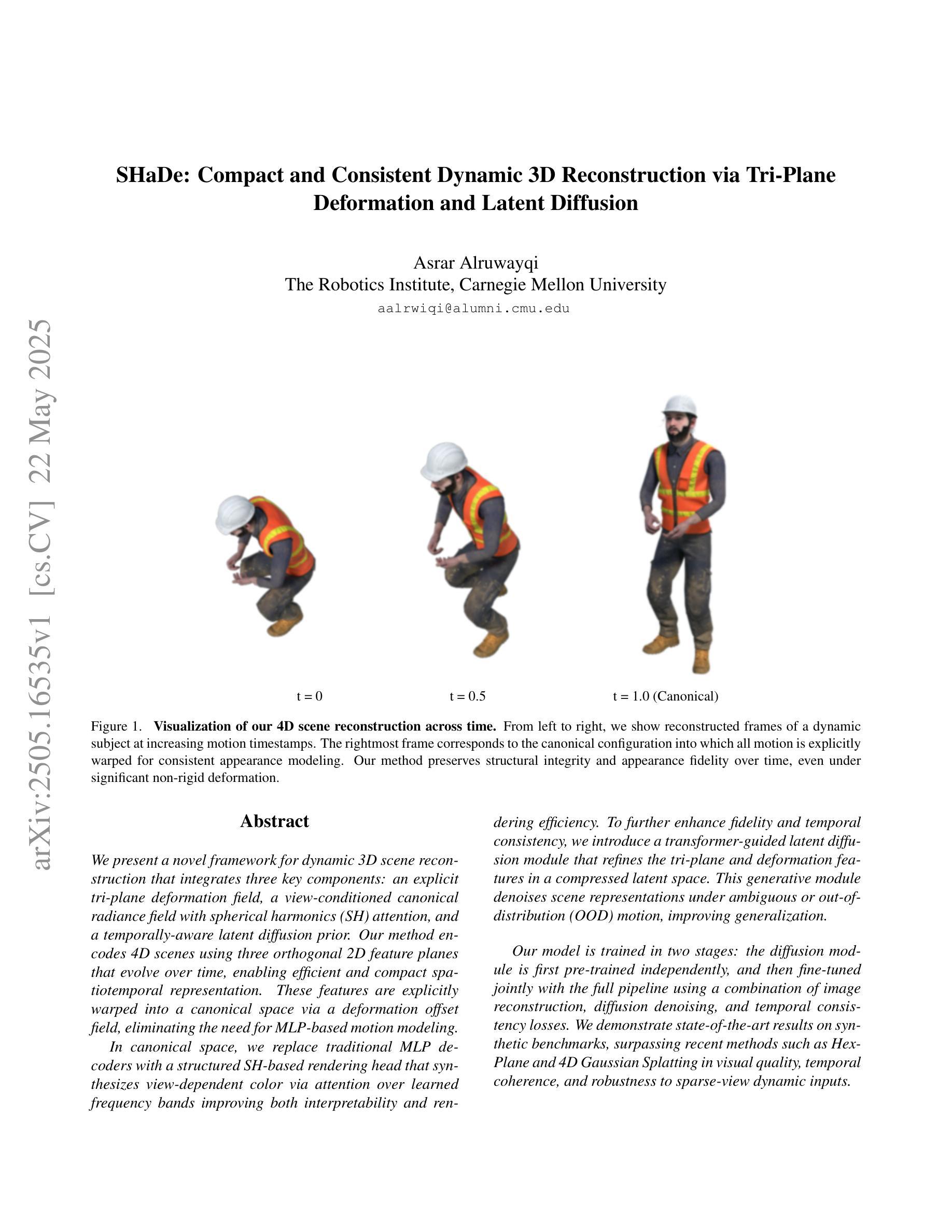

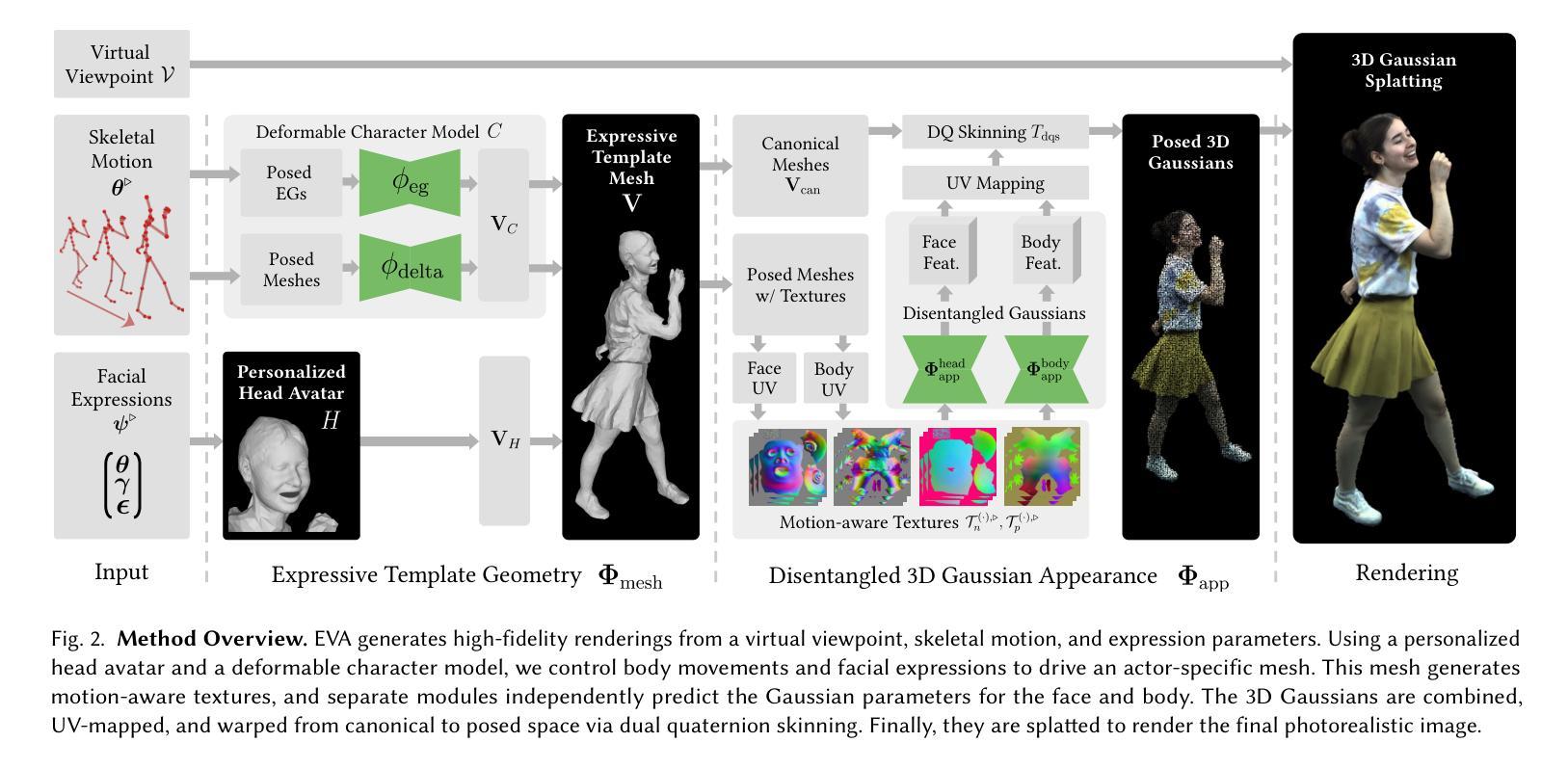

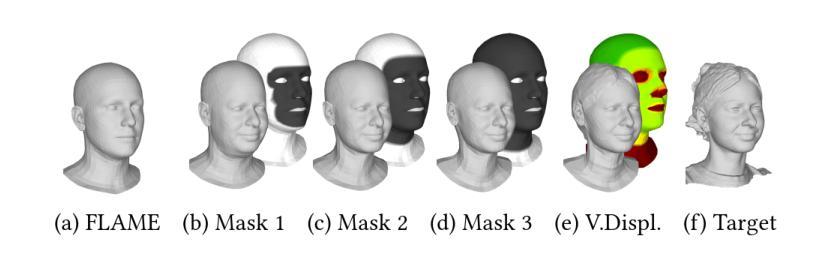

With recent advancements in neural rendering and motion capture algorithms, remarkable progress has been made in photorealistic human avatar modeling, unlocking immense potential for applications in virtual reality, augmented reality, remote communication, and industries such as gaming, film, and medicine. However, existing methods fail to provide complete, faithful, and expressive control over human avatars due to their entangled representation of facial expressions and body movements. In this work, we introduce Expressive Virtual Avatars (EVA), an actor-specific, fully controllable, and expressive human avatar framework that achieves high-fidelity, lifelike renderings in real time while enabling independent control of facial expressions, body movements, and hand gestures. Specifically, our approach designs the human avatar as a two-layer model: an expressive template geometry layer and a 3D Gaussian appearance layer. First, we present an expressive template tracking algorithm that leverages coarse-to-fine optimization to accurately recover body motions, facial expressions, and non-rigid deformation parameters from multi-view videos. Next, we propose a novel decoupled 3D Gaussian appearance model designed to effectively disentangle body and facial appearance. Unlike unified Gaussian estimation approaches, our method employs two specialized and independent modules to model the body and face separately. Experimental results demonstrate that EVA surpasses state-of-the-art methods in terms of rendering quality and expressiveness, validating its effectiveness in creating full-body avatars. This work represents a significant advancement towards fully drivable digital human models, enabling the creation of lifelike digital avatars that faithfully replicate human geometry and appearance.
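The coarse-to-fine idea behind the tracking stage can be illustrated with a toy 2D example. The following is a minimal sketch under our own assumptions and not EVA's actual tracker: a coarse stage removes the large global motion with a single least-squares translation, and a fine stage then explains the small remaining residual with regularized per-point offsets, standing in for non-rigid deformation. The function names and the regularization weight `weight` are hypothetical.

```python
# Toy coarse-to-fine fit of a 2D point "template" to target points.
# Hypothetical illustration only; this is NOT EVA's tracking algorithm.

Point = tuple[float, float]


def fit_translation(template: list[Point], target: list[Point]) -> Point:
    """Coarse stage: least-squares global translation (mean displacement)."""
    n = len(template)
    tx = sum(q[0] - p[0] for p, q in zip(template, target)) / n
    ty = sum(q[1] - p[1] for p, q in zip(template, target)) / n
    return (tx, ty)


def fit_offsets(posed: list[Point], target: list[Point], weight: float) -> list[Point]:
    """Fine stage: per-point offsets d minimizing ||p + d - q||^2 + weight * ||d||^2.

    Setting the gradient 2(p + d - q) + 2 * weight * d to zero gives
    the closed form d = (q - p) / (1 + weight).
    """
    return [((q[0] - p[0]) / (1.0 + weight), (q[1] - p[1]) / (1.0 + weight))
            for p, q in zip(posed, target)]


def coarse_to_fine(template: list[Point], target: list[Point], weight: float = 0.1):
    """Run the coarse stage, apply it, then refine the residual with the fine stage."""
    tx, ty = fit_translation(template, target)
    posed = [(x + tx, y + ty) for x, y in template]
    offsets = fit_offsets(posed, target, weight)
    fitted = [(p[0] + d[0], p[1] + d[1]) for p, d in zip(posed, offsets)]
    return (tx, ty), offsets, fitted


if __name__ == "__main__":
    template = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    # Target: the template shifted by (2, 3), plus a small local bump on the last point.
    target = [(2.0, 3.0), (3.0, 3.0), (2.0, 4.3)]
    translation, offsets, fitted = coarse_to_fine(template, target)
    print(translation)  # approximately (2.0, 3.1)
    print(fitted)
```

Solving the global motion first keeps the fine stage's job small: the regularizer only has to tolerate local residuals instead of competing with a large rigid offset.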


Paper and project links

PDF: Accepted at SIGGRAPH 2025 Conference Track. Project page: https://vcai.mpi-inf.mpg.de/projects/EVA/

Summary

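Recent advances in neural rendering and motion capture algorithms have driven remarkable progress in photorealistic human avatar modeling, opening up great potential for virtual reality, augmented reality, remote communication, and industries such as gaming, film, and medicine. However, because existing methods represent facial expressions and body movements in an entangled way, they cannot offer complete, faithful, and expressive control. This work introduces Expressive Virtual Avatars (EVA), an actor-specific, fully controllable, and expressive human avatar framework that renders high-fidelity avatars in real time while allowing independent control of facial expressions, body movements, and hand gestures.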

Key Takeaways

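- Recent advances in neural rendering and motion capture algorithms have brought significant progress in photorealistic human avatar modeling.

- Existing methods entangle the representations of facial expressions and body movements, so they cannot provide complete, faithful, and expressive control.

- The EVA framework renders high-fidelity human avatars in real time and allows independent control of facial expressions, body movements, and hand gestures.

- EVA uses a two-layer design: an expressive template geometry layer and a 3D Gaussian appearance layer.

- EVA introduces an expressive template tracking algorithm that uses coarse-to-fine optimization to accurately recover body motions, facial expressions, and non-rigid deformation parameters from multi-view videos.

- EVA uses a decoupled 3D Gaussian appearance model that disentangles body and facial appearance by modeling each with its own independent module.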
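The body/face decoupling can be pictured as routing. In this hypothetical sketch (not EVA's architecture), every Gaussian carries a fixed `part` label; body Gaussians are handled by a module that sees only body pose, and face Gaussians by a module that sees only expression parameters. The toy linear modules, the `Gaussian` class, and the scalar appearance value are assumptions made for illustration.

```python
# Hypothetical sketch of a decoupled appearance model: Gaussians are split by body
# region, and each region is handled by its own module with its own driving signal.
# Not EVA's architecture; the modules are toy linear functions.
from dataclasses import dataclass


@dataclass
class Gaussian:
    part: str          # "face" or "body" (assumed fixed labeling from the template)
    base_color: float  # toy scalar standing in for per-Gaussian appearance


def body_module(g: Gaussian, body_pose: list[float]) -> float:
    """Toy body appearance: depends only on body pose parameters."""
    return g.base_color + 0.1 * sum(body_pose)


def face_module(g: Gaussian, expression: list[float]) -> float:
    """Toy face appearance: depends only on expression parameters."""
    return g.base_color + 0.5 * sum(expression)


def decoupled_appearance(gaussians: list[Gaussian],
                         body_pose: list[float],
                         expression: list[float]) -> list[float]:
    """Route each Gaussian to exactly one module, so body and face never share inputs."""
    return [face_module(g, expression) if g.part == "face" else body_module(g, body_pose)
            for g in gaussians]


if __name__ == "__main__":
    avatar = [Gaussian("body", 0.2), Gaussian("face", 0.4)]
    neutral = decoupled_appearance(avatar, body_pose=[0.0, 0.0], expression=[0.0])
    smiling = decoupled_appearance(avatar, body_pose=[0.0, 0.0], expression=[1.0])
    # Only the face Gaussian changes between the two calls.
    print(neutral, smiling)
```

Because no module sees both signals, changing the expression cannot alter body appearance and vice versa; a unified estimator that takes both signals as input offers no such guarantee by construction.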


AsynFusion: Towards Asynchronous Latent Consistency Models for Decoupled Whole-Body Audio-Driven Avatars

Authors: Tianbao Zhang, Jian Zhao, Yuer Li, Zheng Zhu, Ping Hu, Zhaoxin Fan, Wenjun Wu, Xuelong Li

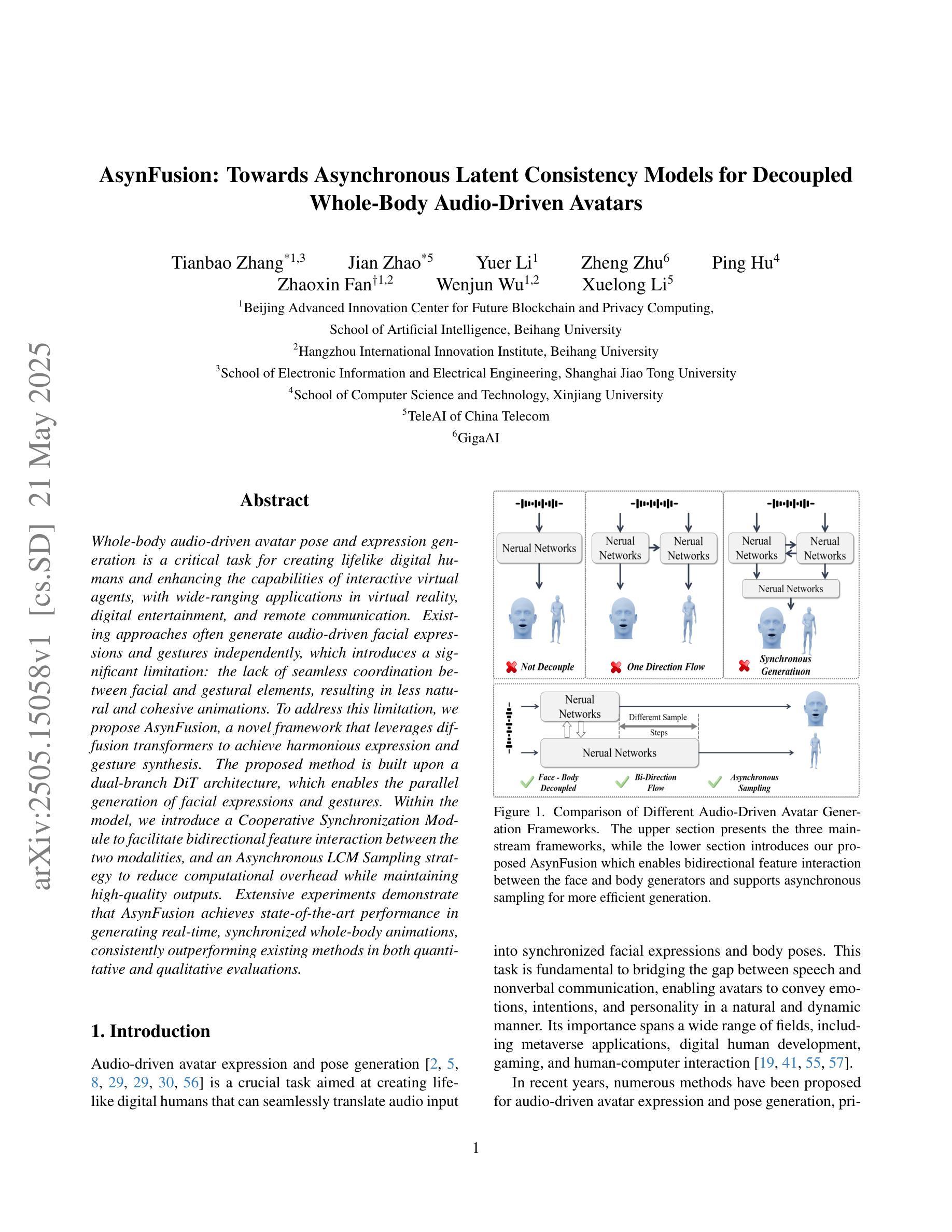

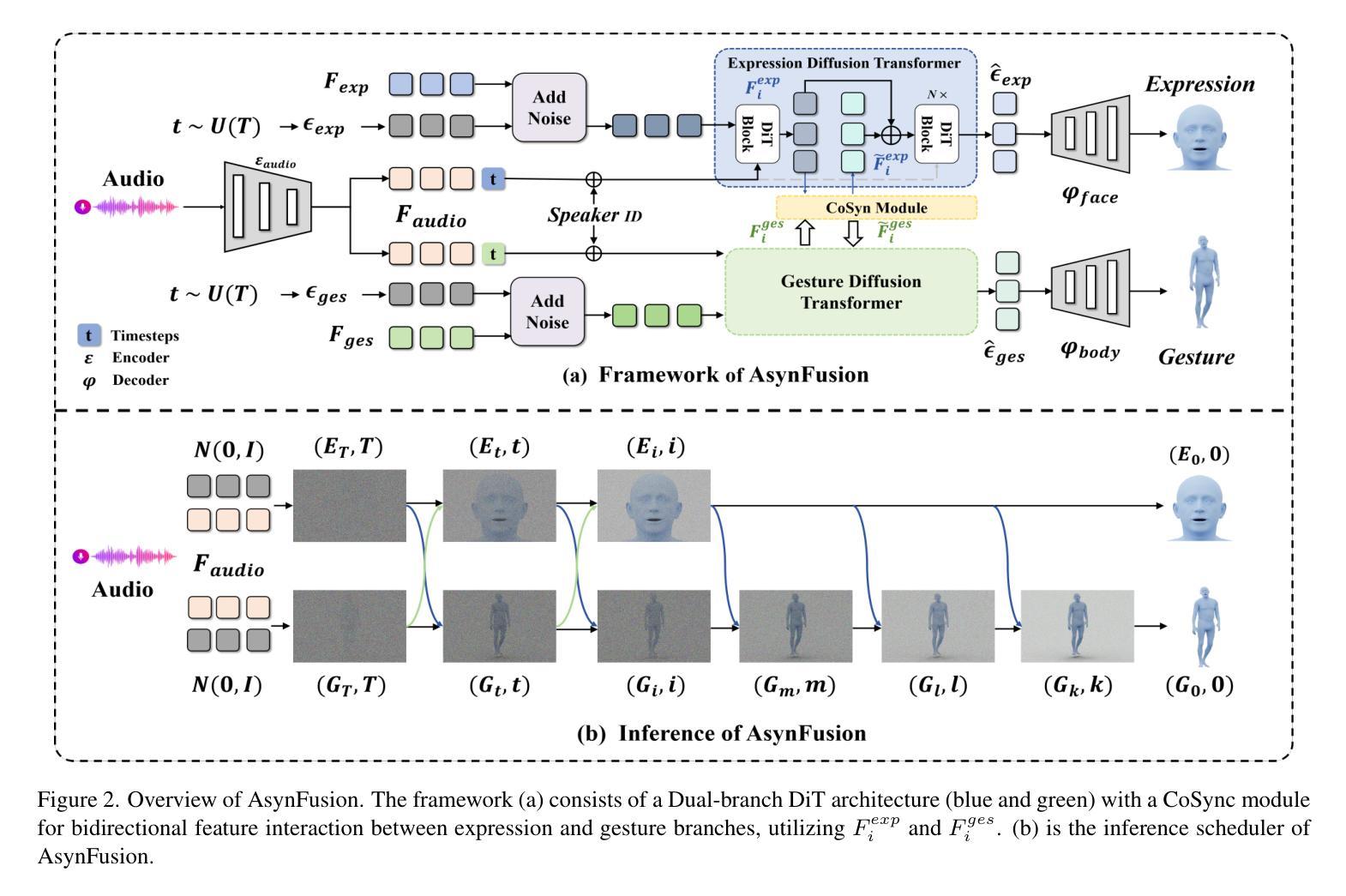

Whole-body audio-driven avatar pose and expression generation is a critical task for creating lifelike digital humans and enhancing the capabilities of interactive virtual agents, with wide-ranging applications in virtual reality, digital entertainment, and remote communication. Existing approaches often generate audio-driven facial expressions and gestures independently, which introduces a significant limitation: the lack of seamless coordination between facial and gestural elements, resulting in less natural and cohesive animations. To address this limitation, we propose AsynFusion, a novel framework that leverages diffusion transformers to achieve harmonious expression and gesture synthesis. The proposed method is built upon a dual-branch DiT architecture, which enables the parallel generation of facial expressions and gestures. Within the model, we introduce a Cooperative Synchronization Module to facilitate bidirectional feature interaction between the two modalities, and an Asynchronous LCM Sampling strategy to reduce computational overhead while maintaining high-quality outputs. Extensive experiments demonstrate that AsynFusion achieves state-of-the-art performance in generating real-time, synchronized whole-body animations, consistently outperforming existing methods in both quantitative and qualitative evaluations.
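To make "bidirectional feature interaction" concrete, here is a minimal sketch that assumes the exchange is implemented as cross-attention in both directions with residual connections. The abstract does not specify the Cooperative Synchronization Module's internals, so this illustrates the general idea rather than AsynFusion's design; learned query/key/value projections are omitted and all names are hypothetical.

```python
# Hypothetical sketch of bidirectional feature exchange between a face branch and a
# gesture branch. We ASSUME plain cross-attention with residual connections; the
# abstract does not specify the operator, and learned Q/K/V projections are omitted.
import math

Tokens = list[list[float]]  # a sequence of feature vectors (one per frame)


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: Tokens, context: Tokens) -> Tokens:
    """Scaled dot-product attention: each query is a weighted mix of the context vectors."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, context)) for j in range(d)])
    return out


def bidirectional_sync(face: Tokens, gesture: Tokens) -> tuple[Tokens, Tokens]:
    """Face attends to gesture and gesture attends to face; results are added residually."""
    face_ctx = cross_attend(face, gesture)
    gesture_ctx = cross_attend(gesture, face)
    new_face = [[a + b for a, b in zip(f, c)] for f, c in zip(face, face_ctx)]
    new_gesture = [[a + b for a, b in zip(g, c)] for g, c in zip(gesture, gesture_ctx)]
    return new_face, new_gesture
```

Both directions read the features from before the update, so neither branch is privileged; each frame of one modality is refined with context from the other.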


Paper and project links

PDF: 11 pages, conference

Summary

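AsynFusion is a new framework for audio-driven whole-body avatar pose and expression generation, aimed at lifelike digital humans and interactive virtual agents. It combines a dual-branch DiT architecture with a Cooperative Synchronization Module so that facial expressions and gestures are generated jointly and stay coordinated, yielding high-quality, real-time whole-body animation and more natural virtual characters and interaction. Potential applications include virtual reality, digital entertainment, and remote communication.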

Key Takeaways

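- Audio-driven whole-body avatar pose and expression generation is critical for creating lifelike digital humans and enhancing interactive virtual agents.

- Current methods often generate audio-driven facial expressions and gestures independently, so the two lack coordination.

- The proposed AsynFusion framework uses diffusion Transformers to synthesize expressions and gestures in harmony.

- The model is built on a dual-branch DiT architecture that generates facial expressions and gestures in parallel.

- A Cooperative Synchronization Module enables bidirectional feature interaction between the two modalities.

- An Asynchronous LCM Sampling strategy reduces computational overhead while maintaining high-quality outputs.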
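The computational saving of LCM-style sampling comes from needing only a handful of model evaluations. The sketch below shows the generic multistep consistency sampling loop used by latent consistency models: denoise pure noise in one call, then repeatedly re-noise the estimate to a lower noise level and denoise again. It does not reproduce AsynFusion's asynchronous variant, whose branch scheduling is not described in the abstract; the cosine noise schedule and the toy consistency functions are assumptions.

```python
# Generic few-step consistency-model sampling loop (the multistep scheme used by
# latent consistency models). This is NOT AsynFusion's Asynchronous LCM Sampling:
# how the two branches are scheduled is not described in the abstract. The noise
# schedule alpha = cos(pi/2 * t), sigma = sin(pi/2 * t) is our assumption.
import math
import random
from typing import Callable

Sample = list[float]
ConsistencyFn = Callable[[Sample, float], Sample]  # (noisy sample, t) -> clean estimate


def consistency_sample(f: ConsistencyFn, dim: int, schedule: list[float],
                       seed: int = 0) -> tuple[Sample, int]:
    """Return the final clean estimate and the number of model evaluations used.

    `schedule` lists noise levels in decreasing order, e.g. [1.0, 0.6, 0.3].
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # pure noise at the first level
    x0 = f(x, schedule[0])
    calls = 1
    for t in schedule[1:]:
        alpha, sigma = math.cos(math.pi / 2 * t), math.sin(math.pi / 2 * t)
        # Re-noise the current clean estimate to level t, then denoise it in one call.
        x = [alpha * a + sigma * rng.gauss(0.0, 1.0) for a in x0]
        x0 = f(x, t)
        calls += 1
    return x0, calls


if __name__ == "__main__":
    # Toy consistency function that pulls every sample toward 1.0.
    toy = lambda x, t: [1.0 + 0.1 * v for v in x]
    sample, n_calls = consistency_sample(toy, dim=4, schedule=[1.0, 0.6, 0.3])
    print(sample, n_calls)
```

The returned call count equals `len(schedule)`, so a three-level schedule costs three network evaluations, independent of how many steps a conventional diffusion sampler would take.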


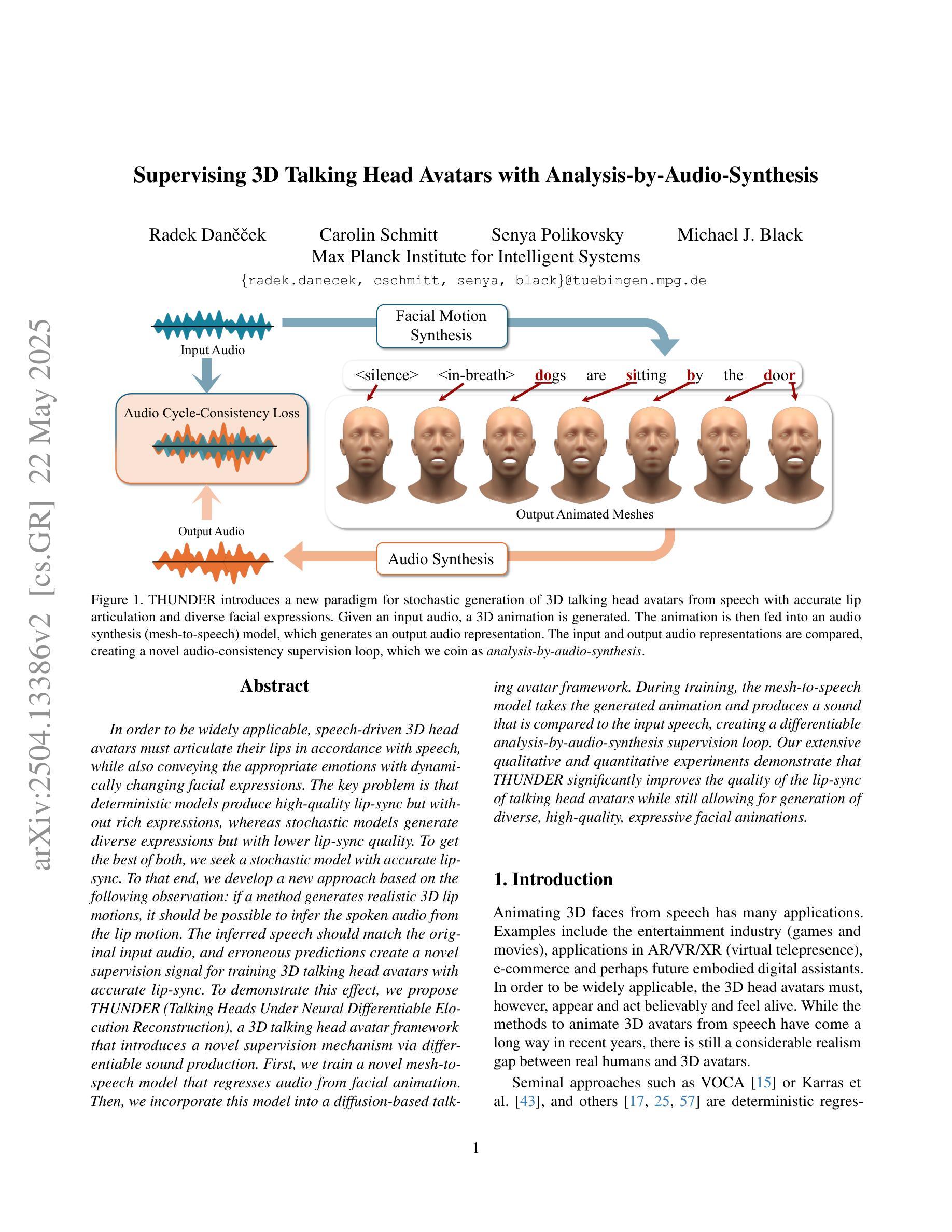

Supervising 3D Talking Head Avatars with Analysis-by-Audio-Synthesis

Authors: Radek Daněček, Carolin Schmitt, Senya Polikovsky, Michael J. Black

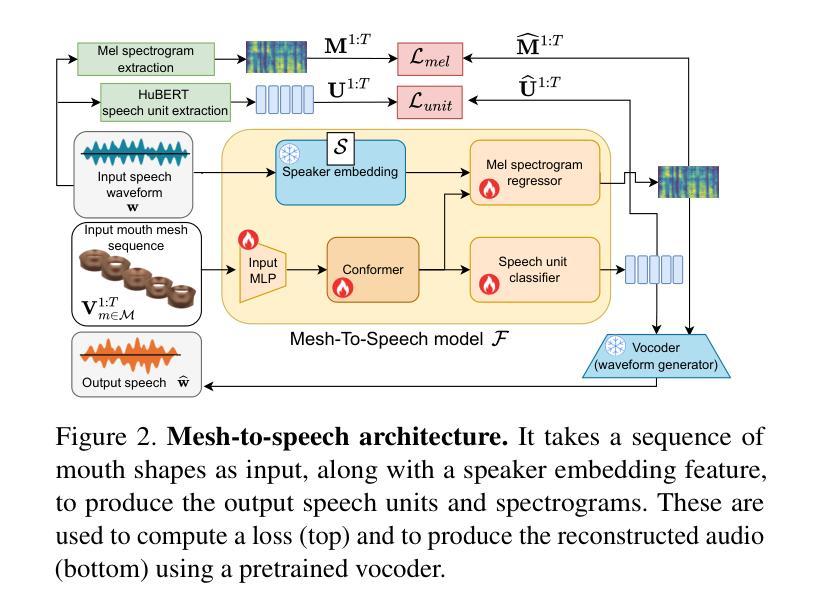

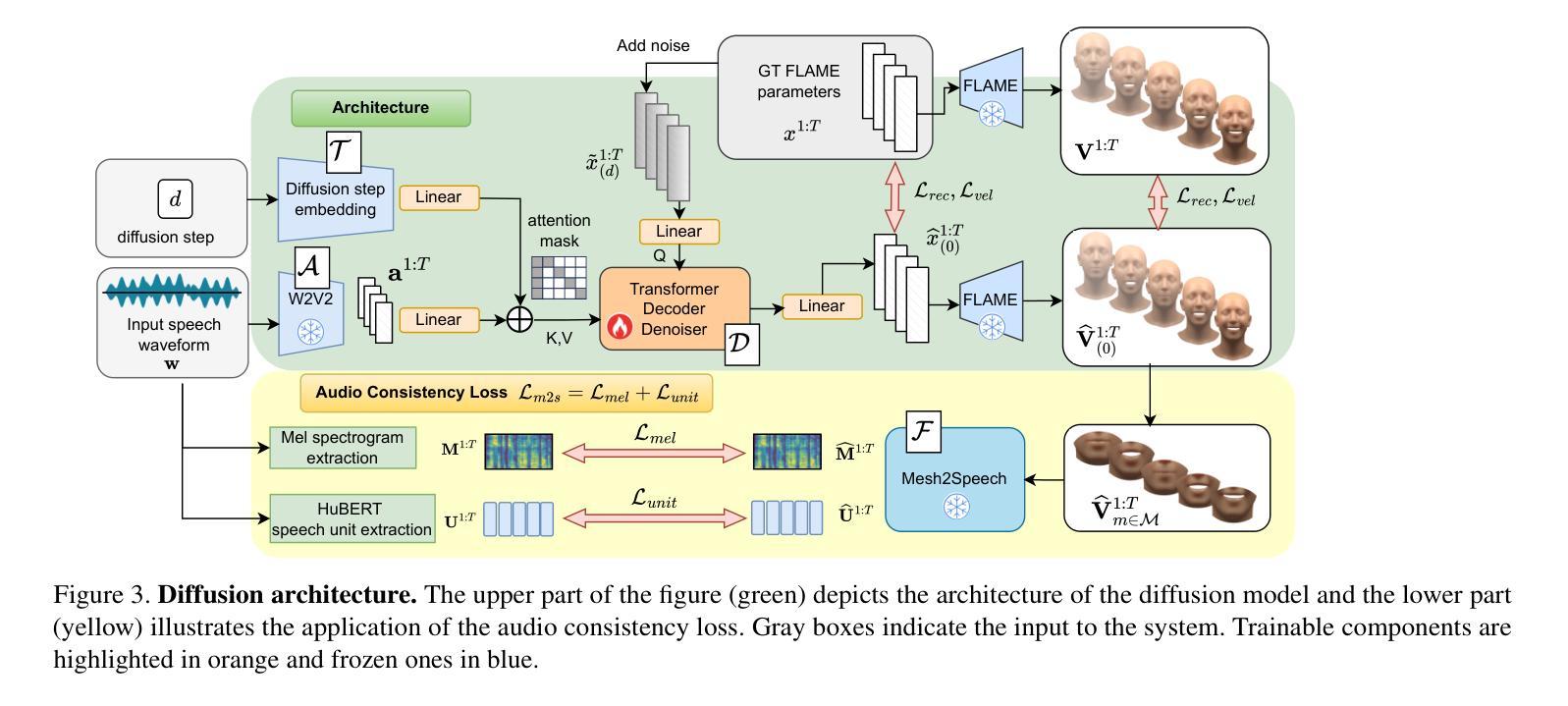

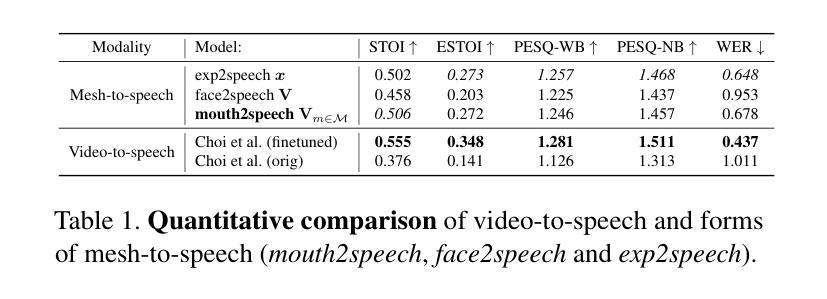

In order to be widely applicable, speech-driven 3D head avatars must articulate their lips in accordance with speech, while also conveying the appropriate emotions with dynamically changing facial expressions. The key problem is that deterministic models produce high-quality lip-sync but without rich expressions, whereas stochastic models generate diverse expressions but with lower lip-sync quality. To get the best of both, we seek a stochastic model with accurate lip-sync. To that end, we develop a new approach based on the following observation: if a method generates realistic 3D lip motions, it should be possible to infer the spoken audio from the lip motion. The inferred speech should match the original input audio, and erroneous predictions create a novel supervision signal for training 3D talking head avatars with accurate lip-sync. To demonstrate this effect, we propose THUNDER (Talking Heads Under Neural Differentiable Elocution Reconstruction), a 3D talking head avatar framework that introduces a novel supervision mechanism via differentiable sound production. First, we train a novel mesh-to-speech model that regresses audio from facial animation. Then, we incorporate this model into a diffusion-based talking avatar framework. During training, the mesh-to-speech model takes the generated animation and produces a sound that is compared to the input speech, creating a differentiable analysis-by-audio-synthesis supervision loop. Our extensive qualitative and quantitative experiments demonstrate that THUNDER significantly improves the quality of the lip-sync of talking head avatars while still allowing for generation of diverse, high-quality, expressive facial animations. The code and models will be available at https://thunder.is.tue.mpg.de/


Paper and project links

Summary

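This work addresses a key challenge in building broadly applicable speech-driven 3D head avatars: the lips must move in accordance with the speech while dynamically changing facial expressions convey appropriate emotions. To that end, it proposes THUNDER (Talking Heads Under Neural Differentiable Elocution Reconstruction), a 3D talking head framework that adds a supervision mechanism based on differentiable sound production. The goal is a stochastic model that produces diverse expressions without giving up the accurate lip-sync typical of deterministic models. Experiments show that THUNDER improves lip-sync quality while still generating diverse, high-quality expressive facial animations. The code and models will be released on the project website.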

Key Takeaways

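- The challenge in building speech-driven 3D head avatars is balancing high-quality lip-sync with rich facial expressions.

- Deterministic models achieve high-quality lip-sync but lack rich expressions, whereas stochastic models produce rich expressions but with lower lip-sync quality.

- The proposed THUNDER method aims to combine the strengths of both: a stochastic model that keeps accurate lip-sync while producing rich facial expressions.

- THUNDER introduces a supervision mechanism via differentiable sound production: a mesh-to-speech model is trained to regress audio from facial animation, enabling differentiable analysis-by-audio-synthesis of talking head animations.

- During training, the mesh-to-speech model turns the generated animation into sound, which is compared with the input speech; this supervision loop improves lip-sync quality.

- Experiments show that THUNDER significantly improves the lip-sync quality of talking head avatars while preserving the diversity and quality of the generated expressive animations.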
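The analysis-by-audio-synthesis loop can be sketched as an extra loss term. In this hypothetical version, the mesh-to-speech model, which we assume is kept fixed while the avatar generator trains, is reduced to a linear map `W` from per-frame lip features to audio features. Its prediction is compared with the input audio features, and the gradient of that mismatch flows back into the generated animation. The linear model, the squared-error loss, and the weighting are assumptions, not THUNDER's formulation.

```python
# Hypothetical sketch of an analysis-by-audio-synthesis loss. A fixed "mesh-to-speech"
# model (here a toy linear map W from per-frame lip features to audio features) turns
# the generated animation into audio features, which are compared with the input
# audio. The names, the linear model, the squared-error loss and the weight are assumptions.

Matrix = list[list[float]]
Frames = list[list[float]]  # one feature vector per frame


def matvec(W: Matrix, a: list[float]) -> list[float]:
    return [sum(w * x for w, x in zip(row, a)) for row in W]


def audio_loss_and_grad(W: Matrix, animation: Frames, audio: Frames) -> tuple[float, Frames]:
    """Squared error between predicted and input audio features, averaged over frames,
    plus its gradient w.r.t. each animation frame: (2 / T) * W^T (W a_t - s_t)."""
    T = len(animation)
    loss = 0.0
    grads = []
    for a, s in zip(animation, audio):
        resid = [p - q for p, q in zip(matvec(W, a), s)]
        loss += sum(r * r for r in resid) / T
        grads.append([2.0 / T * sum(W[i][j] * resid[i] for i in range(len(W)))
                      for j in range(len(a))])
    return loss, grads


def total_loss(generation_loss: float, audio_loss: float, weight: float = 0.1) -> float:
    """Combine the generator's own training loss with the audio-consistency term."""
    return generation_loss + weight * audio_loss


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]
    animation, audio = [[2.0, 0.0]], [[1.0, 0.0]]
    loss, grads = audio_loss_and_grad(W, animation, audio)
    # One gradient step on the animation reduces the audio mismatch.
    stepped = [[x - 0.25 * g for x, g in zip(fr, gr)] for fr, gr in zip(animation, grads)]
    print(loss, audio_loss_and_grad(W, stepped, audio)[0])
```

Because the mesh-to-speech model is differentiable, lip-sync errors become gradients on the animation itself, which is what allows a stochastic generator to be pushed toward accurate lip motion.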
