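⚠️ All of the summaries below are generated by a large language model. They may contain errors and are for reference only; use them with caution.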

🔴 Note: never use this in serious academic settings. It is meant only for initial screening before you read a paper!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace.

Updated 2025-05-27

DualTalk: Dual-Speaker Interaction for 3D Talking Head Conversations

Authors: Ziqiao Peng, Yanbo Fan, Haoyu Wu, Xuan Wang, Hongyan Liu, Jun He, Zhaoxin Fan

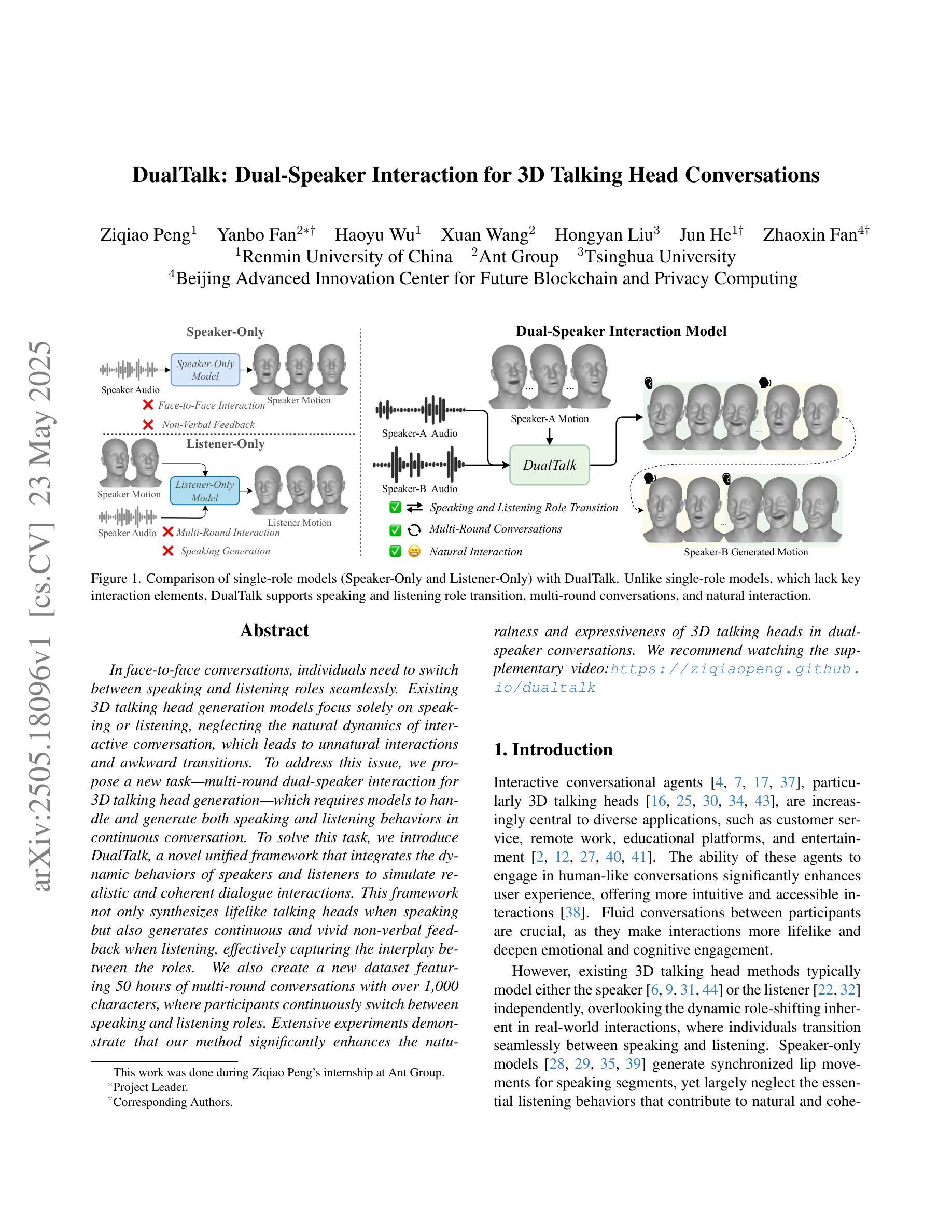

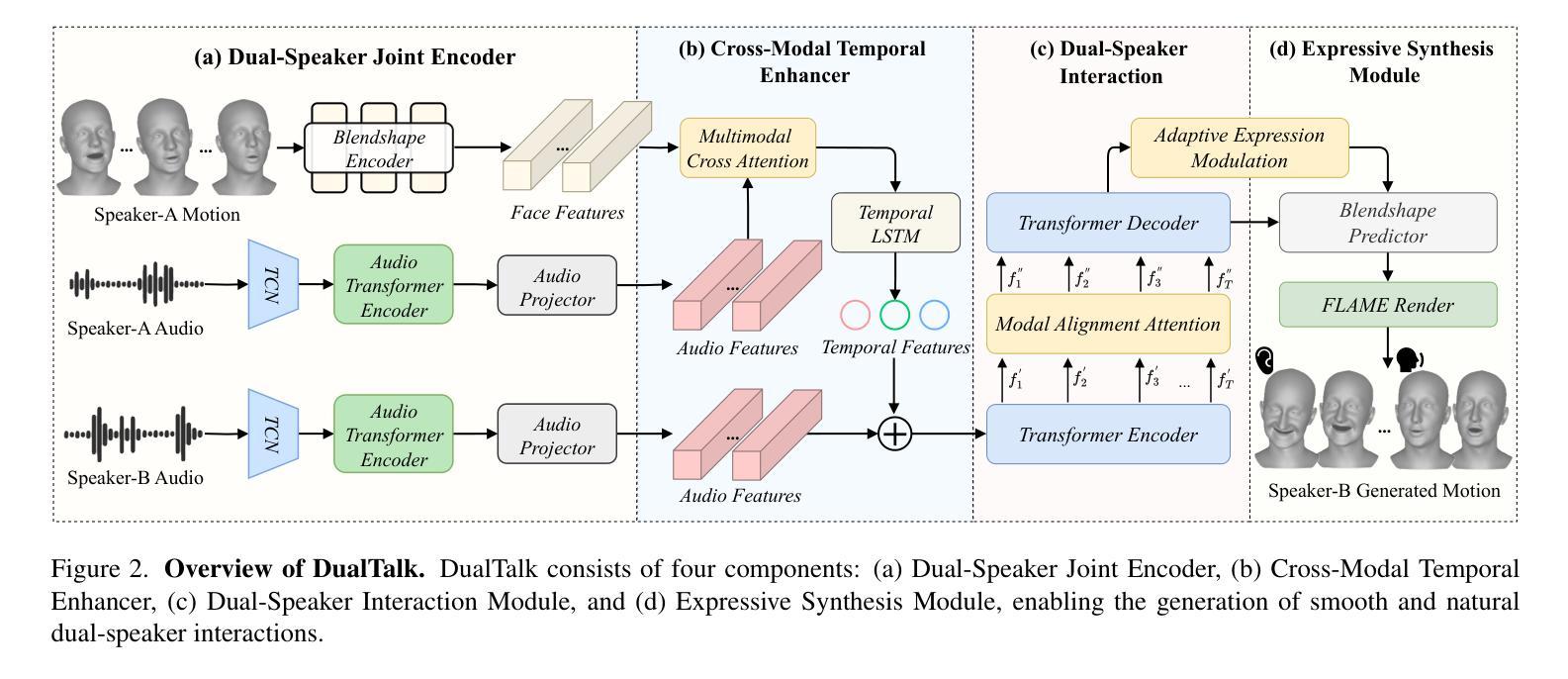

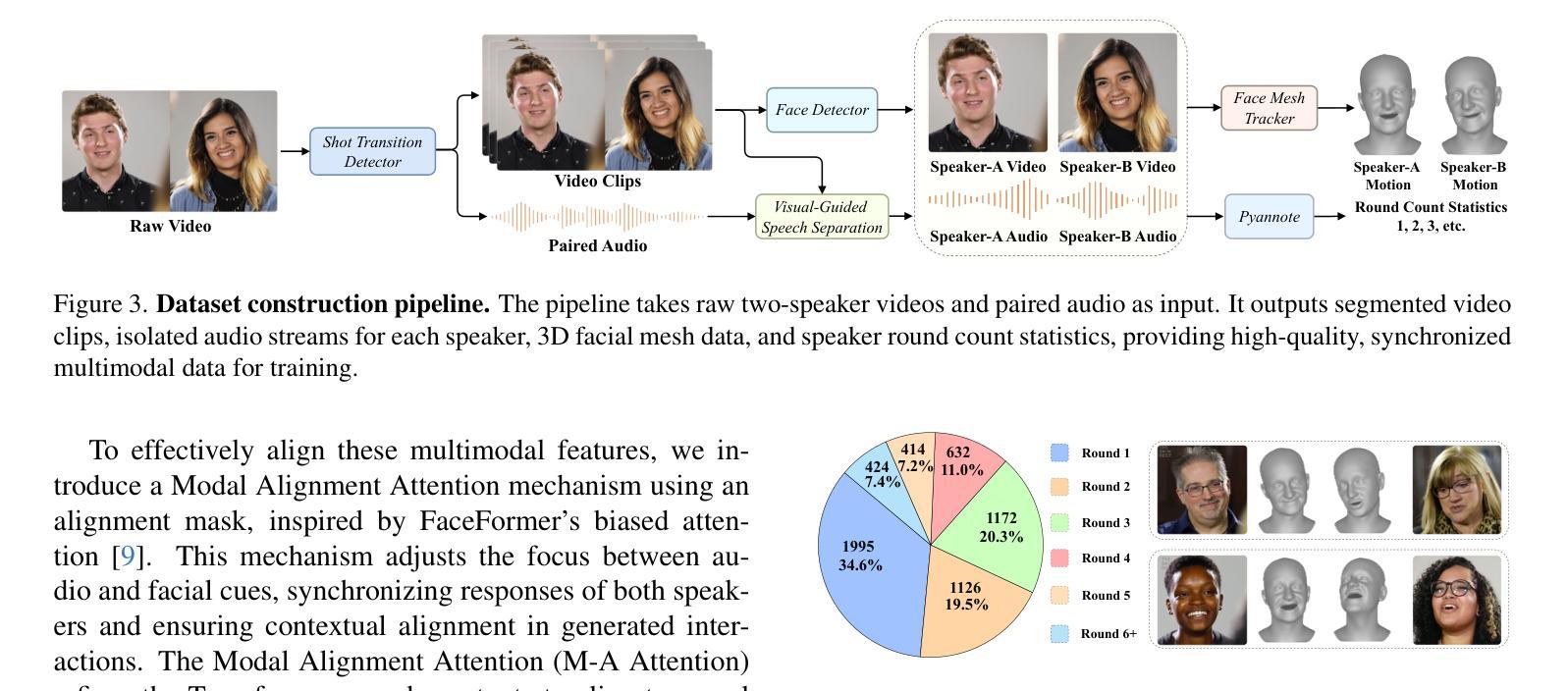

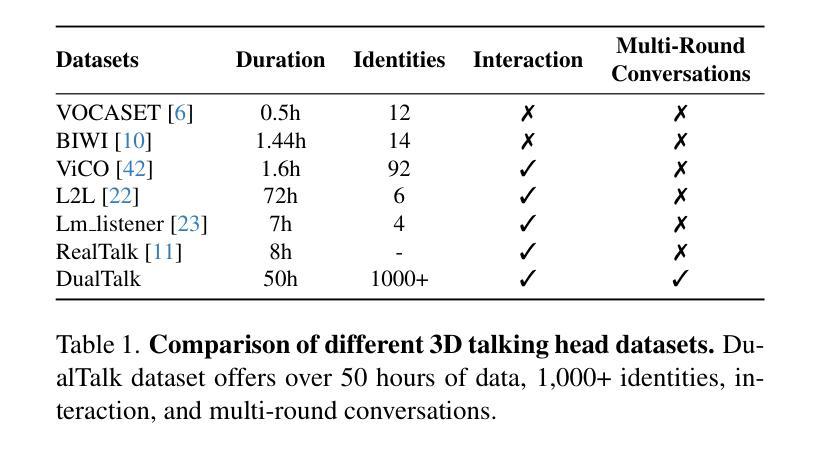

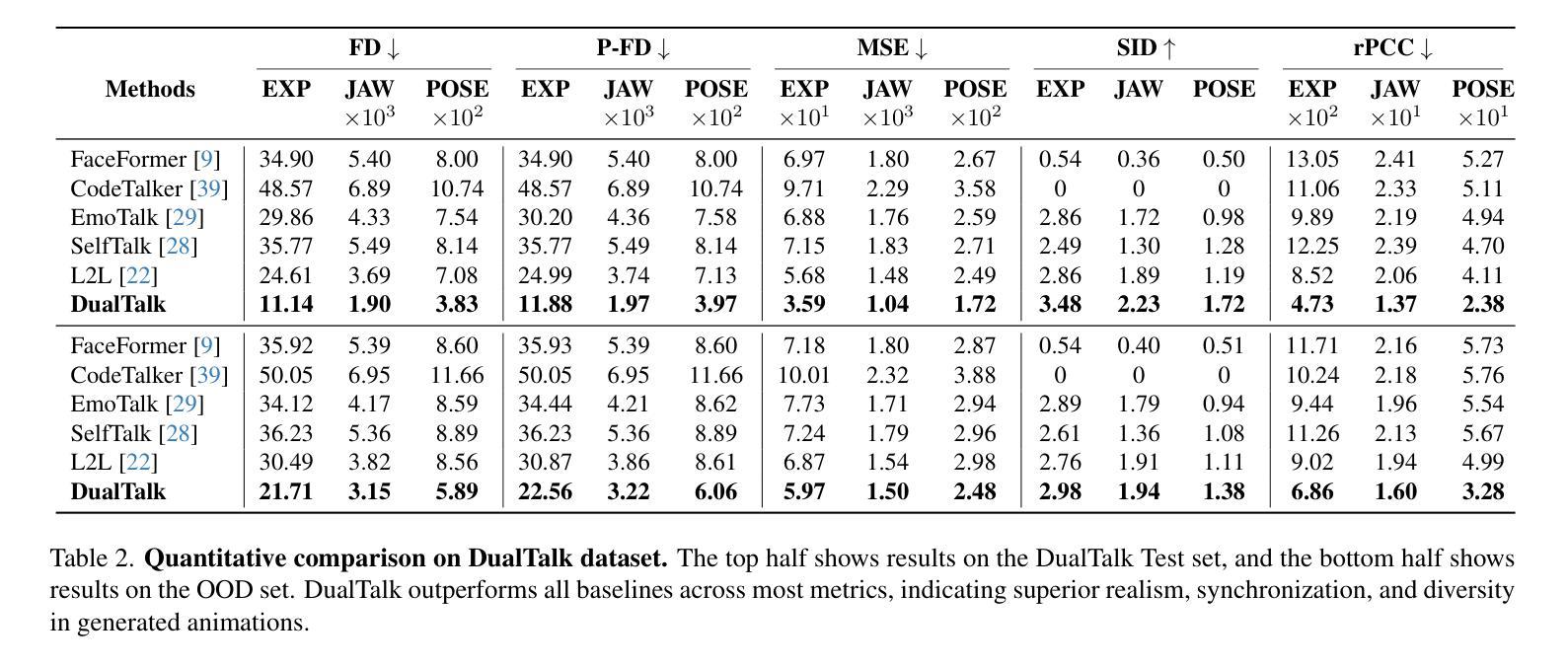

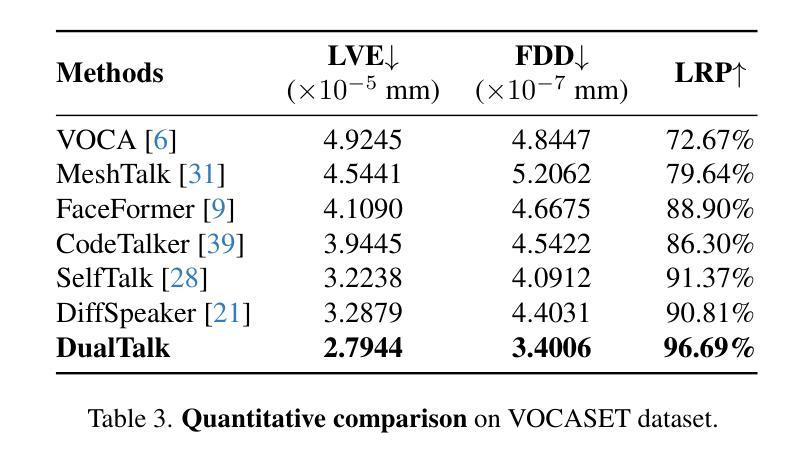

In face-to-face conversations, individuals need to switch between speaking and listening roles seamlessly. Existing 3D talking head generation models focus solely on speaking or listening, neglecting the natural dynamics of interactive conversation, which leads to unnatural interactions and awkward transitions. To address this issue, we propose a new task – multi-round dual-speaker interaction for 3D talking head generation – which requires models to handle and generate both speaking and listening behaviors in continuous conversation. To solve this task, we introduce DualTalk, a novel unified framework that integrates the dynamic behaviors of speakers and listeners to simulate realistic and coherent dialogue interactions. This framework not only synthesizes lifelike talking heads when speaking but also generates continuous and vivid non-verbal feedback when listening, effectively capturing the interplay between the roles. We also create a new dataset featuring 50 hours of multi-round conversations with over 1,000 characters, where participants continuously switch between speaking and listening roles. Extensive experiments demonstrate that our method significantly enhances the naturalness and expressiveness of 3D talking heads in dual-speaker conversations. We recommend watching the supplementary video: https://ziqiaopeng.github.io/dualtalk.


Paper and project links

Accepted by CVPR 2025

Summary

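This paper models multi-round, two-way interaction in face-to-face conversations. To address the limitations of existing models, it proposes a new task in which a 3D talking head must switch between speaking and listening roles over a continuous conversation, along with a unified framework, DualTalk, to solve it. The framework synthesizes lifelike talking heads when speaking and produces continuous, vivid non-verbal feedback when listening, capturing the interplay between the two roles. The authors also build a new dataset of 50 hours of multi-round conversations covering more than 1,000 characters. Experiments show that the method significantly improves the naturalness and expressiveness of 3D talking heads in dual-speaker conversations.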

Recommended supplementary video: https://ziqiaopeng.github.io/dualtalk

Key Takeaways

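- Existing 3D talking head models fall short in capturing the natural dynamics of interactive conversation: they cannot switch roles smoothly, and their output lacks continuity. To address this, the paper proposes a new task, multi-round dual-speaker interaction, in which the talking head switches roles across a multi-round conversation. This requires a model to generate both speaking and listening behaviors within the same continuous conversation.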
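To make the role-switching structure of the task concrete, here is a minimal, hypothetical sketch of how a two-person conversation can be turned into per-frame "speaking"/"listening" labels for one character. It is not DualTalk's method or its dataset format (the framework may not use explicit role labels at all); the `Turn` type, the participant IDs, the frame rate, and the helper names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turn:
    speaker: str  # hypothetical participant ID, e.g. "A" or "B"
    start: float  # turn start time in seconds
    end: float    # turn end time in seconds


def frame_roles(turns: List[Turn], character: str, duration: float, fps: int = 25) -> List[str]:
    """Label each frame as 'speaking' or 'listening' for `character`.

    Assumption: any frame outside the character's own turns counts as
    listening, since in a two-person conversation the character is then
    attending to the partner.
    """
    n_frames = int(round(duration * fps))
    roles = ["listening"] * n_frames
    for turn in turns:
        if turn.speaker != character:
            continue
        first = int(turn.start * fps)
        last = min(int(turn.end * fps), n_frames)
        for i in range(first, last):
            roles[i] = "speaking"
    return roles


def count_switches(roles: List[str]) -> int:
    """Count transitions between speaking and listening."""
    return sum(1 for prev, cur in zip(roles, roles[1:]) if prev != cur)


# Usage: A speaks, B replies, A speaks again (a low fps keeps the output short).
turns = [Turn("A", 0.0, 2.0), Turn("B", 2.0, 3.5), Turn("A", 3.5, 5.0)]
roles_a = frame_roles(turns, "A", duration=5.0, fps=2)
print(roles_a)
print(count_switches(roles_a))  # 2
```

In this sketch, the labels for B are the complement of A's, which reflects why a model for this task has to produce plausible behavior on every frame, including the listening frames, rather than only during its own turns.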
