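⚠️ All summaries below were generated by a large language model. They may contain errors; treat them as reference only and use them with caution.

🔴 Note: do not use these summaries in serious academic settings. They are intended only for preliminary screening before reading the papers.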


Updated 2025-05-30

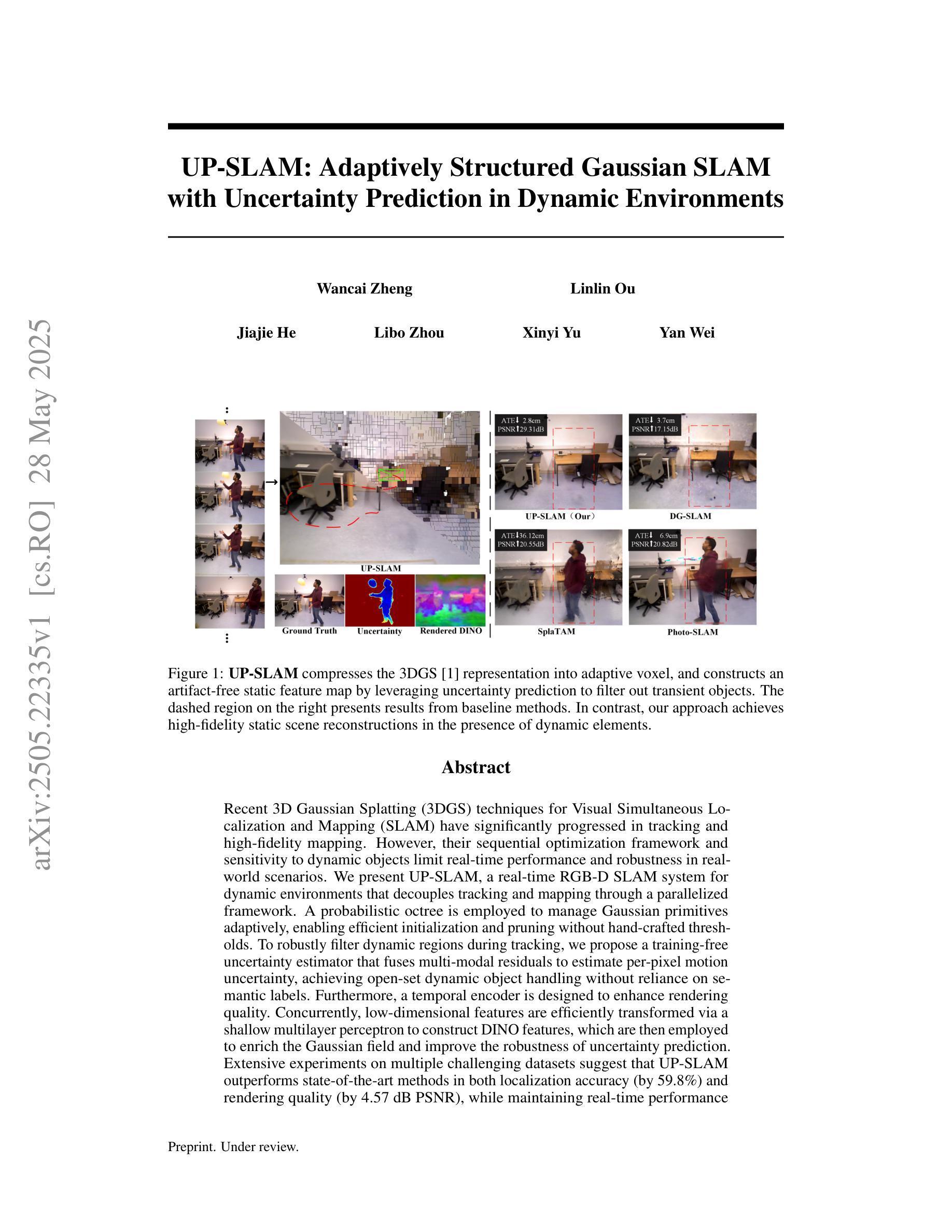

UP-SLAM: Adaptively Structured Gaussian SLAM with Uncertainty Prediction in Dynamic Environments

Authors:Wancai Zheng, Linlin Ou, Jiajie He, Libo Zhou, Xinyi Yu, Yan Wei

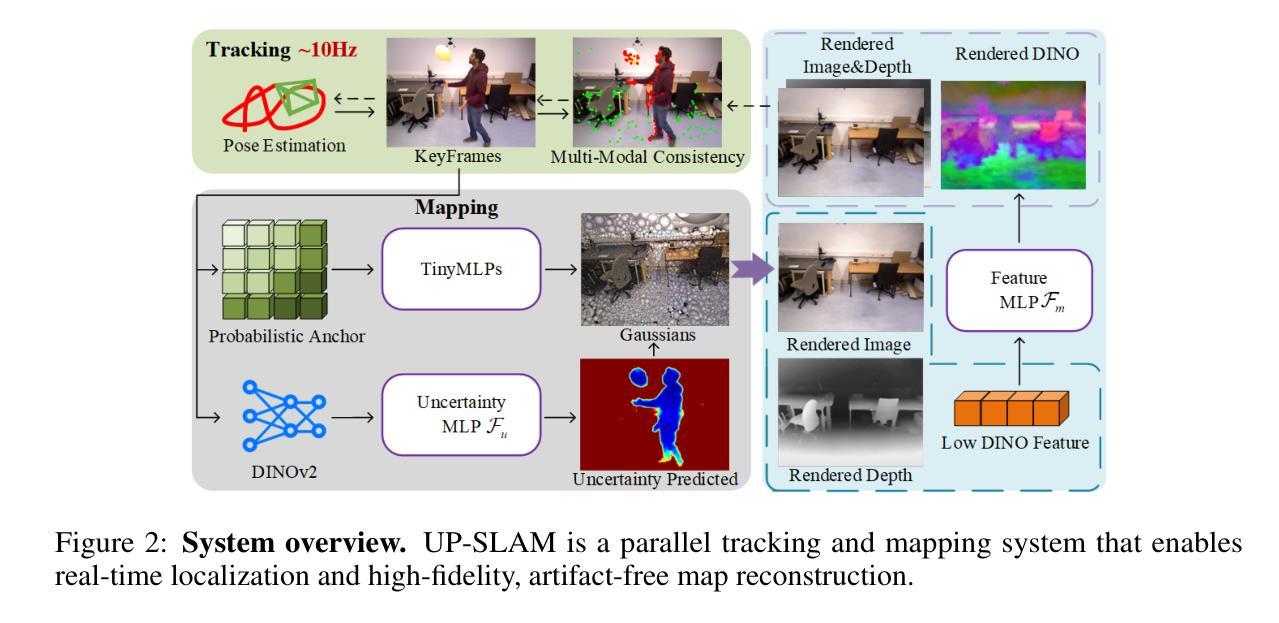

Recent 3D Gaussian Splatting (3DGS) techniques for Visual Simultaneous Localization and Mapping (SLAM) have significantly progressed in tracking and high-fidelity mapping. However, their sequential optimization framework and sensitivity to dynamic objects limit real-time performance and robustness in real-world scenarios. We present UP-SLAM, a real-time RGB-D SLAM system for dynamic environments that decouples tracking and mapping through a parallelized framework. A probabilistic octree is employed to manage Gaussian primitives adaptively, enabling efficient initialization and pruning without hand-crafted thresholds. To robustly filter dynamic regions during tracking, we propose a training-free uncertainty estimator that fuses multi-modal residuals to estimate per-pixel motion uncertainty, achieving open-set dynamic object handling without reliance on semantic labels. Furthermore, a temporal encoder is designed to enhance rendering quality. Concurrently, low-dimensional features are efficiently transformed via a shallow multilayer perceptron to construct DINO features, which are then employed to enrich the Gaussian field and improve the robustness of uncertainty prediction. Extensive experiments on multiple challenging datasets suggest that UP-SLAM outperforms state-of-the-art methods in both localization accuracy (by 59.8%) and rendering quality (by 4.57 dB PSNR), while maintaining real-time performance and producing reusable, artifact-free static maps in dynamic environments. Project page: https://aczheng-cai.github.io/up_slam.github.io/



Summary

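UP-SLAM is a real-time RGB-D SLAM system for dynamic environments that decouples tracking and mapping in a parallelized framework. It manages Gaussian primitives with a probabilistic octree, filters dynamic regions with a training-free uncertainty estimator, and uses a temporal encoder to improve rendering quality. The reported experiments suggest that UP-SLAM outperforms state-of-the-art methods in localization accuracy (by 59.8%) and rendering quality (by 4.57 dB PSNR).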

Key Takeaways

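- UP-SLAM uses a parallelized framework that decouples tracking and mapping to achieve real-time RGB-D SLAM in dynamic environments.

- A probabilistic octree adaptively manages Gaussian primitives, enabling efficient initialization and pruning without hand-crafted thresholds.

- A training-free uncertainty estimator fuses multi-modal residuals to estimate per-pixel motion uncertainty, handling open-set dynamic objects without relying on semantic labels.

- A temporal encoder improves rendering quality, and a shallow multilayer perceptron transforms low-dimensional features into DINO features that enrich the Gaussian field and make uncertainty prediction more robust.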
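The abstract does not spell out how the residuals are fused, so the following is only a hypothetical sketch of the general idea, not UP-SLAM's estimator. It assumes two residual modalities (color and depth), rescales each by its median so they share a common scale, averages them, and squashes the result into [0, 1). The function names `normalize`, `fuse_uncertainty`, and `tracking_weights` are made up for illustration.

```python
from statistics import median


def normalize(residuals, eps=1e-8):
    """Divide absolute residuals by their median so modalities share a scale."""
    abs_r = [abs(r) for r in residuals]
    scale = median(abs_r) + eps
    return [r / scale for r in abs_r]


def fuse_uncertainty(color_residuals, depth_residuals):
    """Per-pixel uncertainty in [0, 1) from two residual modalities.

    Equal weighting and the r / (1 + r) squashing are assumptions of this
    sketch, not details taken from the paper.
    """
    c = normalize(color_residuals)
    d = normalize(depth_residuals)
    fused = [0.5 * (ci + di) for ci, di in zip(c, d)]
    return [f / (1.0 + f) for f in fused]


def tracking_weights(uncertainty):
    """Down-weight uncertain (likely dynamic) pixels during pose estimation."""
    return [1.0 - u for u in uncertainty]


# Four static-looking pixels and one pixel with large residuals in both modalities.
color = [0.1, 0.1, 0.1, 0.1, 2.0]
depth = [0.01, 0.01, 0.01, 0.01, 0.5]
u = fuse_uncertainty(color, depth)
w = tracking_weights(u)
```

In this toy input the last pixel receives the highest uncertainty and therefore the lowest tracking weight, which is the qualitative behavior the takeaway describes: no semantic labels are involved, only residual magnitudes.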


Learning Fine-Grained Geometry for Sparse-View Splatting via Cascade Depth Loss

Authors:Wenjun Lu, Haodong Chen, Anqi Yi, Yuk Ying Chung, Zhiyong Wang, Kun Hu

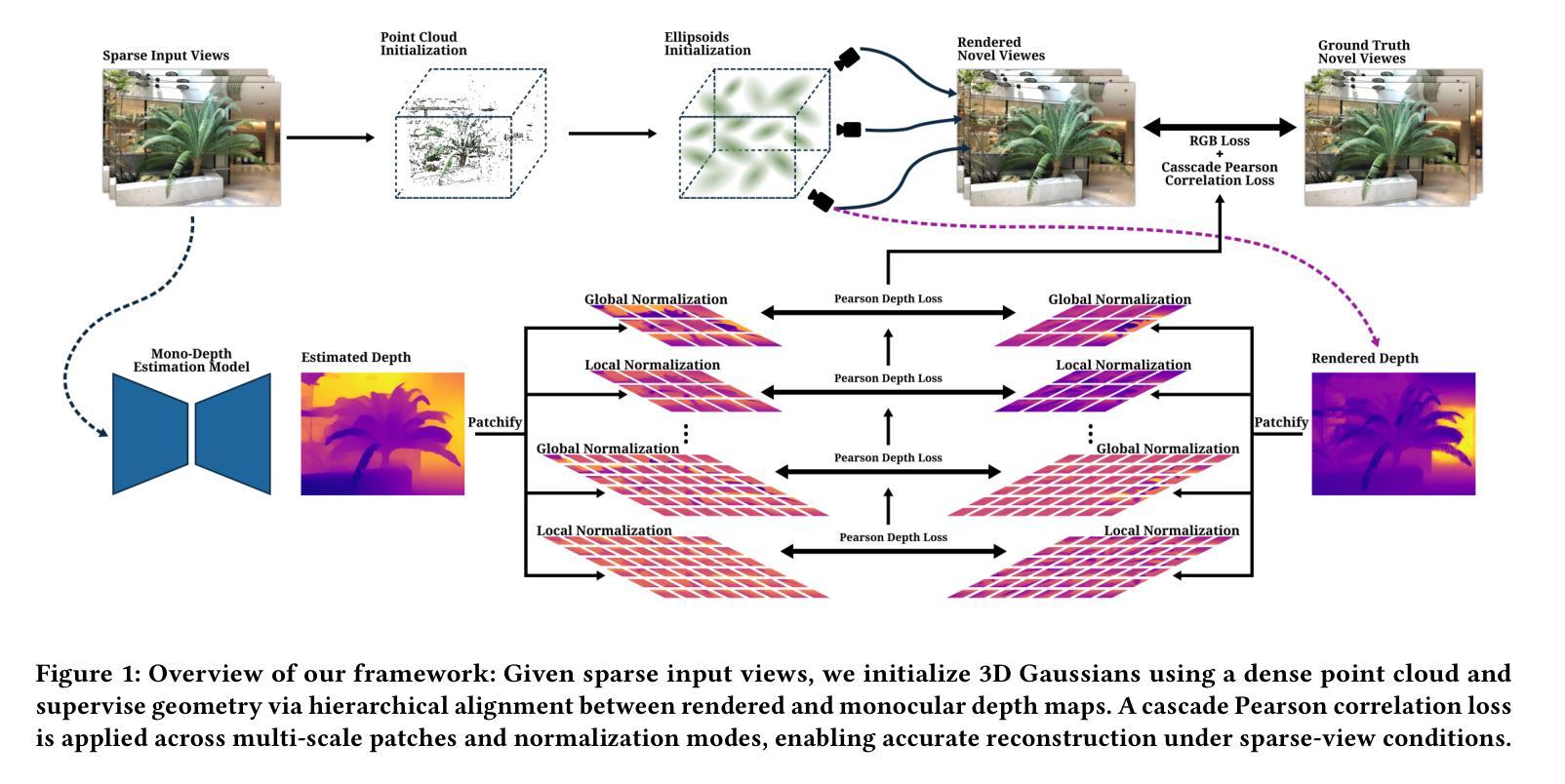

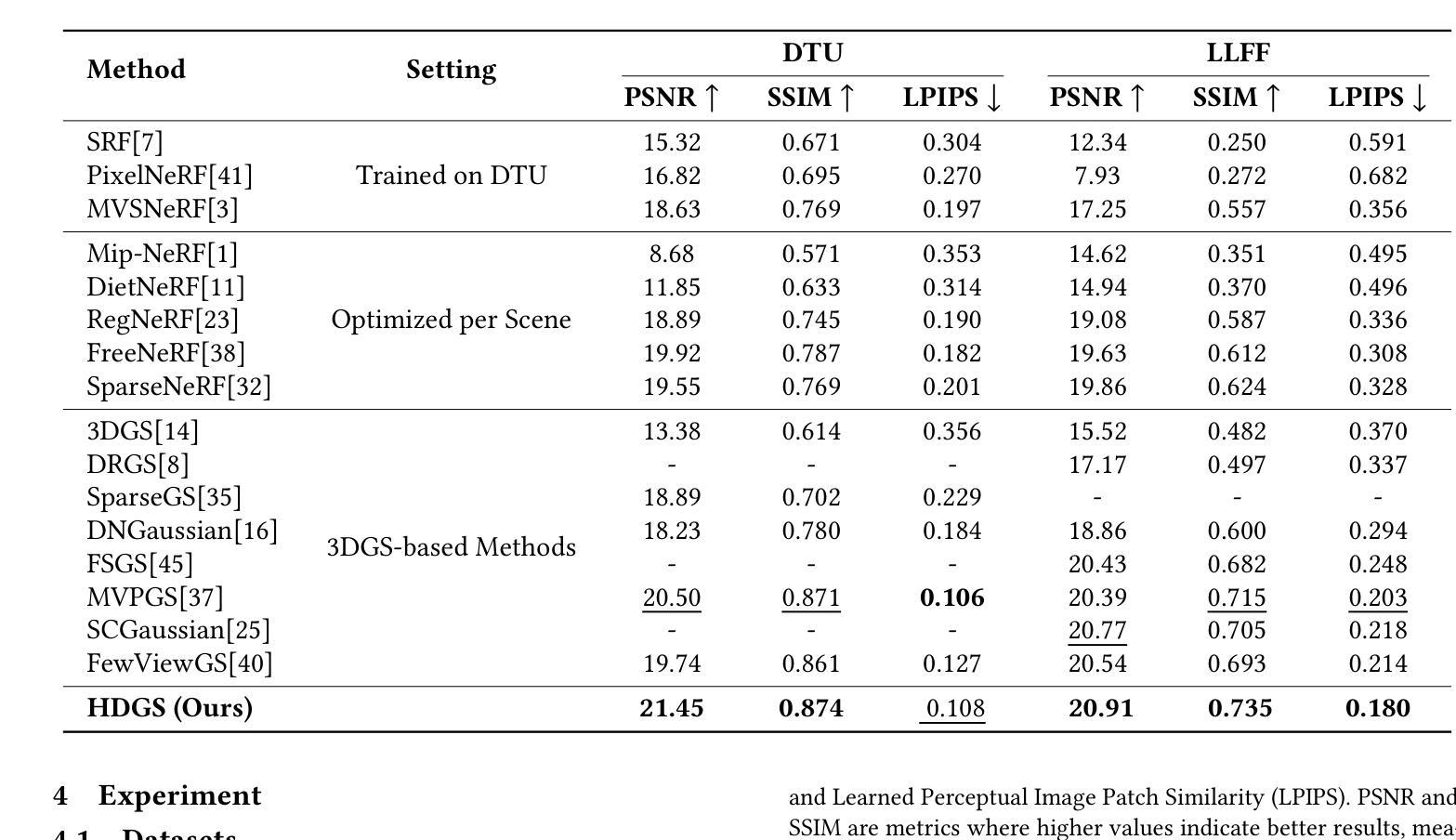

Novel view synthesis is a fundamental task in 3D computer vision that aims to reconstruct realistic images from a set of posed input views. However, reconstruction quality degrades significantly under sparse-view conditions due to limited geometric cues. Existing methods, such as Neural Radiance Fields (NeRF) and the more recent 3D Gaussian Splatting (3DGS), often suffer from blurred details and structural artifacts when trained with insufficient views. Recent works have identified the quality of rendered depth as a key factor in mitigating these artifacts, as it directly affects geometric accuracy and view consistency. In this paper, we address these challenges by introducing Hierarchical Depth-Guided Splatting (HDGS), a depth supervision framework that progressively refines geometry from coarse to fine levels. Central to HDGS is a novel Cascade Pearson Correlation Loss (CPCL), which aligns rendered and estimated monocular depths across multiple spatial scales. By enforcing multi-scale depth consistency, our method substantially improves structural fidelity in sparse-view scenarios. Extensive experiments on the LLFF and DTU benchmarks demonstrate that HDGS achieves state-of-the-art performance under sparse-view settings while maintaining efficient and high-quality rendering.



Summary

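The paper addresses novel view synthesis, a fundamental task in 3D computer vision. To counter the drop in reconstruction quality under sparse-view conditions, it proposes Hierarchical Depth-Guided Splatting (HDGS), a depth supervision framework that refines geometry from coarse to fine by enforcing multi-scale depth consistency. Experiments on the LLFF and DTU benchmarks show that HDGS achieves state-of-the-art performance in sparse-view settings while keeping rendering efficient and high quality.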

Key Takeaways

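- Novel view synthesis is a fundamental task in 3D computer vision that aims to reconstruct realistic images from a set of posed input views.

- Under sparse-view conditions, reconstruction quality degrades significantly because geometric cues are limited.

- Existing methods such as NeRF and 3DGS often produce blurred details and structural artifacts when trained with too few views.

- The quality of rendered depth is a key factor in mitigating these artifacts, since it directly affects geometric accuracy and view consistency.

- The paper proposes Hierarchical Depth-Guided Splatting (HDGS), a depth supervision framework that progressively refines geometry from coarse to fine levels.

- At the core of HDGS is the Cascade Pearson Correlation Loss (CPCL), which aligns rendered depth with estimated monocular depth across multiple spatial scales to enforce multi-scale depth consistency.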
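To make the multi-scale idea concrete, here is a minimal sketch of a Pearson-correlation depth loss evaluated over a coarse-to-fine pyramid. It is not the paper's CPCL: the abstract does not give the cascade details, so this sketch assumes a global Pearson correlation per scale, 2x2 average pooling between scales, and equal weights. The helper names are hypothetical. Plain Python lists stand in for depth tensors.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0.0 or vy == 0.0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def avg_pool2(depth):
    """Halve resolution by averaging non-overlapping 2x2 blocks (odd edges are dropped)."""
    h, w = len(depth) // 2, len(depth[0]) // 2
    return [
        [
            (depth[2 * i][2 * j] + depth[2 * i][2 * j + 1]
             + depth[2 * i + 1][2 * j] + depth[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w)
        ]
        for i in range(h)
    ]


def multiscale_pearson_loss(rendered, estimated, num_scales=3):
    """Mean of (1 - Pearson) over successively downsampled depth maps.

    Equal per-scale weights are an assumption of this sketch.
    """
    losses = []
    r, e = rendered, estimated
    for _ in range(num_scales):
        flat_r = [v for row in r for v in row]
        flat_e = [v for row in e for v in row]
        losses.append(1.0 - pearson(flat_r, flat_e))
        if len(r) < 4 or len(r[0]) < 4:
            break  # too small to pool again
        r, e = avg_pool2(r), avg_pool2(e)
    return sum(losses) / len(losses)


# A rendered depth map and a monocular estimate that differs only by scale and shift.
rendered = [[float(i * 8 + j) for j in range(8)] for i in range(8)]
estimated = [[2.0 * v + 5.0 for v in row] for row in rendered]
inverted = [[-v for v in row] for row in rendered]
loss_aligned = multiscale_pearson_loss(rendered, estimated)
loss_inverted = multiscale_pearson_loss(rendered, inverted)
```

Pearson correlation is unchanged by positive scaling and shifting, which is why a correlation-based loss is a natural fit for monocular depth estimates that lack metric scale. In the example, the rescaled map gives a loss near zero while the inverted map gives a loss near two.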


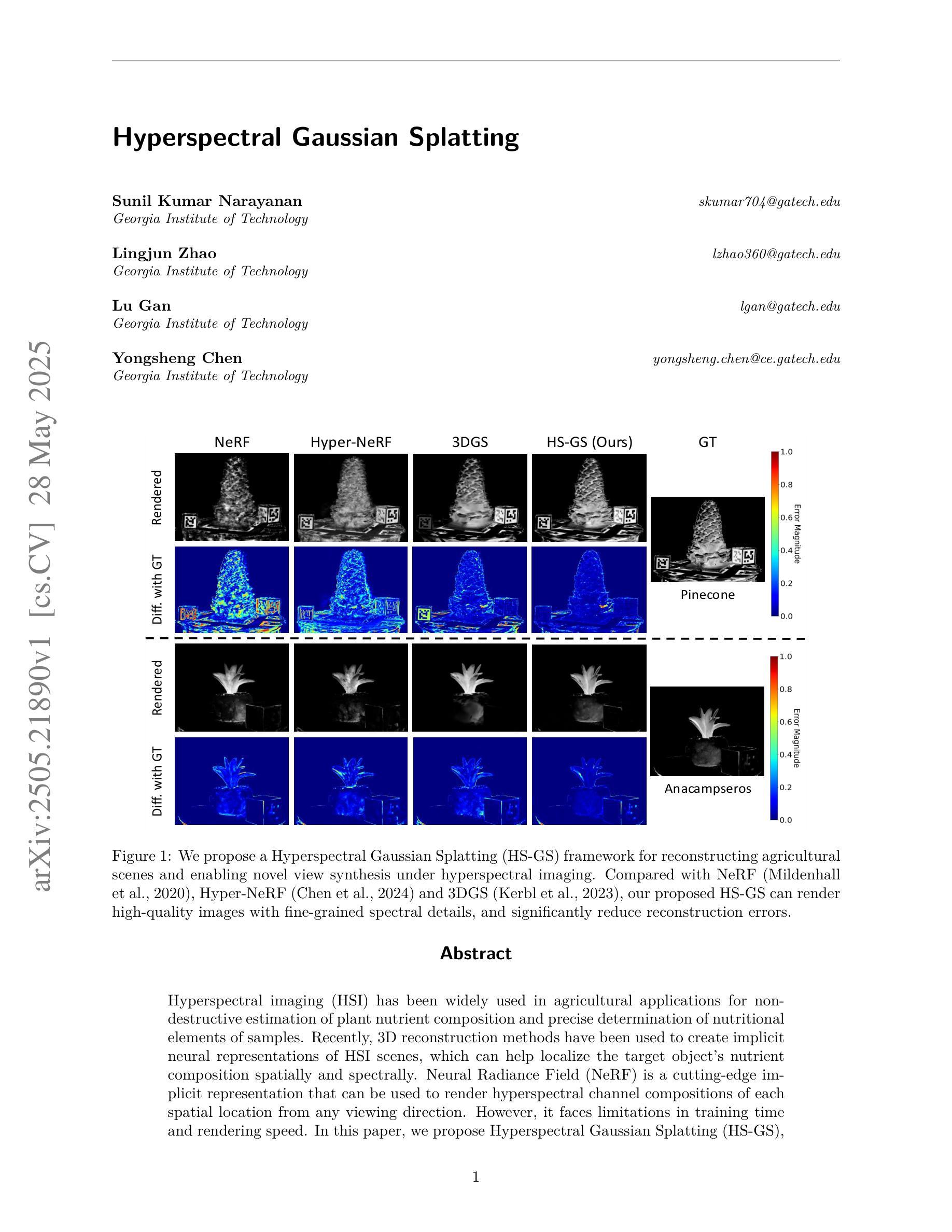

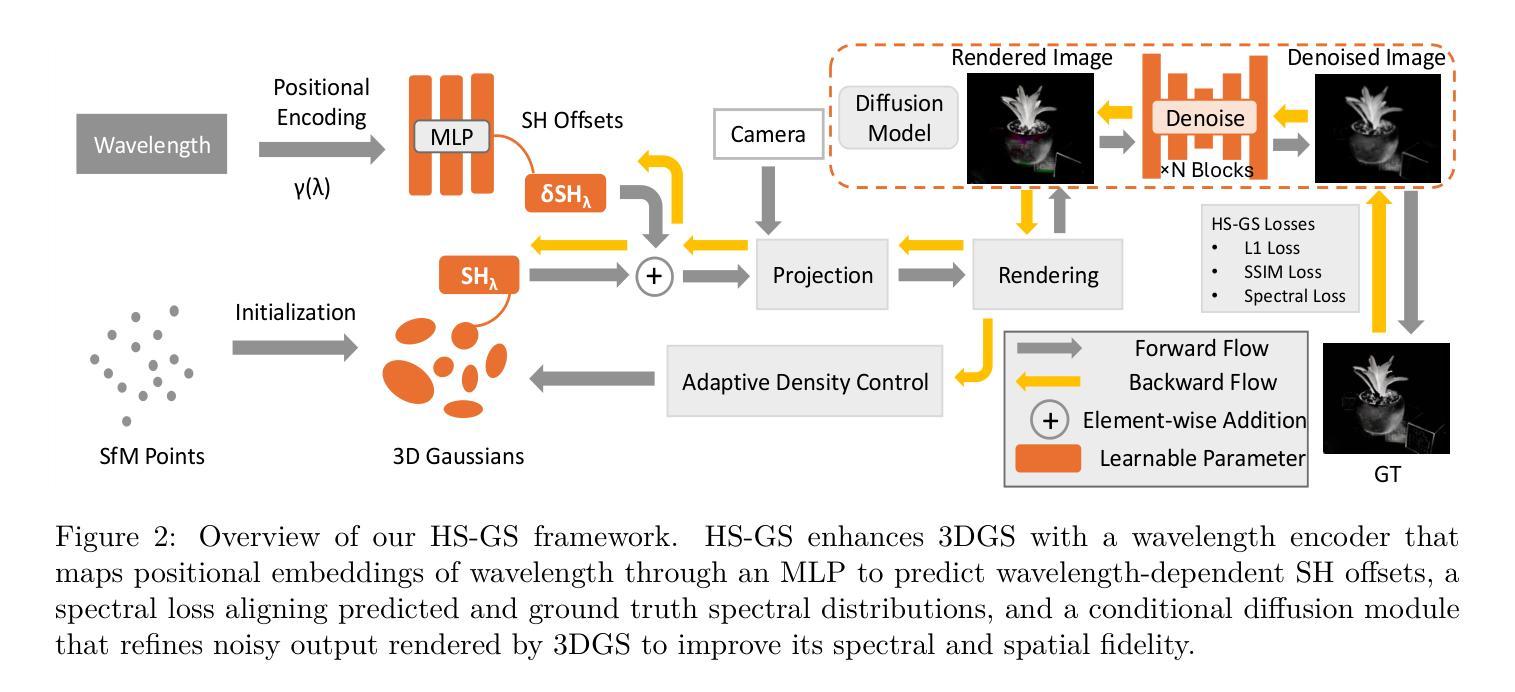

Hyperspectral Gaussian Splatting

Authors:Sunil Kumar Narayanan, Lingjun Zhao, Lu Gan, Yongsheng Chen

Hyperspectral imaging (HSI) has been widely used in agricultural applications for non-destructive estimation of plant nutrient composition and precise determination of nutritional elements in samples. Recently, 3D reconstruction methods have been used to create implicit neural representations of HSI scenes, which can help localize the target object’s nutrient composition spatially and spectrally. Neural Radiance Field (NeRF) is a cutting-edge implicit representation that can render hyperspectral channel compositions of each spatial location from any viewing direction. However, it faces limitations in training time and rendering speed. In this paper, we propose Hyperspectral Gaussian Splatting (HS-GS), which combines the state-of-the-art 3D Gaussian Splatting (3DGS) with a diffusion model to enable 3D explicit reconstruction of the hyperspectral scenes and novel view synthesis for the entire spectral range. To enhance the model’s ability to capture fine-grained reflectance variations across the light spectrum and leverage correlations between adjacent wavelengths for denoising, we introduce a wavelength encoder to generate wavelength-specific spherical harmonics offsets. We also introduce a novel Kullback–Leibler divergence-based loss to mitigate the spectral distribution gap between the rendered image and the ground truth. A diffusion model is further applied for denoising the rendered images and generating photorealistic hyperspectral images. We present extensive evaluations on five diverse hyperspectral scenes from the Hyper-NeRF dataset to show the effectiveness of our proposed HS-GS framework. The results demonstrate that HS-GS achieves new state-of-the-art performance among all previously published methods. Code will be released upon publication.



Summary

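The paper combines 3D Gaussian Splatting (3DGS) with a diffusion model to enable explicit 3D reconstruction of hyperspectral scenes and novel view synthesis across the entire spectral range. A wavelength encoder helps the model capture fine-grained reflectance variations and exploit correlations between adjacent wavelengths for denoising, and a Kullback–Leibler divergence-based loss narrows the spectral distribution gap between rendered images and ground truth. Evaluations on five diverse hyperspectral scenes from the Hyper-NeRF dataset show that HS-GS achieves state-of-the-art performance.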

Key Takeaways

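- Hyperspectral imaging (HSI) is widely used in agriculture for non-destructive estimation of plant nutrient composition and precise determination of nutritional elements in samples.

- 3D reconstruction methods can build implicit neural representations of HSI scenes that help localize a target object's nutrient composition spatially and spectrally. Neural Radiance Field (NeRF) is one such representation, but it is limited by training time and rendering speed.

- The paper proposes Hyperspectral Gaussian Splatting (HS-GS), which combines 3DGS with a diffusion model for explicit 3D reconstruction of hyperspectral scenes and novel view synthesis over the entire spectral range.

- A wavelength encoder generates wavelength-specific spherical harmonics offsets to capture fine-grained reflectance variations and exploit correlations between adjacent wavelengths for denoising, while a Kullback–Leibler divergence-based loss reduces the spectral distribution gap between rendered images and ground truth.

- On five diverse hyperspectral scenes from the Hyper-NeRF dataset, HS-GS achieves new state-of-the-art performance among previously published methods.

- A diffusion model further denoises the rendered images and generates photorealistic hyperspectral images.

- The code will be released upon publication.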
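As a rough illustration of what a Kullback–Leibler divergence between spectra can look like, the sketch below normalizes each pixel's band intensities into a probability distribution and averages KL(ground truth || rendered) over pixels. The normalization, the direction of the divergence, and the `eps` smoothing are assumptions of this sketch; the abstract does not state how HS-GS defines its loss, and the function names are hypothetical.

```python
import math


def to_distribution(spectrum, eps=1e-8):
    """Normalize non-negative band intensities so they sum to 1 (eps avoids zeros)."""
    clipped = [max(v, 0.0) + eps for v in spectrum]
    total = sum(clipped)
    return [v / total for v in clipped]


def spectral_kl(gt_spectrum, rendered_spectrum):
    """KL(P_gt || P_rendered) between the normalized spectra of one pixel."""
    p = to_distribution(gt_spectrum)
    q = to_distribution(rendered_spectrum)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def mean_spectral_kl(gt_pixels, rendered_pixels):
    """Average the per-pixel spectral KL over a list of pixels."""
    pairs = list(zip(gt_pixels, rendered_pixels))
    return sum(spectral_kl(g, r) for g, r in pairs) / len(pairs)


# Two pixels with three spectral bands each.
gt = [[1.0, 2.0, 3.0], [4.0, 4.0, 4.0]]
same = [[1.0, 2.0, 3.0], [4.0, 4.0, 4.0]]
reversed_first = [[3.0, 2.0, 1.0], [4.0, 4.0, 4.0]]
kl_same = mean_spectral_kl(gt, same)
kl_diff = mean_spectral_kl(gt, reversed_first)
```

Because each spectrum is normalized before comparison, this particular sketch penalizes differences in spectral shape rather than overall brightness. A real training loss would operate on tensors and would usually be paired with a per-band reconstruction term.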
