⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-06-04 更新

MFCLIP: Multi-modal Fine-grained CLIP for Generalizable Diffusion Face Forgery Detection

Authors:Yaning Zhang, Tianyi Wang, Zitong Yu, Zan Gao, Linlin Shen, Shengyong Chen

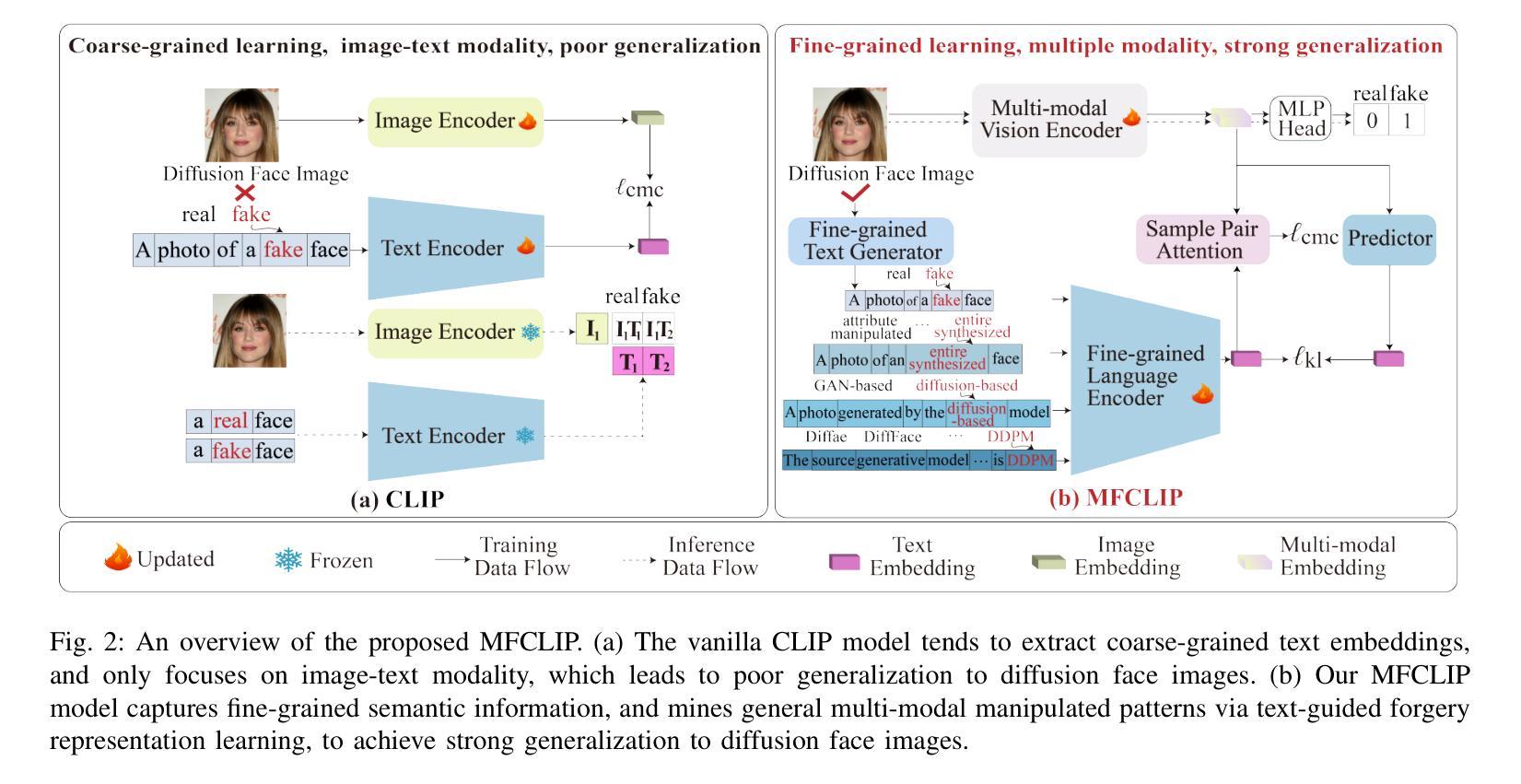

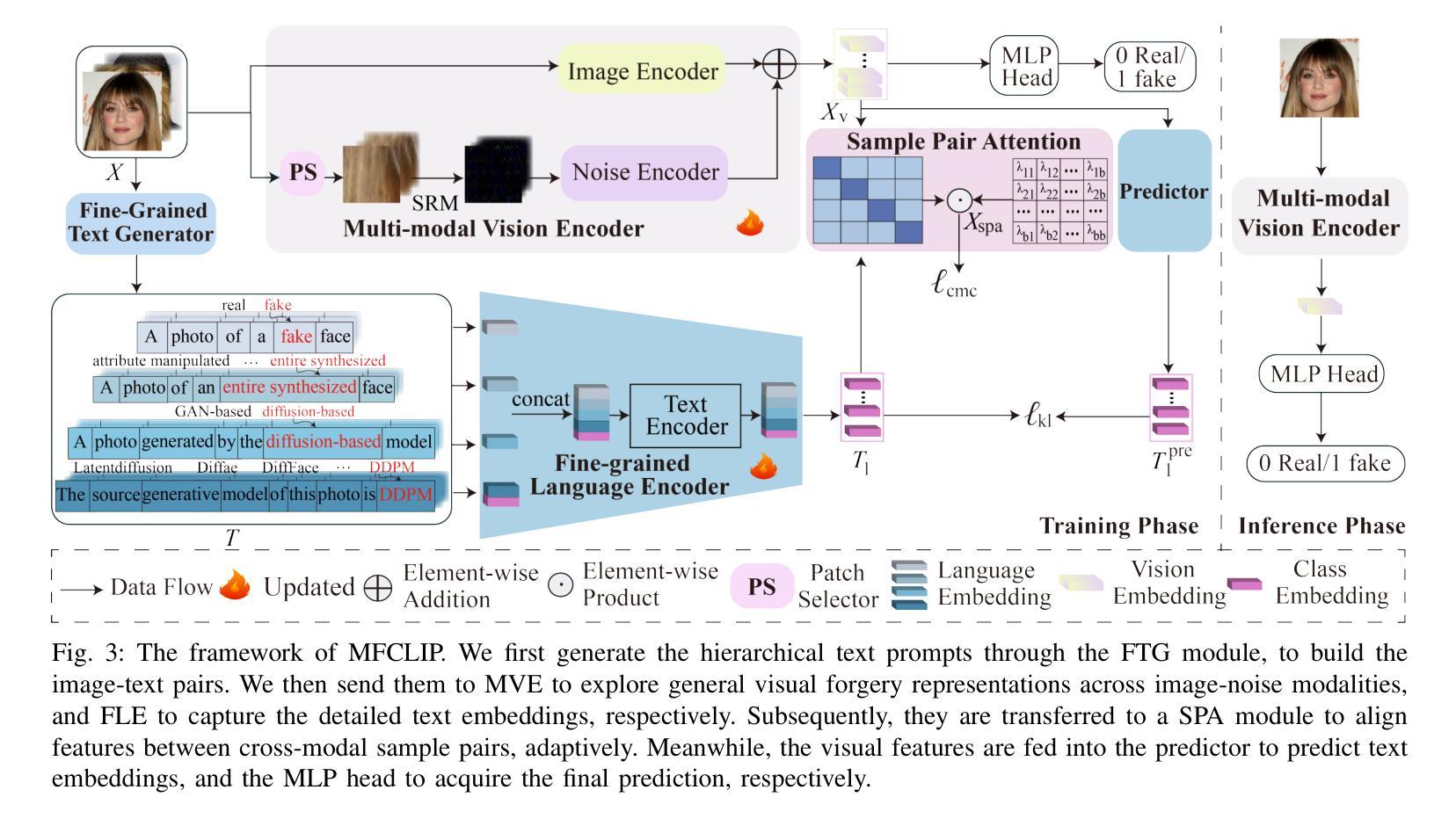

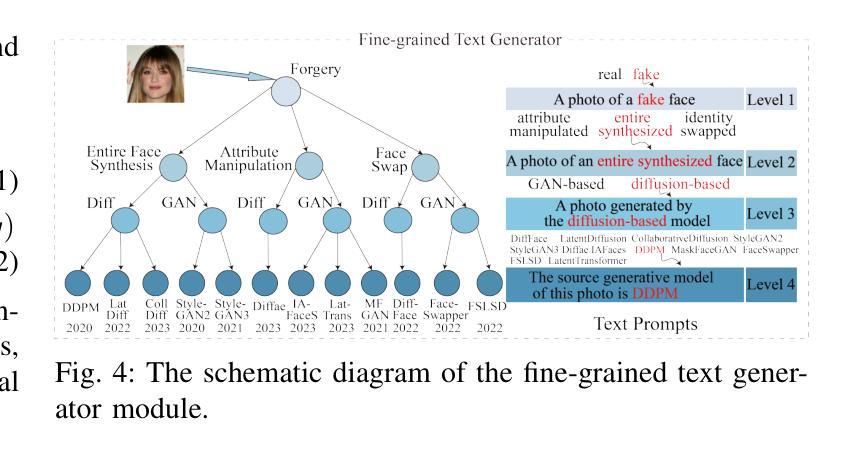

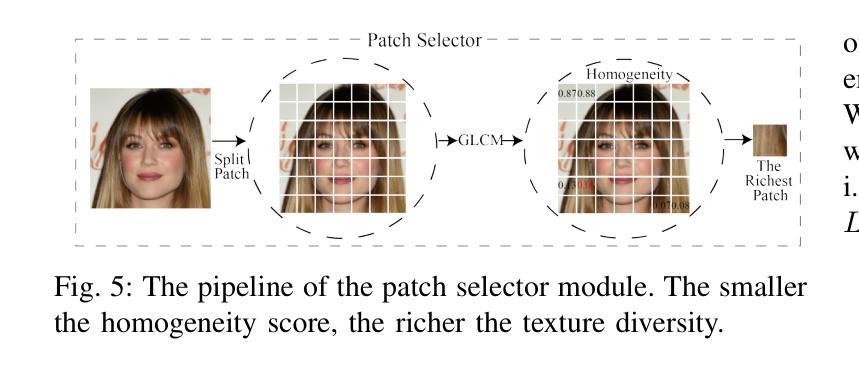

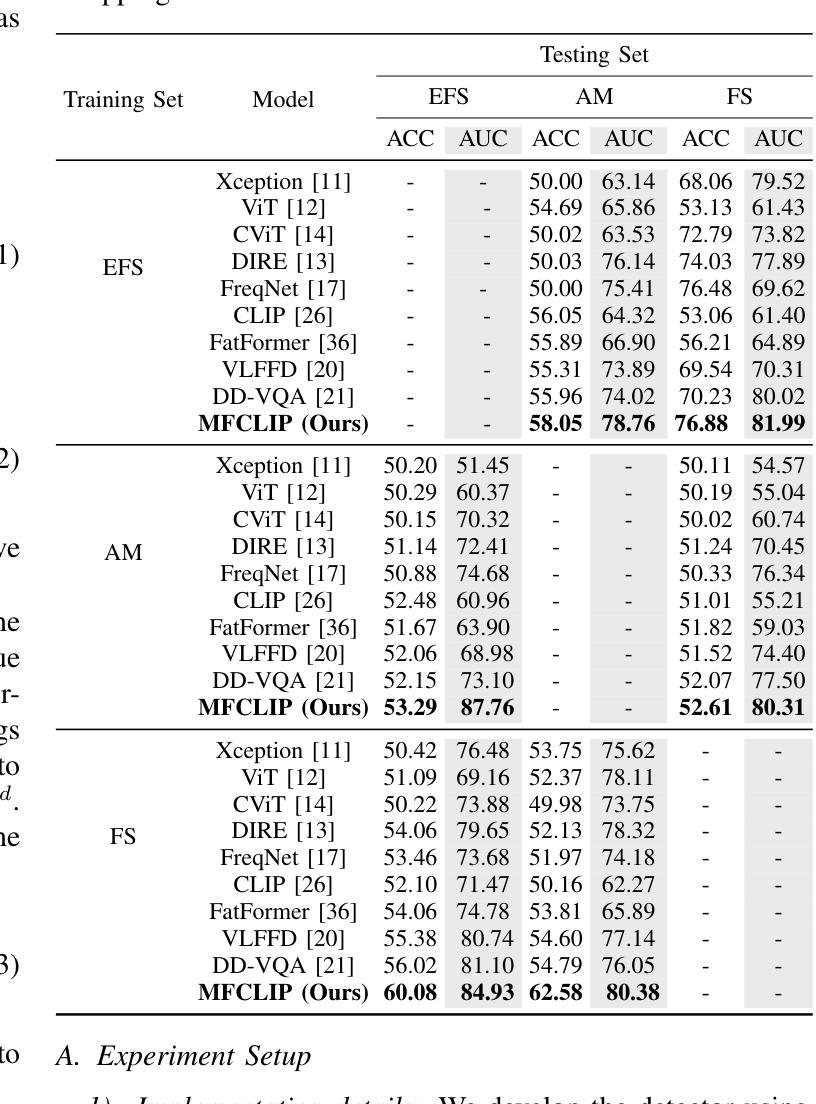

The rapid development of photo-realistic face generation methods has raised significant concerns in society and academia, highlighting the urgent need for robust and generalizable face forgery detection (FFD) techniques. Although existing approaches mainly capture face forgery patterns using image modality, other modalities like fine-grained noises and texts are not fully explored, which limits the generalization capability of the model. In addition, most FFD methods tend to identify facial images generated by GAN, but struggle to detect unseen diffusion-synthesized ones. To address the limitations, we aim to leverage the cutting-edge foundation model, contrastive language-image pre-training (CLIP), to achieve generalizable diffusion face forgery detection (DFFD). In this paper, we propose a novel multi-modal fine-grained CLIP (MFCLIP) model, which mines comprehensive and fine-grained forgery traces across image-noise modalities via language-guided face forgery representation learning, to facilitate the advancement of DFFD. Specifically, we devise a fine-grained language encoder (FLE) that extracts fine global language features from hierarchical text prompts. We design a multi-modal vision encoder (MVE) to capture global image forgery embeddings as well as fine-grained noise forgery patterns extracted from the richest patch, and integrate them to mine general visual forgery traces. Moreover, we build an innovative plug-and-play sample pair attention (SPA) method to emphasize relevant negative pairs and suppress irrelevant ones, allowing cross-modality sample pairs to conduct more flexible alignment. Extensive experiments and visualizations show that our model outperforms the state of the arts on different settings like cross-generator, cross-forgery, and cross-dataset evaluations.

随着逼真面部生成技术的快速发展,社会和学术界对此产生了重大关注,这突显了对稳健且可推广的面部伪造检测(FFD)技术的迫切需求。尽管现有方法主要使用图像模式来捕捉面部伪造模式,但其他模式(例如细微噪声和文本)尚未得到完全探索,这限制了模型的推广能力。此外,大多数FFD方法倾向于识别由GAN生成的面部图像,但难以检测未见过的扩散合成图像。为了克服这些限制,我们旨在利用最新的基础模型——对比语言图像预训练(CLIP),以实现可推广的扩散面部伪造检测(DFFD)。

论文及项目相关链接

PDF Accepted by IEEE Transactions on Information Forensics and Security 2025

Summary

生成对抗网络(GAN)人脸生成技术的快速发展引发了社会和学术界的广泛关注,促进了对面部伪造检测(FFD)技术的迫切需求。当前方法主要依赖图像模态捕捉面部伪造模式,忽略了细粒度噪声和文本等其他模态,限制了模型的泛化能力。针对这些局限,本文提出了利用最新的基础模型——对比语言图像预训练(CLIP)实现通用扩散面部伪造检测(DFFD)的方法。本文提出了一种新颖的多模态细粒度CLIP(MFCLIP)模型,通过语言引导的面部伪造表示学习,挖掘图像噪声模态的细粒度伪造痕迹,推动DFFD的进步。

Key Takeaways

- GAN人脸生成技术快速发展,引发对脸部伪造检测技术的关注。

- 现有面部伪造检测方法主要依赖图像模态,限制了模型的泛化能力。

- MFCLIP模型利用多模态数据(包括图像和噪声),提高检测性能。

- MFCLIP模型引入了语言编码器(FLE)捕捉全局语言特征。

- 该模型通过多模态视觉编码器(MVE)挖掘全局图像伪造嵌入和细粒度噪声伪造模式。

- 创新性的SPA方法能强调相关负样本对并抑制不相关样本对,实现跨模态样本对的灵活对齐。

点此查看论文截图