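⚠️ All of the summaries below were generated by a large language model and may contain errors. They are for reference only; use them with caution.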

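🔴 Note: never use these summaries in serious academic contexts. They are only meant for preliminary screening before you read the papers!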

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-06-13

DIsoN: Decentralized Isolation Networks for Out-of-Distribution Detection in Medical Imaging

Authors: Felix Wagner, Pramit Saha, Harry Anthony, J. Alison Noble, Konstantinos Kamnitsas

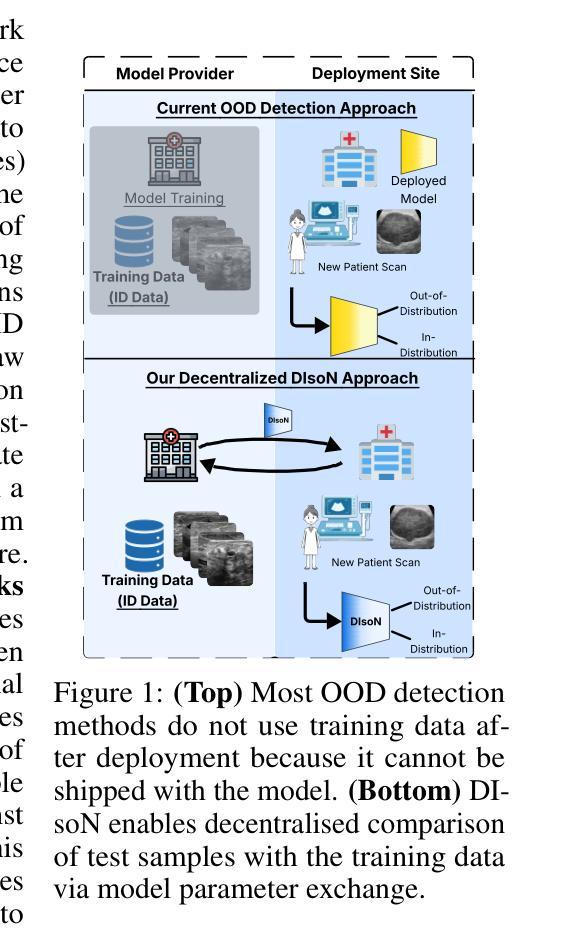

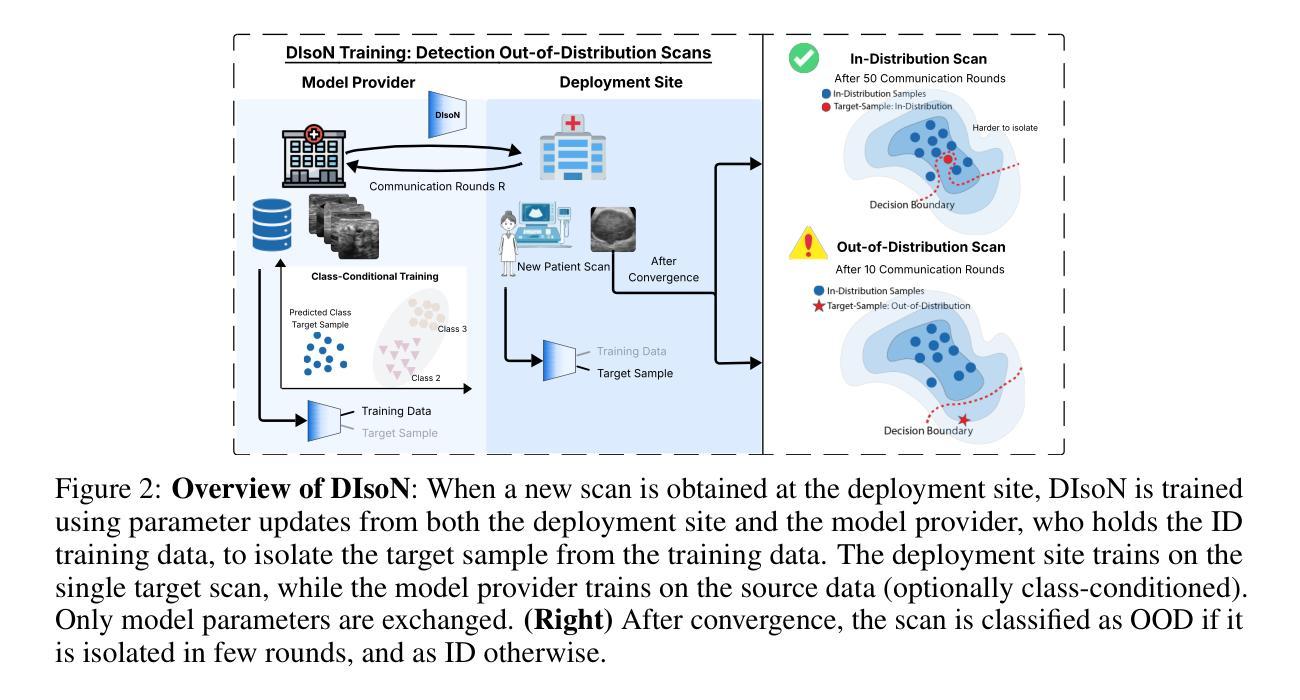

Safe deployment of machine learning (ML) models in safety-critical domains such as medical imaging requires detecting inputs with characteristics not seen during training, known as out-of-distribution (OOD) detection, to prevent unreliable predictions. Effective OOD detection after deployment could benefit from access to the training data, enabling direct comparison between test samples and the training data distribution to identify differences. State-of-the-art OOD detection methods, however, either discard training data after deployment or assume that test samples and training data are centrally stored together, an assumption that rarely holds in real-world settings. This is because shipping training data with the deployed model is usually impossible due to the size of training databases, as well as proprietary or privacy constraints. We introduce the Isolation Network, an OOD detection framework that quantifies the difficulty of separating a target test sample from the training data by solving a binary classification task. We then propose Decentralized Isolation Networks (DIsoN), which enables the comparison of training and test data when data-sharing is impossible, by exchanging only model parameters between the remote computational nodes of training and deployment. We further extend DIsoN with class-conditioning, comparing a target sample solely with training data of its predicted class. We evaluate DIsoN on four medical imaging datasets (dermatology, chest X-ray, breast ultrasound, histopathology) across 12 OOD detection tasks. DIsoN performs favorably against existing methods while respecting data-privacy. This decentralized OOD detection framework opens the way for a new type of service that ML developers could provide along with their models: providing remote, secure utilization of their training data for OOD detection services. Code will be available upon acceptance at: *****
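
The Isolation Network idea of "the difficulty of separating a target test sample from the training data" can be made concrete with a toy sketch. It assumes that difficulty is measured as the number of gradient steps a binary classifier needs before it assigns the target to the positive class. This is an assumption, not something confirmed by the abstract. A logistic regression stands in for the neural network, the single target is up-weighted so it carries the same total weight as the whole training set, and `isolation_score` (including its `predicted_class` filter for class-conditioning) is a hypothetical helper.

```python
import math
from typing import List, Optional, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def isolation_score(
    target: Sequence[float],
    train: List[Sequence[float]],
    train_labels: Optional[List[int]] = None,
    predicted_class: Optional[int] = None,
    lr: float = 0.5,
    max_steps: int = 100,
) -> int:
    """Hypothetical helper: count gradient steps until a logistic-regression
    classifier puts `target` (label 1) on the positive side while all training
    samples carry label 0. Fewer steps = easier to isolate = more likely OOD."""
    # Class-conditioning: compare only against training data of the predicted class
    if train_labels is not None and predicted_class is not None:
        train = [x for x, y in zip(train, train_labels) if y == predicted_class]
    n = len(train)
    w = [0.0] * len(target)
    b = 0.0
    for step in range(1, max_steps + 1):
        # Balanced loss: the single target gets the same total weight (0.5)
        # as the whole training set, so it is not drowned out
        err_t = sigmoid(sum(wi * ti for wi, ti in zip(w, target)) + b) - 1.0
        grad_w = [0.5 * err_t * ti for ti in target]
        grad_b = 0.5 * err_t
        for x in train:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)  # label 0
            for j, xj in enumerate(x):
                grad_w[j] += 0.5 * err * xj / n
            grad_b += 0.5 * err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
        # Stop as soon as the target is isolated (classified as positive)
        if sigmoid(sum(wi * ti for wi, ti in zip(w, target)) + b) > 0.5:
            return step
    return max_steps  # never isolated within the budget: looks in-distribution
```

Take four training points at (±1, ±1). The target (5, 5) is isolated after a single step. The target (0, 0) sits at the center of the training data, so a linear classifier never isolates it and the function returns `max_steps`.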

Summary

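In safety-critical domains such as medical imaging, deploying machine learning models requires detecting inputs with characteristics not seen during training, so-called out-of-distribution (OOD) detection, to prevent unreliable predictions. Effective post-deployment OOD detection can benefit from access to the training data, which allows a direct comparison between test samples and the training data distribution to identify differences. However, current mainstream OOD detection methods either discard the training data after deployment or assume that test samples and training data are stored centrally. This is rarely the case in real-world settings, because the size of training databases and proprietary or privacy constraints usually make it impossible to ship the training data with the deployed model. We introduce the Isolation Network, an OOD detection framework that quantifies the difficulty of separating a target test sample from the training data by solving a binary classification task. We then propose Decentralized Isolation Networks (DIsoN). When data sharing is impossible, DIsoN performs this comparison between the remote computational nodes of training and deployment by exchanging only model parameters. We further extend DIsoN with class-conditioning, comparing a target sample only with training data of its predicted class. We evaluate DIsoN on four medical imaging datasets (dermatology, chest X-ray, breast ultrasound, histopathology) across 12 OOD detection tasks. DIsoN performs well while respecting data privacy. This decentralized OOD detection framework offers ML developers a new type of service: alongside their models, they can provide remote, secure use of their training data for OOD detection.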
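
DIsoN's key constraint is that raw data never leaves either node; only model parameters are exchanged. The sketch below assumes a federated-averaging-style protocol. Each round, the training node and the deployment node take local gradient steps on their own private data, starting from the shared parameters. They send back only the updated parameters, and the two parameter sets are averaged. This protocol is our assumption, and the paper's exact aggregation, weighting and stopping rule may differ. `local_update` and `dison_rounds` are hypothetical names, and a logistic regression again stands in for a neural network.

```python
import math
from typing import List, Sequence, Tuple

Params = Tuple[List[float], float]  # (weights, bias) of a logistic-regression model


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def local_update(params: Params, data: List[Sequence[float]], label: int,
                 lr: float, local_steps: int) -> Params:
    """Runs on one node: gradient steps on that node's private data only."""
    w, b = list(params[0]), params[1]
    n = len(data)
    for _ in range(local_steps):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - label
            for j, xj in enumerate(x):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def dison_rounds(target: Sequence[float], train: List[Sequence[float]],
                 lr: float = 0.5, local_steps: int = 1, max_rounds: int = 100) -> int:
    """Hypothetical decentralized isolation score: communication rounds until
    the shared model classifies the target as positive. Only parameters move."""
    params: Params = ([0.0] * len(target), 0.0)
    for rnd in range(1, max_rounds + 1):
        # Training node: training data labeled 0, never leaves this node
        w_tr, b_tr = local_update(params, train, 0, lr, local_steps)
        # Deployment node: the single target labeled 1, never leaves this node
        w_dep, b_dep = local_update(params, [target], 1, lr, local_steps)
        # Aggregation sees only parameters; equal weights balance one target
        # against the whole training set
        params = ([(a + c) / 2 for a, c in zip(w_tr, w_dep)], (b_tr + b_dep) / 2)
        z = sum(wi * ti for wi, ti in zip(params[0], target)) + params[1]
        if sigmoid(z) > 0.5:
            return rnd
    return max_rounds
```

With `local_steps=1` and equal-weight averaging, each round equals one gradient step on a loss that gives the single target the same total weight as the whole training set. In that setting, the decentralized round count matches a centralized step count. More local steps per round reduce communication, but they give up that exact equivalence.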

Key Takeaways

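- Deploying machine learning in safety-critical domains such as medical imaging requires detecting inputs outside the training data distribution (OOD).

- Existing OOD detection methods face challenges in real-world settings, such as the training data being unavailable or the assumption of centrally stored data not holding.

- The Isolation Network framework is introduced; it quantifies how hard it is to separate a test sample from the training data via a binary classification task.

- Decentralized Isolation Networks (DIsoN) are proposed to compare training and test data when data cannot be shared, by exchanging only model parameters.

- DIsoN is extended with class-conditioning, comparing the target sample only with training data of its predicted class.

- DIsoN is evaluated on four medical imaging datasets, performing well while respecting data privacy.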

Adapting Vision-Language Foundation Model for Next Generation Medical Ultrasound Image Analysis

Authors: Jingguo Qu, Xinyang Han, Tonghuan Xiao, Jia Ai, Juan Wu, Tong Zhao, Jing Qin, Ann Dorothy King, Winnie Chiu-Wing Chu, Jing Cai, Michael Tin-Cheung Ying

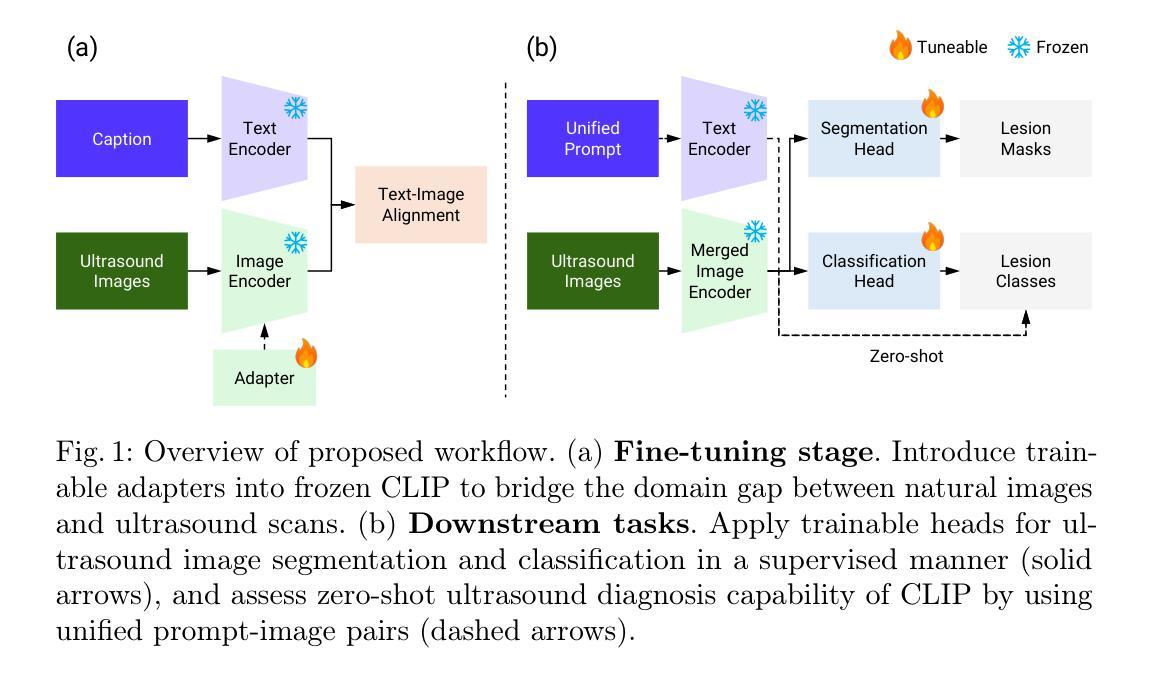

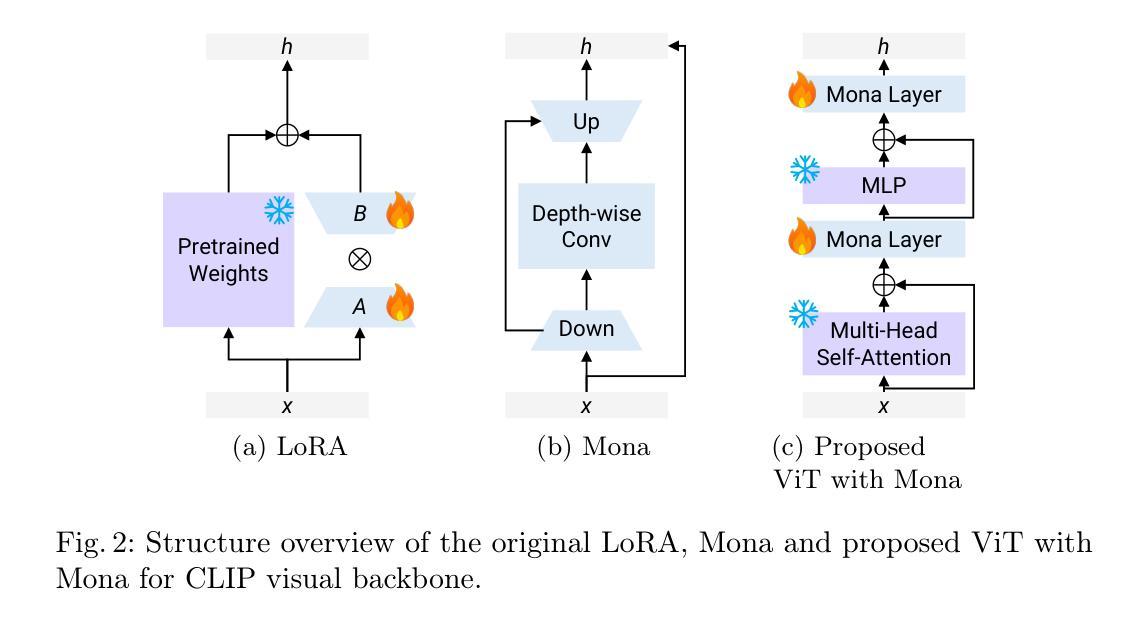

Medical ultrasonography is an essential imaging technique for examining superficial organs and tissues, including lymph nodes, breast, and thyroid. It employs high-frequency ultrasound waves to generate detailed images of the internal structures of the human body. However, manually contouring regions of interest in these images is a labor-intensive task that demands expertise and often results in inconsistent interpretations among individuals. Vision-language foundation models, which have excelled in various computer vision applications, present new opportunities for enhancing ultrasound image analysis. Yet, their performance is hindered by the significant differences between natural and medical imaging domains. This research seeks to overcome these challenges by developing domain adaptation methods for vision-language foundation models. In this study, we explore the fine-tuning pipeline for vision-language foundation models by utilizing a large language model as a text refiner with specially designed adaptation strategies and task-driven heads. Our approach has been extensively evaluated on six ultrasound datasets and two tasks: segmentation and classification. The experimental results show that our method can effectively improve the performance of vision-language foundation models for ultrasound image analysis and outperform the existing state-of-the-art vision-language and pure foundation models. The source code of this study is available at https://github.com/jinggqu/NextGen-UIA.
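
Vision-language models commonly score an image for classification by comparing its image embedding with text embeddings of class descriptions. These descriptions could, for instance, be the ones rewritten by an LLM text refiner. A softmax is then applied over the cosine similarities, as in CLIP's zero-shot classification. The sketch below shows only that generic scoring step. It is not the authors' task-driven head, and the embeddings, the class labels and the `temperature` value are hypothetical placeholders.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify(
    image_emb: Sequence[float],
    text_embs: Dict[str, Sequence[float]],
    temperature: float = 0.01,
) -> Dict[str, float]:
    """CLIP-style scoring: softmax over image-text cosine similarities."""
    labels = list(text_embs)
    logits = [cosine(image_emb, text_embs[c]) / temperature for c in labels]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {c: e / total for c, e in zip(labels, exps)}


# Hypothetical toy embeddings; a real model would produce these from an
# ultrasound image and from (LLM-refined) class descriptions.
text_embs = {"benign": [1.0, 0.0, 0.0], "malignant": [0.0, 1.0, 0.0]}
probs = classify([0.9, 0.1, 0.0], text_embs)
print(max(probs, key=probs.get))  # benign
```

A small `temperature` sharpens the softmax. CLIP learns this scale during training rather than fixing it by hand.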

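Summary: Medical ultrasound is an important imaging technique for examining superficial organs and tissues, including lymph nodes, breast, and thyroid. However, manually contouring regions of interest in these images is labor-intensive, requires expert skill, and is prone to inconsistent interpretations between individuals. This study aims to overcome these challenges by developing domain adaptation methods for vision-language foundation models. It fine-tunes vision-language foundation models using a large language model as a text refiner, together with specially designed adaptation strategies and task-driven heads. The method was extensively evaluated on six ultrasound datasets and two tasks (segmentation and classification). The results show that it effectively improves the performance of vision-language foundation models for ultrasound image analysis and outperforms existing state-of-the-art vision-language and pure foundation models.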

Key Takeaways:

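- Medical ultrasound is an important imaging technique for examining superficial organs and tissues.

- Manually contouring regions of interest in ultrasound images is labor-intensive and leads to inconsistent interpretations.

- The study addresses these challenges with vision-language foundation models, developing domain adaptation methods to improve their performance.

- A large language model serves as a text refiner, and vision-language foundation models are fine-tuned with specially designed adaptation strategies and task-driven heads.

- The method was extensively evaluated on multiple ultrasound datasets for segmentation and classification.

- Experimental results show that the method effectively improves model performance in ultrasound image analysis.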

点此查看论文截图