⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-06-17 更新

Motion-R1: Chain-of-Thought Reasoning and Reinforcement Learning for Human Motion Generation

Authors:Runqi Ouyang, Haoyun Li, Zhenyuan Zhang, Xiaofeng Wang, Zheng Zhu, Guan Huang, Xingang Wang

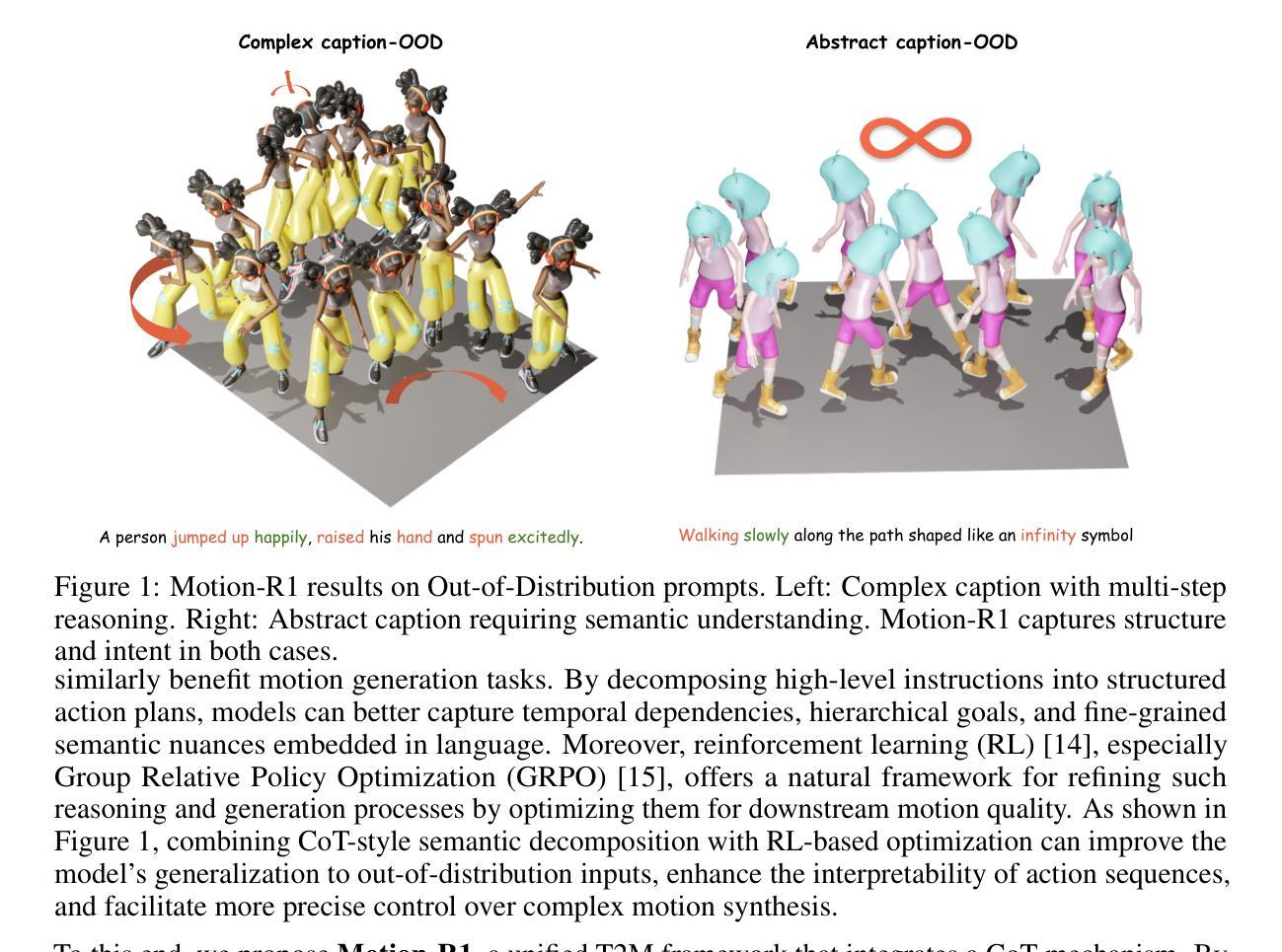

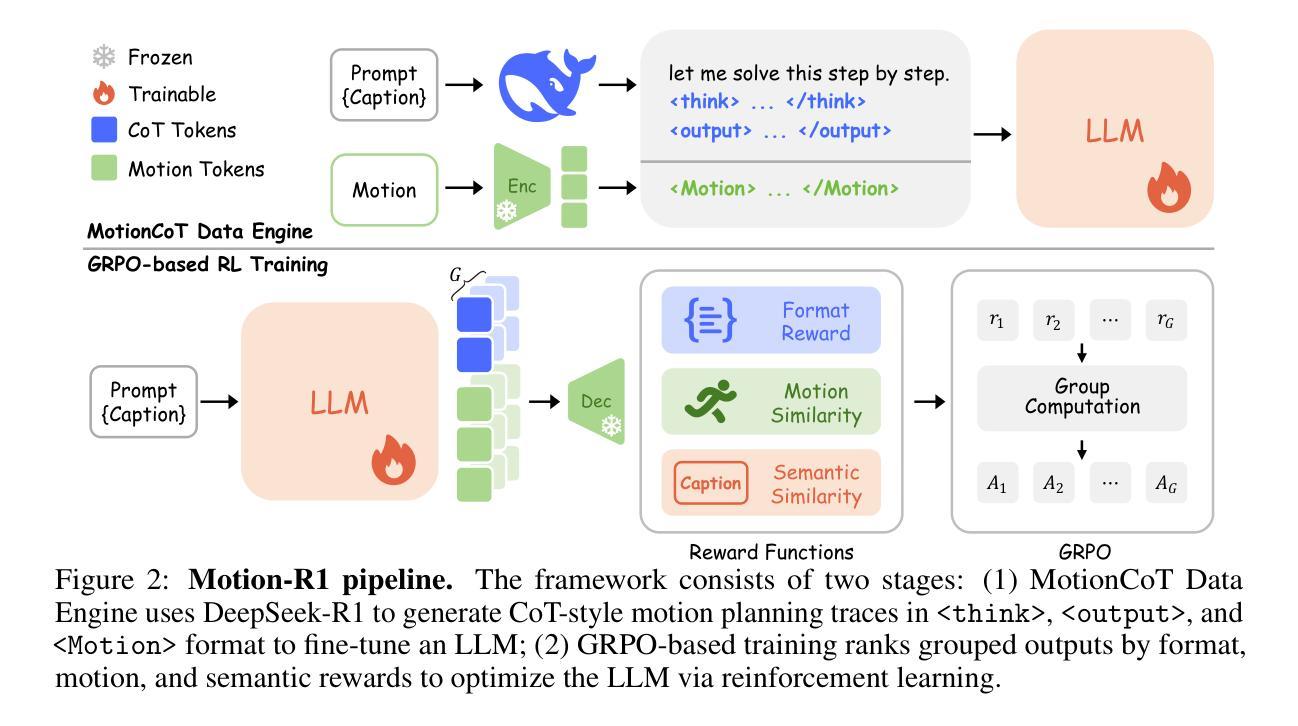

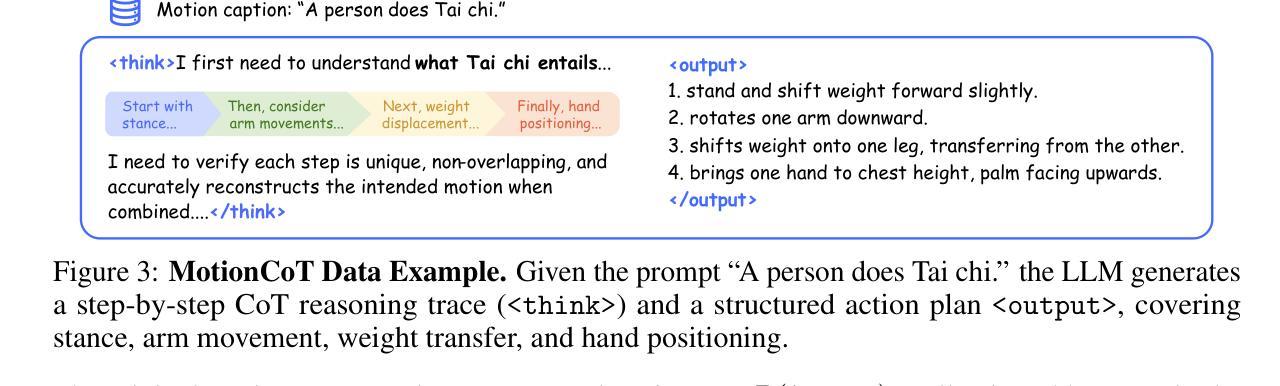

Recent advances in large language models, especially in natural language understanding and reasoning, have opened new possibilities for text-to-motion generation. Although existing approaches have made notable progress in semantic alignment and motion synthesis, they often rely on end-to-end mapping strategies that fail to capture deep linguistic structures and logical reasoning. Consequently, generated motions tend to lack controllability, consistency, and diversity. To address these limitations, we propose Motion-R1, a unified motion-language modeling framework that integrates a Chain-of-Thought mechanism. By explicitly decomposing complex textual instructions into logically structured action paths, Motion-R1 provides high-level semantic guidance for motion generation, significantly enhancing the model’s ability to interpret and execute multi-step, long-horizon, and compositionally rich commands. To train our model, we adopt Group Relative Policy Optimization, a reinforcement learning algorithm designed for large models, which leverages motion quality feedback to optimize reasoning chains and motion synthesis jointly. Extensive experiments across multiple benchmark datasets demonstrate that Motion-R1 achieves competitive or superior performance compared to state-of-the-art methods, particularly in scenarios requiring nuanced semantic understanding and long-term temporal coherence. The code, model and data will be publicly available.

近期大型语言模型的进展,特别是在自然语言理解和推理方面,为文本到动作生成领域带来了新的可能性。尽管现有方法已经在语义对齐和运动合成方面取得了显著的进步,但它们通常依赖于端到端的映射策略,无法捕捉深层语言结构和逻辑推理。因此,生成的动作往往缺乏可控性、一致性和多样性。为了解决这些局限性,我们提出了Motion-R1,这是一个统一的动作语言建模框架,集成了Chain-of-Thought机制。通过显式地将复杂的文本指令分解为逻辑结构化的动作路径,Motion-R1为动作生成提供高级语义指导,显著提高了模型解释和执行多步骤、长期和组合丰富指令的能力。为了训练我们的模型,我们采用了针对大型模型的强化学习算法——Group Relative Policy Optimization,该算法利用动作质量反馈来联合优化推理链和动作合成。在多个基准数据集上的广泛实验表明,Motion-R1在需要微妙语义理解和长期时间连贯性的场景中,实现了与最先进方法相当的或更好的性能。代码、模型和数据将公开可用。

论文及项目相关链接

Summary

本文介绍了基于大型语言模型的文本到动作生成技术的新进展。针对现有方法的局限性,提出了一种名为Motion-R1的统一运动语言建模框架,该框架集成了链式思维机制,能够明确地将复杂文本指令分解为逻辑结构化的行动路径,为动作生成提供高级语义指导。采用针对大型模型的强化学习算法——群组相对策略优化,通过动作质量反馈联合优化推理链和动作合成。实验表明,Motion-R1在多个基准数据集上实现了与最新技术相比具有竞争力的性能,特别是在需要微妙语义理解和长期时间连贯性的场景中。

Key Takeaways

- 大型语言模型在文本到动作生成领域的新进展及其在自然语言理解和推理方面的应用。

- 现有方法在语义对齐和动作合成方面的进展以及它们依赖的端到端映射策略的局限性。

- Motion-R1框架的提出,通过链式思维机制明确分解复杂文本指令为逻辑结构化的行动路径,为动作生成提供高级语义指导。

- 采用群组相对策略优化这一强化学习算法,通过动作质量反馈联合优化推理链和动作合成。

- Motion-R1框架在多个基准数据集上的表现,与最新技术相比具有竞争力。

- Motion-R1框架特别适用于需要微妙语义理解和长期时间连贯性的场景。

点此查看论文截图