⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-06-26 更新

Self-Supervised Multimodal NeRF for Autonomous Driving

Authors:Gaurav Sharma, Ravi Kothari, Josef Schmid

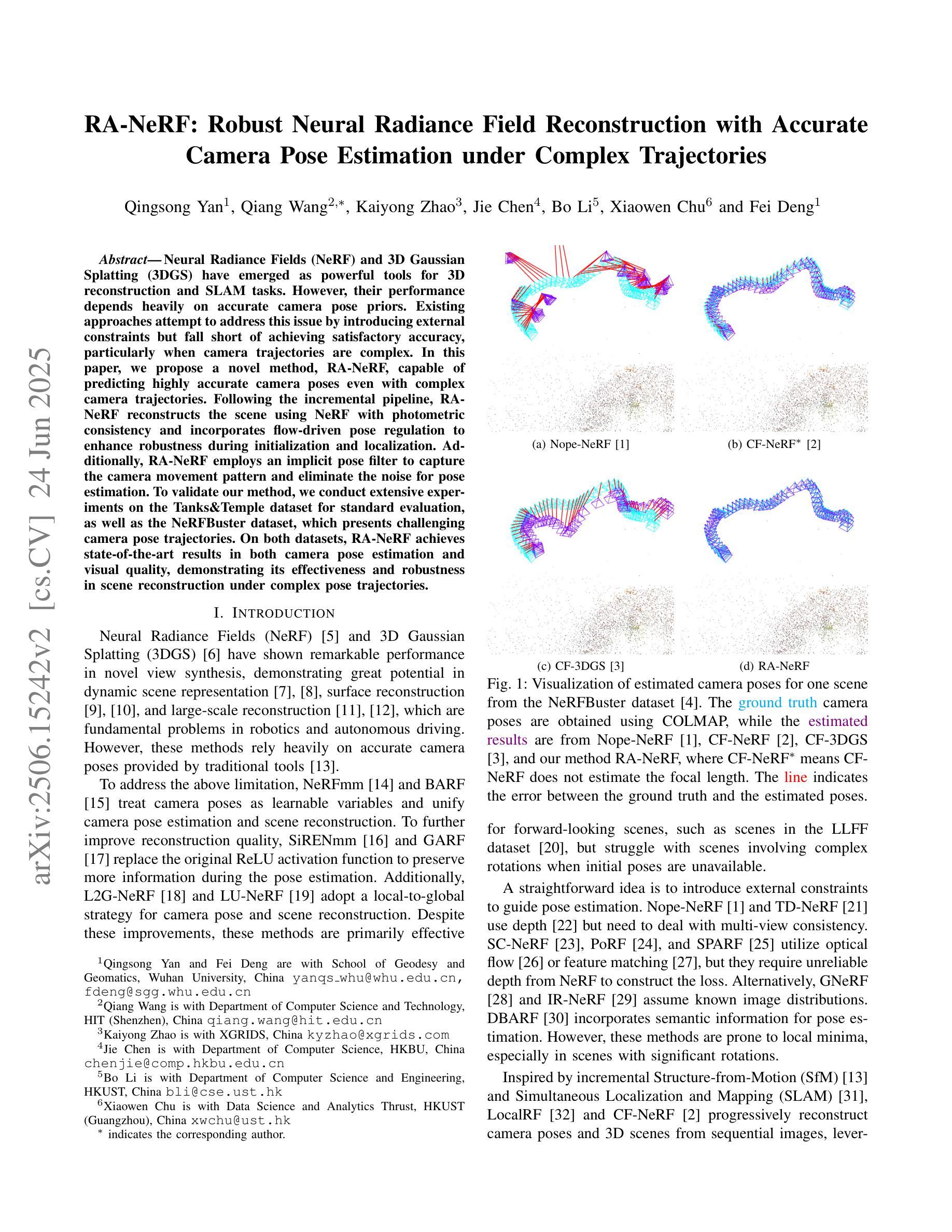

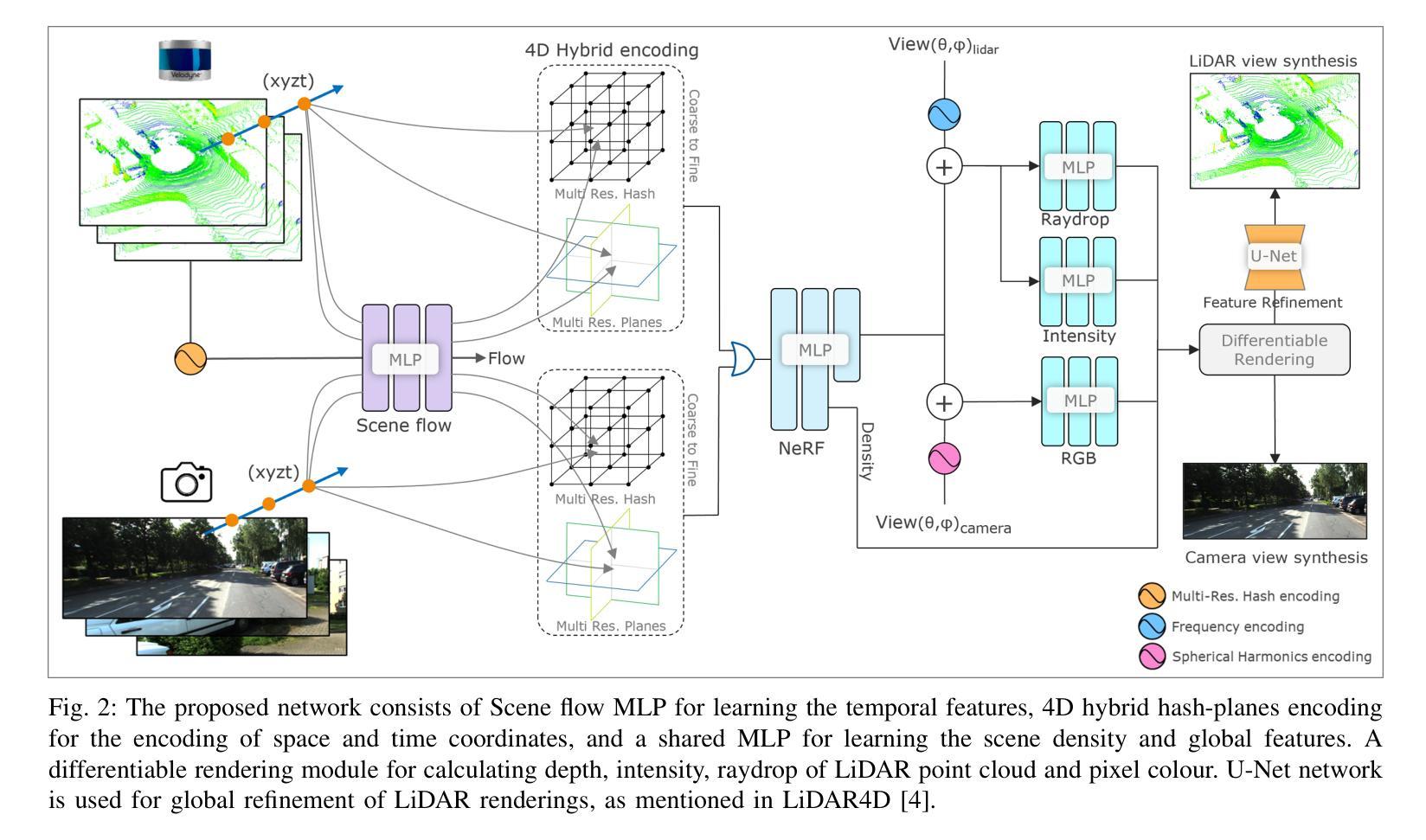

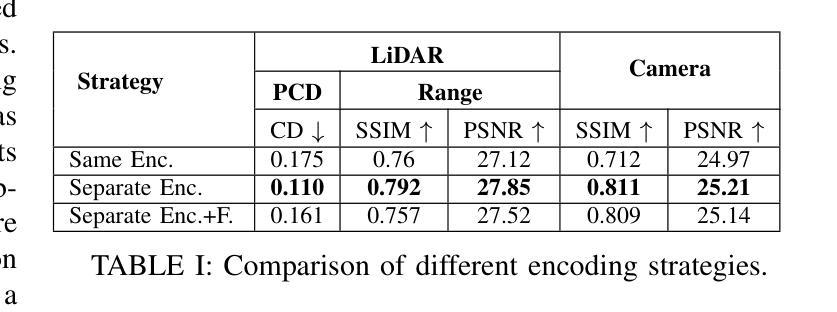

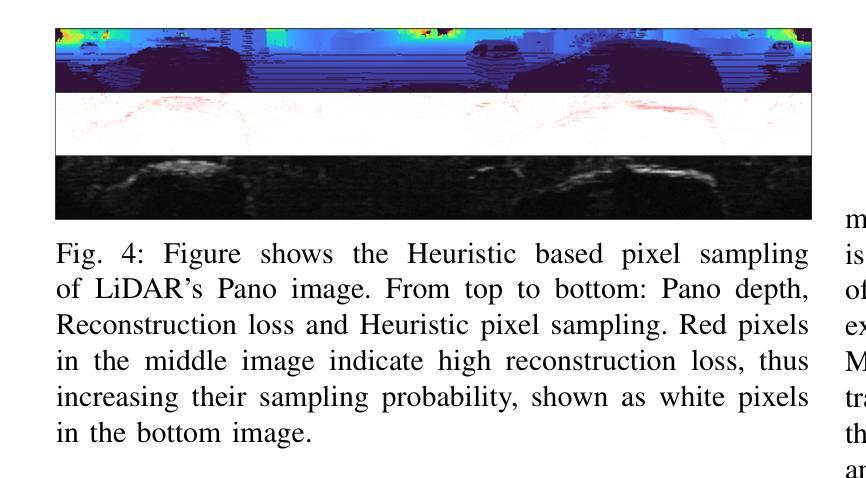

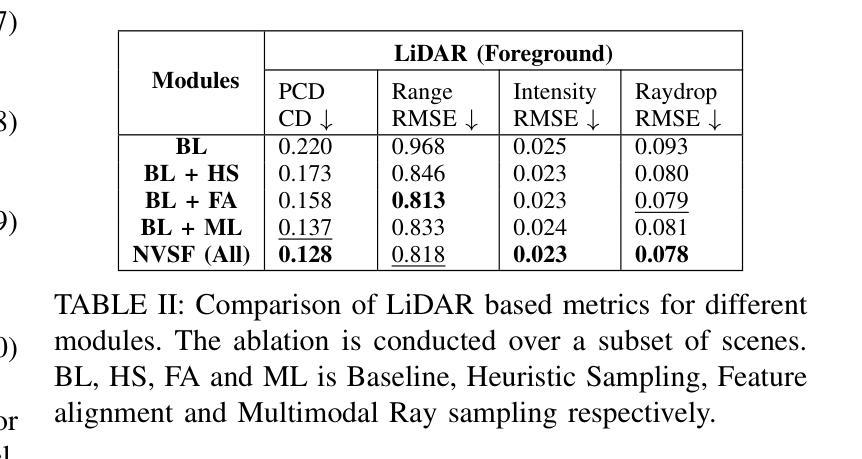

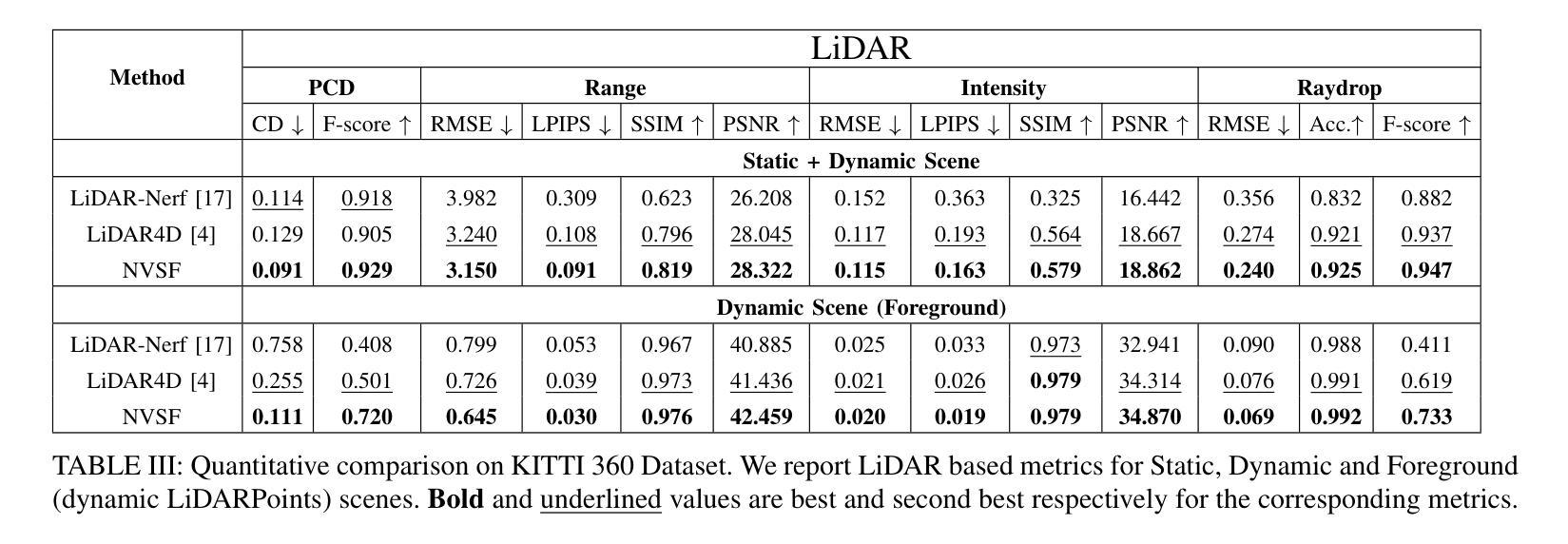

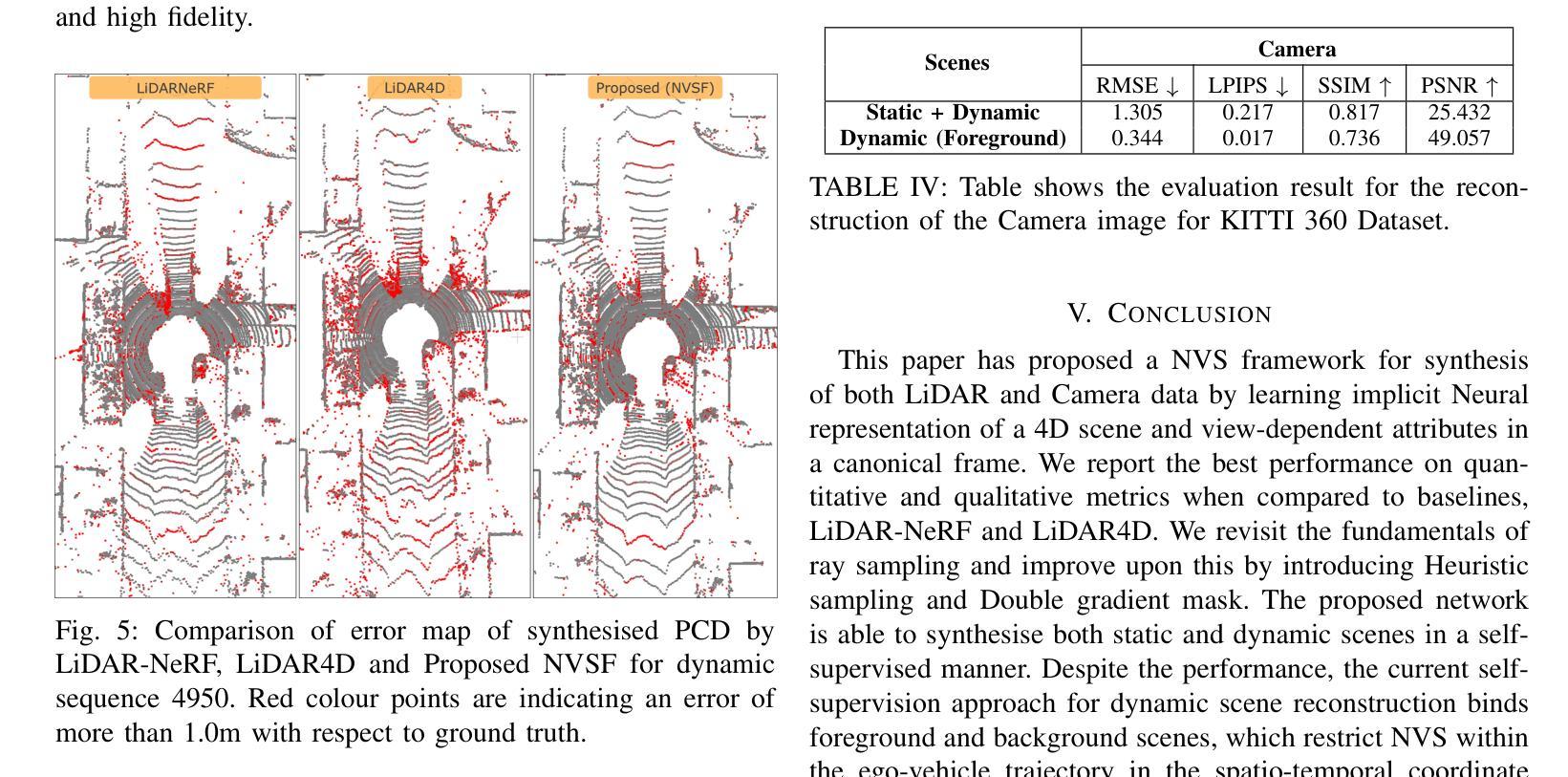

In this paper, we propose a Neural Radiance Fields (NeRF) based framework, referred to as Novel View Synthesis Framework (NVSF). It jointly learns the implicit neural representation of space and time-varying scene for both LiDAR and Camera. We test this on a real-world autonomous driving scenario containing both static and dynamic scenes. Compared to existing multimodal dynamic NeRFs, our framework is self-supervised, thus eliminating the need for 3D labels. For efficient training and faster convergence, we introduce heuristic-based image pixel sampling to focus on pixels with rich information. To preserve the local features of LiDAR points, a Double Gradient based mask is employed. Extensive experiments on the KITTI-360 dataset show that, compared to the baseline models, our framework has reported best performance on both LiDAR and Camera domain. Code of the model is available at https://github.com/gaurav00700/Selfsupervised-NVSF

在这篇论文中,我们提出了一个基于神经辐射场(NeRF)的框架,称为新型视图合成框架(NVSF)。该框架联合学习激光雷达和相机的时空变化场景的隐式神经表示。我们在包含静态和动态场景的真实世界自动驾驶场景中对其实行了测试。与现有的多模式动态NeRF相比,我们的框架是自我监督的,从而不需要3D标签。为了进行高效的训练和更快的收敛,我们引入了基于启发式图像像素采样,以关注信息丰富的像素。为了保留激光雷达点的局部特征,采用了基于双重梯度的掩膜。在KITTI-360数据集上的广泛实验表明,与基准模型相比,我们的框架在激光雷达和相机领域都取得了最佳性能。模型代码可在https://github.com/gaurav00700/Selfsupervised-NVSF找到。

论文及项目相关链接

Summary

NeRF技术为基础,提出名为NVSF的新型视图合成框架,用于联合学习激光雷达和相机的时空变化场景的隐式神经表示。采用自监督学习方式,无需3D标签。通过启发式图像像素采样提高训练效率和收敛速度,采用双重梯度掩膜保留激光雷达点的局部特征。在KITTI-360数据集上的实验表明,与基准模型相比,该框架在激光雷达和相机领域均达到最佳性能。

Key Takeaways

- 提出了基于NeRF的NVSF框架,用于联合学习激光雷达和相机的时空变化场景的表示。

- 采用自监督学习方式,消除了对3D标签的需求。

- 引入启发式图像像素采样,以提高训练效率和收敛速度。

- 采用双重梯度掩膜技术,保留激光雷达点的局部特征。

- 在KITTI-360数据集上进行了广泛实验,表明NVSF框架性能优越。

- 该框架对静态和动态场景均适用。

点此查看论文截图

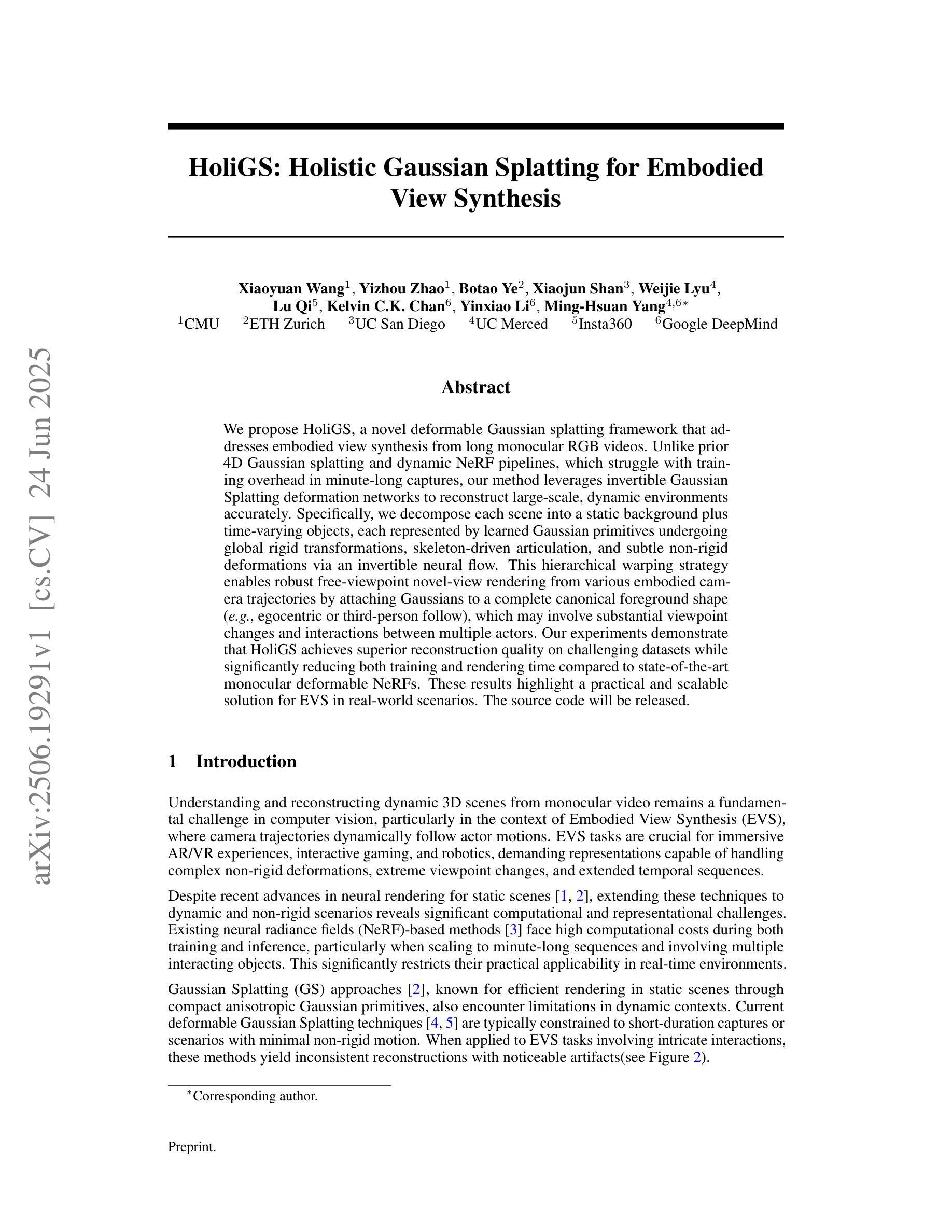

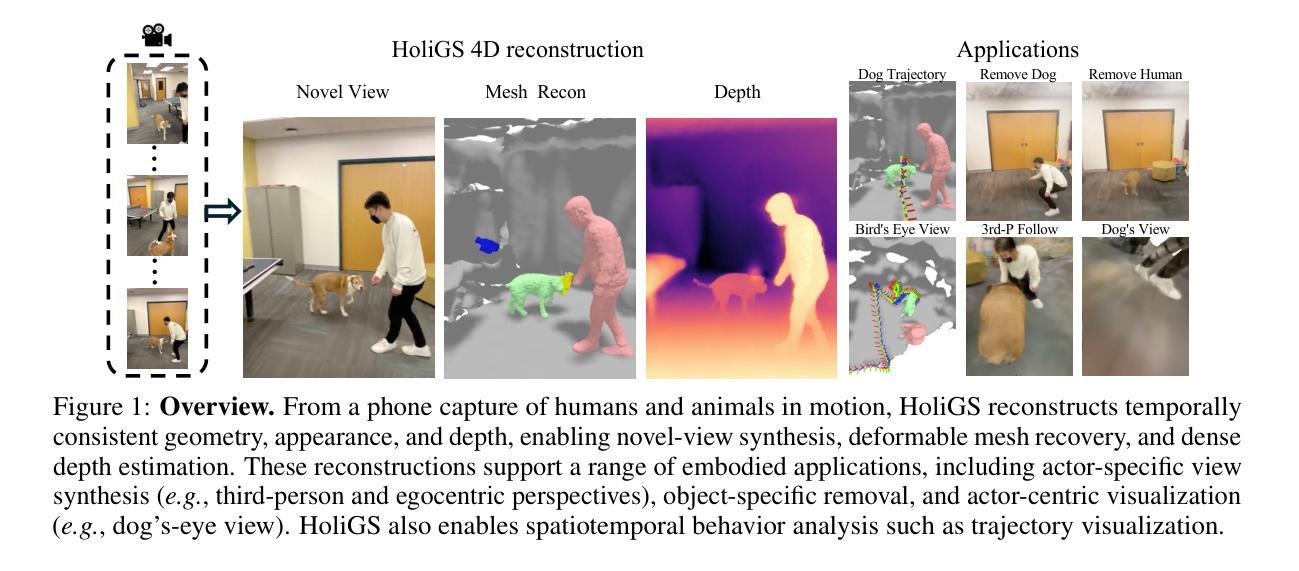

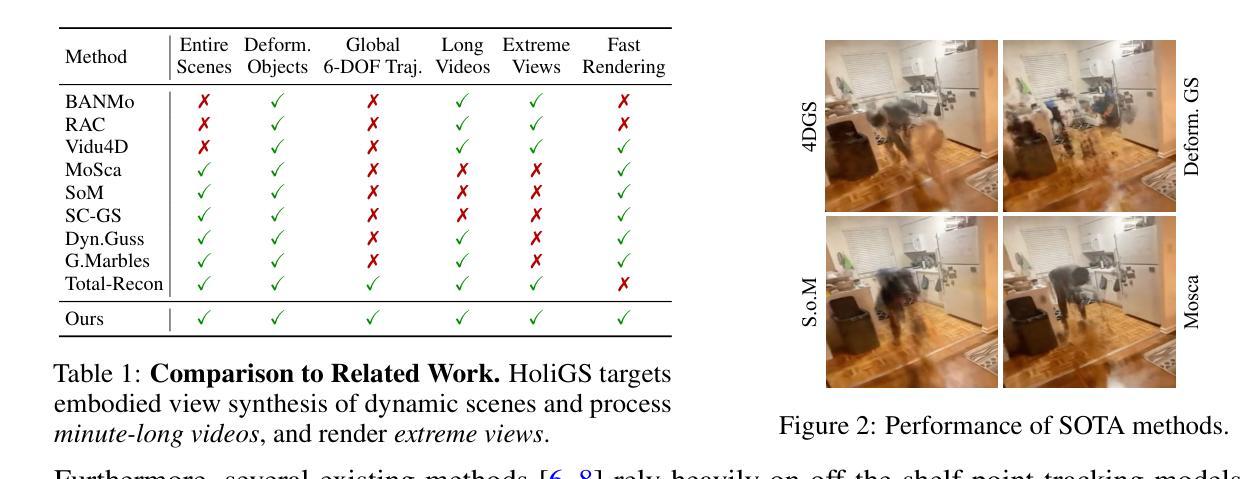

HoliGS: Holistic Gaussian Splatting for Embodied View Synthesis

Authors:Xiaoyuan Wang, Yizhou Zhao, Botao Ye, Xiaojun Shan, Weijie Lyu, Lu Qi, Kelvin C. K. Chan, Yinxiao Li, Ming-Hsuan Yang

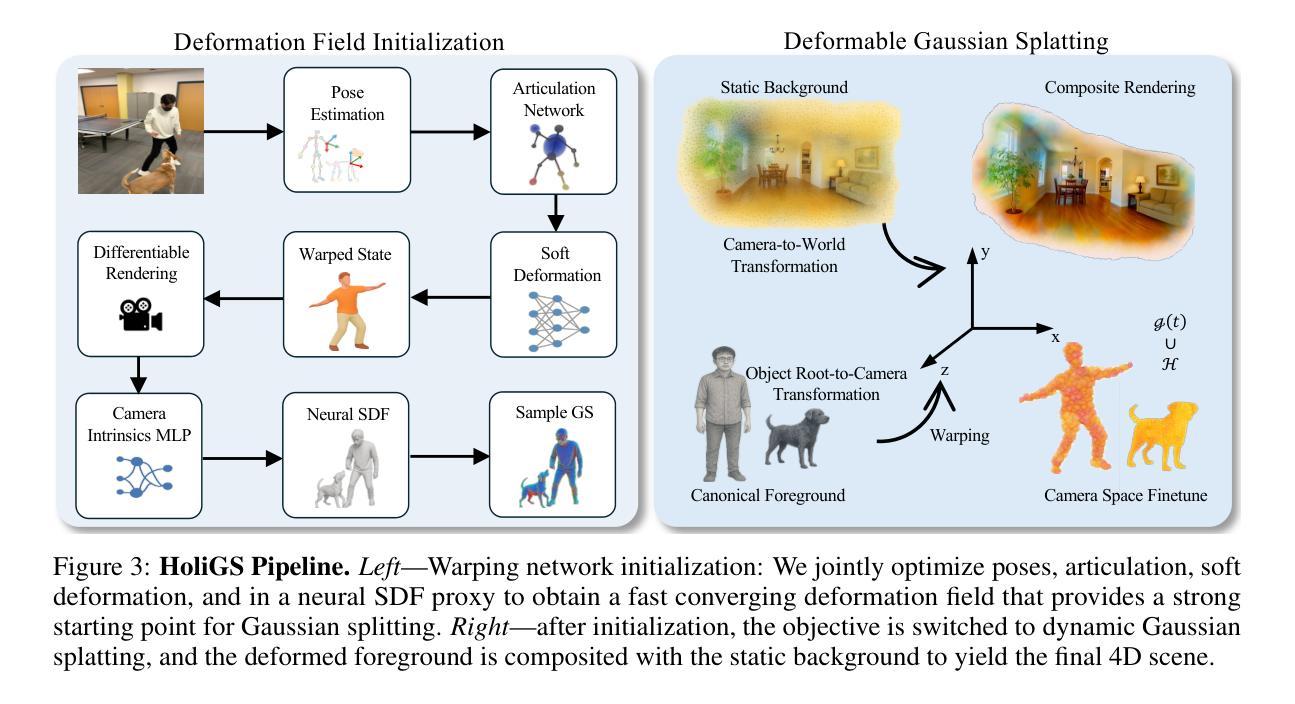

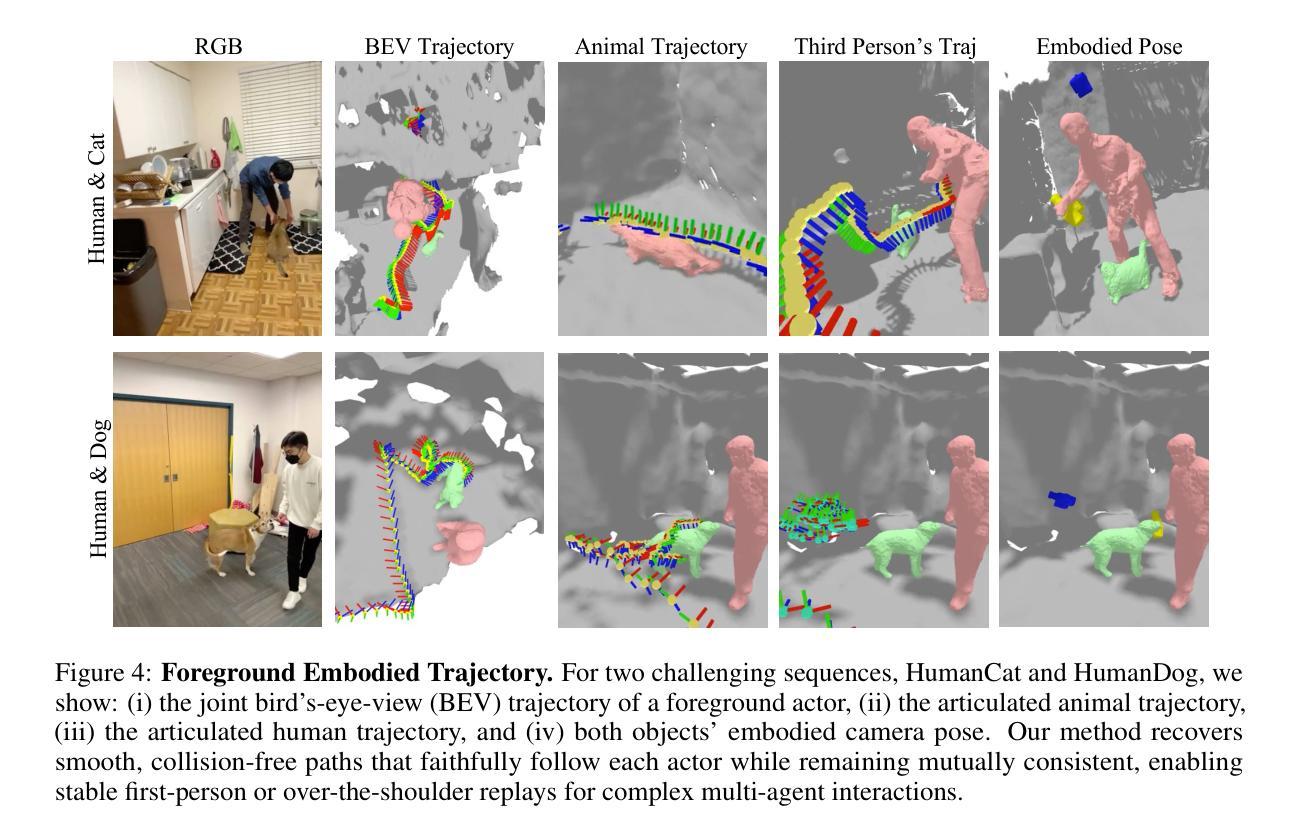

We propose HoliGS, a novel deformable Gaussian splatting framework that addresses embodied view synthesis from long monocular RGB videos. Unlike prior 4D Gaussian splatting and dynamic NeRF pipelines, which struggle with training overhead in minute-long captures, our method leverages invertible Gaussian Splatting deformation networks to reconstruct large-scale, dynamic environments accurately. Specifically, we decompose each scene into a static background plus time-varying objects, each represented by learned Gaussian primitives undergoing global rigid transformations, skeleton-driven articulation, and subtle non-rigid deformations via an invertible neural flow. This hierarchical warping strategy enables robust free-viewpoint novel-view rendering from various embodied camera trajectories by attaching Gaussians to a complete canonical foreground shape (\eg, egocentric or third-person follow), which may involve substantial viewpoint changes and interactions between multiple actors. Our experiments demonstrate that \ourmethod~ achieves superior reconstruction quality on challenging datasets while significantly reducing both training and rendering time compared to state-of-the-art monocular deformable NeRFs. These results highlight a practical and scalable solution for EVS in real-world scenarios. The source code will be released.

我们提出了HoliGS,这是一种新型的可变形高斯展布框架,用于从长单目RGB视频中合成全身视角。不同于之前面临长达数分钟捕捉时的训练开销问题的四维高斯展布和动态NeRF管道,我们的方法利用可逆高斯展布变形网络来精确重建大规模动态环境。具体来说,我们将每个场景分解为静态背景加上随时间变化的物体,每个物体都由经历全局刚体变换、骨架驱动的关节活动和通过可逆神经流产生的微妙非刚性变形的可学习高斯基本体表示。这种分层映射策略允许通过将高斯附着到完整的规范前景形状(例如以自我为中心或第三人称跟踪)来实现稳健的自由视点新型视图渲染,这可能涉及大量的视点变化和多个参与者之间的交互。我们的实验表明,在具有挑战性的数据集上,我们的方法实现了较高的重建质量,与最先进的单目可变形NeRF相比,显著减少了训练和渲染时间。这些结果强调了我们在现实世界的场景视图中实现实用和可扩展解决方案的实用性。源代码将发布。

论文及项目相关链接

Summary

本文提出一种名为HoliGS的新型可变形高斯喷绘框架,用于从长单目RGB视频中合成视图。相较于以往四维高斯喷绘和动态NeRF管道,在长达数分钟的捕捉中面临训练开销大的问题,我们的方法利用可逆高斯喷绘变形网络精确地重建大规模动态环境。通过分解场景为静态背景和时间变化物体,采用层次化变形策略来实现各种视角下视点的新型视图渲染。此外,高斯采样贴合完整的前台形状提供了更高效的可视化展示方式。实验结果展示了该方法在具有挑战性的数据集上达到了卓越的重构质量,并且相较于单目可变NeRF大大缩短了训练和渲染时间。这表明了该方法在实际和可量化的场景中实现了对EVS的有效解决方案。我们将公开源代码。

Key Takeaways

- HoliGS是一种新的可变形高斯喷绘框架,用于从长单目RGB视频中合成视图。

- 与其他方法相比,HoliGS利用可逆高斯喷绘变形网络精确重建大规模动态环境。

- HoliGS将场景分解为静态背景和时间变化物体,并采用层次化变形策略实现新颖视图渲染。

- 高斯采样贴合完整前台形状提供高效可视化展示方式。

- 实验结果表明HoliGS在挑战性数据集上实现卓越重构质量,且训练及渲染时间大幅降低。

- HoliGS提供实用且可扩展的解决方案,适用于真实世界场景中的EVS问题。

点此查看论文截图

RA-NeRF: Robust Neural Radiance Field Reconstruction with Accurate Camera Pose Estimation under Complex Trajectories

Authors:Qingsong Yan, Qiang Wang, Kaiyong Zhao, Jie Chen, Bo Li, Xiaowen Chu, Fei Deng

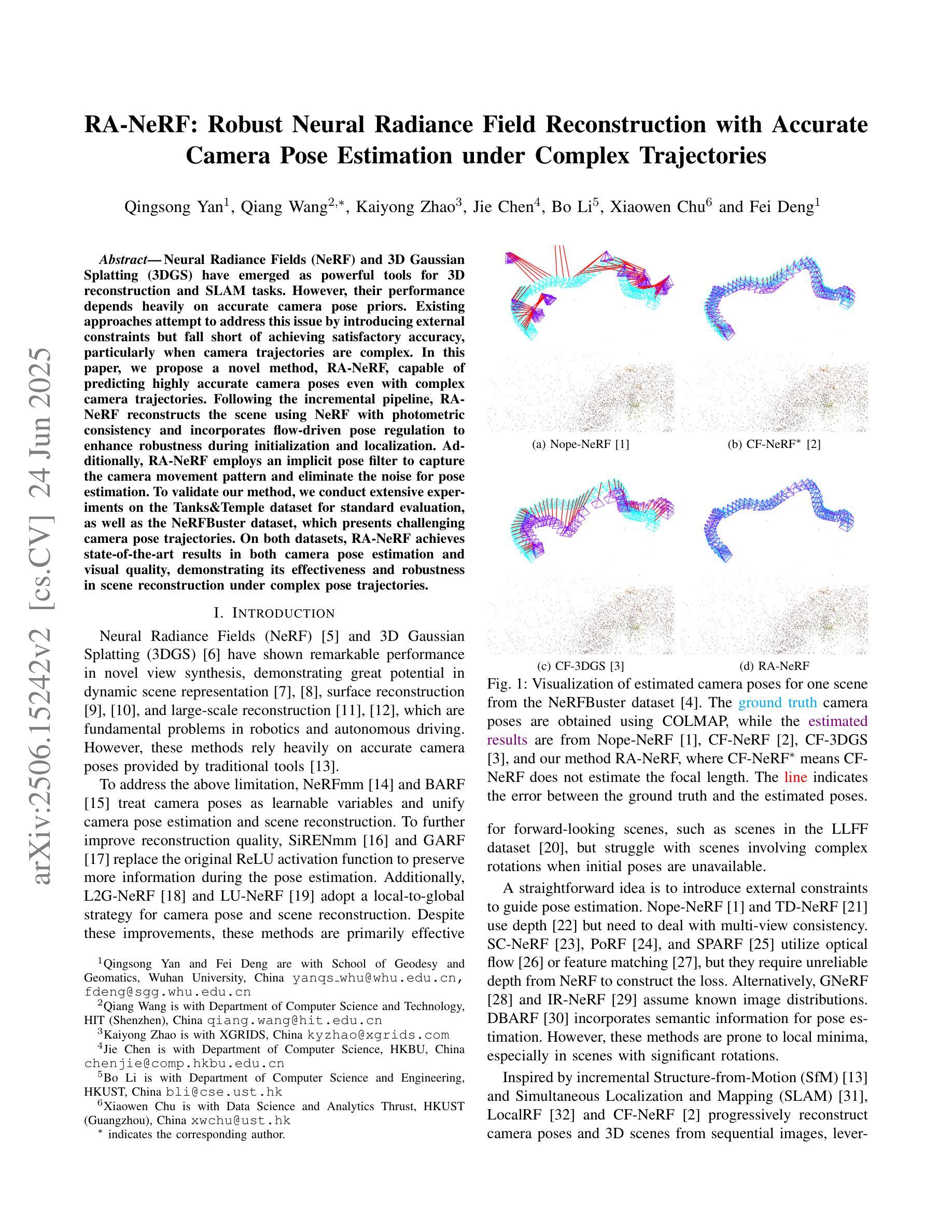

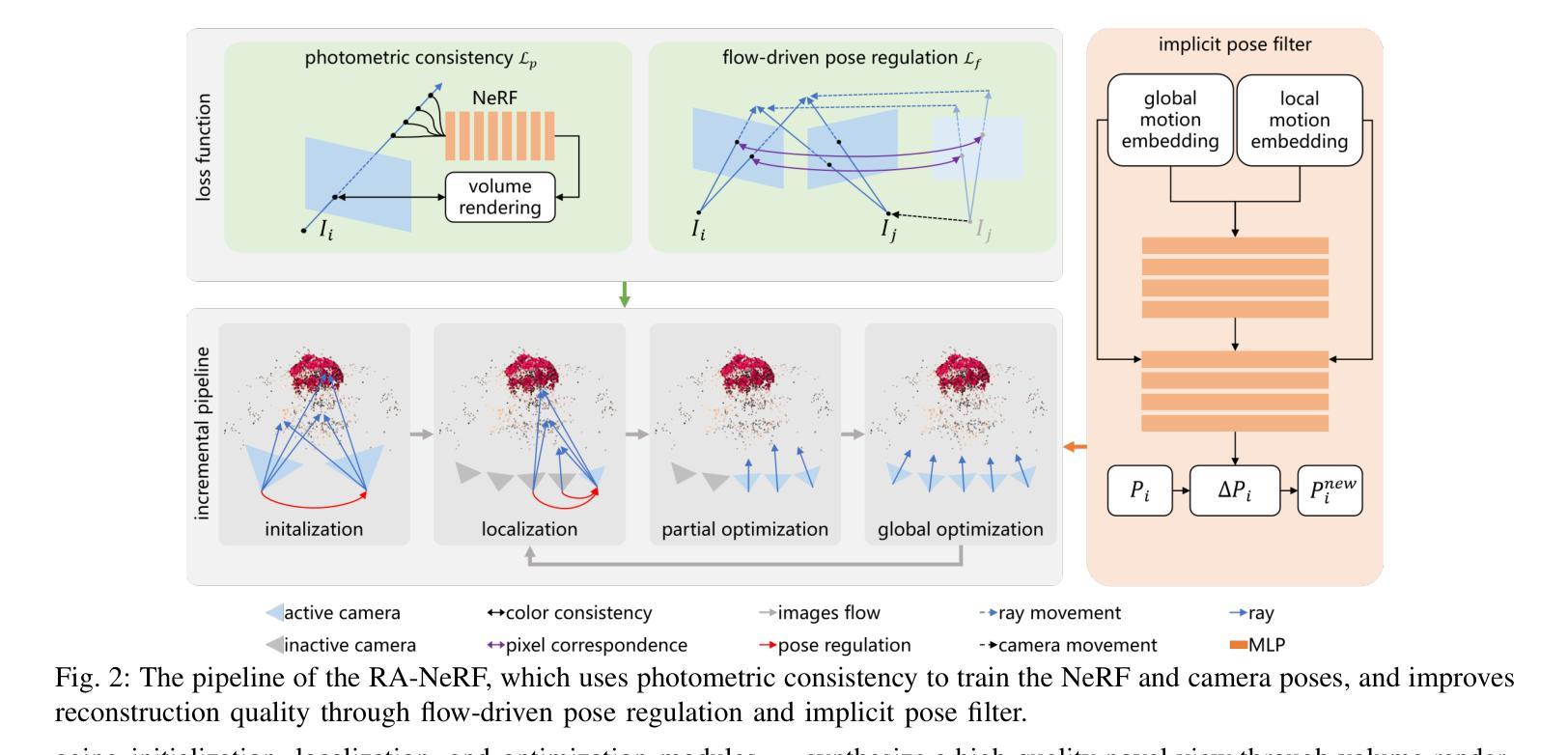

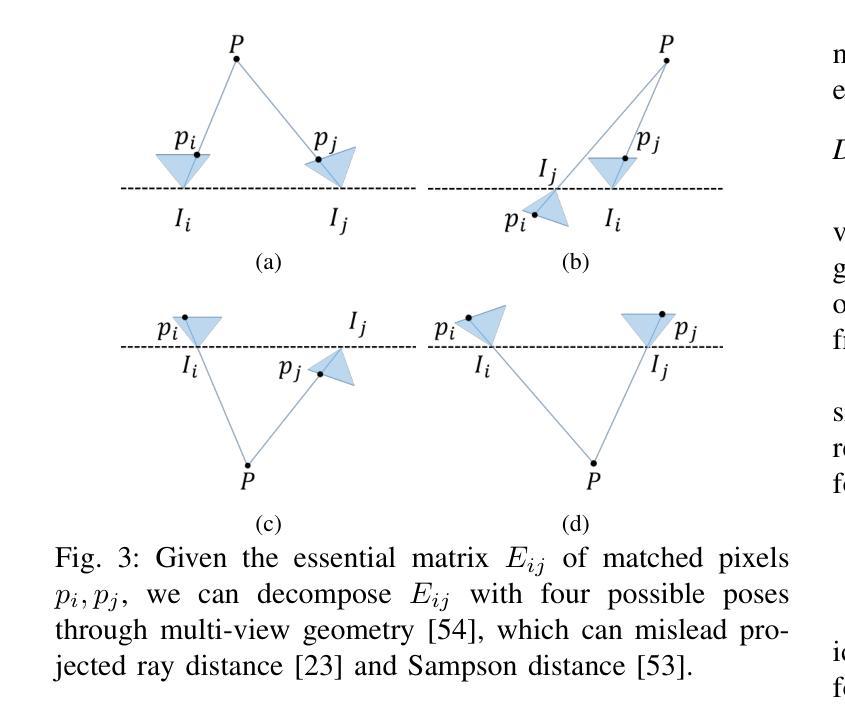

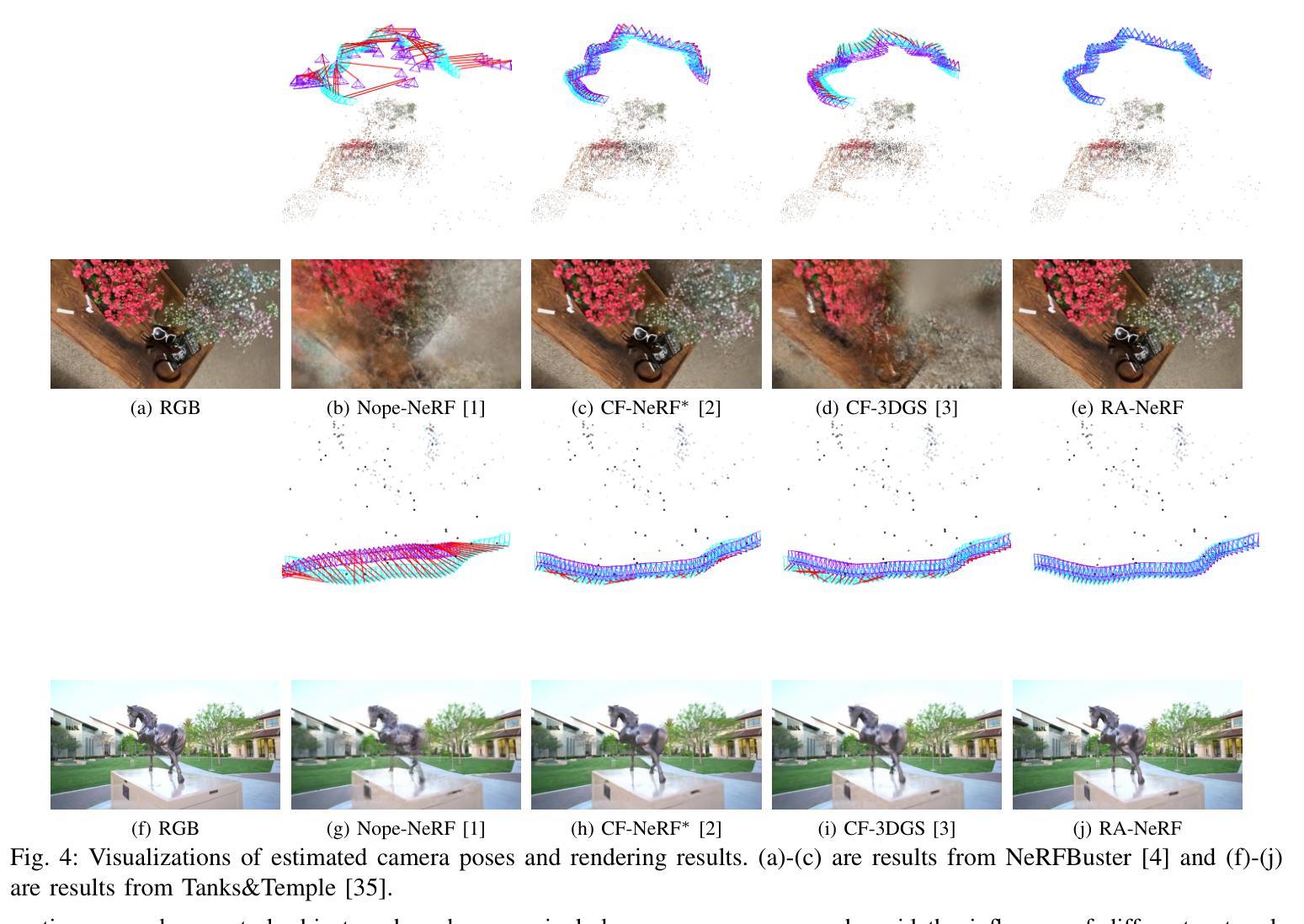

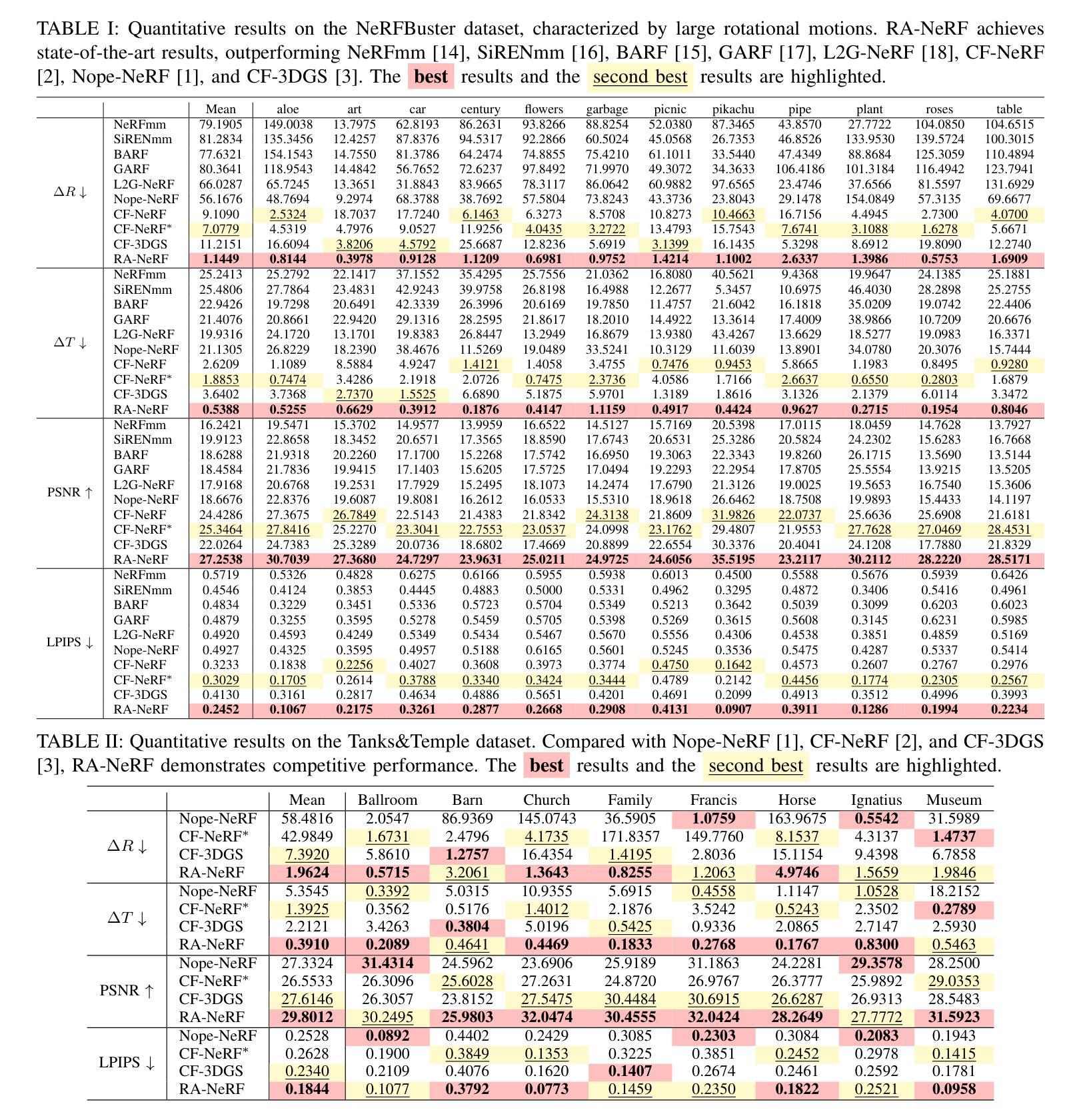

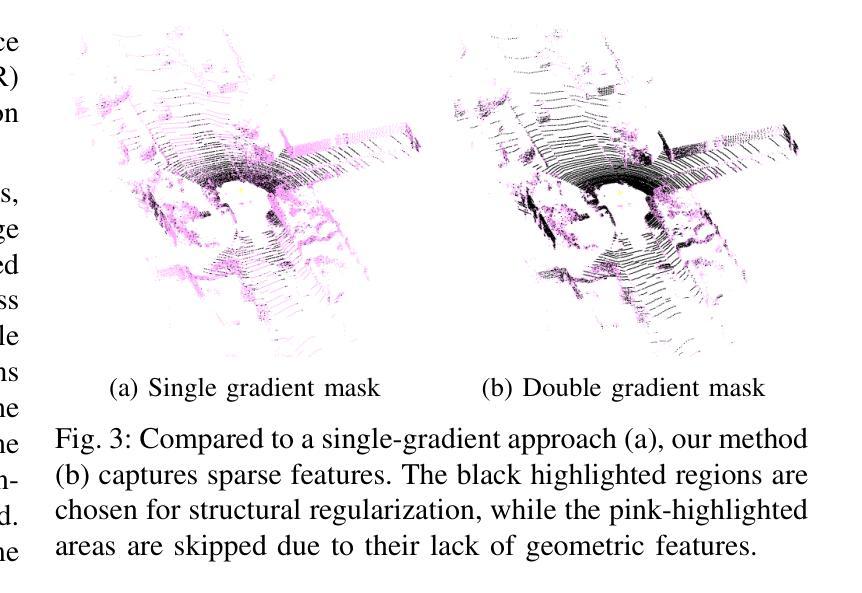

Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS) have emerged as powerful tools for 3D reconstruction and SLAM tasks. However, their performance depends heavily on accurate camera pose priors. Existing approaches attempt to address this issue by introducing external constraints but fall short of achieving satisfactory accuracy, particularly when camera trajectories are complex. In this paper, we propose a novel method, RA-NeRF, capable of predicting highly accurate camera poses even with complex camera trajectories. Following the incremental pipeline, RA-NeRF reconstructs the scene using NeRF with photometric consistency and incorporates flow-driven pose regulation to enhance robustness during initialization and localization. Additionally, RA-NeRF employs an implicit pose filter to capture the camera movement pattern and eliminate the noise for pose estimation. To validate our method, we conduct extensive experiments on the Tanks&Temple dataset for standard evaluation, as well as the NeRFBuster dataset, which presents challenging camera pose trajectories. On both datasets, RA-NeRF achieves state-of-the-art results in both camera pose estimation and visual quality, demonstrating its effectiveness and robustness in scene reconstruction under complex pose trajectories.

神经辐射场(NeRF)和三维高斯涂抹(3DGS)作为强大的工具,在三维重建和SLAM任务中备受瞩目。然而,它们的性能在很大程度上依赖于准确的相机姿态先验。现有方法试图通过引入外部约束来解决这个问题,但在处理复杂的相机轨迹时无法达到令人满意的准确性。在本文中,我们提出了一种名为RA-NeRF的新方法,即使在复杂的相机轨迹下也能预测出高度准确的相机姿态。遵循增量管道,RA-NeRF使用NeRF进行场景重建,利用光度一致性并融入流动驱动的姿态调节,以提高初始化和定位阶段的稳健性。此外,RA-NeRF还采用隐式姿态滤波器捕捉相机运动模式,消除姿态估计中的噪声。为了验证我们的方法,我们在标准的Tanks&Temple数据集上进行了大量实验,以及在具有挑战性的相机姿态轨迹的NeRFBuster数据集上进行了实验。在两个数据集上,RA-NeRF在相机姿态估计和视觉质量方面都达到了最先进的水平,证明了其在复杂姿态轨迹下场景重建的有效性和稳健性。

论文及项目相关链接

PDF IROS 2025

Summary

这篇论文提出了RA-NeRF方法,通过引入姿态滤波和流程驱动的姿态调整策略,即使面对复杂的相机轨迹,也能预测出高精度的相机姿态。其在Tanks&Temple数据集和具有挑战性的NeRFBuster数据集上的实验验证了其有效性。RA-NeRF在相机姿态估计和视觉质量方面均达到了业界领先水平,展现出其在复杂姿态轨迹下的场景重建效果和稳健性。

Key Takeaways

- RA-NeRF能预测复杂的相机轨迹下的高精度相机姿态。

- 通过引入姿态滤波,RA-NeRF能有效捕捉相机运动模式并消除姿态估计中的噪声。

- RA-NeRF采用流程驱动的姿态调整策略,增强了初始化和定位阶段的稳健性。

- 在Tanks&Temple数据集和NeRFBuster数据集上,RA-NeRF的实验结果达到了业界领先水平。

- RA-NeRF在相机姿态估计和视觉质量方面表现优异。

- NeRF和3DGS工具在3D重建和SLAM任务中表现出强大的能力,但它们的性能依赖于准确的相机姿态先验。

点此查看论文截图