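⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.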

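🔴 Note: do not rely on these summaries in serious academic settings. They are intended only for initial screening before reading a paper.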

Updated 2025-06-28

Detection of Breast Cancer Lumpectomy Margin with SAM-incorporated Forward-Forward Contrastive Learning

Authors: Tyler Ward, Xiaoqin Wang, Braxton McFarland, Md Atik Ahamed, Sahar Nozad, Talal Arshad, Hafsa Nebbache, Jin Chen, Abdullah Imran

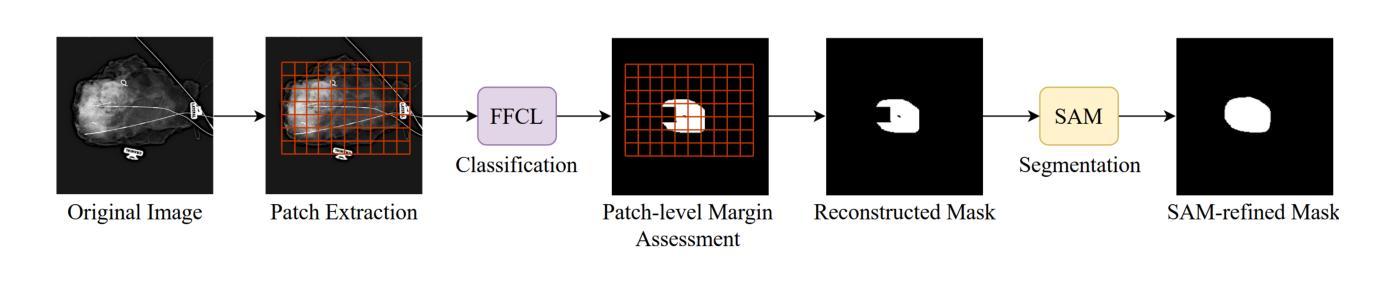

Complete removal of cancer tumors with a negative specimen margin during lumpectomy is essential in reducing breast cancer recurrence. However, 2D specimen radiography (SR), the current method used to assess intraoperative specimen margin status, has limited accuracy, resulting in nearly a quarter of patients requiring additional surgery. To address this, we propose a novel deep learning framework combining the Segment Anything Model (SAM) with Forward-Forward Contrastive Learning (FFCL), a pre-training strategy leveraging both local and global contrastive learning for patch-level classification of SR images. After annotating SR images with regions of known malignancy, non-malignant tissue, and pathology-confirmed margins, we pre-train a ResNet-18 backbone with FFCL to classify margin status, then reconstruct coarse binary masks to prompt SAM for refined tumor margin segmentation. Our approach achieved an AUC of 0.8455 for margin classification and segmented margins with a 27.4% improvement in Dice similarity over baseline models, while reducing inference time to 47 milliseconds per image. These results demonstrate that FFCL-SAM significantly enhances both the speed and accuracy of intraoperative margin assessment, with strong potential to reduce re-excision rates and improve surgical outcomes in breast cancer treatment. Our code is available at https://github.com/tbwa233/FFCL-SAM/.

Paper and project links

PDF 19 pages, 7 figures, 3 tables

Summary

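This paper proposes a deep learning framework that combines the Segment Anything Model (SAM) with Forward-Forward Contrastive Learning (FFCL) to improve intraoperative assessment of specimen margin status in breast lumpectomy. FFCL pre-training uses both local and global contrastive learning, and the framework improves margin classification accuracy, with the potential to reduce re-operations and, in turn, the risk of breast cancer recurrence.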

Key Takeaways

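- Completely removing the tumor with a negative specimen margin during lumpectomy is key to reducing breast cancer recurrence.

- 2D specimen radiography (SR), the current method for assessing margin status, has limited accuracy, so nearly a quarter of patients require additional surgery.

- The proposed deep learning framework combines the Segment Anything Model (SAM) with Forward-Forward Contrastive Learning (FFCL).

- SR images are annotated with regions of known malignancy, non-malignant tissue, and pathology-confirmed margins before pre-training.

- A ResNet-18 backbone pre-trained with FFCL classifies margin status at the patch level, and the resulting coarse binary masks prompt SAM for refined tumor margin segmentation.

- The method achieves an AUC of 0.8455 for margin classification and a 27.4% improvement in Dice similarity over baseline models.

- Inference time drops to 47 milliseconds per image, which points to faster and more accurate intraoperative margin assessment.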
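
The abstract above describes turning patch-level predictions into a coarse binary mask that prompts SAM, and it reports results in terms of Dice similarity. The following is a minimal sketch of those two ideas in plain Python, not the authors' implementation. The function names, the non-overlapping patch grid, and the choice of a bounding box as the prompt are all assumptions made for illustration; the summary does not say how the coarse mask is passed to SAM.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # rows of 0/1 pixel labels


def patches_to_mask(patch_labels: List[List[int]], patch_size: int) -> Mask:
    """Expand a grid of per-patch labels (1 = flagged) into a pixel mask.

    Assumes square, non-overlapping patches (hypothetical layout).
    """
    mask: Mask = []
    for row in patch_labels:
        # Repeat each patch label horizontally, then the row vertically.
        pixel_row = [label for label in row for _ in range(patch_size)]
        mask.extend(list(pixel_row) for _ in range(patch_size))
    return mask


def mask_to_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) around foreground pixels, or None."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None  # nothing flagged, so there is nothing to prompt with
    return (min(xs), min(ys), max(xs), max(ys))


def dice(pred: Mask, target: Mask) -> float:
    """Dice similarity: 2 * |A and B| / (|A| + |B|) for two binary masks."""
    inter = sum(p & t for pr, tr in zip(pred, target) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if total == 0 else 2.0 * inter / total


coarse = patches_to_mask([[0, 1], [0, 0]], patch_size=2)
box_prompt = mask_to_box(coarse)  # could be handed to a promptable segmenter
```

A box is only one way to prompt a promptable segmentation model; a low-resolution mask or sampled points would fit the same pipeline shape.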

Variational Supervised Contrastive Learning

Authors: Ziwen Wang, Jiajun Fan, Thao Nguyen, Heng Ji, Ge Liu

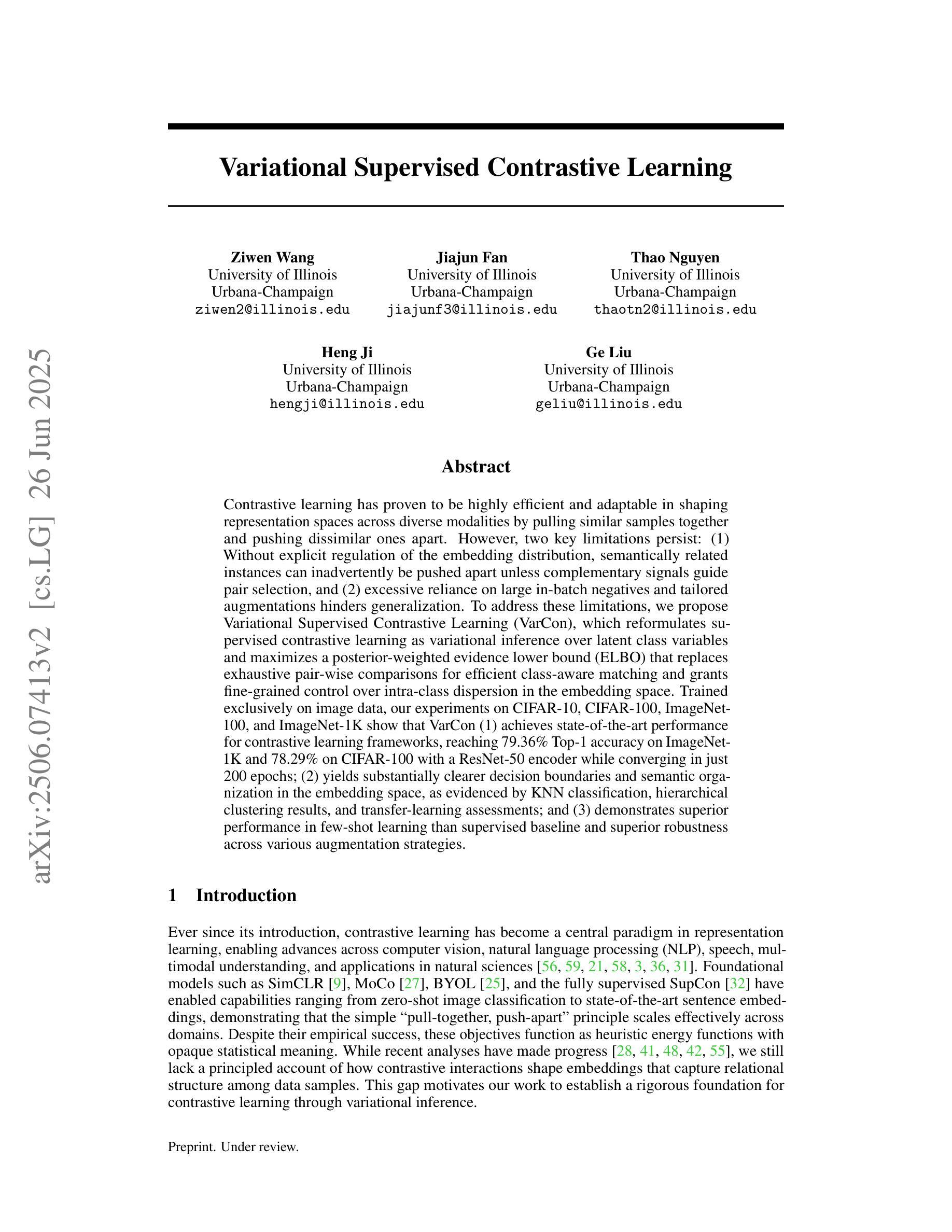

Contrastive learning has proven to be highly efficient and adaptable in shaping representation spaces across diverse modalities by pulling similar samples together and pushing dissimilar ones apart. However, two key limitations persist: (1) Without explicit regulation of the embedding distribution, semantically related instances can inadvertently be pushed apart unless complementary signals guide pair selection, and (2) excessive reliance on large in-batch negatives and tailored augmentations hinders generalization. To address these limitations, we propose Variational Supervised Contrastive Learning (VarCon), which reformulates supervised contrastive learning as variational inference over latent class variables and maximizes a posterior-weighted evidence lower bound (ELBO) that replaces exhaustive pair-wise comparisons for efficient class-aware matching and grants fine-grained control over intra-class dispersion in the embedding space. Trained exclusively on image data, our experiments on CIFAR-10, CIFAR-100, ImageNet-100, and ImageNet-1K show that VarCon (1) achieves state-of-the-art performance for contrastive learning frameworks, reaching 79.36% Top-1 accuracy on ImageNet-1K and 78.29% on CIFAR-100 with a ResNet-50 encoder while converging in just 200 epochs; (2) yields substantially clearer decision boundaries and semantic organization in the embedding space, as evidenced by KNN classification, hierarchical clustering results, and transfer-learning assessments; and (3) demonstrates superior performance in few-shot learning than supervised baseline and superior robustness across various augmentation strategies.

Summary

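Contrastive learning is efficient and adaptable at shaping representation spaces across modalities, but two key limitations persist. To address them, this paper proposes Variational Supervised Contrastive Learning (VarCon), which recasts supervised contrastive learning as variational inference over latent class variables. This replaces exhaustive pair-wise comparisons with class-aware matching and gives fine-grained control over intra-class dispersion. Experiments on image data show state-of-the-art results among contrastive learning frameworks, along with clearer decision boundaries in the embedding space.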

Key Takeaways

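- Contrastive learning is efficient and adaptable across modalities; it shapes representation spaces by pulling similar samples together and pushing dissimilar ones apart.

- Two limitations remain: no explicit regulation of the embedding distribution, and heavy reliance on large in-batch negatives and tailored augmentations.

- VarCon reformulates supervised contrastive learning as variational inference over latent class variables and maximizes a posterior-weighted ELBO, which gives fine-grained control over intra-class dispersion in the embedding space.

- VarCon achieves state-of-the-art performance among contrastive learning frameworks on CIFAR-10, CIFAR-100, ImageNet-100, and ImageNet-1K, reaching 79.36% Top-1 accuracy on ImageNet-1K and 78.29% on CIFAR-100 with a ResNet-50 encoder.

- VarCon outperforms the supervised baseline in few-shot learning and is robust across augmentation strategies.

- VarCon yields clearer decision boundaries and semantic organization, as shown by KNN classification, hierarchical clustering, and transfer-learning assessments.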
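
For context on the "exhaustive pair-wise comparisons" that VarCon is said to replace, here is a minimal sketch of a conventional supervised contrastive loss, in which every anchor is compared with every other sample in the batch. This is the baseline style of objective, not VarCon's ELBO, and it assumes the commonly used formulation with L2-normalized embeddings and a temperature; the function names and the default temperature are illustrative choices.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supcon_loss(
    embeddings: Sequence[Sequence[float]],
    labels: Sequence[int],
    temperature: float = 0.1,
) -> float:
    """Baseline supervised contrastive loss over one batch (not VarCon)."""
    z = [normalize(v) for v in embeddings]
    n = len(z)
    losses = []
    for i in range(n):
        # Scaled cosine similarity of anchor i to every other sample.
        logits = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n)
            if j != i
        }
        positives = [j for j in logits if labels[j] == labels[i]]
        if not positives:
            continue  # anchors without a same-class partner are skipped
        # Numerically stable log-sum-exp over all non-anchor samples.
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits.values()))
        # Average negative log-probability of the positives.
        losses.append(-sum(logits[p] - log_denom for p in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0
```

The double loop makes the quadratic cost in batch size explicit, which is the cost the abstract attributes to pair-wise matching.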

Discovering Global False Negatives On the Fly for Self-supervised Contrastive Learning

Authors: Vicente Balmaseda, Bokun Wang, Ching-Long Lin, Tianbao Yang

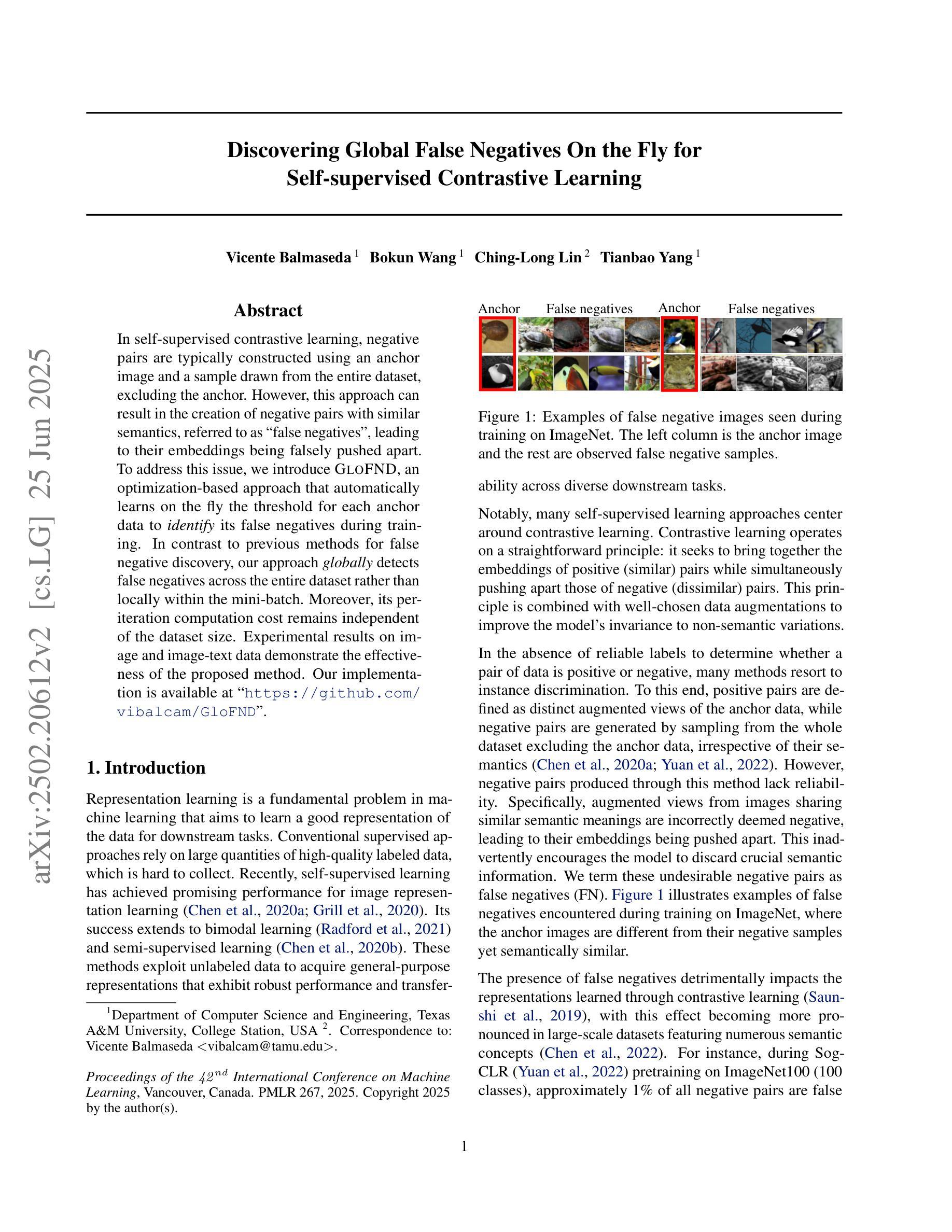

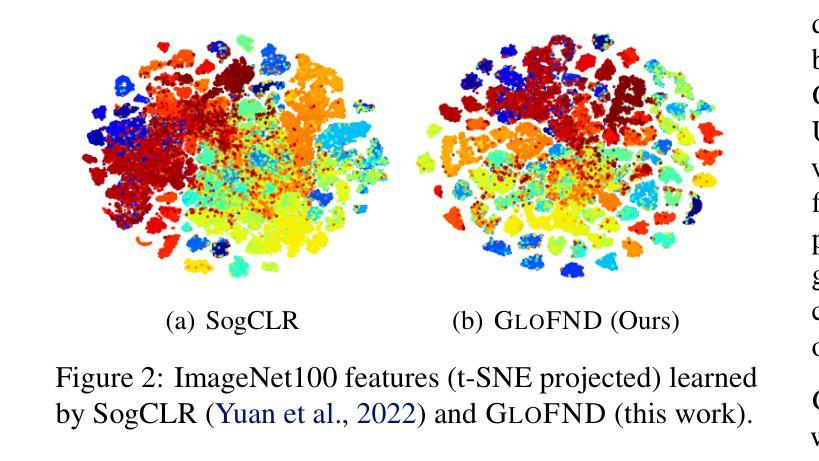

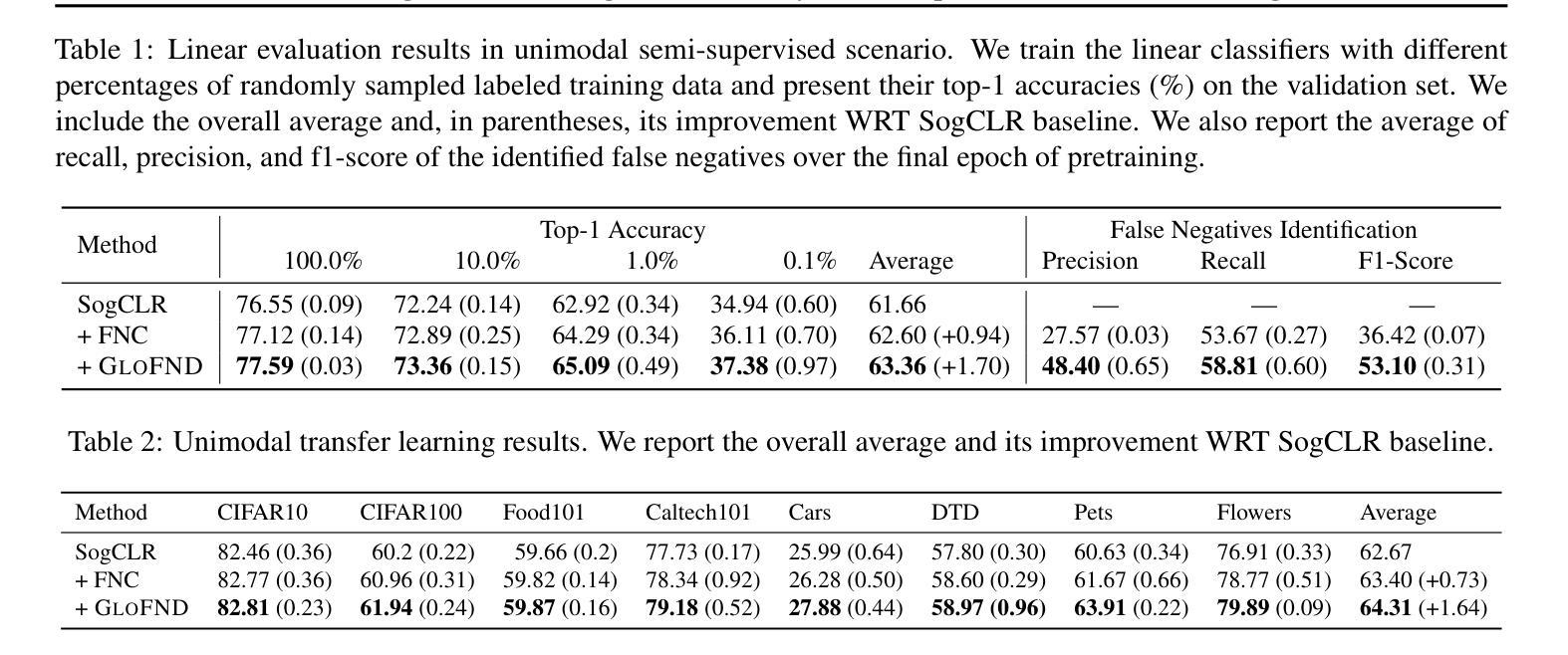

In self-supervised contrastive learning, negative pairs are typically constructed using an anchor image and a sample drawn from the entire dataset, excluding the anchor. However, this approach can result in the creation of negative pairs with similar semantics, referred to as “false negatives”, leading to their embeddings being falsely pushed apart. To address this issue, we introduce GloFND, an optimization-based approach that automatically learns on the fly the threshold for each anchor data to identify its false negatives during training. In contrast to previous methods for false negative discovery, our approach globally detects false negatives across the entire dataset rather than locally within the mini-batch. Moreover, its per-iteration computation cost remains independent of the dataset size. Experimental results on image and image-text data demonstrate the effectiveness of the proposed method. Our implementation is available at https://github.com/vibalcam/GloFND.

Paper and project links

PDF Accepted to ICML 2025

Summary

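This paper addresses the "false negative" problem in self-supervised contrastive learning and proposes GloFND as a solution. GloFND is an optimization-based method that learns, on the fly, a threshold for each anchor to identify its false negatives during training. Unlike earlier false negative discovery methods, it detects false negatives globally across the entire dataset rather than locally within the mini-batch, and its per-iteration computation cost is independent of the dataset size. Experiments on image and image-text data demonstrate the method's effectiveness.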

Key Takeaways

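- Self-supervised contrastive learning suffers from "false negatives": negative pairs with similar semantics whose embeddings are wrongly pushed apart.

- GloFND is an optimization-based method that automatically learns a per-anchor threshold for identifying false negatives.

- GloFND detects false negatives globally across the entire dataset, not just locally within the mini-batch.

- GloFND's per-iteration computation cost is independent of the dataset size.

- Experiments on image and image-text data support its effectiveness.

- The implementation is publicly available at https://github.com/vibalcam/GloFND.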
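
To make the per-anchor threshold idea concrete, the sketch below flags any negative whose similarity to the anchor exceeds that anchor's threshold and drops it from an InfoNCE-style loss. It is an illustration under stated assumptions, not GloFND itself: the threshold is passed in as a fixed number, whereas the paper learns it by optimization during training, and excluding flagged samples from the denominator is only one possible way to use them. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def find_false_negatives(neg_sims: Sequence[float], threshold: float) -> List[int]:
    """Indices of negatives whose similarity to the anchor exceeds the threshold."""
    return [j for j, s in enumerate(neg_sims) if s > threshold]


def filtered_info_nce(
    pos_sim: float,
    neg_sims: Sequence[float],
    threshold: float,
    temperature: float = 0.1,
) -> float:
    """InfoNCE-style loss for one anchor, ignoring flagged false negatives."""
    flagged = set(find_false_negatives(neg_sims, threshold))
    kept = [s for j, s in enumerate(neg_sims) if j not in flagged]
    # The positive pair always stays in the denominator.
    logits = [pos_sim / temperature] + [s / temperature for s in kept]
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - logits[0]


# One anchor: the first "negative" is nearly as similar as the true positive.
neg_sims = [0.95, 0.10, -0.20]
with_filter = filtered_info_nce(0.9, neg_sims, threshold=0.8)
without_filter = filtered_info_nce(0.9, neg_sims, threshold=1.0)
```

With the filter on, the near-duplicate negative no longer dominates the denominator, so the anchor is not penalized for being close to it.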
