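⚠️ All of the summaries below are generated by a large language model and may contain errors. They are for reference only; use with caution.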

🔴 Note: do not use these summaries in serious academic settings. They are only meant for initial screening before reading a paper!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-07-03

SqueezeMe: Mobile-Ready Distillation of Gaussian Full-Body Avatars

Authors: Forrest Iandola, Stanislav Pidhorskyi, Igor Santesteban, Divam Gupta, Anuj Pahuja, Nemanja Bartolovic, Frank Yu, Emanuel Garbin, Tomas Simon, Shunsuke Saito

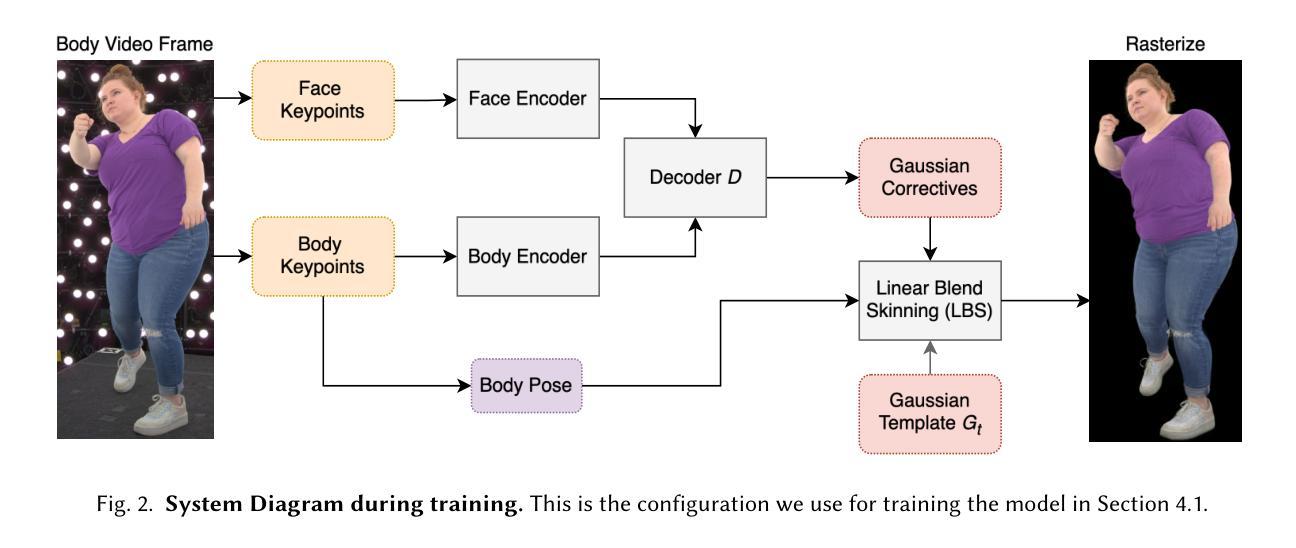

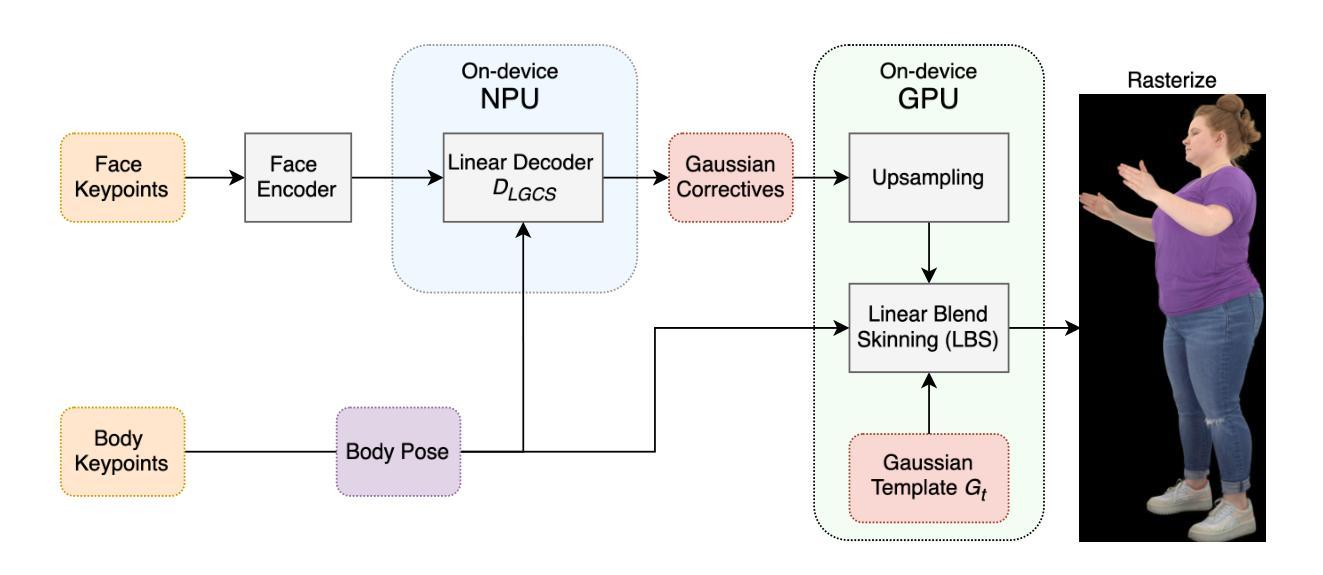

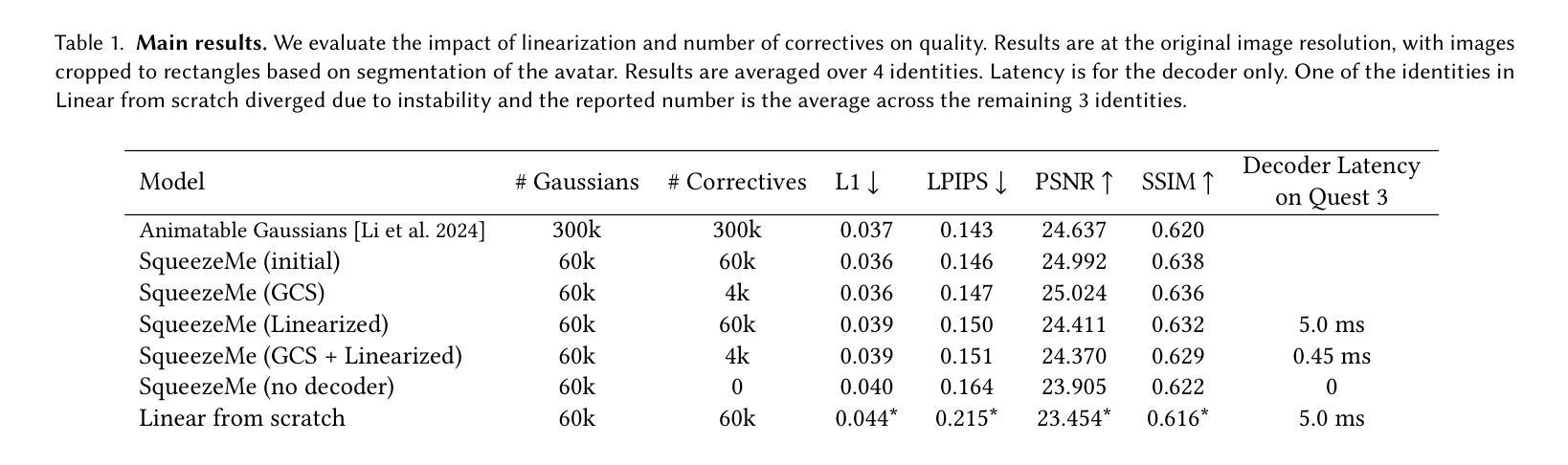

Gaussian-based human avatars have achieved an unprecedented level of visual fidelity. However, existing approaches based on high-capacity neural networks typically require a desktop GPU to achieve real-time performance for a single avatar, and it remains non-trivial to animate and render such avatars on mobile devices including a standalone VR headset due to substantially limited memory and computational bandwidth. In this paper, we present SqueezeMe, a simple and highly effective framework to convert high-fidelity 3D Gaussian full-body avatars into a lightweight representation that supports both animation and rendering with mobile-grade compute. Our key observation is that the decoding of pose-dependent Gaussian attributes from a neural network creates non-negligible memory and computational overhead. Inspired by blendshapes and linear pose correctives widely used in Computer Graphics, we address this by distilling the pose correctives learned with neural networks into linear layers. Moreover, we further reduce the parameters by sharing the correctives among nearby Gaussians. Combining them with a custom splatting pipeline based on Vulkan, we achieve, for the first time, simultaneous animation and rendering of 3 Gaussian avatars in real-time (72 FPS) on a Meta Quest 3 VR headset. Demo videos are available at https://forresti.github.io/squeezeme.


Paper and project links

PDF Accepted to SIGGRAPH 2025

Summary

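This paper proposes SqueezeMe, a framework that converts high-fidelity 3D Gaussian full-body avatars into a lightweight representation that supports both animation and rendering on mobile devices. By distilling the pose correctives learned by neural networks into linear layers, sharing correctives among nearby Gaussians, and combining these with a custom Vulkan-based splatting pipeline, it achieves simultaneous real-time (72 FPS) animation and rendering of three Gaussian avatars on a Meta Quest 3 VR headset.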
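The central idea, replacing a pose-conditioned network with a linear map from the pose to per-Gaussian attribute offsets, can be illustrated as an ordinary least-squares fit of a "student" linear layer to a "teacher" function. This is a minimal sketch under stated assumptions, not the authors' training procedure: `teacher` is a hypothetical toy stand-in for the neural decoder, the pose is a 2-D vector, and the linear layer is solved in closed form with the normal equations. The paper's actual distillation objective and optimizer are not described in this summary.

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting.

    a is an n x n matrix and b an n x m matrix, both as lists of lists.
    """
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]  # augmented matrix [a | b]
    for col in range(n):
        # Move the row with the largest entry in this column into pivot position.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_linear_correctives(poses, deltas):
    """Fit W so that [pose, 1] @ W approximates the teacher's corrective deltas (least squares)."""
    x = [list(p) + [1.0] for p in poses]  # append a constant feature for the bias row
    k, d = len(x[0]), len(deltas[0])
    # Normal equations: (X^T X) W = X^T Y
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    xty = [[sum(row[i] * y[c] for row, y in zip(x, deltas)) for c in range(d)] for i in range(k)]
    return solve(xtx, xty)


def apply_linear(w, pose):
    """Student: evaluate the distilled linear corrective for one pose."""
    feat = list(pose) + [1.0]
    return [sum(f * w[i][c] for i, f in enumerate(feat)) for c in range(len(w[0]))]


def teacher(pose):
    """Hypothetical stand-in for the neural decoder: linear in the pose plus a small nonlinear term."""
    a, b = pose
    return [0.5 * a - 0.2 * b + 0.1, 0.3 * b + 0.05 * math.tanh(a * b)]


random.seed(0)
poses = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(200)]
w = fit_linear_correctives(poses, [teacher(p) for p in poses])
errors = [
    (s - t) ** 2
    for p in poses
    for s, t in zip(apply_linear(w, p), teacher(p))
]
rms = math.sqrt(sum(errors) / len(errors))
print("distilled W:", [[round(v, 3) for v in row] for row in w])
print("RMS distillation error:", round(rms, 4))
```

The first output of the toy teacher is exactly linear, so the student recovers its coefficients; the second has a nonlinear term that a linear layer can only approximate, which is the accuracy that is traded for removing the network at runtime.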

Key Takeaways

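- The SqueezeMe framework converts high-fidelity 3D Gaussian full-body avatars into a lightweight representation.

- Distilling the neural pose correctives into linear layers reduces memory and computational overhead.

- Sharing correctives among nearby Gaussians further reduces the number of parameters.

- Combined with a custom Vulkan-based splatting pipeline, it achieves, for the first time, simultaneous real-time animation and rendering of multiple (three) Gaussian avatars on a VR headset (Meta Quest 3, 72 FPS).

- The framework supports both animation and rendering with mobile-grade compute.

- Demo videos are available at https://forresti.github.io/squeezeme.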
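Sharing correctives among nearby Gaussians can be illustrated by letting every Gaussian in a group reuse one linear corrective, so parameters scale with the number of groups rather than the number of Gaussians. This is a hypothetical sketch: the grid-cell grouping, `cell_size`, and the toy pose and attribute sizes are assumptions for illustration, and the summary does not say how SqueezeMe actually forms its groups.

```python
import math


def group_by_grid(positions, cell_size):
    """Assign each Gaussian to a group keyed by the grid cell containing its center (hypothetical grouping)."""
    index = {}
    group_of = []
    for pos in positions:
        key = tuple(math.floor(c / cell_size) for c in pos)
        if key not in index:
            index[key] = len(index)  # groups are numbered in order of first appearance
        group_of.append(index[key])
    return group_of, len(index)


def param_count(num_blocks, pose_dim, attr_dim):
    """Parameters of num_blocks linear correctives, each (pose_dim + 1) x attr_dim (weights + bias)."""
    return num_blocks * (pose_dim + 1) * attr_dim


def shared_correctives(group_of, group_weights, pose):
    """Evaluate one linear corrective per group and reuse it for every Gaussian in that group."""
    feat = list(pose) + [1.0]
    per_group = [
        [sum(f * w[i][c] for i, f in enumerate(feat)) for c in range(len(w[0]))]
        for w in group_weights
    ]
    return [per_group[g] for g in group_of]


positions = [
    (0.1, 0.1, 0.1), (0.2, 0.3, 0.1), (0.9, 0.1, 0.2),
    (1.2, 0.1, 0.1), (1.4, 0.4, 0.3),
    (0.1, 1.1, 0.0), (0.3, 1.4, 0.2),
    (2.5, 2.5, 2.5),
]
group_of, num_groups = group_by_grid(positions, cell_size=1.0)
print("groups:", group_of)  # → groups: [0, 0, 0, 1, 1, 2, 2, 3]

# Toy weights: group g's corrective is (g + 1) * pose[0] (2-D pose, 1 attribute).
group_weights = [[[g + 1.0], [0.0], [0.0]] for g in range(num_groups)]
out = shared_correctives(group_of, group_weights, pose=(2.0, 5.0))
print("per-Gaussian params:", param_count(len(positions), 2, 1))  # → per-Gaussian params: 24
print("shared params:", param_count(num_groups, 2, 1))  # → shared params: 12
```

With 8 Gaussians in 4 groups the corrective parameters halve; the saving grows with the number of Gaussians per group, at the cost of less per-Gaussian pose detail.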
