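⚠️ All summaries below were generated by a large language model. They may contain errors, so treat them as reference only and use them with caution.

🔴 Please note: never use this content in serious academic settings. It is only meant for initial screening before reading the papers!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it for free on HuggingFace

Updated 2025-07-09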

De-Fake: Style based Anomaly Deepfake Detection

Authors: Sudev Kumar Padhi, Harshit Kumar, Umesh Kashyap, Sk. Subidh Ali

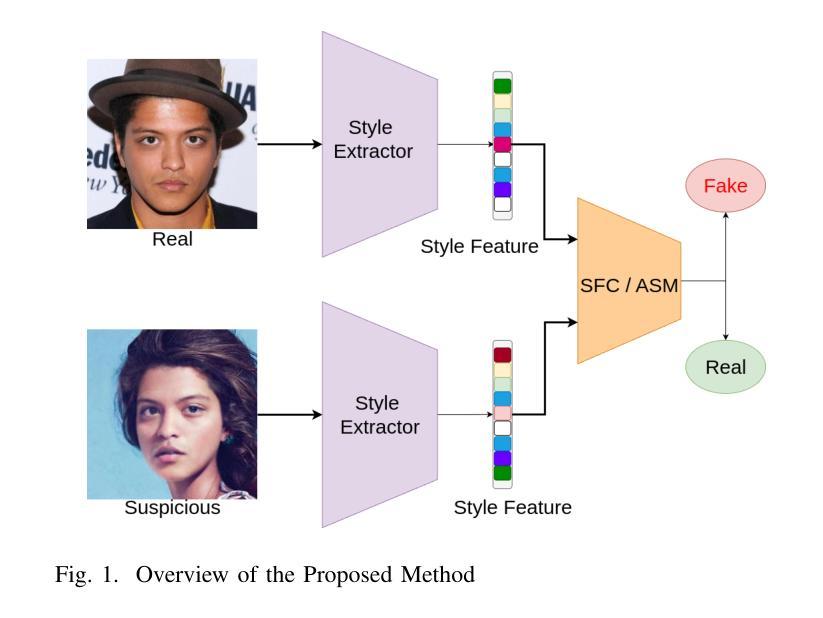

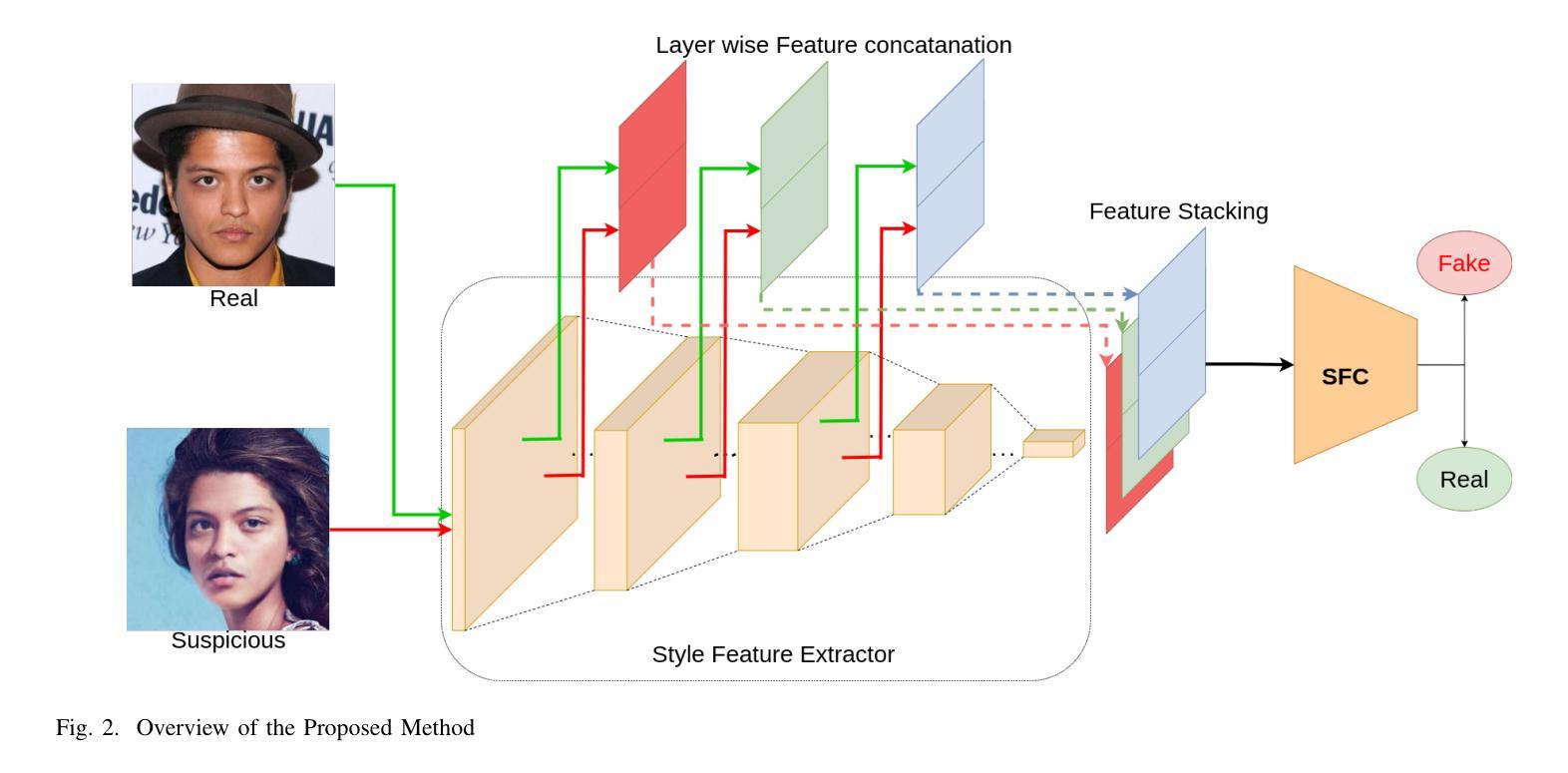

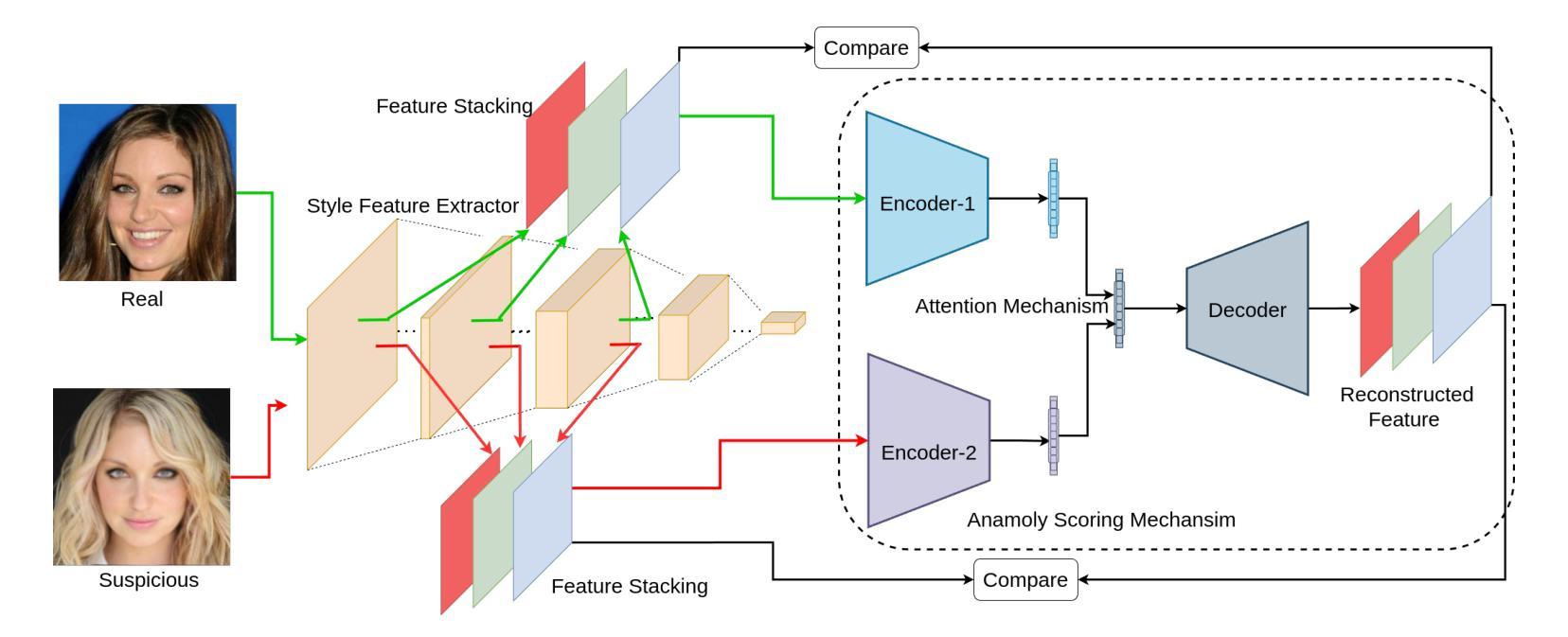

Detecting deepfakes involving face-swaps presents a significant challenge, particularly in real-world scenarios where anyone can perform face-swapping with freely available tools and apps without any technical knowledge. Existing deepfake detection methods rely on facial landmarks or inconsistencies in pixel-level features and often struggle with face-swap deepfakes, where the source face is seamlessly blended into the target image or video. The prevalence of face-swap is evident in everyday life, where it is used to spread false information, damage reputations, manipulate political opinions, create non-consensual intimate deepfakes (NCID), and exploit children by enabling the creation of child sexual abuse material (CSAM). Even prominent public figures are not immune to its impact, with numerous deepfakes of them circulating widely across social media platforms. Another challenge faced by deepfake detection methods is the creation of datasets that encompass a wide range of variations, as training models require substantial amounts of data. This raises privacy concerns, particularly regarding the processing and storage of personal facial data, which could lead to unauthorized access or misuse. Our key idea is to identify these style discrepancies to detect face-swapped images effectively without accessing the real facial image. We perform comprehensive evaluations using multiple datasets and face-swapping methods, which showcases the effectiveness of SafeVision in detecting face-swap deepfakes across diverse scenarios. SafeVision offers a reliable and scalable solution for detecting face-swaps in a privacy preserving manner, making it particularly effective in challenging real-world applications. To the best of our knowledge, SafeVision is the first deepfake detection using style features while providing inherent privacy protection.

Paper and project links

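Summary

Face-swap deepfakes cause a range of social problems. To address them, the paper introduces SafeVision, a detection approach that identifies style discrepancies to detect face-swapped images without accessing the real facial image, which makes it both effective and privacy-preserving. It shows strong detection performance across different datasets and face-swapping methods, offering a possible way to detect face swaps reliably while protecting privacy.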

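Key Takeaways

- Face-swap deepfakes are widely used in the real world, damaging reputations, spreading misinformation, and causing other harms.

- Existing deepfake detection methods mainly rely on inconsistencies in facial landmarks or pixel-level features, but perform poorly on face-swap deepfakes.

- SafeVision detects face-swapped images by identifying style discrepancies, offering a new approach to detection.

- SafeVision performs well in evaluations across multiple datasets and face-swapping methods, demonstrating its effectiveness and reliability.

- SafeVision detects face swaps in a privacy-preserving manner, which suits real-world challenges and requirements.

- Building datasets that cover a wide range of variations is another challenge for deepfake detection, and it raises concerns about personal privacy.

- To the authors' knowledge, SafeVision is the first method to use style features for deepfake detection while providing inherent privacy protection.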
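The abstract does not explain how SafeVision extracts or compares style features. Purely as an illustration of the idea of "style discrepancy" as an anomaly signal, the sketch below assumes that style is captured by per-channel mean and standard deviation of feature maps (the statistics used in AdaIN-style style transfer) and flags an image when the style of the face region differs too much from that of its surrounding context. The function names, the Euclidean distance, and the threshold are all hypothetical choices, not the paper's method.

```python
import math
from typing import List, Sequence

# Hypothetical representation: a feature map is a list of channels,
# each channel being a flat list of activation values.
FeatureMap = Sequence[Sequence[float]]


def style_stats(features: FeatureMap) -> List[float]:
    """Return per-channel means followed by per-channel standard deviations."""
    means: List[float] = []
    stds: List[float] = []
    for channel in features:
        n = len(channel)
        mu = sum(channel) / n
        var = sum((x - mu) ** 2 for x in channel) / n
        means.append(mu)
        stds.append(math.sqrt(var))
    return means + stds


def style_discrepancy(face: FeatureMap, context: FeatureMap) -> float:
    """Euclidean distance between the style statistics of two regions."""
    a, b = style_stats(face), style_stats(context)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def is_face_swap(face: FeatureMap, context: FeatureMap, threshold: float = 1.0) -> bool:
    """Hypothetical decision rule: a large style gap is treated as an anomaly."""
    return style_discrepancy(face, context) > threshold


if __name__ == "__main__":
    # Toy 2-channel feature maps with 4 activations each (made-up numbers).
    context = [[0.1, 0.2, 0.3, 0.4], [1.0, 1.1, 0.9, 1.0]]
    consistent_face = [[0.15, 0.25, 0.3, 0.35], [1.0, 1.05, 0.95, 1.0]]
    blended_face = [[2.0, 2.5, 3.0, 2.2], [-1.0, -0.5, -1.2, -0.8]]
    print(is_face_swap(consistent_face, context))  # False
    print(is_face_swap(blended_face, context))     # True
```

Comparing the face against its own surrounding region, rather than against a stored reference photo, mirrors the abstract's claim that detection works without access to the real facial image; in practice the features would come from a trained network and the threshold would be calibrated on data.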

DynamicFace: High-Quality and Consistent Face Swapping for Image and Video using Composable 3D Facial Priors

Authors: Runqi Wang, Yang Chen, Sijie Xu, Tianyao He, Wei Zhu, Dejia Song, Nemo Chen, Xu Tang, Yao Hu

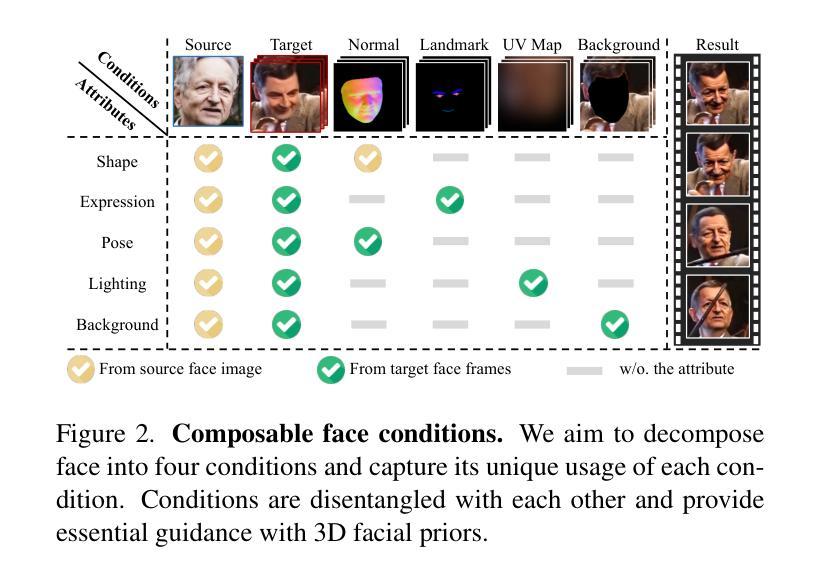

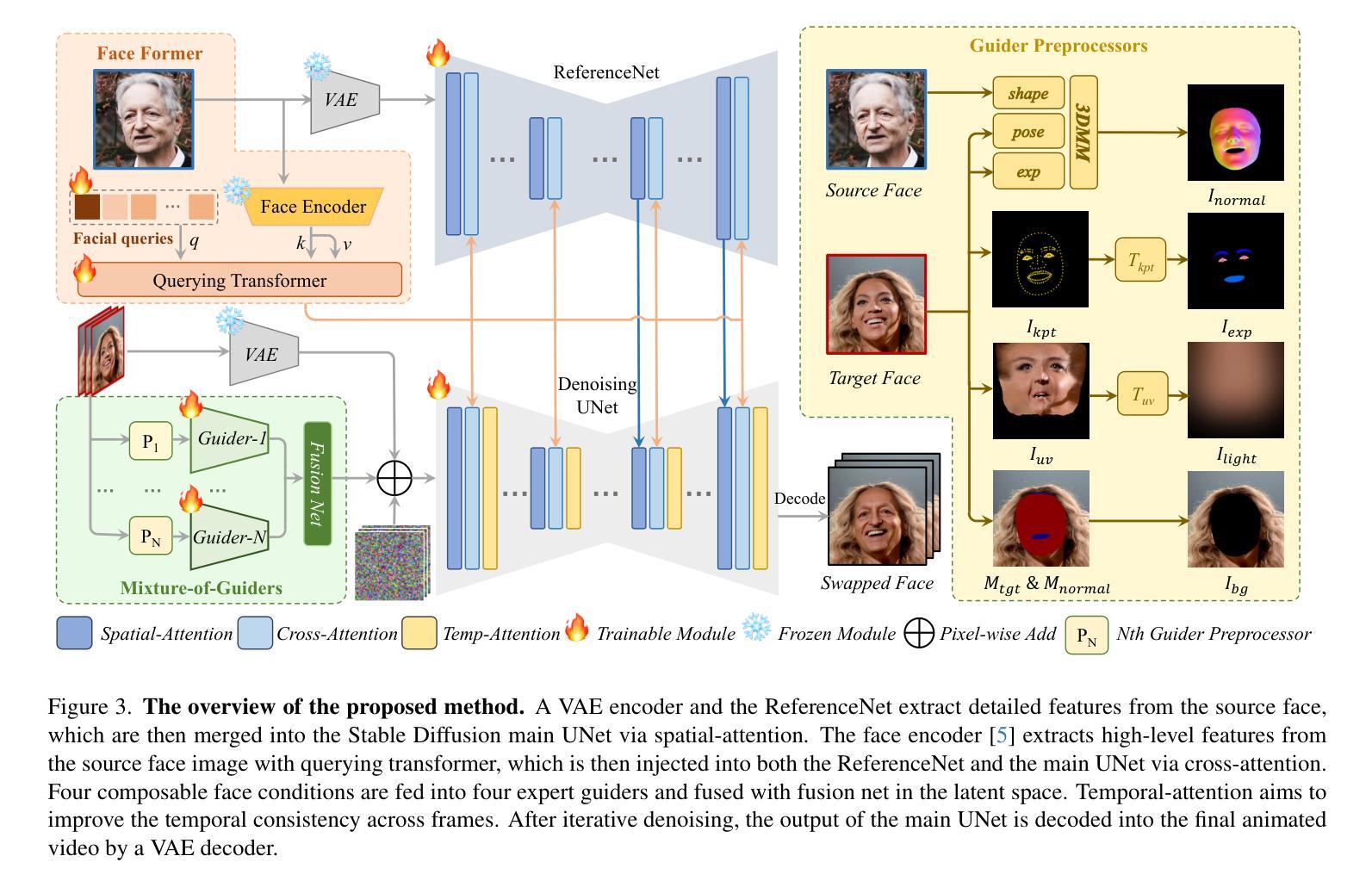

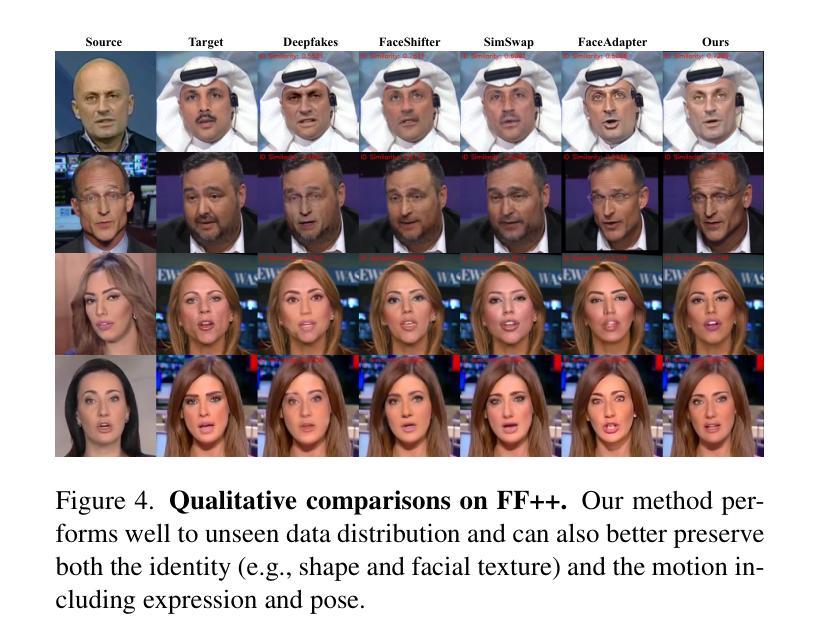

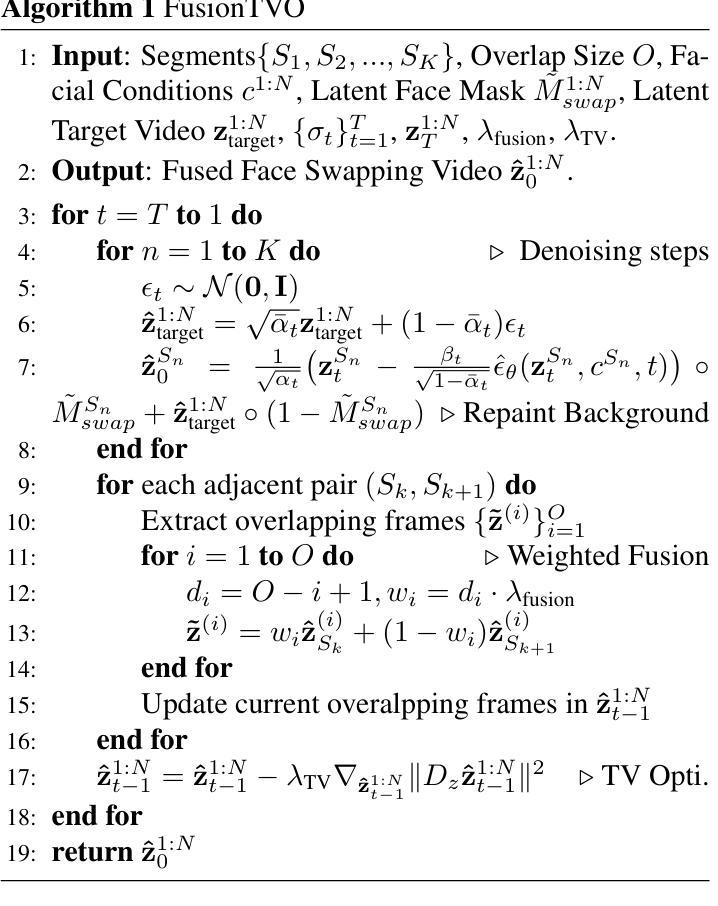

Face swapping transfers the identity of a source face to a target face while retaining the attributes like expression, pose, hair, and background of the target face. Advanced face swapping methods have achieved attractive results. However, these methods often inadvertently transfer identity information from the target face, compromising expression-related details and accurate identity. We propose a novel method DynamicFace that leverages the power of diffusion models and plug-and-play adaptive attention layers for image and video face swapping. First, we introduce four fine-grained facial conditions using 3D facial priors. All conditions are designed to be disentangled from each other for precise and unique control. Then, we adopt Face Former and ReferenceNet for high-level and detailed identity injection. Through experiments on the FF++ dataset, we demonstrate that our method achieves state-of-the-art results in face swapping, showcasing superior image quality, identity preservation, and expression accuracy. Our framework seamlessly adapts to both image and video domains. Our code and results will be available on the project page: https://dynamic-face.github.io/

Paper and project links

Accepted by ICCV 2025. Project page: https://dynamic-face.github.io/

Summary

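The paper proposes DynamicFace, a new face-swapping method that combines diffusion models with adaptive attention layers to swap faces in both images and videos. By introducing four fine-grained facial conditions built from 3D facial priors and using Face Former and ReferenceNet for high-level and detailed identity injection, it produces high-quality face swaps with strong identity preservation and accurate expressions. The framework applies to both image and video domains. Details are available on the project page.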

Key Takeaways

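- DynamicFace combines diffusion models with plug-and-play adaptive attention layers.

- It introduces four fine-grained facial conditions, disentangled from each other, for precise and unique control.

- Face Former and ReferenceNet provide high-level and detailed identity injection.

- Experiments on the FF++ dataset show state-of-the-art face-swapping results.

- The method achieves high image quality, identity preservation, and expression accuracy.

- The framework adapts seamlessly to both image and video domains.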
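The abstract says ReferenceNet handles detailed identity injection but does not describe the mechanism. A common design in ReferenceNet-based diffusion models is to concatenate features from the reference branch into the keys and values of the denoising network's self-attention, so that target tokens can attend to identity details of the source face. The sketch below illustrates only that generic mechanism in plain Python, with learned query/key/value projections omitted (treated as identity) and a single head; it is an assumption for illustration, not DynamicFace's actual implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Numerically stable softmax applied to each row."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    d = len(q[0])
    scores = [[s / math.sqrt(d) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def reference_attention(target: Matrix, reference: Matrix) -> Matrix:
    """Self-attention over target tokens whose keys/values also include reference tokens.

    Queries come only from the target, so the output keeps the target's token count
    while each token can pull in features from the (hypothetical) identity reference.
    """
    kv = target + reference  # concatenate along the token axis
    return attention(target, kv, kv)


if __name__ == "__main__":
    target = [[1.0, 0.0], [0.0, 1.0]]  # toy target-frame tokens
    reference = [[10.0, 0.0]]          # toy identity token from the reference branch
    # The first target token aligns strongly with the reference token,
    # so its output is dominated by that token's features.
    print(reference_attention(target, reference))
```

Because only the keys and values are extended, the output has the same number of tokens as the target, which is what lets this kind of layer be inserted into an existing denoising network without changing its shapes.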