⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-07-10 更新

ScoreAdv: Score-based Targeted Generation of Natural Adversarial Examples via Diffusion Models

Authors:Chihan Huang, Hao Tang

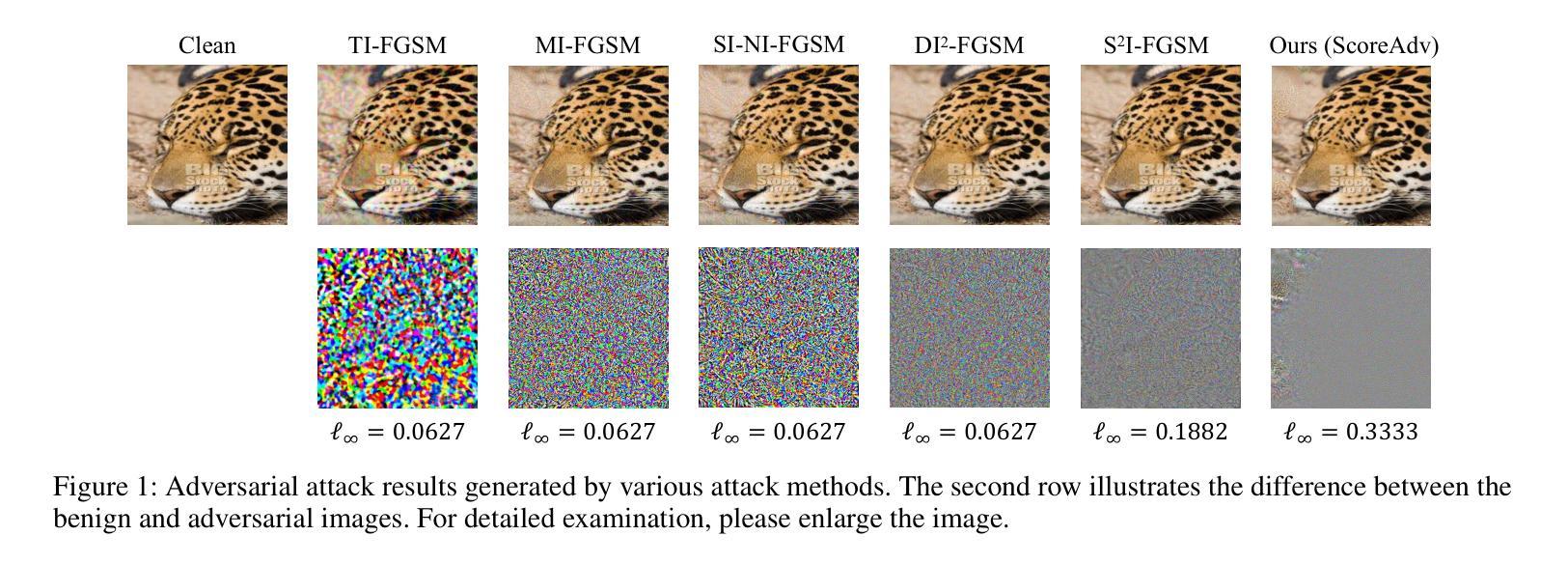

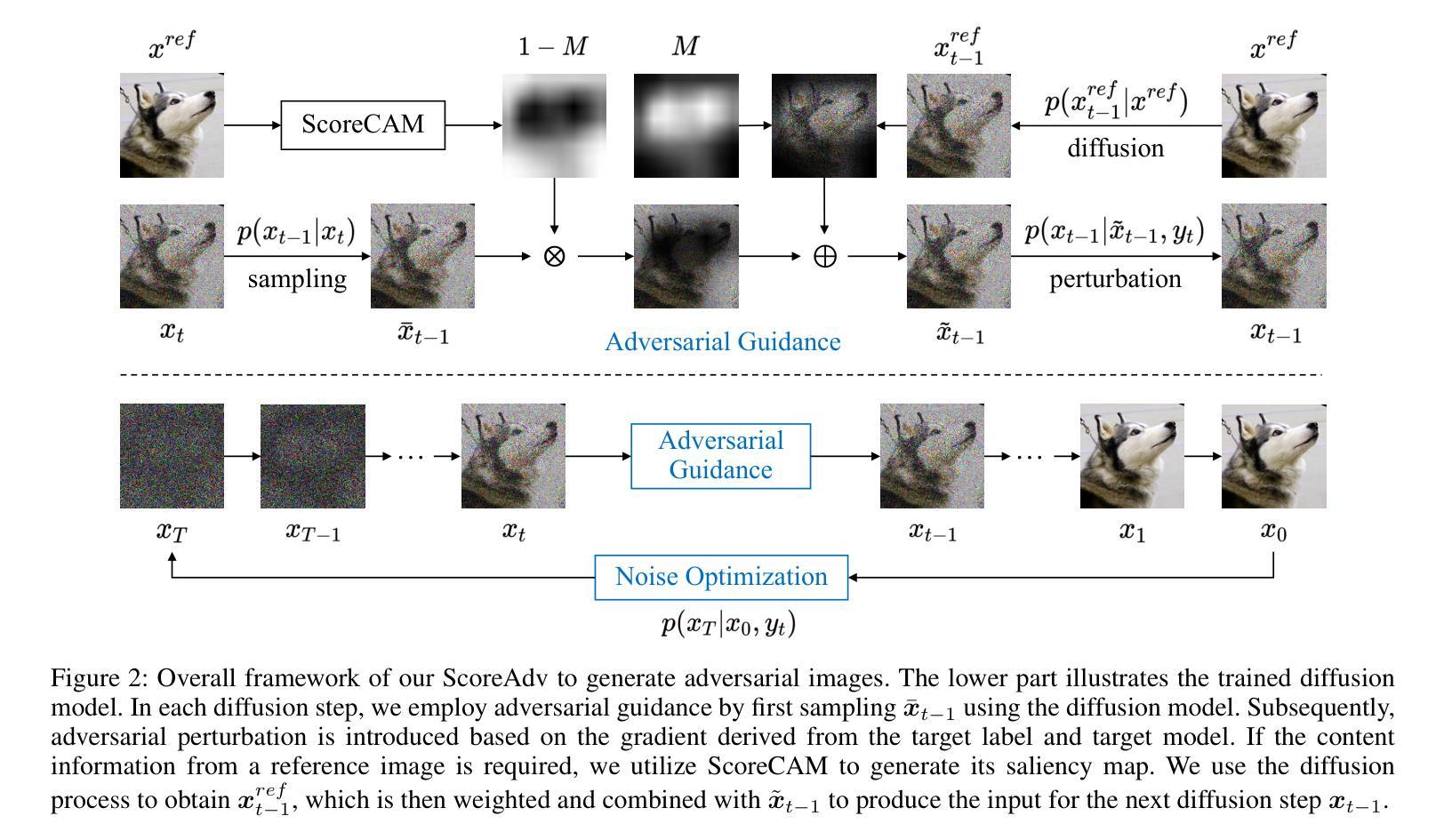

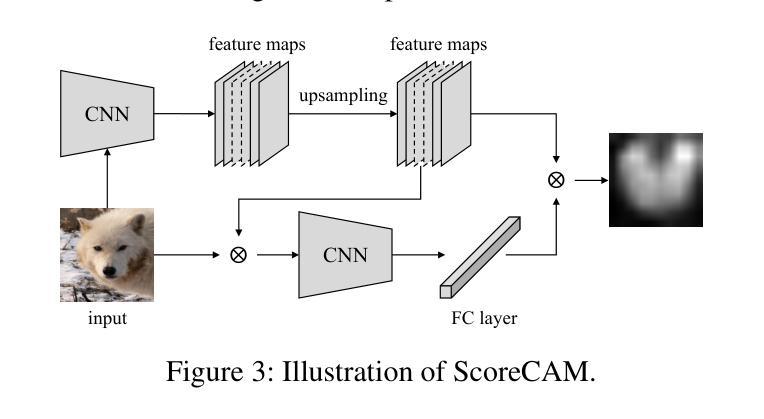

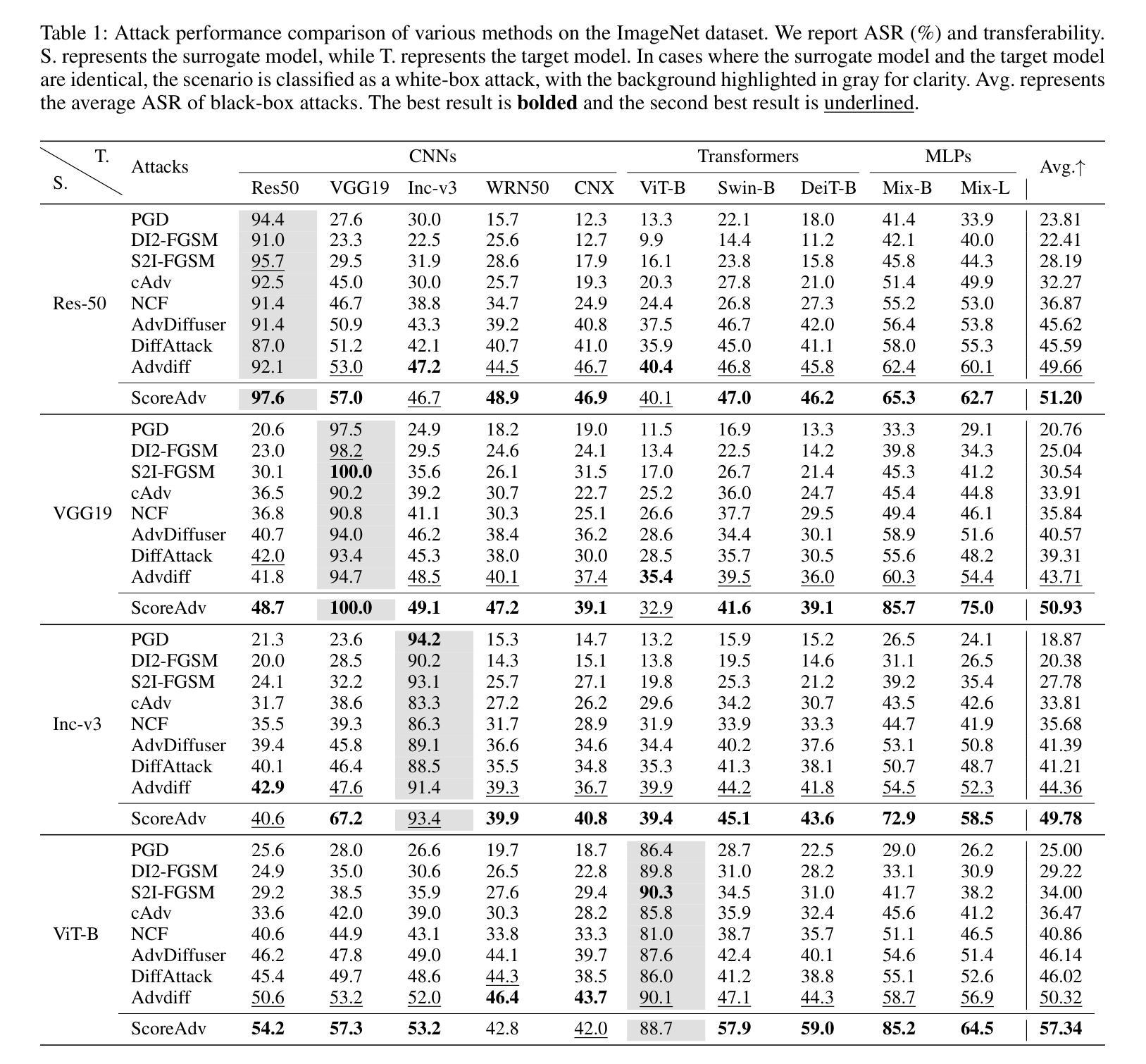

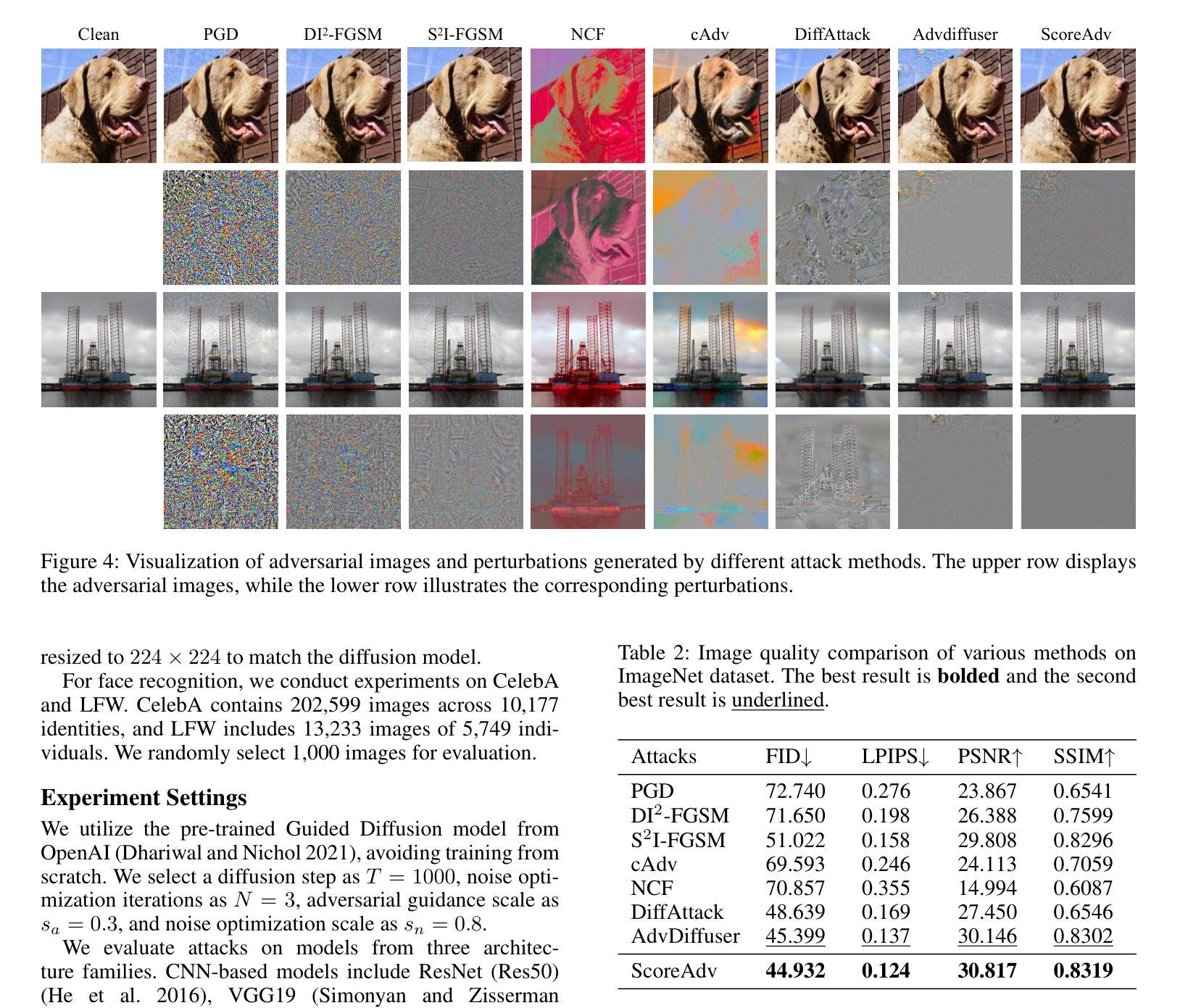

Despite the success of deep learning across various domains, it remains vulnerable to adversarial attacks. Although many existing adversarial attack methods achieve high success rates, they typically rely on $\ell_{p}$-norm perturbation constraints, which do not align with human perceptual capabilities. Consequently, researchers have shifted their focus toward generating natural, unrestricted adversarial examples (UAEs). GAN-based approaches suffer from inherent limitations, such as poor image quality due to instability and mode collapse. Meanwhile, diffusion models have been employed for UAE generation, but they still rely on iterative PGD perturbation injection, without fully leveraging their central denoising capabilities. In this paper, we introduce a novel approach for generating UAEs based on diffusion models, named ScoreAdv. This method incorporates an interpretable adversarial guidance mechanism to gradually shift the sampling distribution towards the adversarial distribution, while using an interpretable saliency map to inject the visual information of a reference image into the generated samples. Notably, our method is capable of generating an unlimited number of natural adversarial examples and can attack not only classification models but also retrieval models. We conduct extensive experiments on ImageNet and CelebA datasets, validating the performance of ScoreAdv across ten target models in both black-box and white-box settings. Our results demonstrate that ScoreAdv achieves state-of-the-art attack success rates and image quality. Furthermore, the dynamic balance between denoising and adversarial perturbation enables ScoreAdv to remain robust even under defensive measures.

尽管深度学习在各个领域都取得了成功,但它仍然容易受到对抗性攻击的影响。尽管现有的许多对抗性攻击方法达到了高成功率,但它们通常依赖于$\ell_{p}$范数扰动约束,这些约束并不符合人类的感知能力。因此,研究人员已将重点转向生成自然、无限制的对抗性样本(UAEs)。基于GAN的方法存在固有的局限性,例如由于不稳定和模式崩溃导致的图像质量差。同时,虽然扩散模型已被用于UAE生成,但它们仍然依赖于迭代PGD扰动注入,并没有充分利用其核心的降噪能力。在本文中,我们介绍了一种基于扩散模型生成UAEs的新型方法,名为ScoreAdv。该方法结合了一种可解释的对抗性指导机制,使采样分布逐渐转向对抗性分布,同时使用可解释显著图将参考图像的可视信息注入到生成的样本中。值得注意的是,我们的方法能够生成无数自然的对抗性样本,并且可以攻击不仅分类模型,还可以攻击检索模型。我们在ImageNet和CelebA数据集上进行了大量实验,在黑名单和白名单设置中对十个目标模型验证了ScoreAdv的性能。我们的结果表明,ScoreAdv达到了最先进的攻击成功率和图像质量。此外,降噪和对抗性扰动之间的动态平衡使ScoreAdv在防御措施下仍能保持稳定。

论文及项目相关链接

Summary

深度学习方法尽管在多个领域取得了成功,但仍面临对抗性攻击的威胁。现有的攻击方法多数基于$\ell_{p}$范数扰动约束,不符合人类感知能力。为生成更自然的无限制对抗样本(UAEs),研究者已尝试使用GAN和扩散模型。本文提出一种基于扩散模型的新方法ScoreAdv,结合可解释的对抗性指导机制,逐步改变采样分布并注入参考图像的可视信息。ScoreAdv能生成无限数量的自然对抗样本,不仅能攻击分类模型,还能攻击检索模型。在ImageNet和CelebA数据集上的实验验证了ScoreAdv在黑白盒设置中的卓越性能。

Key Takeaways

- 深度学习方法易受对抗性攻击的影响。

- 现有攻击方法多数基于$\ell_{p}$范数扰动,不符合人类感知。

- 研究者致力于生成更自然的无限制对抗样本(UAEs)。

- ScoreAdv是一种基于扩散模型的新方法,能生成无限数量的自然对抗样本。

- ScoreAdv结合可解释的对抗性指导机制,逐步改变采样分布并注入参考图像信息。

- ScoreAdv不仅能攻击分类模型,还能攻击检索模型。

点此查看论文截图