⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-07-12 更新

GGTalker: Talking Head Systhesis with Generalizable Gaussian Priors and Identity-Specific Adaptation

Authors:Wentao Hu, Shunkai Li, Ziqiao Peng, Haoxian Zhang, Fan Shi, Xiaoqiang Liu, Pengfei Wan, Di Zhang, Hui Tian

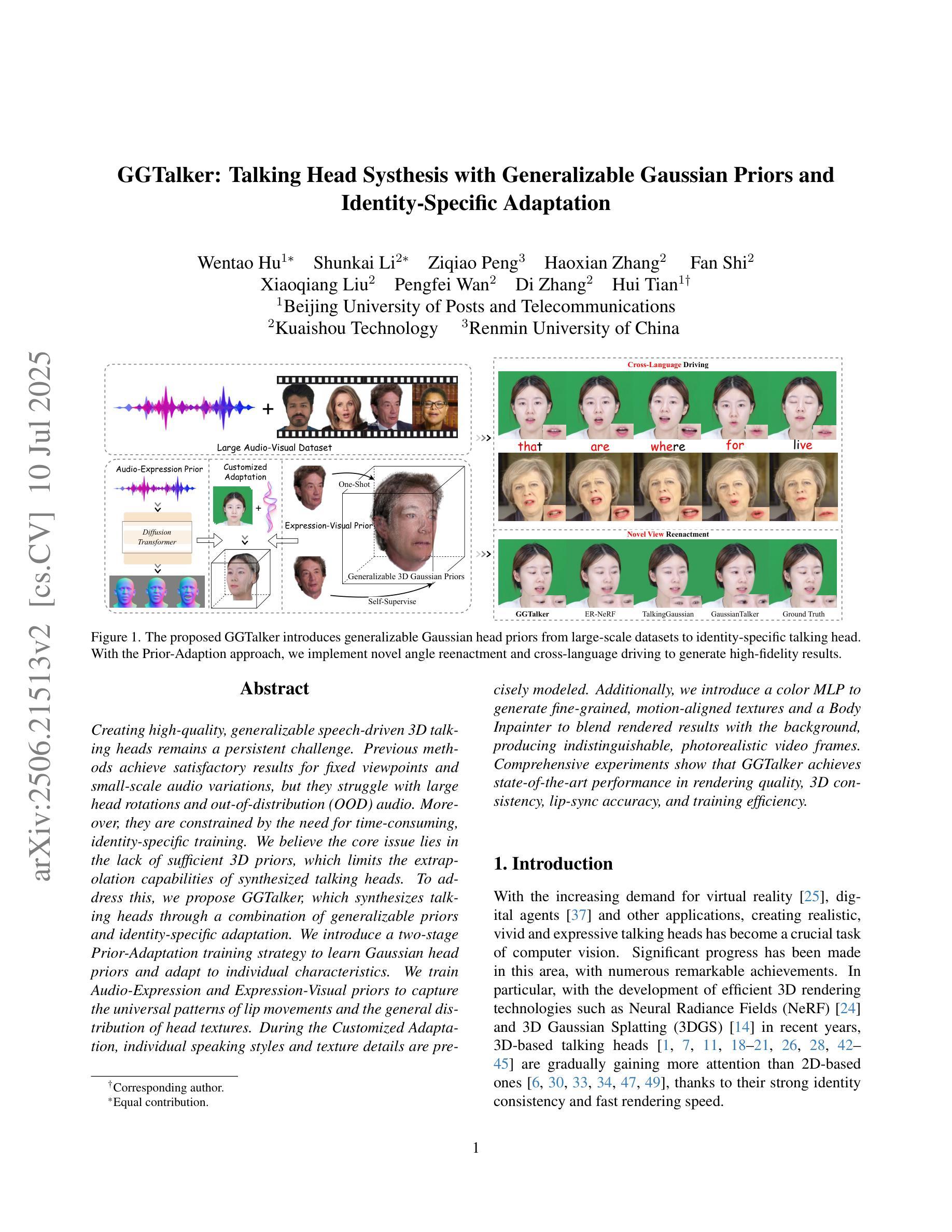

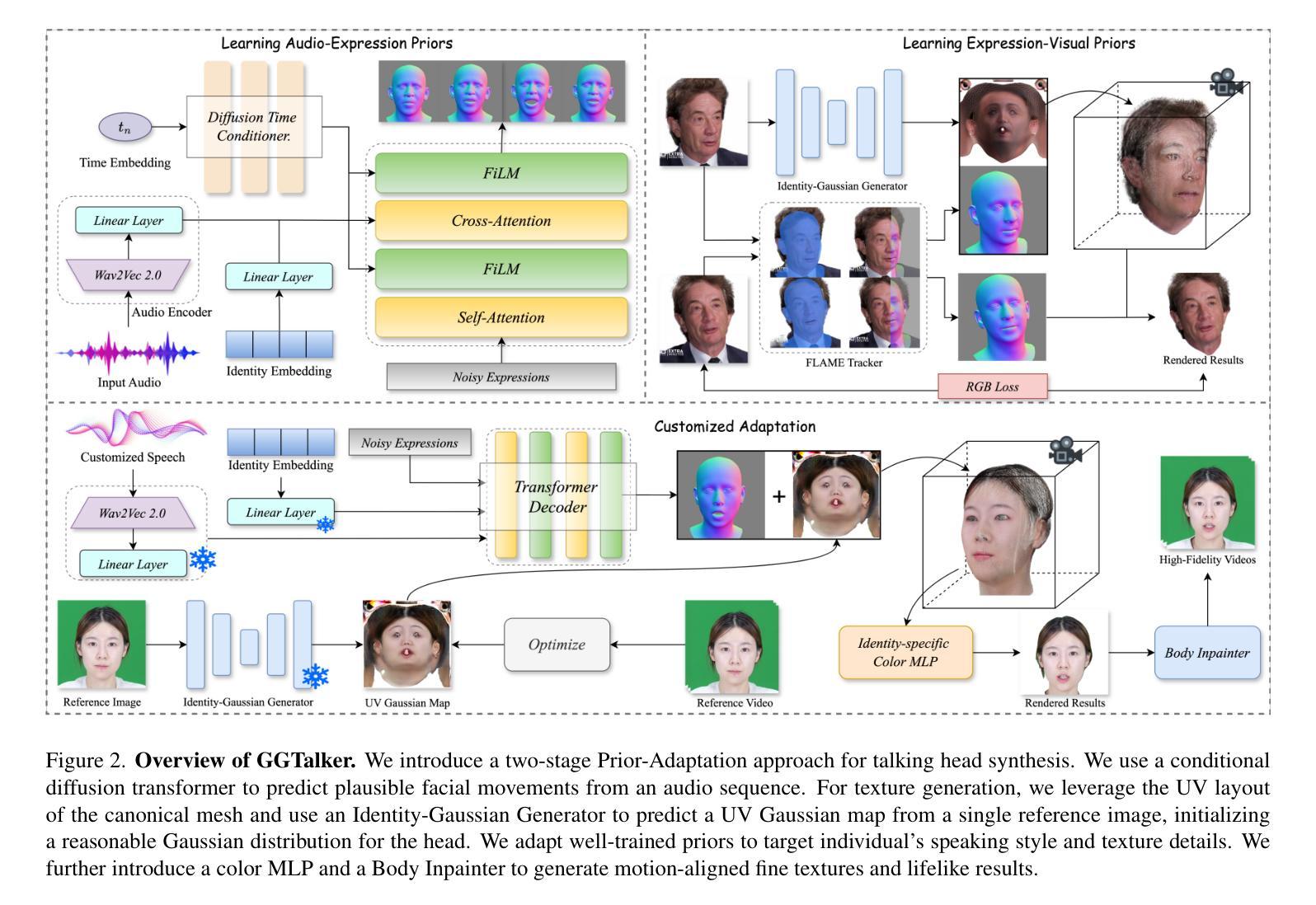

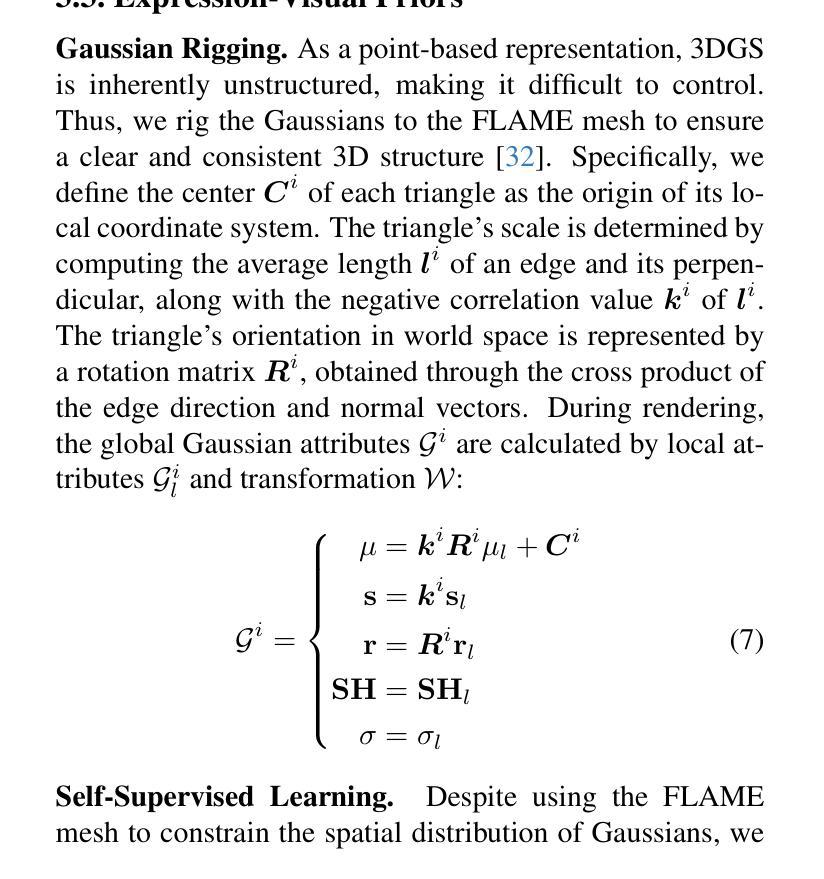

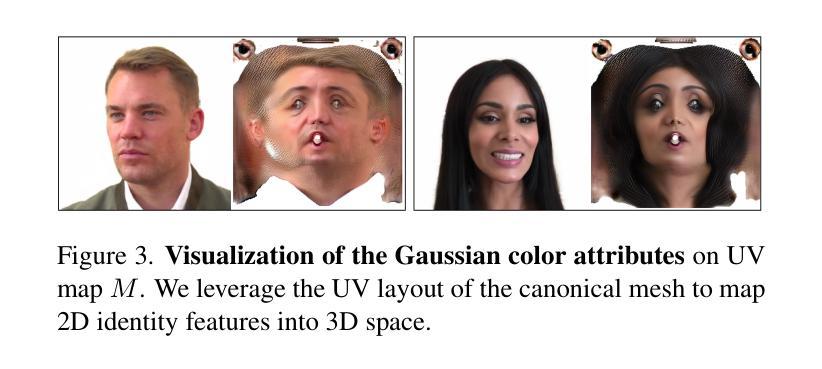

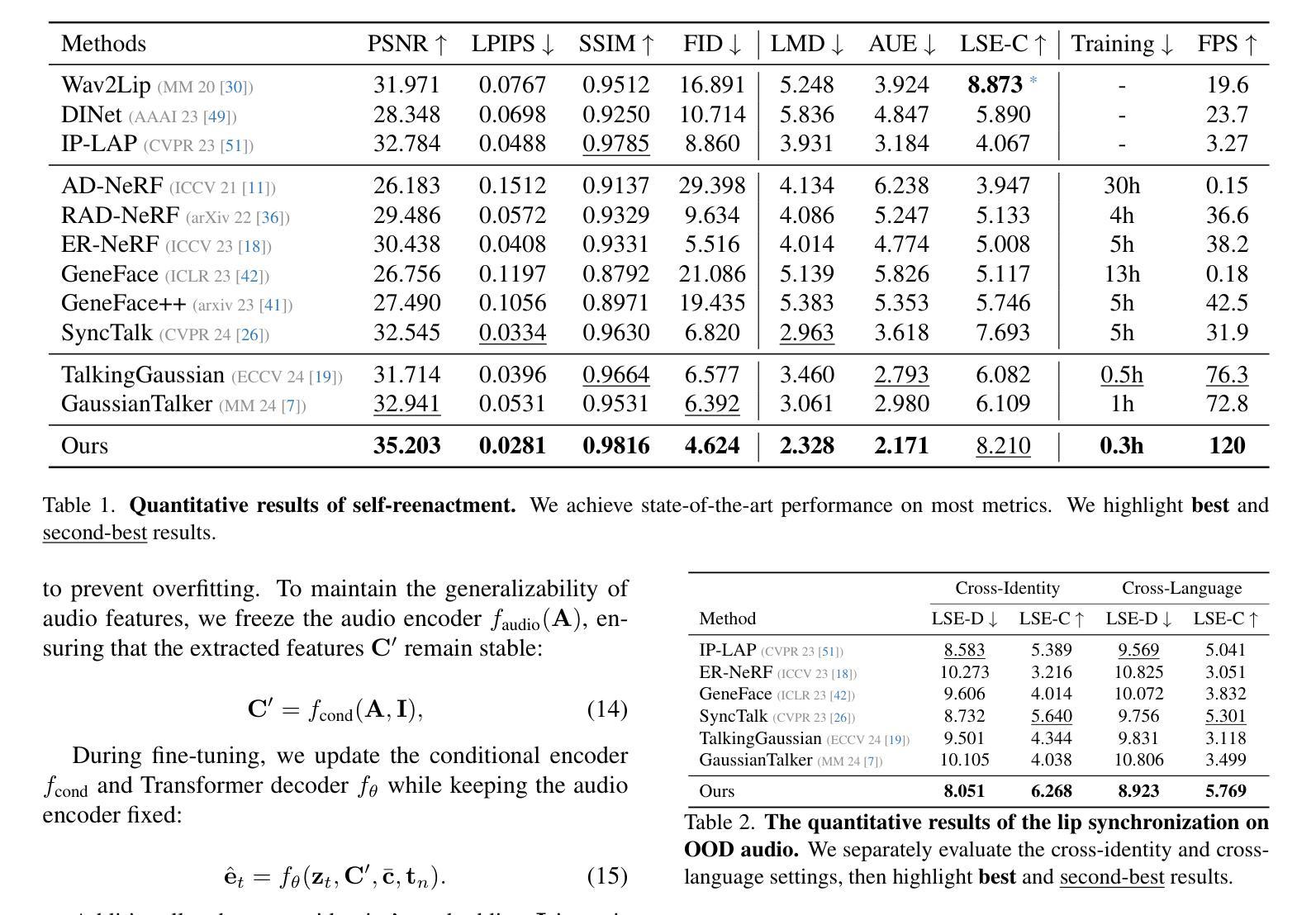

Creating high-quality, generalizable speech-driven 3D talking heads remains a persistent challenge. Previous methods achieve satisfactory results for fixed viewpoints and small-scale audio variations, but they struggle with large head rotations and out-of-distribution (OOD) audio. Moreover, they are constrained by the need for time-consuming, identity-specific training. We believe the core issue lies in the lack of sufficient 3D priors, which limits the extrapolation capabilities of synthesized talking heads. To address this, we propose GGTalker, which synthesizes talking heads through a combination of generalizable priors and identity-specific adaptation. We introduce a two-stage Prior-Adaptation training strategy to learn Gaussian head priors and adapt to individual characteristics. We train Audio-Expression and Expression-Visual priors to capture the universal patterns of lip movements and the general distribution of head textures. During the Customized Adaptation, individual speaking styles and texture details are precisely modeled. Additionally, we introduce a color MLP to generate fine-grained, motion-aligned textures and a Body Inpainter to blend rendered results with the background, producing indistinguishable, photorealistic video frames. Comprehensive experiments show that GGTalker achieves state-of-the-art performance in rendering quality, 3D consistency, lip-sync accuracy, and training efficiency.

创建高质量、可推广的语音驱动3D说话头仍然是一个持续性的挑战。之前的方法对于固定观点和小规模音频变化取得了令人满意的结果,但它们在处理大头部旋转和离群(OOD)音频时遇到了困难。此外,它们受到需要耗时、特定身份训练的限制。我们认为核心问题在于缺乏足够的3D先验知识,这限制了合成说话头的推广能力。为了解决这一问题,我们提出了GGTalker,它结合了可推广的先验知识和特定身份的适应,合成说话头。我们引入了两阶段Prior-Adaptation训练策略来学习高斯头部先验知识并适应个人特征。我们训练了音频表达与表情视觉先验来捕捉唇动的通用模式和头部纹理的一般分布。在个性化适应过程中,个人的讲话风格和纹理细节得到了精确建模。此外,我们引入了一个颜色MLP来生成精细的、与运动对齐的纹理和一个Body Inpainter来将渲染结果与背景混合,生成难以区分、逼真的视频帧。综合实验表明,GGTalker在渲染质量、3D一致性、唇同步准确性和训练效率方面达到了最新技术水平。

论文及项目相关链接

PDF ICCV 2025, Project page: https://vincenthu19.github.io/GGTalker/

Summary

本文提出一种名为GGTalker的方法,通过结合通用先验知识和个性化适应,合成高质量、可推广的3D说话头。为解决核心问题——缺乏足够的3D先验知识,限制了合成说话头的外推能力,引入了两阶段Prior-Adaptation训练策略,学习高斯头部先验并适应个体特征。同时,训练音频表达与视觉表达先验,捕捉唇部运动的通用模式与头部纹理的一般分布。在个性化适应阶段,精确建模个人说话风格和纹理细节。此外,引入颜色MLP生成精细、动态对齐的纹理,以及Body Inpainter将渲染结果与背景融合,生成难以区分的、逼真的视频帧。实验表明,GGTalker在渲染质量、3D一致性、唇形同步精度和训练效率方面达到领先水平。

Key Takeaways

- GGTalker通过结合通用先验知识和个性化适应,提高了3D说话头的质量和可推广性。

- 引入两阶段Prior-Adaptation训练策略,学习高斯头部先验并适应个体特征。

- 训练音频表达与视觉表达先验,以捕捉唇部运动的通用模式与头部纹理的一般分布。

- 在个性化适应阶段,精确建模个人说话风格和纹理细节。

- 引入颜色MLP生成精细、动态对齐的纹理。

- Body Inpainter技术用于将渲染结果与背景融合,生成逼真的视频帧。

点此查看论文截图