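⚠️ All summaries below were generated by a large language model. They may contain errors, are for reference only, and should be used with caution.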

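🔴 Note: do not use these summaries in serious academic settings. They are suitable only for initial screening before reading a paper.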

Updated 2025-07-15

RegGS: Unposed Sparse Views Gaussian Splatting with 3DGS Registration

Authors:Chong Cheng, Yu Hu, Sicheng Yu, Beizhen Zhao, Zijian Wang, Hao Wang

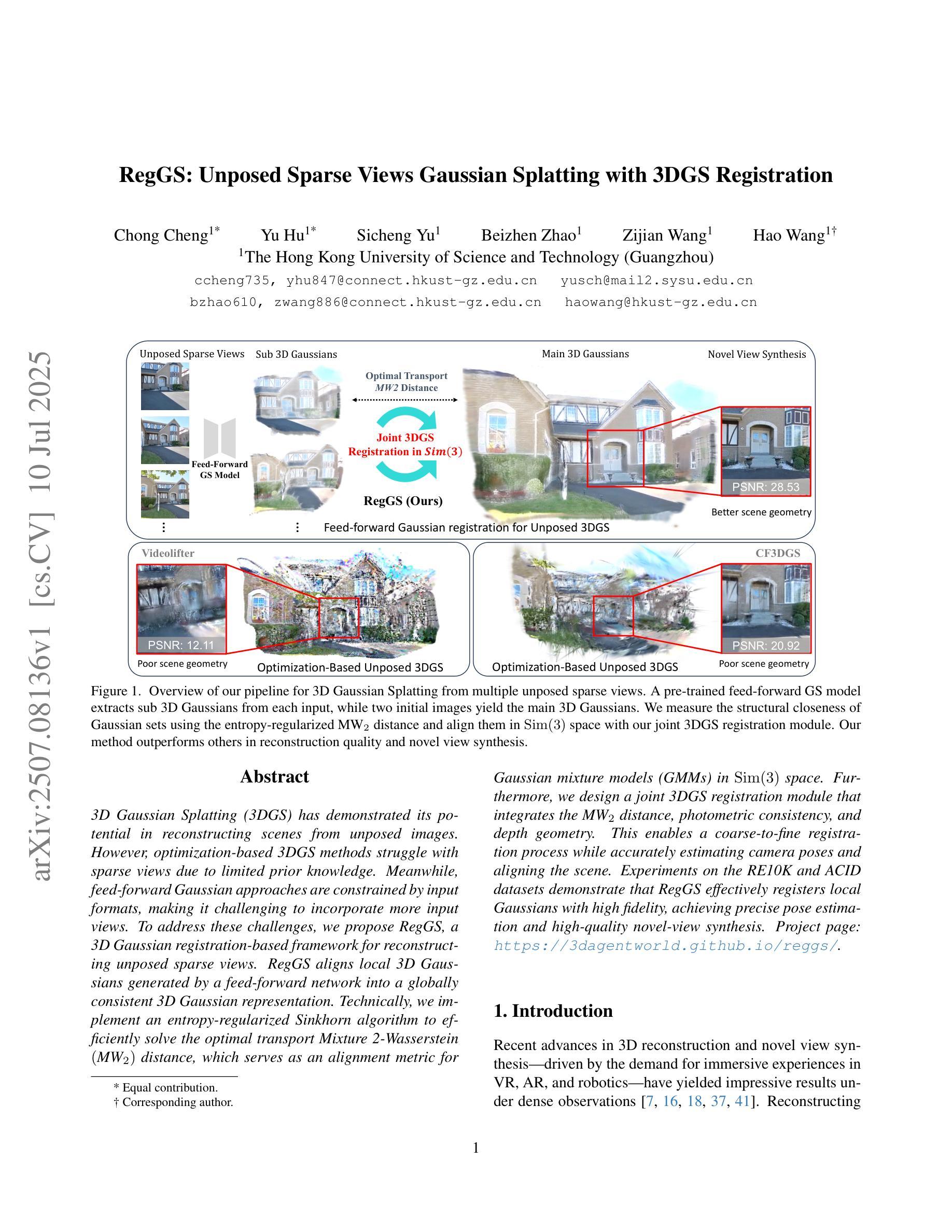

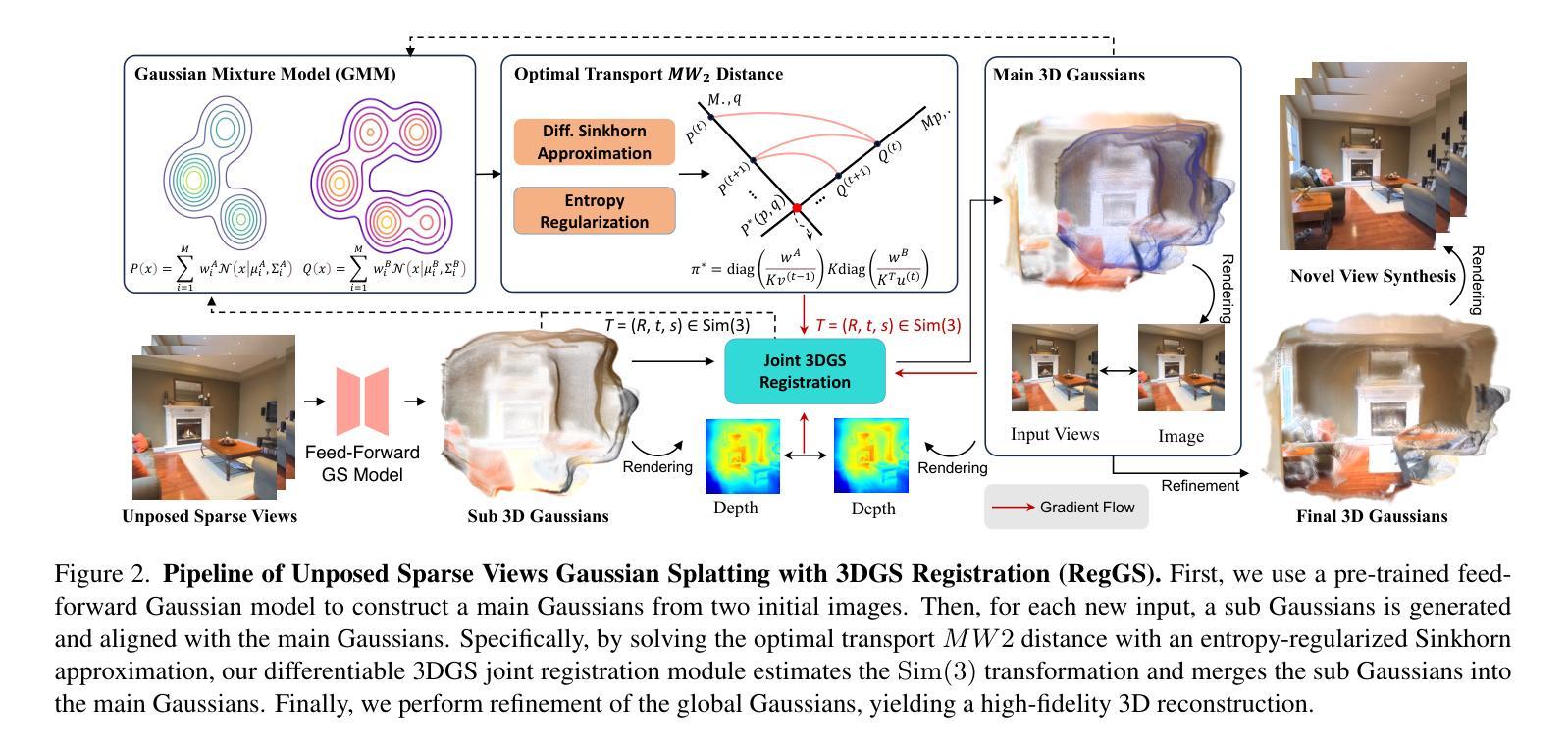

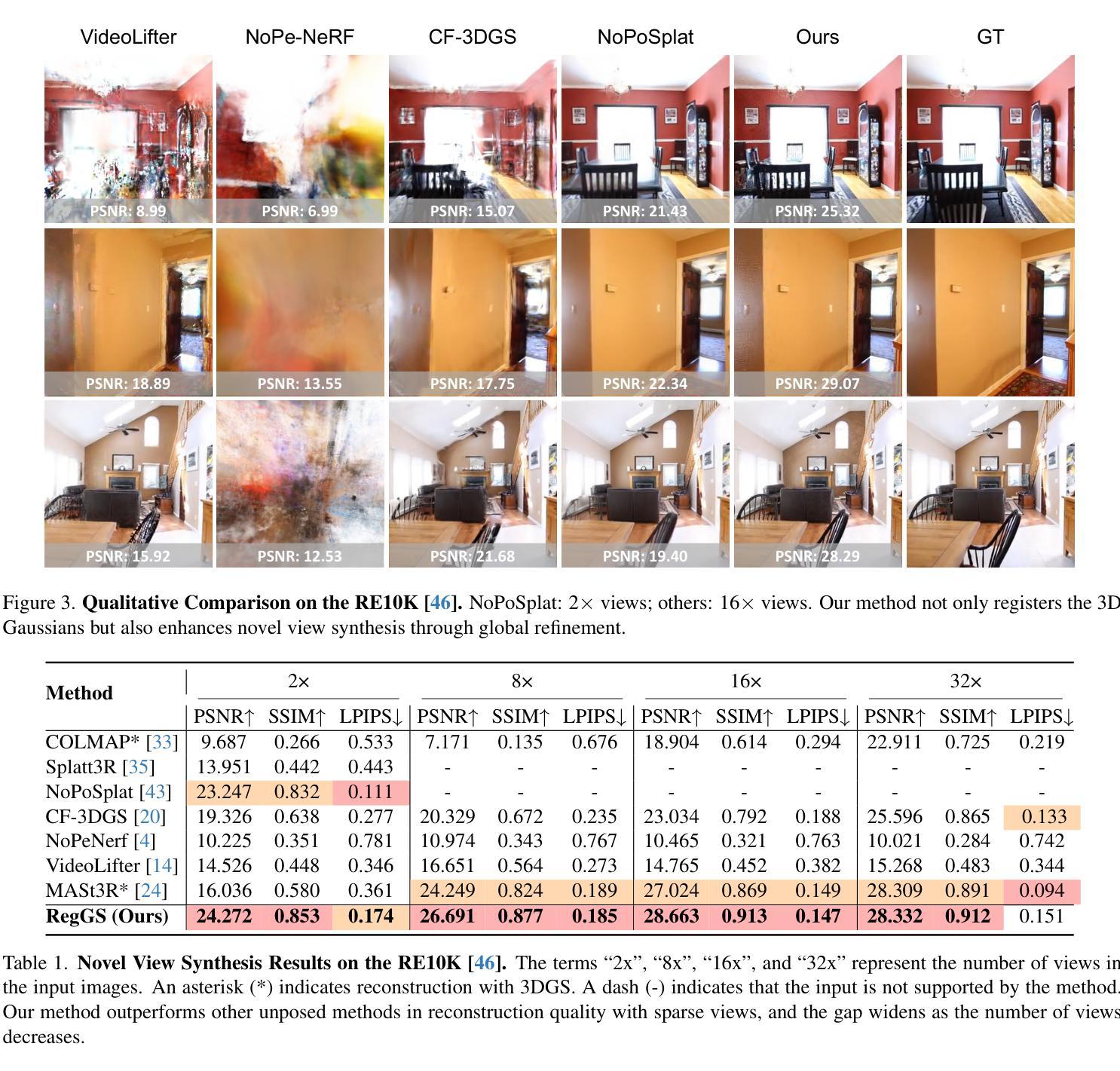

3D Gaussian Splatting (3DGS) has demonstrated its potential in reconstructing scenes from unposed images. However, optimization-based 3DGS methods struggle with sparse views due to limited prior knowledge. Meanwhile, feed-forward Gaussian approaches are constrained by input formats, making it challenging to incorporate more input views. To address these challenges, we propose RegGS, a 3D Gaussian registration-based framework for reconstructing unposed sparse views. RegGS aligns local 3D Gaussians generated by a feed-forward network into a globally consistent 3D Gaussian representation. Technically, we implement an entropy-regularized Sinkhorn algorithm to efficiently solve the optimal transport Mixture 2-Wasserstein $(\text{MW}_2)$ distance, which serves as an alignment metric for Gaussian mixture models (GMMs) in $\mathrm{Sim}(3)$ space. Furthermore, we design a joint 3DGS registration module that integrates the $\text{MW}_2$ distance, photometric consistency, and depth geometry. This enables a coarse-to-fine registration process while accurately estimating camera poses and aligning the scene. Experiments on the RE10K and ACID datasets demonstrate that RegGS effectively registers local Gaussians with high fidelity, achieving precise pose estimation and high-quality novel-view synthesis. Project page: https://3dagentworld.github.io/reggs/.

Paper and project links

PDF Accepted to ICCV 2025

Summary

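This paper presents RegGS, a 3D Gaussian registration framework for reconstructing scenes from unposed sparse views. It targets two limitations: optimization-based 3DGS methods struggle with sparse views, and feed-forward Gaussian approaches cannot easily take in more input views. RegGS aligns the local 3D Gaussians produced by a feed-forward network into a globally consistent 3D Gaussian representation. An entropy-regularized Sinkhorn algorithm solves the optimal-transport Mixture 2-Wasserstein ($\text{MW}_2$) distance, which serves as the alignment metric for Gaussian mixture models (GMMs) in $\mathrm{Sim}(3)$ space. A joint 3DGS registration module integrates the $\text{MW}_2$ distance, photometric consistency, and depth geometry, giving a coarse-to-fine registration process that estimates camera poses and aligns the scene. Experiments on RE10K and ACID show that RegGS registers local Gaussians effectively, with precise pose estimation and high-quality novel-view synthesis.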

Key Takeaways

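- RegGS is a 3D Gaussian registration framework for reconstructing scenes from unposed sparse views.

- It addresses the weakness of optimization-based 3DGS methods on sparse views and the input-format constraints of feed-forward Gaussian approaches.

- RegGS aligns local 3D Gaussians into a globally consistent 3D Gaussian representation.

- An entropy-regularized Sinkhorn algorithm solves the optimal-transport Mixture 2-Wasserstein ($\text{MW}_2$) distance, which is used to align Gaussian mixture models.

- A joint 3DGS registration module integrates the $\text{MW}_2$ distance, photometric consistency, and depth geometry.

- RegGS performs coarse-to-fine registration, accurately estimating camera poses and aligning the scene.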
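
To make the $\text{MW}_2$ idea concrete, here is a minimal NumPy sketch of an entropy-regularized Sinkhorn solve between two Gaussian mixtures, using the closed-form 2-Wasserstein distance between Gaussians as the ground cost. It is not the authors' implementation: the function names, `eps`, and `iters` are illustrative assumptions, there is no log-domain stabilization, and the $\mathrm{Sim}(3)$ optimization that would sit on top of this metric is left out.

```python
import numpy as np

def sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def gaussian_w2_sq(m1, s1, m2, s2):
    """Closed-form squared 2-Wasserstein distance between N(m1, s1) and N(m2, s2)."""
    r1 = sqrtm_psd(s1)
    cross = sqrtm_psd(r1 @ s2 @ r1)
    return float(np.sum((m1 - m2) ** 2) + np.trace(s1 + s2 - 2.0 * cross))

def sinkhorn_mw2_sq(w_a, mu_a, cov_a, w_b, mu_b, cov_b, eps=0.05, iters=500):
    """Entropy-regularized estimate of the squared MW2 distance between two GMMs.

    Returns the transport cost and the (len(w_a), len(w_b)) transport plan.
    Hypothetical helper: plain (not log-domain) Sinkhorn, so very large
    cost / eps ratios can underflow.
    """
    w_a = np.asarray(w_a, dtype=float)
    w_b = np.asarray(w_b, dtype=float)
    # Pairwise ground cost: squared W2 between every pair of components.
    cost = np.array([[gaussian_w2_sq(ma, sa, mb, sb)
                      for mb, sb in zip(mu_b, cov_b)]
                     for ma, sa in zip(mu_a, cov_a)])
    kernel = np.exp(-cost / eps)
    u = np.ones_like(w_a)
    v = np.ones_like(w_b)
    for _ in range(iters):
        u = w_a / (kernel @ v)    # match row marginals (weights of mixture A)
        v = w_b / (kernel.T @ u)  # match column marginals (weights of mixture B)
    plan = u[:, None] * kernel * v[None, :]
    return float(np.sum(plan * cost)), plan
```

In a registration setting, a distance like this would be re-evaluated as the candidate transform moves one mixture's means and covariances; how RegGS does that is described in the paper, not here.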

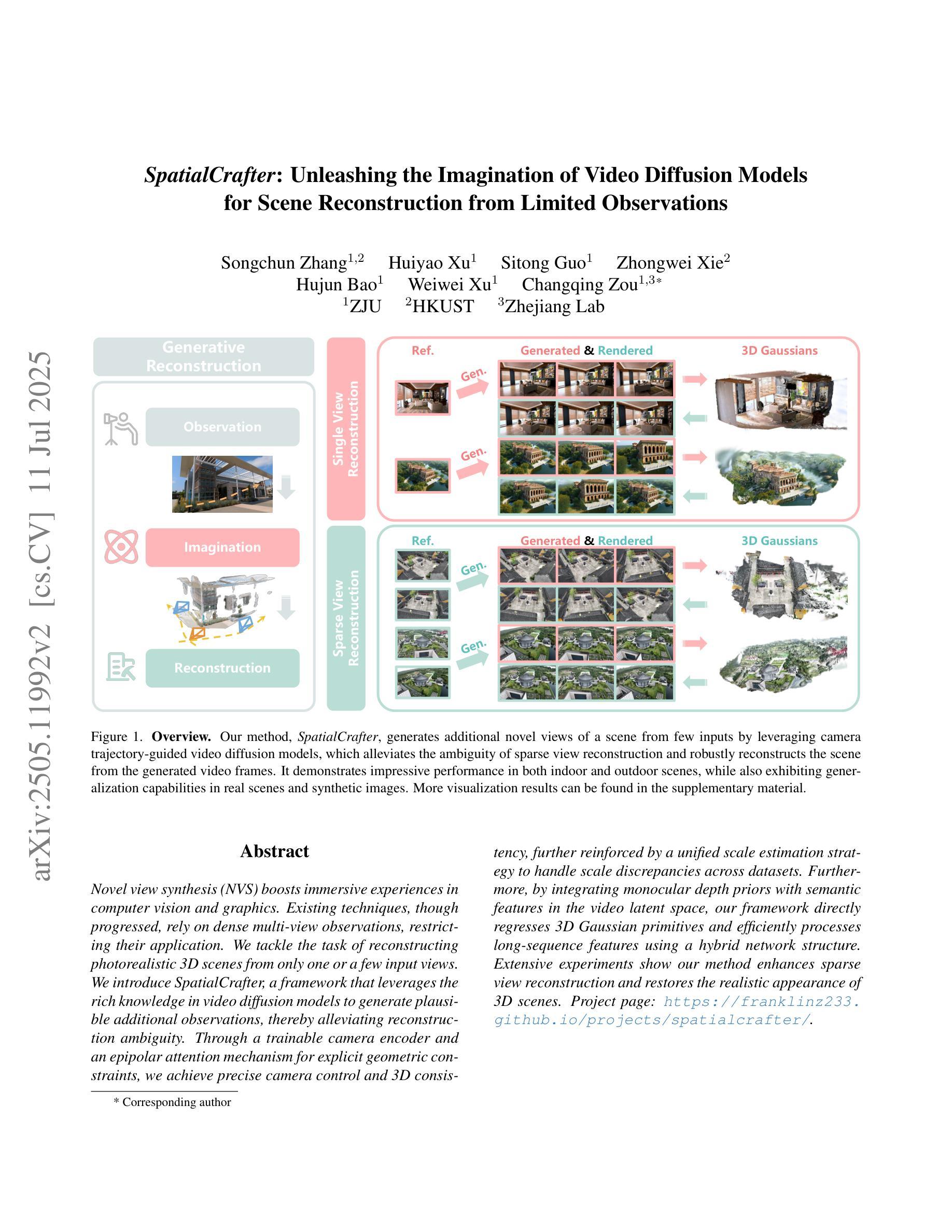

SpatialCrafter: Unleashing the Imagination of Video Diffusion Models for Scene Reconstruction from Limited Observations

Authors:Songchun Zhang, Huiyao Xu, Sitong Guo, Zhongwei Xie, Hujun Bao, Weiwei Xu, Changqing Zou

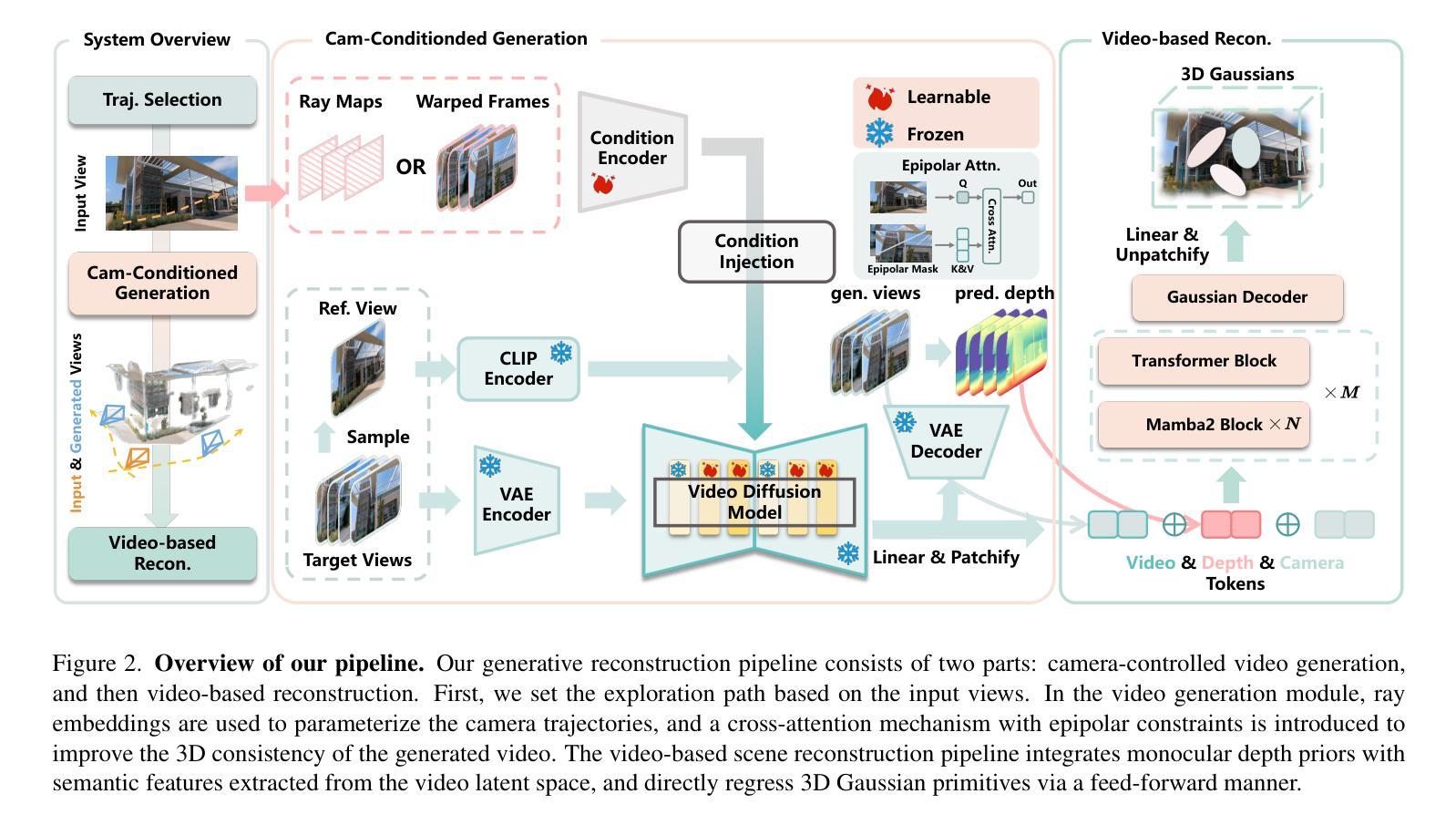

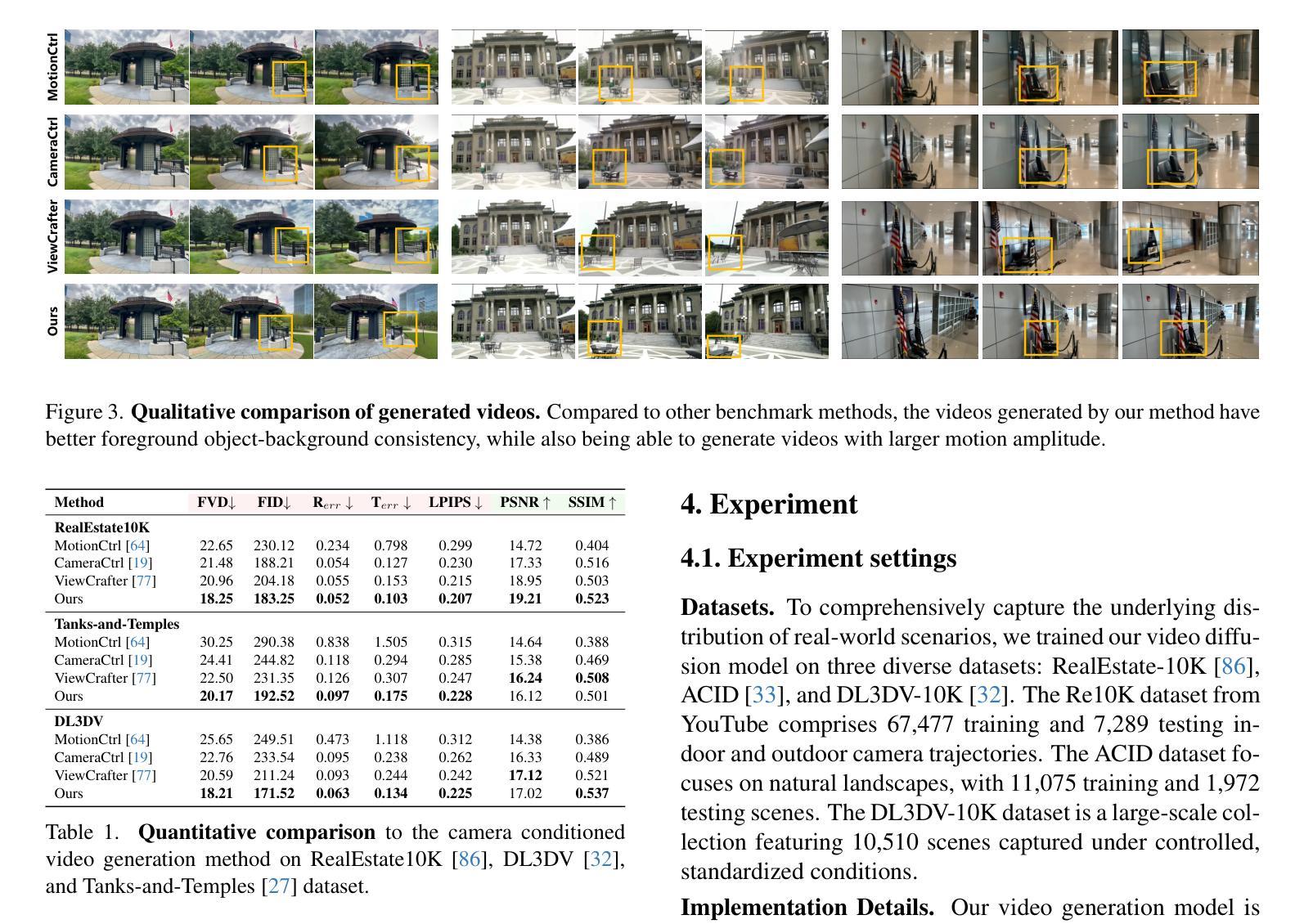

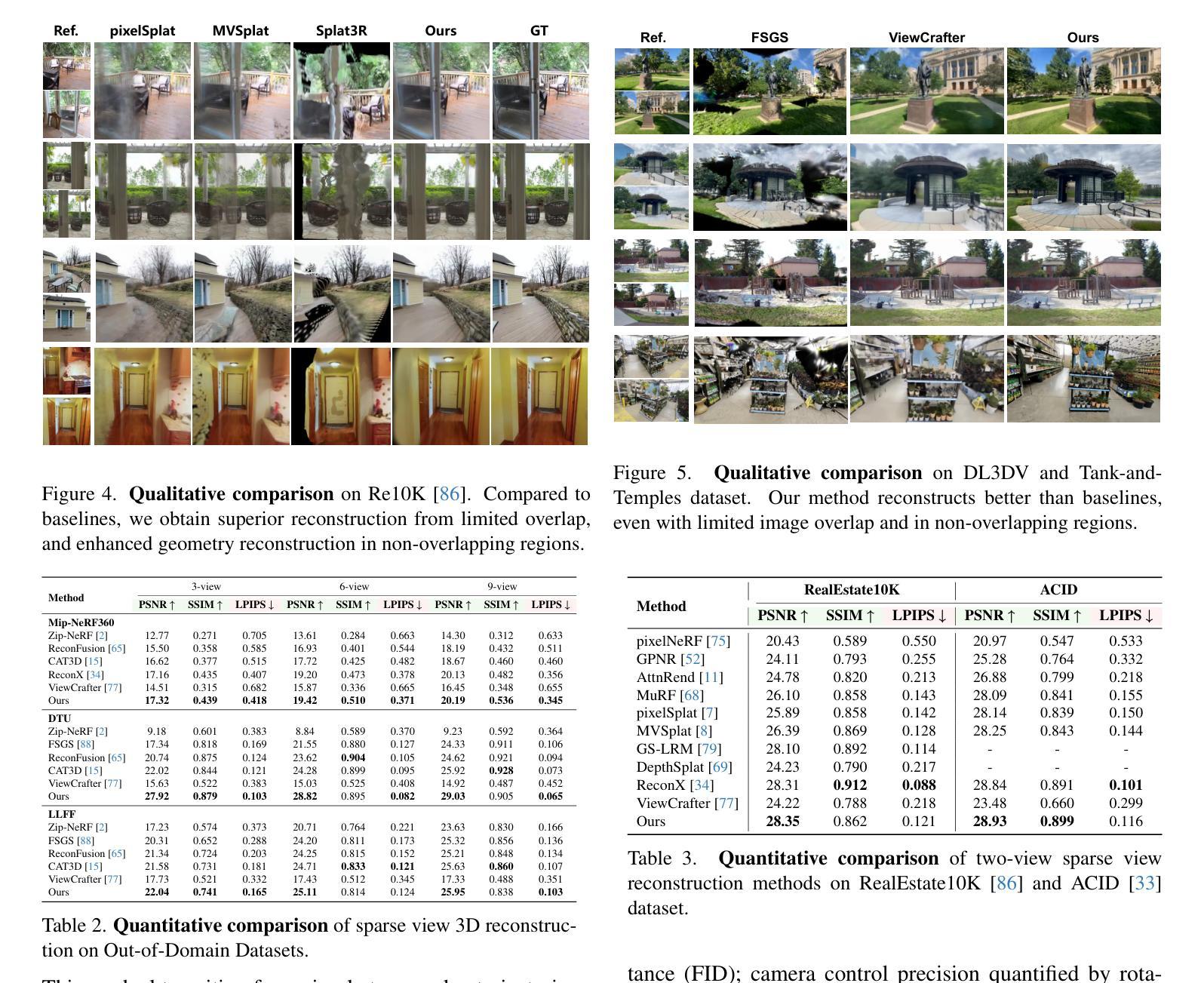

Novel view synthesis (NVS) boosts immersive experiences in computer vision and graphics. Existing techniques, though progressed, rely on dense multi-view observations, restricting their application. This work takes on the challenge of reconstructing photorealistic 3D scenes from sparse or single-view inputs. We introduce SpatialCrafter, a framework that leverages the rich knowledge in video diffusion models to generate plausible additional observations, thereby alleviating reconstruction ambiguity. Through a trainable camera encoder and an epipolar attention mechanism for explicit geometric constraints, we achieve precise camera control and 3D consistency, further reinforced by a unified scale estimation strategy to handle scale discrepancies across datasets. Furthermore, by integrating monocular depth priors with semantic features in the video latent space, our framework directly regresses 3D Gaussian primitives and efficiently processes long-sequence features using a hybrid network structure. Extensive experiments show our method enhances sparse view reconstruction and restores the realistic appearance of 3D scenes.

Paper and project links

PDF Accepted by ICCV 2025. 12 pages, 9 figures

Summary

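SpatialCrafter reconstructs photorealistic 3D scenes from sparse or single-view inputs by leveraging the rich knowledge in video diffusion models. It generates plausible additional observations, which alleviates reconstruction ambiguity. A trainable camera encoder, an epipolar attention mechanism, and a unified scale estimation strategy together provide precise camera control and 3D consistency. The framework also integrates monocular depth priors with semantic features in the video latent space, directly regresses 3D Gaussian primitives, and processes long-sequence features efficiently with a hybrid network structure. Experiments show that the method improves sparse-view reconstruction and restores the realistic appearance of 3D scenes.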

Key Takeaways

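- Novel view synthesis (NVS) improves immersive experiences in computer vision and graphics, but existing techniques depend on dense multi-view observations.

- SpatialCrafter uses the rich knowledge in video diffusion models to generate plausible additional observations, alleviating reconstruction ambiguity.

- A trainable camera encoder and an epipolar attention mechanism provide precise camera control and 3D consistency.

- A unified scale estimation strategy handles scale discrepancies across datasets.

- The framework integrates monocular depth priors with semantic features and directly regresses 3D Gaussian primitives.

- A hybrid network structure processes long-sequence features efficiently.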
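
The abstract does not spell out how the epipolar attention mechanism is built, so the following is only a sketch of the underlying geometry: given intrinsics and a relative pose, it derives the fundamental matrix and marks which target pixels lie near the epipolar line of each source pixel. A mask like this could be used to restrict or bias cross-view attention. All function names and the `thresh` parameter are assumptions made for illustration.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ x == np.cross(t, x)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental_matrix(k_src, k_tgt, rot, trans):
    """F with x_tgt^T F x_src = 0, assuming X_tgt = rot @ X_src + trans."""
    essential = skew(trans) @ rot
    return np.linalg.inv(k_tgt).T @ essential @ np.linalg.inv(k_src)

def epipolar_mask(pix_src, pix_tgt, fmat, thresh=1.0):
    """Boolean (N_src, N_tgt) mask of target pixels near each source pixel's epipolar line.

    pix_src and pix_tgt are (N, 2) arrays of (u, v) pixel coordinates;
    thresh is the maximum point-to-line distance in pixels.
    """
    src_h = np.hstack([pix_src, np.ones((len(pix_src), 1))])
    tgt_h = np.hstack([pix_tgt, np.ones((len(pix_tgt), 1))])
    lines = src_h @ fmat.T                   # row i is the line F @ x_src_i in the target image
    num = np.abs(lines @ tgt_h.T)            # |l . x_tgt| for every (source, target) pair
    den = np.linalg.norm(lines[:, :2], axis=1, keepdims=True)
    return num / np.maximum(den, 1e-12) <= thresh
```

For a purely horizontal camera translation, the epipolar lines are image rows, so each source pixel is matched only with target pixels at the same height.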

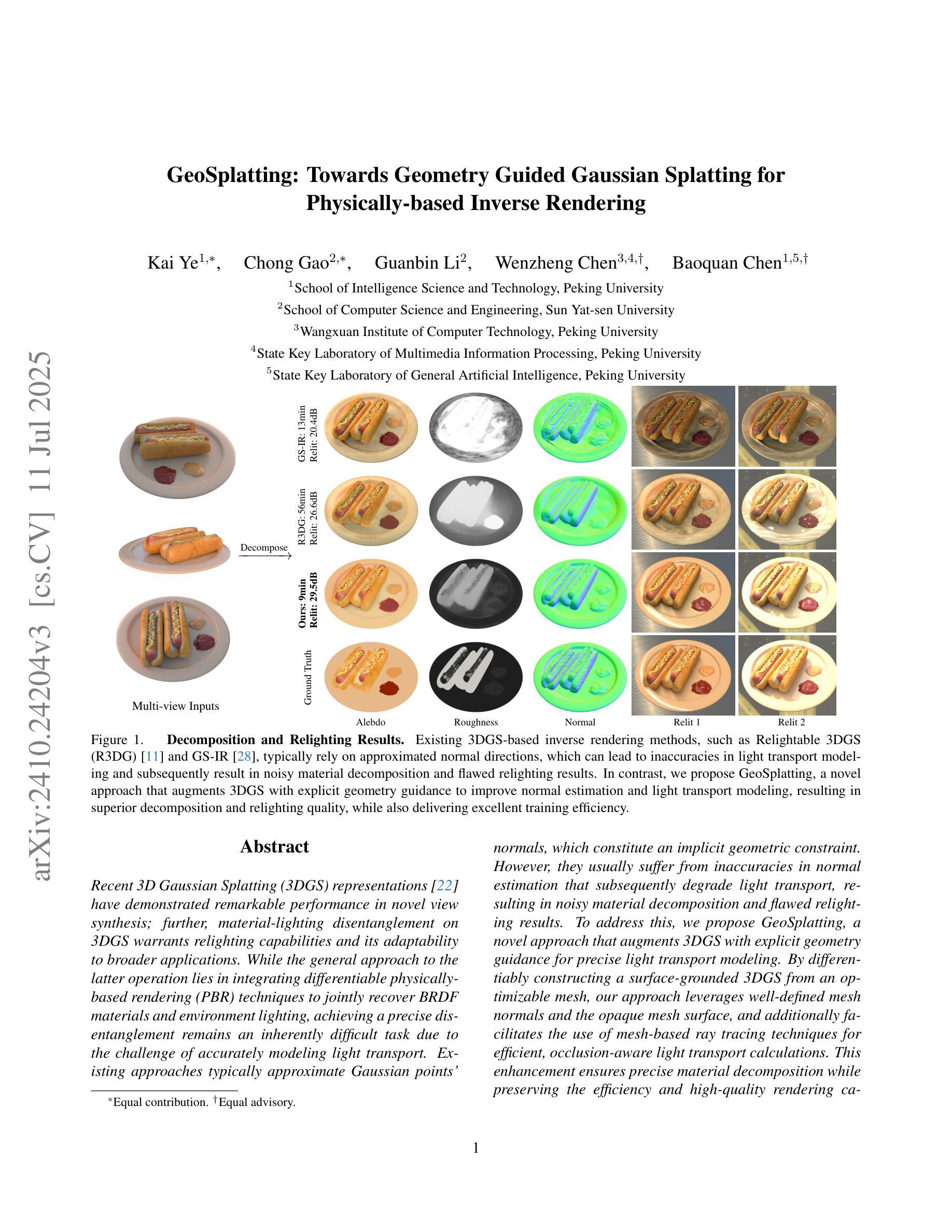

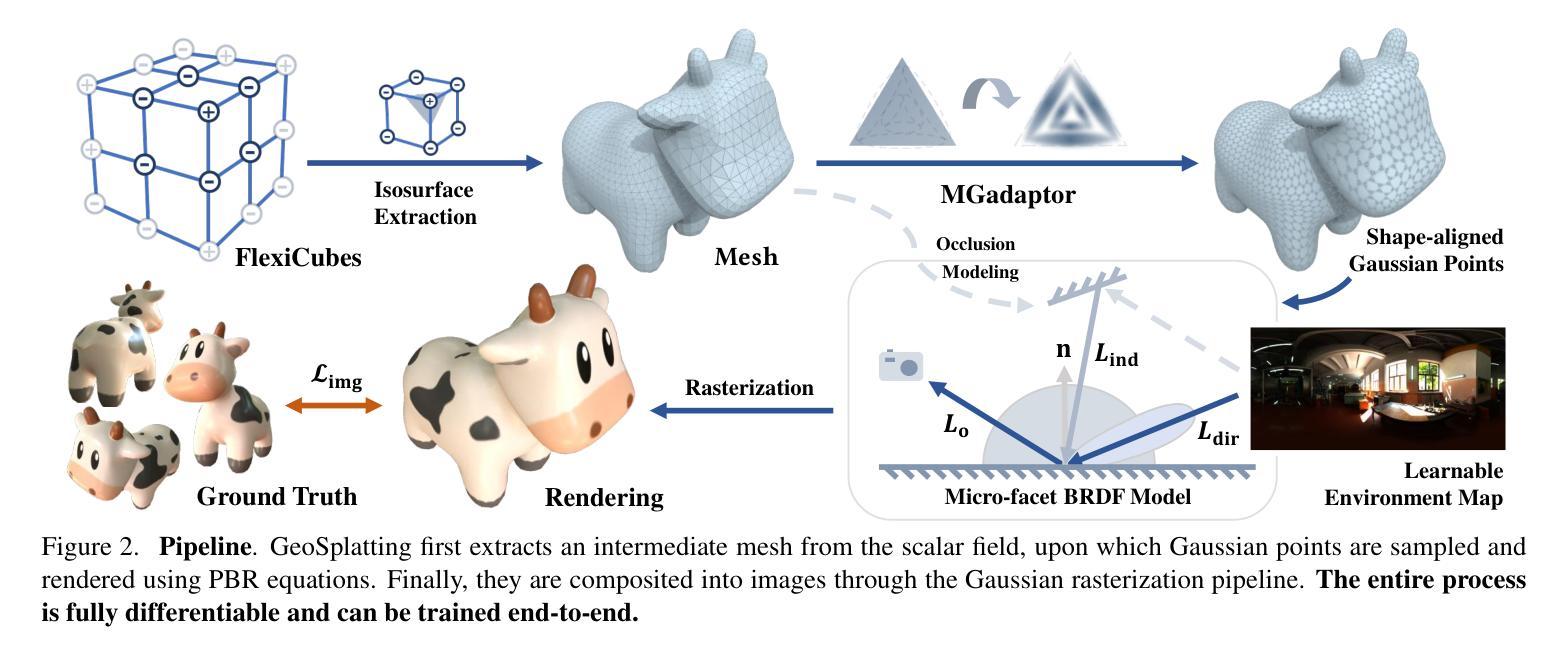

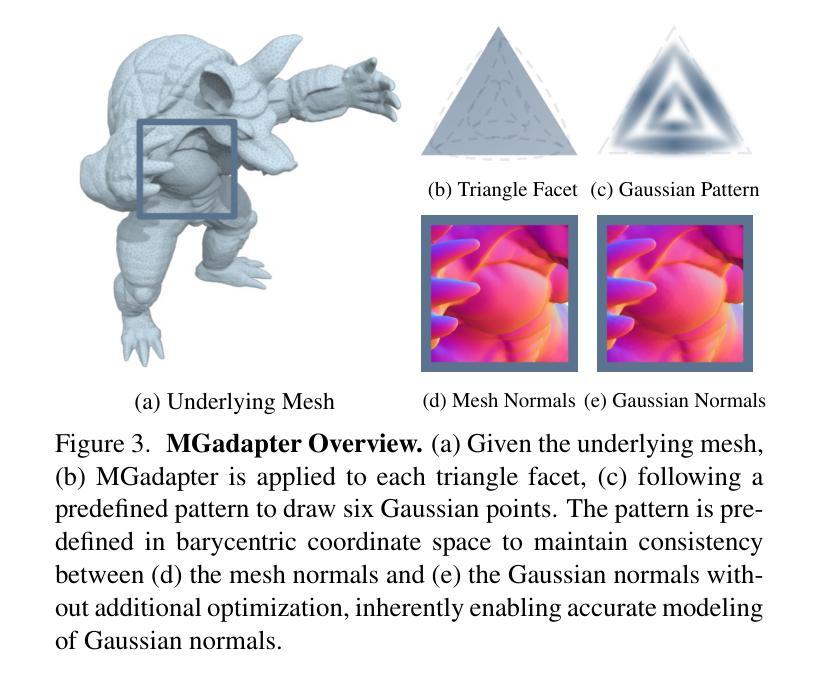

GeoSplatting: Towards Geometry Guided Gaussian Splatting for Physically-based Inverse Rendering

Authors:Kai Ye, Chong Gao, Guanbin Li, Wenzheng Chen, Baoquan Chen

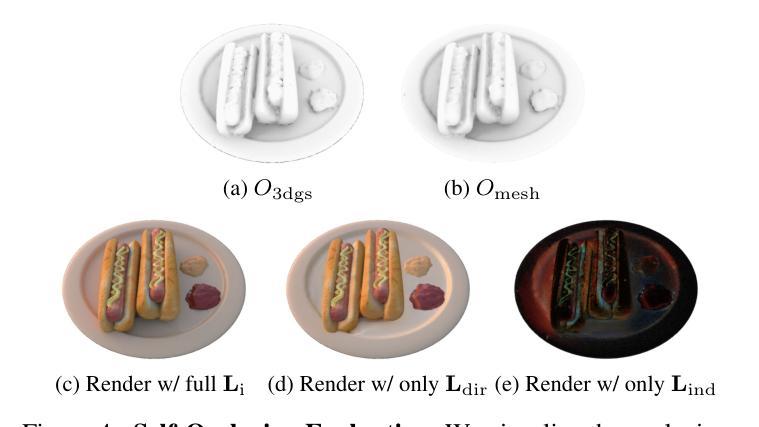

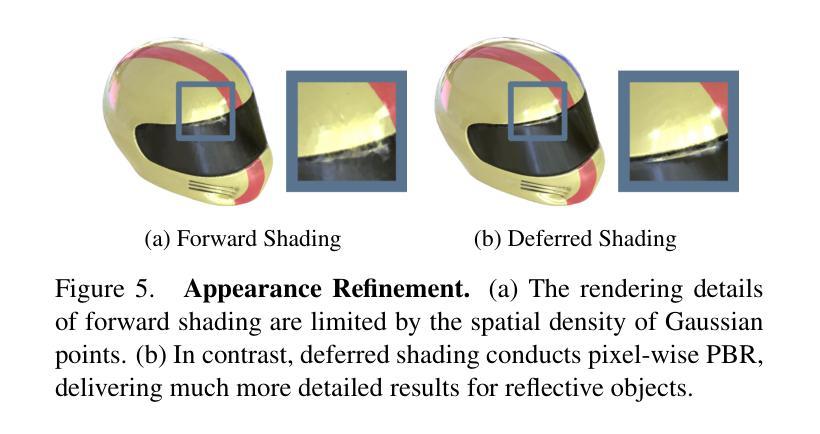

Recent 3D Gaussian Splatting (3DGS) representations have demonstrated remarkable performance in novel view synthesis; further, material-lighting disentanglement on 3DGS warrants relighting capabilities and its adaptability to broader applications. While the general approach to the latter operation lies in integrating differentiable physically-based rendering (PBR) techniques to jointly recover BRDF materials and environment lighting, achieving a precise disentanglement remains an inherently difficult task due to the challenge of accurately modeling light transport. Existing approaches typically approximate Gaussian points’ normals, which constitute an implicit geometric constraint. However, they usually suffer from inaccuracies in normal estimation that subsequently degrade light transport, resulting in noisy material decomposition and flawed relighting results. To address this, we propose GeoSplatting, a novel approach that augments 3DGS with explicit geometry guidance for precise light transport modeling. By differentiably constructing a surface-grounded 3DGS from an optimizable mesh, our approach leverages well-defined mesh normals and the opaque mesh surface, and additionally facilitates the use of mesh-based ray tracing techniques for efficient, occlusion-aware light transport calculations. This enhancement ensures precise material decomposition while preserving the efficiency and high-quality rendering capabilities of 3DGS. Comprehensive evaluations across diverse datasets demonstrate the effectiveness of GeoSplatting, highlighting its superior efficiency and state-of-the-art inverse rendering performance. The project page can be found at https://pku-vcl-geometry.github.io/GeoSplatting/.

Paper and project links

PDF ICCV 2025

Summary

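This paper presents GeoSplatting, a new technique built on 3D Gaussian Splatting (3DGS). It uses explicit geometry guidance to improve light transport modeling, which yields more precise material decomposition and relighting. By differentiably constructing a surface-grounded 3DGS from an optimizable mesh and applying mesh-based ray tracing, it computes light transport efficiently and with occlusion awareness. The approach keeps the efficiency and rendering quality of 3DGS while improving inverse rendering performance. More information is available on the project page.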

Key Takeaways

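- GeoSplatting is a new technique built on 3D Gaussian Splatting (3DGS).

- It uses explicit geometry guidance to improve light transport modeling, giving more accurate material decomposition and relighting.

- It constructs a surface-grounded 3DGS from an optimizable mesh and uses mesh-based ray tracing for efficient, occlusion-aware light transport calculations.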
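
As a rough illustration of what "surface-grounded" Gaussians could look like, the sketch below places one thin Gaussian on each triangle of a mesh and takes its normal from the face. This is a hypothetical scheme, not GeoSplatting's actual construction: the one-Gaussian-per-face layout, the scale heuristics, and the `thickness` parameter are all assumptions. NumPy is not differentiable, so a real pipeline would express the same operations in an autodiff framework to let gradients reach the mesh vertices.

```python
import numpy as np

def gaussians_from_mesh(vertices, faces, thickness=1e-3):
    """Place one flat Gaussian per triangle (illustrative scheme only).

    vertices: (V, 3) positions, faces: (F, 3) vertex indices.
    Returns means (F, 3), unit normals (F, 3), and covariances (F, 3, 3).
    Degenerate (zero-area) triangles are not handled.
    """
    vertices = np.asarray(vertices, dtype=float)
    tri = vertices[np.asarray(faces)]            # (F, 3, 3) triangle corners
    means = tri.mean(axis=1)                     # centroids
    e1 = tri[:, 1] - tri[:, 0]
    e2 = tri[:, 2] - tri[:, 0]
    normals = np.cross(e1, e2)
    normals /= np.linalg.norm(normals, axis=1, keepdims=True)
    # Orthonormal frame per face: two in-plane tangents plus the normal.
    t1 = e1 / np.linalg.norm(e1, axis=1, keepdims=True)
    t2 = np.cross(normals, t1)
    rot = np.stack([t1, t2, normals], axis=2)    # columns are the frame axes
    # In-plane extent follows the triangle size; the normal direction stays thin.
    s1 = np.linalg.norm(e1, axis=1) / 3.0
    s2 = np.abs(np.sum(e2 * t2, axis=1)) / 3.0
    scales = np.stack([s1, s2, np.full_like(s1, thickness)], axis=1)
    diag = scales[:, :, None] ** 2 * np.eye(3)   # (F, 3, 3) diagonal matrices
    cov = rot @ diag @ rot.transpose(0, 2, 1)
    return means, normals, cov
```

Because each Gaussian inherits its normal directly from the face, there is no separate normal estimation step, which is the kind of well-defined geometry the abstract contrasts with approximated per-Gaussian normals.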
