⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-07-17 更新

ScaffoldAvatar: High-Fidelity Gaussian Avatars with Patch Expressions

Authors:Shivangi Aneja, Sebastian Weiss, Irene Baeza, Prashanth Chandran, Gaspard Zoss, Matthias Nießner, Derek Bradley

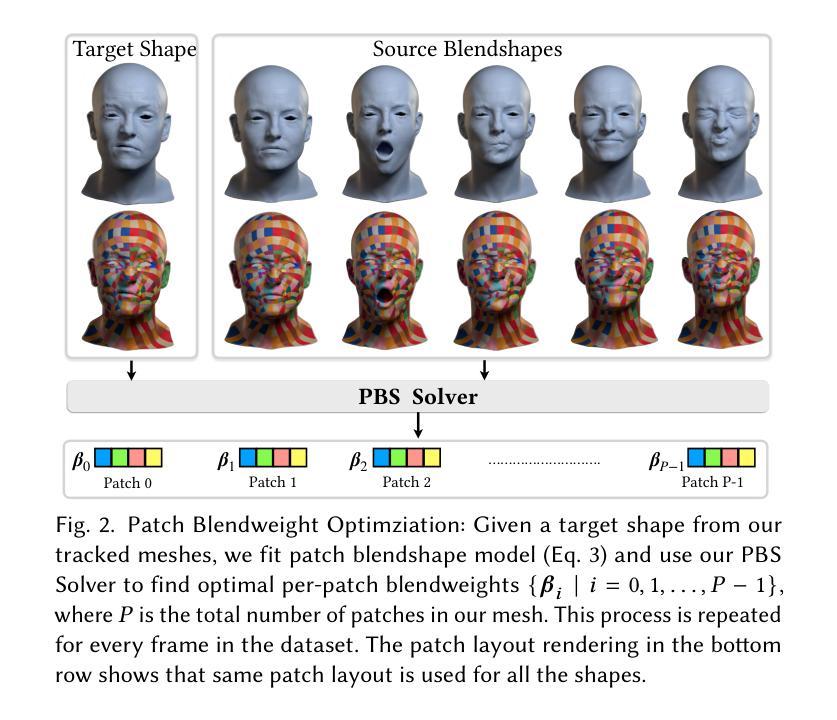

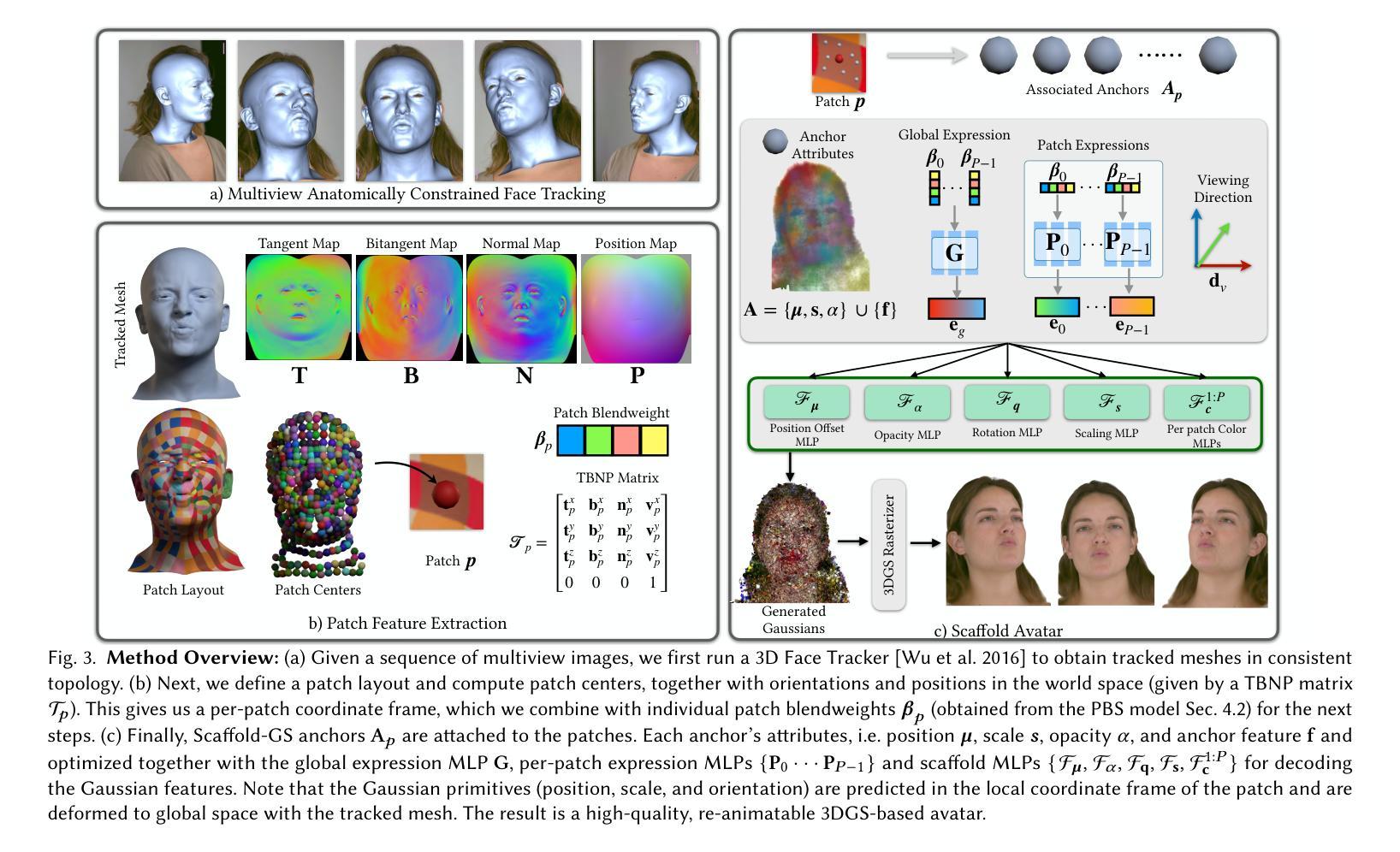

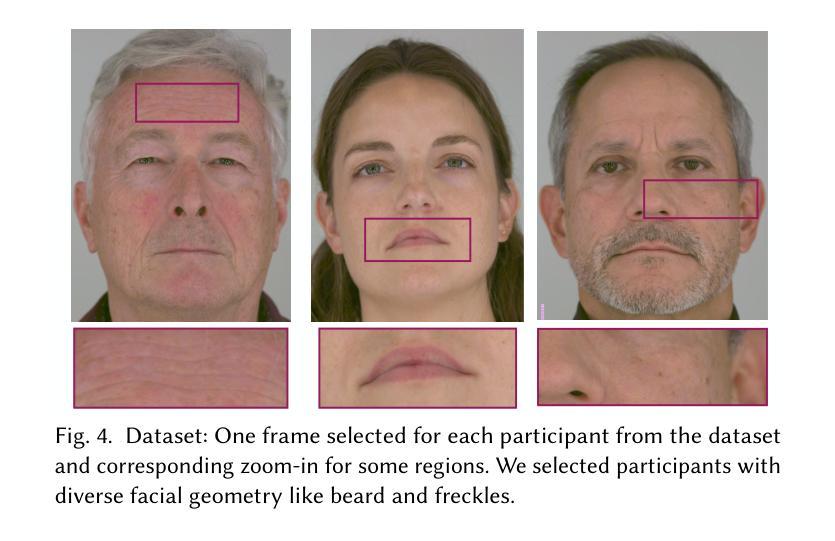

Generating high-fidelity real-time animated sequences of photorealistic 3D head avatars is important for many graphics applications, including immersive telepresence and movies. This is a challenging problem particularly when rendering digital avatar close-ups for showing character’s facial microfeatures and expressions. To capture the expressive, detailed nature of human heads, including skin furrowing and finer-scale facial movements, we propose to couple locally-defined facial expressions with 3D Gaussian splatting to enable creating ultra-high fidelity, expressive and photorealistic 3D head avatars. In contrast to previous works that operate on a global expression space, we condition our avatar’s dynamics on patch-based local expression features and synthesize 3D Gaussians at a patch level. In particular, we leverage a patch-based geometric 3D face model to extract patch expressions and learn how to translate these into local dynamic skin appearance and motion by coupling the patches with anchor points of Scaffold-GS, a recent hierarchical scene representation. These anchors are then used to synthesize 3D Gaussians on-the-fly, conditioned by patch-expressions and viewing direction. We employ color-based densification and progressive training to obtain high-quality results and faster convergence for high resolution 3K training images. By leveraging patch-level expressions, ScaffoldAvatar consistently achieves state-of-the-art performance with visually natural motion, while encompassing diverse facial expressions and styles in real time.

生成高保真实时动画序列的光照真实三维头部虚拟人是图形应用中的重要内容,这些应用包括沉浸式远程存在和电影。这是一个具有挑战性的问题,尤其是在渲染数字虚拟人的特写镜头以展示角色的面部细微特征和表情时。为了捕捉人类头部的表情和细节特征,包括皮肤皱纹和更精细的面部运动,我们提议将局部定义的面部表情与三维高斯混涂技术相结合,以创建超高分辨率、表情丰富且光照真实的三维头部虚拟人。与之前在全球表达空间上操作的工作不同,我们将虚拟人的动态特性建立在基于补丁的局部表情特征上,并在补丁级别上合成三维高斯分布。特别是,我们利用基于补丁的三维几何面部模型来提取补丁表情,并学习如何将它们转化为局部动态皮肤外观和运动,通过将补丁与支架GS的锚点相结合,这是一种最新的层次场景表示。然后,这些锚点被用来根据补丁表情和观看方向实时合成三维高斯分布。我们采用基于颜色的加密和逐步训练的方法,以获得高质量的结果并加快对高分辨率3K训练图像的收敛速度。通过利用补丁级别的表情,ScaffoldAvatar始终实现了最先进的性能,具有视觉上自然运动,同时实时包含各种面部表情和风格。

论文及项目相关链接

PDF (SIGGRAPH 2025) Paper Video: https://youtu.be/VyWkgsGdbkk Project Page: https://shivangi-aneja.github.io/projects/scaffoldavatar/

Summary

高保真实时动画序列的光照现实三维头像表情动画对于图形应用非常重要,包括沉浸式远程参与和电影制作。本文提出了一种基于局部定义面部表情与三维高斯泼溅技术的方法,以创建超高保真、表现力强、光照现实的三维头像表情动画。该方法以斑块为基础,将头像表情动态与斑块级别的高斯合成相结合,实现了更精细的面部微特征和表情表现。通过基于颜色的稠密化和渐进训练,该方法在高分辨率3K训练图像上获得了高质量的结果和更快的收敛速度。

Key Takeaways

- 生成高保真实时动画序列的光照现实三维头像表情动画在图形应用中的重要性。

- 局部定义面部表情与三维高斯泼溅技术的结合,以创建超高保真、表现力强、光照现实的三维头像。

- 以斑块为基础的方法实现了更精细的面部微特征和表情表现。

- 通过斑块级别的高斯合成,实现了实时的多样化面部表情和风格表现。

- 基于颜色的稠密化和渐进训练技术提高了结果的质量和收敛速度。

- ScaffoldAvatar方法达到了先进性能,具有视觉自然运动的特点。

点此查看论文截图