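⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.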

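🔴 Note: do not use these summaries in serious academic settings. They are intended only for preliminary screening before reading a paper.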


Updated 2025-08-03

NVS-SQA: Exploring Self-Supervised Quality Representation Learning for Neurally Synthesized Scenes without References

Authors: Qiang Qu, Yiran Shen, Xiaoming Chen, Yuk Ying Chung, Weidong Cai, Tongliang Liu

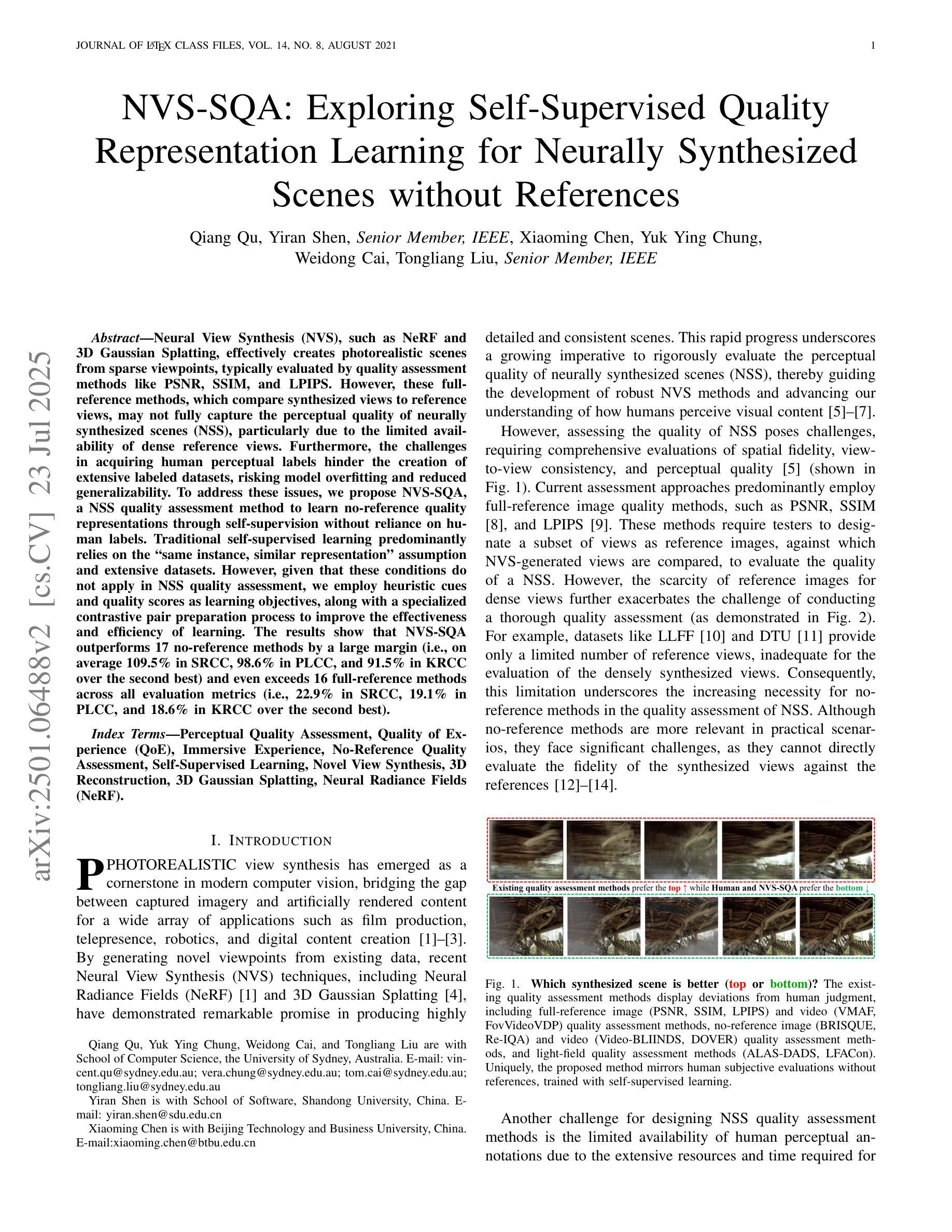

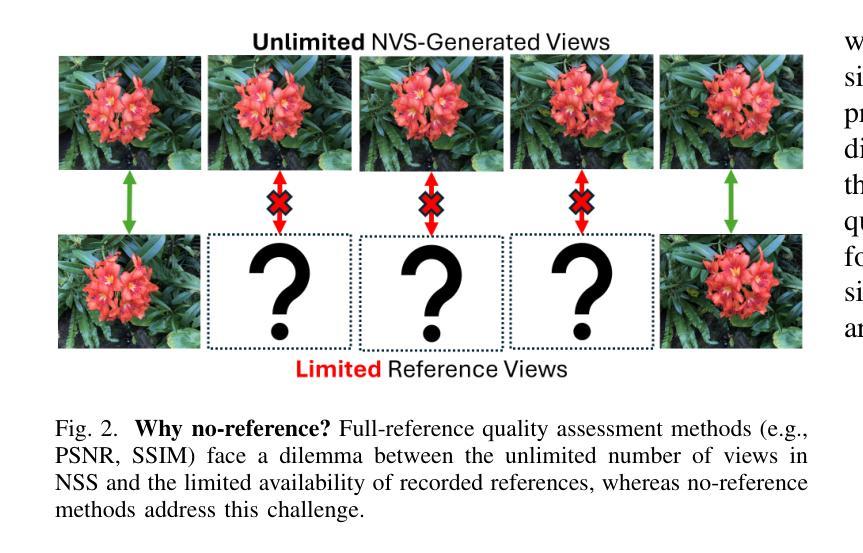

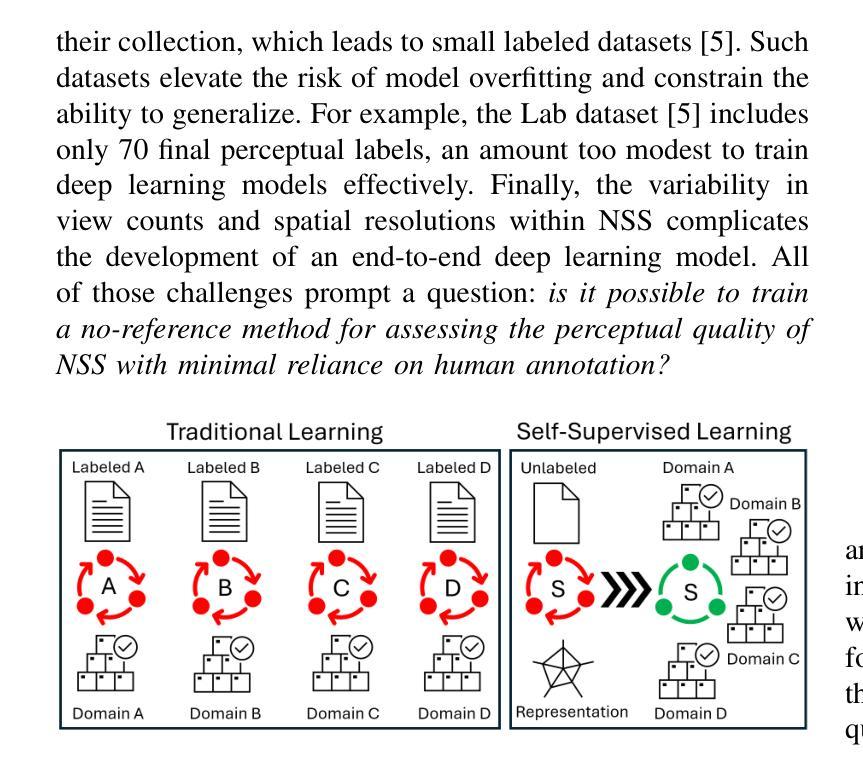

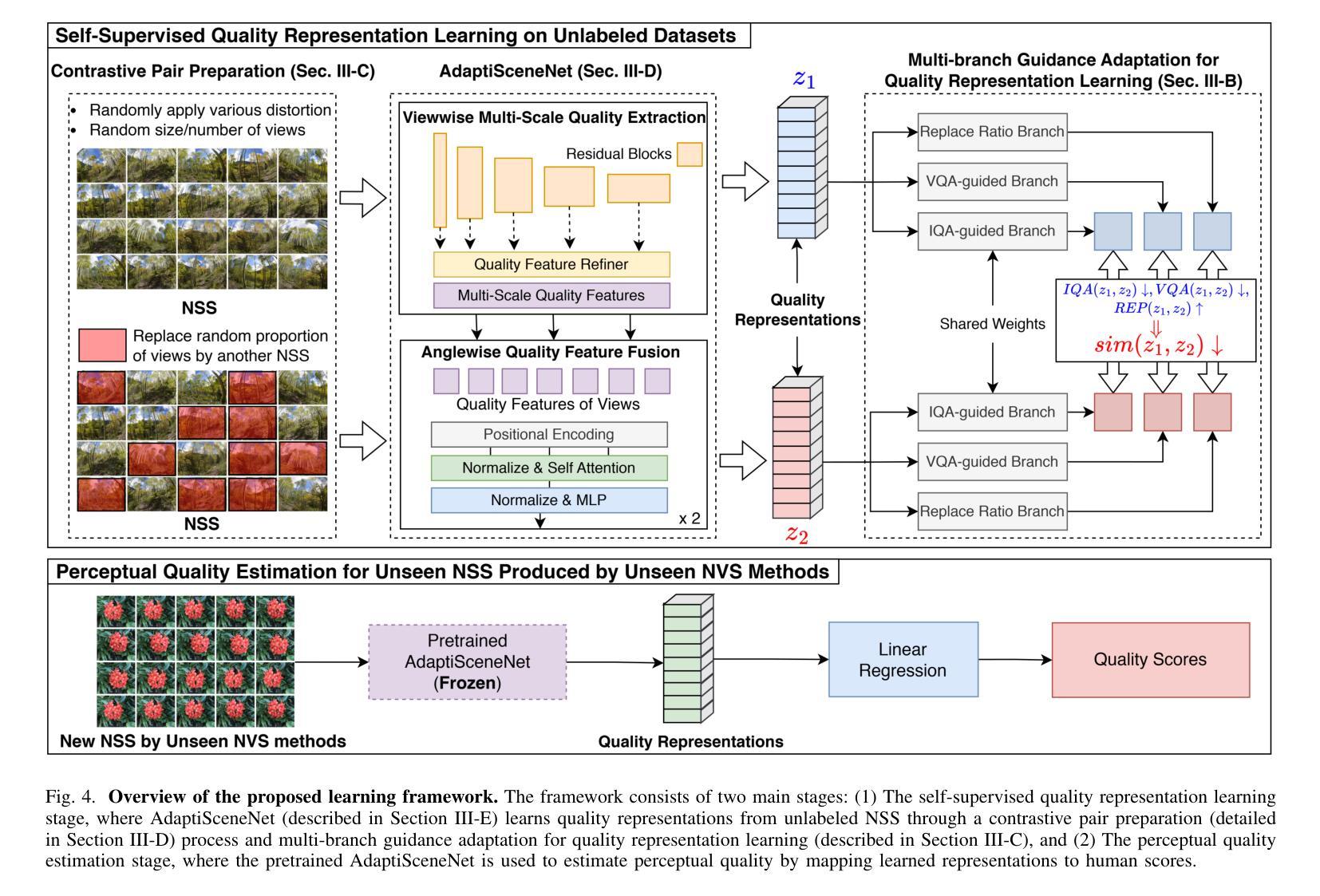

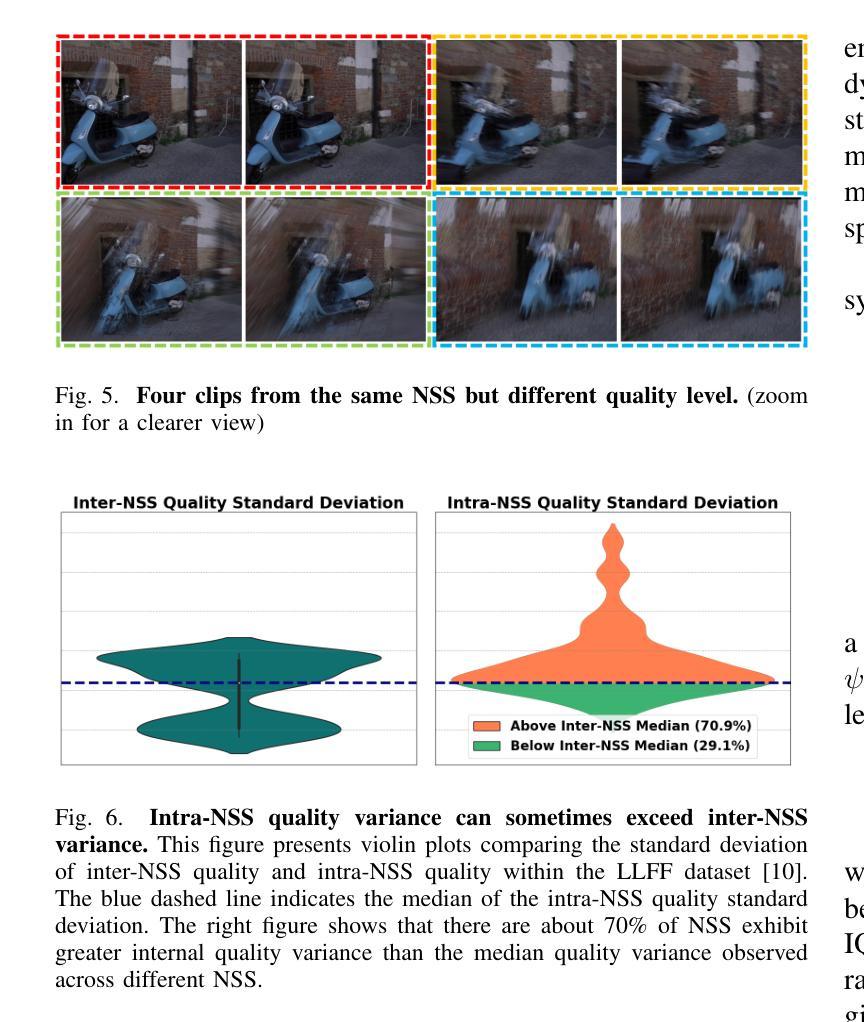

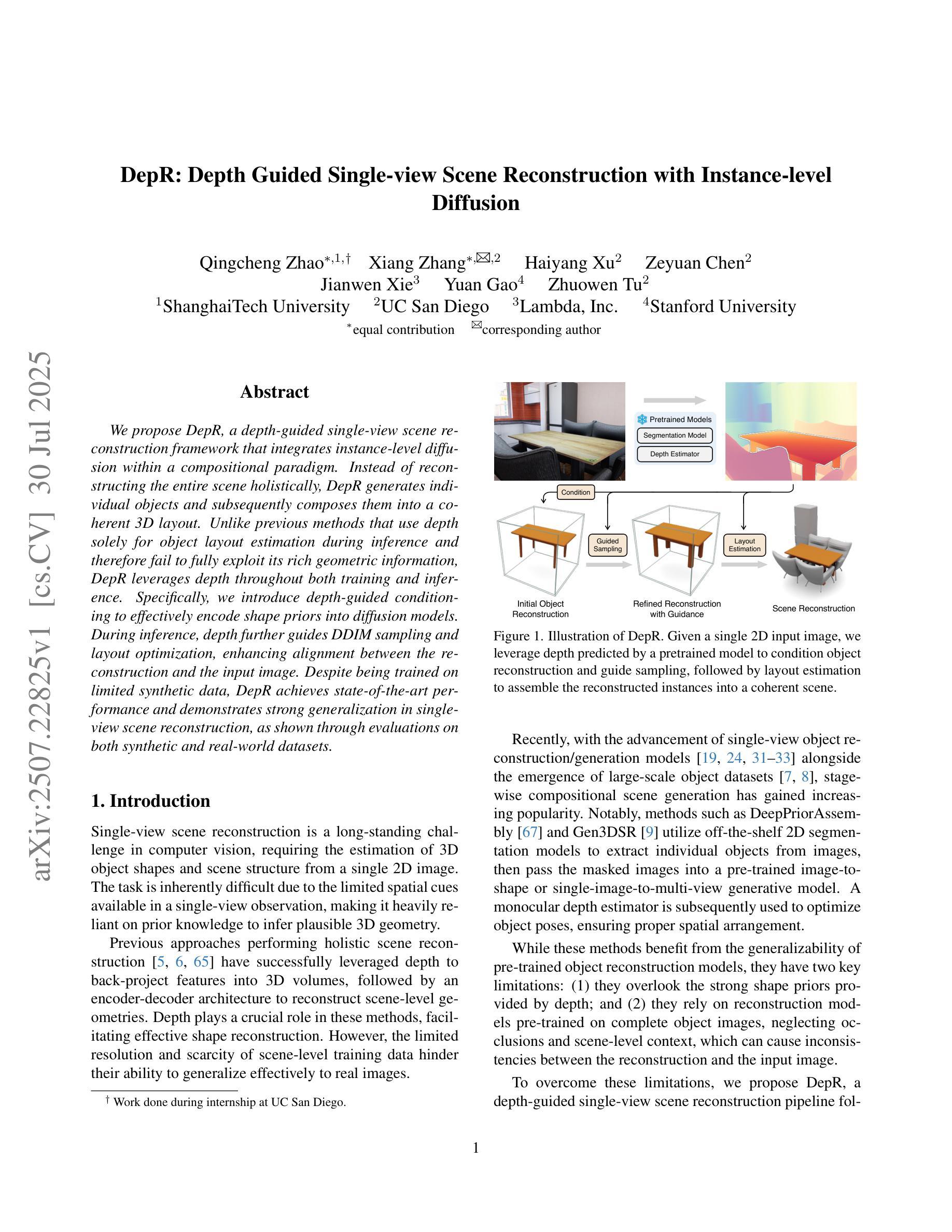

Neural View Synthesis (NVS), such as NeRF and 3D Gaussian Splatting, effectively creates photorealistic scenes from sparse viewpoints, typically evaluated by quality assessment methods like PSNR, SSIM, and LPIPS. However, these full-reference methods, which compare synthesized views to reference views, may not fully capture the perceptual quality of neurally synthesized scenes (NSS), particularly due to the limited availability of dense reference views. Furthermore, the challenges in acquiring human perceptual labels hinder the creation of extensive labeled datasets, risking model overfitting and reduced generalizability. To address these issues, we propose NVS-SQA, an NSS quality assessment method that learns no-reference quality representations through self-supervision without reliance on human labels. Traditional self-supervised learning predominantly relies on the “same instance, similar representation” assumption and extensive datasets. However, given that these conditions do not apply in NSS quality assessment, we employ heuristic cues and quality scores as learning objectives, along with a specialized contrastive pair preparation process to improve the effectiveness and efficiency of learning. The results show that NVS-SQA outperforms 17 no-reference methods by a large margin (i.e., on average 109.5% in SRCC, 98.6% in PLCC, and 91.5% in KRCC over the second best) and even exceeds 16 full-reference methods across all evaluation metrics (i.e., 22.9% in SRCC, 19.1% in PLCC, and 18.6% in KRCC over the second best).
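The abstract reports results in terms of SRCC, PLCC, and KRCC, which measure how well a method's predicted quality scores agree with human ratings. The following is a minimal sketch of those three correlation coefficients in plain Python. The function names and the sample scores are illustrative assumptions and do not come from the paper. The sketch also skips the nonlinear score mapping that quality assessment studies often apply before computing PLCC, and the Kendall variant shown (tau-a) assumes there are no tied scores.

```python
import math


def pearson(x, y):
    """PLCC: Pearson linear correlation between two score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """SRCC: Pearson correlation computed on the ranks of the scores."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall(x, y):
    """KRCC (tau-a): (concordant - discordant) / total pairs, no ties assumed."""
    n = len(x)
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            product = (x[i] - x[j]) * (y[i] - y[j])
            score += (product > 0) - (product < 0)
    return score / (n * (n - 1) / 2)


# Hypothetical data: predicted quality scores vs. human opinion scores.
predicted = [0.2, 0.5, 0.4, 0.9, 0.7]
human = [1.0, 2.0, 3.0, 4.0, 5.0]

print(f"SRCC={spearman(predicted, human):.3f}")
print(f"PLCC={pearson(predicted, human):.3f}")
print(f"KRCC={kendall(predicted, human):.3f}")
```

All three coefficients lie in [-1, 1]. SRCC and KRCC depend only on the ordering of the scores, while PLCC also reflects how linear the relationship is.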



Summary

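This paper proposes NVS-SQA, a quality assessment method for neurally synthesized scenes that learns no-reference quality representations without relying on human labels. It uses heuristic cues and quality scores as learning objectives, together with a specialized contrastive pair preparation process, to make learning more effective and efficient. NVS-SQA outperforms 17 no-reference methods by a large margin and exceeds 16 full-reference methods across all evaluation metrics.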

**Key Takeaways**

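1. Neural View Synthesis (NVS) techniques such as NeRF and 3D Gaussian Splatting can effectively create photorealistic scenes from sparse viewpoints.

2. Full-reference quality assessment methods such as PSNR, SSIM, and LPIPS may not fully capture the perceptual quality of neurally synthesized scenes (NSS), particularly because dense reference views are rarely available.

3. The paper proposes NVS-SQA, which learns no-reference quality representations without human labels.

4. NVS-SQA uses heuristic cues and quality scores as learning objectives, combined with a specialized contrastive pair preparation process, to improve the effectiveness and efficiency of learning.

5. NVS-SQA outperforms 17 no-reference methods by a large margin and also exceeds 16 full-reference methods across all evaluation metrics.

6. According to the summary, these gains stem from the specialized contrastive pair preparation process and the use of heuristic cues.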
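To make the full-reference versus no-reference distinction concrete, here is a minimal sketch of PSNR, one of the full-reference metrics named above. The function name, the flat pixel lists, and the sample values are assumptions for illustration only. The point is that the function cannot be evaluated without a ground-truth reference view, which is exactly the input that a no-reference method such as NVS-SQA does not require.

```python
import math


def psnr(reference, synthesized, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(synthesized):
        raise ValueError("reference and synthesized views must have the same size")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(reference, synthesized)) / len(reference)
    if mse == 0:
        return math.inf  # identical views
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel grayscale views (values in 0..255).
reference_view = [100, 120, 140, 160]
synthesized_view = [101, 119, 142, 158]

print(f"PSNR = {psnr(reference_view, synthesized_view):.2f} dB")
```

For viewpoints where no reference image was captured, there is nothing to pass as `reference`, so a metric of this form can only score the sparse set of views that have ground truth.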
