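⚠️ All summaries below were generated by a large language model and may contain errors. Treat them as reference only and use them with caution.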

🔴 Note: never use these summaries in serious academic settings. They are only meant for screening papers before you read them!

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it free on HuggingFace.

Updated 2025-08-05

Is It Really You? Exploring Biometric Verification Scenarios in Photorealistic Talking-Head Avatar Videos

Authors: Laura Pedrouzo-Rodriguez, Pedro Delgado-DeRobles, Luis F. Gomez, Ruben Tolosana, Ruben Vera-Rodriguez, Aythami Morales, Julian Fierrez

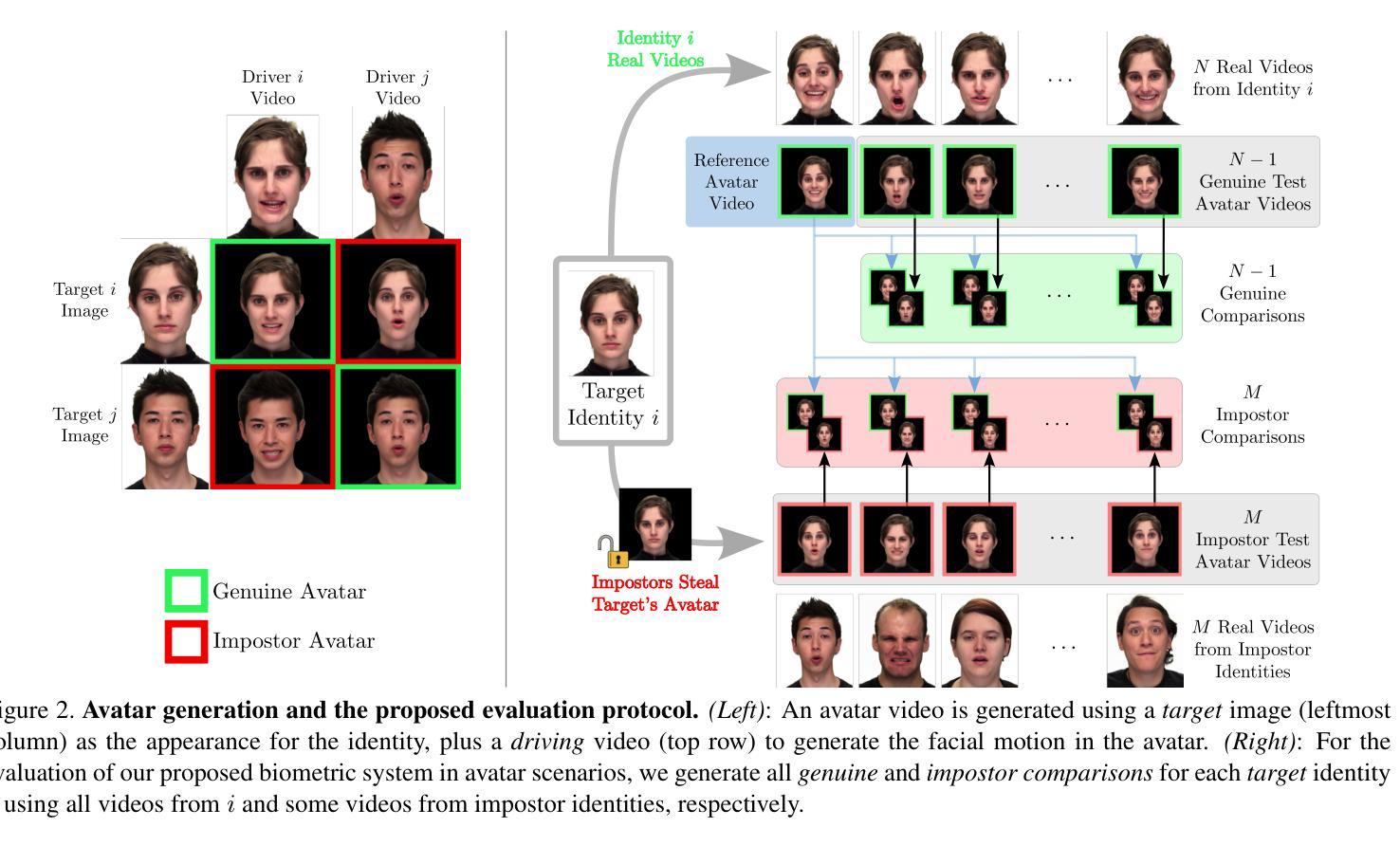

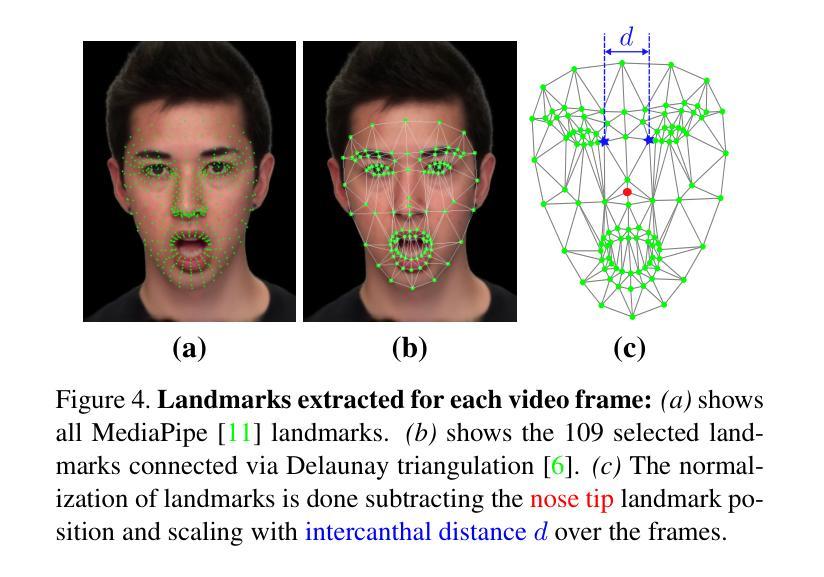

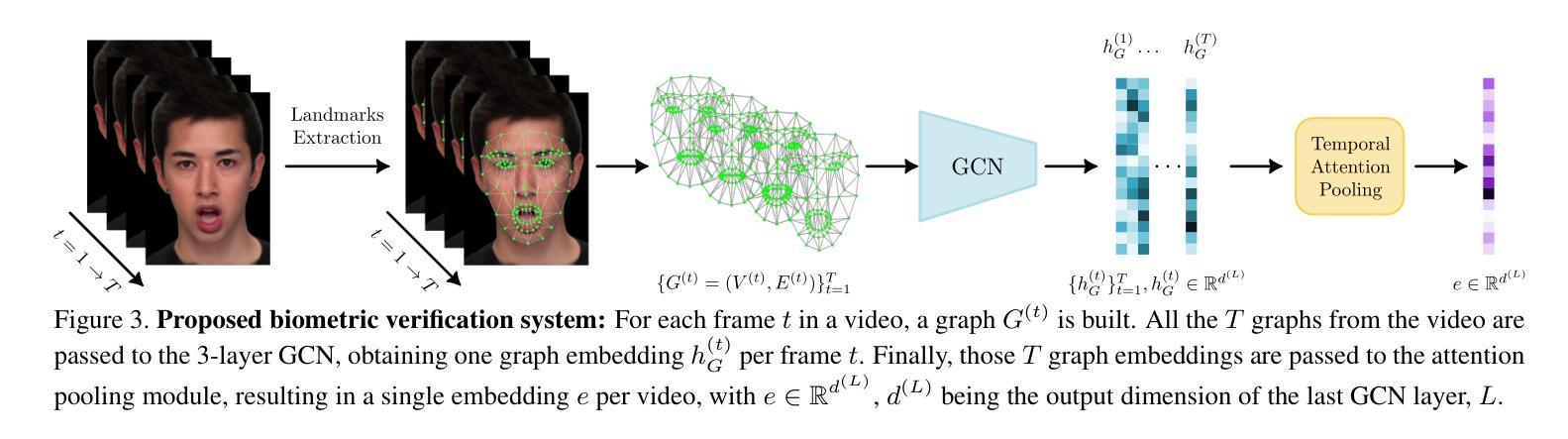

Photorealistic talking-head avatars are becoming increasingly common in virtual meetings, gaming, and social platforms. These avatars allow for more immersive communication, but they also introduce serious security risks. One emerging threat is impersonation: an attacker can steal a user’s avatar, preserving their appearance and voice, which makes its fraudulent use nearly impossible to detect by sight or sound alone. In this paper, we explore the challenge of biometric verification in such avatar-mediated scenarios. Our main question is whether an individual’s facial motion patterns can serve as reliable behavioral biometrics to verify their identity when the avatar’s visual appearance is a facsimile of its owner. To answer this question, we introduce a new dataset of realistic avatar videos, containing both genuine and impostor avatar videos, created with a state-of-the-art one-shot avatar generation model, GAGAvatar. We also propose a lightweight, explainable spatio-temporal Graph Convolutional Network architecture with temporal attention pooling that uses only facial landmarks to model dynamic facial gestures. Experimental results demonstrate that facial motion cues enable meaningful identity verification, with AUC values approaching 80%. The proposed benchmark and biometric system are available to the research community, in order to bring attention to the urgent need for more advanced behavioral biometric defenses in avatar-based communication systems.
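
The abstract describes a spatio-temporal Graph Convolutional Network over facial landmarks but does not give its exact layers. As a minimal sketch of the generic technique, assuming landmarks as graph nodes and their 2-D coordinates as node features, one block can apply a graph convolution with the common symmetrically normalised adjacency D^-1/2 (A + I) D^-1/2 to every frame and then convolve along time. The graph edges, weights, kernel, and every function name below are hypothetical and not taken from the paper; plain nested lists are used only to keep the example self-contained.

```python
import math


def normalized_adjacency(num_nodes, edges):
    """Return A_hat = D^-1/2 (A + I) D^-1/2 for an undirected landmark graph."""
    # Self-loops implement the "+ I" term, so each landmark keeps its own features.
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]


def matmul(x, y):
    """Plain nested-list matrix product x @ y."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def spatial_gcn(a_hat, h, w):
    """One spatial graph convolution on a single frame: ReLU(A_hat @ H @ W).

    h is N x C (one feature row per landmark), w is C x C_out.
    """
    return [[max(0.0, v) for v in row] for row in matmul(matmul(a_hat, h), w)]


def temporal_conv(frames, kernel):
    """'Valid' 1-D convolution along time, shared across landmarks and channels.

    As in deep-learning layers, the kernel is not flipped (cross-correlation).
    """
    k = len(kernel)
    n, c = len(frames[0]), len(frames[0][0])
    return [
        [[sum(kernel[s] * frames[t + s][i][ch] for s in range(k)) for ch in range(c)]
         for i in range(n)]
        for t in range(len(frames) - k + 1)
    ]


def st_gcn_block(a_hat, frames, w, kernel):
    """Spatial GCN applied to every frame (shared weights), then a temporal convolution."""
    return temporal_conv([spatial_gcn(a_hat, h, w) for h in frames], kernel)


# Hypothetical toy input: 3 landmarks in a chain (0-1-2), (x, y) features, 4 frames.
a_hat = normalized_adjacency(3, [(0, 1), (1, 2)])
frames = [[[0.1 * t, 0.0], [0.2, 0.1 * t], [0.3, 0.3]] for t in range(4)]
w = [[1.0, 0.0], [0.0, 1.0]]  # identity weights keep the example easy to inspect
out = st_gcn_block(a_hat, frames, w, kernel=[0.5, 0.5])
print(len(out), len(out[0]), len(out[0][0]))  # prints: 3 3 2
```

Sharing the spatial weights across frames and the temporal kernel across landmarks is one way a model of this kind can stay small; a trained model would stack several such blocks and learn `w` and `kernel`.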

Paper and project links

Accepted at the IEEE International Joint Conference on Biometrics (IJCB 2025).

Summary

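The paper examines the security problems raised by photorealistic avatars, particularly for identity verification. Its central question is whether facial motion patterns can serve as a reliable behavioral biometric for verifying who is actually behind an avatar. Experiments show that facial motion cues enable meaningful identity verification, with AUC values approaching 80%. To support the study, the authors contribute a new dataset of genuine and impostor avatar videos and a lightweight spatio-temporal graph convolutional network that models facial motion from landmarks alone.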
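
The AUC of about 80% measures how well verification scores separate genuine comparisons (the avatar's owner is driving it) from impostor comparisons. The sketch below computes ROC AUC in its pairwise Mann-Whitney form; the function name is hypothetical and the scores are made up for illustration, not results from the paper.

```python
def verification_auc(genuine, impostor):
    """ROC AUC from verification scores (higher score = more likely the same person).

    Equals the probability that a random genuine score exceeds a random impostor
    score, with ties counted as half (the Mann-Whitney U formulation).
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    wins = 0.0
    for g in genuine:
        for i in impostor:
            if g > i:
                wins += 1.0
            elif g == i:
                wins += 0.5
    return wins / (len(genuine) * len(impostor))


# Made-up scores for illustration only (not from the paper).
auc = verification_auc(genuine=[0.9, 0.8, 0.4], impostor=[0.7, 0.3])
print(round(auc, 3))  # 5 of 6 pairs are ranked correctly -> prints 0.833
```

An AUC of 0.5 means the scores rank genuine and impostor pairs no better than chance, and 1.0 means perfect separation. The double loop is O(n·m); for large score sets a sort-based rank computation gives the same value faster.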

Key Takeaways

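- Photorealistic talking-head avatars are increasingly common; they enable more immersive communication but also introduce serious security risks.

- Impersonation is a prominent threat: an attacker who steals a user's avatar keeps that user's appearance and voice and can pass as them.

- The study asks whether facial motion patterns are viable as a behavioral biometric for verifying the real identity behind an avatar.

- Experiments show that facial motion cues enable meaningful identity verification, with AUC values approaching 80%.

- A new dataset of genuine and impostor avatar videos, generated with the one-shot avatar model GAGAvatar, is introduced for benchmarking avatar identity verification.

- A lightweight, explainable spatio-temporal Graph Convolutional Network with temporal attention pooling is proposed; it verifies identity from facial landmarks alone.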
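
Temporal attention pooling, named in the abstract, collapses a variable-length sequence of per-frame embeddings into a single clip embedding. Below is a minimal sketch, assuming a single learned scoring vector `w` and cosine similarity for comparing an enrolment clip with a probe clip. Both are hypothetical choices, as are all function names; the abstract specifies neither.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention_pool(frame_embeddings, w):
    """Pool T frame embeddings (each of size D) into one clip embedding.

    Frame t gets the score <w, h_t>; a softmax over time turns the scores into
    weights alpha_t, and the clip embedding is sum_t alpha_t * h_t.
    """
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in frame_embeddings]
    alpha = softmax(scores)
    dim = len(frame_embeddings[0])
    pooled = [sum(a * h[d] for a, h in zip(alpha, frame_embeddings)) for d in range(dim)]
    return pooled, alpha


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Hypothetical usage: pool an enrolment clip and a probe clip, then score the pair.
w = [1.0, 0.0]  # stand-in for a learned scoring vector
enrol, _ = temporal_attention_pool([[1.0, 0.2], [0.9, 0.1], [0.2, 0.8]], w)
probe, alpha = temporal_attention_pool([[0.8, 0.3], [1.0, 0.0], [0.1, 0.9]], w)
score = cosine_similarity(enrol, probe)
```

Because the weights `alpha` are returned, one can inspect which frames dominated the clip embedding. A verification decision would compare `score` with a threshold tuned on held-out data; the source gives no threshold.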

Paper screenshots

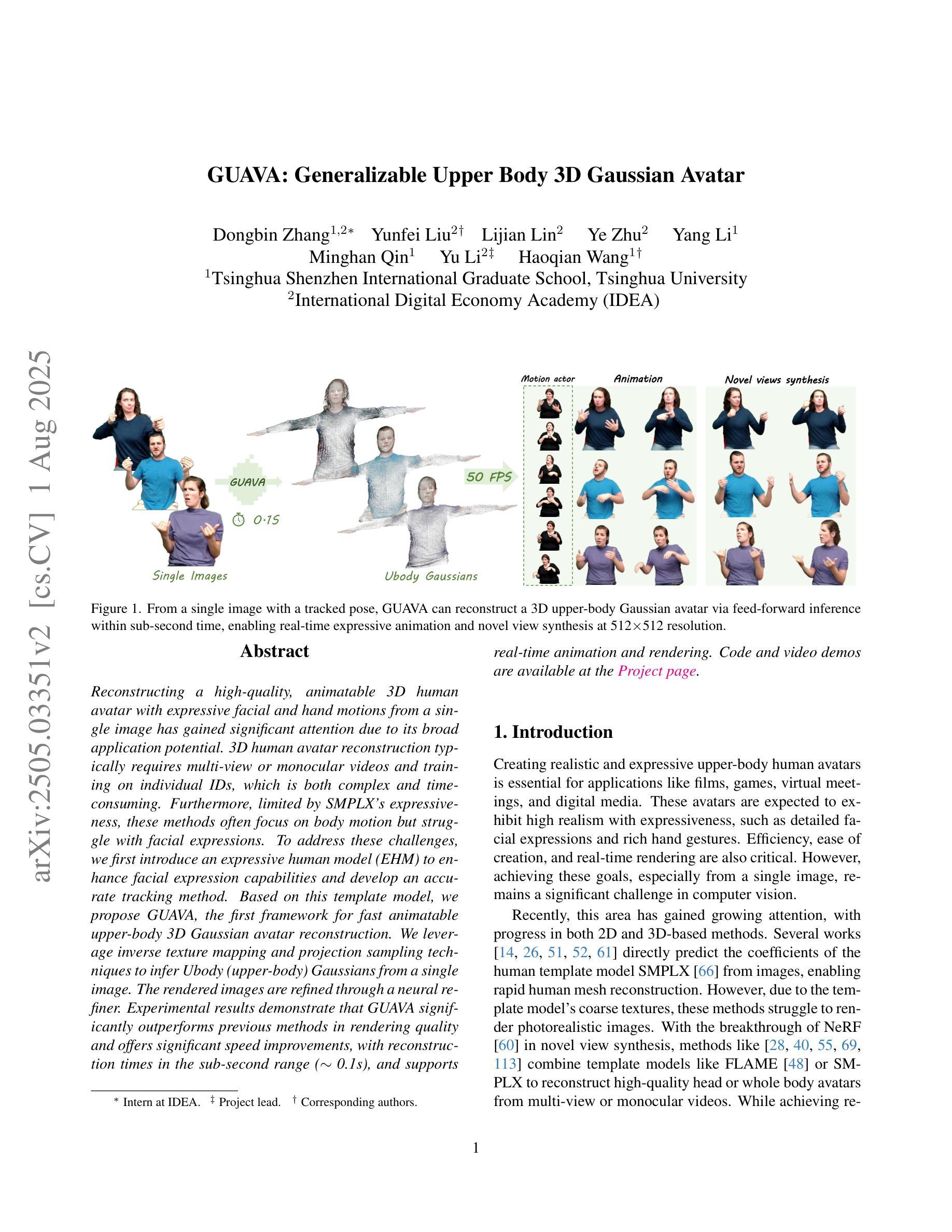

GUAVA: Generalizable Upper Body 3D Gaussian Avatar

Authors: Dongbin Zhang, Yunfei Liu, Lijian Lin, Ye Zhu, Yang Li, Minghan Qin, Yu Li, Haoqian Wang

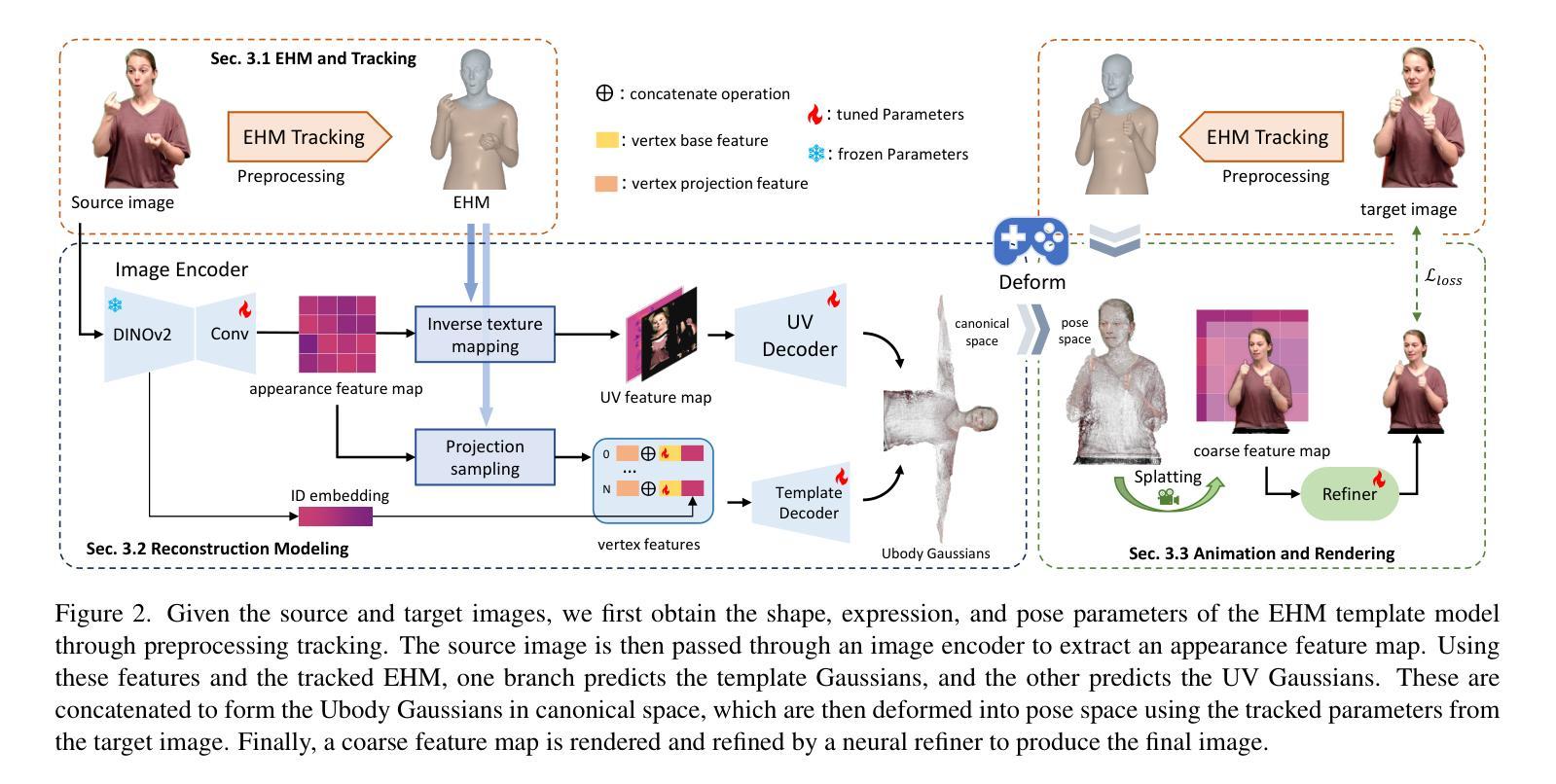

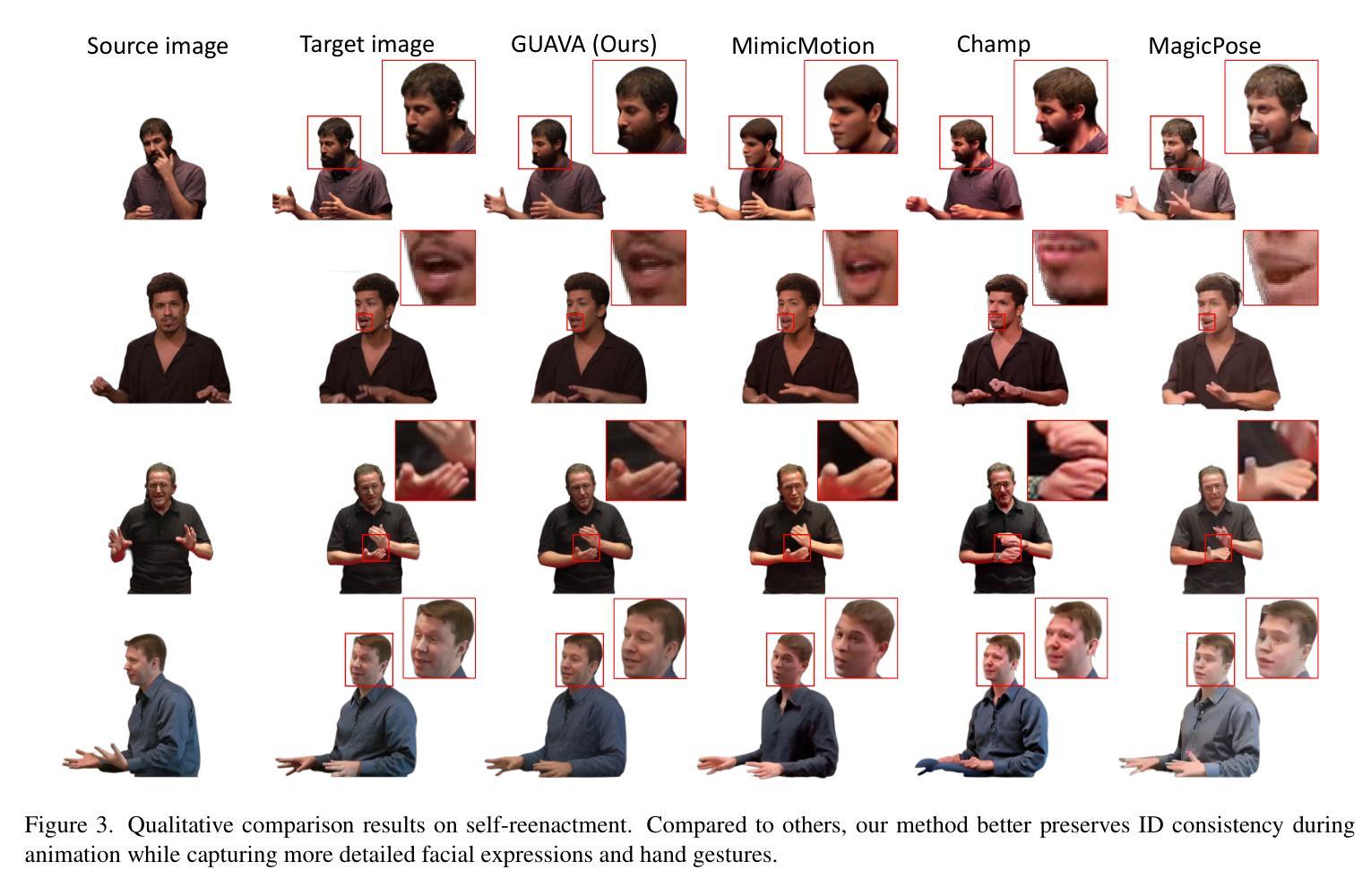

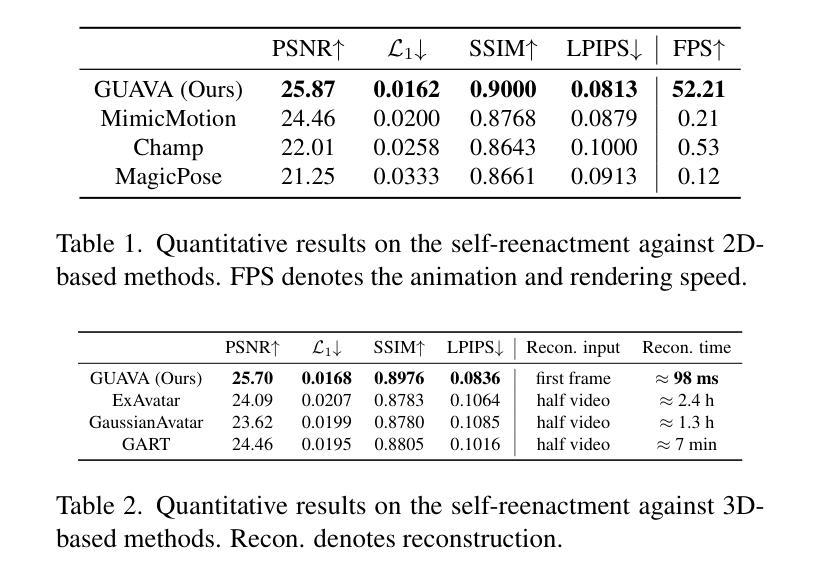

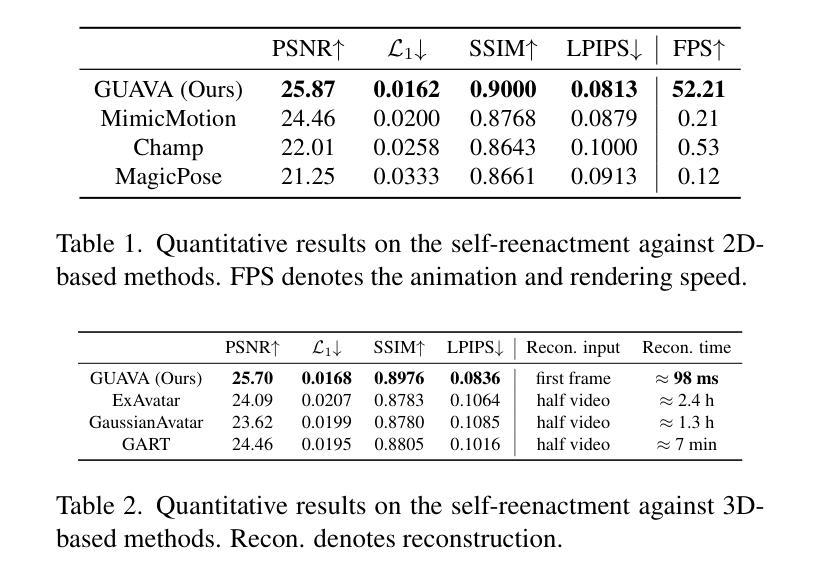

Reconstructing a high-quality, animatable 3D human avatar with expressive facial and hand motions from a single image has gained significant attention due to its broad application potential. 3D human avatar reconstruction typically requires multi-view or monocular videos and training on individual IDs, which is both complex and time-consuming. Furthermore, limited by SMPLX’s expressiveness, these methods often focus on body motion but struggle with facial expressions. To address these challenges, we first introduce an expressive human model (EHM) to enhance facial expression capabilities and develop an accurate tracking method. Based on this template model, we propose GUAVA, the first framework for fast animatable upper-body 3D Gaussian avatar reconstruction. We leverage inverse texture mapping and projection sampling techniques to infer Ubody (upper-body) Gaussians from a single image. The rendered images are refined through a neural refiner. Experimental results demonstrate that GUAVA significantly outperforms previous methods in rendering quality and offers significant speed improvements, with reconstruction times in the sub-second range (0.1s), and supports real-time animation and rendering.
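
The abstract mentions projection sampling for inferring upper-body Gaussians from a single image. The general idea is to project 3D points into the input view and read image features at the projected pixel. Below is a minimal sketch, assuming a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, points already in camera space, and an H x W x C feature map. The paper's actual camera model and feature extractor are not described here, inverse texture mapping is not shown, and all function names are illustrative.

```python
import math


def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-space point (x, y, z), z > 0, to pixel (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return fx * x / z + cx, fy * y / z + cy


def bilinear_sample(feature_map, u, v):
    """Bilinearly interpolate an H x W x C feature map at continuous pixel (u, v).

    u indexes columns, v indexes rows; coordinates are clamped to the image border.
    """
    h, w = len(feature_map), len(feature_map[0])
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = u - x0, v - y0
    return [
        (1 - dx) * (1 - dy) * feature_map[y0][x0][k]
        + dx * (1 - dy) * feature_map[y0][x1][k]
        + (1 - dx) * dy * feature_map[y1][x0][k]
        + dx * dy * feature_map[y1][x1][k]
        for k in range(len(feature_map[0][0]))
    ]


def projection_sample(points, feature_map, fx, fy, cx, cy):
    """Attach an image feature to each 3D point by projecting it and sampling."""
    return [bilinear_sample(feature_map, *project(p, fx, fy, cx, cy)) for p in points]


fm = [[[0.0], [1.0]],
      [[2.0], [3.0]]]  # toy 2 x 2 feature map with one channel
feats = projection_sample([(0.0, 0.0, 1.0), (0.5, 0.5, 1.0)], fm, 1.0, 1.0, 0.5, 0.5)
print(feats)  # prints [[1.5], [3.0]]
```

Bilinear interpolation makes the sampled feature vary smoothly with the projected position, which matters because most points project between pixel centres.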

Paper and project links

Accepted to ICCV 2025. Project page: https://eastbeanzhang.github.io/GUAVA/

Summary

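Reconstructing a high-quality, animatable 3D human avatar with expressive facial and hand motions from a single image has drawn wide attention for its broad application potential. Existing 3D avatar reconstruction usually requires multi-view or monocular video plus per-identity training, which is complex and time-consuming, and methods limited by SMPLX's expressiveness handle body motion well but struggle with facial expressions. To address this, the authors introduce an Expressive Human Model (EHM) that strengthens facial expression capability, together with an accurate tracking method. Built on this template model, GUAVA is the first framework for fast, animatable upper-body 3D Gaussian avatar reconstruction. It uses inverse texture mapping and projection sampling to infer upper-body Gaussians from a single image and refines the rendered images with a neural refiner. Experiments show that GUAVA clearly outperforms prior methods in rendering quality, reconstructs in about 0.1 s, and supports real-time animation and rendering.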
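
The abstract does not say how GUAVA parameterizes each Gaussian. In the standard 3D Gaussian Splatting formulation, which this sketch assumes, a Gaussian's covariance is built as Σ = R S Sᵀ Rᵀ from a rotation quaternion and per-axis scales, so it stays symmetric positive semi-definite while being optimized. The function names are hypothetical.

```python
import math


def quat_to_rotation(q):
    """Quaternion (w, x, y, z) -> 3x3 rotation matrix (the input is normalised first)."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def gaussian_covariance(q, scale):
    """Sigma = R S S^T R^T with S = diag(scale); symmetric PSD by construction."""
    r = quat_to_rotation(q)
    # M = R S: multiply column j of R by scale[j].
    m = [[r[i][j] * scale[j] for j in range(3)] for i in range(3)]
    # Sigma = M M^T
    return [[sum(m[i][k] * m[j][k] for k in range(3)) for j in range(3)] for i in range(3)]


sigma = gaussian_covariance((1.0, 0.0, 0.0, 0.0), (1.0, 2.0, 3.0))
print(sigma)  # identity rotation: [[1.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 9.0]]
```

Optimizing a quaternion and scales instead of the six free entries of Σ avoids producing invalid (non-PSD) covariances during training.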

Key Takeaways

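- Reconstructing a 3D human avatar from a single image has broad application potential.

- Existing methods usually require multi-view or monocular video and per-identity training, which is complex and time-consuming.

- Because SMPLX-based methods struggle with facial expressions, the authors introduce an Expressive Human Model (EHM) and an accurate tracking method to improve facial expressiveness.

- Building on this template model, the GUAVA framework enables fast, animatable upper-body 3D Gaussian avatar reconstruction.

- Inverse texture mapping and projection sampling are used to infer upper-body Gaussians from a single image.

- A neural refiner refines the rendered images to improve rendering quality.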

Paper screenshots