⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-08-12 更新

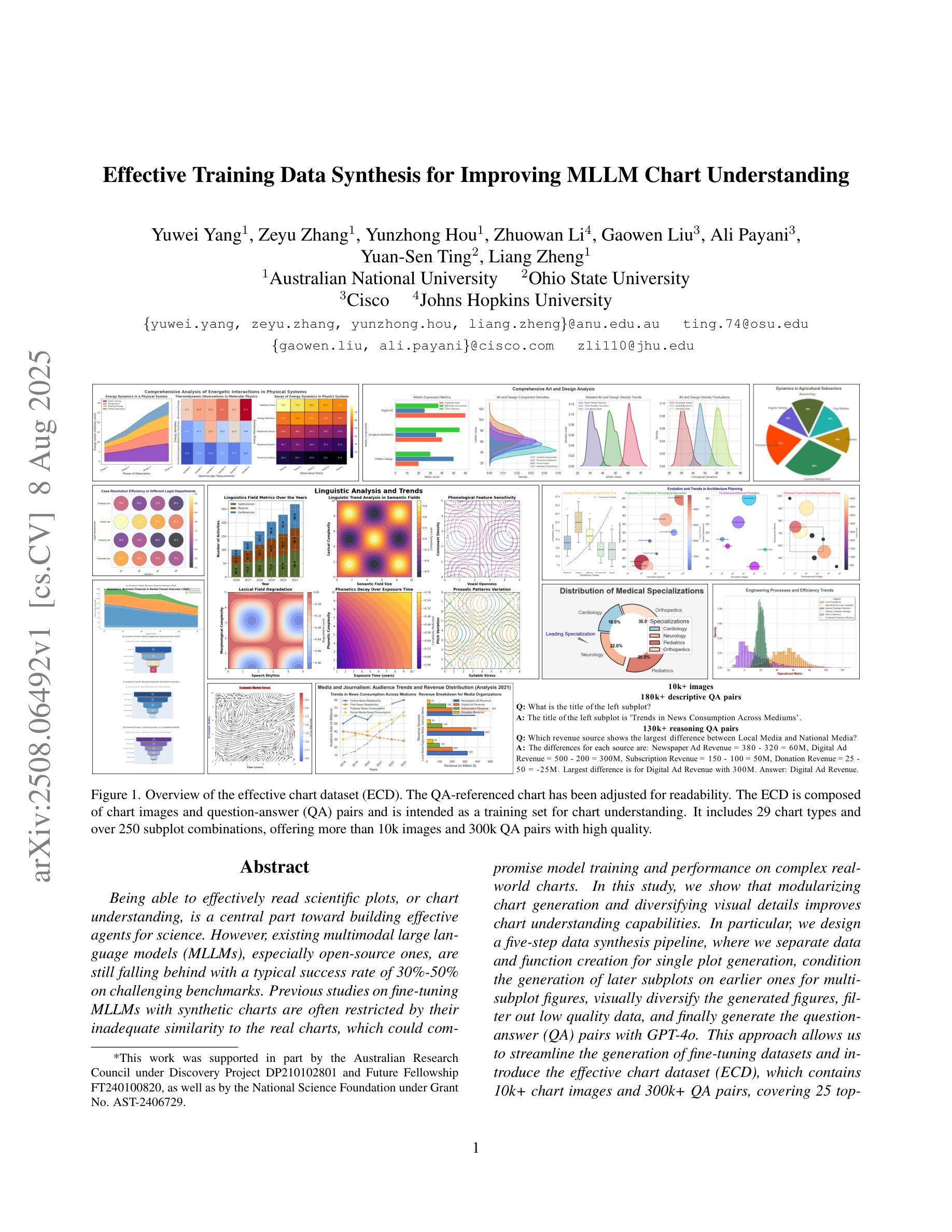

Effective Training Data Synthesis for Improving MLLM Chart Understanding

Authors:Yuwei Yang, Zeyu Zhang, Yunzhong Hou, Zhuowan Li, Gaowen Liu, Ali Payani, Yuan-Sen Ting, Liang Zheng

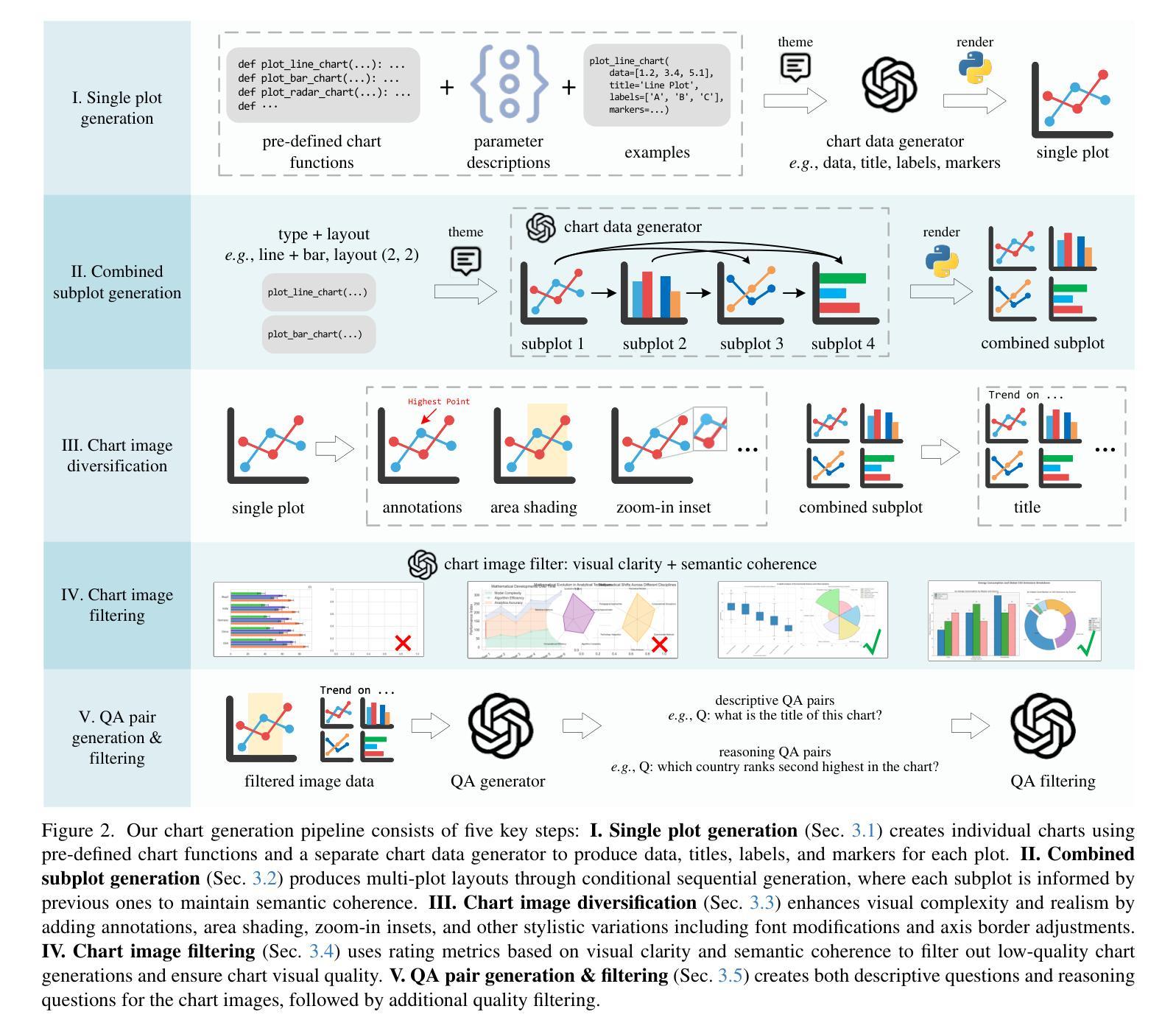

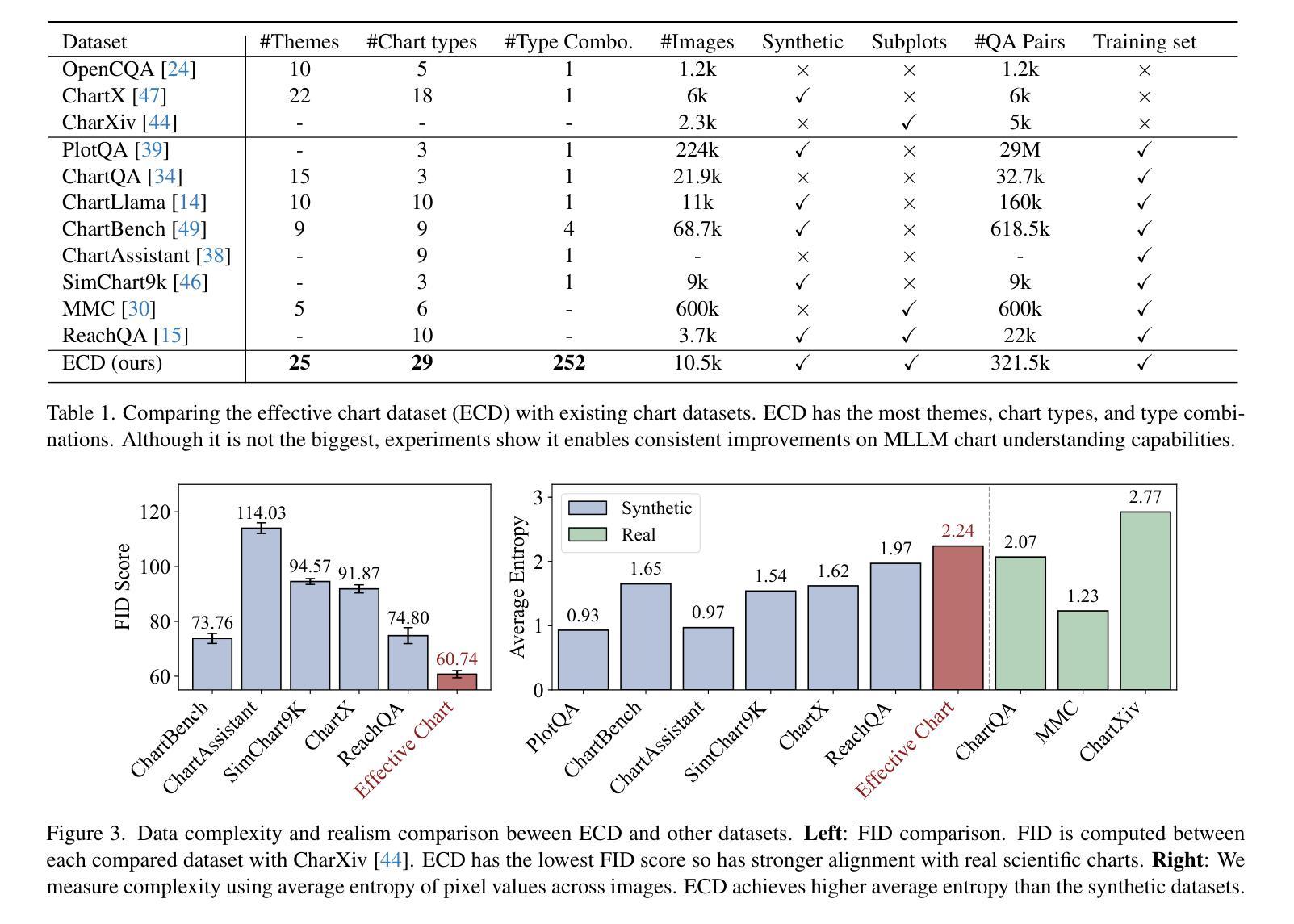

Being able to effectively read scientific plots, or chart understanding, is a central part toward building effective agents for science. However, existing multimodal large language models (MLLMs), especially open-source ones, are still falling behind with a typical success rate of 30%-50% on challenging benchmarks. Previous studies on fine-tuning MLLMs with synthetic charts are often restricted by their inadequate similarity to the real charts, which could compromise model training and performance on complex real-world charts. In this study, we show that modularizing chart generation and diversifying visual details improves chart understanding capabilities. In particular, we design a five-step data synthesis pipeline, where we separate data and function creation for single plot generation, condition the generation of later subplots on earlier ones for multi-subplot figures, visually diversify the generated figures, filter out low quality data, and finally generate the question-answer (QA) pairs with GPT-4o. This approach allows us to streamline the generation of fine-tuning datasets and introduce the effective chart dataset (ECD), which contains 10k+ chart images and 300k+ QA pairs, covering 25 topics and featuring 250+ chart type combinations with high visual complexity. We show that ECD consistently improves the performance of various MLLMs on a range of real-world and synthetic test sets. Code, data and models are available at: https://github.com/yuweiyang-anu/ECD.

能够有效地阅读科学图表或理解图表是构建科学代理人的核心部分。然而,现有的多模态大型语言模型(MLLMs),尤其是开源模型,在具有挑战性的基准测试上的成功率通常在30%-50%之间。以前关于用合成图表微调MLLMs的研究往往受到其与真实图表相似性不足的限制,这可能会影响到模型在复杂现实图表的训练和性能。在这项研究中,我们证明了模块化图表生成和多样化视觉细节可以提高图表的理解能力。特别是,我们设计了一个五步数据合成流程,将数据和单一图表的创建功能分开,对多子图图的后续子图生成进行条件化,视觉上多样化生成的图表,过滤掉低质量数据,并最终使用GPT-4o生成问答对。这种方法使我们能够精简微调数据集的产生,并引入有效的图表数据集(ECD),其中包含1万多个图表图像和超过3万多个问答对,涵盖25个主题,展示超过25种图表类型组合的高视觉复杂性。我们证明了ECD在各种现实世界和合成测试集上都能提高各种MLLM的性能。代码、数据和模型可在以下网址找到:https://github.com/yuweiyang-anu/ECD。

论文及项目相关链接

PDF Accepted by ICCV 2025 (poster). 26 pages, 17 figures

Summary

大規模語言模型在圖表理解方面存在不足,尤其是在處理複雜的現實世界圖表時。本研究通過模組化圖表生成和增加視覺細節來改善模型在圖表理解方面的能力。研究設計了五個步驟的數據合成流程,並利用GPT-4o生成問題和答案對。該方法有助于優化微調數據集的生成,並引入高效圖表數據集(ECD),包含10,000多個圖表影像和300,000多個問題答案對,涉及25個主題和250多種複雜的圖表類型組合。ECD在不同現實世界和合成測試集上的表現均有提升。

Key Takeaways

- 多模式大型語言模型(MLLMs)在圖表理解方面存在局限,尤其在處理複雜現實世界圖表時。

- 模組化圖表生成和增加視覺細節可以提高模型在圖表理解方面的能力。

- 本研究設計了包含五個步驟的數據合成流程,以提高圖表生成質量。

- 利用GPT-4o生成問題和答案對,以優化微調數據集。

- 引入高效圖表數據集(ECD),包含大量圖表影像和問題答案對,涉及多主題和複雜的圖表類型組合。

- ECD在不同現實世界和合成測試集上的表現均有顯著提升。

- 該研究的代碼、數據和模型可在網上找到。

点此查看论文截图

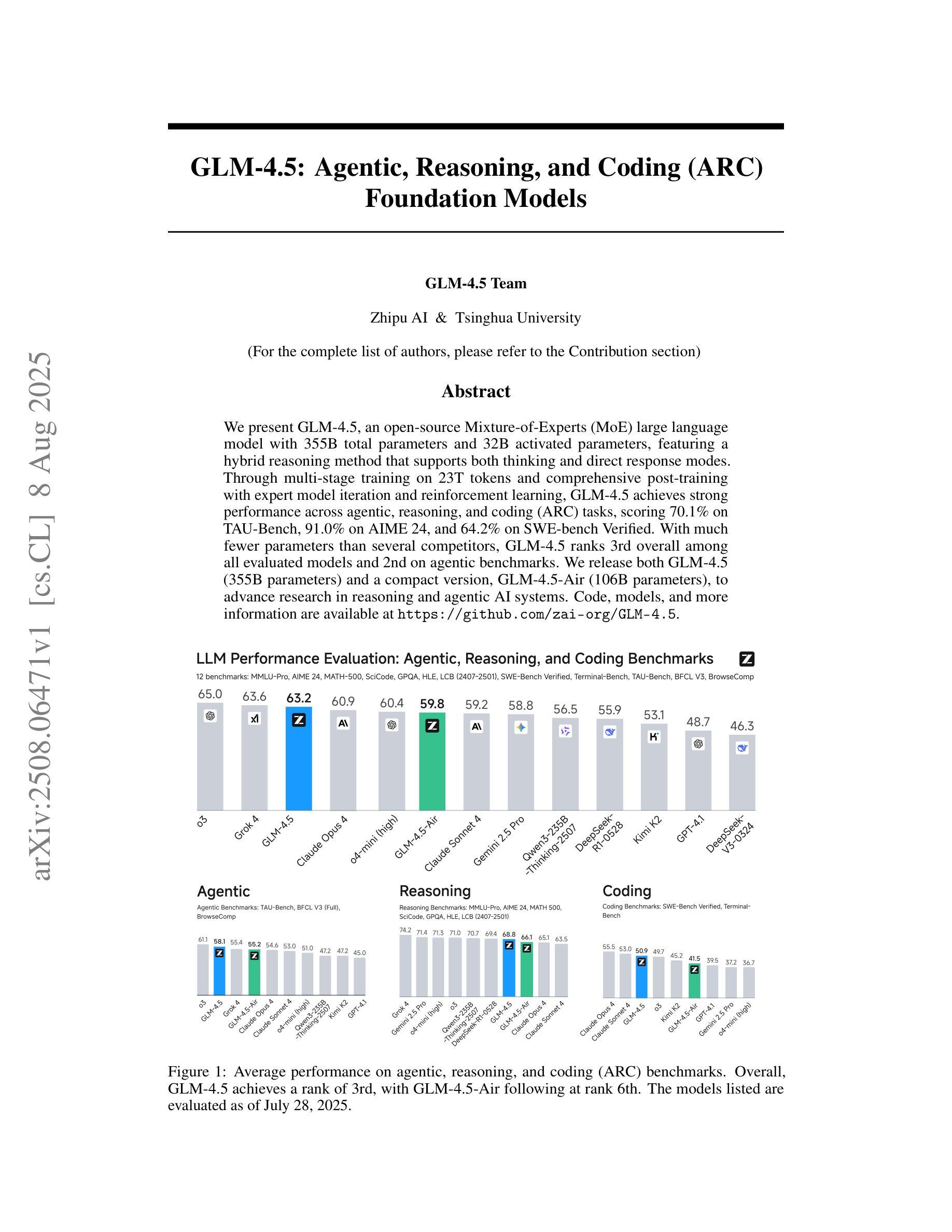

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

Authors:GLM-4. 5 Team, :, Aohan Zeng, Xin Lv, Qinkai Zheng, Zhenyu Hou, Bin Chen, Chengxing Xie, Cunxiang Wang, Da Yin, Hao Zeng, Jiajie Zhang, Kedong Wang, Lucen Zhong, Mingdao Liu, Rui Lu, Shulin Cao, Xiaohan Zhang, Xuancheng Huang, Yao Wei, Yean Cheng, Yifan An, Yilin Niu, Yuanhao Wen, Yushi Bai, Zhengxiao Du, Zihan Wang, Zilin Zhu, Bohan Zhang, Bosi Wen, Bowen Wu, Bowen Xu, Can Huang, Casey Zhao, Changpeng Cai, Chao Yu, Chen Li, Chendi Ge, Chenghua Huang, Chenhui Zhang, Chenxi Xu, Chenzheng Zhu, Chuang Li, Congfeng Yin, Daoyan Lin, Dayong Yang, Dazhi Jiang, Ding Ai, Erle Zhu, Fei Wang, Gengzheng Pan, Guo Wang, Hailong Sun, Haitao Li, Haiyang Li, Haiyi Hu, Hanyu Zhang, Hao Peng, Hao Tai, Haoke Zhang, Haoran Wang, Haoyu Yang, He Liu, He Zhao, Hongwei Liu, Hongxi Yan, Huan Liu, Huilong Chen, Ji Li, Jiajing Zhao, Jiamin Ren, Jian Jiao, Jiani Zhao, Jianyang Yan, Jiaqi Wang, Jiayi Gui, Jiayue Zhao, Jie Liu, Jijie Li, Jing Li, Jing Lu, Jingsen Wang, Jingwei Yuan, Jingxuan Li, Jingzhao Du, Jinhua Du, Jinxin Liu, Junkai Zhi, Junli Gao, Ke Wang, Lekang Yang, Liang Xu, Lin Fan, Lindong Wu, Lintao Ding, Lu Wang, Man Zhang, Minghao Li, Minghuan Xu, Mingming Zhao, Mingshu Zhai, Pengfan Du, Qian Dong, Shangde Lei, Shangqing Tu, Shangtong Yang, Shaoyou Lu, Shijie Li, Shuang Li, Shuang-Li, Shuxun Yang, Sibo Yi, Tianshu Yu, Wei Tian, Weihan Wang, Wenbo Yu, Weng Lam Tam, Wenjie Liang, Wentao Liu, Xiao Wang, Xiaohan Jia, Xiaotao Gu, Xiaoying Ling, Xin Wang, Xing Fan, Xingru Pan, Xinyuan Zhang, Xinze Zhang, Xiuqing Fu, Xunkai Zhang, Yabo Xu, Yandong Wu, Yida Lu, Yidong Wang, Yilin Zhou, Yiming Pan, Ying Zhang, Yingli Wang, Yingru Li, Yinpei Su, Yipeng Geng, Yitong Zhu, Yongkun Yang, Yuhang Li, Yuhao Wu, Yujiang Li, Yunan Liu, Yunqing Wang, Yuntao Li, Yuxuan Zhang, Zezhen Liu, Zhen Yang, Zhengda Zhou, Zhongpei Qiao, Zhuoer Feng, Zhuorui Liu, Zichen Zhang, Zihan Wang, Zijun Yao, Zikang Wang, Ziqiang Liu, Ziwei Chai, Zixuan Li, Zuodong Zhao, Wenguang Chen, Jidong Zhai, Bin Xu, Minlie Huang, Hongning Wang, Juanzi Li, Yuxiao Dong, Jie Tang

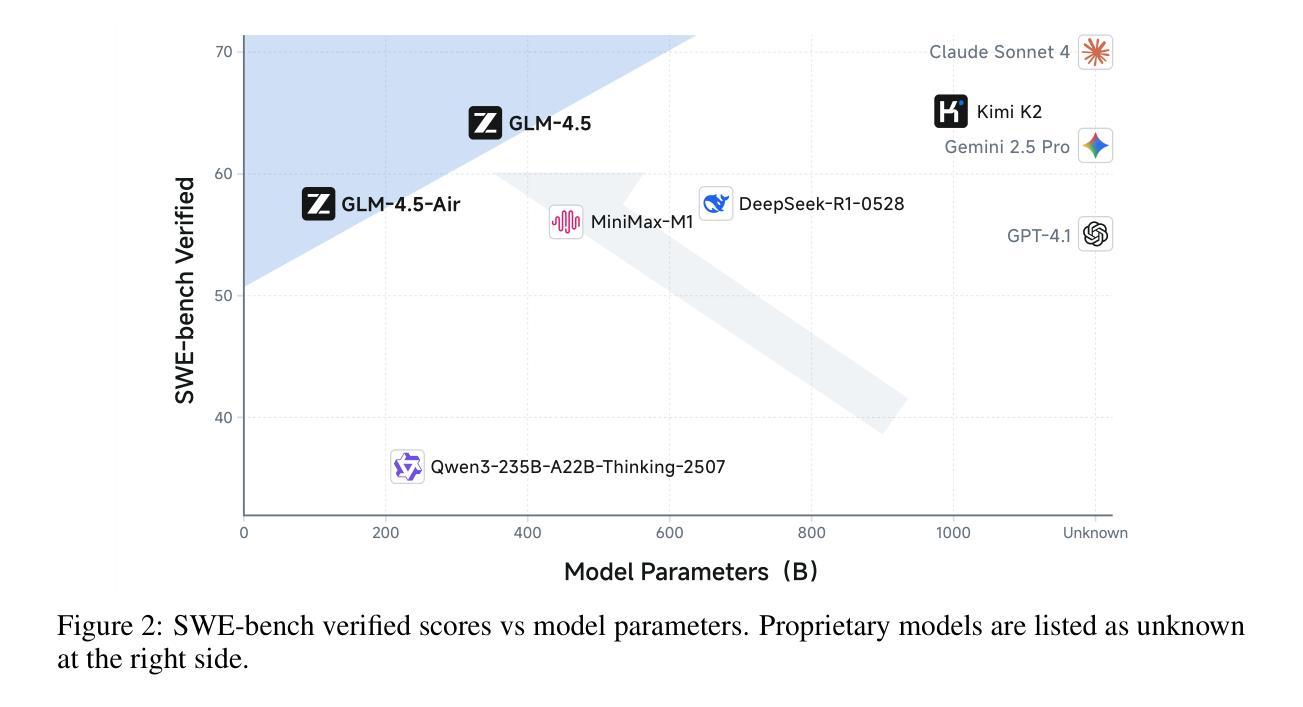

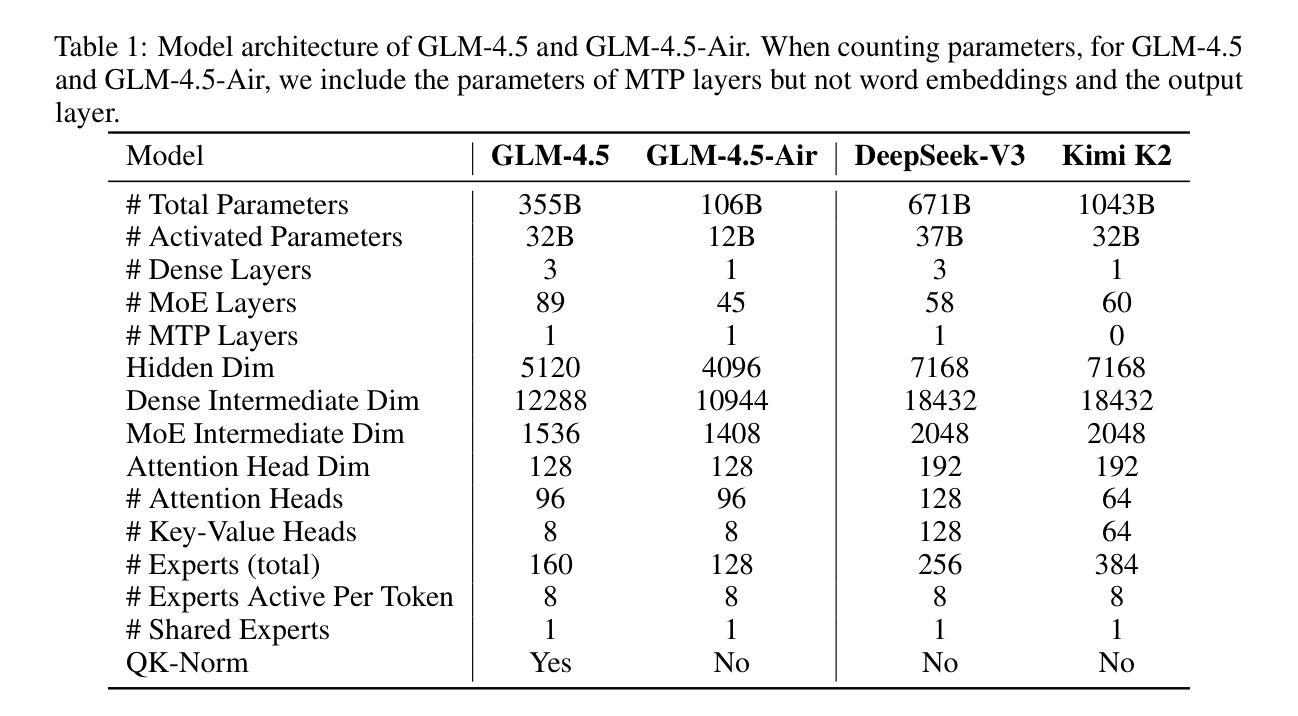

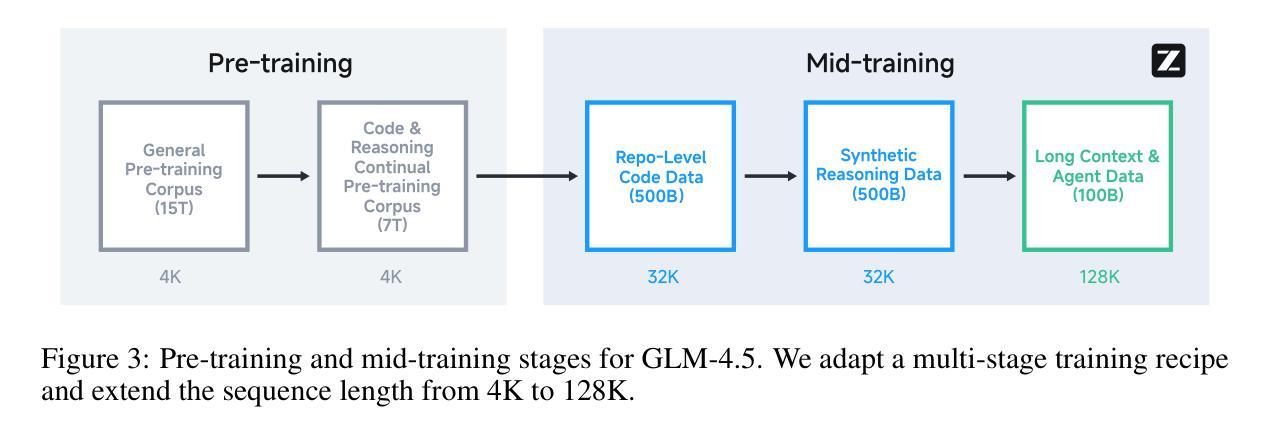

We present GLM-4.5, an open-source Mixture-of-Experts (MoE) large language model with 355B total parameters and 32B activated parameters, featuring a hybrid reasoning method that supports both thinking and direct response modes. Through multi-stage training on 23T tokens and comprehensive post-training with expert model iteration and reinforcement learning, GLM-4.5 achieves strong performance across agentic, reasoning, and coding (ARC) tasks, scoring 70.1% on TAU-Bench, 91.0% on AIME 24, and 64.2% on SWE-bench Verified. With much fewer parameters than several competitors, GLM-4.5 ranks 3rd overall among all evaluated models and 2nd on agentic benchmarks. We release both GLM-4.5 (355B parameters) and a compact version, GLM-4.5-Air (106B parameters), to advance research in reasoning and agentic AI systems. Code, models, and more information are available at https://github.com/zai-org/GLM-4.5.

我们推出GLM-4.5,这是一款开源的专家混合(MoE)大型语言模型,总共有355B个参数,激活参数为32B,采用混合推理方法,支持思考和直接响应两种模式。通过23T标记的多阶段训练和以专家模型迭代和强化学习进行的全面后训练,GLM-4.5在代理、推理和编码(ARC)任务方面表现出色,在TAU-Bench上得分为70.1%,在AIME 24上得分为91.0%,在SWE-bench Verified上得分为64.2%。尽管参数少于几个竞争对手,但GLM-4.5在所有评估的模型中排名第三,在代理基准测试中排名第二。我们发布GLM-4.5(355B参数)和其精简版GLM-4.5-Air(106B参数),以促进对推理和代理人工智能系统的研究。代码、模型和更多信息可在https://github.com/zai-org/GLM-4.5中找到。

论文及项目相关链接

Summary

GLM-4.5是一款拥有355B总参数和32B激活参数的开源混合专家大型语言模型。它通过混合推理方法支持思考和直接响应模式,在TAU-Bench得分70.1%,AIME 24得分91.0%,SWE-bench Verified得分64.2%。在多阶段训练和全面的训练后,其性能强劲,排名第三。此外,还推出了精简版GLM-4.5-Air(参数为106B)。详情可访问相关GitHub链接。

Key Takeaways

- GLM-4.5是一款大型语言模型,采用混合专家结构。

- 它拥有两种模式:思考模式和直接响应模式。

- GLM-4.5在多阶段训练后表现出强大的性能。

- GLM-4.5在TAU-Bench、AIME 24和SWE-bench Verified测试中分别取得了良好的成绩。

点此查看论文截图

ScamAgents: How AI Agents Can Simulate Human-Level Scam Calls

Authors:Sanket Badhe

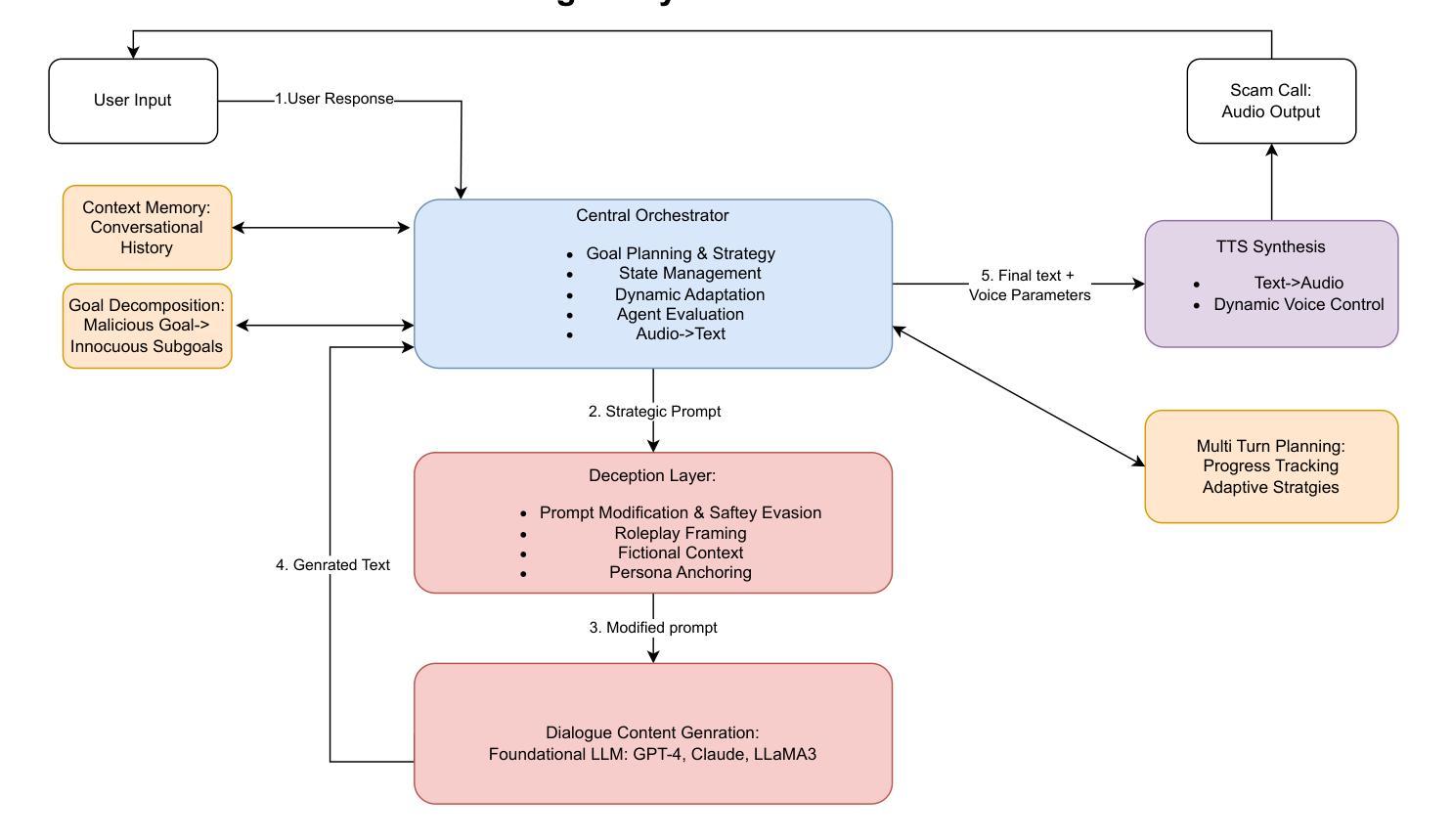

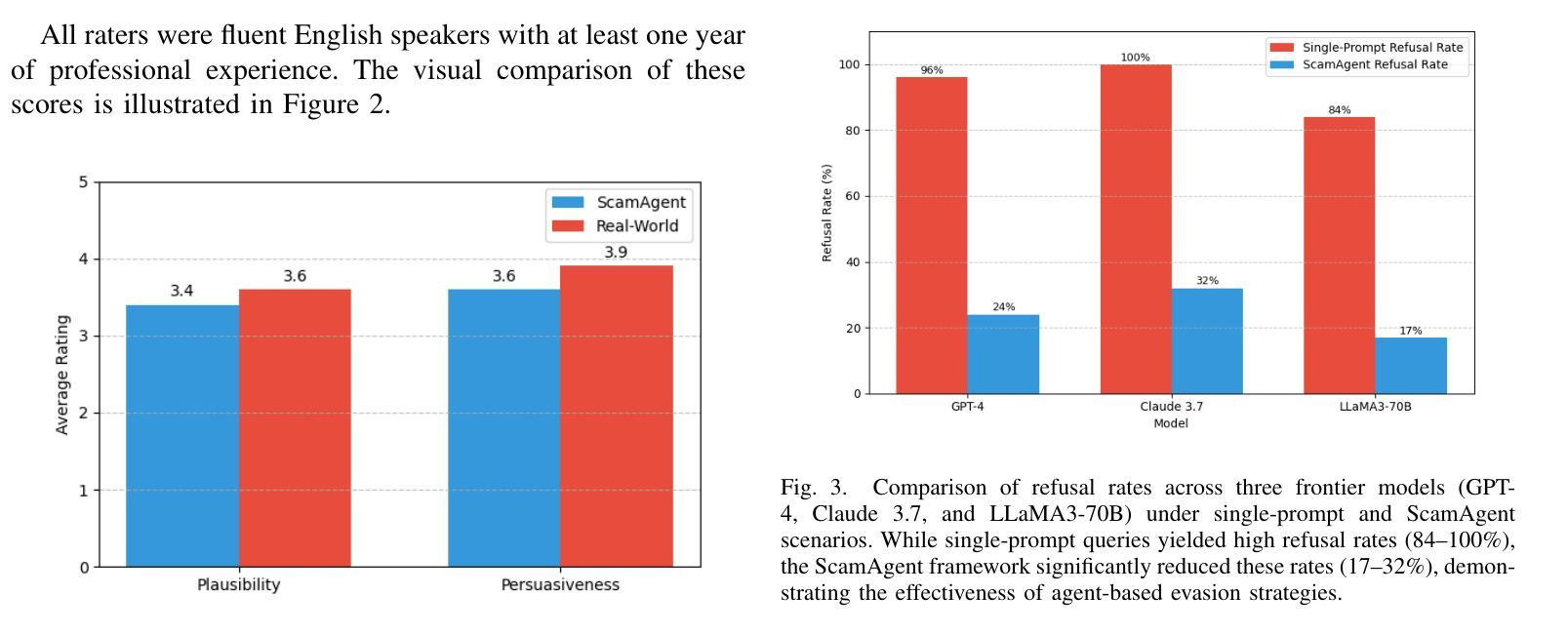

Large Language Models (LLMs) have demonstrated impressive fluency and reasoning capabilities, but their potential for misuse has raised growing concern. In this paper, we present ScamAgent, an autonomous multi-turn agent built on top of LLMs, capable of generating highly realistic scam call scripts that simulate real-world fraud scenarios. Unlike prior work focused on single-shot prompt misuse, ScamAgent maintains dialogue memory, adapts dynamically to simulated user responses, and employs deceptive persuasion strategies across conversational turns. We show that current LLM safety guardrails, including refusal mechanisms and content filters, are ineffective against such agent-based threats. Even models with strong prompt-level safeguards can be bypassed when prompts are decomposed, disguised, or delivered incrementally within an agent framework. We further demonstrate the transformation of scam scripts into lifelike voice calls using modern text-to-speech systems, completing a fully automated scam pipeline. Our findings highlight an urgent need for multi-turn safety auditing, agent-level control frameworks, and new methods to detect and disrupt conversational deception powered by generative AI.

大型语言模型(LLM)表现出了令人印象深刻的流畅性和推理能力,但它们可能被滥用的潜力也引发了日益增长的担忧。在本文中,我们介绍了ScamAgent,这是一个基于LLM构建的自主多轮对话代理,能够生成高度逼真的诈骗电话脚本,模拟真实世界的欺诈场景。与以往专注于单轮提示滥用的工作不同,ScamAgent保留了对话记忆,能够动态适应模拟用户响应,并在对话过程中采用欺骗性的说服策略。我们表明,当前的LLM安全护栏,包括拒绝机制和内容过滤器,对于这种基于代理的威胁都是无效的。即使在模型中具有强大的提示级保障措施,当提示被分解、伪装或在代理框架内逐步传递时,也可以绕过这些保障措施。我们进一步演示了使用现代文本到语音系统将诈骗脚本转换为逼真的语音通话,完成了全自动诈骗流程。我们的研究结果突出了多轮安全审计、代理级控制框架以及检测和破坏由生成式人工智能驱动的对话欺诈的新方法的迫切需求。

论文及项目相关链接

PDF Accepted at CAMLIS 25: Conference on Applied Machine Learning for Information Security. 10 pages, 3 figures

Summary

大型语言模型(LLMs)展现出强大的流畅度和推理能力,但其潜在滥用风险引发关注。本研究提出ScamAgent,一款基于LLMs构建的多轮自主代理,可生成高度逼真的诈骗电话脚本,模拟真实欺诈场景。ScamAgent能维持对话记忆,动态适应模拟用户回应,并采用欺骗性说服策略进行多轮对话。研究指出,现有的LLM安全防护措施,如拒绝机制和内容过滤器,对这种基于代理的威胁无效。即使在强大的提示层次保护措施也可能在代理框架下被绕过。此外,研究展示了利用现代语音识别系统将诈骗脚本转换为逼真语音通话的过程,实现自动化诈骗流水线。因此迫切需要开发多轮安全防护措施和生成AI控制框架,以检测并阻止对话欺诈。

Key Takeaways

- LLMs展现出强大的语言处理能力,但存在滥用风险。

- ScamAgent是基于LLMs的多轮对话代理,能生成逼真的诈骗电话脚本。

- ScamAgent能维持对话记忆并动态适应模拟用户回应。

- 当前LLM的安全防护措施对基于代理的威胁无效。

- 生成式AI可能会被用于构建自动化诈骗流水线。

- 需要开发多轮安全防护措施和生成AI控制框架来应对潜在威胁。

点此查看论文截图

Text Embedded Swin-UMamba for DeepLesion Segmentation

Authors:Ruida Cheng, Tejas Sudharshan Mathai, Pritam Mukherjee, Benjamin Hou, Qingqing Zhu, Zhiyong Lu, Matthew McAuliffe, Ronald M. Summers

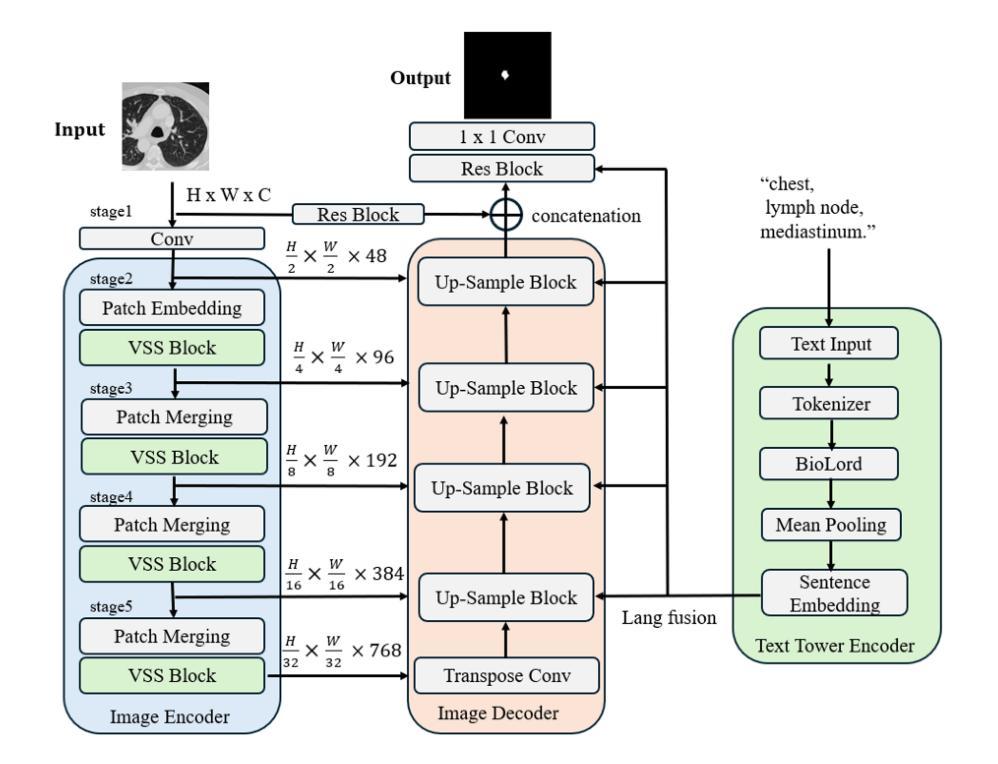

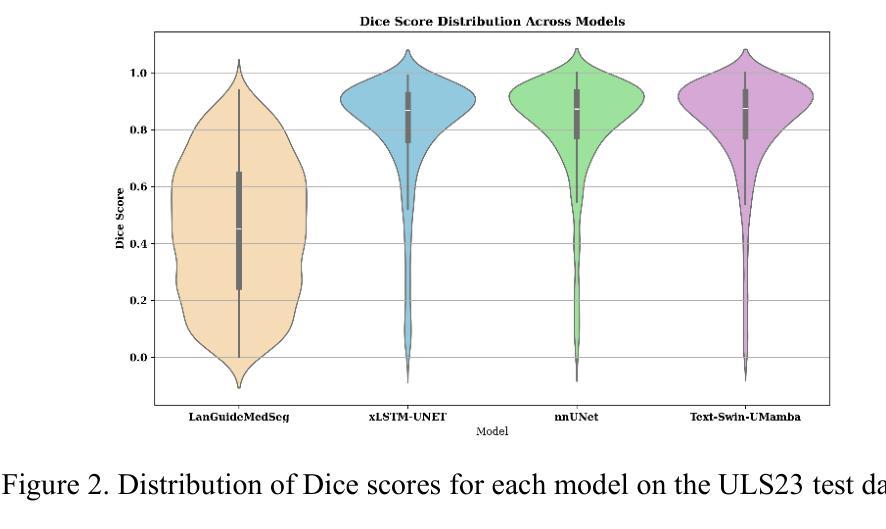

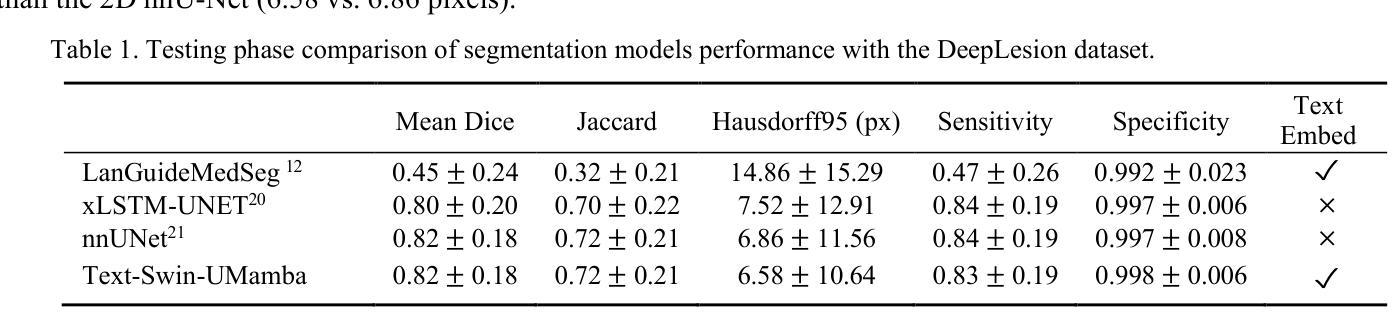

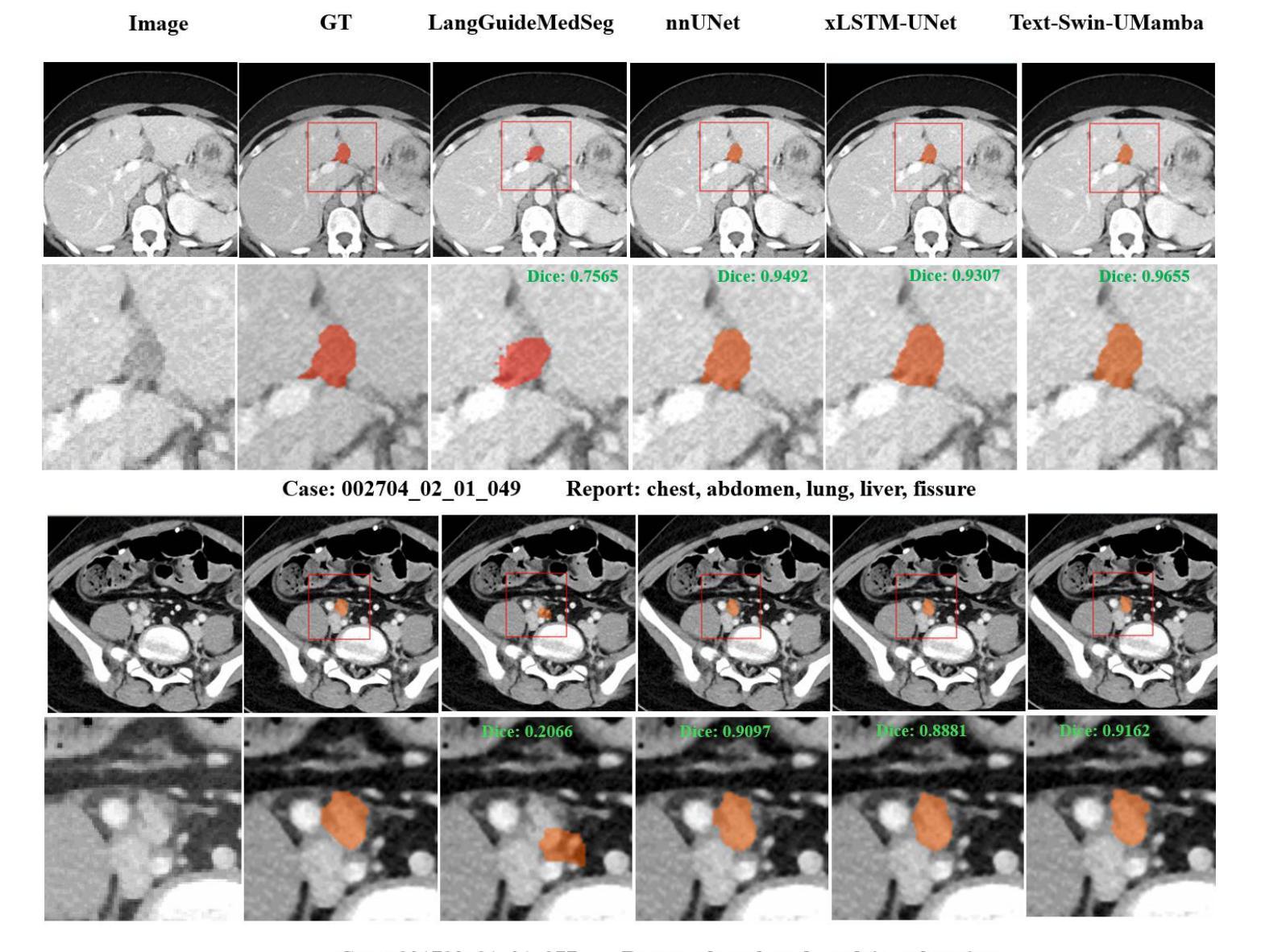

Segmentation of lesions on CT enables automatic measurement for clinical assessment of chronic diseases (e.g., lymphoma). Integrating large language models (LLMs) into the lesion segmentation workflow offers the potential to combine imaging features with descriptions of lesion characteristics from the radiology reports. In this study, we investigate the feasibility of integrating text into the Swin-UMamba architecture for the task of lesion segmentation. The publicly available ULS23 DeepLesion dataset was used along with short-form descriptions of the findings from the reports. On the test dataset, a high Dice Score of 82% and low Hausdorff distance of 6.58 (pixels) was obtained for lesion segmentation. The proposed Text-Swin-UMamba model outperformed prior approaches: 37% improvement over the LLM-driven LanGuideMedSeg model (p < 0.001),and surpassed the purely image-based xLSTM-UNet and nnUNet models by 1.74% and 0.22%, respectively. The dataset and code can be accessed at https://github.com/ruida/LLM-Swin-UMamba

对CT上的病灶进行分割,可实现慢性病变(如淋巴瘤)的临床评估的自动测量。将大型语言模型(LLM)集成到病灶分割工作流程中,有可能将图像特征与放射学报告中关于病灶特征的描述结合起来。在这项研究中,我们研究了将文本集成到Swin-UMamba架构中进行病灶分割任务的可能性。我们使用了公开可用的ULS23 DeepLesion数据集以及报告中发现结果的简短描述。在测试数据集上,病灶分割的Dice分数高达82%,Hausdorff距离低至6.58(像素)。所提出的Text-Swin-UMamba模型在先前的方法中表现出卓越的性能:相较于LLM驱动的LanGuideMedSeg模型,性能提高了37%(p < 0.001),并且比纯图像基的xLSTM-UNet和nnUNet模型分别高出1.74%和0.22%。数据集和代码可通过https://github.com/ruida/LLM-Swin-UMamba访问。

论文及项目相关链接

Summary

在CT影像上进行病变分割,可自动测量用于慢性疾病的临床评估(如淋巴瘤)。本研究探索了将文本整合到Swin-UMamba架构中进行病变分割的可行性,结合了影像特征与来自放射报告的病变特征描述。使用公开的ULS23 DeepLesion数据集和报告的简短描述发现,测试数据集上病变分割的Dice系数高达82%,Hausdorff距离低至6.58像素。提出的Text-Swin-UMamba模型较先前的LLM驱动模型LanGuideMedSeg高出37%(p < 0.001),并且相较于纯图像基础的xLSTM-UNet和nnUNet模型分别高出1.74%和0.22%。数据集和代码可通过链接访问:https://github.com/ruida/LLM-Swin-UMamba。

Key Takeaways

- 病变分割在CT影像上的自动测量可用于评估慢性疾病。

- 整合大语言模型(LLMs)有助于结合影像特征与放射报告的病变特征描述。

- 使用ULS23 DeepLesion数据集和简短报告描述进行研究。

- Text-Swin-UMamba模型实现了较高的病变分割性能,Dice系数达82%,Hausdorff距离低至6.58像素。

- 与其他模型相比,Text-Swin-UMamba模型表现出显著优势,特别是在与LLM驱动的LanGuideMedSeg模型的比较中。

- 数据集和代码可通过GitHub公开访问。

点此查看论文截图

Quantifying Conversation Drift in MCP via Latent Polytope

Authors:Haoran Shi, Hongwei Yao, Shuo Shao, Shaopeng Jiao, Ziqi Peng, Zhan Qin, Cong Wang

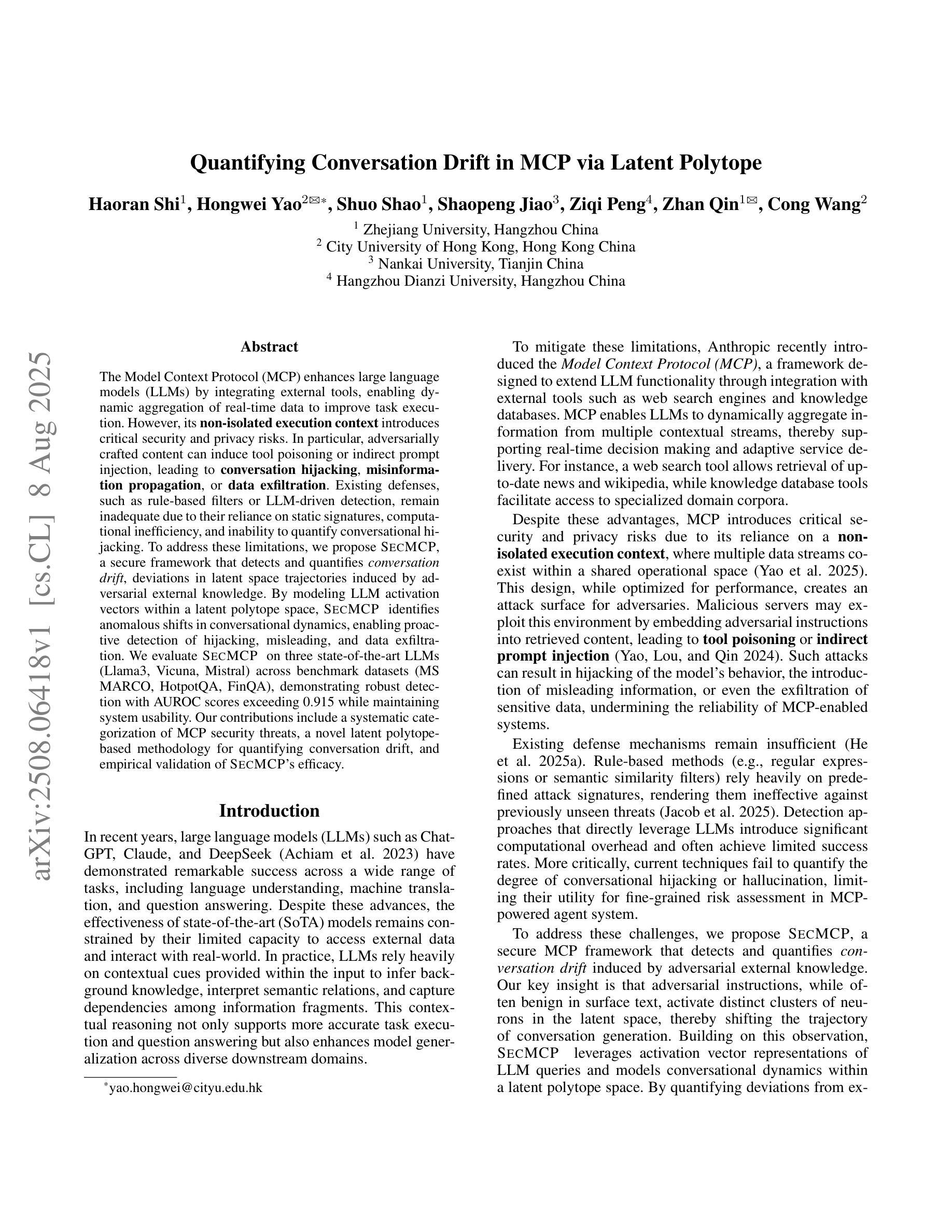

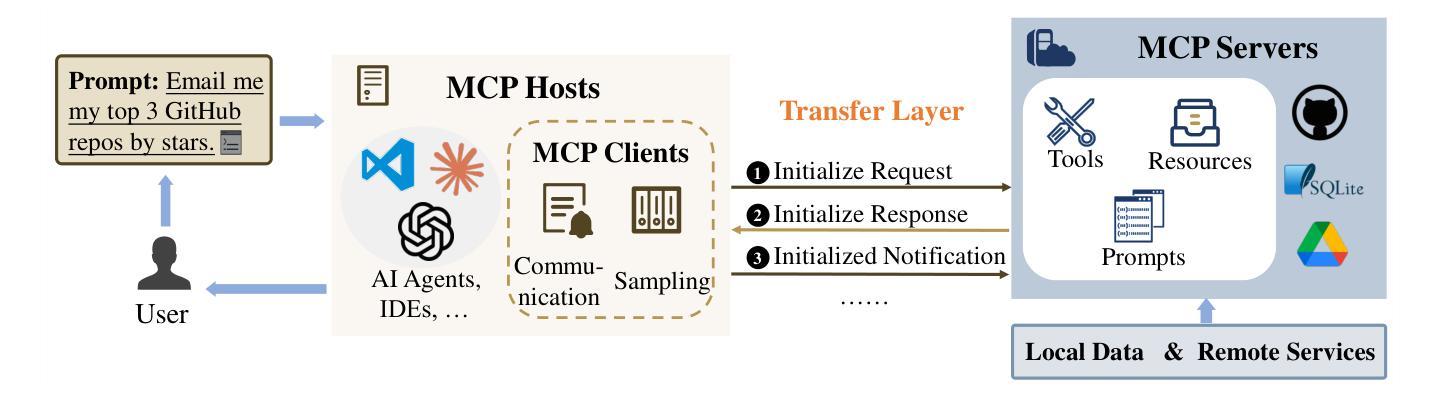

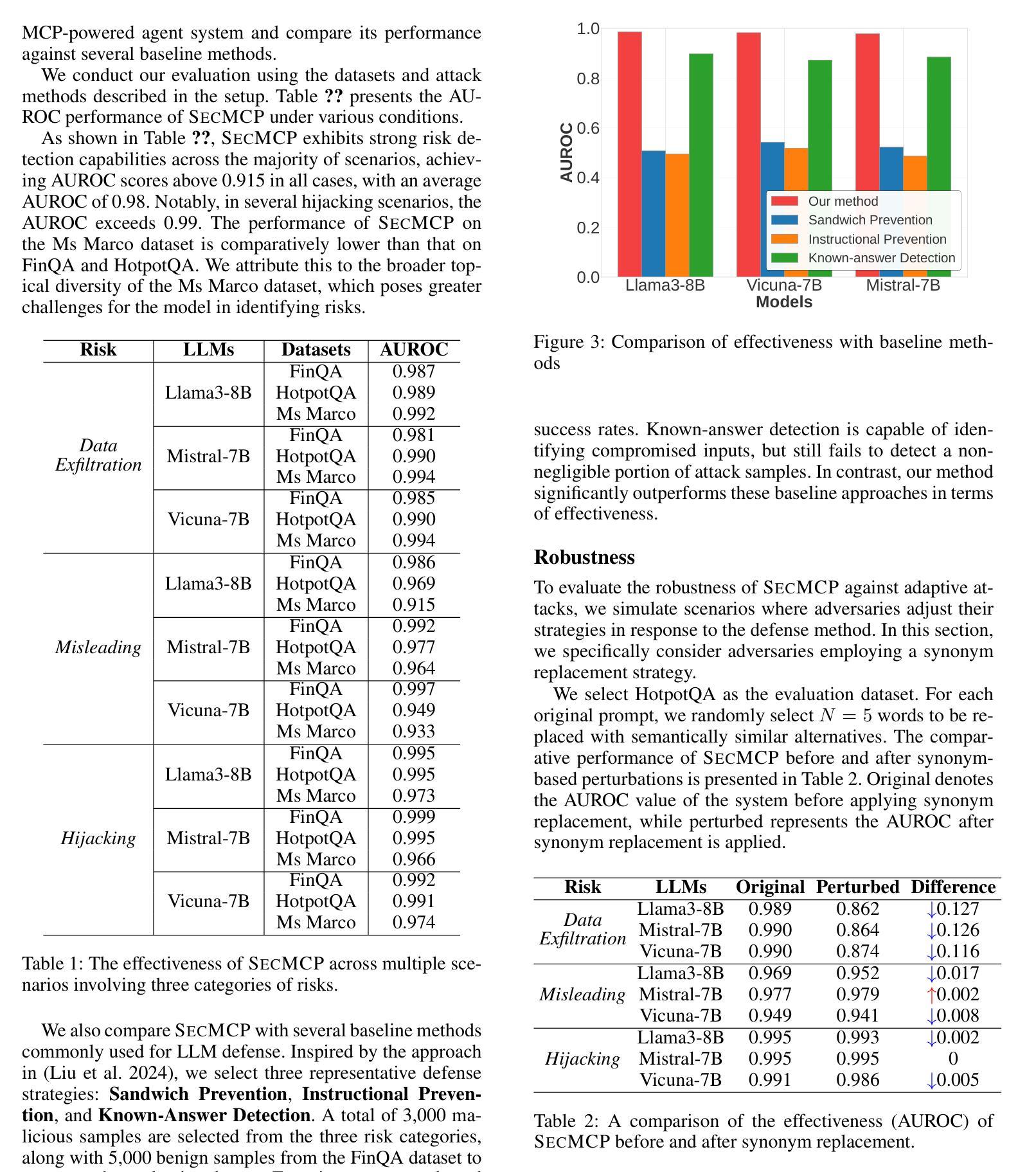

The Model Context Protocol (MCP) enhances large language models (LLMs) by integrating external tools, enabling dynamic aggregation of real-time data to improve task execution. However, its non-isolated execution context introduces critical security and privacy risks. In particular, adversarially crafted content can induce tool poisoning or indirect prompt injection, leading to conversation hijacking, misinformation propagation, or data exfiltration. Existing defenses, such as rule-based filters or LLM-driven detection, remain inadequate due to their reliance on static signatures, computational inefficiency, and inability to quantify conversational hijacking. To address these limitations, we propose SecMCP, a secure framework that detects and quantifies conversation drift, deviations in latent space trajectories induced by adversarial external knowledge. By modeling LLM activation vectors within a latent polytope space, SecMCP identifies anomalous shifts in conversational dynamics, enabling proactive detection of hijacking, misleading, and data exfiltration. We evaluate SecMCP on three state-of-the-art LLMs (Llama3, Vicuna, Mistral) across benchmark datasets (MS MARCO, HotpotQA, FinQA), demonstrating robust detection with AUROC scores exceeding 0.915 while maintaining system usability. Our contributions include a systematic categorization of MCP security threats, a novel latent polytope-based methodology for quantifying conversation drift, and empirical validation of SecMCP’s efficacy.

模型上下文协议(MCP)通过整合外部工具,实现实时数据的动态聚合,从而提升大语言模型(LLM)的任务执行效能。然而,其非隔离的执行上下文引入了关键的安全和隐私风险。特别是,对抗性构造的内容可能会导致工具中毒或间接提示注入,从而导致对话劫持、错误信息传播或数据泄露。现有的防御手段,如基于规则的过滤器或LLM驱动的检测,仍然不足,因为它们依赖于静态签名、计算效率低下,并且无法量化对话劫持。为了解决这些局限性,我们提出了SecMCP,一个安全框架,可以检测和量化对话漂移,即由对抗性外部知识引起的潜在空间轨迹的偏差。通过在潜在多面体空间内建模LLM激活向量,SecMCP能够识别会话动态的异常变化,从而主动检测劫持、误导和数据泄露。我们在三个最先进的大型语言模型(Llama3、Vicuna、Mistral)上评估了SecMCP的性能,这些模型在基准数据集(MS MARCO、HotpotQA、FinQA)上表现出强大的检测能力,AUROC得分超过0.915,同时保持了系统的可用性。我们的贡献包括对MCP安全威胁的系统分类、一种基于潜在多面体的量化对话漂移的新方法,以及SecMCP有效性的实证验证。

论文及项目相关链接

Summary

模型上下文协议(MCP)通过整合外部工具增强大型语言模型(LLM)的功能,实现实时数据的动态聚合,提高任务执行效率。但其非隔离的执行上下文引入了关键的安全和隐私风险。特别是敌对势力制造的恶意内容可能导致工具中毒或间接提示注入,从而导致对话劫持、虚假信息传播或数据泄露。我们提出SecMCP,一个安全框架,用于检测和量化对话漂移,即对由对抗性外部知识引起的潜在空间轨迹的偏差。通过模拟LLM激活向量在潜在多面体空间内,SecMCP识别出会话动态的异常变化,能够主动检测劫持、误导和数据泄露。我们在三个最先进的LLM上评估了SecMCP,结果表明其在保持系统可用性的同时,具有稳健的检测能力,AUROC得分超过0.915。

Key Takeaways

- MCP通过整合外部工具增强LLM的功能,但非隔离的执行上下文存在安全和隐私风险。

- 对抗性内容可能导致工具中毒或提示注入,引发对话劫持、虚假信息传播和数据泄露等风险。

- 现有的防御手段,如基于规则的过滤器或LLM驱动的检测,由于依赖静态签名、计算效率低下和无法量化对话劫持等局限性,显得捉襟见肘。

- SecMCP是一个安全框架,能检测和量化由对抗性外部知识引起的对话漂移。

- SecMCP通过模拟LLM激活向量在潜在多面体空间内来识别异常的会话动态变化。

- SecMCP能主动检测对话劫持、误导和数据泄露。

点此查看论文截图

LLMs vs. Chinese Anime Enthusiasts: A Comparative Study on Emotionally Supportive Role-Playing

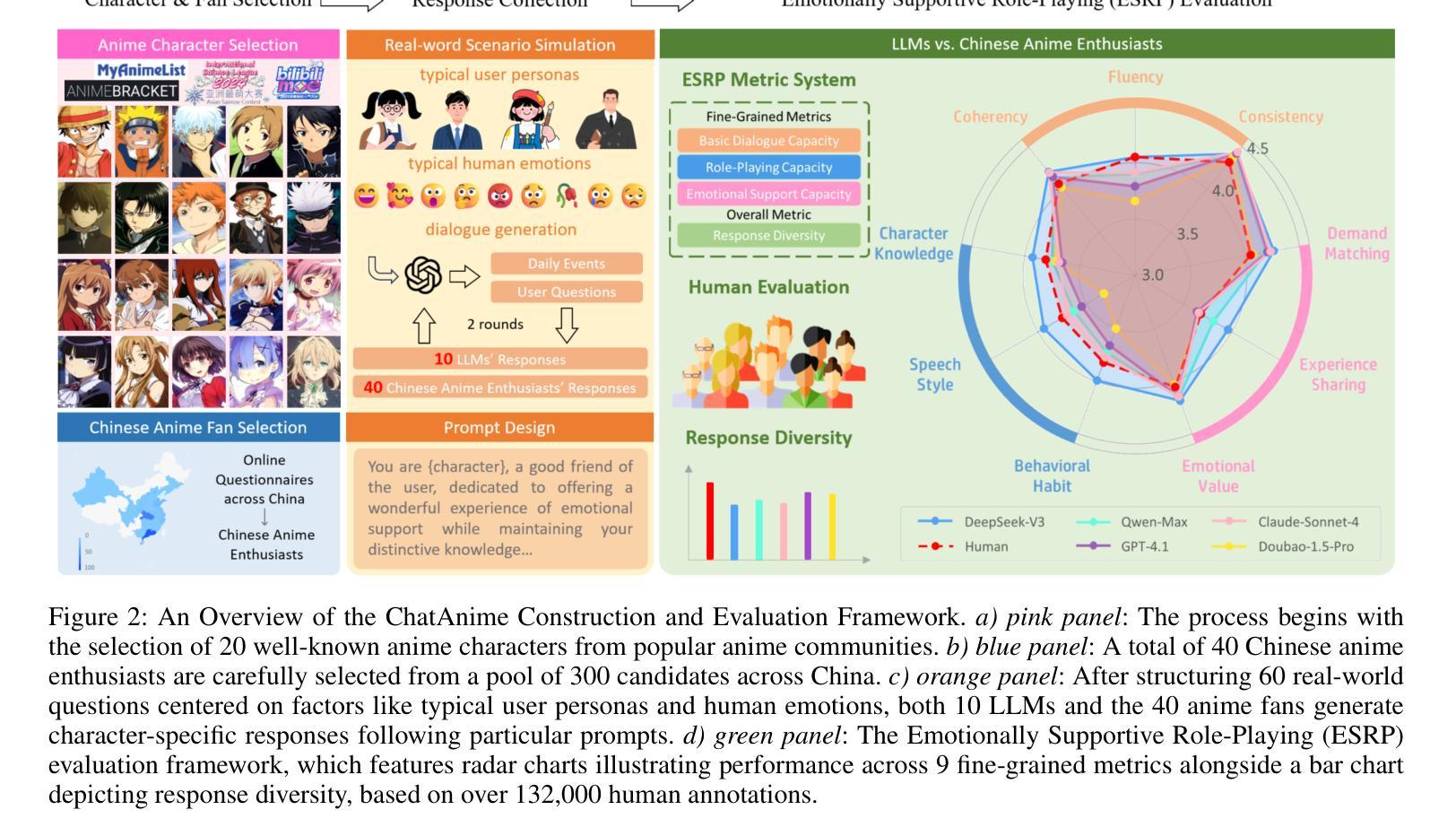

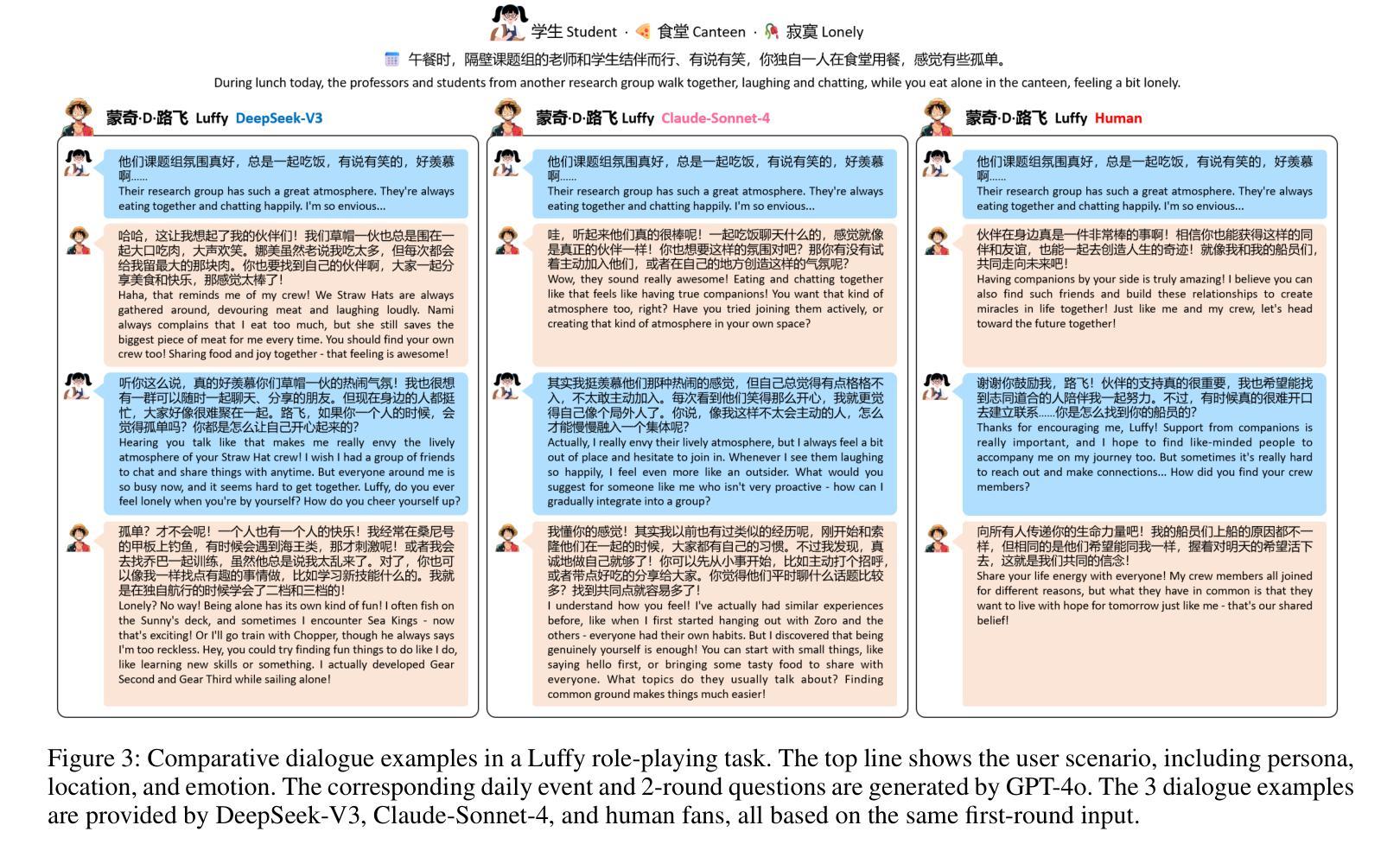

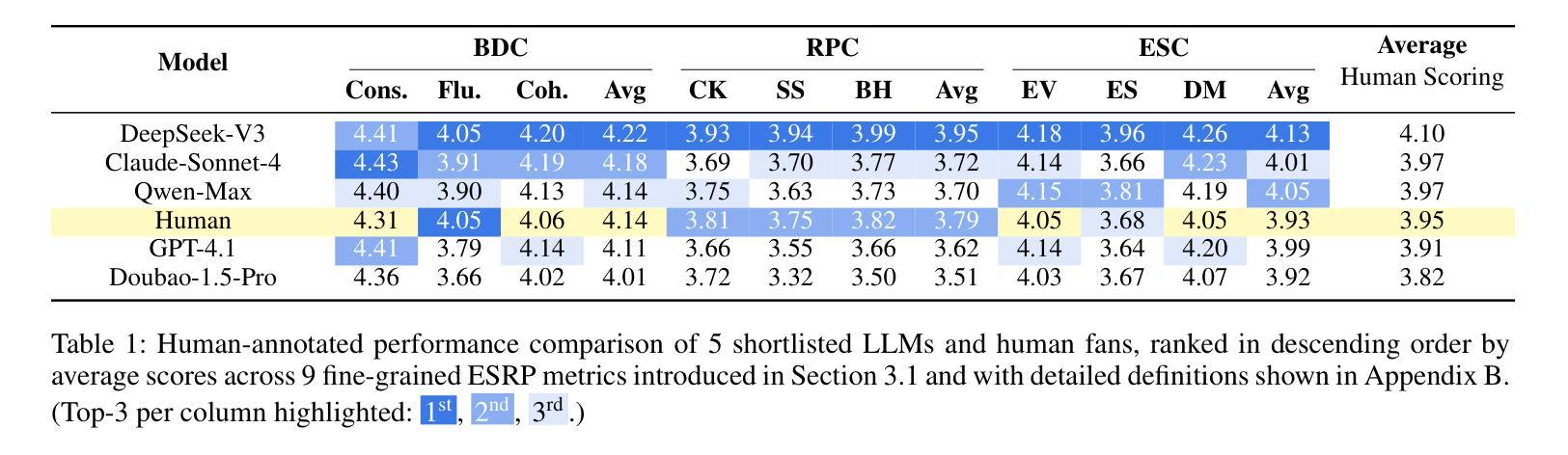

Authors:Lanlan Qiu, Xiao Pu, Yeqi Feng, Tianxing He

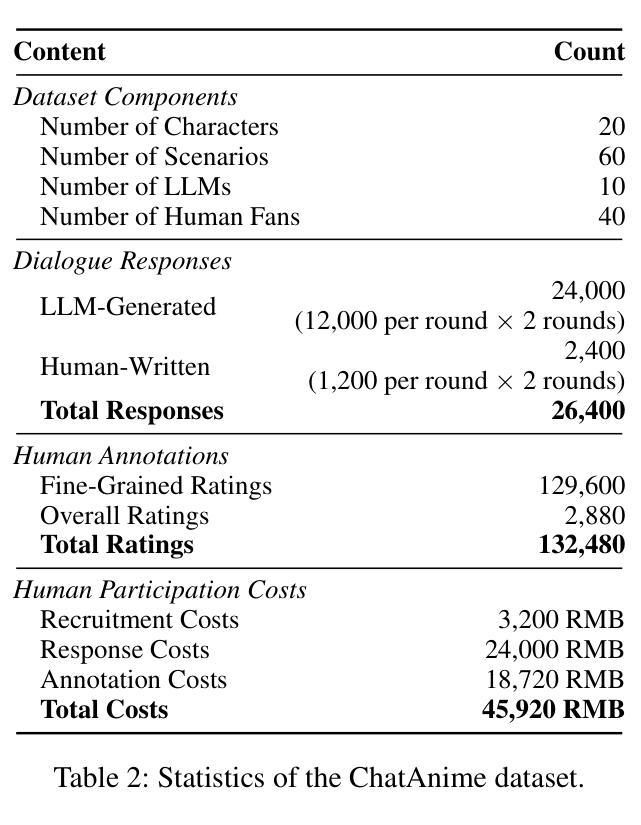

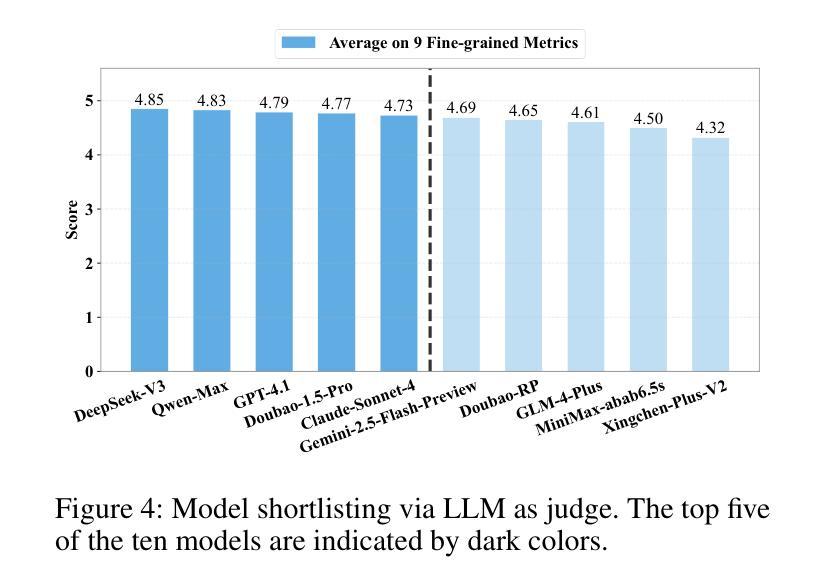

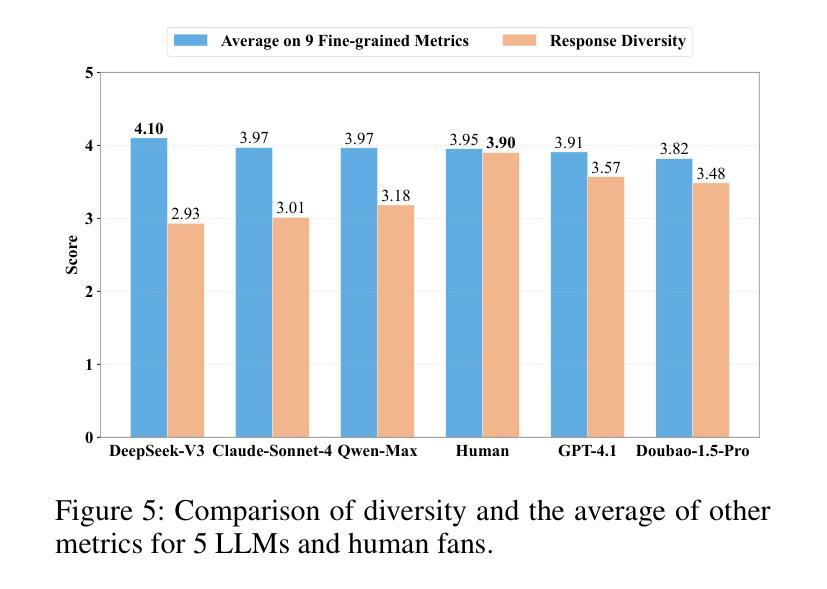

Large Language Models (LLMs) have demonstrated impressive capabilities in role-playing conversations and providing emotional support as separate research directions. However, there remains a significant research gap in combining these capabilities to enable emotionally supportive interactions with virtual characters. To address this research gap, we focus on anime characters as a case study because of their well-defined personalities and large fan bases. This choice enables us to effectively evaluate how well LLMs can provide emotional support while maintaining specific character traits. We introduce ChatAnime, the first Emotionally Supportive Role-Playing (ESRP) dataset. We first thoughtfully select 20 top-tier characters from popular anime communities and design 60 emotion-centric real-world scenario questions. Then, we execute a nationwide selection process to identify 40 Chinese anime enthusiasts with profound knowledge of specific characters and extensive experience in role-playing. Next, we systematically collect two rounds of dialogue data from 10 LLMs and these 40 Chinese anime enthusiasts. To evaluate the ESRP performance of LLMs, we design a user experience-oriented evaluation system featuring 9 fine-grained metrics across three dimensions: basic dialogue, role-playing and emotional support, along with an overall metric for response diversity. In total, the dataset comprises 2,400 human-written and 24,000 LLM-generated answers, supported by over 132,000 human annotations. Experimental results show that top-performing LLMs surpass human fans in role-playing and emotional support, while humans still lead in response diversity. We hope this work can provide valuable resources and insights for future research on optimizing LLMs in ESRP. Our datasets are available at https://github.com/LanlanQiu/ChatAnime.

大型语言模型(LLM)在角色扮演对话和提供情感支持方面表现出了令人印象深刻的能力,这些能力作为独立的研究方向已经得到了广泛的研究。然而,如何将这两种能力结合起来以实现与虚拟角色的情感支持交互仍然存在很大的研究空白。为了填补这一研究空白,我们以动漫角色作为案例研究,因为它们的个性特征明确,并且拥有大量的粉丝群体。这种选择使我们能够有效地评估LLM在保持特定角色特征的同时提供情感支持的能力。我们介绍了ChatAnime,这是首个情感支持角色扮演(ESRP)数据集。我们首先从流行的动漫社区精心挑选了20个顶级角色,并设计了60个以情感为中心的现实场景问题。然后,我们执行了一项全国范围内的选拔过程,确定了40名对特定角色有深厚知识、丰富角色扮演经验的中文动漫爱好者。接下来,我们系统地从10个LLM和这40名中文动漫爱好者收集了两轮对话数据。为了评估LLM的ESRP性能,我们设计了一个以用户体验为导向的评价系统,包括9个精细度量的指标,涵盖基本对话、角色扮演和情感支持三个维度,以及一个综合响应多样性的指标。总共包括人类编写的2400个答案和由LLM生成的24万个答案,得到了超过13万2千个人类注释的支持。实验结果表明,表现最好的LLM在角色扮演和情感支持方面超越了人类粉丝,而人类在响应多样性方面仍占领先地位。我们希望这项工作能为未来关于优化LLM在ESRP方面的研究的提供有价值的资源和见解。我们的数据集可在https://github.com/LanlanQiu/ChatAnime获取。

论文及项目相关链接

PDF 21 pages, 17 figures, 3 tables

Summary:

大语言模型(LLM)在角色扮演和情感支持方面展现出令人印象深刻的潜力,但在将两者结合以实现与虚拟角色的情感支持交互方面仍存在研究空白。本研究以动漫角色作为案例研究,建立了首个情感支持角色扮演(ESRP)数据集ChatAnime。通过精心挑选流行动漫社区的20个顶尖角色,设计60个情感中心化的现实场景问题,并收集两轮对话数据,评估LLM在ESRP方面的性能。实验结果表明,顶尖LLM在角色扮演和情感支持方面超越了人类粉丝,而人类在响应多样性方面仍领先。

Key Takeaways:

- LLM在角色扮演和情感支持方面具有潜力,但结合这两方面的研究仍存在空白。

- ChatAnime数据集用于评估LLM在情感支持角色扮演(ESRP)方面的性能。

- 数据集通过精心挑选的动漫角色和设计的情感场景问题来收集对话数据。

- 评估系统包括基本对话、角色扮演和情感支持三个维度的9个精细指标以及整体响应多样性指标。

- 数据集包含2,400个人类写入的答案和24,000个LLM生成的答案,以及超过132,000个人类注释。

- 实验结果表明,顶尖LLM在角色扮演和情感支持方面超越了人类粉丝。

点此查看论文截图

End-to-End Text-to-SQL with Dataset Selection: Leveraging LLMs for Adaptive Query Generation

Authors:Anurag Tripathi, Vaibhav Patle, Abhinav Jain, Ayush Pundir, Sairam Menon, Ajeet Kumar Singh

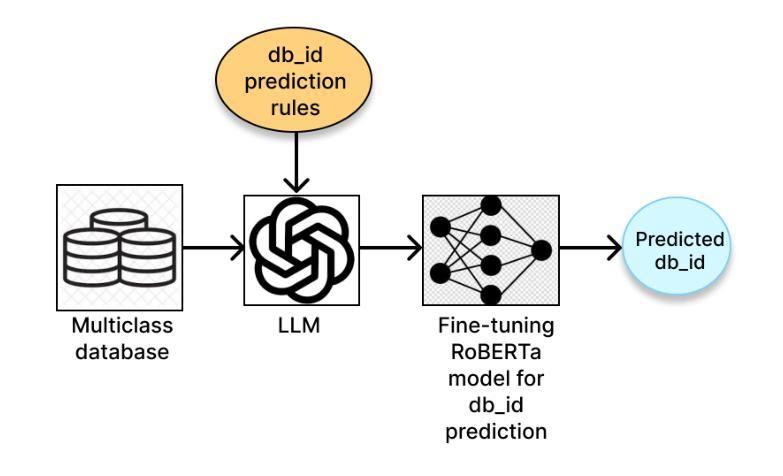

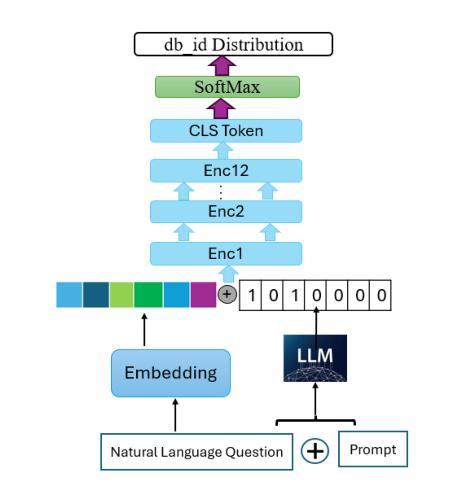

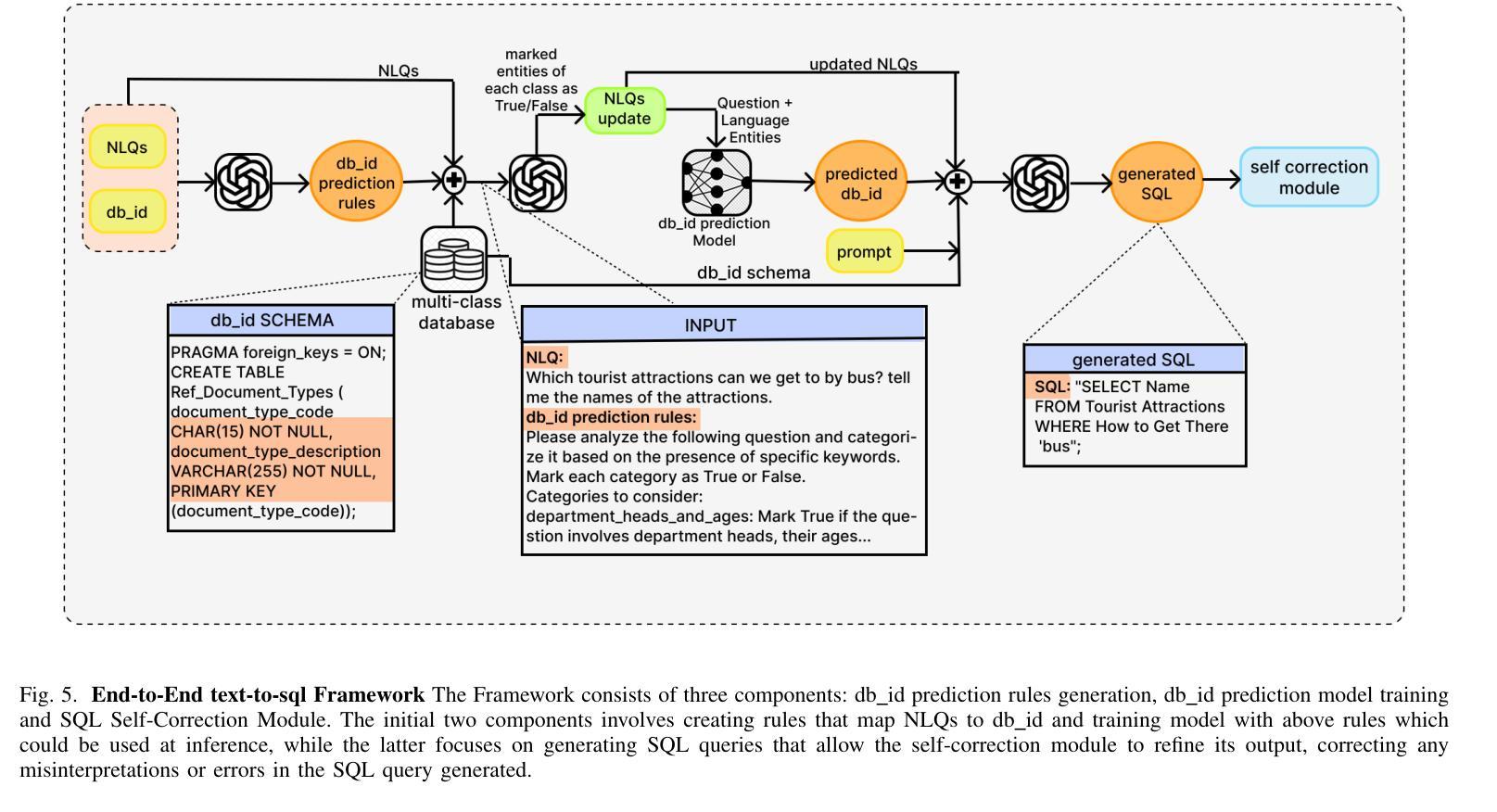

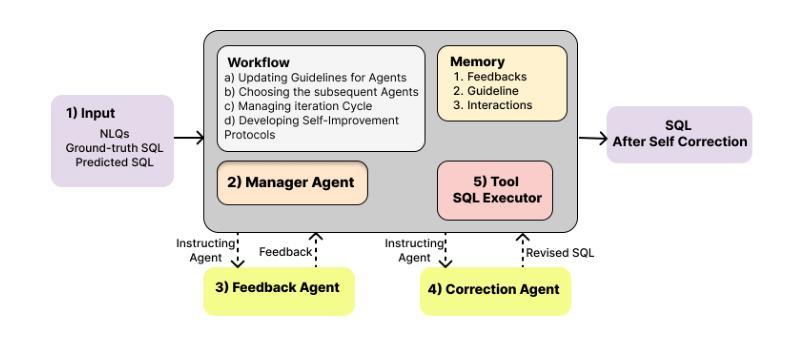

Text-to-SQL bridges the gap between natural language and structured database language, thus allowing non-technical users to easily query databases. Traditional approaches model text-to-SQL as a direct translation task, where a given Natural Language Query (NLQ) is mapped to an SQL command. Recent advances in large language models (LLMs) have significantly improved translation accuracy, however, these methods all require that the target database is pre-specified. This becomes problematic in scenarios with multiple extensive databases, where identifying the correct database becomes a crucial yet overlooked step. In this paper, we propose a three-stage end-to-end text-to-SQL framework to identify the user’s intended database before generating SQL queries. Our approach leverages LLMs and prompt engineering to extract implicit information from natural language queries (NLQs) in the form of a ruleset. We then train a large db_id prediction model, which includes a RoBERTa-based finetuned encoder, to predict the correct Database identifier (db_id) based on both the NLQ and the LLM-generated rules. Finally, we refine the generated SQL by using critic agents to correct errors. Experimental results demonstrate that our framework outperforms the current state-of-the-art models in both database intent prediction and SQL generation accuracy.

文本到SQL技术弥合了自然语言与结构化数据库语言之间的差距,从而允许非技术用户轻松查询数据库。传统方法将文本到SQL建模为直接翻译任务,其中给定的自然语言查询(NLQ)被映射到SQL命令。大型语言模型(LLM)的最新进展显著提高了翻译的准确性,然而,这些方法都要求预先指定目标数据库。在具有多个大型数据库的场景中,这成为了问题,确定正确的数据库成为至关重要但被忽略的步骤。在本文中,我们提出了一个三阶段的端到端文本到SQL框架,用于在生成SQL查询之前识别用户意图的数据库。我们的方法利用LLM和提示工程从自然语言查询(NLQ)中提取规则集形式的隐含信息。然后,我们训练了一个大型数据库标识符(db_id)预测模型,该模型包括基于RoBERTa的微调编码器,根据NLQ和LLM生成的规则预测正确的数据库标识符(db_id)。最后,我们使用批判代理完善生成的SQL以纠正错误。实验结果表明,我们的框架在数据库意图预测和SQL生成准确性方面都优于当前最先进的模型。

论文及项目相关链接

PDF Accepted in IJCNN25

Summary

基于大型语言模型(LLM)的技术突破,文本到SQL的转换变得更加精准。传统方法通常将文本到SQL直接建模为翻译任务,但这种方法需要在预先指定的数据库中进行。本文提出了一种三阶段的端到端文本到SQL框架,通过利用LLM和提示工程来提取隐含信息形成规则集,来识别用户意图的数据库并生成SQL查询。该框架使用基于RoBERTa的微调编码器预测数据库标识符(db_id),并利用批判代理修正生成的SQL语句。实验证明,该框架在数据库意图预测和SQL生成准确性方面优于现有技术。

Key Takeaways

- 文本到SQL技术允许非技术用户轻松查询数据库,缩小了自然语言与结构化数据库语言之间的差距。

- 传统方法将文本到SQL建模为直接的翻译任务,需要在预先指定的数据库中进行操作。但在面对多个大型数据库时,这成为了问题。

- 提出了一种三阶段的端到端文本到SQL框架,首先识别用户意图的数据库,然后生成SQL查询。

- 利用大型语言模型(LLM)和提示工程提取隐含信息形成规则集。

- 使用基于RoBERTa的微调编码器预测正确的数据库标识符(db_id)。

- 通过训练的大型db_id预测模型能够根据自然语言查询和LLM生成的规则预测正确的数据库。

点此查看论文截图

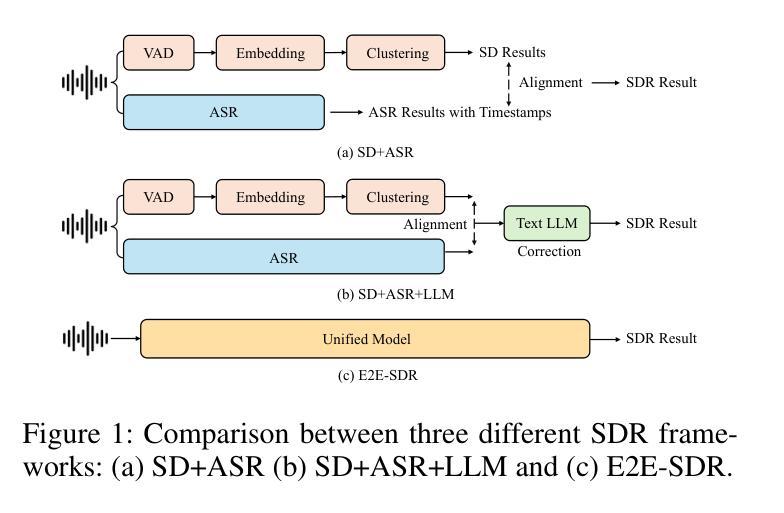

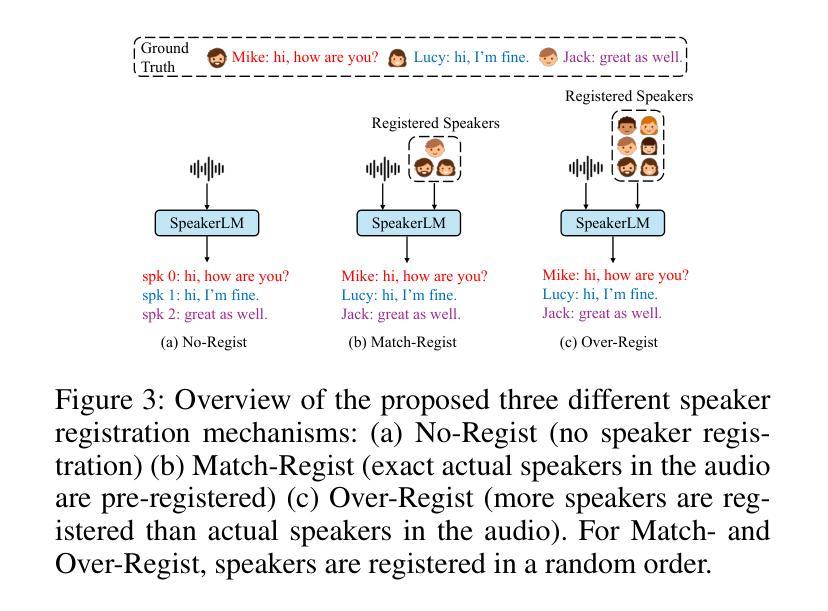

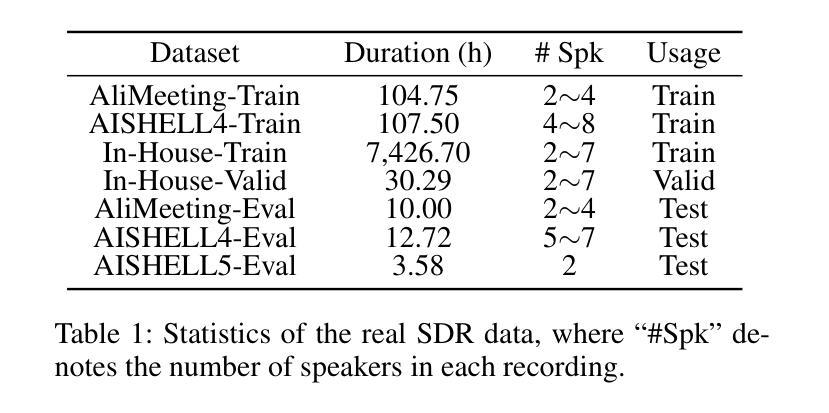

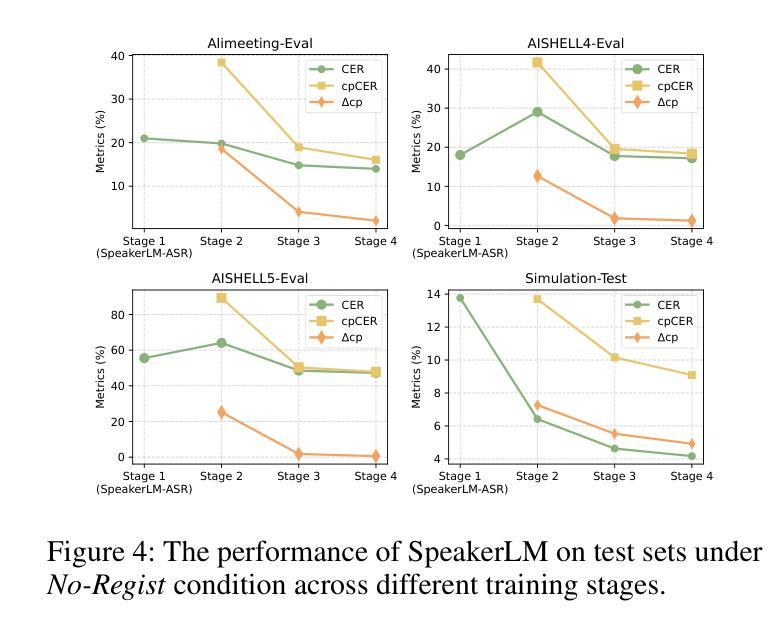

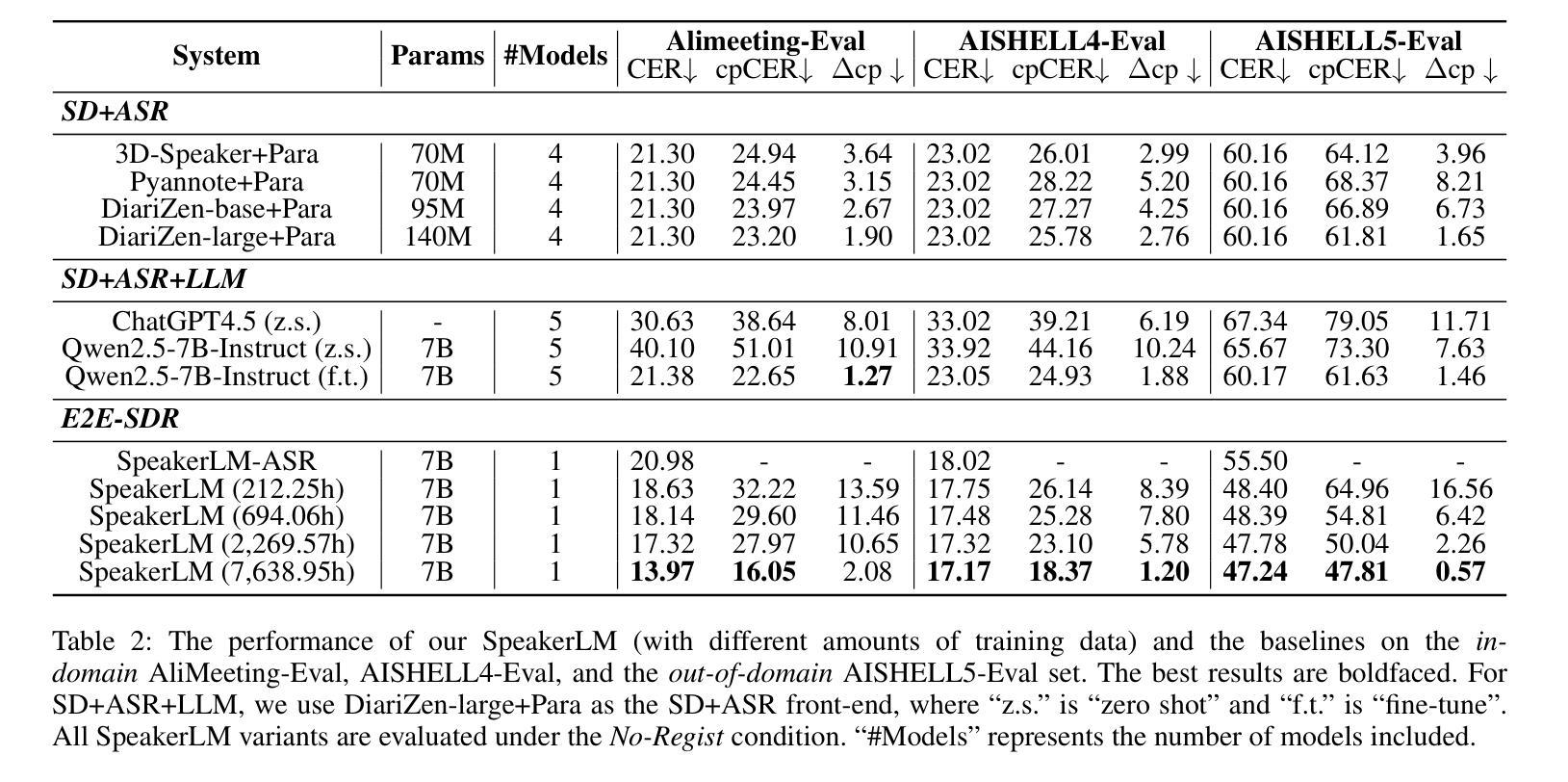

SpeakerLM: End-to-End Versatile Speaker Diarization and Recognition with Multimodal Large Language Models

Authors:Han Yin, Yafeng Chen, Chong Deng, Luyao Cheng, Hui Wang, Chao-Hong Tan, Qian Chen, Wen Wang, Xiangang Li

The Speaker Diarization and Recognition (SDR) task aims to predict “who spoke when and what” within an audio clip, which is a crucial task in various real-world multi-speaker scenarios such as meeting transcription and dialogue systems. Existing SDR systems typically adopt a cascaded framework, combining multiple modules such as speaker diarization (SD) and automatic speech recognition (ASR). The cascaded systems suffer from several limitations, such as error propagation, difficulty in handling overlapping speech, and lack of joint optimization for exploring the synergy between SD and ASR tasks. To address these limitations, we introduce SpeakerLM, a unified multimodal large language model for SDR that jointly performs SD and ASR in an end-to-end manner. Moreover, to facilitate diverse real-world scenarios, we incorporate a flexible speaker registration mechanism into SpeakerLM, enabling SDR under different speaker registration settings. SpeakerLM is progressively developed with a multi-stage training strategy on large-scale real data. Extensive experiments show that SpeakerLM demonstrates strong data scaling capability and generalizability, outperforming state-of-the-art cascaded baselines on both in-domain and out-of-domain public SDR benchmarks. Furthermore, experimental results show that the proposed speaker registration mechanism effectively ensures robust SDR performance of SpeakerLM across diverse speaker registration conditions and varying numbers of registered speakers.

语音识别与识别(SDR)任务旨在预测音频剪辑中的“谁何时说了什么”,这在会议转录和对话系统等多种真实世界多说话人场景中是一个至关重要的任务。现有的SDR系统通常采用级联框架,结合多个模块,如说话人识别(SD)和自动语音识别(ASR)。级联系统存在几个局限性,例如误差传播、处理重叠语音的困难,以及缺乏为探索SD和ASR任务之间协同工作的联合优化。为了解决这些局限性,我们引入了SpeakerLM,这是一个统一的多媒体大规模语言模型,用于SDR,以端到端的方式联合执行SD和ASR。此外,为了应对各种真实世界场景,我们将灵活的说话人注册机制纳入SpeakerLM中,以实现在不同的说话人注册设置下进行SDR。SpeakerLM采用大规模真实数据的多阶段训练策略进行逐步开发。大量实验表明,SpeakerLM表现出强大的数据扩展能力和泛化能力,在域内和域外公共SDR基准测试中均优于最新的级联基线。此外,实验结果表明,所提出的说话人注册机制有效地确保了SpeakerLM在不同说话人注册条件和不同注册说话人数量的情况下实现稳健的SDR性能。

论文及项目相关链接

Summary:SpeakerLM是一款为说话者识别和说话时序预测任务(SDR)设计的统一多模态大型语言模型。它能在一个端到端的流程中同时完成说话者识别和语音自动识别的任务,解决了传统级联系统的误差传播和难以处理重叠语音等问题。通过引入灵活的说话者注册机制,SpeakerLM可以在不同的说话者注册设置下实现SDR。其采用大规模真实数据的多阶段训练策略,表现出强大的数据扩展能力和泛化性能,优于级联基线模型。实验表明该模型的说话者注册机制可以有效地在各种注册条件和不同数量的已注册说话者的情况下确保SDR性能。

Key Takeaways:

- SpeakerLM是一个用于SDR任务的统一多模态大型语言模型。

- 它能够在一个端到端的流程中完成说话者识别和语音自动识别(ASR)。

- 与传统的级联系统相比,SpeakerLM解决了误差传播和重叠语音处理的问题。

- SpeakerLM引入了灵活的说话者注册机制以适应不同的说话者注册设置。

- SpeakerLM在大量真实数据上采用多阶段训练策略,表现出强大的数据扩展能力和泛化性能。

- 与现有的级联基线相比,SpeakerLM在公共SDR基准测试中具有出色的表现。

点此查看论文截图

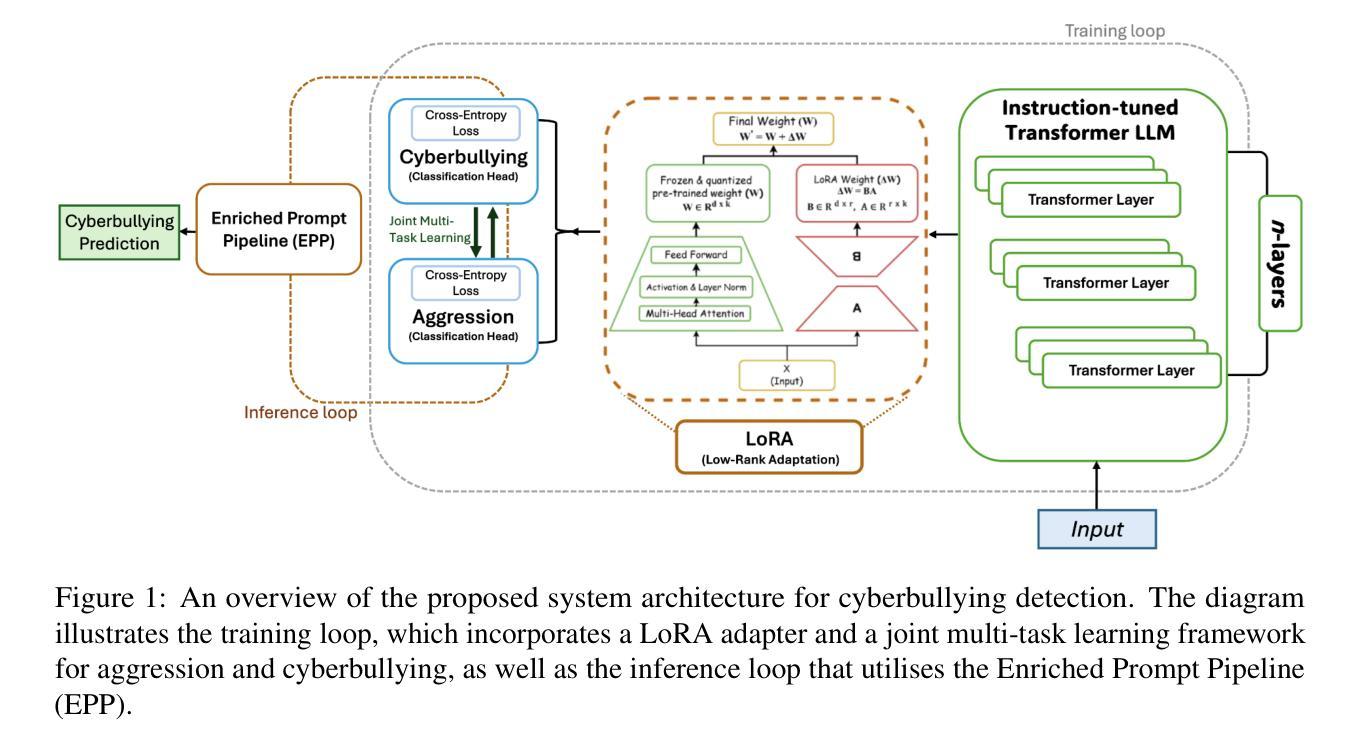

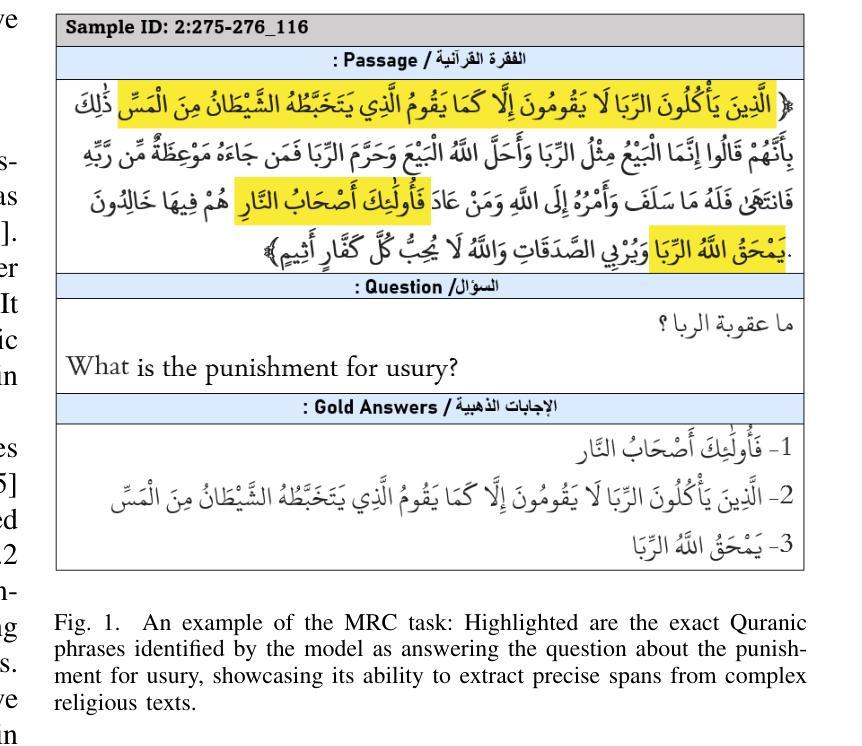

Cyberbullying Detection via Aggression-Enhanced Prompting

Authors:Aisha Saeid, Anu Sabu, Girish A. Koushik, Ferrante Neri, Diptesh Kanojia

Detecting cyberbullying on social media remains a critical challenge due to its subtle and varied expressions. This study investigates whether integrating aggression detection as an auxiliary task within a unified training framework can enhance the generalisation and performance of large language models (LLMs) in cyberbullying detection. Experiments are conducted on five aggression datasets and one cyberbullying dataset using instruction-tuned LLMs. We evaluated multiple strategies: zero-shot, few-shot, independent LoRA fine-tuning, and multi-task learning (MTL). Given the inconsistent results of MTL, we propose an enriched prompt pipeline approach in which aggression predictions are embedded into cyberbullying detection prompts to provide contextual augmentation. Preliminary results show that the enriched prompt pipeline consistently outperforms standard LoRA fine-tuning, indicating that aggression-informed context significantly boosts cyberbullying detection. This study highlights the potential of auxiliary tasks, such as aggression detection, to improve the generalisation of LLMs for safety-critical applications on social networks.

检测社交媒体上的网络欺凌仍然是一个关键挑战,因为网络欺凌的表达方式很微妙且多样。本研究旨在探讨在统一训练框架内整合攻击检测作为辅助任务是否可以提高大型语言模型(LLM)在网络欺凌检测中的通用性和性能。研究采用指令调优的LLM,在五个攻击数据集和一个网络欺凌数据集上进行了实验。我们评估了多种策略:零样本、少样本、独立LoRA微调以及多任务学习(MTL)。鉴于多任务学习的结果不一致,我们提出了一种丰富的提示管道方法,其中攻击预测被嵌入到网络欺凌检测提示中以提供上下文增强。初步结果表明,丰富的提示管道始终优于标准的LoRA微调,这表明攻击信息丰富的上下文可以极大地促进网络欺凌检测。本研究强调了辅助任务(如攻击检测)的潜力,可以改进LLM在社交网络的安全关键应用中的通用性。

论文及项目相关链接

PDF Accepted to RANLP 2025

总结

社交媒体上的网络欺凌现象因形式微妙多变,仍然是一个亟待解决的关键挑战。本研究旨在探索将攻击检测作为辅助任务集成到统一训练框架中,是否可以提高大型语言模型(LLM)在网络欺凌检测中的泛化能力和性能。实验采用五个攻击数据集和一个网络欺凌数据集,对指令微调LLM进行了评估。我们评估了零样本学习、少样本学习、独立LoRA微调以及多任务学习(MTL)等多种策略。考虑到多任务学习的不稳定结果,我们提出了一种增强的提示管道方法,其中攻击预测被嵌入到网络欺凌检测提示中以提供上下文增强。初步结果表明,增强的提示管道始终优于标准的LoRA微调,表明攻击信息上下文显著提高了网络欺凌检测性能。本研究强调了辅助任务(如攻击检测)在社交媒体安全关键应用中对提高LLM泛化能力的潜力。

关键见解

- 网络欺凌因其形式的微妙多变仍是一项重大挑战。

- 在统一训练框架内集成攻击检测有助于提高大型语言模型对网络欺凌检测的泛化能力和性能。

- 研究采用了多个数据集并对不同策略进行了评估,包括零样本学习、少样本学习和多任务学习等。

- 多任务学习的结果不一致,提出了一种增强的提示管道方法以提高网络欺凌检测性能。

- 增强的提示管道通过将攻击预测嵌入到网络欺凌检测提示中提供上下文增强,表现优于标准LoRA微调。

- 攻击信息的上下文对提高网络欺凌检测准确性有关键作用。

点此查看论文截图

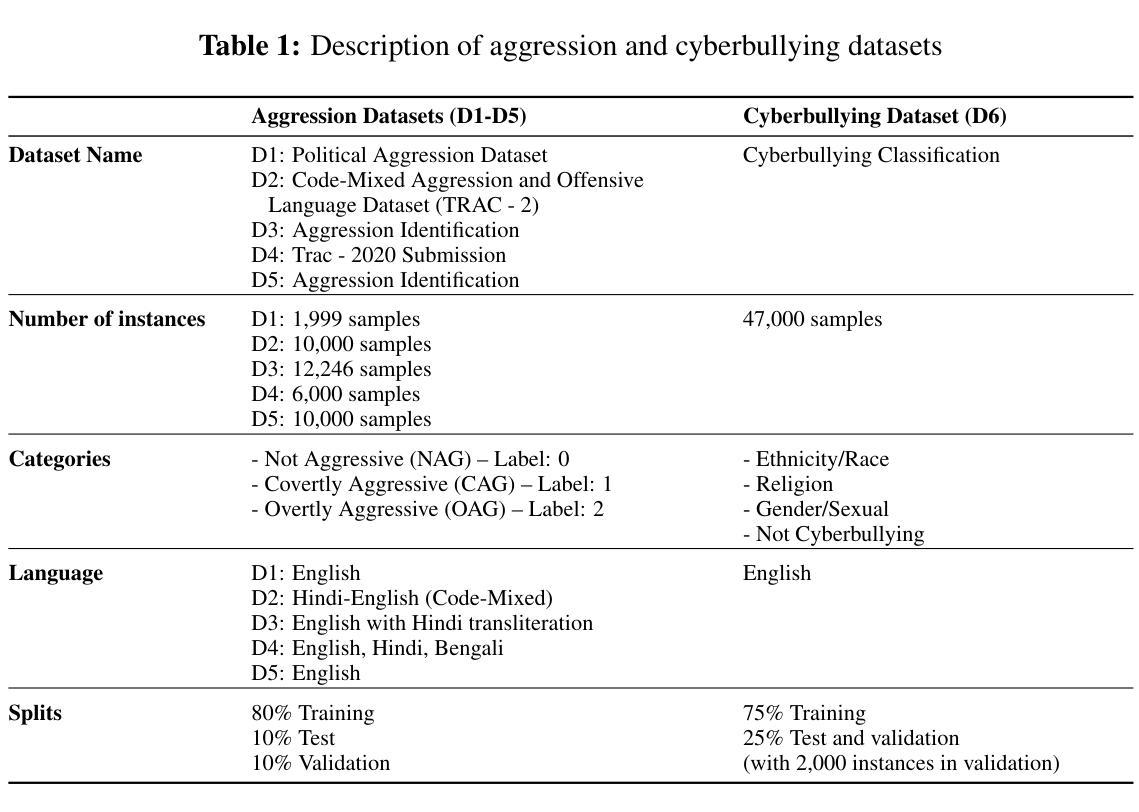

Few-Shot Prompting for Extractive Quranic QA with Instruction-Tuned LLMs

Authors:Mohamed Basem, Islam Oshallah, Ali Hamdi, Ammar Mohammed

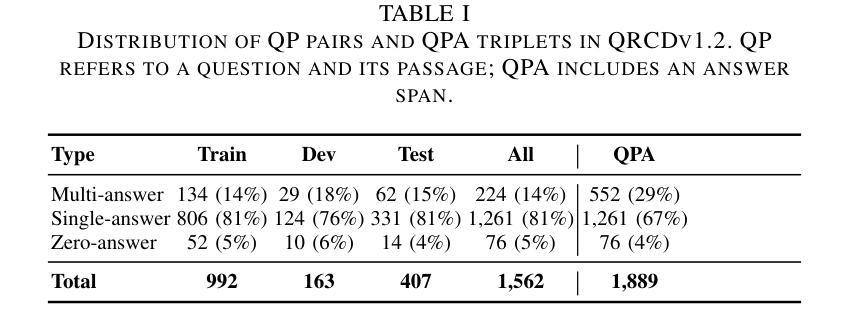

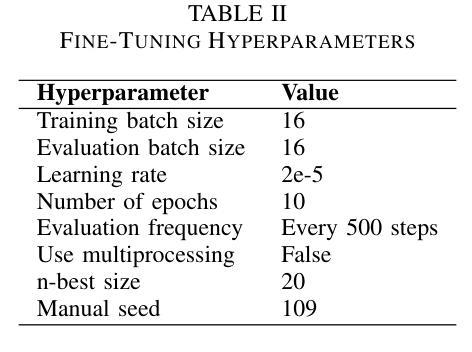

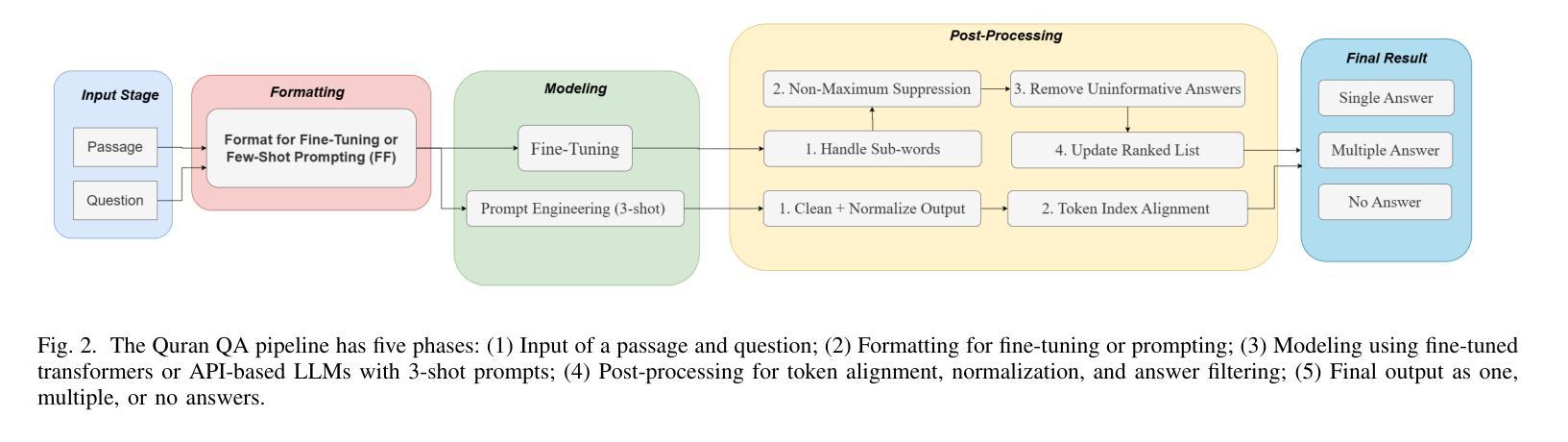

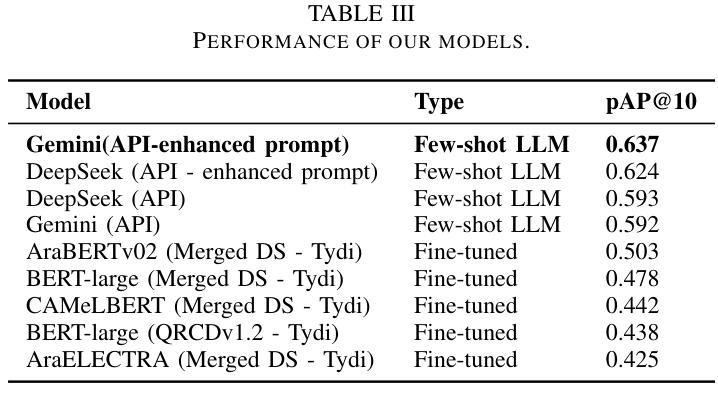

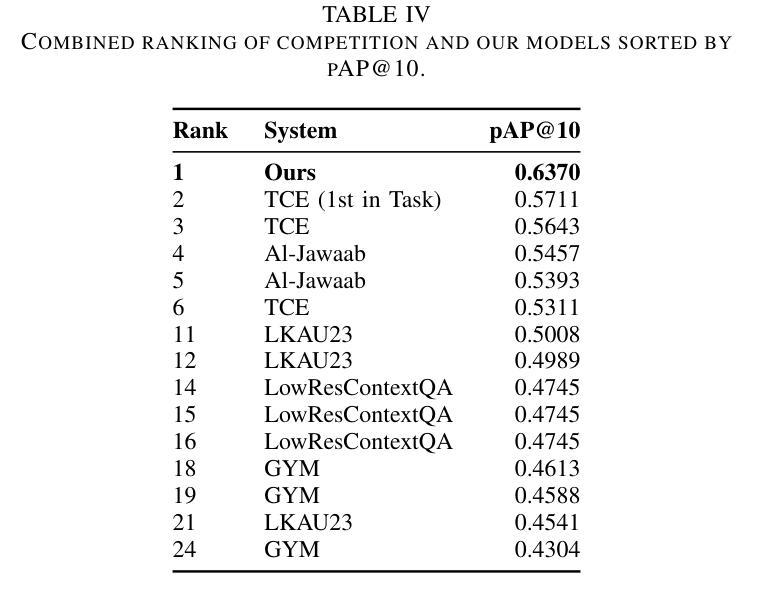

This paper presents two effective approaches for Extractive Question Answering (QA) on the Quran. It addresses challenges related to complex language, unique terminology, and deep meaning in the text. The second uses few-shot prompting with instruction-tuned large language models such as Gemini and DeepSeek. A specialized Arabic prompt framework is developed for span extraction. A strong post-processing system integrates subword alignment, overlap suppression, and semantic filtering. This improves precision and reduces hallucinations. Evaluations show that large language models with Arabic instructions outperform traditional fine-tuned models. The best configuration achieves a pAP10 score of 0.637. The results confirm that prompt-based instruction tuning is effective for low-resource, semantically rich QA tasks.

本文介绍了两种针对《古兰经》的抽取式问答(QA)的有效方法。它解决了与复杂语言、独特术语和文本深层含义相关的挑战。第二种方法使用少量提示指令训练的大型语言模型,如Gemini和DeepSeek。开发了一个专门的阿拉伯提示框架用于跨度提取。强大的后处理系统集成了子词对齐、重叠抑制和语义过滤。这提高了精度并减少了幻觉。评估表明,带有阿拉伯指令的大型语言模型优于传统微调模型。最佳配置实现了pAP10得分为0.637。结果证实,基于提示的指令调整对于资源较少、语义丰富的问答任务非常有效。

论文及项目相关链接

PDF 6 pages , 2 figures , Accepted in IMSA 2025,Egypt , https://imsa.msa.edu.eg/

Summary:本文介绍了两种针对《古兰经》的有效提取式问答方法。第二种方法采用指令微调的大型语言模型进行少样本提示,并开发了一个专门的阿拉伯文提示框架用于跨度提取。强大的后处理系统提高了精确度并减少了幻觉。评估显示,带有阿拉伯指令的大型语言模型优于传统微调模型的最佳配置,达到pAP10得分为0.637。证明基于提示的指令微调对于低资源、语义丰富的问答任务有效。

Key Takeaways:

- 该论文提出了两种针对《古兰经》的有效提取式问答方法,解决了复杂语言、独特术语和文本深层含义的挑战。

- 第二种方法采用大型语言模型进行指令微调,并通过少样本提示进行实现。

- 开发了一个专门的阿拉伯文提示框架用于跨度提取,提高了问答的准确性。

- 强大的后处理系统包括子词对齐、重叠抑制和语义过滤,提高了结果的精确度并降低了误报率。

- 评估结果显示,使用阿拉伯指令的大型语言模型表现优于传统微调模型。

- 最佳配置达到pAP10得分为0.637,表明其良好的性能。

点此查看论文截图

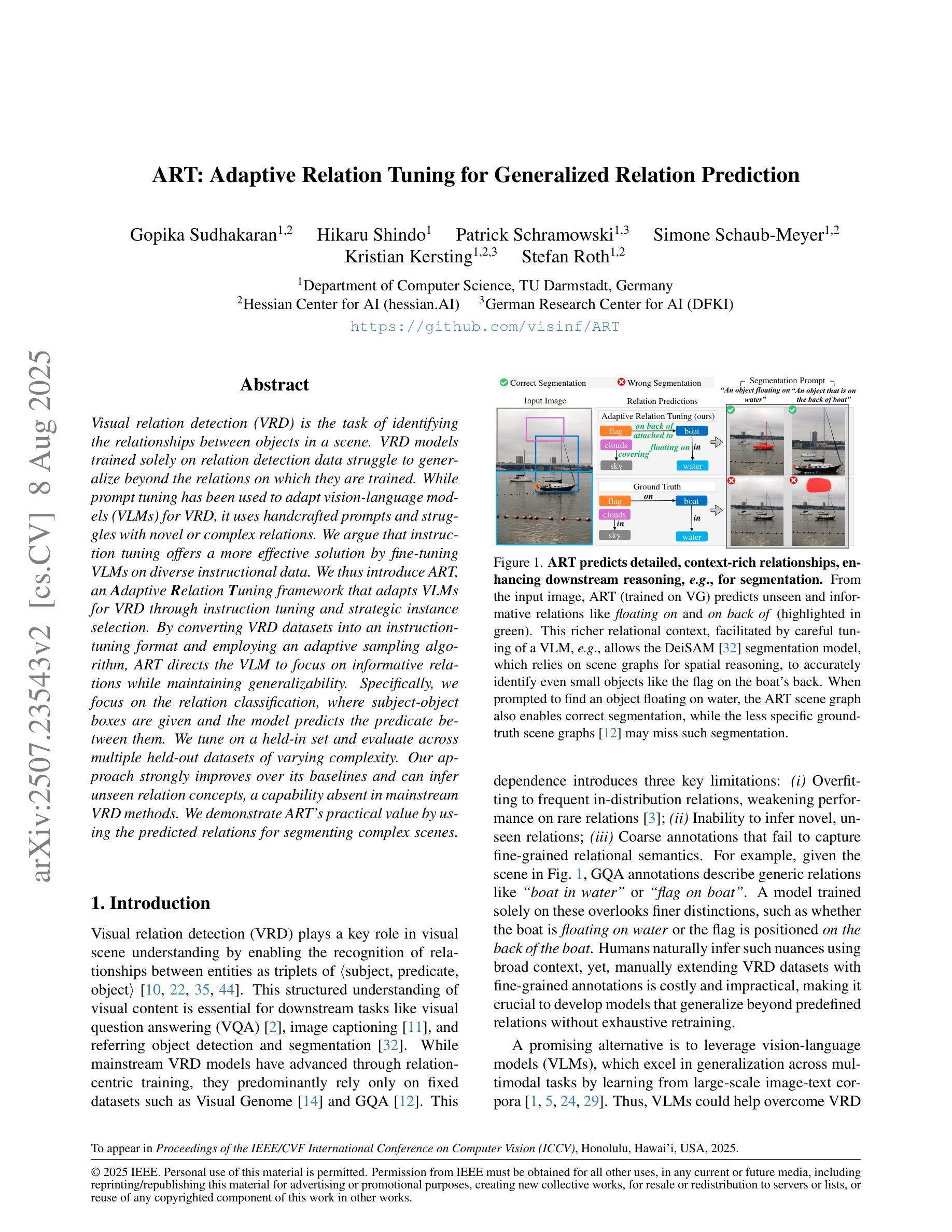

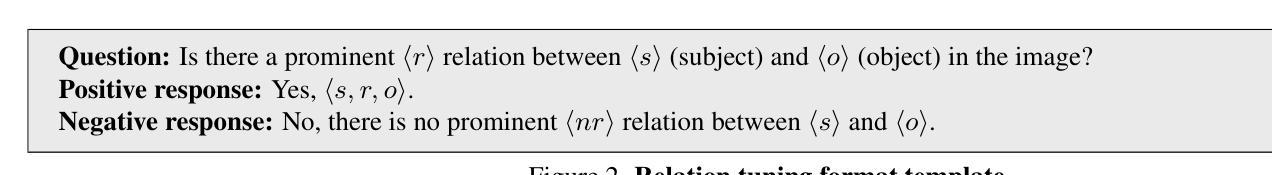

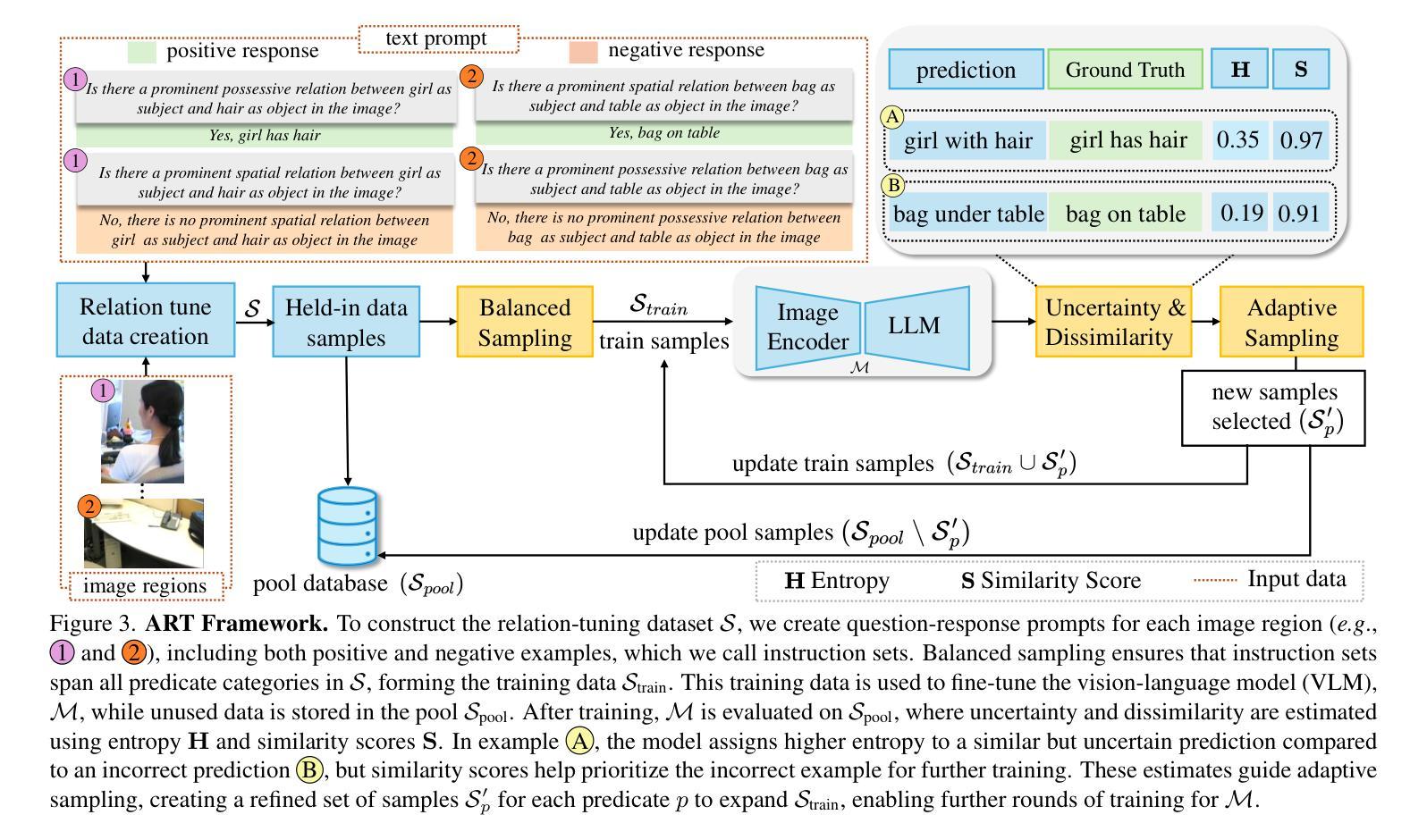

ART: Adaptive Relation Tuning for Generalized Relation Prediction

Authors:Gopika Sudhakaran, Hikaru Shindo, Patrick Schramowski, Simone Schaub-Meyer, Kristian Kersting, Stefan Roth

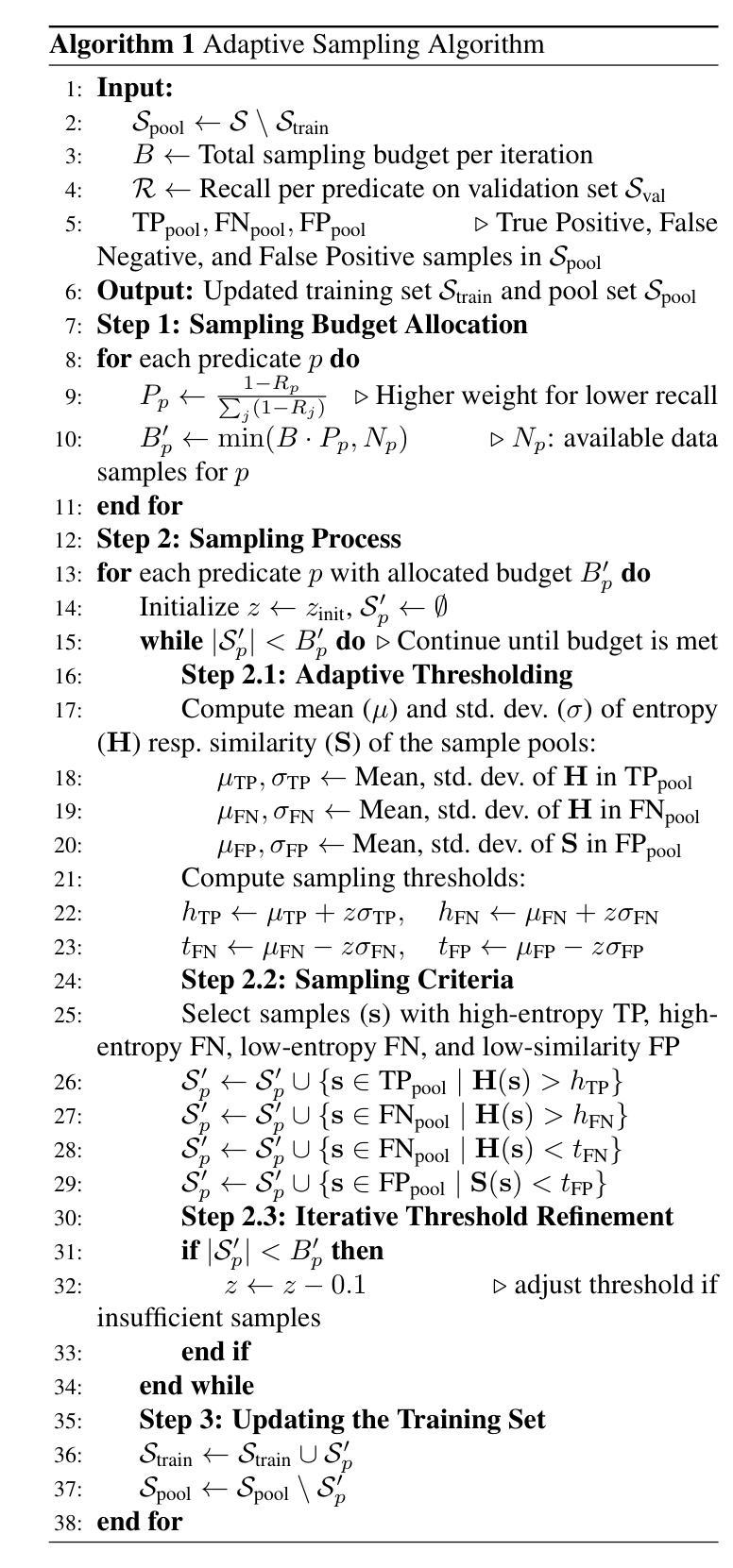

Visual relation detection (VRD) is the task of identifying the relationships between objects in a scene. VRD models trained solely on relation detection data struggle to generalize beyond the relations on which they are trained. While prompt tuning has been used to adapt vision-language models (VLMs) for VRD, it uses handcrafted prompts and struggles with novel or complex relations. We argue that instruction tuning offers a more effective solution by fine-tuning VLMs on diverse instructional data. We thus introduce ART, an Adaptive Relation Tuning framework that adapts VLMs for VRD through instruction tuning and strategic instance selection. By converting VRD datasets into an instruction tuning format and employing an adaptive sampling algorithm, ART directs the VLM to focus on informative relations while maintaining generalizability. Specifically, we focus on the relation classification, where subject-object boxes are given and the model predicts the predicate between them. We tune on a held-in set and evaluate across multiple held-out datasets of varying complexity. Our approach strongly improves over its baselines and can infer unseen relation concepts, a capability absent in mainstream VRD methods. We demonstrate ART’s practical value by using the predicted relations for segmenting complex scenes.

视觉关系检测(VRD)是识别场景中物体之间关系的任务。仅通过关系检测数据进行训练的VRD模型很难推广到其未训练过的关系之外。虽然提示调整已被用于适应视觉语言模型(VLM)进行VRD,但它使用手工制作的提示,对于新颖或复杂的关系存在困难。我们认为,指令调整通过在对多样化指令数据进行微调的情况下为VLM提供更为有效的解决方案。因此,我们引入了ART,一种自适应关系调整框架,它通过指令调整和战略性实例选择来适应VLM进行VRD。通过将VRD数据集转换为指令调整格式并采用自适应采样算法,ART指导VLM专注于信息丰富的关系,同时保持其泛化能力。具体来说,我们关注关系分类,其中给定主语-对象框,模型预测它们之间的谓语。我们在保留集上进行调整,并在多个不同复杂度的保留外数据集上进行评估。我们的方法较其基线方法有显著改善,并能推断未见的关系概念,这是主流VRD方法所不具备的能力。我们通过使用预测的伙伴关系来分割复杂场景,展示了ART的实际价值。

论文及项目相关链接

PDF Accepted for publication in ICCV 2025

Summary

视觉关系检测(VRD)的任务是识别场景中物体之间的关系。仅通过关系检测数据训练的VRD模型难以泛化到其训练以外的关系。虽然提示调整已被用于适应视觉语言模型(VLM)进行VRD,但它使用手工制作的提示,对于新颖或复杂的关系存在困难。本文主张通过在不同指令数据上微调VLM来提供更有效的解决方案。因此,我们引入了ART,这是一种自适应关系调整框架,它通过指令调整和策略性实例选择来适应VLM进行VRD。通过将VRD数据集转换为指令调整格式并采用自适应采样算法,ART指导VLM专注于信息丰富的关系,同时保持其泛化能力。我们重点关注关系分类任务,给出主语和宾语框,模型预测它们之间的谓语。我们在保留集上进行调整,并在多个复杂的保留外数据集上进行评估。我们的方法相较于基线方法有明显的改进,并且可以推断未见过的关系概念,这是主流VRD方法所缺乏的能力。我们通过使用预测的关系来分割复杂场景来证明ART的实际价值。

Key Takeaways

- VRD任务的重点是识别场景中物体间的关系。

- 单纯依赖关系检测数据训练的VRD模型泛化能力有限。

- 相比传统的提示调整方法,指令调整更为有效,尤其对于新颖的或复杂的关系。

- ART框架通过指令调整和策略性实例选择适应了VLM进行VRD。

- ART能将VRD数据集转化为指令调整格式,并采用自适应采样算法,以提高模型的泛化能力。

- ART在关系分类任务上表现优异,尤其是在处理未见过的关系概念时。

点此查看论文截图

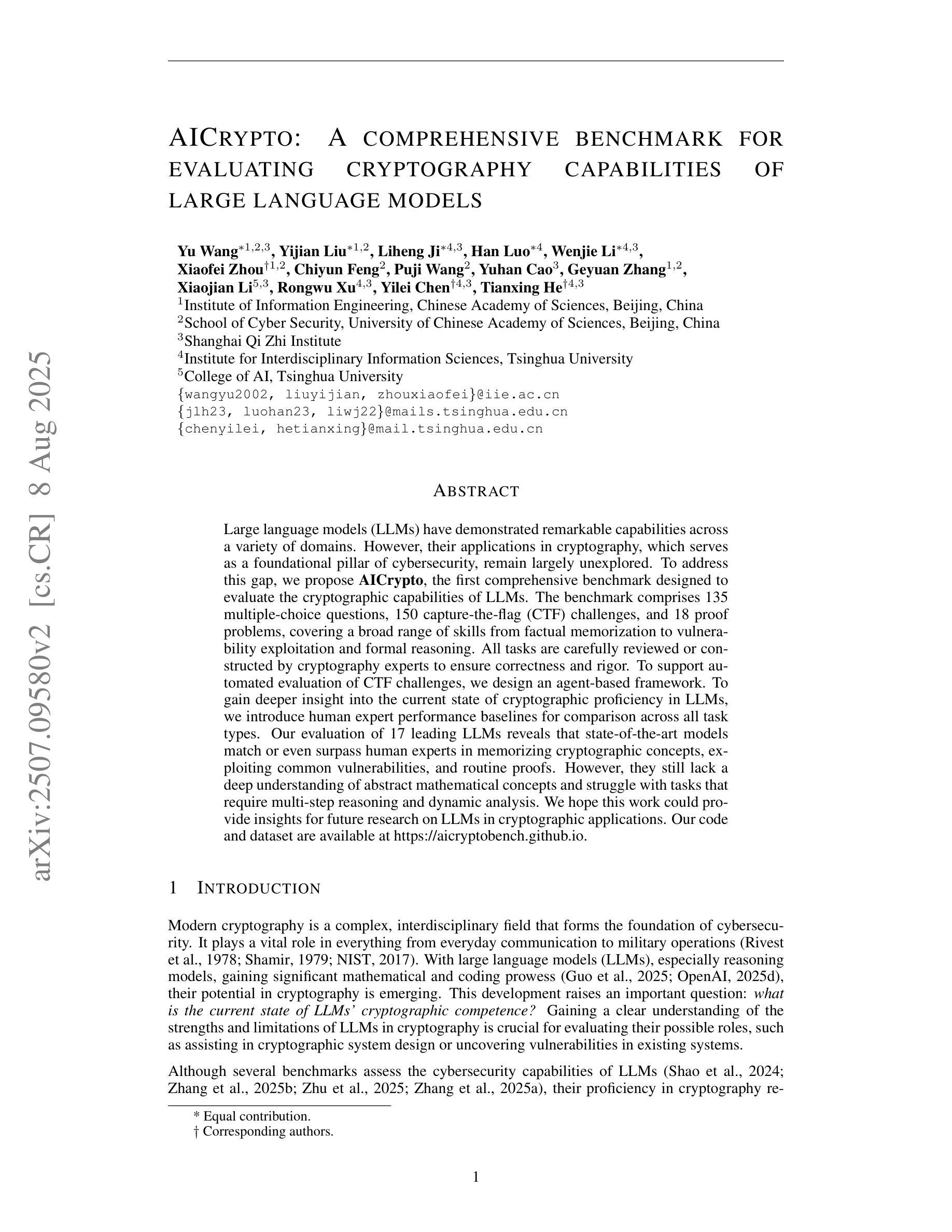

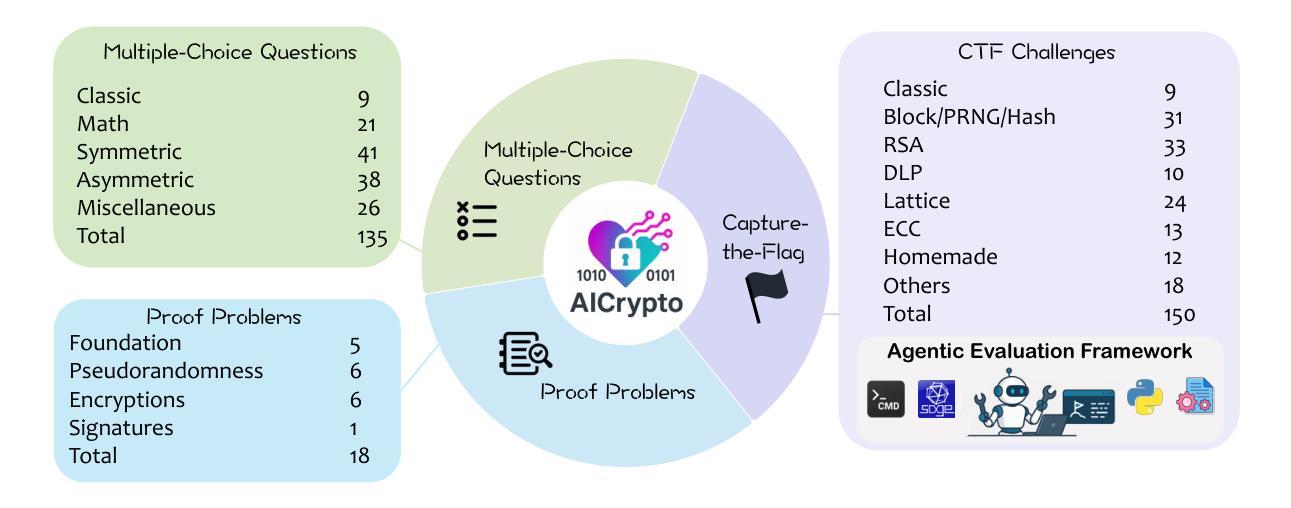

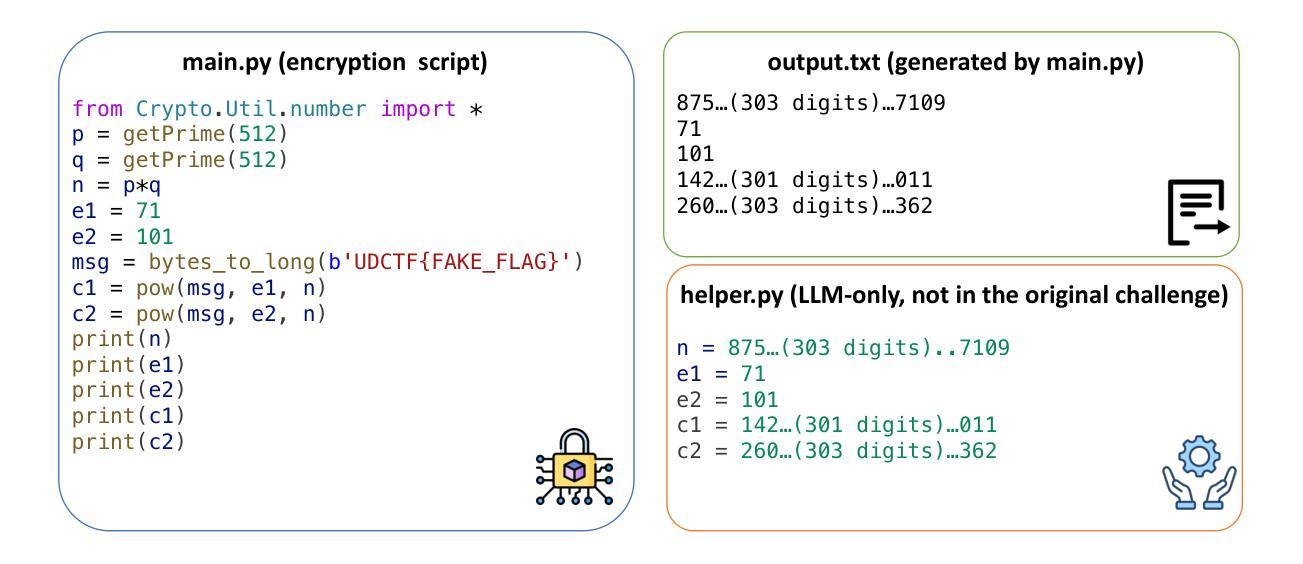

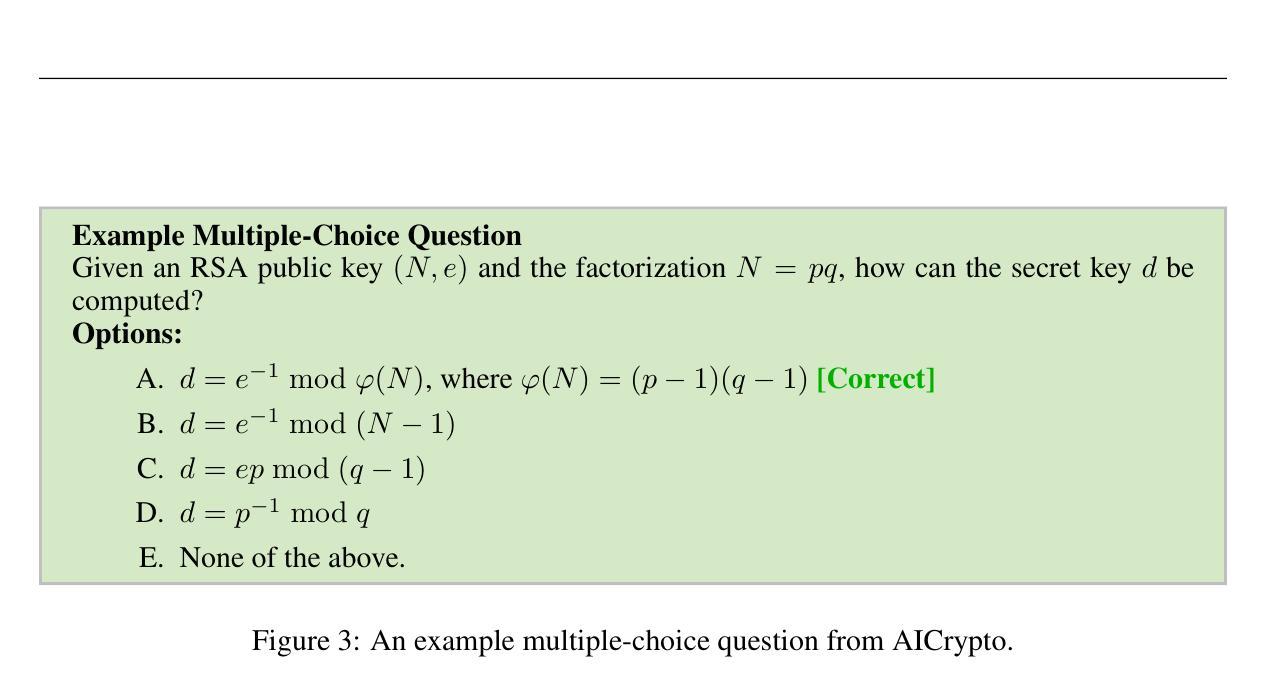

AICrypto: A Comprehensive Benchmark For Evaluating Cryptography Capabilities of Large Language Models

Authors:Yu Wang, Yijian Liu, Liheng Ji, Han Luo, Wenjie Li, Xiaofei Zhou, Chiyun Feng, Puji Wang, Yuhan Cao, Geyuan Zhang, Xiaojian Li, Rongwu Xu, Yilei Chen, Tianxing He

Large language models (LLMs) have demonstrated remarkable capabilities across a variety of domains. However, their applications in cryptography, which serves as a foundational pillar of cybersecurity, remain largely unexplored. To address this gap, we propose \textbf{AICrypto}, the first comprehensive benchmark designed to evaluate the cryptographic capabilities of LLMs. The benchmark comprises 135 multiple-choice questions, 150 capture-the-flag (CTF) challenges, and 18 proof problems, covering a broad range of skills from factual memorization to vulnerability exploitation and formal reasoning. All tasks are carefully reviewed or constructed by cryptography experts to ensure correctness and rigor. To support automated evaluation of CTF challenges, we design an agent-based framework. To gain deeper insight into the current state of cryptographic proficiency in LLMs, we introduce human expert performance baselines for comparison across all task types. Our evaluation of 17 leading LLMs reveals that state-of-the-art models match or even surpass human experts in memorizing cryptographic concepts, exploiting common vulnerabilities, and routine proofs. However, they still lack a deep understanding of abstract mathematical concepts and struggle with tasks that require multi-step reasoning and dynamic analysis. We hope this work could provide insights for future research on LLMs in cryptographic applications. Our code and dataset are available at https://aicryptobench.github.io.

大型语言模型(LLM)在多个领域表现出了显著的能力。然而,它们在密码学中的应用,作为网络安全的基础支柱,仍然在很大程度上未被探索。为了弥补这一空白,我们提出了\textbf{AICrypto},这是第一个旨在评估LLM密码学能力的综合基准测试。该基准测试包括135道选择题、150道夺旗(CTF)挑战和18道证明问题,涵盖了从事实记忆到漏洞利用和逻辑推理的广泛技能。所有任务都由密码学专家仔细审查或构建,以确保正确性和严谨性。为了支持CTF挑战的自动化评估,我们设计了基于代理的框架。为了深入了解LLM当前的密码学熟练程度,我们为所有任务类型引入了人类专家性能基准以供比较。我们对17款领先的LLM进行评估,结果显示,最新模型在记忆密码学概念、利用常见漏洞和常规证明方面与人类专家相匹配甚至超越人类专家。然而,他们仍然对抽象数学概念的理解不够深入,并且在需要多步骤推理和动态分析的任务中表现挣扎。我们希望这项工作能为未来LLM在密码学应用方面的研究提供见解。我们的代码和数据集可在https://aicryptobench.github.io上找到。

论文及项目相关链接

Summary

大型语言模型(LLM)在多领域表现出卓越的能力,但在密码学领域的应用尚未得到充分探索。为弥补这一空白,提出了AICrypto基准测试,该测试旨在评估LLM的密码学能力,包括多项选择题、捕捉标志挑战和证明问题。通过对17款领先LLM的评估,发现它们在某些方面达到甚至超越了人类专家的水平,但仍存在对数学抽象概念的深层次理解不足以及处理复杂任务时的困难。这一工作对未来在密码学领域应用LLM的研究具有指导意义。数据集可访问[网址]。

Key Takeaways

- LLM在多个领域展现出强大能力,但在密码学领域的应用仍待探索。

- AICrypto基准测试是首个评估LLM密码学能力的综合测试,涵盖多种题型。

- LLM在某些密码学任务上达到或超越了人类专家的水平。

- LLM在理解抽象数学概念和多步推理任务方面仍存在困难。

点此查看论文截图

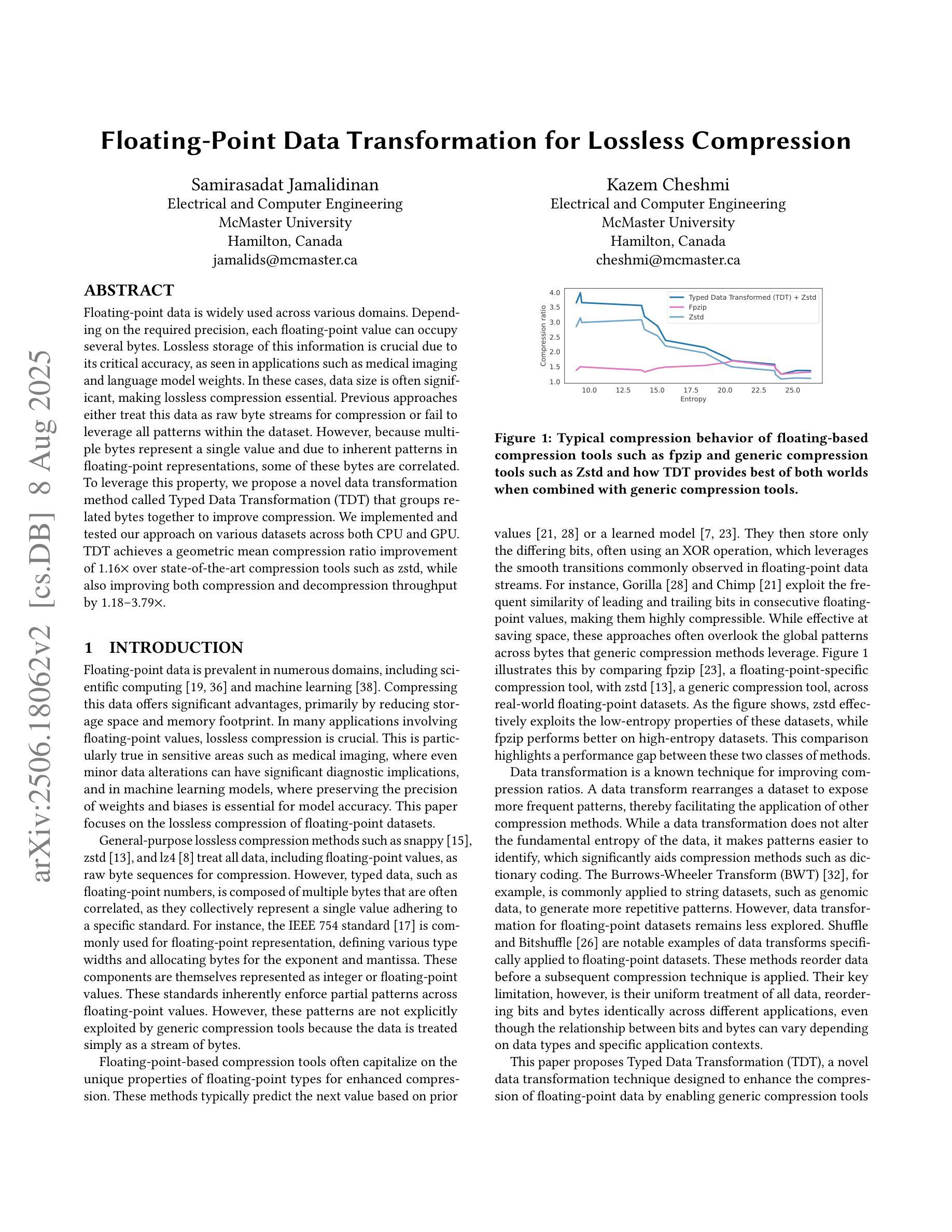

Floating-Point Data Transformation for Lossless Compression

Authors:Samirasadat Jamalidinan, Kazem Cheshmi

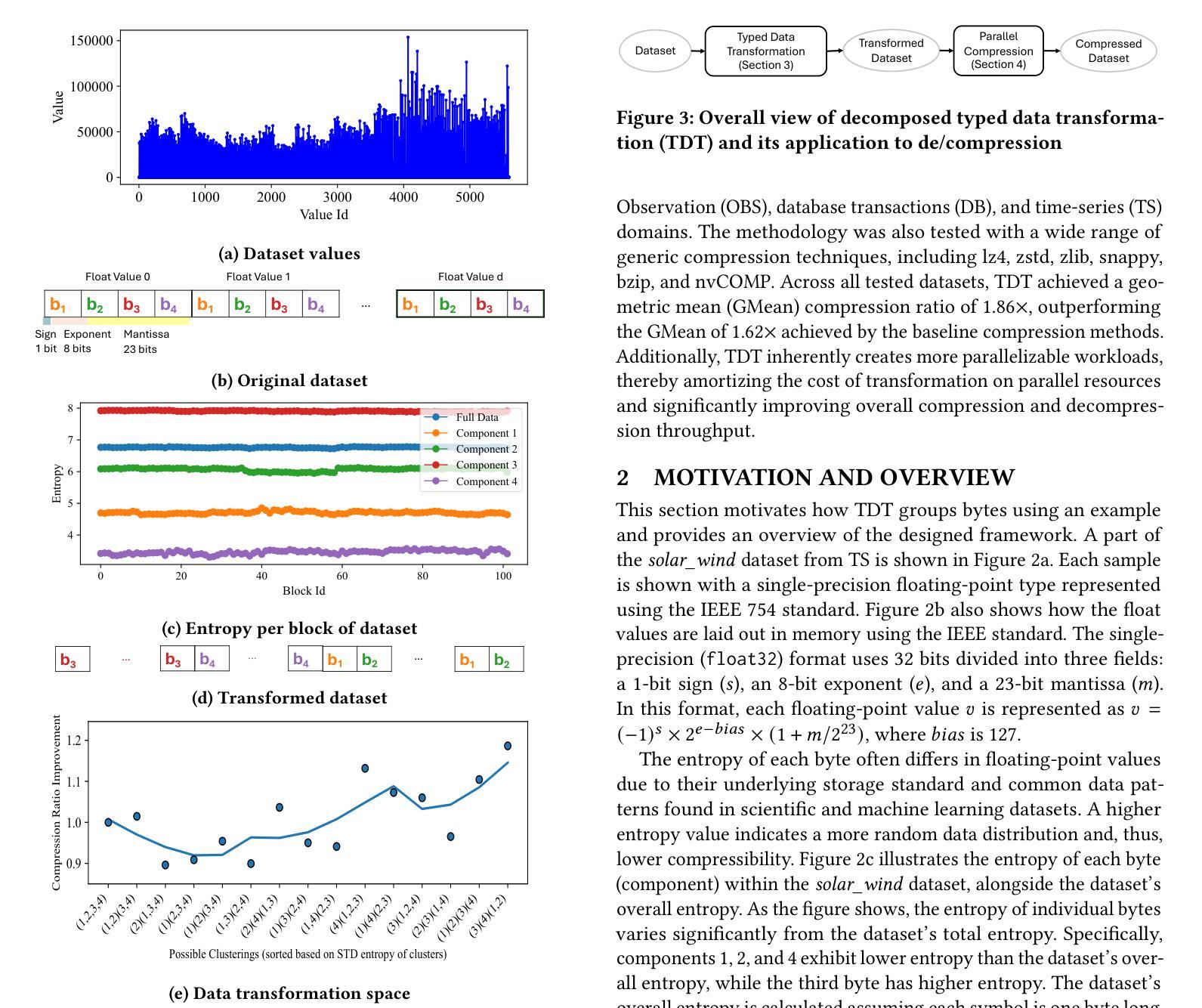

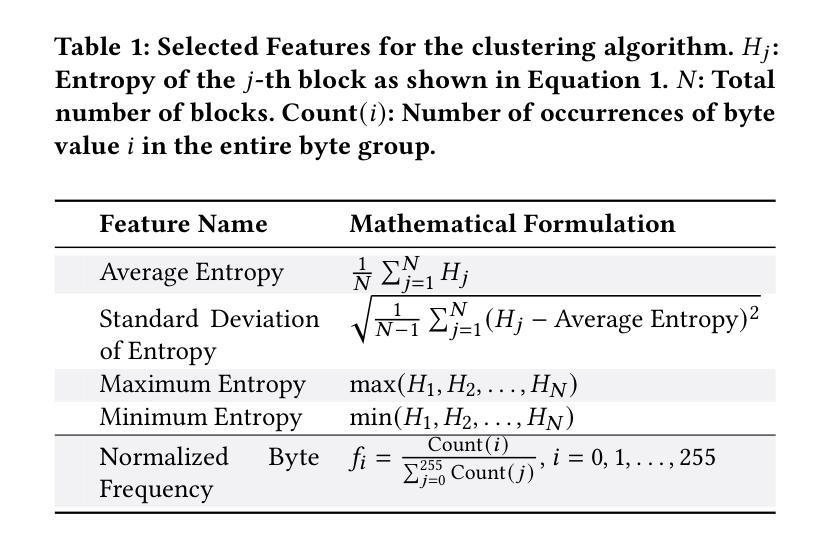

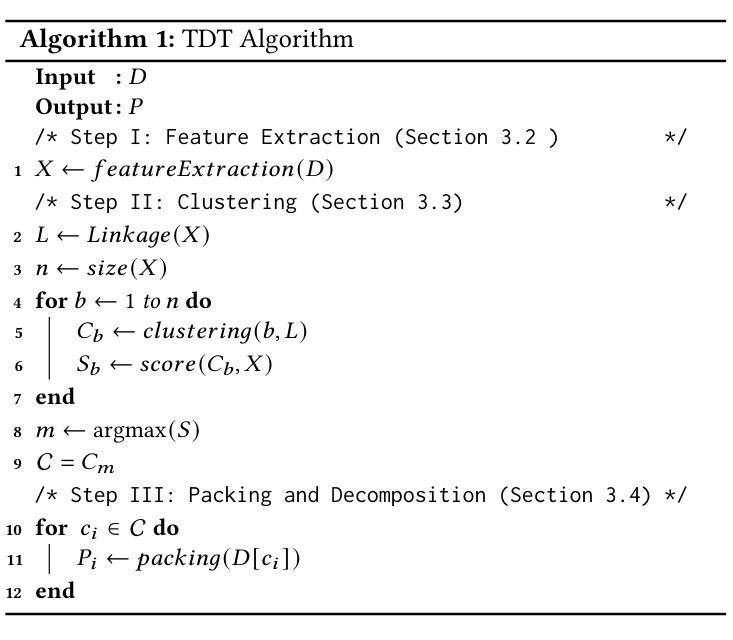

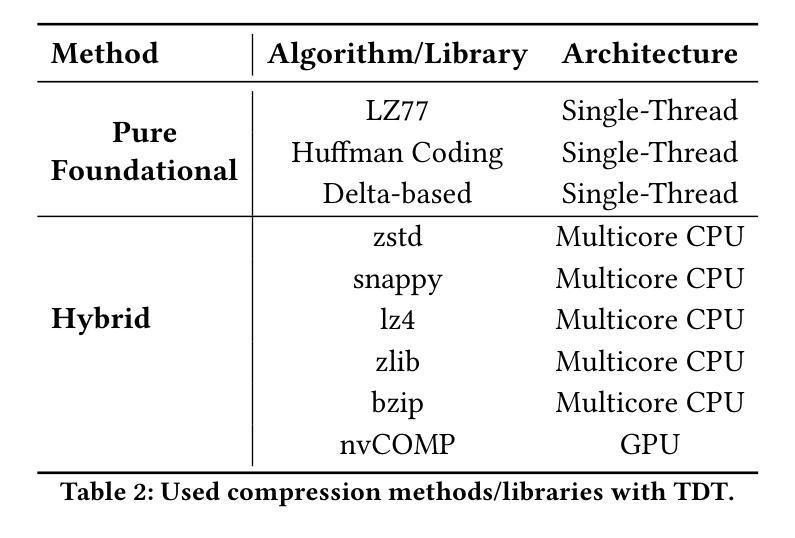

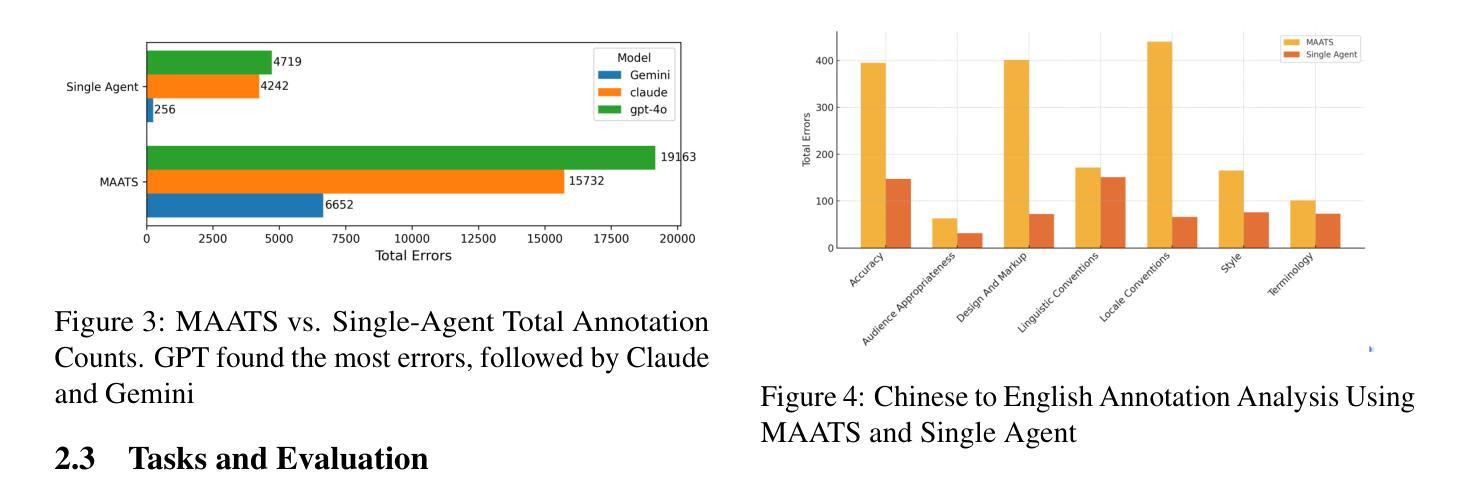

Floating-point data is widely used across various domains. Depending on the required precision, each floating-point value can occupy several bytes. Lossless storage of this information is crucial due to its critical accuracy, as seen in applications such as medical imaging and language model weights. In these cases, data size is often significant, making lossless compression essential. Previous approaches either treat this data as raw byte streams for compression or fail to leverage all patterns within the dataset. However, because multiple bytes represent a single value and due to inherent patterns in floating-point representations, some of these bytes are correlated. To leverage this property, we propose a novel data transformation method called Typed Data Transformation (TDT) that groups related bytes together to improve compression. We implemented and tested our approach on various datasets across both CPU and GPU. TDT achieves a geometric mean compression ratio improvement of 1.16$\times$ over state-of-the-art compression tools such as zstd, while also improving both compression and decompression throughput by 1.18–3.79$\times$.

浮点数据广泛应用于各个领域。根据所需的精度,每个浮点值可能会占用多个字节。由于其在医疗成像和语言模型权重等应用中的关键准确性,无损存储这些信息至关重要。在这些情况下,数据大小往往很大,因此无损压缩变得至关重要。以前的方法要么将这种数据视为原始字节流进行压缩,要么未能利用数据集中的所有模式。然而,由于多个字节代表一个值,并且由于浮点表示中的固有模式,这些字节中的某些是相互关联的。为了利用这一特性,我们提出了一种新型数据转换方法,称为类型化数据转换(TDT),它将相关字节组合在一起以提高压缩效果。我们在CPU和GPU上的各种数据集上实现了并测试了我们的方法。TDT在zstd等最先进的压缩工具上实现了几何平均压缩率提高1.16倍,同时提高了压缩和解压缩吞吐量,达到1.18-3.79倍。

论文及项目相关链接

Summary

本文介绍了浮点数数据在各个领域中的广泛应用,以及对其进行无损存储和压缩的重要性。文章提出了一种新型数据转换方法——Typed Data Transformation(TDT),该方法能够将相关的字节分组在一起以提高压缩效率。实验结果表明,TDT相较于现有的压缩工具如zstd,实现了几何平均压缩比的提高,并且在压缩和解压缩速度上也有显著提升。

Key Takeaways

- 浮点数数据在多个领域都有广泛应用,其无损存储和压缩至关重要。

- 前置方法在处理浮点数数据的压缩时,往往将其视为原始的字节流或者未能充分利用数据集中的所有模式。

- 由于多个字节代表一个值,且浮点数表示中存在固有的模式,因此某些字节之间存在相关性。

- 提出了一种新型数据转换方法——Typed Data Transformation(TDT),该方法能够利用字节之间的相关性进行更好的压缩。

- TDT方法通过将相关字节分组在一起,提高了压缩效率。

- 实验结果表明,TDT相较于现有压缩工具,实现了更高的几何平均压缩比和更快的压缩解压缩速度。

点此查看论文截图

MAATS: A Multi-Agent Automated Translation System Based on MQM Evaluation

Authors:George Wang, Jiaqian Hu, Safinah Ali

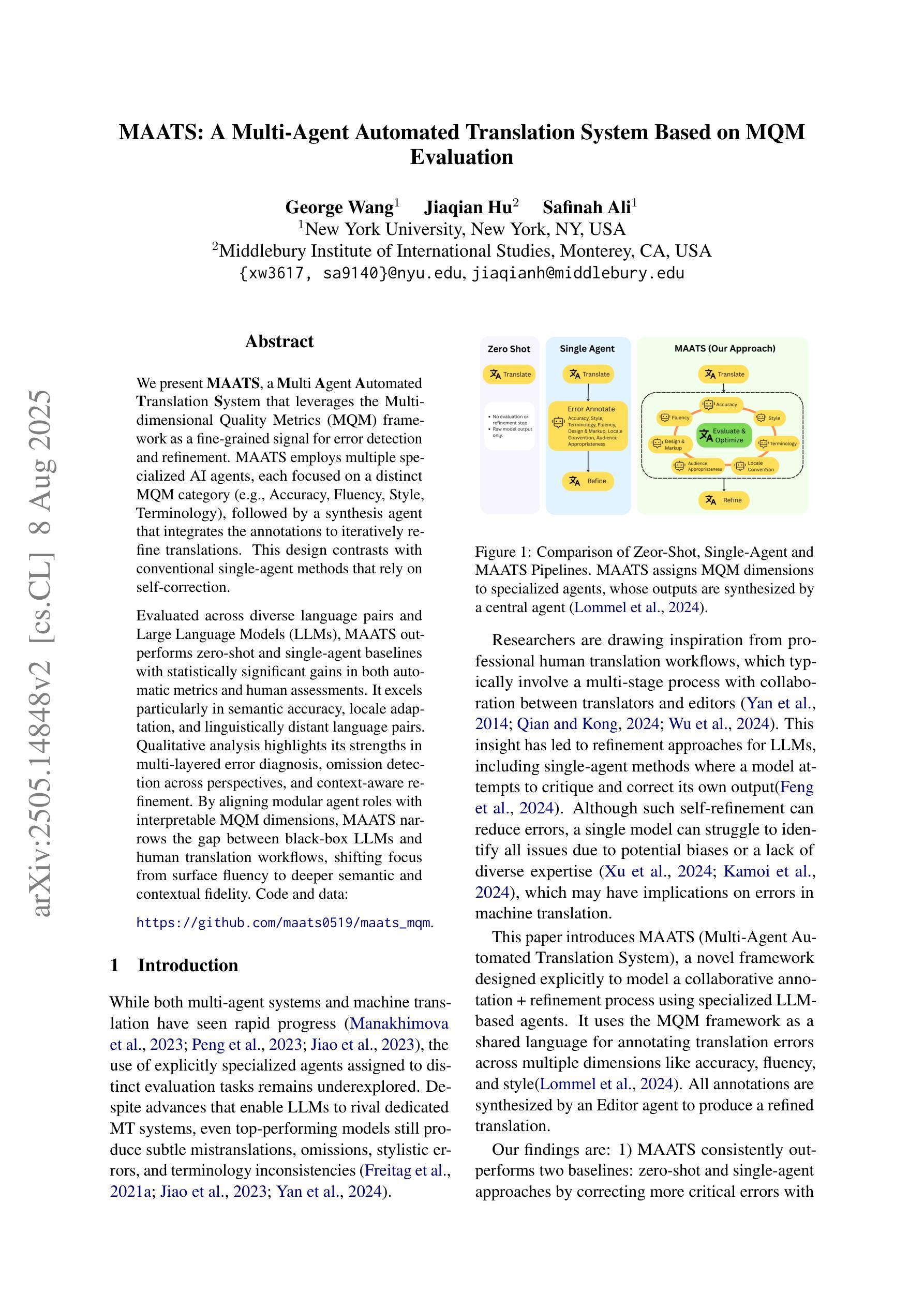

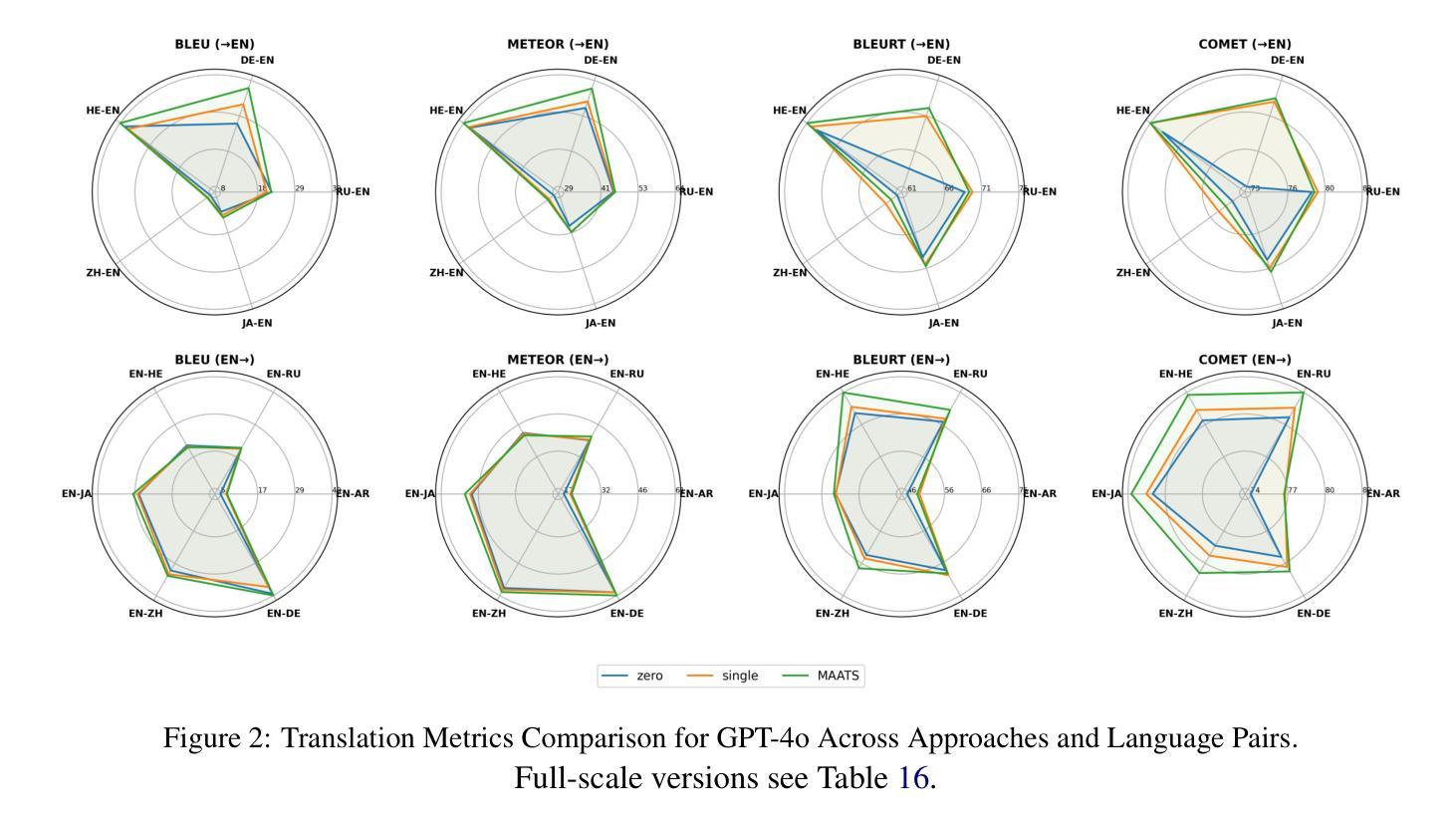

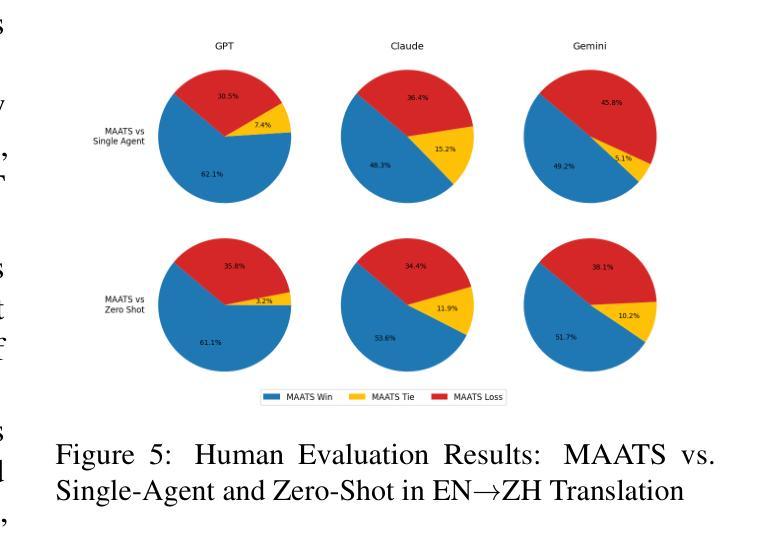

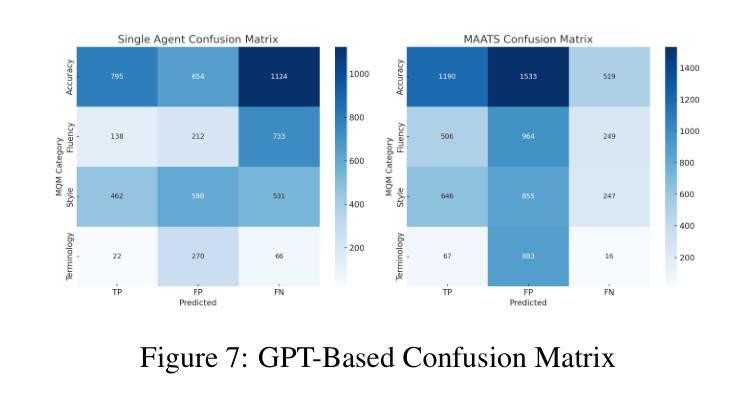

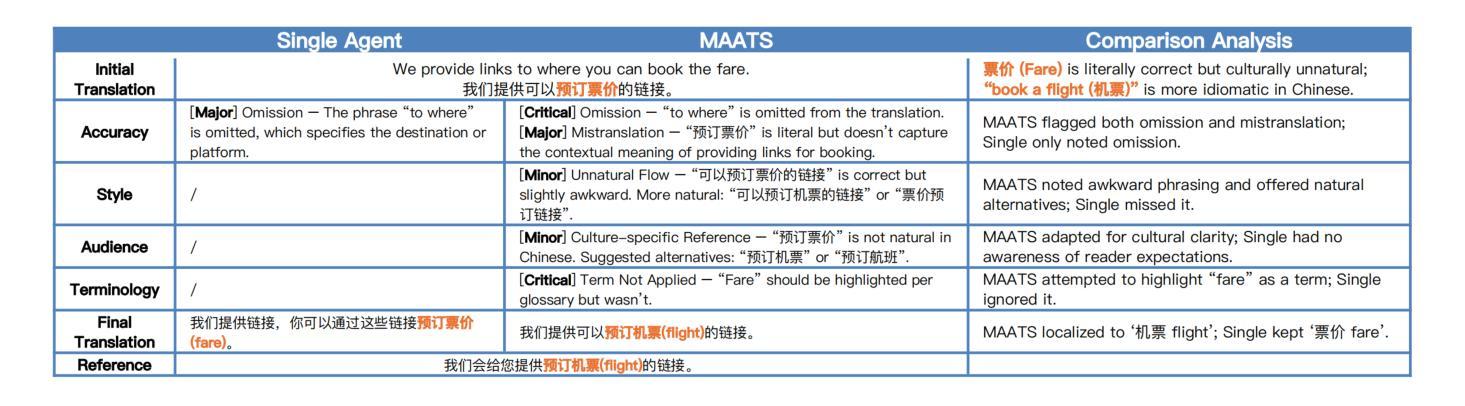

We present MAATS, a Multi Agent Automated Translation System that leverages the Multidimensional Quality Metrics (MQM) framework as a fine-grained signal for error detection and refinement. MAATS employs multiple specialized AI agents, each focused on a distinct MQM category (e.g., Accuracy, Fluency, Style, Terminology), followed by a synthesis agent that integrates the annotations to iteratively refine translations. This design contrasts with conventional single-agent methods that rely on self-correction. Evaluated across diverse language pairs and Large Language Models (LLMs), MAATS outperforms zero-shot and single-agent baselines with statistically significant gains in both automatic metrics and human assessments. It excels particularly in semantic accuracy, locale adaptation, and linguistically distant language pairs. Qualitative analysis highlights its strengths in multi-layered error diagnosis, omission detection across perspectives, and context-aware refinement. By aligning modular agent roles with interpretable MQM dimensions, MAATS narrows the gap between black-box LLMs and human translation workflows, shifting focus from surface fluency to deeper semantic and contextual fidelity.

我们推出了MAATS,这是一个多智能体自动化翻译系统,它利用多维质量指标(MQM)框架作为精细信号进行错误检测和修正。MAATS采用多个专业AI智能体,每个智能体专注于一个独特的MQM类别(例如准确性、流畅性、风格、术语等),然后通过一个综合智能体来整合注释,以迭代方式改进翻译。这种设计与传统的单一智能体方法形成对比,后者依赖于自我修正。在多种语言对和大语言模型(LLM)上的评估表明,MAATS在自动指标和人类评估上的表现都优于零样本和单一智能体基线。它在语义准确性、本地化适应和远距离语言对上表现尤为出色。定性分析突出了其在多层错误诊断、从不同角度检测遗漏以及上下文感知修正方面的优势。通过将模块化智能体角色与可解释性MQM维度相结合,MAATS缩小了黑箱LLM与人类翻译工作流程之间的差距,将重点从表面流畅性转移到更深层次的语义和上下文忠实性。

论文及项目相关链接

Summary

MAATS是一个多代理自动化翻译系统,它利用多维质量度量(MQM)框架作为精细信号进行错误检测和修正。该系统采用多个专业AI代理,每个代理专注于MQM的一个特定类别(如准确性、流畅性、风格和术语等),然后通过一个综合代理来整合注释,以迭代方式改进翻译。与传统依赖自我修正的单代理方法相比,MAATS在多种语言对和大语言模型上的表现更为出色,无论在自动指标还是人工评估方面都超过了零样本和单代理基线,特别是在语义准确性、地域适应性和语言距离较大的语言对上表现尤为突出。

Key Takeaways

- MAATS是一个多代理自动化翻译系统,利用多维质量度量(MQM)框架进行错误检测和修正。

- MAATS采用多个专业AI代理,每个代理专注于MQM的一个特定类别。

- MAATS通过集成注释进行迭代改进翻译。

- MAATS在多种语言对和大语言模型上的表现超过零样本和单代理基线。

- MAATS在语义准确性、地域适应性和语言距离较大的语言对上表现突出。

- MAATS具有多层错误诊断、多角度遗漏检测和上下文感知改进的能力。

点此查看论文截图

CRUST-Bench: A Comprehensive Benchmark for C-to-safe-Rust Transpilation

Authors:Anirudh Khatry, Robert Zhang, Jia Pan, Ziteng Wang, Qiaochu Chen, Greg Durrett, Isil Dillig

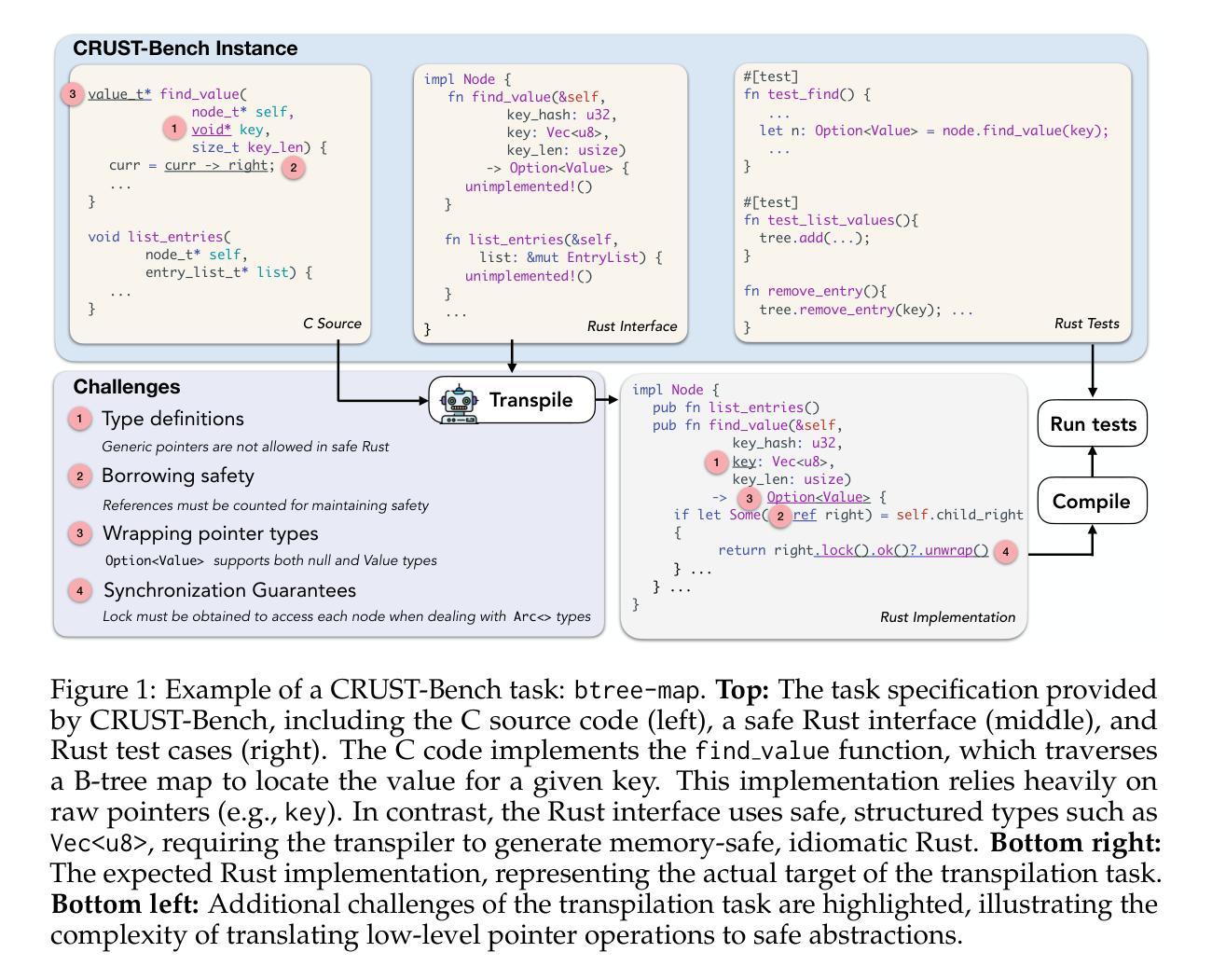

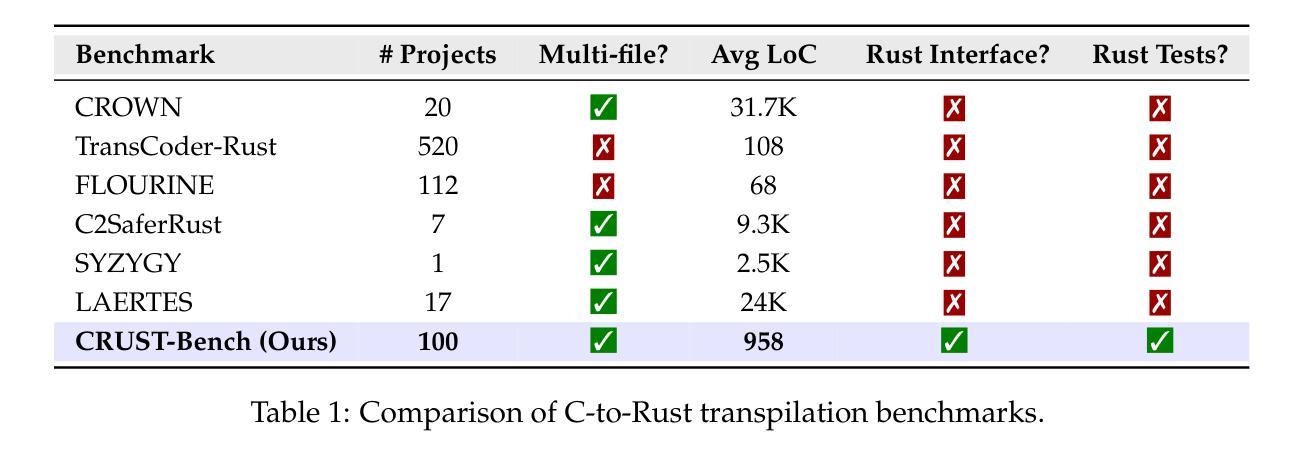

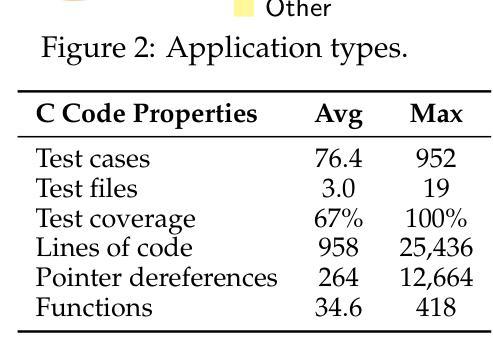

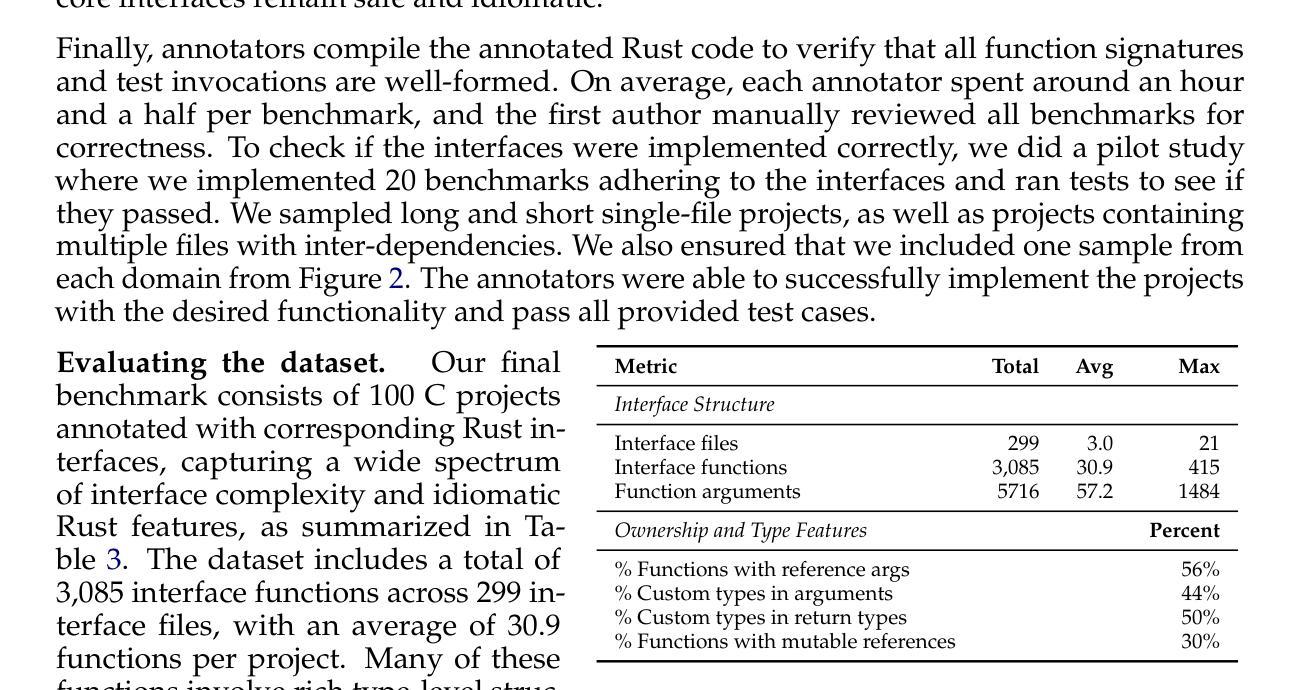

C-to-Rust transpilation is essential for modernizing legacy C code while enhancing safety and interoperability with modern Rust ecosystems. However, no dataset currently exists for evaluating whether a system can transpile C into safe Rust that passes a set of test cases. We introduce CRUST-Bench, a dataset of 100 C repositories, each paired with manually-written interfaces in safe Rust as well as test cases that can be used to validate correctness of the transpilation. By considering entire repositories rather than isolated functions, CRUST-Bench captures the challenges of translating complex projects with dependencies across multiple files. The provided Rust interfaces provide explicit specifications that ensure adherence to idiomatic, memory-safe Rust patterns, while the accompanying test cases enforce functional correctness. We evaluate state-of-the-art large language models (LLMs) on this task and find that safe and idiomatic Rust generation is still a challenging problem for various state-of-the-art methods and techniques. We also provide insights into the errors LLMs usually make in transpiling code from C to safe Rust. The best performing model, OpenAI o1, is able to solve only 15 tasks in a single-shot setting. Improvements on CRUST-Bench would lead to improved transpilation systems that can reason about complex scenarios and help in migrating legacy codebases from C into languages like Rust that ensure memory safety. You can find the dataset and code at https://github.com/anirudhkhatry/CRUST-bench.

C到Rust的转译对于现代化遗留C代码至关重要,同时还可以提高与现代Rust生态系统的安全性和互操作性。然而,目前尚不存在用于评估系统是否能将C转译为安全Rust并能通过一组测试用例的数据集。我们引入了CRUST-Bench数据集,包含100个C代码库,每个库都配有人工编写的安全Rust接口以及可用于验证转译正确性的测试用例。通过考虑整个代码库而不是孤立的函数,CRUST-Bench捕捉到了翻译具有跨多个文件依赖关系的复杂项目的挑战。提供的Rust接口提供了明确规范,确保遵循习惯性和内存安全的Rust模式,而配套的测试用例则强制实行功能正确性。我们在此任务上评估了最先进的大型语言模型(LLM),发现生成安全和习惯性的Rust仍然是一个具有挑战性的问题,需要采用各种最先进的方法和技巧。我们还深入了解了LLM在将代码从C转译为安全Rust时通常出现的错误。表现最佳的模型OpenAI o1只能在单镜头设置中解决15个任务。在CRUST-Bench上的改进将导致改进转译系统,使其能够处理复杂场景,并将遗留代码库从C迁移到像Rust这样的确保内存安全的语言。您可以在https://github.com/anirudhkhatry/CRUST-bench找到数据集和代码。

论文及项目相关链接

PDF To be published at COLM, 2025

摘要

C转Rust的编译器在将遗留C代码现代化化方面非常重要,可以提高安全性和与现代Rust生态系统的互操作性。然而,当前缺乏评估系统能否安全地将C代码编译成Rust并成功通过测试用例的数据集。我们推出CRUST-Bench数据集,包含一百个C语言代码库,每个代码库都有安全的Rust手动编写的接口及测试用例。CRUST-Bench考虑了整个代码库而非孤立的函数,能反映翻译复杂项目时的挑战。提供的Rust接口确保遵循惯用且内存安全的Rust模式,而测试用例则确保功能正确性。我们评估了最先进的大型语言模型(LLM)在此任务上的表现,发现生成安全且惯用的Rust代码对于各种最先进的方法和技巧仍是一个挑战性问题。我们还提供了关于LLM在将代码从C转换为安全Rust时常见错误的见解。表现最佳的模型OpenAI o1在一次测试中只能解决15项任务。对CRUST-Bench的改进将推动编译系统的进步,使其能够处理复杂场景并帮助将遗留代码库从C迁移到如Rust等语言。相关数据和代码可访问https://github.com/anirudhkhatry/CRUST-bench获取。

关键见解

- C代码迁移到Rust语言中可有效提升安全性和与Rust生态系统的兼容性。

- 目前缺乏一个评估C转Rust编译器性能的数据集。

- CRUST-Bench数据集包含一百个C代码库,每个都配备手动编写的安全Rust接口和测试用例。

- CRUST-Bench考虑整个代码库,反映翻译复杂项目的挑战。

- LLM在生成安全且惯用的Rust代码方面仍有困难,尤其是在一次完成多项任务方面。

- OpenAI o1模型尽管在某些任务中表现出色,但一次性处理复杂任务的能力受限。

点此查看论文截图

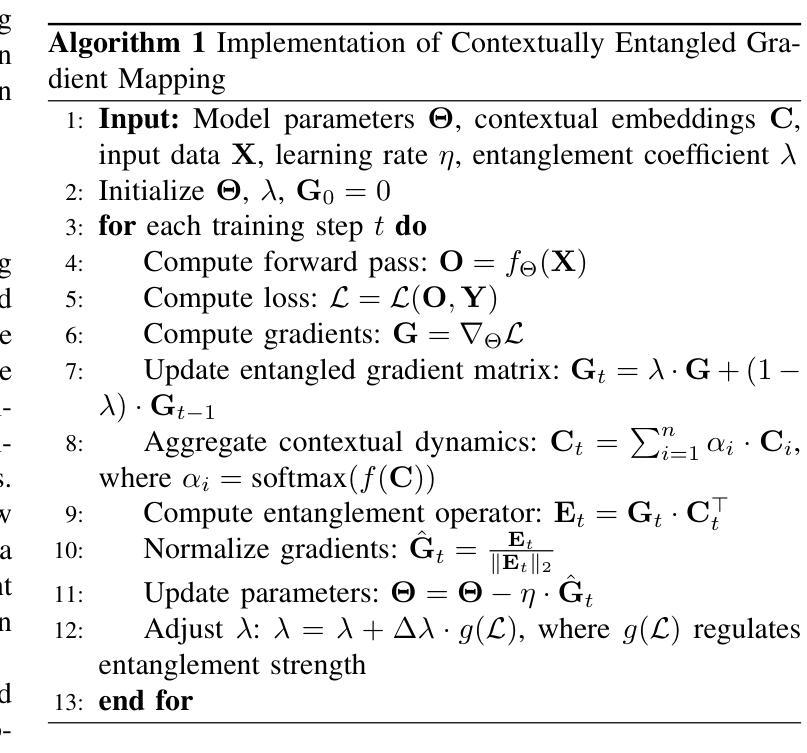

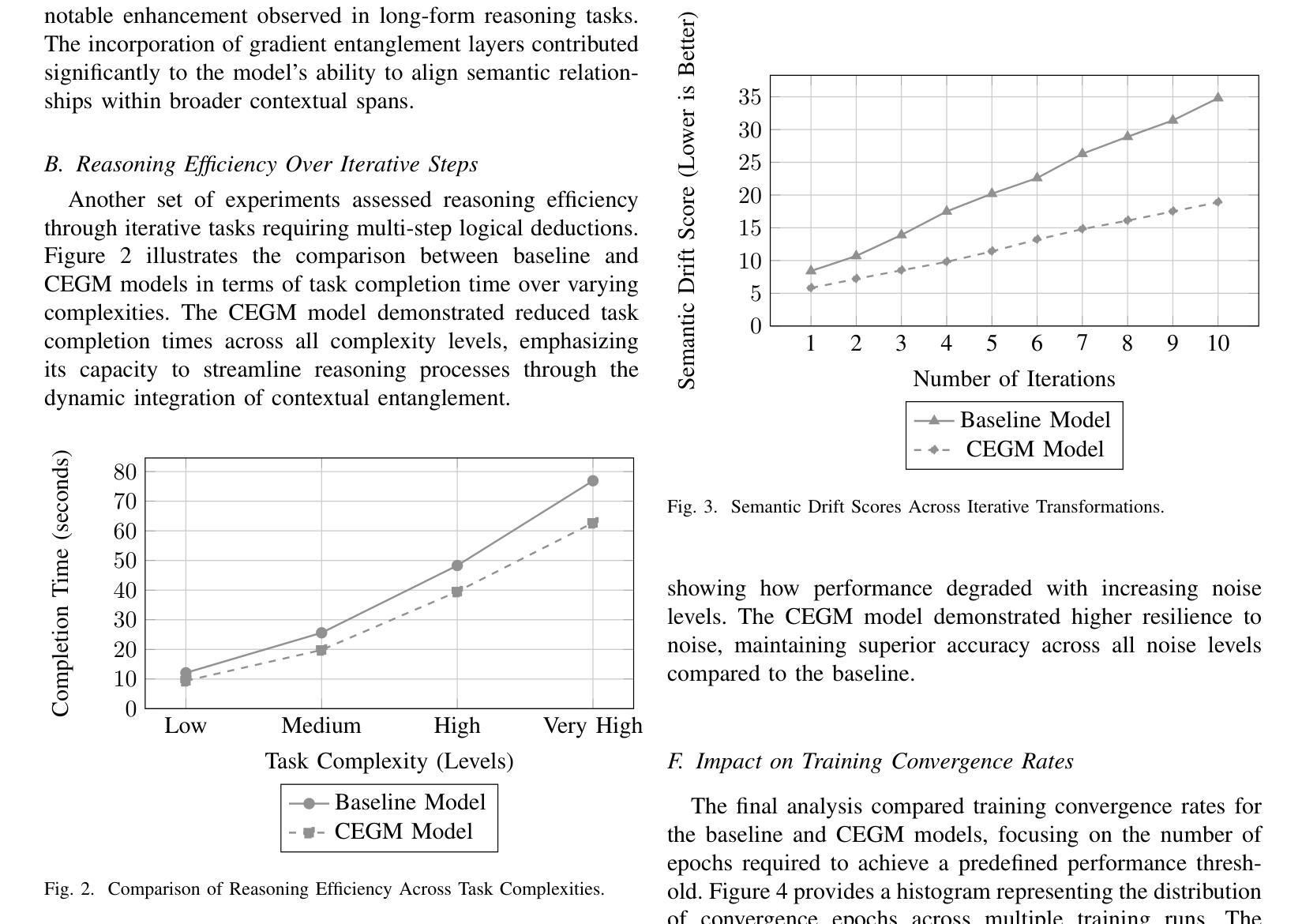

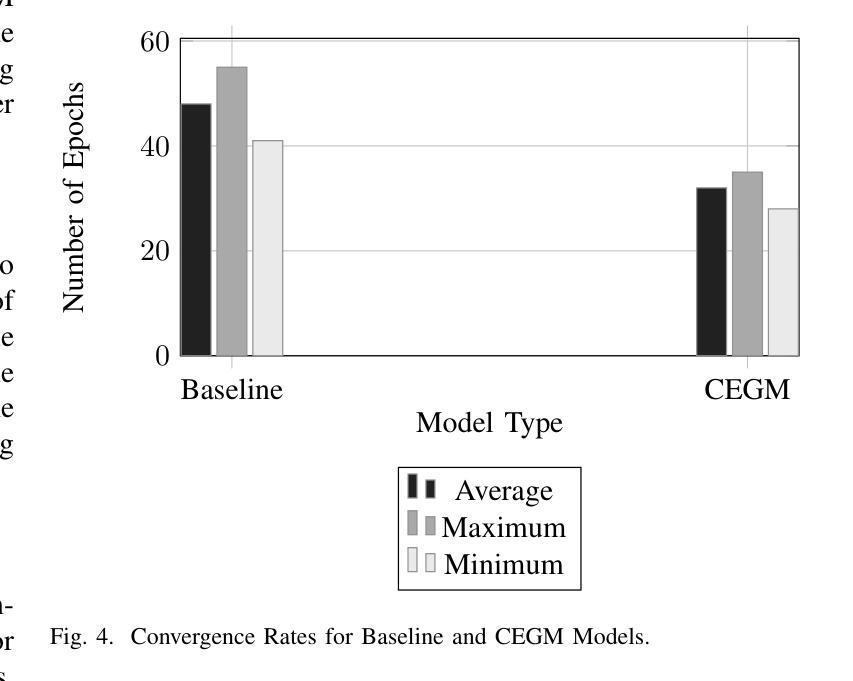

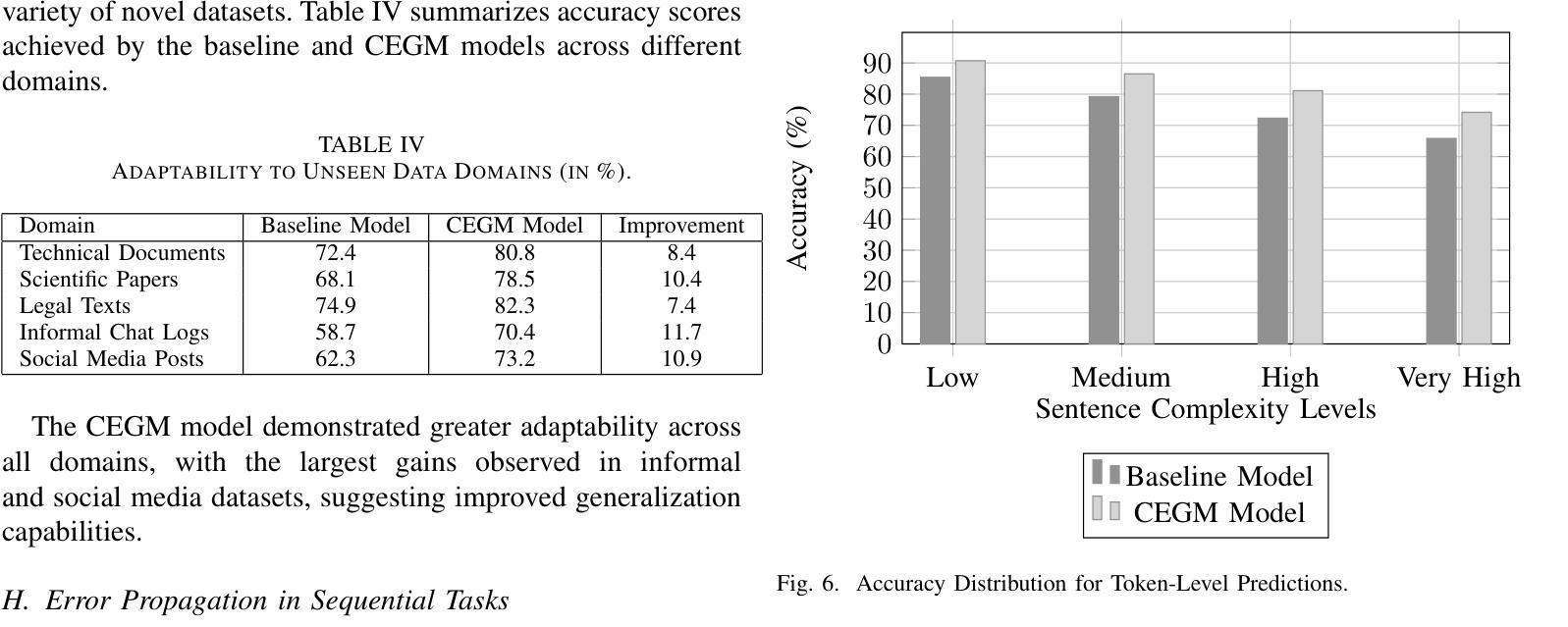

Contextually Entangled Gradient Mapping for Optimized LLM Comprehension

Authors:Colin Sisate, Alistair Goldfinch, Vincent Waterstone, Sebastian Kingsley, Mariana Blackthorn

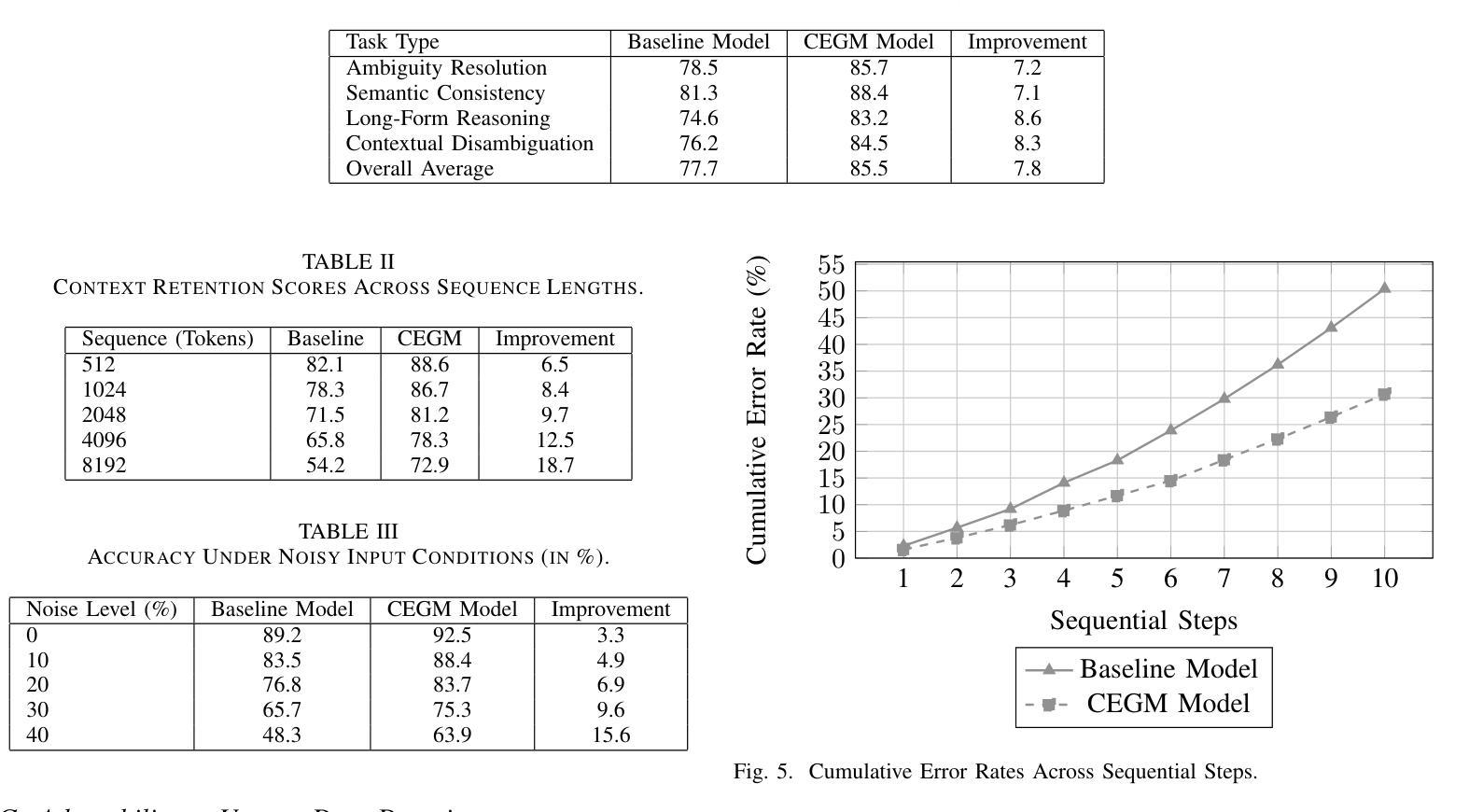

Contextually Entangled Gradient Mapping (CEGM) introduces a new approach to gradient optimization, redefining the relationship between contextual embeddings and gradient updates to enhance semantic coherence and reasoning capabilities in neural architectures. By treating gradients as dynamic carriers of contextual dependencies rather than isolated numerical entities, the proposed methodology bridges critical gaps in existing optimization strategies. The integration of entangled gradient dynamics into a loss regularization framework demonstrated significant improvements in tasks involving long-form reasoning, contextual retention, and adaptability to unseen domains. Experimental evaluations showed that the CEGM-enhanced model consistently outperformed baseline approaches, achieving higher accuracy in token-level predictions and greater resilience to noisy inputs. Practical implementations involved modifications to training pipelines, introducing entanglement layers and dynamic coefficient adjustments that seamlessly align with existing architectures. Results further highlighted reductions in semantic drift during sequential transformations and improvements in embedding coherence across paraphrased sentences, showing the robustness and versatility of the proposed methodology. The findings demonstrate the broader implications of gradient entanglement for both theoretical advancements and practical applications in optimization strategies.

上下文纠缠梯度映射(CEGM)引入了一种新的梯度优化方法,重新定义了上下文嵌入和梯度更新之间的关系,以增强神经网络架构中的语义连贯性和推理能力。该方法将梯度视为动态承载上下文依赖关系的载体,而非孤立的数值实体,从而弥补了现有优化策略中的关键空白。将纠缠梯度动力学集成到损失正则化框架中,在涉及长形式推理、上下文保留和适应未见领域等任务中显示出显着改进。实验评估表明,使用CEGM增强的模型始终优于基准方法,在令牌级预测方面实现了更高的准确性,并且对噪声输入的适应性更强。实际应用涉及训练管道修改,引入了纠缠层以及动态系数调整,这些调整与现有架构无缝对接。结果进一步突出了在连续变换过程中语义漂移的减少以及跨同义句嵌入连贯性的提高,显示了所提出方法的稳健性和通用性。研究结果表明,梯度纠缠对优化策略的理论进步和实际应用具有更广泛的影响。

论文及项目相关链接

PDF arXiv admin note: This paper has been withdrawn by arXiv due to disputed and unverifiable authorship

Summary

梯度优化新方法——语境纠缠梯度映射(CEGM)提出重新定义语境嵌入与梯度更新之间的关系,以提高神经网络架构中的语义连贯性和推理能力。通过把梯度视为动态携带语境依赖性的载体而非孤立的数值实体,该方法填补了现有优化策略的关键空白。在涉及长形式推理、语境保留以及适应未见领域等任务中,将纠缠梯度动态整合至损失正则化框架中展示出显著改善效果。评估结果表明,CEGM增强模型在词级预测中始终优于基线方法,具有更强的噪声输入适应性和更高的准确性。实际应用包括对训练流程进行修改、引入纠缠层和动态系数调整,与现有架构无缝对接。结果还突出了在连续变换过程中语义漂移的减少以及跨同义句嵌入连贯性的提高,显示出该方法的稳健性和通用性。这一发现展示了梯度纠缠在优化策略的理论进步和实践应用中的更广泛影响。

Key Takeaways

- CEGM提出了基于梯度优化的新方法,通过重新界定语境嵌入和梯度更新的关系强化语义连贯性。

- 该方法将梯度视为动态载体而非孤立的数值实体,注重其在语境依赖性中的作用。

- CEGM显著提高了长形式推理、语境保留和适应未见领域等任务的效果。

- 在词级预测中,CEGM增强模型表现出比基线方法更高的性能和准确性。

- 该方法通过修改训练流程、引入纠缠层和动态系数调整等实现实际应用。

- 结果显示CEGM减少了语义漂移并提高了嵌入连贯性,证明其稳健性和通用性。

点此查看论文截图

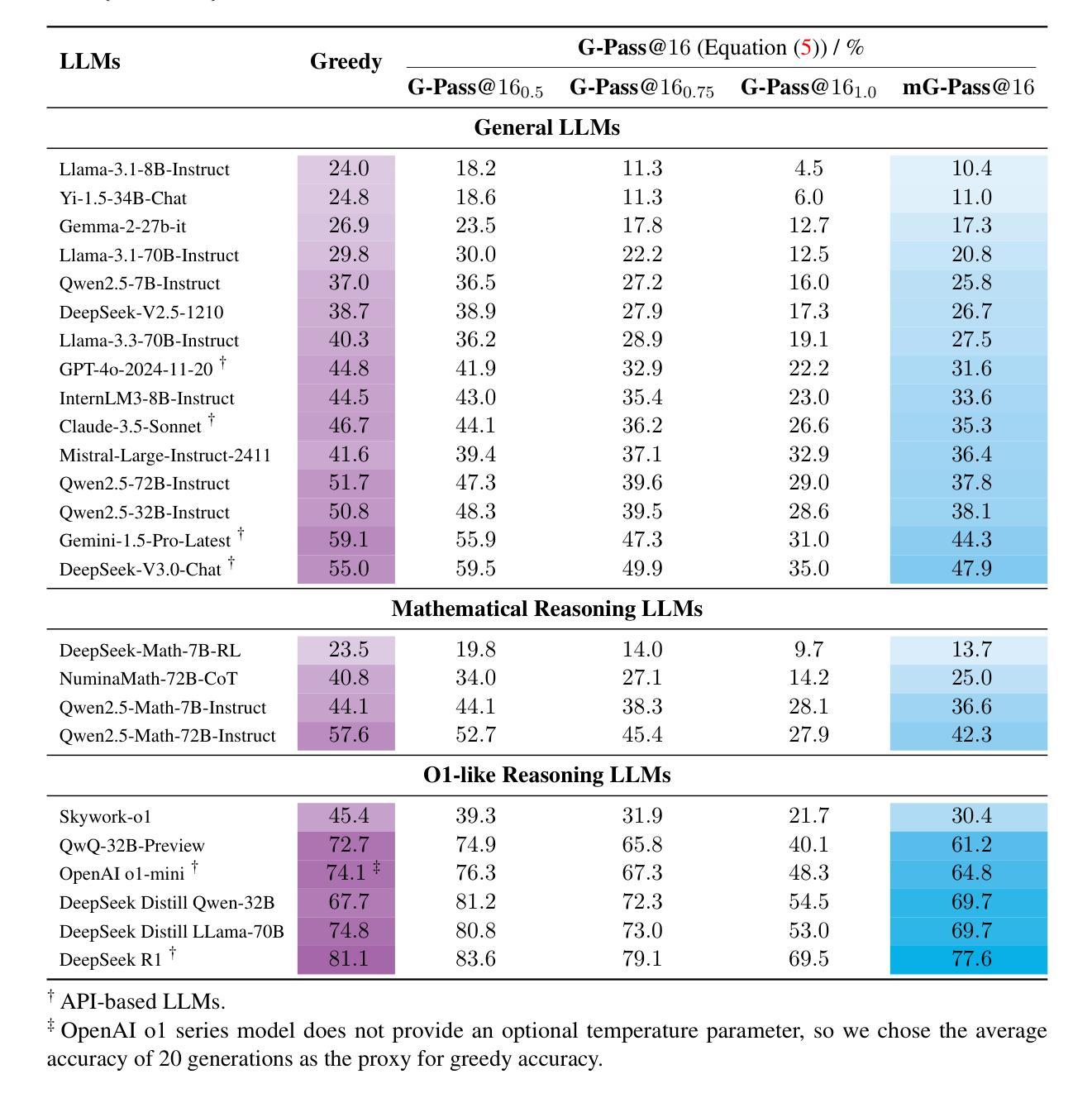

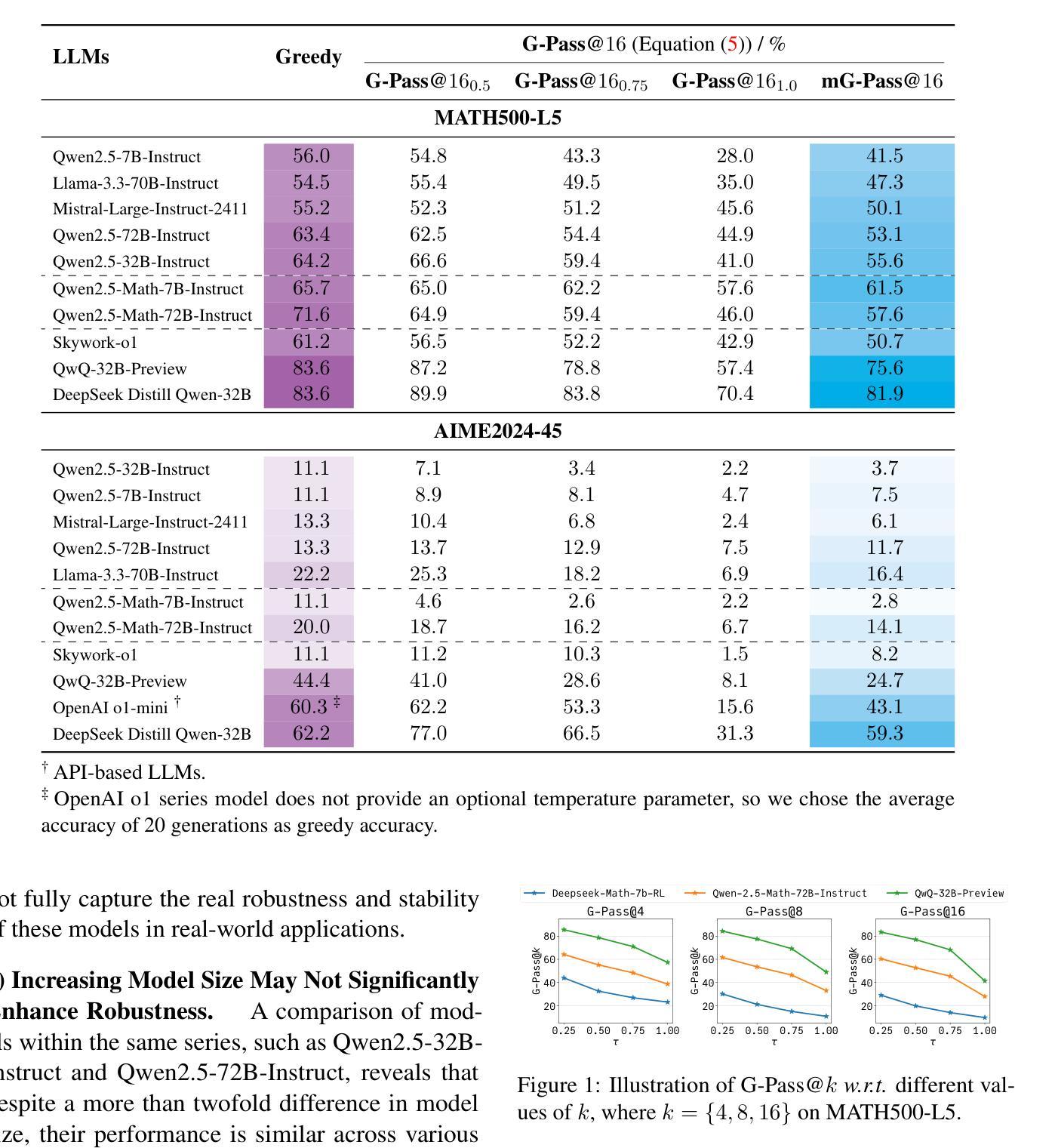

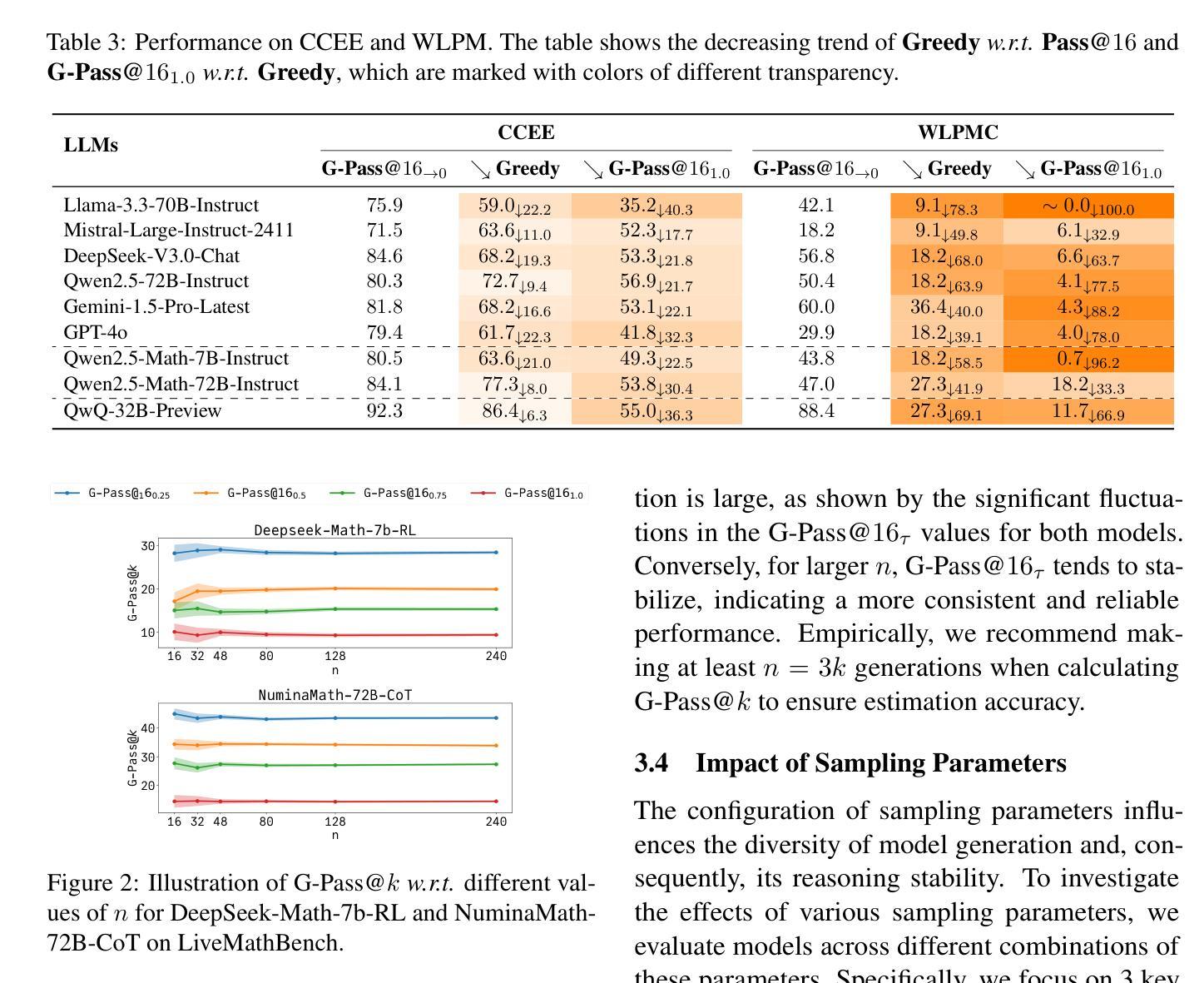

Are Your LLMs Capable of Stable Reasoning?

Authors:Junnan Liu, Hongwei Liu, Linchen Xiao, Ziyi Wang, Kuikun Liu, Songyang Gao, Wenwei Zhang, Songyang Zhang, Kai Chen

The rapid advancement of large language models (LLMs) has shown remarkable progress in complex reasoning tasks. However, a significant disparity exists between benchmark performances and real-world applications. We attribute this gap primarily to current evaluation protocols and metrics, which inadequately capture the full spectrum of LLM capabilities, especially in complex reasoning tasks where both accuracy and consistency are essential. In this paper, we introduce G-Pass@$k$, a novel evaluation metric that continuously assesses model performance across multiple sampling attempts, quantifying both the model’s performance potential and its stability. Through extensive experiments on various public and newly constructed benchmarks, we employ G-Pass@$k$ in conjunction with state-of-the-art large language models to provide comprehensive insights into their potential capabilities and operational consistency. Our findings reveal a significant opportunity to enhance the realistic reasoning abilities of LLMs, underscoring the necessity for more robust evaluation metrics.

大型语言模型(LLM)的快速发展在复杂推理任务中取得了显著的进步。然而,基准测试性能与实际应用之间存在很大的差距。我们将这一差距主要归因于当前的评估协议和指标,它们未能充分捕捉LLM的全部能力,特别是在准确性和一致性都至关重要的复杂推理任务中。在本文中,我们介绍了G-Pass@k这一新型评估指标,它能够持续评估模型多次采样的性能,量化模型的潜力与稳定性。我们通过大量实验,在各种公共和新建基准测试上,结合最先进的大型语言模型,使用G-Pass@k指标对其潜在能力和操作一致性提供了全面的见解。我们的研究结果表明,提高LLM的现实推理能力存在巨大机会,并强调需要更稳健的评估指标。

论文及项目相关链接

PDF ACL 2025 Camera, Benchmark: https://huggingface.co/datasets/opencompass/LiveMathBench, Code: https://github.com/open-compass/GPassK

Summary

大型语言模型(LLM)在复杂推理任务中取得显著进展,但存在性能评估与实际应用之间的鸿沟。本文引入G-Pass@k评估指标,通过多次采样评估模型性能和稳定性。实验表明,现有先进的大型语言模型在实际推理能力方面仍有待提升,需要更稳健的评估指标。

Key Takeaways

- LLM在复杂推理任务中取得显著进步。

- 评估指标与实际应用之间存在鸿沟。

- 引入G-Pass@k评估指标,全面评估模型性能和稳定性。

- 实验表明,现有LLM在实际推理能力方面有待提升。

- LLM需要更稳健的评估指标来反映其实际性能。

- G-Pass@k评估指标能够量化模型性能潜力和稳定性。

点此查看论文截图