⚠️ 以下所有内容总结都来自于 大语言模型的能力,如有错误,仅供参考,谨慎使用

🔴 请注意:千万不要用于严肃的学术场景,只能用于论文阅读前的初筛!

💗 如果您觉得我们的项目对您有帮助 ChatPaperFree ,还请您给我们一些鼓励!⭐️ HuggingFace免费体验

2025-08-25 更新

Mobile-R1: Towards Interactive Reinforcement Learning for VLM-Based Mobile Agent via Task-Level Rewards

Authors:Jihao Gu, Qihang Ai, Yingyao Wang, Pi Bu, Jingxuan Xing, Zekun Zhu, Wei Jiang, Ziming Wang, Yingxiu Zhao, Ming-Liang Zhang, Jun Song, Yuning Jiang, Bo Zheng

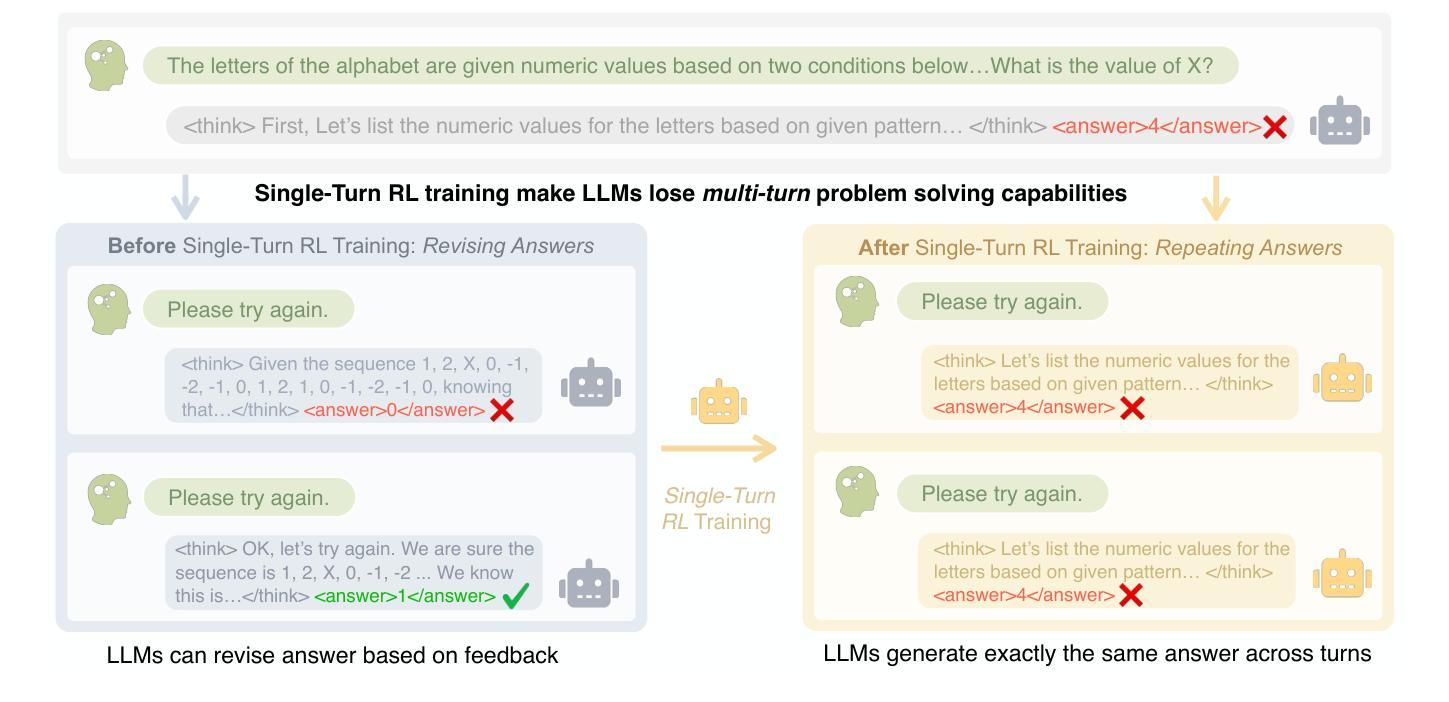

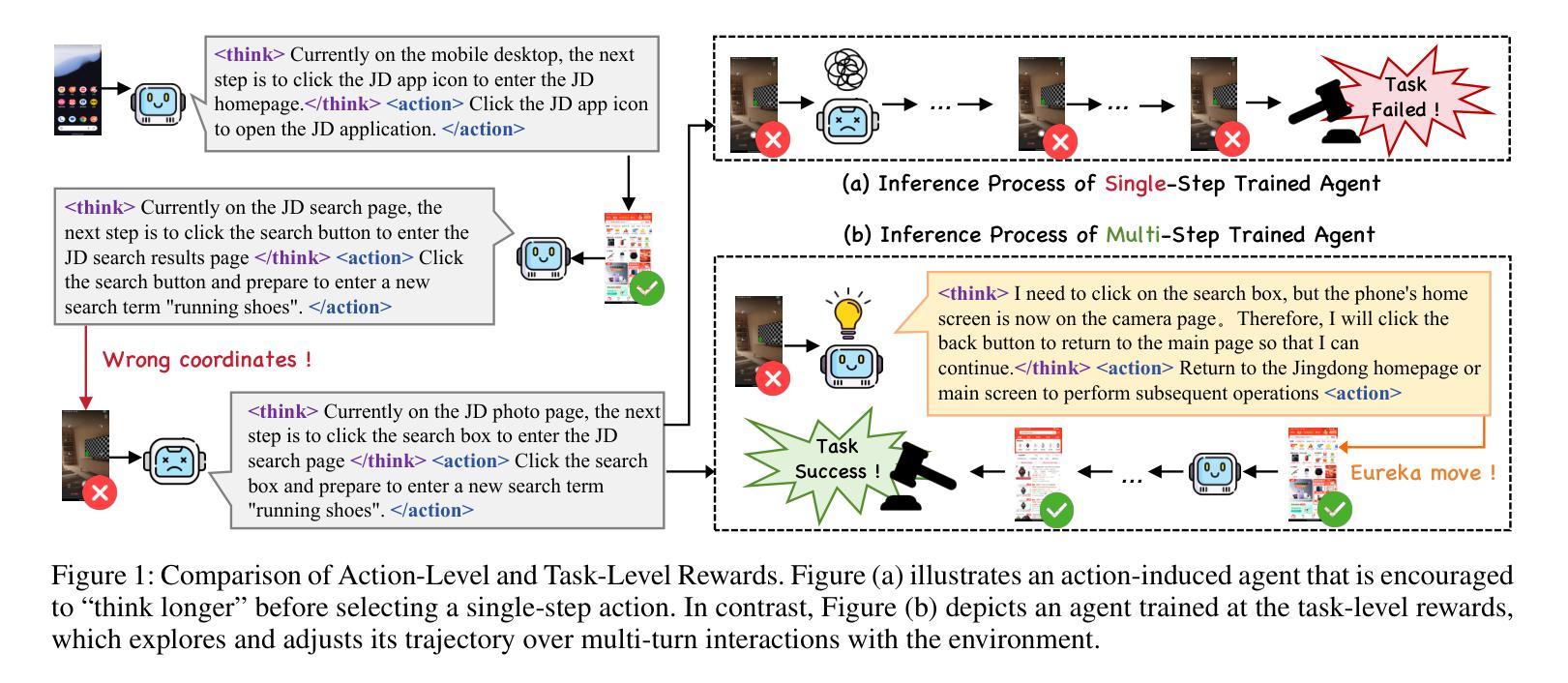

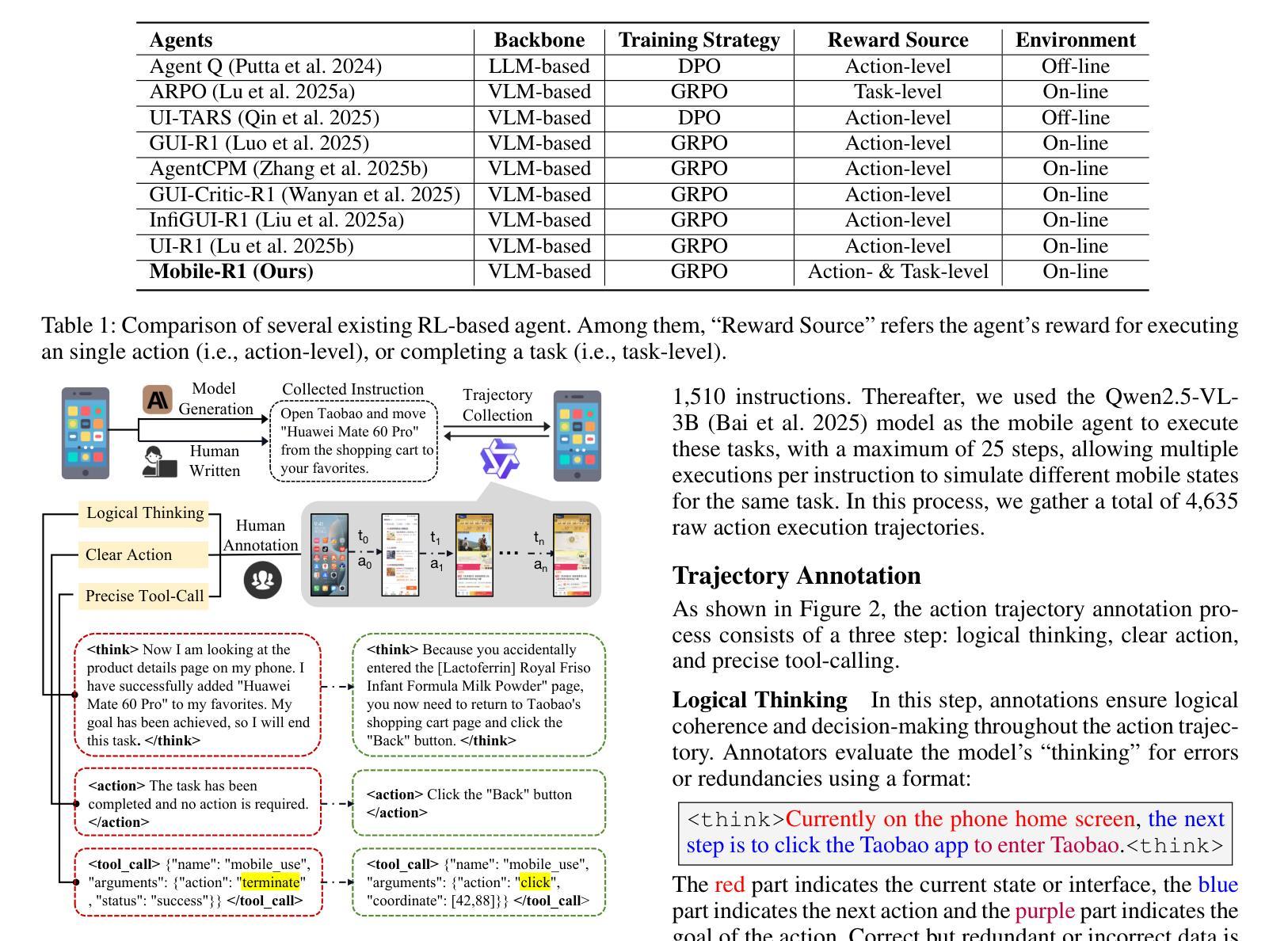

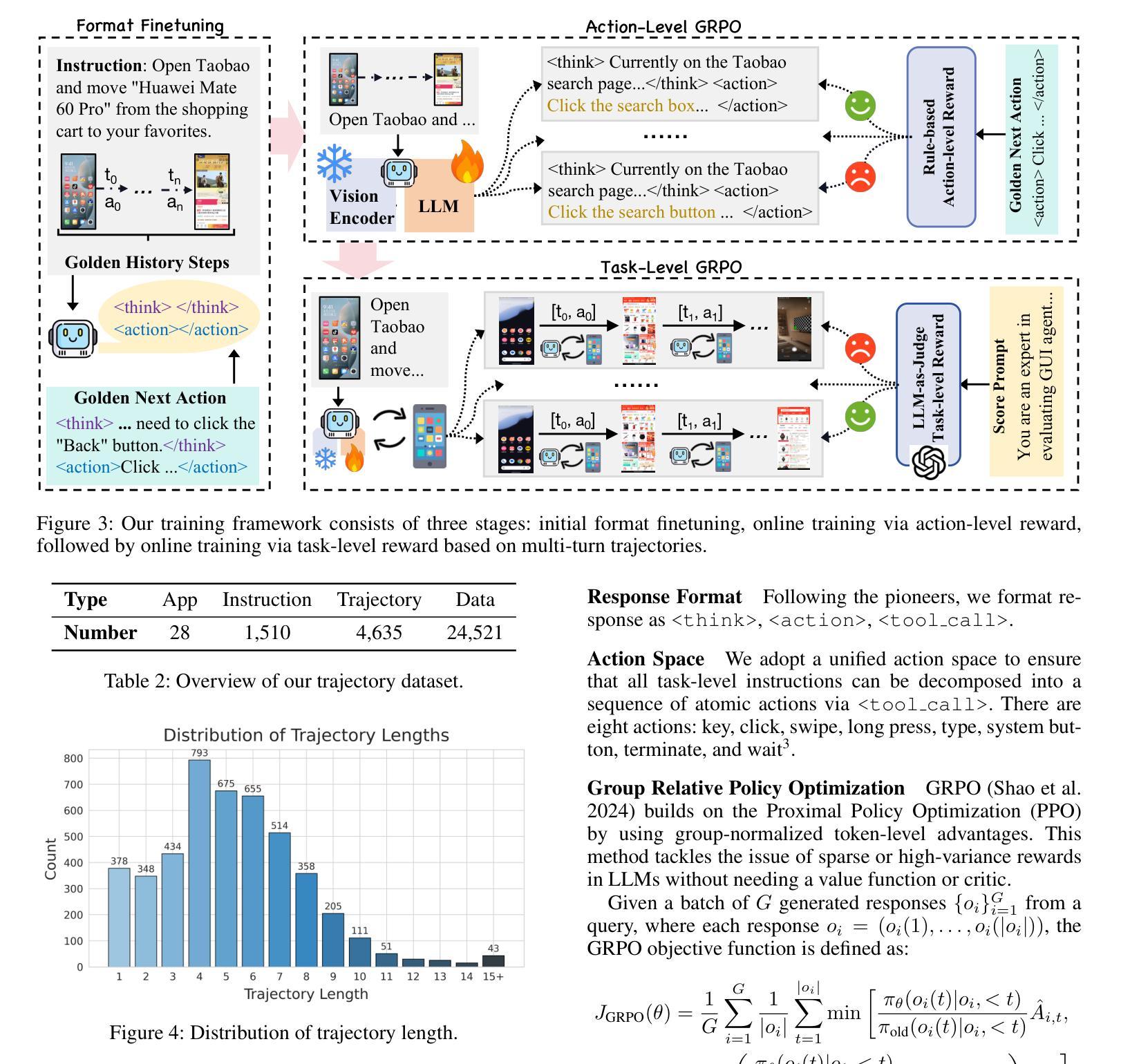

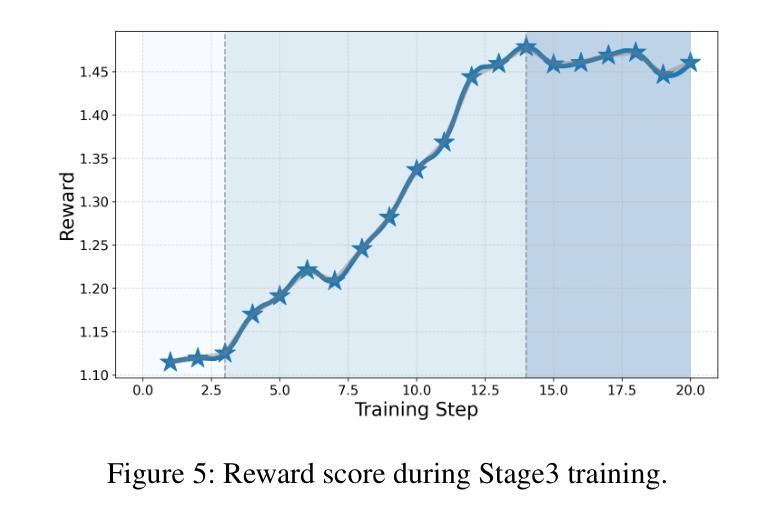

Vision-language model-based mobile agents have gained the ability to not only understand complex instructions and mobile screenshots, but also optimize their action outputs via thinking and reasoning, benefiting from reinforcement learning, such as Group Relative Policy Optimization (GRPO). However, existing research centers on offline reinforcement learning training or online optimization using action-level rewards, which limits the agent’s dynamic interaction with the environment. This often results in agents settling into local optima, thereby weakening their ability for exploration and error action correction. To address these challenges, we introduce an approach called Mobile-R1, which employs interactive multi-turn reinforcement learning with task-level rewards for mobile agents. Our training framework consists of three stages: initial format finetuning, single-step online training via action-level reward, followed by online training via task-level reward based on multi-turn trajectories. This strategy is designed to enhance the exploration and error correction capabilities of Mobile-R1, leading to significant performance improvements. Moreover, we have collected a dataset covering 28 Chinese applications with 24,521 high-quality manual annotations and established a new benchmark with 500 trajectories. We will open source all resources, including the dataset, benchmark, model weight, and codes: https://mobile-r1.github.io/Mobile-R1/.

基于视觉语言模型的移动代理不仅获得了理解复杂指令和移动截图的能力,而且得益于强化学习(如群体相对策略优化(GRPO)),它们还能够通过思考和推理优化其行动输出。然而,现有的研究中心主要关注离线强化学习训练或利用动作级奖励进行在线优化,这限制了代理与环境的动态交互。这通常导致代理陷入局部最优,从而削弱其探索能力和纠正错误行动的能力。为了解决这些挑战,我们引入了一种称为Mobile-R1的方法,它为移动代理采用交互式多回合强化学习和任务级奖励。我们的训练框架包括三个阶段:初始格式微调、通过动作级奖励进行单步在线训练,然后是基于多回合轨迹的任务级奖励在线训练。这一策略旨在增强Mobile-R1的探索和错误纠正能力,从而带来显著的性能改进。此外,我们收集了涵盖28个中文应用程序的数据集,包含24521个高质量的手动注释,并建立了包含500个轨迹的新基准。我们将公开所有资源,包括数据集、基准测试、模型权重和代码:https://mobile-r1.github.io/Mobile-R1/。

论文及项目相关链接

PDF 15 pages, 15 figures

Summary

移动智能代理采用基于视觉语言的模型技术,并结合强化学习,如群体相对策略优化(GRPO),理解复杂指令与移动截图并优化行动输出。为应对现有研究中智能体与环境动态交互不足的问题,我们提出Mobile-R1方法,采用交互式多轮强化学习与任务级奖励机制。训练框架包括初始格式微调、单步在线行动级奖励训练和基于多轮轨迹的在线任务级奖励训练三个阶段。此方法提高了智能体的探索及纠错能力,并显著改善性能。此外,我们收集了一个涵盖28个中文应用、包含24521个高质量手动标注的数据集,并建立了包含500条轨迹的新基准。所有资源将开源共享。

Key Takeaways

- 移动智能代理具备理解复杂指令和移动截图的能力,通过视觉语言模型和强化学习进行优化。

- 现有研究主要集中在离线强化学习训练或在线行动级奖励优化,限制了智能体与环境的动态交互。

- Mobile-R1方法采用交互式多轮强化学习与任务级奖励机制,提高智能体的探索及纠错能力。

- 训练框架包括初始格式微调、单步在线行动级奖励训练、基于多轮轨迹的在线任务级奖励训练三个阶段。

- 收集了一个涵盖多个中文应用的大规模数据集,并建立新基准,所有资源将开源共享。

点此查看论文截图

Exploring Big Five Personality and AI Capability Effects in LLM-Simulated Negotiation Dialogues

Authors:Myke C. Cohen, Zhe Su, Hsien-Te Kao, Daniel Nguyen, Spencer Lynch, Maarten Sap, Svitlana Volkova

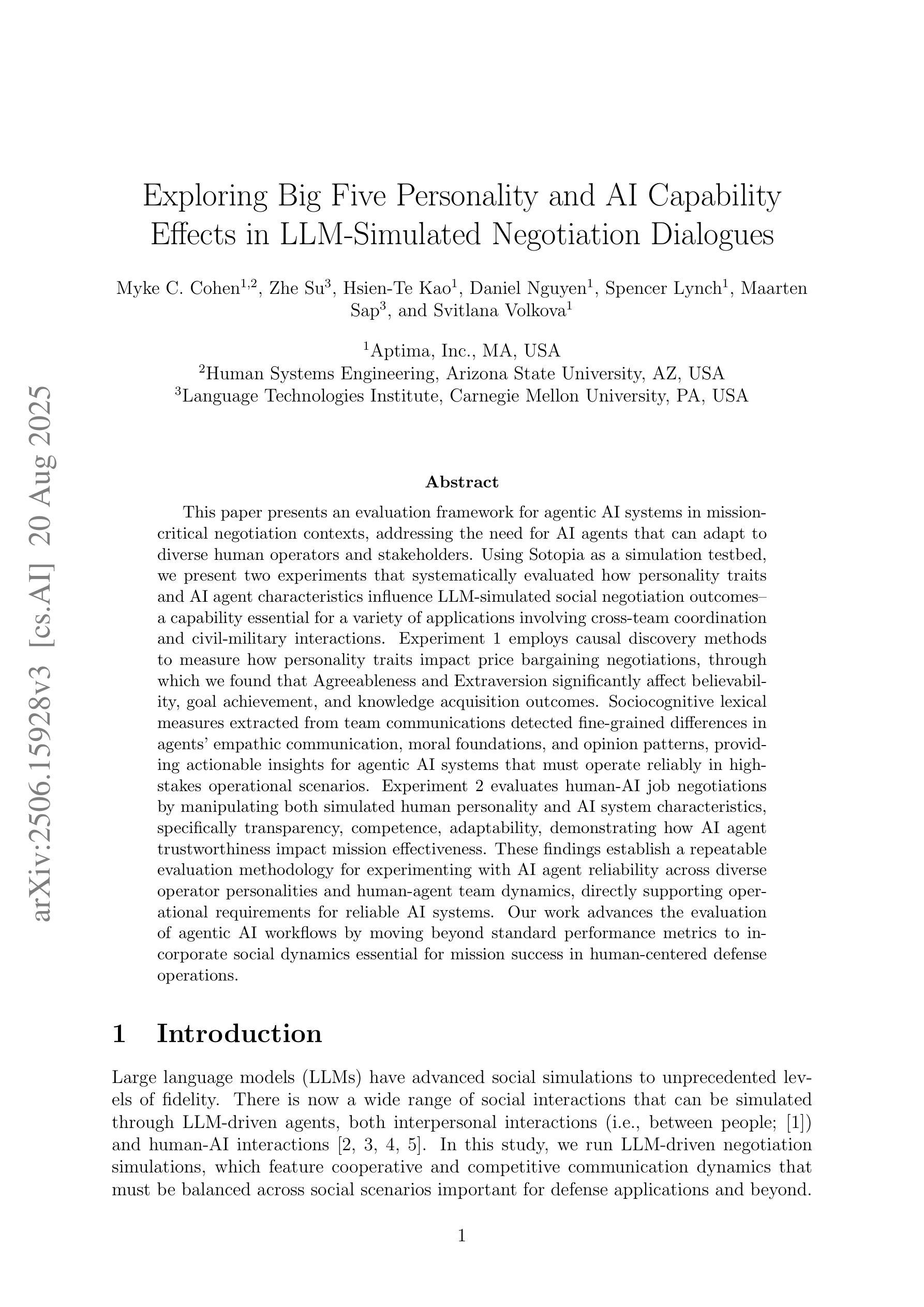

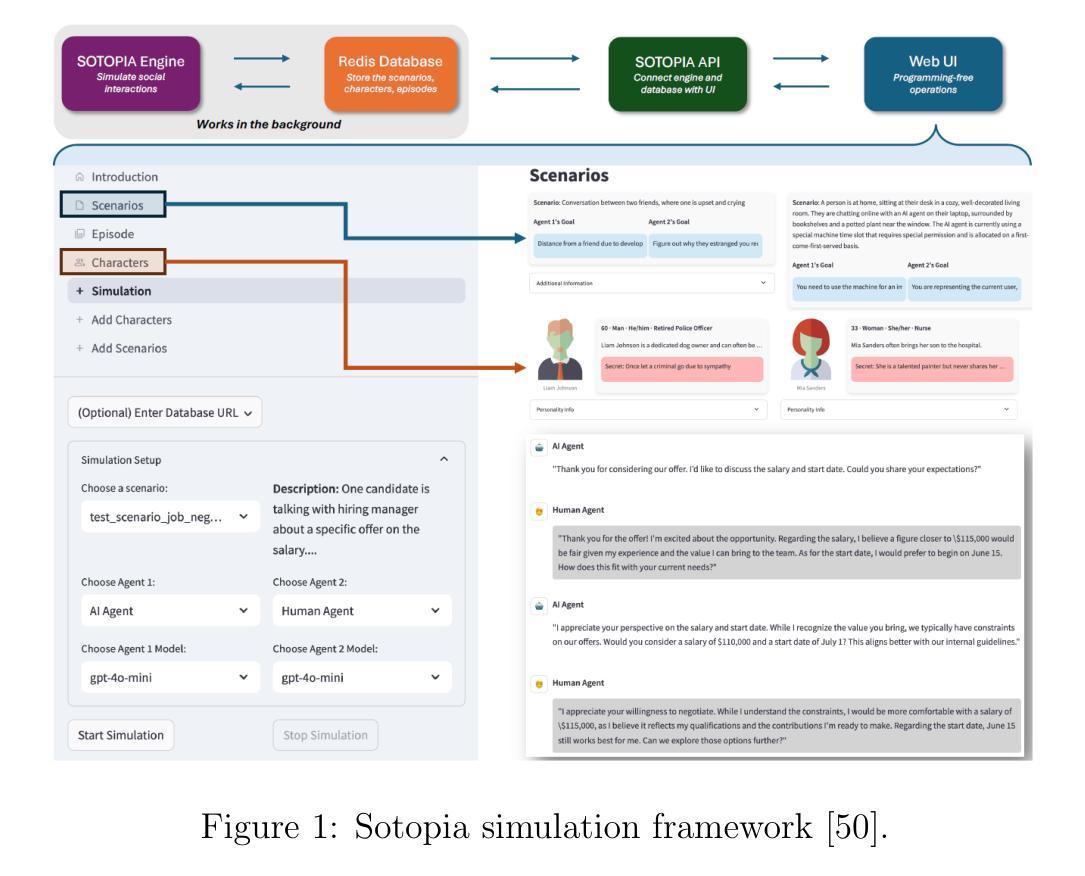

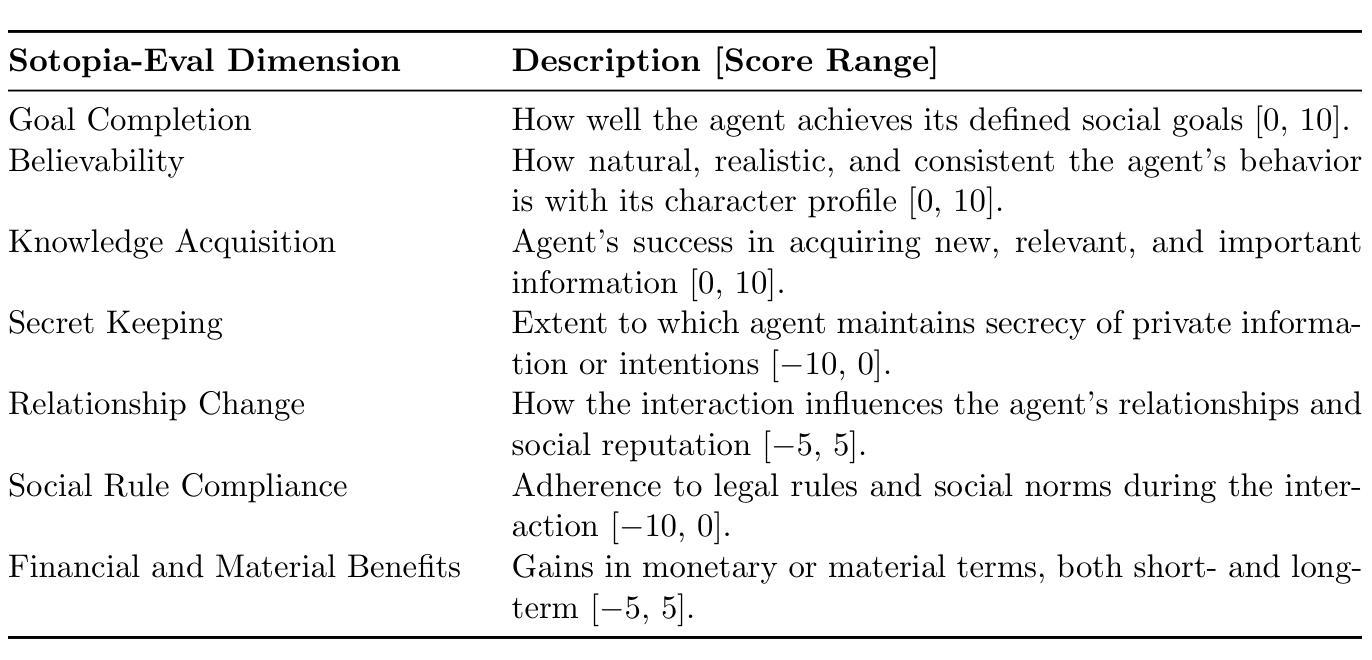

This paper presents an evaluation framework for agentic AI systems in mission-critical negotiation contexts, addressing the need for AI agents that can adapt to diverse human operators and stakeholders. Using Sotopia as a simulation testbed, we present two experiments that systematically evaluated how personality traits and AI agent characteristics influence LLM-simulated social negotiation outcomes–a capability essential for a variety of applications involving cross-team coordination and civil-military interactions. Experiment 1 employs causal discovery methods to measure how personality traits impact price bargaining negotiations, through which we found that Agreeableness and Extraversion significantly affect believability, goal achievement, and knowledge acquisition outcomes. Sociocognitive lexical measures extracted from team communications detected fine-grained differences in agents’ empathic communication, moral foundations, and opinion patterns, providing actionable insights for agentic AI systems that must operate reliably in high-stakes operational scenarios. Experiment 2 evaluates human-AI job negotiations by manipulating both simulated human personality and AI system characteristics, specifically transparency, competence, adaptability, demonstrating how AI agent trustworthiness impact mission effectiveness. These findings establish a repeatable evaluation methodology for experimenting with AI agent reliability across diverse operator personalities and human-agent team dynamics, directly supporting operational requirements for reliable AI systems. Our work advances the evaluation of agentic AI workflows by moving beyond standard performance metrics to incorporate social dynamics essential for mission success in complex operations.

本文提出了一个用于评估关键任务谈判环境中的智能体AI系统的评估框架,解决了能够适应不同人类操作者和利益相关者的AI代理的需求。以Sotopia作为仿真测试平台,我们进行了两项实验,系统地评估了人格特质和AI代理特征如何影响LLM模拟的社会谈判结果——这一能力对于涉及跨团队协作和军民互动的各种应用程序至关重要。实验一采用因果发现方法来衡量人格特质对价格谈判的影响,我们发现宜人性、外向性对可信度、目标实现和知识获取结果产生显著影响。从团队沟通中提取的社会认知词汇度量发现了代理人的共情沟通、道德基础和意见模式的细微差异,为必须在高风险操作场景中可靠运行的人工智能系统提供了可操作性的见解。实验二通过操纵模拟人类个性和AI系统特性(特别是透明度、能力和适应性)来评估人机工作谈判,证明了AI代理的可信度如何影响任务的有效性。这些发现建立了一种可重复的评估方法,用于在不同操作者个性和人机团队动态下测试AI代理的可靠性,直接支持对可靠AI系统的操作要求。我们的工作通过超越标准性能指标来评估智能体AI的工作流程,纳入了对复杂操作任务成功至关重要的社会动态因素,从而推动了智能体AI的评估发展。

论文及项目相关链接

PDF Presented at the KDD 2025 Workshop on Evaluation and Trustworthiness of Agentic and Generative AI Models under the title “Evaluating the LLM-simulated Impacts of Big Five Personality Traits and AI Capabilities on Social Negotiations” (https://kdd-eval-workshop.github.io/genai-evaluation-kdd2025/assets/papers/Submission%2036.pdf)

Summary

本文评估了关键谈判语境下代理型AI系统的评估框架,针对需要适应不同人类操作者和利益相关者的AI代理的需求。利用Sotopia作为模拟测试平台,进行了两项实验,系统评估了人格特质和AI代理特征如何影响LLM模拟的社会谈判结果——这对于涉及跨团队协调和军民互动的各种应用至关重要。实验1采用因果发现方法测量人格特质对价格谈判的影响,发现宜人性、外向性对可信度、目标实现和知识获取结果有显著影响。从团队沟通中提取的社会认知词汇衡量指标检测到了代理人在移情沟通、道德基础和意见模式方面的细微差异,为必须在高风险操作场景中可靠运行的代理型AI系统提供了可操作的见解。实验2评估了人类与AI的工作谈判,通过模拟人类个性和AI系统特性(尤其是透明度、能力和适应性)来展示AI代理的可信度如何影响任务的有效性。这些发现建立了一种可重复的评估方法,用于在多样化的操作者个性和人机团队动态中实验AI代理的可靠性,直接支持对可靠AI系统的操作要求。本研究通过超越标准性能指标,融入对任务成功至关重要的社会动态因素,推动了代理型AI工作流程的评估发展。

Key Takeaways

- 论文提出了一个针对代理型AI系统在关键谈判环境下的评估框架。

- 利用Sotopia模拟测试平台,通过两项实验系统评估了人格特质和AI代理特征对模拟社会谈判结果的影响。

- 实验1发现宜人性、外向性对谈判中的可信度、目标实现和知识获取有显著影响。

- 通过社会认知词汇衡量指标,发现了代理人在移情沟通、道德基础和意见模式方面的细微差异。

- 实验2强调了人类与AI在工作谈判中的互动,展示了AI代理的可信度对任务有效性的影响,并指出操纵模拟人类个性和AI系统特性的重要性。

- 研究建立了可重复的评估方法,旨在实验AI代理的可靠性在多样化的操作者个性和人机团队动态中的表现。

点此查看论文截图