⚠️ All summaries below are generated by a large language model and may contain errors. They are for reference only; use with caution.

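🔴 Note: Never use these summaries in serious academic contexts. They are only meant for preliminary screening before reading a paper!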

💗 If you find our project ChatPaperFree helpful, please give us some encouragement! ⭐️ Try it free on HuggingFace

Updated 2025-08-26

SAMFusion: Sensor-Adaptive Multimodal Fusion for 3D Object Detection in Adverse Weather

Authors: Edoardo Palladin, Roland Dietze, Praveen Narayanan, Mario Bijelic, Felix Heide

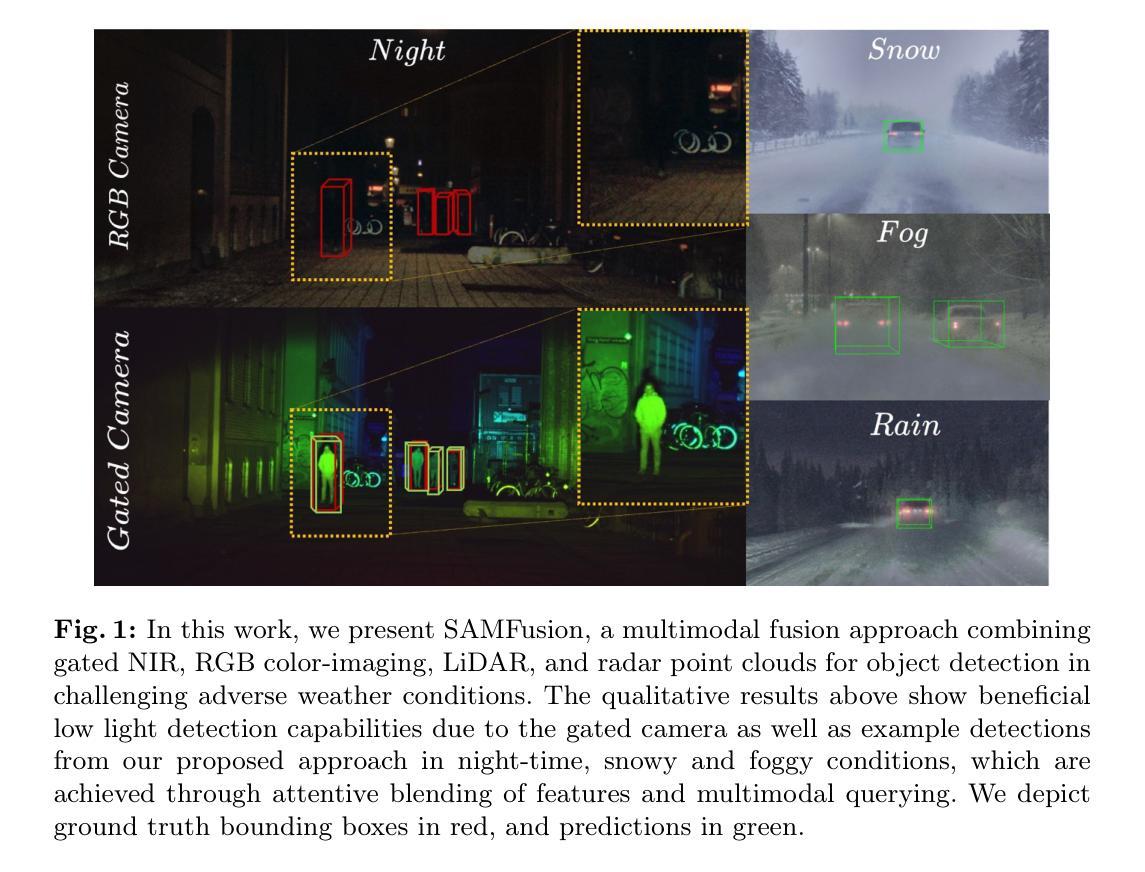

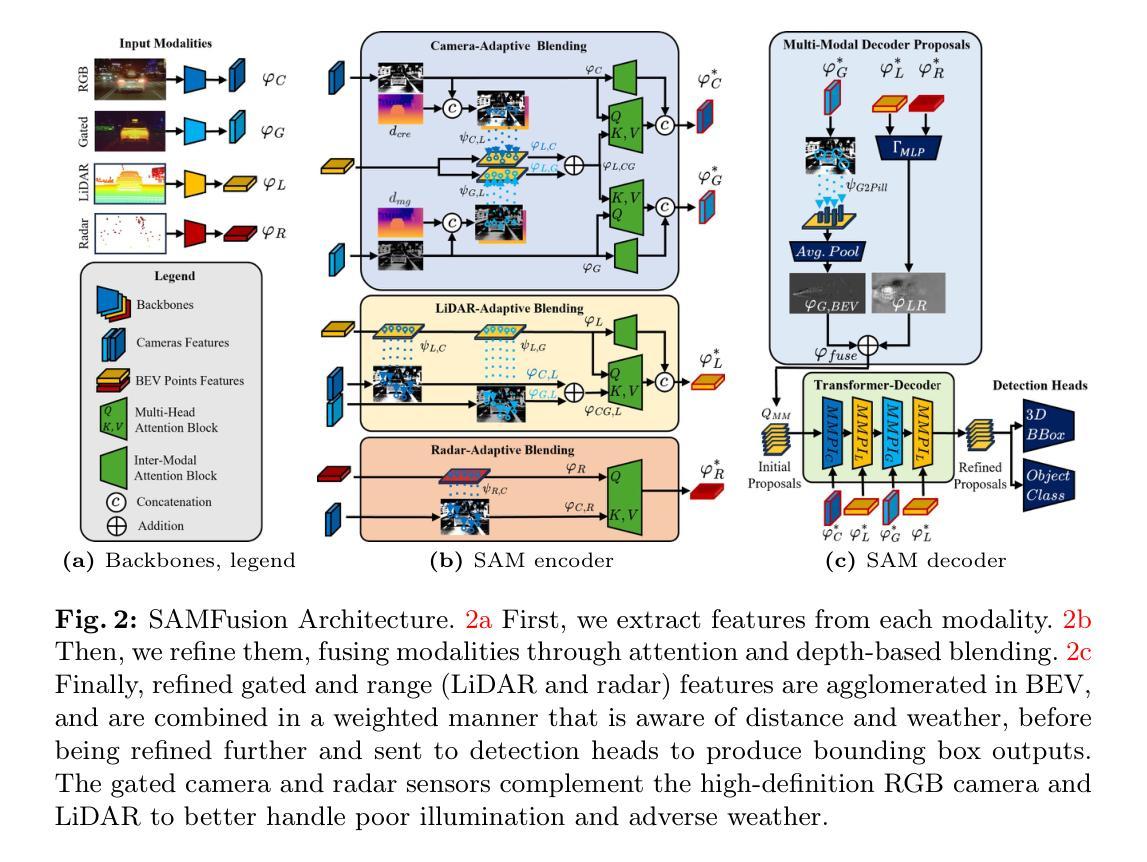

Multimodal sensor fusion is an essential capability for autonomous robots, enabling object detection and decision-making in the presence of failing or uncertain inputs. While recent fusion methods excel in normal environmental conditions, these approaches fail in adverse weather, e.g., heavy fog, snow, or obstructions due to soiling. We introduce a novel multi-sensor fusion approach tailored to adverse weather conditions. In addition to fusing RGB and LiDAR sensors, which are employed in recent autonomous driving literature, our sensor fusion stack is also capable of learning from NIR gated camera and radar modalities to tackle low light and inclement weather. We fuse multimodal sensor data through attentive, depth-based blending schemes, with learned refinement on the Bird’s Eye View (BEV) plane to combine image and range features effectively. Our detections are predicted by a transformer decoder that weighs modalities based on distance and visibility. We demonstrate that our method improves the reliability of multimodal sensor fusion in autonomous vehicles under challenging weather conditions, bridging the gap between ideal conditions and real-world edge cases. Our approach improves average precision by 17.2 AP compared to the next best method for vulnerable pedestrians at long distances and in challenging foggy scenes. Our project page is available at https://light.princeton.edu/samfusion/

Summary

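This paper presents a multi-sensor fusion method for adverse weather conditions. Beyond fusing RGB cameras and LiDAR, it also learns from an NIR gated camera and radar to address detection under low light and inclement weather. An attentive, depth-based blending scheme combines image and range features, and a transformer decoder predicts the detections, weighting the modalities according to distance and visibility. The method improves the reliability of sensor fusion in autonomous vehicles under adverse weather, narrowing the gap between ideal conditions and real-world edge cases.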
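To make the idea of weighting modalities by distance and visibility more concrete, here is a toy Python sketch of softmax gating over per-modality feature vectors. It is an illustration under stated assumptions, not SAMFusion's method. In the paper this weighting happens inside a learned transformer decoder. Everything here is hypothetical and invented only to show the mechanism: the function names (`modality_weights`, `fuse`), the score parameters in `SCORE_PARAMS`, and the `VISIBILITY_CAP_M` cap.

```python
import math
from typing import Dict, List, Tuple

# HYPOTHETICAL per-modality score parameters: (bias, distance coef, visibility coef).
# A real model would learn such weights; these values are invented for
# illustration and are NOT taken from SAMFusion.
SCORE_PARAMS: Dict[str, Tuple[float, float, float]] = {
    "rgb": (0.0, -0.02, 0.010),
    "lidar": (0.5, -0.03, 0.008),
    "gated": (0.3, -0.01, 0.002),
    "radar": (-0.5, 0.0, 0.0),
}

# HYPOTHETICAL cap on the visibility term so very long visibility ranges
# do not dominate the score.
VISIBILITY_CAP_M = 200.0


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def modality_weights(names: List[str], distance_m: float, visibility_m: float) -> Dict[str, float]:
    """Score each available modality from distance and visibility, then normalize with softmax."""
    vis = min(visibility_m, VISIBILITY_CAP_M)
    scores = []
    for name in names:
        bias, k_dist, k_vis = SCORE_PARAMS[name]
        scores.append(bias + k_dist * distance_m + k_vis * vis)
    return dict(zip(names, softmax(scores)))


def fuse(features: Dict[str, List[float]], distance_m: float, visibility_m: float) -> List[float]:
    """Blend per-modality feature vectors using the distance/visibility-dependent weights."""
    weights = modality_weights(list(features), distance_m, visibility_m)
    dim = len(next(iter(features.values())))
    fused = [0.0] * dim
    for name, feat in features.items():
        for i, x in enumerate(feat):
            fused[i] += weights[name] * x
    return fused


if __name__ == "__main__":
    all_modalities = ["rgb", "lidar", "gated", "radar"]
    for vis in (200.0, 20.0):  # clear vs. dense fog (made-up visibility values)
        w = modality_weights(all_modalities, distance_m=60.0, visibility_m=vis)
        print(f"visibility {vis:>5.0f} m:", {k: round(v, 3) for k, v in w.items()})
```

The softmax is taken only over the modalities present in `features`. As a result, dropping a failed sensor (for example, calling `fuse` without the `"rgb"` entry) simply renormalizes the remaining weights. With the made-up parameters above, lowering `visibility_m` from 200 m to 20 m at a 60 m range shifts weight away from RGB and LiDAR and toward the gated camera and radar.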

Key Takeaways

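- Multimodal sensor fusion is essential for autonomous robots operating in adverse environments.

- Existing fusion methods perform poorly in adverse weather.

- This paper proposes a new multi-sensor fusion method designed specifically for adverse weather conditions.

- Beyond RGB and LiDAR sensors, the method also learns from NIR gated camera and radar data.

- Sensor data are fused with an attentive, depth-based blending scheme, and detections are predicted by a transformer decoder.

- The method improves the reliability of sensor fusion in autonomous vehicles under adverse weather conditions.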

点此查看论文截图