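⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.

🔴 Note: do not use these summaries in serious academic contexts; they are intended only for a first-pass screening before reading the papers.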


Updated 2025-09-09

TalkToAgent: A Human-centric Explanation of Reinforcement Learning Agents with Large Language Models

Authors:Haechang Kim, Hao Chen, Can Li, Jong Min Lee

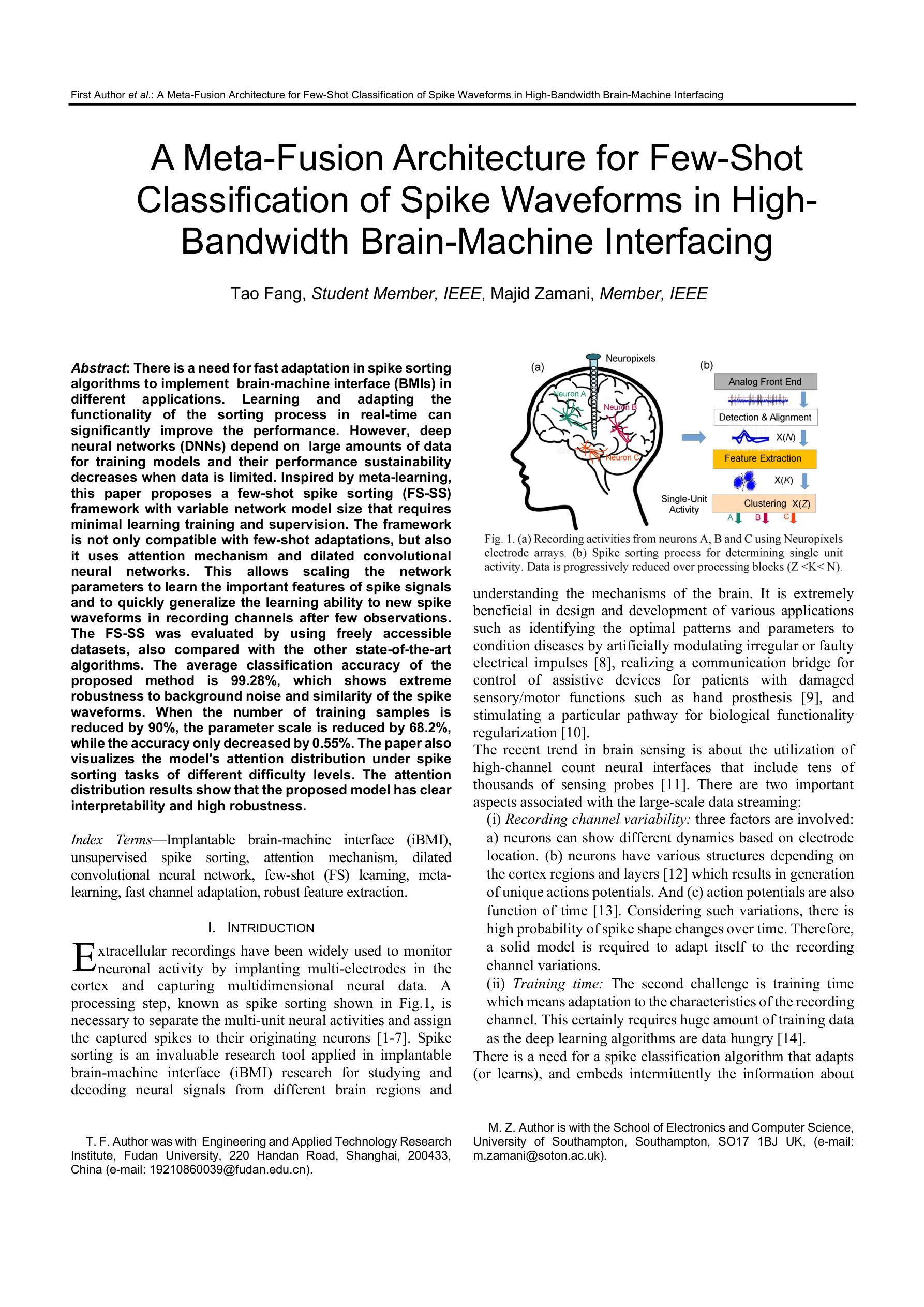

Explainable Reinforcement Learning (XRL) has emerged as a promising approach in improving the transparency of Reinforcement Learning (RL) agents. However, there remains a gap between complex RL policies and domain experts, due to the limited comprehensibility of XRL results and isolated coverage of current XRL approaches that leave users uncertain about which tools to employ. To address these challenges, we introduce TalkToAgent, a multi-agent Large Language Model (LLM) framework that delivers interactive, natural language explanations for RL policies. The architecture with five specialized LLM agents (Coordinator, Explainer, Coder, Evaluator, and Debugger) enables TalkToAgent to automatically map user queries to relevant XRL tools and clarify an agent’s actions in terms of either key state variables, expected outcomes, or counterfactual explanations. Moreover, our approach extends previous counterfactual explanations by deriving alternative scenarios from qualitative behavioral descriptions, or even new rule-based policies. We validated TalkToAgent on the quadruple-tank process control problem, a well-known nonlinear control benchmark. Results demonstrated that TalkToAgent successfully mapped user queries into XRL tasks with high accuracy, and coder-debugger interactions minimized failures in counterfactual generation. Furthermore, qualitative evaluation confirmed that TalkToAgent effectively interpreted the agent’s actions and contextualized their meaning within the problem domain.


Paper and project links

PDF 31 pages total

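Summary: Explainable Reinforcement Learning (XRL) can improve the transparency of RL agents, but a gap remains between complex RL policies and domain experts. To close it, we introduce TalkToAgent, a multi-agent LLM framework that provides interactive, natural language explanations of RL policies. Its five specialized LLM agents automatically map user queries to the relevant XRL tools and explain an agent's actions in terms of key state variables, expected outcomes, or counterfactual explanations. The approach also extends earlier counterfactual explanations by deriving alternative scenarios from qualitative behavioral descriptions or from new rule-based policies. Validated on the quadruple-tank process control problem, TalkToAgent mapped user queries to XRL tasks with high accuracy.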

Key Takeaways:

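- XRL improves the transparency of RL, but a communication gap with domain experts remains.

- TalkToAgent is a multi-agent LLM framework that provides interactive natural language explanations to help users understand RL policies.

- Its architecture comprises five specialized LLM agents (Coordinator, Explainer, Coder, Evaluator, and Debugger) that automatically map user queries to the relevant XRL tools.

- The framework explains an agent's actions in terms of key state variables, expected outcomes, or counterfactual explanations.

- TalkToAgent extends counterfactual explanation by deriving alternative scenarios from qualitative behavioral descriptions or new rule-based policies.

- Validation on the quadruple-tank process control problem shows that TalkToAgent maps user queries to XRL tasks with high accuracy.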


Mind the Gap: Evaluating Model- and Agentic-Level Vulnerabilities in LLMs with Action Graphs

Authors:Ilham Wicaksono, Zekun Wu, Theo King, Adriano Koshiyama, Philip Treleaven

As large language models transition to agentic systems, current safety evaluation frameworks face critical gaps in assessing deployment-specific risks. We introduce AgentSeer, an observability-based evaluation framework that decomposes agentic executions into granular action and component graphs, enabling systematic agentic-situational assessment. Through cross-model validation on GPT-OSS-20B and Gemini-2.0-flash using HarmBench single turn and iterative refinement attacks, we demonstrate fundamental differences between model-level and agentic-level vulnerability profiles. Model-level evaluation reveals baseline differences: GPT-OSS-20B (39.47% ASR) versus Gemini-2.0-flash (50.00% ASR), with both models showing susceptibility to social engineering while maintaining logic-based attack resistance. However, agentic-level assessment exposes agent-specific risks invisible to traditional evaluation. We discover “agentic-only” vulnerabilities that emerge exclusively in agentic contexts, with tool-calling showing 24-60% higher ASR across both models. Cross-model analysis reveals universal agentic patterns, agent transfer operations as highest-risk tools, semantic rather than syntactic vulnerability mechanisms, and context-dependent attack effectiveness, alongside model-specific security profiles in absolute ASR levels and optimal injection strategies. Direct attack transfer from model-level to agentic contexts shows degraded performance (GPT-OSS-20B: 57% human injection ASR; Gemini-2.0-flash: 28%), while context-aware iterative attacks successfully compromise objectives that failed at model-level, confirming systematic evaluation gaps. These findings establish the urgent need for agentic-situation evaluation paradigms, with AgentSeer providing the standardized methodology and empirical validation.


Summary

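As large language models transition to agentic systems, existing safety evaluation frameworks have critical gaps in assessing deployment-specific risks. We therefore introduce AgentSeer, an observability-based evaluation framework that decomposes agentic executions into granular action and component graphs, enabling systematic, situation-aware assessment. Cross-model validation on GPT-OSS-20B and Gemini-2.0-flash shows fundamental differences between model-level and agentic-level vulnerability profiles, and agentic-level assessment exposes agent-specific risks that are invisible to traditional evaluation. These findings underline the urgent need for agentic-situation evaluation, for which AgentSeer provides a standardized methodology and empirical validation.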

Key Takeaways

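- As large language models transition to agentic systems, existing safety evaluation frameworks fall short in assessing deployment-specific risks.

- AgentSeer is an observability-based evaluation framework that decomposes agentic executions into action and component graphs for systematic, situation-aware assessment.

- Model-level and agentic-level vulnerability profiles differ fundamentally; agentic-level assessment exposes agent-specific risks.

- "Agentic-only" vulnerabilities emerge exclusively in agentic contexts, with tool-calling showing 24-60% higher ASR (attack success rate) across both models.

- Cross-model analysis reveals universal agentic patterns: agent transfer operations are the highest-risk tools, vulnerability mechanisms are semantic rather than syntactic, and attack effectiveness depends on context.

- Attacks transferred directly from the model level to agentic contexts show degraded performance (57% human injection ASR on GPT-OSS-20B, 28% on Gemini-2.0-flash), while context-aware iterative attacks compromise objectives that failed at the model level.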


Language-Driven Hierarchical Task Structures as Explicit World Models for Multi-Agent Learning

Authors:Brennen Hill

The convergence of Language models, Agent models, and World models represents a critical frontier for artificial intelligence. While recent progress has focused on scaling Language and Agent models, the development of sophisticated, explicit World Models remains a key bottleneck, particularly for complex, long-horizon multi-agent tasks. In domains such as robotic soccer, agents trained via standard reinforcement learning in high-fidelity but structurally-flat simulators often fail due to intractable exploration spaces and sparse rewards. This position paper argues that the next frontier in developing capable agents lies in creating environments that possess an explicit, hierarchical World Model. We contend that this is best achieved through hierarchical scaffolding, where complex goals are decomposed into structured, manageable subgoals. Drawing evidence from a systematic review of 2024 research in multi-agent soccer, we identify a clear and decisive trend towards integrating symbolic and hierarchical methods with multi-agent reinforcement learning (MARL). These approaches implicitly or explicitly construct a task-based world model to guide agent learning. We then propose a paradigm shift: leveraging Large Language Models to dynamically generate this hierarchical scaffold, effectively using language to structure the World Model on the fly. This language-driven world model provides an intrinsic curriculum, dense and meaningful learning signals, and a framework for compositional learning, enabling Agent Models to acquire sophisticated, strategic behaviors with far greater sample efficiency. By building environments with explicit, language-configurable task layers, we can bridge the gap between low-level reactive behaviors and high-level strategic team play, creating a powerful and generalizable framework for training the next generation of intelligent agents.


Summary

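This position paper argues that the convergence of language models, agent models, and world models is a critical frontier for AI. Language and agent models have progressed through scaling, but the development of sophisticated, explicit world models remains a key bottleneck, particularly for complex, long-horizon multi-agent tasks. The paper contends that the next step is to build environments with an explicit, hierarchical world model, best achieved through hierarchical scaffolding that decomposes complex goals into structured, manageable subgoals. A systematic review of 2024 research in multi-agent soccer shows a clear trend towards integrating symbolic and hierarchical methods with multi-agent reinforcement learning (MARL). The paper proposes using large language models to generate this scaffold dynamically, structuring the world model with language on the fly. Such a language-driven world model provides an intrinsic curriculum, dense and meaningful learning signals, and a framework for compositional learning, so that agent models can acquire sophisticated strategic behaviors with far greater sample efficiency. Environments with explicit, language-configurable task layers can bridge the gap between low-level reactive behaviors and high-level strategic team play, yielding a powerful and generalizable framework for training the next generation of intelligent agents.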

Key Takeaways

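- The convergence of language models, agent models, and world models is a critical frontier for AI.

- The development of sophisticated, explicit world models remains a key bottleneck for complex, long-horizon multi-agent tasks.

- The next step in developing capable agents is to create environments that possess an explicit, hierarchical world model.

- Hierarchical scaffolding is central: it decomposes complex goals into structured, manageable subgoals.

- Research in multi-agent soccer shows a clear trend towards integrating symbolic and hierarchical methods with multi-agent reinforcement learning (MARL).

- Large language models can generate the hierarchical scaffold dynamically to guide agent learning.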


UI-TARS-2 Technical Report: Advancing GUI Agent with Multi-Turn Reinforcement Learning

Authors:Haoming Wang, Haoyang Zou, Huatong Song, Jiazhan Feng, Junjie Fang, Junting Lu, Longxiang Liu, Qinyu Luo, Shihao Liang, Shijue Huang, Wanjun Zhong, Yining Ye, Yujia Qin, Yuwen Xiong, Yuxin Song, Zhiyong Wu, Aoyan Li, Bo Li, Chen Dun, Chong Liu, Daoguang Zan, Fuxing Leng, Hanbin Wang, Hao Yu, Haobin Chen, Hongyi Guo, Jing Su, Jingjia Huang, Kai Shen, Kaiyu Shi, Lin Yan, Peiyao Zhao, Pengfei Liu, Qinghao Ye, Renjie Zheng, Shulin Xin, Wayne Xin Zhao, Wen Heng, Wenhao Huang, Wenqian Wang, Xiaobo Qin, Yi Lin, Youbin Wu, Zehui Chen, Zihao Wang, Baoquan Zhong, Xinchun Zhang, Xujing Li, Yuanfan Li, Zhongkai Zhao, Chengquan Jiang, Faming Wu, Haotian Zhou, Jinlin Pang, Li Han, Qi Liu, Qianli Ma, Siyao Liu, Songhua Cai, Wenqi Fu, Xin Liu, Yaohui Wang, Zhi Zhang, Bo Zhou, Guoliang Li, Jiajun Shi, Jiale Yang, Jie Tang, Li Li, Qihua Han, Taoran Lu, Woyu Lin, Xiaokang Tong, Xinyao Li, Yichi Zhang, Yu Miao, Zhengxuan Jiang, Zili Li, Ziyuan Zhao, Chenxin Li, Dehua Ma, Feng Lin, Ge Zhang, Haihua Yang, Hangyu Guo, Hongda Zhu, Jiaheng Liu, Junda Du, Kai Cai, Kuanye Li, Lichen Yuan, Meilan Han, Minchao Wang, Shuyue Guo, Tianhao Cheng, Xiaobo Ma, Xiaojun Xiao, Xiaolong Huang, Xinjie Chen, Yidi Du, Yilin Chen, Yiwen Wang, Zhaojian Li, Zhenzhu Yang, Zhiyuan Zeng, Chaolin Jin, Chen Li, Hao Chen, Haoli Chen, Jian Chen, Qinghao Zhao, Guang Shi

The development of autonomous agents for graphical user interfaces (GUIs) presents major challenges in artificial intelligence. While recent advances in native agent models have shown promise by unifying perception, reasoning, action, and memory through end-to-end learning, open problems remain in data scalability, multi-turn reinforcement learning (RL), the limitations of GUI-only operation, and environment stability. In this technical report, we present UI-TARS-2, a native GUI-centered agent model that addresses these challenges through a systematic training methodology: a data flywheel for scalable data generation, a stabilized multi-turn RL framework, a hybrid GUI environment that integrates file systems and terminals, and a unified sandbox platform for large-scale rollouts. Empirical evaluation demonstrates that UI-TARS-2 achieves significant improvements over its predecessor UI-TARS-1.5. On GUI benchmarks, it reaches 88.2 on Online-Mind2Web, 47.5 on OSWorld, 50.6 on WindowsAgentArena, and 73.3 on AndroidWorld, outperforming strong baselines such as Claude and OpenAI agents. In game environments, it attains a mean normalized score of 59.8 across a 15-game suite (roughly 60% of human-level performance) and remains competitive with frontier proprietary models (e.g., OpenAI o3) on LMGame-Bench. Additionally, the model can generalize to long-horizon information-seeking tasks and software engineering benchmarks, highlighting its robustness across diverse agent tasks. Detailed analyses of training dynamics further provide insights into achieving stability and efficiency in large-scale agent RL. These results underscore UI-TARS-2’s potential to advance the state of GUI agents and exhibit strong generalization to real-world interactive scenarios.


Summary

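This technical report presents UI-TARS-2, a native GUI-centered agent model. It addresses open problems in data scalability, multi-turn reinforcement learning, the limitations of GUI-only operation, and environment stability through a data flywheel for scalable data generation, a stabilized multi-turn RL framework, a hybrid GUI environment that integrates file systems and terminals, and a unified sandbox platform for large-scale rollouts. Empirical evaluation shows that UI-TARS-2 improves significantly over its predecessor UI-TARS-1.5 on GUI benchmarks and in game environments, and that it generalizes to software engineering benchmarks.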

Key Takeaways

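- UI-TARS-2 is a native GUI-centered agent model that addresses open problems in data scalability, multi-turn RL, GUI-only operation, and environment stability.

- A data flywheel provides scalable data generation.

- The report proposes a stabilized multi-turn reinforcement learning framework.

- A hybrid GUI environment integrates file systems and terminals.

- On GUI benchmarks it reaches 88.2 on Online-Mind2Web, 47.5 on OSWorld, 50.6 on WindowsAgentArena, and 73.3 on AndroidWorld.

- In game environments it attains roughly 60% of human-level performance and remains competitive with frontier proprietary models (e.g., OpenAI o3) on LMGame-Bench.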


Dynamic Speculative Agent Planning

Authors:Yilin Guan, Wenyue Hua, Qingfeng Lan, Sun Fei, Dujian Ding, Devang Acharya, Chi Wang, William Yang Wang

Despite their remarkable success in complex tasks propelling widespread adoption, large language-model-based agents still face critical deployment challenges due to prohibitive latency and inference costs. While recent work has explored various methods to accelerate inference, existing approaches suffer from significant limitations: they either fail to preserve performance fidelity, require extensive offline training of router modules, or incur excessive operational costs. Moreover, they provide minimal user control over the tradeoff between acceleration and other performance metrics. To address these gaps, we introduce Dynamic Speculative Planning (DSP), an asynchronous online reinforcement learning framework that provides lossless acceleration with substantially reduced costs without requiring additional pre-deployment preparation. DSP explicitly optimizes a joint objective balancing end-to-end latency against dollar cost, allowing practitioners to adjust a single parameter that steers the system toward faster responses, cheaper operation, or any point along this continuum. Experiments on two standard agent benchmarks demonstrate that DSP achieves comparable efficiency to the fastest lossless acceleration method while reducing total cost by 30% and unnecessary cost up to 60%. Our code and data are available through https://github.com/guanyilin428/Dynamic-Speculative-Planning.


Paper and project links

PDF 19 pages, 11 figures

Summary

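This paper addresses the deployment challenges of large language-model-based agents, namely prohibitive latency and inference costs. To overcome the limitations of existing acceleration methods, it proposes Dynamic Speculative Planning (DSP), an asynchronous online reinforcement learning framework that provides lossless acceleration at substantially reduced cost without additional pre-deployment preparation. DSP optimizes a joint objective that balances end-to-end latency against dollar cost, so practitioners can adjust a single parameter to steer the system toward faster responses, cheaper operation, or any point in between. Experiments on two standard agent benchmarks show that DSP achieves efficiency comparable to the fastest lossless acceleration method while reducing total cost by 30% and unnecessary cost by up to 60%.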

Key Takeaways

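- LLM-based agents face deployment challenges due to prohibitive latency and inference costs.

- Existing acceleration methods either fail to preserve performance fidelity, require extensive offline training of router modules, or incur excessive operational costs.

- DSP provides lossless acceleration at substantially reduced cost.

- DSP is an asynchronous online reinforcement learning framework; a single parameter lets users trade off response speed against dollar cost.

- DSP requires no additional pre-deployment preparation.

- In experiments, DSP matches the efficiency of the fastest lossless acceleration method while reducing total cost by 30% and unnecessary cost by up to 60%.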
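The idea of one parameter steering between faster responses and cheaper operation can be illustrated with a toy weighted objective. This is only a sketch and not the paper's formulation: the linear weighting, the names `Config`, `joint_objective`, `pick`, and `lam`, and all numbers are hypothetical, and latency and cost are assumed to be already normalised to comparable scales.

```python
from dataclasses import dataclass


@dataclass
class Config:
    name: str
    latency: float  # normalised end-to-end latency (made-up value)
    cost: float     # normalised dollar cost (made-up value)


def joint_objective(cfg: Config, lam: float) -> float:
    """Weighted sum: lam=1 considers only latency, lam=0 only cost."""
    return lam * cfg.latency + (1.0 - lam) * cfg.cost


def pick(configs, lam):
    """Return the configuration with the lowest joint objective."""
    return min(configs, key=lambda c: joint_objective(c, lam))


# Hypothetical operating points, from most to least speculative.
CANDIDATES = [
    Config("aggressive-speculation", latency=2.0, cost=5.0),
    Config("balanced", latency=3.0, cost=3.0),
    Config("no-speculation", latency=6.0, cost=1.0),
]

for lam in (0.0, 0.5, 1.0):
    print(lam, pick(CANDIDATES, lam).name)
```

Sweeping `lam` from 0 to 1 moves the choice from the cheapest to the fastest configuration, which is the kind of continuum the abstract describes.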


The Complexity Trap: Simple Observation Masking Is as Efficient as LLM Summarization for Agent Context Management

Authors:Tobias Lindenbauer, Igor Slinko, Ludwig Felder, Egor Bogomolov, Yaroslav Zharov

Large Language Model (LLM)-based agents solve complex tasks through iterative reasoning, exploration, and tool-use, a process that can result in long, expensive context histories. While state-of-the-art Software Engineering (SE) agents like OpenHands or Cursor use LLM-based summarization to tackle this issue, it is unclear whether the increased complexity offers tangible performance benefits compared to simply omitting older observations. We present a systematic comparison of these strategies within SWE-agent on SWE-bench Verified across five diverse model configurations. We find that a simple observation-masking strategy halves cost relative to a raw agent while matching, and sometimes slightly exceeding, the solve rate of LLM summarization. For example, with Qwen3-Coder 480B, masking improves solve rate from 53.8% (raw agent) to 54.8%, while remaining competitive with summarization at a lower cost. These results suggest that, at least within SWE-agent on SWE-bench Verified, the most effective and efficient context management can be the simplest. We release code and data for reproducibility.


Paper and project links

PDF v2: Fixed typos and formatting issues

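Summary: Large language model (LLM)-based agents solve complex tasks through iterative reasoning, exploration, and tool use, which can produce long, expensive context histories. State-of-the-art software engineering (SE) agents such as OpenHands or Cursor tackle this with LLM-based summarization, but it is unclear whether the added complexity offers tangible performance benefits over simply omitting older observations. The paper systematically compares these strategies within SWE-agent on SWE-bench Verified across five model configurations and finds that simple observation masking halves cost relative to a raw agent while matching, and sometimes slightly exceeding, the solve rate of LLM summarization. With Qwen3-Coder 480B, for example, masking improves the solve rate from 53.8% (raw agent) to 54.8% while remaining competitive with summarization at lower cost. These results suggest that, at least within SWE-agent on SWE-bench Verified, the most effective and efficient context management can be the simplest. Code and data are released for reproducibility.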

Key Takeaways:

- LLM-based agents can solve complex tasks through iterative reasoning, exploration, and tool-use, but this process can lead to long and expensive context histories.

- State-of-the-art SE agents like OpenHands or Cursor use LLM-based summarization to address this issue.

- A simple observation-masking strategy can halve the cost compared to a raw agent while matching or slightly exceeding the solve rate of LLM summarization.

- The most effective and efficient context management within SWE-agent on SWE-bench Verified may be the simplest.

- The study found that in some cases, such as with Qwen3-Coder 480B, masking improves the solve rate from 53.8% to 54.8%.

- The results suggest that the complexity of using LLM summarization may not always offer tangible performance benefits compared to simpler strategies.
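Observation masking, as described above, amounts to omitting older observations from the context while keeping the agent's own actions. The following is a minimal sketch under stated assumptions, not the paper's or SWE-agent's implementation: the turn format (dicts with `role` and `content`), the placeholder text, and the `keep_last` window are all hypothetical.

```python
PLACEHOLDER = "[observation omitted]"


def mask_old_observations(history, keep_last=2):
    """Return a copy of `history` in which every observation except the
    most recent `keep_last` is replaced by a short placeholder.
    Actions and other turns are left unchanged."""
    obs_idx = [i for i, turn in enumerate(history) if turn["role"] == "observation"]
    # A slice with -0 would be empty, so handle keep_last == 0 explicitly.
    to_mask = set(obs_idx) if keep_last <= 0 else set(obs_idx[:-keep_last])
    return [
        {**turn, "content": PLACEHOLDER} if i in to_mask else dict(turn)
        for i, turn in enumerate(history)
    ]


# Hypothetical agent trajectory.
HISTORY = [
    {"role": "action", "content": "ls"},
    {"role": "observation", "content": "a.py b.py"},
    {"role": "action", "content": "cat a.py"},
    {"role": "observation", "content": "print('hi')"},
    {"role": "action", "content": "pytest"},
    {"role": "observation", "content": "1 passed"},
]

for turn in mask_old_observations(HISTORY, keep_last=2):
    print(turn["role"], "|", turn["content"])
```

Unlike summarization, this needs no extra LLM call: the cost saving comes purely from shorter prompts.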


AI-SearchPlanner: Modular Agentic Search via Pareto-Optimal Multi-Objective Reinforcement Learning

Authors:Lang Mei, Zhihan Yang, Chong Chen

Recent studies have explored integrating Large Language Models (LLMs) with search engines to leverage both the LLMs’ internal pre-trained knowledge and external information. In particular, reinforcement learning (RL) has emerged as a promising paradigm for enhancing LLM reasoning through multi-turn interactions with search engines. However, existing RL-based search agents rely on a single LLM to handle both search planning and question-answering (QA) tasks in an end-to-end manner, which limits their ability to optimize both capabilities simultaneously. In practice, sophisticated AI search systems often employ a large, frozen LLM (e.g., GPT-4, DeepSeek-R1) to ensure high-quality QA. Thus, a more effective and efficient approach is to utilize a small, trainable LLM dedicated to search planning. In this paper, we propose AI-SearchPlanner, a novel reinforcement learning framework designed to enhance the performance of frozen QA models by focusing on search planning. Specifically, our approach introduces three key innovations: 1) Decoupling the Architecture of the Search Planner and Generator, 2) Dual-Reward Alignment for Search Planning, and 3) Pareto Optimization of Planning Utility and Cost, to achieve the objectives. Extensive experiments on real-world datasets demonstrate that AI-SearchPlanner outperforms existing RL-based search agents in both effectiveness and efficiency, while exhibiting strong generalization capabilities across diverse frozen QA models and data domains.


Summary

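Integrating large language models (LLMs) with search engines is an active research direction, and reinforcement learning (RL) has emerged as a promising way to enhance LLM reasoning through multi-turn interaction with search engines. Existing RL-based search agents, however, rely on a single LLM for both search planning and question answering (QA), which limits their ability to optimize both capabilities at once. The paper proposes AI-SearchPlanner, a reinforcement learning framework that improves the performance of frozen QA models by focusing on search planning. It introduces three innovations: decoupling the architecture of the search planner and the generator, dual-reward alignment for search planning, and Pareto optimization of planning utility and cost. Experiments on real-world datasets show that AI-SearchPlanner outperforms existing RL-based search agents in both effectiveness and efficiency and generalizes well across diverse frozen QA models and data domains.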

Key Takeaways

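- Integrating LLMs with search engines is an active research direction.

- RL enhances LLM reasoning through multi-turn interaction with search engines.

- Existing RL-based search agents rely on a single LLM for both search planning and QA, which limits joint optimization.

- AI-SearchPlanner improves the performance of frozen QA models by focusing on search planning.

- AI-SearchPlanner decouples the architecture of the search planner and the generator.

- AI-SearchPlanner applies dual-reward alignment to search planning.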
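The abstract's "Pareto optimization of planning utility and cost" rests on the general notion of a Pareto front: the set of options that no other option beats on both objectives. The sketch below shows only that generic concept, not AI-SearchPlanner's training procedure; the function name `pareto_front` and the `(utility, cost)` values are hypothetical.

```python
def pareto_front(points):
    """Return the non-dominated (utility, cost) pairs.

    A point is dominated if another point has utility at least as high
    and cost at least as low, and is strictly better on one of the two."""
    front = []
    for i, (u, c) in enumerate(points):
        dominated = any(
            (u2 >= u and c2 <= c) and (u2 > u or c2 < c)
            for j, (u2, c2) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((u, c))
    return front


# Hypothetical search plans: (answer utility, number of search calls).
PLANS = [(0.60, 1), (0.72, 3), (0.70, 4), (0.80, 6), (0.80, 8)]

print(pareto_front(PLANS))
```

Here the plan `(0.70, 4)` is dropped because `(0.72, 3)` is both more useful and cheaper, and `(0.80, 8)` is dropped because `(0.80, 6)` reaches the same utility at lower cost.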


Skill-Aligned Fairness in Multi-Agent Learning for Collaboration in Healthcare

Authors:Promise Osaine Ekpo, Brian La, Thomas Wiener, Saesha Agarwal, Arshia Agrawal, Gonzalo Gonzalez-Pumariega, Lekan P. Molu, Angelique Taylor

Fairness in multi-agent reinforcement learning (MARL) is often framed as a workload balance problem, overlooking agent expertise and the structured coordination required in real-world domains. In healthcare, equitable task allocation requires workload balance or expertise alignment to prevent burnout and overuse of highly skilled agents. Workload balance refers to distributing an approximately equal number of subtasks or equalised effort across healthcare workers, regardless of their expertise. We make two contributions to address this problem. First, we propose FairSkillMARL, a framework that defines fairness as the dual objective of workload balance and skill-task alignment. Second, we introduce MARLHospital, a customizable healthcare-inspired environment for modeling team compositions and energy-constrained scheduling impacts on fairness, as no existing simulators are well-suited for this problem. We conducted experiments to compare FairSkillMARL in conjunction with four standard MARL methods, and against two state-of-the-art fairness metrics. Our results suggest that fairness based solely on equal workload might lead to task-skill mismatches and highlight the need for more robust metrics that capture skill-task misalignment. Our work provides tools and a foundation for studying fairness in heterogeneous multi-agent systems where aligning effort with expertise is critical.


Summary

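Fairness in multi-agent reinforcement learning (MARL) is often framed as a workload balance problem, overlooking agent expertise and the structured coordination required in real-world domains. In healthcare, equitable task allocation requires workload balance or expertise alignment to prevent burnout and overuse of highly skilled agents. Workload balance means distributing an approximately equal number of subtasks, or equalised effort, across healthcare workers regardless of their expertise. We make two contributions. First, we propose FairSkillMARL, a framework that defines fairness as the dual objective of workload balance and skill-task alignment. Second, we introduce MARLHospital, a customizable healthcare-inspired environment for modeling how team composition and energy-constrained scheduling affect fairness, since no existing simulator is well suited to this problem. Our results suggest that fairness based solely on equal workload may lead to task-skill mismatches, and they highlight the need for more robust metrics that capture skill-task misalignment. The work provides tools and a foundation for studying fairness in heterogeneous multi-agent systems where aligning effort with expertise is critical.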

Key Takeaways

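- Fairness in MARL should consider agent expertise and structured coordination, not only workload balance.

- In healthcare, equitable task allocation must account for workload balance and skill alignment to prevent burnout and overuse of highly skilled agents.

- No existing simulator is well suited to this problem, so the authors introduce MARLHospital, a customizable healthcare-inspired environment for modeling how team composition and energy-constrained scheduling affect fairness.

- Fairness based solely on equal workload may lead to task-skill mismatches.

- More robust metrics are needed to capture skill-task misalignment.

- FairSkillMARL provides tools and a foundation for studying fairness in heterogeneous multi-agent systems.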
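To see why equal workload alone can hide a skill mismatch, consider a toy score that combines the two objectives named above. This is a hypothetical illustration and not the metric defined in FairSkillMARL: the functions, the weighting `alpha`, and the example agents and skills are all assumptions.

```python
from statistics import mean, pstdev


def workload_balance(task_counts):
    """1.0 when every agent has the same number of subtasks; lower as the spread grows."""
    m = mean(task_counts)
    if m == 0:
        return 1.0
    return 1.0 - min(1.0, pstdev(task_counts) / m)


def skill_alignment(assignments, skills):
    """Fraction of assigned subtasks whose required skill the agent actually has."""
    if not assignments:
        return 1.0
    matched = sum(1 for agent, required in assignments if required in skills[agent])
    return matched / len(assignments)


def fairness_score(assignments, skills, alpha=0.5):
    """Hypothetical dual objective: alpha weights balance, (1 - alpha) weights alignment."""
    counts = [sum(1 for a, _ in assignments if a == agent) for agent in skills]
    return alpha * workload_balance(counts) + (1 - alpha) * skill_alignment(assignments, skills)


# Made-up team: each agent maps to the set of subtask types it is trained for.
SKILLS = {"nurse": {"vitals"}, "surgeon": {"vitals", "surgery"}}
ALIGNED = [("nurse", "vitals"), ("surgeon", "surgery")]
MISMATCHED = [("nurse", "surgery"), ("surgeon", "vitals")]

print(fairness_score(ALIGNED, SKILLS), fairness_score(MISMATCHED, SKILLS))
```

Both allocations give each agent one subtask, so a workload-only metric rates them identically; only the alignment term separates them.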


Graph RAG as Human Choice Model: Building a Data-Driven Mobility Agent with Preference Chain

Authors:Kai Hu, Parfait Atchade-Adelomou, Carlo Adornetto, Adrian Mora-Carrero, Luis Alonso-Pastor, Ariel Noyman, Yubo Liu, Kent Larson

Understanding human behavior in urban environments is a crucial field within city sciences. However, collecting accurate behavioral data, particularly in newly developed areas, poses significant challenges. Recent advances in generative agents, powered by Large Language Models (LLMs), have shown promise in simulating human behaviors without relying on extensive datasets. Nevertheless, these methods often struggle with generating consistent, context-sensitive, and realistic behavioral outputs. To address these limitations, this paper introduces the Preference Chain, a novel method that integrates Graph Retrieval-Augmented Generation (RAG) with LLMs to enhance context-aware simulation of human behavior in transportation systems. Experiments conducted on the Replica dataset demonstrate that the Preference Chain outperforms a standard LLM in aligning with real-world transportation mode choices. The development of the Mobility Agent highlights potential applications of the proposed method in urban mobility modeling for emerging cities, personalized travel behavior analysis, and dynamic traffic forecasting. Despite limitations such as slow inference and the risk of hallucination, the method offers a promising framework for simulating complex human behavior in data-scarce environments, where traditional data-driven models struggle due to limited data availability.


Summary

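Understanding human behavior in urban environments is an important area of city science, but collecting accurate behavioral data is difficult, especially in newly developed areas. Generative agents powered by large language models (LLMs) have shown promise in simulating human behavior, yet they still struggle to produce consistent, context-sensitive, and realistic behavioral outputs. To address this, the paper proposes the Preference Chain, a method that combines Graph Retrieval-Augmented Generation (RAG) with LLMs to improve context-aware simulation of human behavior in transportation systems. Experiments on the Replica dataset show that the Preference Chain outperforms a standard LLM in aligning with real-world transportation mode choices. The authors also develop a Mobility Agent, which illustrates potential applications in urban mobility modeling, personalized travel behavior analysis, and dynamic traffic forecasting. Despite limitations such as slow inference and the risk of hallucination, the method offers a promising framework for simulating complex human behavior in data-scarce environments.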

Key Takeaways

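- Understanding human behavior in urban environments is an important area of city science, but collecting accurate behavioral data is challenging.

- LLM-powered generative agents show promise in simulating human behavior but struggle to produce consistent, context-sensitive, and realistic outputs.

- The Preference Chain combines Graph Retrieval-Augmented Generation (RAG) with LLMs to improve context-aware simulation of human behavior in transportation systems.

- On the Replica dataset, the Preference Chain outperforms a standard LLM in aligning with real-world transportation mode choices.

- The Mobility Agent illustrates potential applications in urban mobility modeling, personalized travel behavior analysis, and dynamic traffic forecasting.

- Despite slow inference and the risk of hallucination, the method is promising for simulating complex human behavior in data-scarce environments.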


First Steps Towards Overhearing LLM Agents: A Case Study With Dungeons & Dragons Gameplay

Authors:Andrew Zhu, Evan Osgood, Chris Callison-Burch

Much work has been done on conversational LLM agents which directly assist human users with tasks. We present an alternative paradigm for interacting with LLM agents, which we call “overhearing agents”. These overhearing agents do not actively participate in conversation – instead, they “listen in” on human-to-human conversations and perform background tasks or provide suggestions to assist the user. In this work, we explore the overhearing agents paradigm through the lens of Dungeons & Dragons gameplay. We present an in-depth study using large multimodal audio-language models as overhearing agents to assist a Dungeon Master. We perform a human evaluation to examine the helpfulness of such agents and find that some large audio-language models have the emergent ability to perform overhearing agent tasks using implicit audio cues. Finally, we release Python libraries and our project code to support further research into the overhearing agents paradigm at https://github.com/zhudotexe/overhearing_agents.


Paper and project links

PDF 9 pages, 5 figures. COLM 2025 Workshop on AI Agents

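Summary: The paper proposes an alternative way of interacting with LLM agents, called "overhearing agents". These agents do not actively participate in the conversation; instead, they "listen in" on human-to-human conversations and perform background tasks or offer suggestions to assist the user. An in-depth study uses large multimodal audio-language models as overhearing agents that assist a Dungeon Master in Dungeons & Dragons gameplay. A human evaluation finds that some large audio-language models have the emergent ability to perform overhearing agent tasks using implicit audio cues. Python libraries and project code are released to support further research.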

Key Takeaways:

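- The paper introduces "overhearing agents", which listen in on conversations and assist the user by performing background tasks or offering suggestions.

- The paradigm is explored in depth through Dungeons & Dragons gameplay.

- A human evaluation examines how helpful large multimodal audio-language models are as overhearing agents assisting a Dungeon Master.

- Some large audio-language models show an emergent ability to perform overhearing agent tasks from implicit audio cues.

- The work is framed as a first step, with Dungeons & Dragons serving as a case study for the broader overhearing agents paradigm.

- Python libraries and project code are released to support further research on overhearing agents.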


Advancing Mobile GUI Agents: A Verifier-Driven Approach to Practical Deployment

Authors:Gaole Dai, Shiqi Jiang, Ting Cao, Yuanchun Li, Yuqing Yang, Rui Tan, Mo Li, Lili Qiu

We propose V-Droid, a mobile GUI task automation agent. Unlike previous mobile agents that utilize Large Language Models (LLMs) as generators to directly generate actions at each step, V-Droid employs LLMs as verifiers to evaluate candidate actions before making final decisions. To realize this novel paradigm, we introduce a comprehensive framework for constructing verifier-driven mobile agents: the discretized action space construction coupled with the prefilling-only workflow to accelerate the verification process, the pair-wise progress preference training to significantly enhance the verifier’s decision-making capabilities, and the scalable human-agent joint annotation scheme to efficiently collect the necessary data at scale. V-Droid obtains a substantial task success rate across several public mobile task automation benchmarks: 59.5% on AndroidWorld, 38.3% on AndroidLab, and 49% on MobileAgentBench, surpassing existing agents by 5.2%, 2.1%, and 9%, respectively. Furthermore, V-Droid achieves a remarkably low latency of 4.3s per step, which is 6.1X faster compared with existing mobile agents. The source code is available at https://github.com/V-Droid-Agent/V-Droid.


Paper and project links

PDF add baselines, add source code link

Summary:

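We propose V-Droid, a mobile GUI task automation agent. Unlike previous mobile agents that use large language models (LLMs) as generators, V-Droid uses LLMs as verifiers that evaluate candidate actions before a final decision is made. To realize this paradigm, we introduce a comprehensive framework for building verifier-driven mobile agents: discretized action space construction coupled with a prefilling-only workflow to accelerate verification, pair-wise progress preference training to significantly strengthen the verifier's decision-making, and a scalable human-agent joint annotation scheme to collect the necessary data efficiently at scale. V-Droid achieves task success rates of 59.5% on AndroidWorld, 38.3% on AndroidLab, and 49% on MobileAgentBench, surpassing existing agents by 5.2%, 2.1%, and 9%, respectively. Its latency is 4.3s per step, 6.1X faster than existing mobile agents. The source code is available at https://github.com/V-Droid-Agent/V-Droid.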

Key Takeaways:

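- V-Droid is a mobile GUI task automation agent that follows a verifier-driven paradigm, using LLMs to evaluate candidate actions rather than to generate them.

- Its framework combines discretized action space construction, a prefilling-only workflow, and pair-wise progress preference training.

- V-Droid achieves task success rates of 59.5% on AndroidWorld, 38.3% on AndroidLab, and 49% on MobileAgentBench.

- V-Droid has a latency of 4.3s per step, 6.1X faster than existing mobile agents.

- A scalable human-agent joint annotation scheme collects the necessary training data at scale.

- The source code is publicly available, supporting further research and development.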
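The verifier-driven pattern described above (enumerate candidate actions, score each, act on the best) can be sketched in a few lines. This is an illustration only and not V-Droid's code: `select_action` and `toy_verifier` are hypothetical names, and the keyword-overlap scorer is a stand-in for an LLM verifier.

```python
def select_action(candidates, verifier):
    """Score every candidate with `verifier` and return (best_action, best_score).
    Ties are resolved in favour of the earliest candidate."""
    scored = [(verifier(action), action) for action in candidates]
    best_score, best_action = max(scored, key=lambda pair: pair[0])
    return best_action, best_score


def toy_verifier(task):
    """Stand-in for an LLM verifier: fraction of task words found in the action text."""
    task_words = set(task.lower().split())

    def score(action):
        overlap = task_words & set(action.lower().split())
        return len(overlap) / max(1, len(task_words))

    return score


# Hypothetical discretized action space for the current screen.
CANDIDATES = ["tap Settings icon", "tap Camera icon", "scroll down"]

print(select_action(CANDIDATES, toy_verifier("open settings")))
```

Because the action space is discrete, each candidate can be scored independently, which is what makes the verification step amenable to batching.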


BRIDGE: Bootstrapping Text to Control Time-Series Generation via Multi-Agent Iterative Optimization and Diffusion Modeling

Authors:Hao Li, Yu-Hao Huang, Chang Xu, Viktor Schlegel, Renhe Jiang, Riza Batista-Navarro, Goran Nenadic, Jiang Bian

Time-series Generation (TSG) is a prominent research area with broad applications in simulations, data augmentation, and counterfactual analysis. While existing methods have shown promise in unconditional single-domain TSG, real-world applications demand cross-domain approaches capable of controlled generation tailored to domain-specific constraints and instance-level requirements. In this paper, we argue that text can provide semantic insights, domain information, and instance-specific temporal patterns to guide and improve TSG. We introduce "Text-Controlled TSG", a task focused on generating realistic time series by incorporating textual descriptions. To address data scarcity in this setting, we propose a novel LLM-based Multi-Agent framework that synthesizes diverse, realistic text-to-TS datasets. Furthermore, we introduce BRIDGE, a hybrid text-controlled TSG framework that integrates semantic prototypes with text descriptions to support domain-level guidance. This approach achieves state-of-the-art generation fidelity on 11 of 12 datasets, and improves controllability by up to 12% on MSE and 6% on MAE compared to generation without text input, highlighting its potential for generating tailored time-series data.

Paper and project links

PDF ICML 2025 Main Conference

Summary

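This paper introduces the research area of Time-series Generation (TSG) and points out that existing methods are largely limited to unconditional single-domain TSG. To meet the real-world demand for cross-domain TSG, it proposes the Text-Controlled TSG task, which generates realistic time series by incorporating textual descriptions. To address data scarcity, it proposes an LLM-based multi-agent framework that synthesizes diverse, realistic text-to-TS datasets. It also introduces BRIDGE, a hybrid text-controlled TSG framework that integrates semantic prototypes with text descriptions to support domain-level guidance. The framework achieves state-of-the-art generation fidelity on 11 of 12 datasets and improves controllability by up to 12% on MSE and 6% on MAE compared to generation without text input.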

Key Takeaways

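- Time-series Generation (TSG) is a research area with broad applications, including simulations, data augmentation, and counterfactual analysis.

- Existing methods have shown promise in unconditional single-domain TSG, but real-world applications call for cross-domain approaches.

- Text can provide semantic insights, domain information, and instance-specific temporal patterns to guide and improve TSG.

- The Text-Controlled TSG task generates realistic time series by incorporating textual descriptions.

- An LLM-based multi-agent framework synthesizes diverse, realistic text-to-TS datasets to address data scarcity.

- BRIDGE, a hybrid text-controlled TSG framework, integrates semantic prototypes with text descriptions to improve the controllability and fidelity of the generated time series.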
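
The MSE and MAE figures quoted above are relative improvements over generation without text input. The sketch below shows one plausible way to compute such a figure; the paper's exact evaluation protocol is not described in this digest, so the helper names and the toy series are illustrative assumptions, not results from the paper.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length series."""
    if len(pred) != len(target) or not pred:
        raise ValueError("series must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error between two equal-length series."""
    if len(pred) != len(target) or not pred:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def relative_improvement(baseline_err: float, new_err: float) -> float:
    """Fraction by which new_err is lower than baseline_err."""
    return (baseline_err - new_err) / baseline_err


# Toy data, made up purely for illustration.
target = [1.0, 2.0, 3.0, 4.0]
without_text = [1.5, 2.5, 2.5, 4.5]
with_text = [1.25, 2.25, 2.75, 4.25]

mse_gain = relative_improvement(mse(without_text, target), mse(with_text, target))
mae_gain = relative_improvement(mae(without_text, target), mae(with_text, target))
print(f"MSE improvement: {mse_gain:.0%}, MAE improvement: {mae_gain:.0%}")
```

Because MSE squares each error, the same change in a generated series usually produces a larger relative gain on MSE than on MAE, which is consistent with the two percentages being reported separately.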

Hierarchical Multi-agent Reinforcement Learning for Cyber Network Defense

Authors:Aditya Vikram Singh, Ethan Rathbun, Emma Graham, Lisa Oakley, Simona Boboila, Alina Oprea, Peter Chin

Recent advances in multi-agent reinforcement learning (MARL) have created opportunities to solve complex real-world tasks. Cybersecurity is a notable application area, where defending networks against sophisticated adversaries remains a challenging task typically performed by teams of security operators. In this work, we explore novel MARL strategies for building autonomous cyber network defenses that address challenges such as large policy spaces, partial observability, and stealthy, deceptive adversarial strategies. To facilitate efficient and generalized learning, we propose a hierarchical Proximal Policy Optimization (PPO) architecture that decomposes the cyber defense task into specific sub-tasks like network investigation and host recovery. Our approach involves training sub-policies for each sub-task using PPO enhanced with cybersecurity domain expertise. These sub-policies are then leveraged by a master defense policy that coordinates their selection to solve complex network defense tasks. Furthermore, the sub-policies can be fine-tuned and transferred with minimal cost to defend against shifts in adversarial behavior or changes in network settings. We conduct extensive experiments using CybORG Cage 4, the state-of-the-art MARL environment for cyber defense. Comparisons with multiple baselines across different adversaries show that our hierarchical learning approach achieves top performance in terms of convergence speed, episodic return, and several interpretable metrics relevant to cybersecurity, including the fraction of clean machines on the network, precision, and false positives.

Paper and project links

PDF 13 pages, 7 figures, RLC Paper

Summary

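Recent advances in multi-agent reinforcement learning (MARL) have created opportunities to solve complex real-world tasks. This paper applies MARL strategies to cybersecurity in order to build autonomous cyber network defenses. It proposes a hierarchical Proximal Policy Optimization (PPO) architecture that decomposes the cyber defense task into sub-tasks such as network investigation and host recovery. A sub-policy is trained for each sub-task using PPO enhanced with cybersecurity domain expertise. A master defense policy then coordinates the selection of sub-policies to solve complex network defense tasks. Experiments show that the approach achieves top performance in convergence speed, episodic return, and interpretable metrics relevant to cybersecurity.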

Key Takeaways

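- Multi-agent reinforcement learning (MARL) is well suited to complex real-world tasks, and cybersecurity is a notable application area.

- A hierarchical Proximal Policy Optimization (PPO) architecture decomposes the network defense task and trains a sub-policy for each sub-task.

- PPO is enhanced with cybersecurity domain expertise to make learning more efficient.

- A master defense policy coordinates the selection of sub-policies to defend the network.

- Sub-policies can be fine-tuned and transferred at minimal cost in response to shifts in adversarial behavior or changes in network settings.

- Experiments in the CybORG Cage 4 environment show top performance in convergence speed, episodic return, and interpretable metrics such as the fraction of clean machines on the network, precision, and false positives.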
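
The two-level control flow described above (a master policy that picks a sub-policy, which in turn picks a concrete action) can be sketched as follows. This is only a structural illustration under stated assumptions: the observation keys, action names, and hand-written rules are hypothetical placeholders for trained PPO policies, and nothing here uses the CybORG API.

```python
from typing import Callable, Dict

Observation = Dict[str, bool]
SubPolicy = Callable[[Observation], str]


def investigate(obs: Observation) -> str:
    """Hypothetical sub-policy for the network investigation sub-task."""
    return "analyse_host" if obs.get("suspicious_activity") else "monitor"


def recover(obs: Observation) -> str:
    """Hypothetical sub-policy for the host recovery sub-task."""
    return "restore_host" if obs.get("host_compromised") else "monitor"


SUB_POLICIES: Dict[str, SubPolicy] = {
    "investigate": investigate,
    "recover": recover,
}


def master_policy(obs: Observation) -> str:
    """Choose which sub-policy to run (a rule stands in for a trained policy)."""
    return "recover" if obs.get("host_compromised") else "investigate"


def act(obs: Observation) -> str:
    """Hierarchical step: the master selects a sub-policy, which selects the action."""
    chosen = master_policy(obs)
    return SUB_POLICIES[chosen](obs)


print(act({"host_compromised": True}))
print(act({"suspicious_activity": True}))
```

Keeping the sub-policies behind a common interface is what would allow one of them to be fine-tuned or swapped without retraining the others, which matches the transfer property the abstract describes.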

HyperAgent: Generalist Software Engineering Agents to Solve Coding Tasks at Scale

Authors:Huy Nhat Phan, Tien N. Nguyen, Phong X. Nguyen, Nghi D. Q. Bui

Large Language Models (LLMs) have revolutionized software engineering (SE), showcasing remarkable proficiency in various coding tasks. Despite recent advancements that have enabled the creation of autonomous software agents utilizing LLMs for end-to-end development tasks, these systems are typically designed for specific SE functions. We introduce HyperAgent, an innovative generalist multi-agent system designed to tackle a wide range of SE tasks across different programming languages by mimicking the workflows of human developers. HyperAgent features four specialized agents (Planner, Navigator, Code Editor, and Executor) capable of handling the entire lifecycle of SE tasks, from initial planning to final verification. HyperAgent sets new benchmarks in diverse SE tasks, including GitHub issue resolution on the renowned SWE-Bench benchmark, outperforming robust baselines. Furthermore, HyperAgent demonstrates exceptional performance in repository-level code generation (RepoExec) and fault localization and program repair (Defects4J), often surpassing state-of-the-art baselines.

Paper and project links

PDF 49 pages

Summary

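Large Language Models (LLMs) have transformed software engineering (SE), showing strong proficiency across a variety of coding tasks. Although autonomous software agents that use LLMs for end-to-end development tasks have recently appeared, they are typically limited to specific SE functions. We introduce HyperAgent, a generalist multi-agent system that tackles a wide range of SE tasks across different programming languages by mimicking the workflows of human developers. HyperAgent consists of four specialized agents (Planner, Navigator, Code Editor, and Executor) that handle the entire lifecycle of an SE task, from initial planning to final verification. HyperAgent sets new benchmarks in diverse SE tasks, including GitHub issue resolution on SWE-Bench, where it outperforms robust baselines. It also performs strongly in repository-level code generation (RepoExec) and in fault localization and program repair (Defects4J), often surpassing state-of-the-art baselines.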

Key Takeaways

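- Large Language Models have transformed software engineering and show strong proficiency in coding tasks.

- HyperAgent is a multi-agent system designed to tackle a wide range of software engineering tasks across different programming languages.

- HyperAgent consists of four specialized agents (Planner, Navigator, Code Editor, and Executor) that together cover the entire task lifecycle.

- HyperAgent performs well on diverse software engineering tasks, including GitHub issue resolution (SWE-Bench), repository-level code generation (RepoExec), and fault localization and program repair (Defects4J).

- HyperAgent often surpasses state-of-the-art baselines on these tasks.

- HyperAgent is designed to mimic the workflows of human developers, which makes it a generalist system that adapts to different software engineering tasks.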
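
As a rough illustration of how four specialized agents might cover a task from planning to verification, the sketch below passes a shared task state through four stages in a fixed order. The linear hand-off, the `TaskState` type, and the stage functions are assumptions made for this example; the digest does not describe how HyperAgent actually coordinates its agents.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TaskState:
    """Shared state handed from one agent to the next (hypothetical)."""
    issue: str
    log: List[str] = field(default_factory=list)


def planner(state: TaskState) -> TaskState:
    state.log.append(f"plan: outline steps for '{state.issue}'")
    return state


def navigator(state: TaskState) -> TaskState:
    state.log.append("navigate: locate relevant files and symbols")
    return state


def code_editor(state: TaskState) -> TaskState:
    state.log.append("edit: apply a candidate patch")
    return state


def executor(state: TaskState) -> TaskState:
    state.log.append("execute: run tests to verify the patch")
    return state


# Stage order is an assumption for illustration only.
PIPELINE: List[Callable[[TaskState], TaskState]] = [
    planner, navigator, code_editor, executor,
]


def run(issue: str) -> TaskState:
    """Pass the task through every stage in order and return the final state."""
    state = TaskState(issue)
    for stage in PIPELINE:
        state = stage(state)
    return state


result = run("fix off-by-one error in pagination")
for entry in result.log:
    print(entry)
```

In a real system each stage would wrap an LLM call with its own tools, and a failed verification would typically loop back to an earlier stage rather than terminate.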
