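⚠️ All summaries below were generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.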

🔴 Note: do not use this for serious academic purposes. It is intended only for initial screening before reading the papers.


Updated 2025-09-10

Impact of Labeling Inaccuracy and Image Noise on Tooth Segmentation in Panoramic Radiographs using Federated, Centralized and Local Learning

Authors:Johan Andreas Balle Rubak, Khuram Naveed, Sanyam Jain, Lukas Esterle, Alexandros Iosifidis, Ruben Pauwels

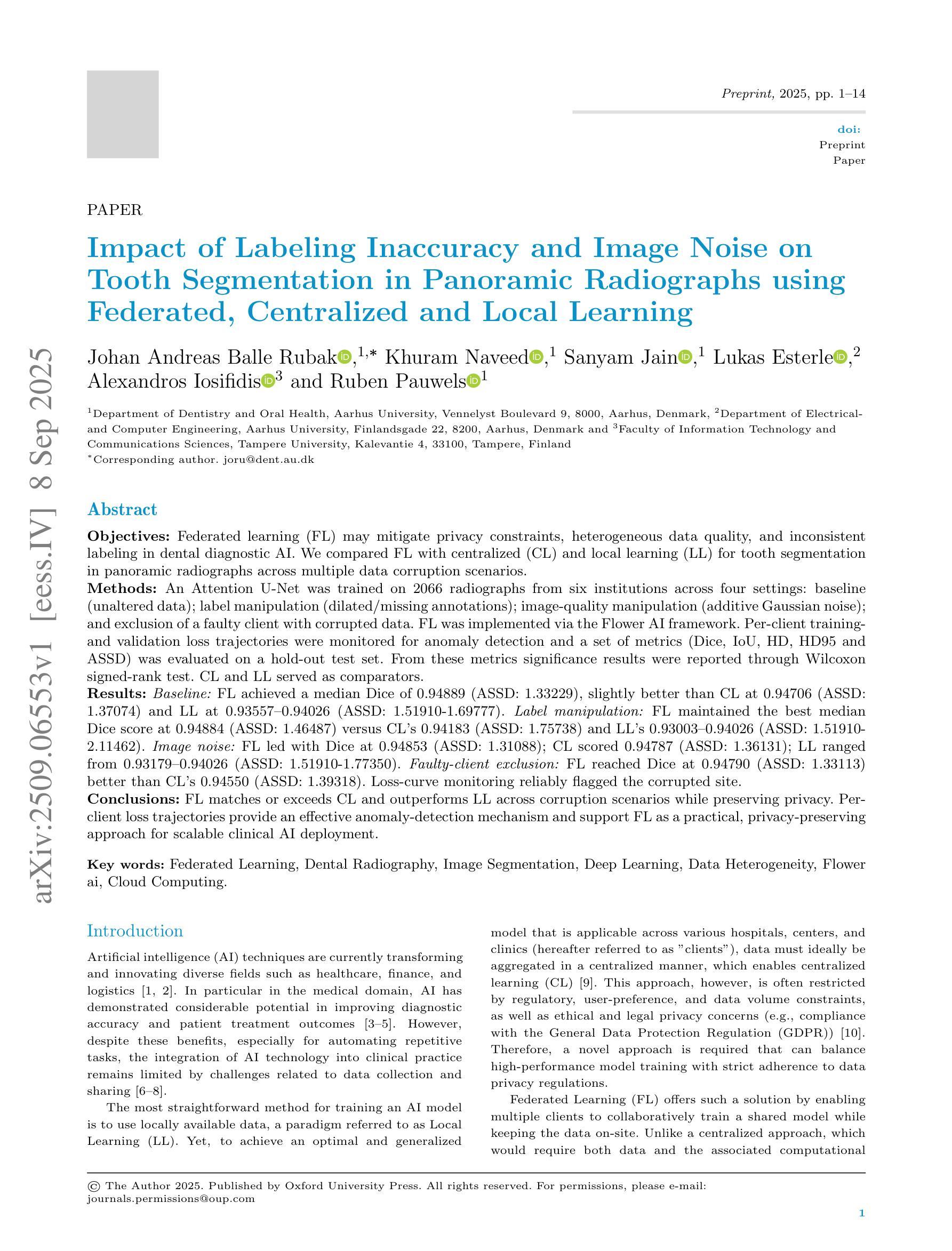

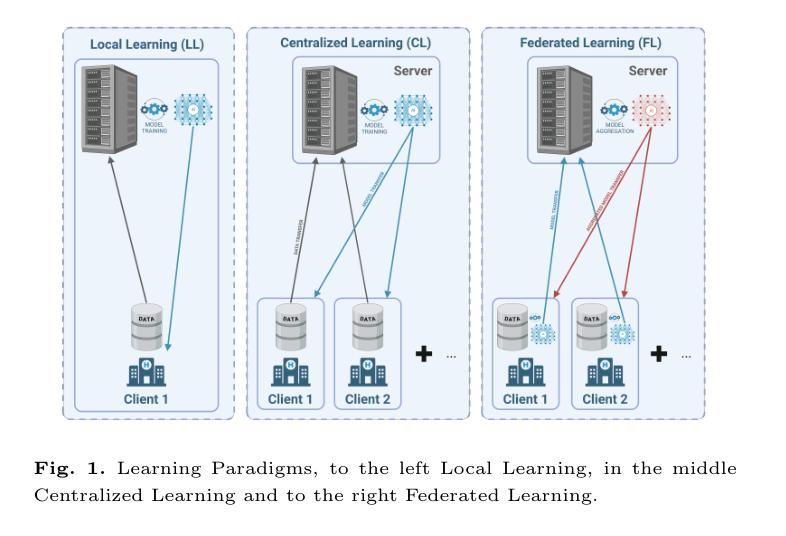

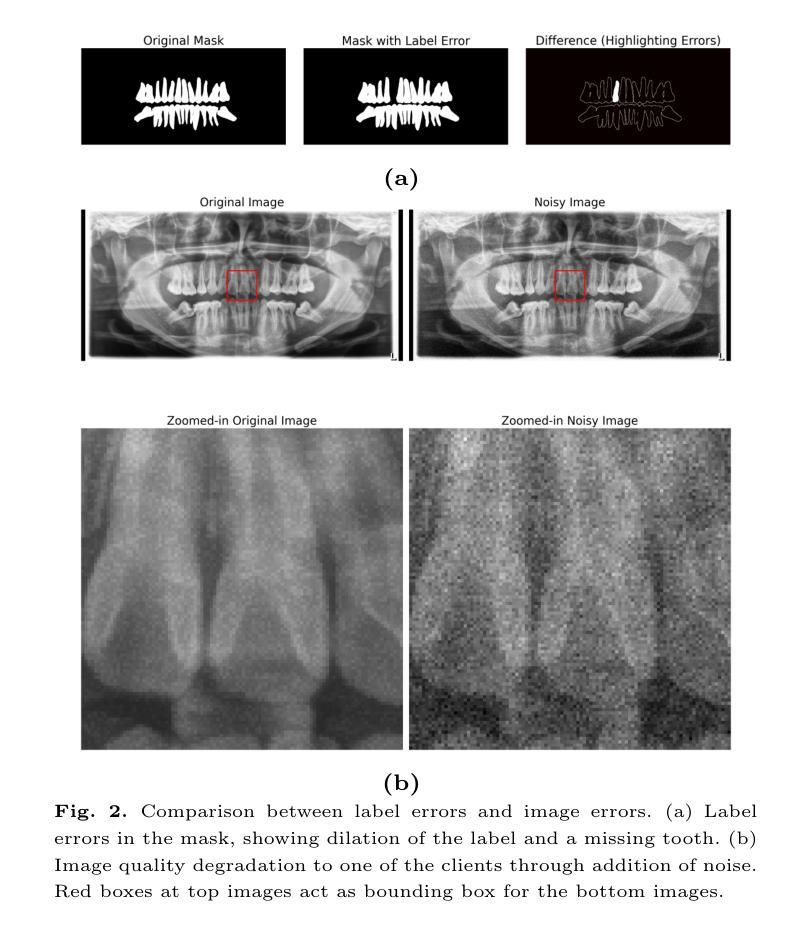

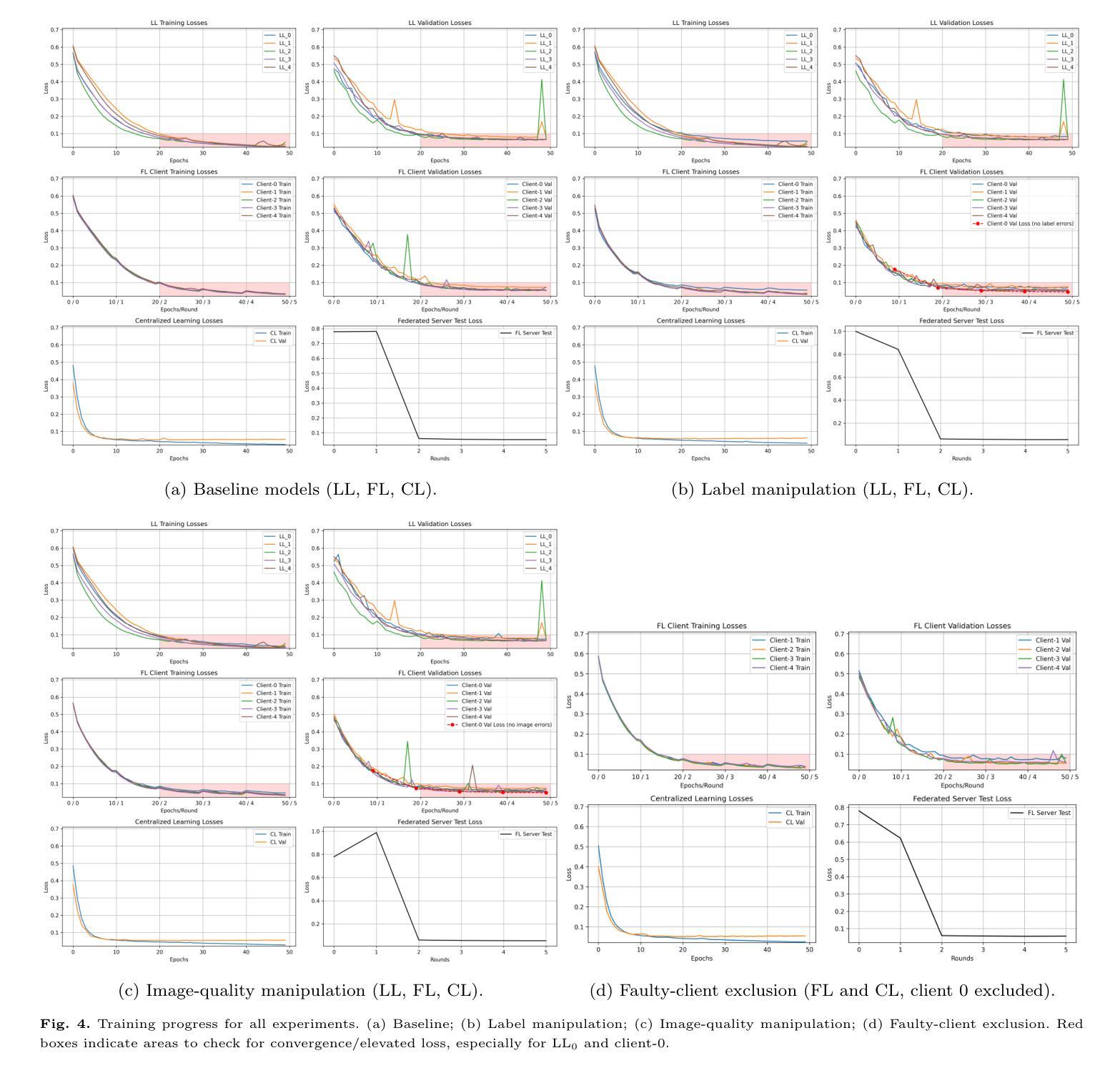

Objectives: Federated learning (FL) may mitigate privacy constraints, heterogeneous data quality, and inconsistent labeling in dental diagnostic AI. We compared FL with centralized (CL) and local learning (LL) for tooth segmentation in panoramic radiographs across multiple data corruption scenarios.

Methods: An Attention U-Net was trained on 2066 radiographs from six institutions across four settings: baseline (unaltered data); label manipulation (dilated/missing annotations); image-quality manipulation (additive Gaussian noise); and exclusion of a faulty client with corrupted data. FL was implemented via the Flower AI framework. Per-client training- and validation-loss trajectories were monitored for anomaly detection and a set of metrics (Dice, IoU, HD, HD95 and ASSD) was evaluated on a hold-out test set. From these metrics significance results were reported through Wilcoxon signed-rank test. CL and LL served as comparators.

Results: Baseline: FL achieved a median Dice of 0.94889 (ASSD: 1.33229), slightly better than CL at 0.94706 (ASSD: 1.37074) and LL at 0.93557-0.94026 (ASSD: 1.51910-1.69777). Label manipulation: FL maintained the best median Dice score at 0.94884 (ASSD: 1.46487) versus CL’s 0.94183 (ASSD: 1.75738) and LL’s 0.93003-0.94026 (ASSD: 1.51910-2.11462). Image noise: FL led with Dice at 0.94853 (ASSD: 1.31088); CL scored 0.94787 (ASSD: 1.36131); LL ranged from 0.93179-0.94026 (ASSD: 1.51910-1.77350). Faulty-client exclusion: FL reached Dice at 0.94790 (ASSD: 1.33113) better than CL’s 0.94550 (ASSD: 1.39318). Loss-curve monitoring reliably flagged the corrupted site.

Conclusions: FL matches or exceeds CL and outperforms LL across corruption scenarios while preserving privacy. Per-client loss trajectories provide an effective anomaly-detection mechanism and support FL as a practical, privacy-preserving approach for scalable clinical AI deployment.
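The abstract reports Dice and IoU among its overlap metrics. As a rough illustration of what those two numbers measure, here is a minimal pure-Python sketch for binary masks. The function names, the nested-list mask representation, and the convention of returning 1.0 for two empty masks are assumptions made for this example; they are not taken from the paper's code.

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]  # assumed representation: rows of 0/1 pixels


def overlap_counts(pred: Mask, target: Mask) -> Tuple[int, int, int]:
    """Return (intersection, predicted foreground, target foreground) pixel counts."""
    inter = pred_fg = target_fg = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            pred_fg += 1 if p else 0
            target_fg += 1 if t else 0
            inter += 1 if (p and t) else 0
    return inter, pred_fg, target_fg


def dice(pred: Mask, target: Mask) -> float:
    """Dice coefficient: 2 * |A ∩ B| / (|A| + |B|)."""
    inter, pred_fg, target_fg = overlap_counts(pred, target)
    total = pred_fg + target_fg
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * inter / total


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over union: |A ∩ B| / |A ∪ B|."""
    inter, pred_fg, target_fg = overlap_counts(pred, target)
    union = pred_fg + target_fg - inter
    return 1.0 if union == 0 else inter / union


# Toy example: 3 predicted pixels, 3 annotated pixels, 2 shared.
pred_mask = [[0, 1, 1],
             [0, 1, 0]]
true_mask = [[0, 1, 0],
             [0, 1, 1]]
print(round(dice(pred_mask, true_mask), 4), iou(pred_mask, true_mask))
```

Dice weights the intersection twice, so it is never lower than IoU for the same pair of masks. The boundary-based metrics in the abstract (HD, HD95, ASSD) measure surface distances instead and are not covered by this sketch.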



Summary

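This paper evaluates federated learning (FL) for tooth segmentation in panoramic dental radiographs and compares it with centralized learning (CL) and local learning (LL). An Attention U-Net was trained on 2066 panoramic radiographs from six institutions, and the resulting models were evaluated under four settings: baseline, label manipulation, image-quality manipulation, and exclusion of a faulty client. FL remained stable across all four, with a median Dice between 0.94790 and 0.94889 and an ASSD between 1.31088 and 1.46487, matching or slightly exceeding CL and outperforming LL. Because raw images stay at each institution, FL also preserves privacy. Overall, the paper supports FL as an effective and practical approach to dental image segmentation when data quality and labeling vary between sites.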
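The corruption scenarios summarized above (dilated or missing annotations, additive Gaussian noise) can be simulated in a few lines. The sketch below is only an illustration under stated assumptions: images are nested lists of intensities normalized to [0, 1], dilation uses a 3x3 square structuring element, and "missing annotations" is modeled in its simplest form as an emptied mask. The noise level, kernel size, and function names are hypothetical and are not the paper's settings.

```python
import random
from typing import List

Image = List[List[float]]  # assumed: intensities normalized to [0, 1]
Mask = List[List[int]]     # assumed: binary tooth mask


def add_gaussian_noise(image: Image, sigma: float, seed: int = 0) -> Image:
    """Add zero-mean Gaussian noise to every pixel and clip to [0, 1]."""
    rng = random.Random(seed)
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


def dilate_mask(mask: Mask, iterations: int = 1) -> Mask:
    """Binary dilation with a 3x3 square structuring element."""
    height = len(mask)
    width = len(mask[0]) if mask else 0
    current = [row[:] for row in mask]
    for _ in range(iterations):
        grown = [row[:] for row in current]
        for y in range(height):
            for x in range(width):
                if not current[y][x]:
                    continue
                # Switch on the 8-neighbourhood of every foreground pixel.
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < height and 0 <= nx < width:
                            grown[ny][nx] = 1
        current = grown
    return current


def drop_annotations(mask: Mask) -> Mask:
    """Simplest 'missing annotation' case: return an empty mask."""
    return [[0] * len(row) for row in mask]


# Toy example: a single annotated pixel grows into a 3x3 block.
seed_mask = [[0] * 5 for _ in range(5)]
seed_mask[2][2] = 1
print(sum(map(sum, dilate_mask(seed_mask))))
```

Applying such corruptions to one client's data while leaving the others untouched reproduces the general shape of the experiment: a single faulty site inside an otherwise clean federation.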

Key Insights

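- Federated learning (FL) performed well on tooth segmentation in panoramic dental radiographs, matching or exceeding centralized learning (CL) and outperforming local learning (LL).

- FL kept its performance stable across several data-corruption scenarios, including inaccurate labels, degraded image quality, and a client with corrupted data.

- Trained with an Attention U-Net, the FL model scored well on all reported evaluation metrics.

- FL preserves privacy, which gives it practical value.

- Monitoring each client's training- and validation-loss trajectories detected anomalies effectively, which supports using FL in scalable clinical AI deployment.

- FL's strength is that it copes with varied data problems while preserving privacy, making it a practical approach for clinical AI deployment.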
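The insight about per-client loss trajectories can be illustrated with a simple outlier check. The source only states that the trajectories were monitored and that the corrupted site was flagged; it does not describe a detection rule. The rule below (mean validation loss over the last few rounds, compared across clients with a median/MAD score) is therefore an assumption, and the client names, loss values, and threshold are made up for the example.

```python
from statistics import median
from typing import Dict, List


def flag_anomalous_clients(val_losses: Dict[str, List[float]],
                           last_rounds: int = 3,
                           threshold: float = 3.0) -> List[str]:
    """Flag clients whose recent validation loss is a robust high outlier.

    Assumes every client has at least one recorded loss value.
    """
    # Mean validation loss over the most recent rounds, per client.
    recent = {}
    for client, losses in val_losses.items():
        tail = losses[-last_rounds:]
        recent[client] = sum(tail) / len(tail)

    # Robust centre and spread across clients (median and MAD).
    center = median(recent.values())
    mad = median(abs(value - center) for value in recent.values())
    scale = mad if mad > 0 else 1e-12  # avoid division by zero

    # Only losses far above the federation's median are flagged.
    return sorted(client for client, value in recent.items()
                  if (value - center) / scale > threshold)


# Hypothetical trajectories: five healthy sites and one that fails to converge.
loss_history = {
    "site_a": [0.40, 0.25, 0.18, 0.15, 0.14],
    "site_b": [0.42, 0.27, 0.20, 0.16, 0.15],
    "site_c": [0.39, 0.24, 0.19, 0.16, 0.14],
    "site_d": [0.41, 0.26, 0.18, 0.15, 0.13],
    "site_e": [0.43, 0.28, 0.21, 0.17, 0.15],
    "site_f": [0.45, 0.44, 0.46, 0.45, 0.47],
}
print(flag_anomalous_clients(loss_history))
```

A median-based score is used here so that one bad client cannot inflate the spread estimate and hide itself, which a mean and standard deviation over only six clients would risk.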
