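⚠️ All summaries below are generated by a large language model. They may contain errors, are provided for reference only, and should be used with caution.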

🔴 Note: do not use this in serious academic settings. It is intended only for preliminary screening before reading a paper!


Updated 2025-09-30

No-Reference Image Contrast Assessment with Customized EfficientNet-B0

Authors:Javad Hassannataj Joloudari, Bita Mesbahzadeh, Omid Zare, Emrah Arslan, Roohallah Alizadehsani, Hossein Moosaei

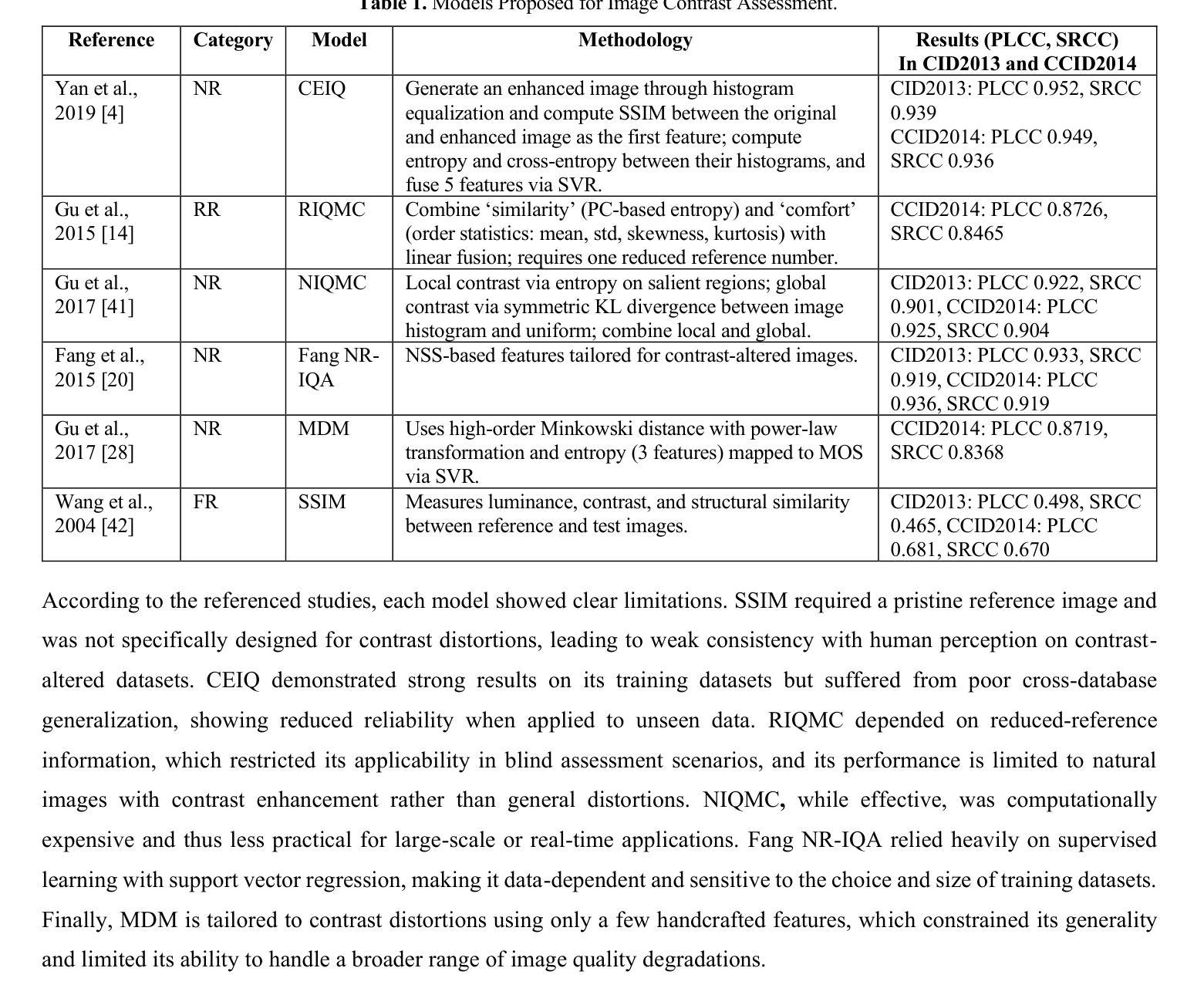

Image contrast is a fundamental factor in visual perception and plays a vital role in overall image quality. However, most no-reference image quality assessment (NR-IQA) models struggle to evaluate contrast distortions accurately under diverse real-world conditions. In this study, we propose a deep-learning-based framework for blind contrast quality assessment by customizing and fine-tuning three pre-trained architectures, EfficientNet-B0, ResNet18, and MobileNetV2, to predict perceptual Mean Opinion Scores (MOS). We also evaluate an additional model built on a Siamese network, which shows only a limited ability to capture perceptual contrast distortions. Each model is modified with a contrast-aware regression head and trained end to end using targeted data augmentations on two benchmark datasets, CID2013 and CCID2014, which contain synthetic and authentic contrast distortions. Performance is evaluated using the Pearson Linear Correlation Coefficient (PLCC) and the Spearman Rank Order Correlation Coefficient (SRCC), which measure the agreement between predicted and human-rated scores. Among the three models, our customized EfficientNet-B0 achieves state-of-the-art performance, with PLCC = 0.9286 and SRCC = 0.9178 on CCID2014 and PLCC = 0.9581 and SRCC = 0.9369 on CID2013, surpassing traditional methods and outperforming other deep baselines. These results highlight the model's robustness and effectiveness in capturing perceptual contrast distortion. Overall, the proposed method demonstrates that contrast-aware adaptation of lightweight pre-trained networks can yield a high-performing, scalable solution for no-reference contrast quality assessment that is suitable for real-time and resource-constrained applications.
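The two evaluation metrics named in the abstract can be illustrated with a minimal, standard-library sketch. PLCC is the Pearson correlation of the raw scores, and SRCC is the Pearson correlation of their ranks. The function names and the MOS and prediction values below are hypothetical, chosen only for illustration. They are not taken from the paper, and the authors' exact evaluation protocol may differ.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC) of two sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(vx * vy)


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation (SRCC): Pearson on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical human ratings (MOS) and model predictions for five images.
mos = [2.1, 3.4, 4.0, 1.5, 3.9]
pred = [2.4, 3.1, 4.2, 1.9, 3.6]

print("PLCC:", round(pearson(mos, pred), 4))
print("SRCC:", round(spearman(mos, pred), 4))
```

In this toy example the predictions order the images exactly as the human ratings do, so SRCC is 1 even though PLCC is below 1. PLCC is sensitive to how linearly the scores relate, while SRCC depends only on their ordering.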


Paper and project links

PDF 32 pages, 9 tables, 6 figures

Summary

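This paper addresses contrast assessment within no-reference image quality assessment (NR-IQA). Because most models struggle to evaluate contrast distortions accurately under real-world conditions, the authors propose a deep-learning-based framework for blind contrast quality assessment. They fine-tune three pre-trained architectures, EfficientNet-B0, ResNet18, and MobileNetV2, and build an additional model based on a Siamese network to assess contrast distortion. Each modified model has a contrast-aware regression head and is trained with targeted data augmentations on two benchmark datasets, CID2013 and CCID2014. Performance is measured with the Pearson Linear Correlation Coefficient (PLCC) and the Spearman Rank Order Correlation Coefficient (SRCC). The customized EfficientNet-B0 reaches PLCC = 0.9286 and SRCC = 0.9178 on CCID2014, and PLCC = 0.9581 and SRCC = 0.9369 on CID2013, surpassing traditional methods and other deep baselines. This indicates that the model is robust and effective at capturing perceptual contrast distortion. Overall, the results suggest that contrast-aware adaptation of lightweight pre-trained networks can yield a high-performing no-reference contrast quality assessment solution suited to real-time and resource-constrained applications.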

Key Takeaways

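- Assessing contrast remains a challenge for no-reference image quality assessment (NR-IQA).

- The paper proposes a deep-learning-based framework for blind contrast quality assessment that fine-tunes several pre-trained models.

- A contrast-aware regression head is added to improve each model's ability to capture contrast distortion.

- Targeted data augmentations are used to improve model performance.

- Experiments on two benchmark datasets, CID2013 and CCID2014, validate the models.

- The customized EfficientNet-B0 performs best, with PLCC = 0.9286 and SRCC = 0.9178 on CCID2014, and PLCC = 0.9581 and SRCC = 0.9369 on CID2013.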
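To make "contrast distortion" concrete, the following is a small, standard-library sketch of two generic global contrast changes (linear scaling about the mean and a gamma curve), together with RMS contrast as a simple measure. This digest does not say which distortions or augmentations the authors actually use, so the function names, the choice of operations, and the toy 2x2 image are assumptions for illustration only.

```python
import math


def rms_contrast(img):
    """RMS contrast: standard deviation of pixel intensities in [0, 1]."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def scale_contrast(img, factor):
    """Scale intensities about the image mean, then clip to [0, 1].

    factor < 1 lowers contrast, factor > 1 raises it.
    """
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [
        [min(1.0, max(0.0, mean + factor * (p - mean))) for p in row]
        for row in img
    ]


def gamma_transfer(img, gamma):
    """Apply a gamma curve; gamma > 1 darkens, gamma < 1 brightens."""
    return [[p ** gamma for p in row] for row in img]


# Toy grayscale "image" with intensities in [0, 1] (hypothetical data).
img = [[0.2, 0.4], [0.6, 0.8]]

low = scale_contrast(img, 0.5)   # reduced contrast
high = scale_contrast(img, 1.5)  # increased contrast
dark = gamma_transfer(img, 2.0)  # gamma-darkened version

for name, version in [("original", img), ("low", low), ("high", high), ("dark", dark)]:
    print(name, round(rms_contrast(version), 4))
```

A no-reference model of the kind described above would have to predict the perceived quality of each such version without access to the original image.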
