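⚠️ All summaries below are generated by a large language model. They may contain errors, so treat them as reference only and use them with caution.

🔴 Note: do not use this for serious academic purposes. It is intended only as an initial screen before reading the paper.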


Updated 2025-10-01

Deep Learning for Oral Health: Benchmarking ViT, DeiT, BEiT, ConvNeXt, and Swin Transformer

Authors: Ajo Babu George, Sadhvik Bathini, Niranjana S R

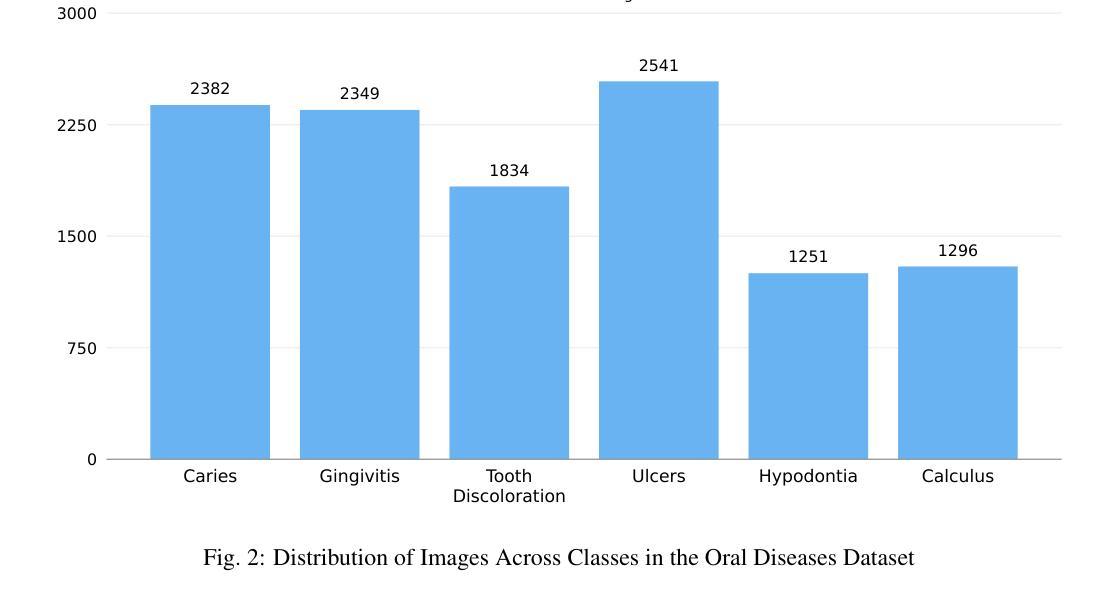

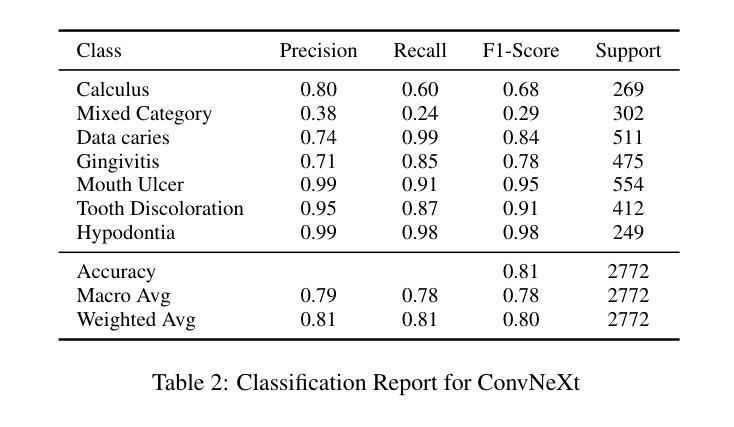

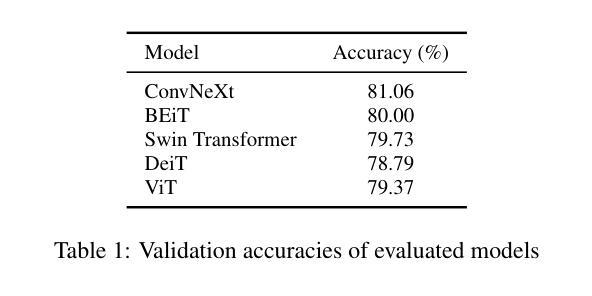

Objective: The aim of this study was to systematically evaluate and compare the performance of five state-of-the-art transformer-based architectures - Vision Transformer (ViT), Data-efficient Image Transformer (DeiT), ConvNeXt, Swin Transformer, and Bidirectional Encoder Representation from Image Transformers (BEiT) - for multi-class dental disease classification. The study specifically focused on addressing real-world challenges such as data imbalance, which is often overlooked in existing literature.

Study Design: The Oral Diseases dataset was used to train and validate the selected models. Performance metrics, including validation accuracy, precision, recall, and F1-score, were measured, with special emphasis on how well each architecture managed imbalanced classes.

Results: ConvNeXt achieved the highest validation accuracy at 81.06%, followed by BEiT at 80.00% and Swin Transformer at 79.73%, all demonstrating strong F1-scores. ViT and DeiT achieved accuracies of 79.37% and 78.79%, respectively, but both struggled particularly with caries-related classes.

Conclusions: ConvNeXt, Swin Transformer, and BEiT showed reliable diagnostic performance, making them promising candidates for clinical application in dental imaging. These findings provide guidance for model selection in future AI-driven oral disease diagnostic tools and highlight the importance of addressing data imbalance in real-world scenarios.


Paper and project links

PDF: 9 pages, 3 figures

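Summary

This paper systematically evaluates and compares five advanced transformer-based architectures (Vision Transformer, Data-efficient Image Transformer, ConvNeXt, Swin Transformer, and Bidirectional Encoder Representation from Image Transformers) on multi-class dental disease classification. It pays particular attention to real-world challenges that are often overlooked, such as data imbalance. The models were trained and validated on the Oral Diseases dataset and measured by validation accuracy, precision, recall, and F1-score. ConvNeXt led with a validation accuracy of 81.06%, followed by BEiT (80.00%) and Swin Transformer (79.73%). ViT and DeiT reached 79.37% and 78.79%, respectively, but both struggled with caries-related classes. Overall, ConvNeXt, Swin Transformer, and BEiT showed reliable diagnostic performance, making them candidates for clinical use in dental imaging. These findings offer guidance for model selection in future AI-driven oral disease diagnostic tools and underline the importance of addressing data imbalance in real-world settings.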

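Key Insights

- The study evaluates five advanced transformer-based architectures on multi-class dental disease classification.

- Data imbalance receives particular attention in the study.

- ConvNeXt achieved the highest validation accuracy (81.06%), followed by BEiT (80.00%) and Swin Transformer (79.73%).

- ViT and DeiT struggled with specific disease classes, notably the caries-related ones.

- ConvNeXt, Swin Transformer, and BEiT showed reliable performance in oral disease diagnosis, making them promising candidates for clinical application.

- The results offer guidance for model selection in AI-driven oral disease diagnostic tools.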
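The emphasis on imbalanced classes can be made concrete with a small sketch of why per-class precision, recall, and F1-score are reported alongside accuracy. The labels, counts, and helper names below are hypothetical illustrations, not the paper's data or code. The sketch only shows that a model can score high accuracy while still missing half of a minority class.

```python
from typing import Dict, Sequence


def per_class_metrics(
    y_true: Sequence[str], y_pred: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Compute precision, recall, and F1 for every class label."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    metrics = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(metrics: Dict[str, Dict[str, float]]) -> float:
    """Unweighted mean of per-class F1, so rare classes count equally."""
    return sum(m["f1"] for m in metrics.values()) / len(metrics)


# Hypothetical toy data (not from the paper): 8 "healthy" vs 2 "caries" samples.
y_true = ["healthy"] * 8 + ["caries"] * 2
# A model biased toward the majority class: it misses one of the two caries cases.
y_pred = ["healthy"] * 8 + ["healthy", "caries"]

if __name__ == "__main__":
    scores = per_class_metrics(y_true, y_pred)
    print("accuracy:", accuracy(y_true, y_pred))
    for label, m in scores.items():
        print(label, {k: round(v, 3) for k, v in m.items()})
    print("macro F1:", round(macro_f1(scores), 3))
```

In this toy case the accuracy is 0.9 even though recall on the minority class is only 0.5, which is the kind of gap that per-class metrics and macro-averaged F1 are meant to expose.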
