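⚠️ All summaries below were generated by a large language model and may contain errors. They are for reference only; use them with caution.

🔴 Note: do not use these summaries in serious academic settings. They are intended only for initial screening before reading the papers.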

Updated 2025-10-07

AdaRD-Key: Adaptive Relevance-Diversity Keyframe Sampling for Long-form Video Understanding

Authors:Xian Zhang, Zexi Wu, Zinuo Li, Hongming Xu, Luqi Gong, Farid Boussaid, Naoufel Werghi, Mohammed Bennamoun

Understanding long-form videos remains a significant challenge for vision–language models (VLMs) due to their extensive temporal length and high information density. Most current multimodal large language models (MLLMs) rely on uniform sampling, which often overlooks critical moments, leading to incorrect responses to queries. In parallel, many keyframe selection approaches impose rigid temporal spacing: once a frame is chosen, an exclusion window suppresses adjacent timestamps to reduce redundancy. While effective at limiting overlap, this strategy frequently misses short, fine-grained cues near important events. Other methods instead emphasize visual diversity but neglect query relevance. We propose AdaRD-Key, a training-free keyframe sampling module for query-driven long-form video understanding. AdaRD-Key maximizes a unified Relevance–Diversity Max-Volume (RD-MV) objective, combining a query-conditioned relevance score with a log-determinant diversity component to yield informative yet non-redundant frames. To handle broad queries with weak alignment to the video, AdaRD-Key employs a lightweight relevance-aware gating mechanism; when the relevance distribution indicates weak alignment, the method seamlessly shifts into a diversity-only mode, enhancing coverage without additional supervision. Our pipeline is training-free, computationally efficient (running in real time on a single GPU), and compatible with existing VLMs in a plug-and-play manner. Extensive experiments on LongVideoBench and Video-MME demonstrate state-of-the-art performance, particularly on long-form videos. Code available at https://github.com/Xian867/AdaRD-Key.
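
To make the two baseline strategies in the abstract concrete, here is a minimal sketch of uniform sampling and of top-k selection with a temporal exclusion window. The function names, the per-frame `scores` input, and the `window` parameter are illustrative assumptions; they are not taken from the paper or its code.

```python
def uniform_sample(num_frames, k):
    """Pick k evenly spaced frame indices (the centre of each of k equal bins)."""
    return [int((i + 0.5) * num_frames / k) for i in range(k)]


def topk_with_exclusion(scores, k, window):
    """Pick up to k high-scoring frames, skipping any frame that lies within
    `window` indices of an already chosen frame (hypothetical baseline)."""
    # Visit frames from highest to lowest score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    chosen = []
    for i in order:
        # The exclusion window suppresses neighbours of chosen frames.
        if all(abs(i - j) > window for j in chosen):
            chosen.append(i)
        if len(chosen) == k:
            break
    return sorted(chosen)


# Frames 2 and 3 both score highly, but frame 3 falls inside the window of frame 2.
scores = [0.1, 0.2, 0.9, 0.8, 0.1, 0.1, 0.3, 0.1]
print(topk_with_exclusion(scores, k=2, window=2))
```

In this toy input the second-best frame sits next to the best one, so the exclusion window drops it in favour of a much weaker frame. That is the failure mode the abstract attributes to rigid temporal spacing.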

Summary

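This paper addresses the challenges of long-form video understanding, the shortcomings of existing methods, and a proposed solution. It notes that current multimodal large language models struggle with long videos: uniform sampling can overlook key moments, and some keyframe selection methods reduce redundancy effectively but can miss fine-grained cues near important events. The paper therefore proposes AdaRD-Key, a training-free method that samples keyframes using a combined relevance and diversity objective and adapts its strategy when the query is only weakly aligned with the video. The method is computationally efficient and compatible with existing vision-language models.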

Key Takeaways

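- Current multimodal large language models struggle with long videos; for example, uniform sampling can overlook key moments.

- AdaRD-Key is a training-free keyframe sampling method for query-driven long-form video understanding.

- AdaRD-Key combines relevance and diversity objectives during sampling to yield informative, non-redundant frames.

- AdaRD-Key uses a relevance-aware gating mechanism that improves coverage for queries weakly aligned with the video.

- The method requires no additional supervision, is computationally efficient, and runs in real time on a single GPU.

- AdaRD-Key achieves state-of-the-art performance on LongVideoBench and Video-MME.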
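
The combination of a relevance score with a log-determinant diversity term can be sketched as a greedy selection. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes precomputed, non-zero frame and query embeddings, uses cosine similarity for both relevance and the diversity kernel, and uses a hypothetical gate (maximum relevance minus mean relevance below a threshold) to switch to diversity-only mode. The names `select_keyframes`, `lam`, `gate`, and `eps` are invented for the example.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _unit(v):
    norm = math.sqrt(_dot(v, v))
    return [x / norm for x in v]


def select_keyframes(frame_feats, query_feat, k, lam=1.0, gate=0.1, eps=1e-6):
    """Greedily pick k frames to maximize
    sum(relevance) + lam * logdet(K_S + eps * I),
    where K is the cosine-similarity matrix of the frames (assumed kernel).
    Returns (sorted selected indices, whether the relevance term was used)."""
    feats = [_unit(f) for f in frame_feats]
    query = _unit(query_feat)
    rel = [_dot(f, query) for f in feats]

    # Hypothetical gate: a flat relevance profile is treated as weak alignment,
    # in which case only the diversity term drives the selection.
    use_rel = (max(rel) - sum(rel) / len(rel)) >= gate

    n = len(feats)
    resid = [1.0 + eps] * n        # factor each frame would add to the determinant
    chol = [[] for _ in range(n)]  # incremental Cholesky rows, one per frame
    selected, chosen = [], set()

    for _ in range(min(k, n)):
        best, best_gain = None, -math.inf
        for i in range(n):
            if i in chosen or resid[i] <= 1e-12:
                continue
            # Marginal gain of frame i: log-det increase plus optional relevance.
            gain = lam * math.log(resid[i]) + (rel[i] if use_rel else 0.0)
            if gain > best_gain:
                best, best_gain = i, gain
        if best is None:
            break
        selected.append(best)
        chosen.add(best)
        pivot = math.sqrt(resid[best])
        # Update the residuals of the remaining frames given the new pick.
        for i in range(n):
            if i in chosen:
                continue
            e = (_dot(feats[best], feats[i]) - _dot(chol[best], chol[i])) / pivot
            chol[i].append(e)
            resid[i] -= e * e
    return sorted(selected), use_rel


frames = [[1, 0, 0], [0.99, 0.1, 0], [0, 1, 0], [0, 0, 1]]
print(select_keyframes(frames, [1, 0, 0], k=2))  # -> ([0, 2], True)
```

In the usage line, frame 1 is nearly as relevant as frame 0 but almost identical to it, so the log-determinant penalty outweighs its relevance and a different frame is chosen. Unlike an exclusion window, the penalty depends on feature similarity, not on temporal distance.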

From Long Videos to Engaging Clips: A Human-Inspired Video Editing Framework with Multimodal Narrative Understanding

Authors:Xiangfeng Wang, Xiao Li, Yadong Wei, Xueyu Song, Yang Song, Xiaoqiang Xia, Fangrui Zeng, Zaiyi Chen, Liu Liu, Gu Xu, Tong Xu

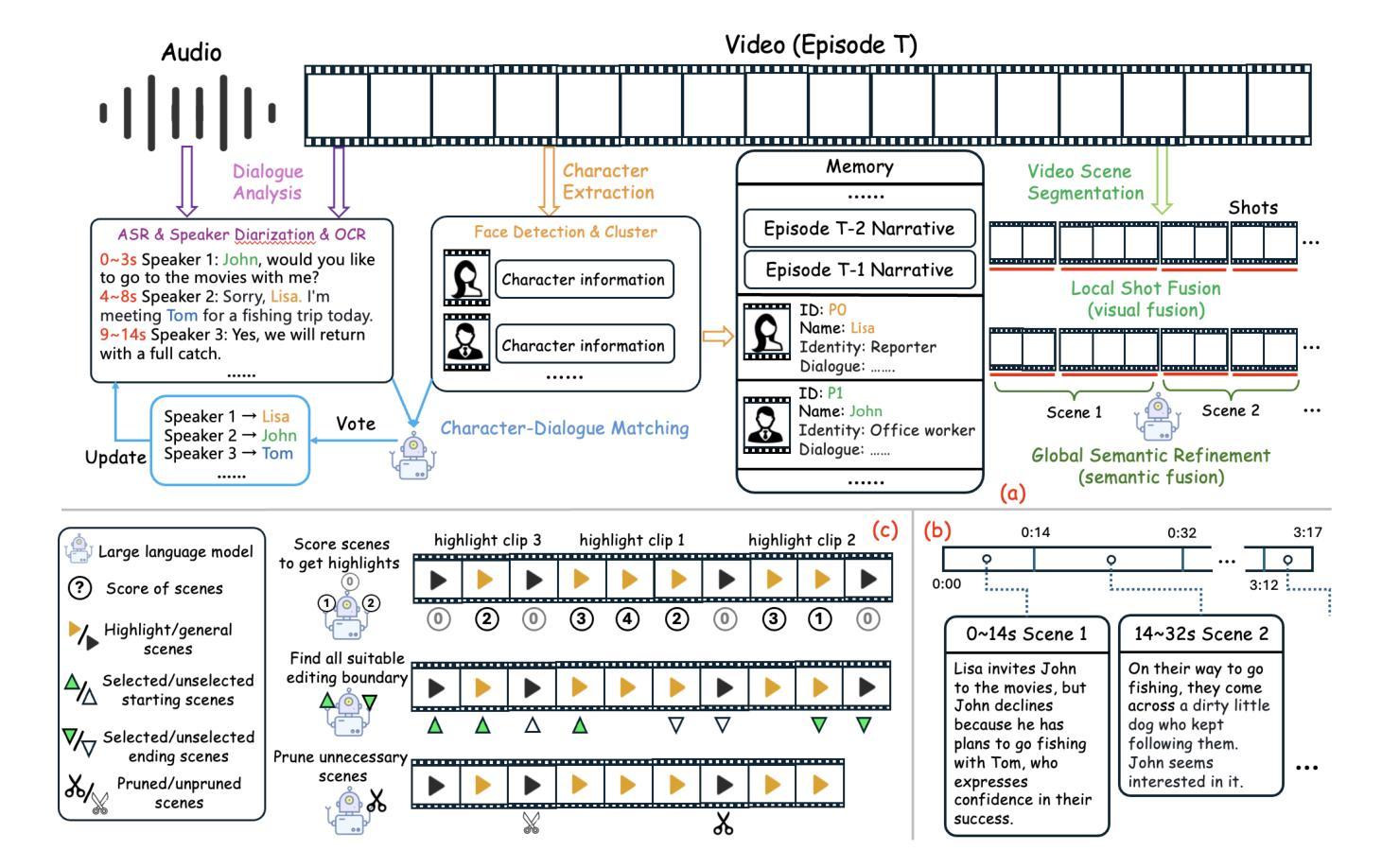

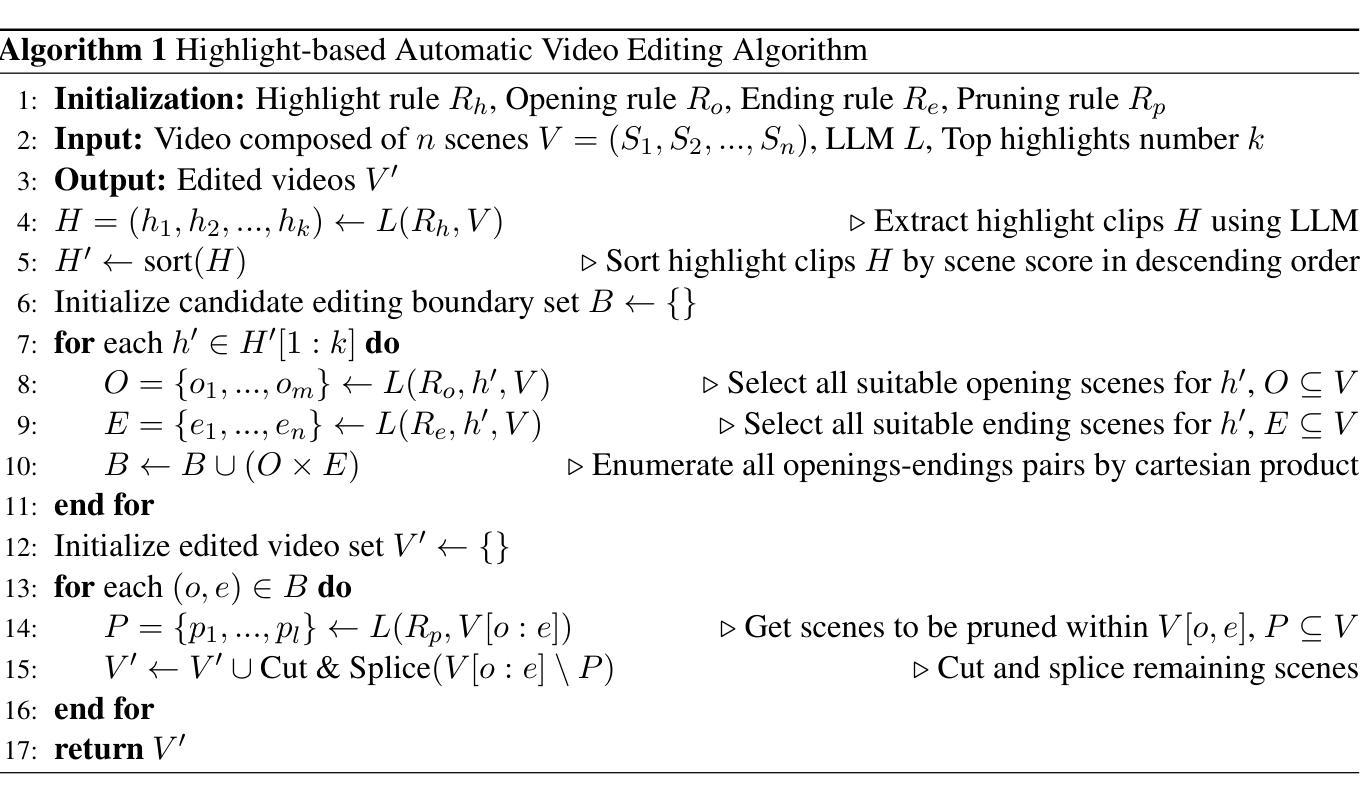

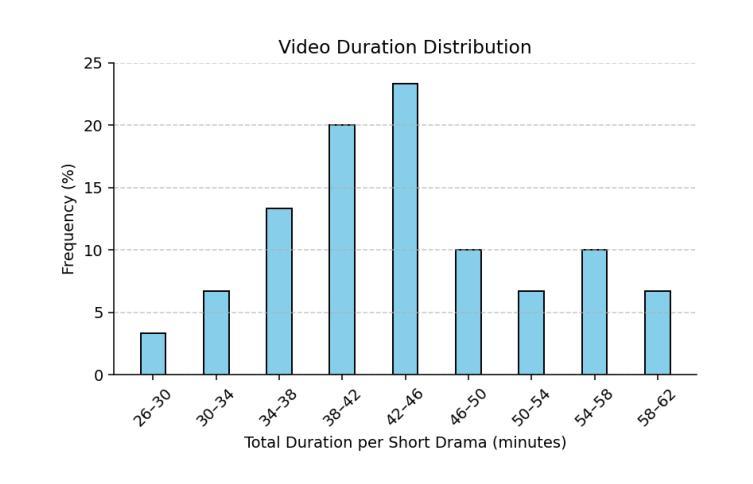

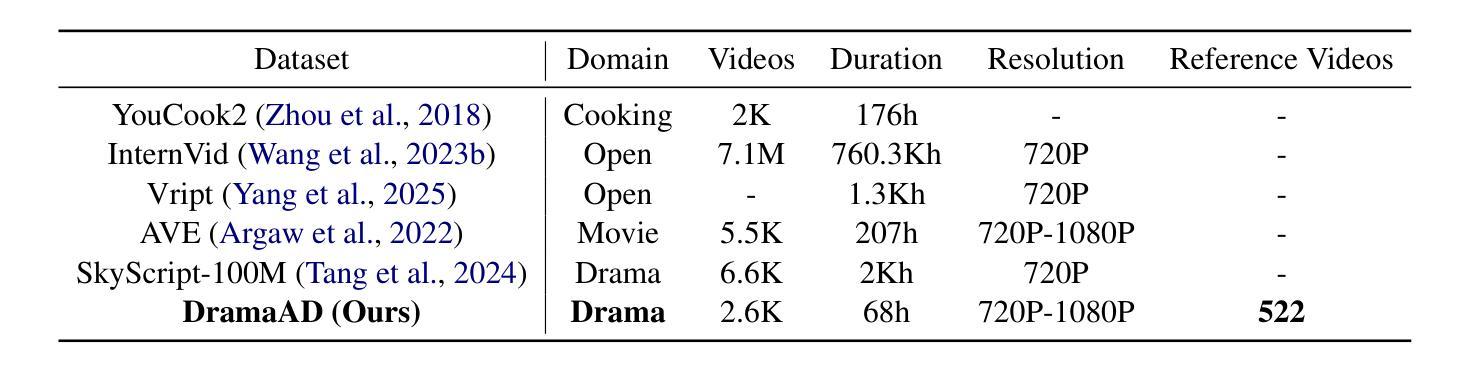

The rapid growth of online video content, especially on short video platforms, has created a growing demand for efficient video editing techniques that can condense long-form videos into concise and engaging clips. Existing automatic editing methods predominantly rely on textual cues from ASR transcripts and end-to-end segment selection, often neglecting the rich visual context and leading to incoherent outputs. In this paper, we propose a human-inspired automatic video editing framework (HIVE) that leverages multimodal narrative understanding to address these limitations. Our approach incorporates character extraction, dialogue analysis, and narrative summarization through multimodal large language models, enabling a holistic understanding of the video content. To further enhance coherence, we apply scene-level segmentation and decompose the editing process into three subtasks: highlight detection, opening/ending selection, and pruning of irrelevant content. To facilitate research in this area, we introduce DramaAD, a novel benchmark dataset comprising over 800 short drama episodes and 500 professionally edited advertisement clips. Experimental results demonstrate that our framework consistently outperforms existing baselines across both general and advertisement-oriented editing tasks, significantly narrowing the quality gap between automatic and human-edited videos.

Paper and project links

Accepted by EMNLP 2025 Industry Track

Summary

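This paper proposes HIVE, a human-inspired automatic video editing framework that uses multimodal narrative understanding to address two weaknesses of existing automatic editing methods: neglect of visual context and incoherent outputs. The framework incorporates character extraction, dialogue analysis, and narrative summarization through multimodal large language models to build a holistic understanding of the video content. To improve coherence, it applies scene-level segmentation and decomposes editing into highlight detection, opening/ending selection, and pruning of irrelevant content. The paper also introduces the DramaAD dataset, comprising over 800 short drama episodes and 500 professionally edited advertisement clips. Experiments show that the framework outperforms existing baselines on both general and advertisement-oriented editing tasks, significantly narrowing the quality gap between automatic and human-edited videos.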

Key Takeaways

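- The rapid growth of online video content, especially on short video platforms, has increased demand for efficient video editing techniques.

- Existing automatic editing methods rely mainly on textual cues from ASR transcripts and end-to-end segment selection, neglecting visual context and producing incoherent outputs.

- The proposed HIVE framework uses multimodal narrative understanding to build a holistic understanding of video content.

- HIVE incorporates character extraction, dialogue analysis, and narrative summarization.

- To improve coherence, the framework applies scene-level segmentation and decomposes editing into highlight detection, opening/ending selection, and pruning of irrelevant content.

- The paper introduces the DramaAD dataset as a new benchmark for research in this area.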
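
The three-subtask decomposition can be illustrated with a toy sketch over scene-level segments. Everything in it is a stated assumption and not the HIVE implementation: the `Scene` fields, the per-scene scores (which in practice would come from a model), and the rule of picking one highlight, then the best opening before it and the best ending after it, then pruning irrelevant scenes in between.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    start: float      # scene start time in seconds
    highlight: float  # hypothetical highlight score
    opening: float    # hypothetical suitability as the clip opening
    ending: float     # hypothetical suitability as the clip ending
    relevant: bool    # hypothetical relevance flag used for pruning


def assemble_clip(scenes):
    """Return the scenes kept in the edited clip, in temporal order."""
    if not scenes:
        return []
    scenes = sorted(scenes, key=lambda s: s.start)
    n = len(scenes)
    # 1) Highlight detection: the scene with the highest highlight score.
    h = max(range(n), key=lambda i: scenes[i].highlight)
    # 2) Opening/ending selection: best opening at or before the highlight,
    #    best ending at or after it.
    o = max(range(h + 1), key=lambda i: scenes[i].opening)
    e = max(range(h, n), key=lambda i: scenes[i].ending)
    # 3) Pruning: between opening and ending, drop scenes flagged irrelevant.
    return [scenes[i] for i in range(o, e + 1)
            if i in (o, h, e) or scenes[i].relevant]


scenes = [
    Scene(0, 0.1, 0.9, 0.0, True),
    Scene(10, 0.2, 0.3, 0.1, False),
    Scene(20, 0.9, 0.1, 0.2, True),
    Scene(30, 0.3, 0.0, 0.1, False),
    Scene(40, 0.2, 0.0, 0.8, True),
    Scene(50, 0.1, 0.0, 0.3, True),
]
print([s.start for s in assemble_clip(scenes)])  # -> [0, 20, 40]
```

Splitting the decision this way keeps each step simple to inspect, which is one plausible reading of why the summary describes decomposition as improving coherence over end-to-end segment selection.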

SkillFormer: Unified Multi-View Video Understanding for Proficiency Estimation

Authors:Edoardo Bianchi, Antonio Liotta

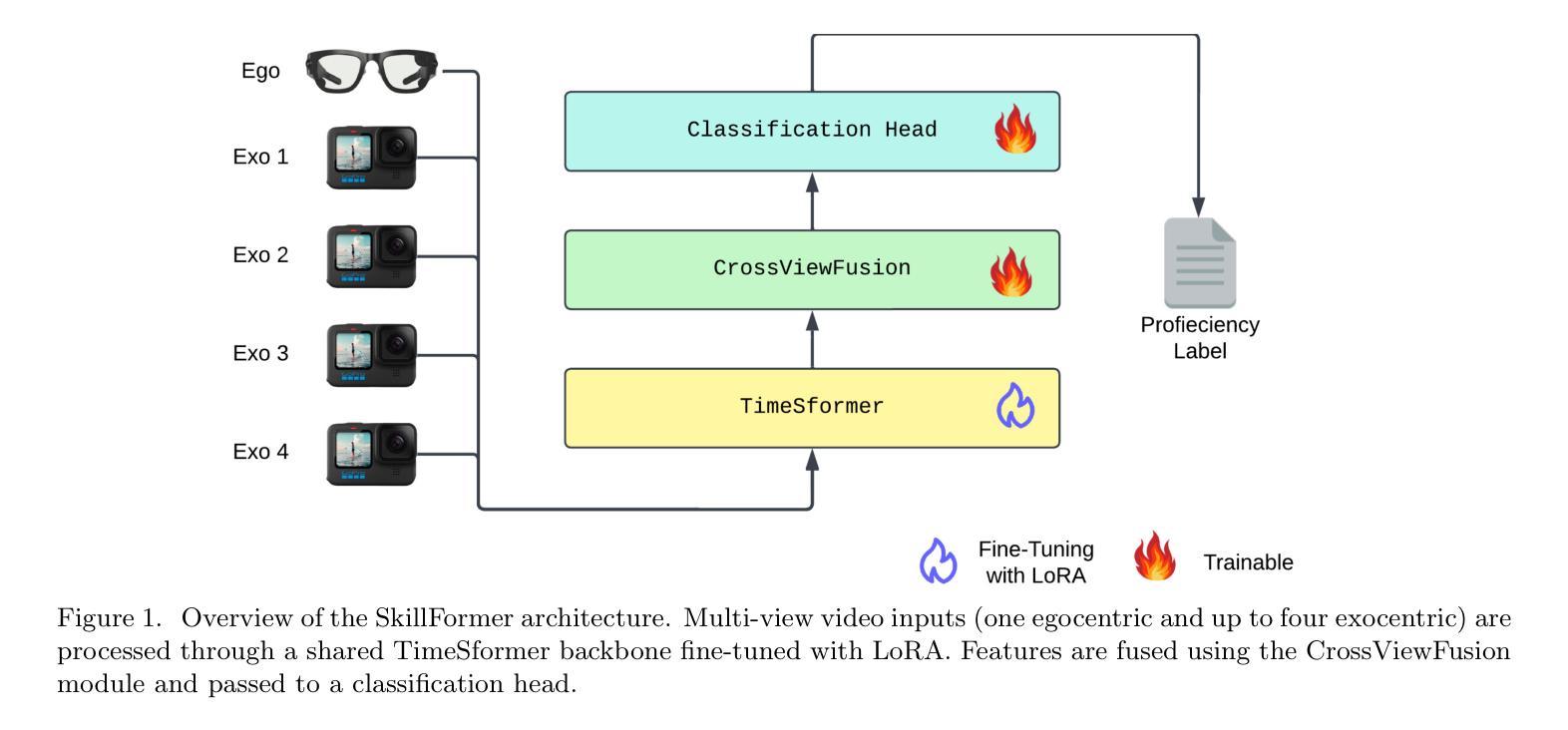

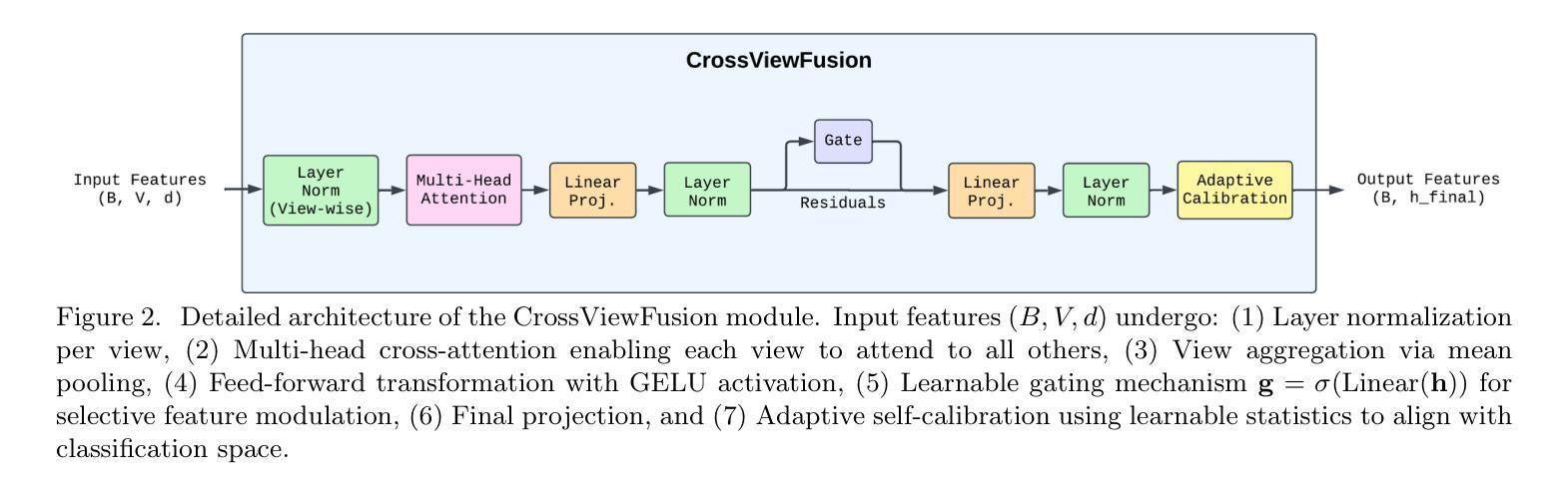

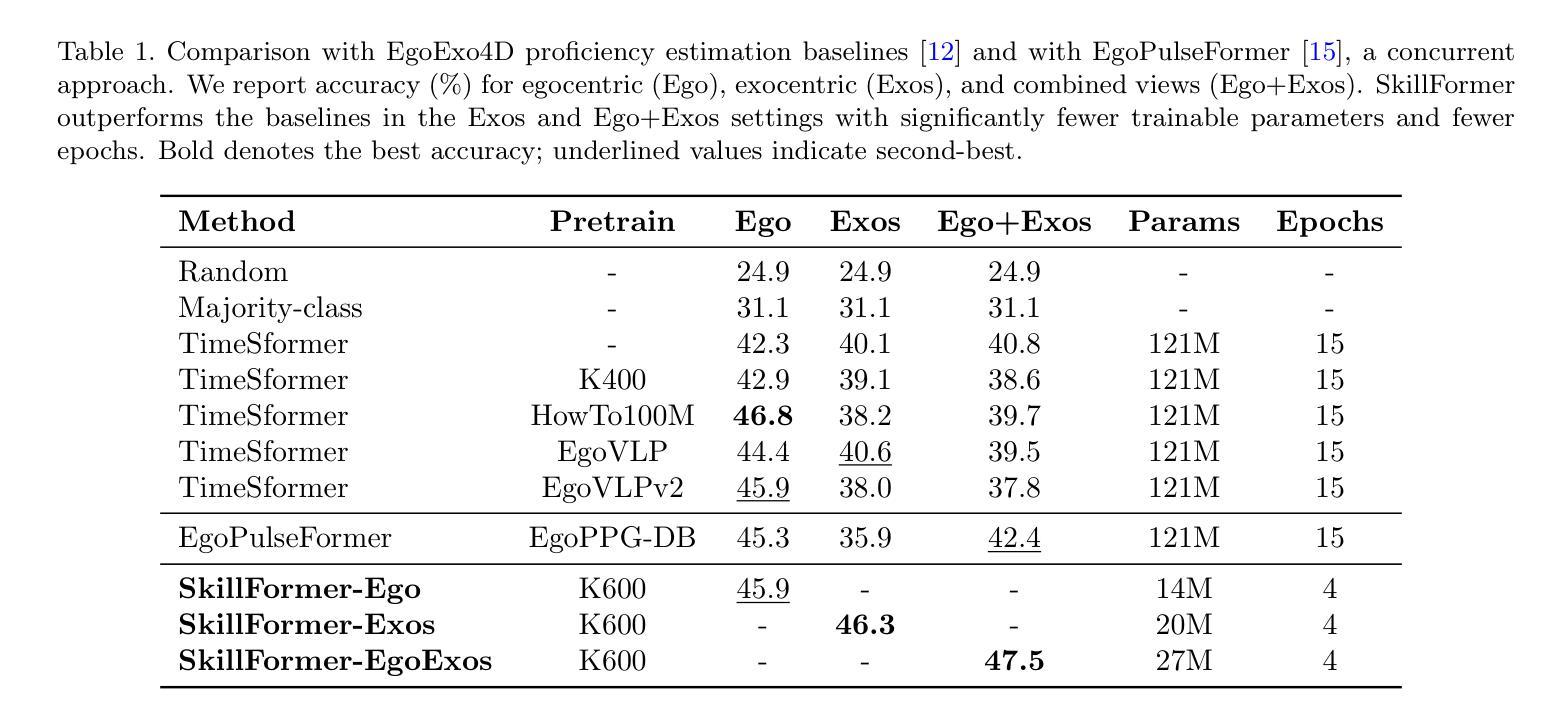

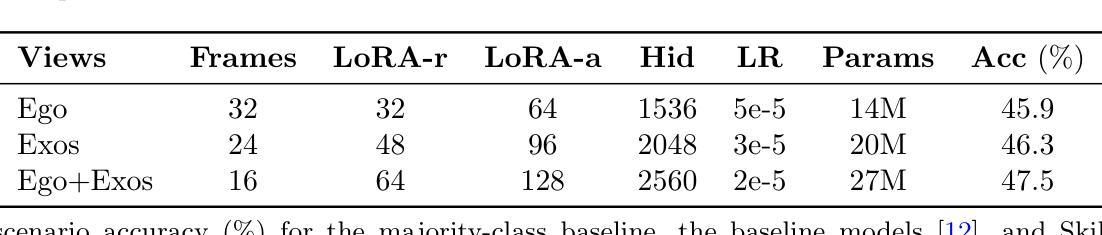

Assessing human skill levels in complex activities is a challenging problem with applications in sports, rehabilitation, and training. In this work, we present SkillFormer, a parameter-efficient architecture for unified multi-view proficiency estimation from egocentric and exocentric videos. Building on the TimeSformer backbone, SkillFormer introduces a CrossViewFusion module that fuses view-specific features using multi-head cross-attention, learnable gating, and adaptive self-calibration. We leverage Low-Rank Adaptation to fine-tune only a small subset of parameters, significantly reducing training costs. In fact, when evaluated on the EgoExo4D dataset, SkillFormer achieves state-of-the-art accuracy in multi-view settings while demonstrating remarkable computational efficiency, using 4.5x fewer parameters and requiring 3.75x fewer training epochs than prior baselines. It excels in multiple structured tasks, confirming the value of multi-view integration for fine-grained skill assessment. Project page at https://edowhite.github.io/SkillFormer

Paper and project links

Accepted at the 2025 18th International Conference on Machine Vision. Project page at https://edowhite.github.io/SkillFormer

Summary

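This paper presents SkillFormer, a parameter-efficient architecture for estimating skill proficiency from egocentric and exocentric videos. Built on TimeSformer, the model introduces a CrossViewFusion module that fuses view-specific features using multi-head cross-attention, learnable gating, and adaptive self-calibration. Low-Rank Adaptation is used to fine-tune only a small subset of parameters, significantly reducing training costs. On the EgoExo4D dataset, SkillFormer achieves state-of-the-art accuracy in multi-view settings while remaining computationally efficient, using 4.5x fewer parameters and requiring 3.75x fewer training epochs than prior baselines. These results confirm the value of multi-view integration for fine-grained skill assessment.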

Key Takeaways

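- SkillFormer is a model for estimating skill proficiency from egocentric and exocentric videos.

- The model builds on the TimeSformer backbone and introduces a CrossViewFusion module to fuse view-specific features.

- SkillFormer uses Low-Rank Adaptation to fine-tune only a small subset of parameters, reducing training costs.

- On the EgoExo4D dataset, SkillFormer achieves state-of-the-art accuracy in multi-view settings.

- SkillFormer is computationally efficient, using 4.5x fewer parameters and 3.75x fewer training epochs than prior baselines.

- Multi-view integration is valuable for fine-grained skill assessment.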
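
The parameter saving from Low-Rank Adaptation can be illustrated with a small sketch. It shows the standard LoRA update, in which a frozen weight matrix `W` is augmented by a scaled low-rank product `(alpha / r) * B @ A` and only `A` and `B` are trained. The helper names and the 768-dimensional example are illustrative assumptions; SkillFormer's actual ranks, target layers, and parameter counts are not given in this summary.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W: frozen weights, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = len(A)
    base = matvec(W, x)              # frozen path
    delta = matvec(B, matvec(A, x))  # low-rank update path
    return [b + (alpha / r) * d for b, d in zip(base, delta)]


def lora_trainable_params(d_in, d_out, r):
    """Number of trainable parameters in A and B combined."""
    return r * (d_in + d_out)


W = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, 0.0]]                     # rank r = 1
B_zero = [[0.0], [0.0]]              # zero-initialised B leaves the output unchanged
print(lora_forward([1.0, 1.0], W, A, B_zero, alpha=2.0))  # -> [3.0, 7.0]

# For a 768 x 768 layer at rank 8 (illustrative sizes only):
print(768 * 768, lora_trainable_params(768, 768, 8))      # -> 589824 12288
```

Because `B` starts at zero in the usual LoRA setup, the adapted layer initially reproduces the frozen backbone, and training only has to learn the small correction.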
